Conversation With ChatGPT Was Enough to Develop Part of a CPU

That's one way to fall down the ChatGPT rabbit hole.

A team of researchers at New York University (NYU) has done the seemingly impossible: they've designed a working semiconductor chip without writing any hardware description language (HDL) code themselves. Using only plain English, with definitions and examples that describe a semiconductor processor, and letting ChatGPT turn those descriptions into HDL, the team showcased what human ingenuity, curiosity, and baseline knowledge can do when aided by the AI prowess of ChatGPT.

And it goes further: the chip wasn't only designed. It was also manufactured and benchmarked, and it worked. The two hardware engineers' use of plain English showcases just how valuable and powerful ChatGPT can be (as if we still had doubts, given the number of awe-inspiring things it has already done).

The chip designed by the research team and ChatGPT wasn't a full processor; it's nothing like the Intel or AMD processors in our list of best CPUs. But it is an element of a whole CPU: the logic for a novel 8-bit accumulator-based microprocessor architecture. Accumulators are essentially registers (memory) where the results of intermediate calculations are stored until a main calculation is completed. They're integral to how CPUs work, and perhaps other necessary parts can be designed the same way.
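To make the accumulator idea concrete, here is a minimal Python sketch of a toy 8-bit accumulator machine. The instruction names (`LOAD`, `ADD`, `SUB`, `STORE`, `HALT`) and the `run` function are hypothetical and chosen only for illustration; this is not the instruction set of the NYU team's chip. Every arithmetic instruction reads from and writes back to the single accumulator register, and results wrap around at 8 bits.

```python
from typing import List, Tuple

# Hypothetical toy instruction set: (opcode, memory address) pairs.
Program = List[Tuple[str, int]]


def run(program: Program, memory: List[int]) -> int:
    """Execute a toy 8-bit accumulator program and return the final accumulator value.

    `memory` is modified in place by STORE instructions.
    """
    acc = 0  # the accumulator: the one register that holds intermediate results
    pc = 0   # program counter: index of the next instruction
    while pc < len(program):
        op, addr = program[pc]
        pc += 1
        if op == "LOAD":      # acc <- memory[addr]
            acc = memory[addr] & 0xFF
        elif op == "ADD":     # acc <- acc + memory[addr], wrapped to 8 bits
            acc = (acc + memory[addr]) & 0xFF
        elif op == "SUB":     # acc <- acc - memory[addr], wrapped to 8 bits
            acc = (acc - memory[addr]) & 0xFF
        elif op == "STORE":   # memory[addr] <- acc
            memory[addr] = acc
        elif op == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode: {op}")
    return acc


if __name__ == "__main__":
    mem = [200, 100, 30, 0]
    prog = [("LOAD", 0), ("ADD", 1), ("SUB", 2), ("STORE", 3), ("HALT", 0)]
    print(run(prog, mem))  # 200 + 100 wraps to 44, minus 30 gives 14
```

The intermediate sum (300) never fits in 8 bits: the accumulator holds 44 after the wraparound, and the final result, 14, is written back to memory address 3.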

Usually, teams bring a chip from design to manufacturing in several stages. One of them is translating the "plain English" description of the chip and its capabilities into a chosen hardware description language (HDL), such as Verilog. The HDL code describes the circuit's logic and behavior; synthesis and place-and-route tools then turn it into the physical layout (the geometry, density, and arrangement of the chip's elements) required for the etching itself.

ChatGPT is a pattern-recognition machine (just like humans, although we're both a bit more than that), which makes it an incredible help with languages of any kind: spoken, written, and, here specifically, hardware description languages. ChatGPT allowed the engineers to skip writing HDL by hand, which, while impressive, must leave HDL specialists slightly nervous, especially since the researchers said they expect the approach to reduce human-induced errors in the HDL translation process, contribute to productivity gains, shorten design time and time to market, and allow for more creative designs.

“We’re interested in knowing just how good the models are,” he said. “A lot of people look at these models and say, ‘These models are only toys, really.’ And I don’t think that they’re toys. They’re not everywhere yet, but they definitely will be, and that was why we did Chip Chat—almost like as a proof of concept demonstration.”

Dr. Hammond Pearce, NYU Tandon

One thing that’s a bit more concerning (or at least debatable) is the desire to eliminate the need for HDL fluency among chip designers. Because HDL design is an extremely specialized and complex field, fluency in it is a relatively rare skill that’s very hard to master.

“The big challenge with hardware description languages is that not many people know how to write them,” Dr. Pearce said. “It’s quite hard to become an expert in them. That means we still have our best engineers doing menial things in these languages because there are just not that many engineers to do them.”


Of course, automating parts of this process will be a definite boon. It could alleviate the human bottleneck by speeding up existing specialists while new ones are trained. But there’s a risk in making this skill entirely dependent on a software-based machine that needs electricity (and, in ChatGPT’s case, server connectivity) to run.

There’s also the matter of trusting what’s essentially an inscrutable software black box and its outputs. We’ve seen what can happen with prompt injection, and LLMs aren’t immune to vulnerabilities. We could even consider them to have an expanded attack surface since, besides being software, they are software that results from training. And it isn’t science fiction to imagine an LLM used for chip design being compromised during its training phase so that it introduces a “demonically clever” hardware back door leading to... somewhere. This may sound hyperbolic, and yes, it’s on the absolute low end of the possibility scale; but with mutating malware and other nasty surprises springing from even today’s Large Language Models, who’s to say what they’ll be spewing out tomorrow?

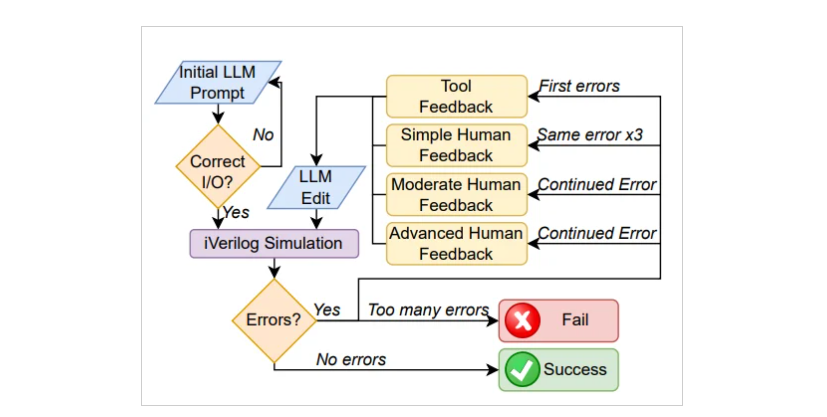

The researchers used commercially and publicly available Large Language Models (LLMs) to work through eight hardware design examples, turning plain-English descriptions into their Verilog (HDL) equivalents through live back-and-forth interaction between the engineers and the LLM.
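The back-and-forth can be pictured as a loop: ask the model for HDL, check it, and feed any problem back into the next prompt. The Python sketch below shows only that shape, under loud assumptions: `refine_design`, `generate`, and `compile_check` are hypothetical names, the model and the checker are passed in as plain functions (in practice the check might wrap a Verilog compiler such as Icarus Verilog), and none of this is the NYU team's actual tooling, since their conversations were conducted by hand.

```python
from typing import Callable, Optional, Tuple


def refine_design(
    spec: str,
    generate: Callable[[str], str],
    compile_check: Callable[[str], Optional[str]],
    max_rounds: int = 5,
) -> Tuple[Optional[str], int]:
    """Iteratively request HDL from a model until it passes a check.

    Returns (hdl, rounds_used); hdl is None if no attempt passed within max_rounds.
    """
    prompt = spec
    for round_no in range(1, max_rounds + 1):
        hdl = generate(prompt)          # hypothetical model call
        error = compile_check(hdl)      # None means the check passed
        if error is None:
            return hdl, round_no
        # Feed the error back alongside the original spec for the next attempt.
        prompt = (
            f"{spec}\n\nYour previous attempt failed with this error:\n"
            f"{error}\nPlease fix it."
        )
    return None, max_rounds


if __name__ == "__main__":
    calls = []

    def fake_generate(prompt: str) -> str:
        # Stand-in "model": the first reply forgets `endmodule`, the second fixes it.
        calls.append(prompt)
        if len(calls) == 1:
            return "module acc(input clk);"
        return "module acc(input clk);\nendmodule"

    def fake_check(hdl: str) -> Optional[str]:
        # Stand-in "compiler": only checks that the module is closed.
        return None if "endmodule" in hdl else "syntax error: missing endmodule"

    hdl, rounds = refine_design("Design an 8-bit accumulator.", fake_generate, fake_check)
    print(rounds)  # 2
```

The `max_rounds` cap is a design choice: a real flow would also need a way to restart from an earlier point when the feedback stops converging.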

“This study resulted in what we believe is the first fully AI-generated HDL sent for fabrication into a physical chip,” said Dr. Hammond Pearce, a research assistant professor at NYU Tandon and a member of the research team. “Some AI models, like OpenAI’s ChatGPT and Google’s Bard, can generate software code in different programming languages, but their application in hardware design has not been extensively studied yet. This research shows AI can benefit hardware fabrication too, especially when it’s used conversationally, where you can have a kind of back-and-forth to perfect the designs.”

There are already several Electronic Design Automation (EDA) tools, with AI showing impressive results in chip layout and other areas. But ChatGPT isn’t a piece of specialized software; apparently, it can write poetry and make an EDA cameo. The road toward becoming a chip designer now has a much lower barrier to entry. Perhaps one day, enough bits and pieces of the CPU will be opened up that anyone with enough determination (and ChatGPT’s invaluable help) can design their own CPU architecture at home.

Yes, many questions can be asked about what that means. But doesn’t it have potential?

Francisco Pires is a freelance news writer for Tom's Hardware with a soft side for quantum computing.

-

Alvar "Miles" Udell, quoting the article: "Of course, automating parts of this process will be a definite boon."

I do believe I remember reading articles saying that AMD used automation to help design the Phenom and Bulldozer processor families because it was cheaper and faster than using engineers through the entire process, even though it resulted in massive die waste, inefficiency, and an inferior product. Zen was the first architecture to go back to being human-designed from the ground up, which is why the gains were so massive over even Piledriver.

Using AI-written HDL could also turn out to be risky in chip design methodology. These test cases may not provide enough data to draw formal statistical conclusions.

While they still created an 8-bit accumulator-based microprocessor (a von Neumann-type design) with the same kinds of functionality as a comparable PIC product, I'm not sure we can depend solely on the AI's output in this chip design process.

Also, since they did not automate any part of this process, and each conversation was done manually, the scale of this experiment is seriously limited.

It appears that although ChatGPT-4 performed adequately, it still required human feedback in most conversations to be both successful and compliant.

It also had difficulty understanding exactly which Verilog lines caused the error messages from "iverilog", so even when fixing "minor" errors, ChatGPT-4 would often require several messages.

If we focus on these scripted benchmark tests, the final outcome actually relies "heavily" on the early instructions, the initial prompt, and the feedback. Even if there are only MINOR errors, it will take several iterations of feedback, because LLMs can't fully correlate an error with the fix it requires.

So if the early prompts are not satisfactory, the conversation needs to be "restarted" from an earlier point. There were also bugs and discrepancies when the processor reached the "simulation" stage, after some programs were run on it.

So this proof-of-concept experiment is kind of a mixed bag, but this is just my own "constructive" criticism.

bit_user

Alvar Miles Udell said: "I do believe I remember reading articles that AMD used automation to help design Phenom-Bulldozer processor families because it was cheaper and faster than using engineers through the entire process, even though it resulted in massive die waste, inefficiency, and an inferior product,"

I thought that was motivated by their desire to use the same toolchain and design flow for the CPU team as the GPU team was using, to facilitate the creation of APUs.

Anyway, you can't compare the state of the art in layout & routing between 15 years ago and today. BTW, that's also the backend part of the design process, and not at all what the article is talking about.

Alvar Miles Udell said: "Zen was the first architecture to go back to human designed from the ground up, which is why the gains were so massive over even Piledriver."

That's far too simplistic. Zen wasn't just a redesign, but a rethink of how AMD approached CPU architectures. Jim Keller described it as protocol-driven:

"We used to build computers such that you had a front end, a fetch, a dispatch, an execute, a load store, an L2 cache. If you looked at the boundaries between them, you'd see 100 wires doing random things that were dependent on exactly what cycle or what phase of the clock it was. Now these interfaces tend to look less like instruction boundaries – if I send an instruction from here to there, now I have a protocol. So the computer inside doesn't look like a big mess of stuff connected together, it looks like eight computers hooked together that do different things. There’s a fetch computer and a dispatch computer, an execution computer, and a floating-point computer. If you do that properly, you can change the floating-point without touching anything else.

That's less of an instruction set thing – it’s more ‘what was your design principle when you build it’, and then how did you do it. The thing is, if you get to a problem, you could say ‘if I could just have these five wires between these two boxes, I could get rid of this problem’. But every time you do that, every time you violate the abstraction layer, you've created a problem for future Jim. I've done that so many times, and like if you solve it properly, it would still be clean, but at some point if you hack it a little bit, then that kills you over time."

Source: https://www.anandtech.com/show/16762/an-anandtech-interview-with-jim-keller-laziest-person-at-tesla

Of course, I'm sure there were other differences, as well. But the gains in Zen definitely weren't simply due to using full-custom layout.

Hooda Thunkett: So, can it give us the answer to the ultimate question of life, the universe, and everything?

newtechldtech: This is called "cheating", not true AI, when you give the program hints inside your chat...

Stop the propaganda! This AI is fake. It will never work without being given information about where to look!

bit_user

newtechldtech said: "This is called 'cheating' not true AI , when you give the program hints inside your chat ... Stop the propaganda ! this AI is fake. will never work without including information where to look !"

You know the "A" in "AI" stands for "Artificial", right? They're not saying it's a general intelligence, just that it can do certain complex tasks.

BTW, try training a human engineer to design microprocessors without hints or feedback.

kep55

Admin said: "A team of New York University researchers followed the ChatGPT prompt rabbit hole for long enough to develop a functional 8-bit accumulator-based microprocessor architecture that's part of a CPU."

"This may sound hyperbolic, and yes, it’s on the absolute low end of the possibility scale;"


Nothing is impossible. It's a question of how probable something is. And where humans are involved, the probabilities increase rapidly.

Anon#1234

newtechldtech said: "This is called 'cheating' not true AI , when you give the program hints inside your chat ... Stop the propaganda ! this AI is fake. will never work without including information where to look !"

I agree with 50% of this. GPT-4 is basically autosuggest on steroids, so it's not actually that intelligent, but it's only going to accelerate progress toward better models in the future.

Imagine AI teaching kids and students in schools and universities in the future: online, that is, not in a classroom.