Gigabyte Launches EPYC Server With 4 Nvidia A100

Rome's new Ampere-theater.

Gigabyte has announced its new server for AI, high-performance computing (HPC), and data analytics. The G262-ZR0 machine is one of the industry's first servers with four Nvidia A100 compute GPUs. The 2U system should be cheaper than Gigabyte's and Nvidia's servers with eight A100 processors, but will still provide formidable performance.

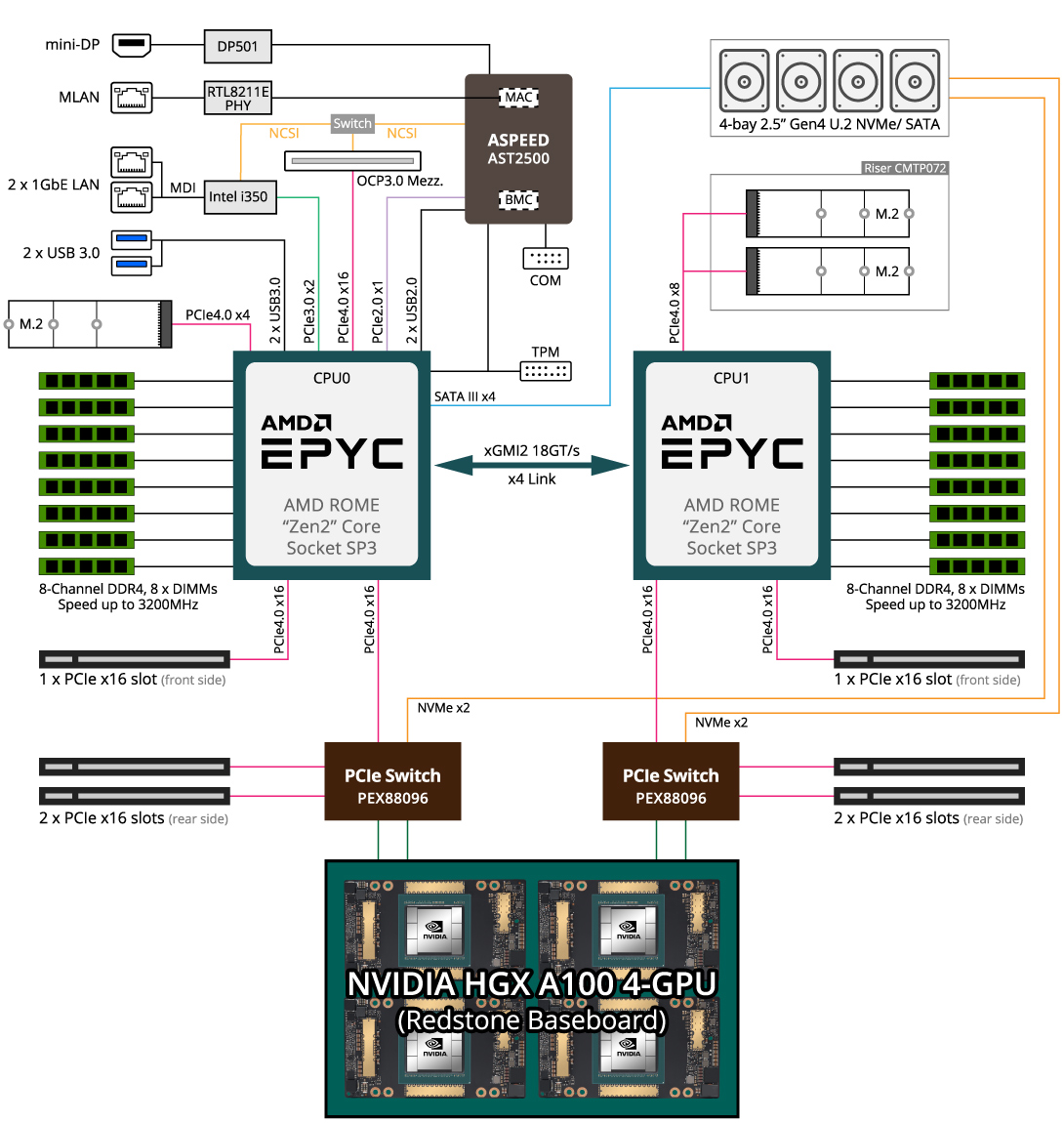

The Gigabyte G262-ZR0 is based on two AMD EPYC 7002-series 'Rome' processors with up to 64 cores per CPU as well as four Nvidia A100 GPUs with either 40GB (1.6TB/s bandwidth) or 80GB (2.0TB/s bandwidth) of onboard HBM2 memory each. Together, the four Nvidia A100 processors feature 13,824 FP64 CUDA cores and 27,648 FP32 CUDA cores, for an aggregate peak performance of 38.8 FP64 TFLOPS and 78 FP32 TFLOPS.
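The aggregate figures above are simply Nvidia's per-GPU numbers multiplied by four. A minimal sketch of that arithmetic follows; the per-GPU constants are taken from Nvidia's published A100 specifications rather than from Gigabyte's announcement, and the `aggregate` helper is a hypothetical name used only for illustration. The totals are theoretical peaks, not measured throughput.

```python
# Per-GPU figures for one Nvidia A100 (assumption: Nvidia's published
# non-tensor peak numbers; they are not stated in Gigabyte's announcement).
A100_FP64_CORES = 3_456
A100_FP32_CORES = 6_912
A100_FP64_TFLOPS = 9.7
A100_FP32_TFLOPS = 19.5


def aggregate(gpu_count: int) -> dict:
    """Scale single-GPU peak figures linearly by the number of GPUs."""
    return {
        "fp64_cores": gpu_count * A100_FP64_CORES,
        "fp32_cores": gpu_count * A100_FP32_CORES,
        # Round to one decimal place to avoid floating-point noise.
        "fp64_tflops": round(gpu_count * A100_FP64_TFLOPS, 1),
        "fp32_tflops": round(gpu_count * A100_FP32_TFLOPS, 1),
    }


totals = aggregate(4)  # the G262-ZR0 carries four A100 GPUs
print(totals)
```

Calling `aggregate(8)` would give the corresponding theoretical totals for an eight-GPU system under the same linear-scaling assumption.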

The machine can be equipped with 16 RDIMM or LRDIMM DDR4-3200 memory modules, three M.2 SSDs with a PCIe 4.0 x4 interface, and four 2.5-inch HDDs or SSDs with a SATA or a Gen4 U.2 interface.

The machine also has two GbE ports, six low-profile PCIe Gen4 x16 expansion slots, one OCP 3.0 Gen4 x16 mezzanine slot, an ASpeed AST2500 BMC, and two 3000W 80+ Platinum redundant PSUs.

Gigabyte says that its G262-ZR0 machine will provide the highest GPU compute performance possible in a 2U chassis, which will be its main competitive advantage.

Gigabyte did not disclose pricing for its G262-ZR0 server, but it will naturally be significantly cheaper than an 8-way Nvidia DGX A100 system or a similar machine from Gigabyte.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.