HoloLens And Acer MR HMD Play Nice Together

Microsoft thinks of the HoloLens and its new crop of “mixed reality” headsets like the ones from Acer and HP as devices that are all on the same continuum. We’ve stated before that we take some issue with that designation, but to prove its point, Microsoft showed us a demo wherein multiple HoloLens headsets and Acer HMDs worked together at the same time on the same experience.

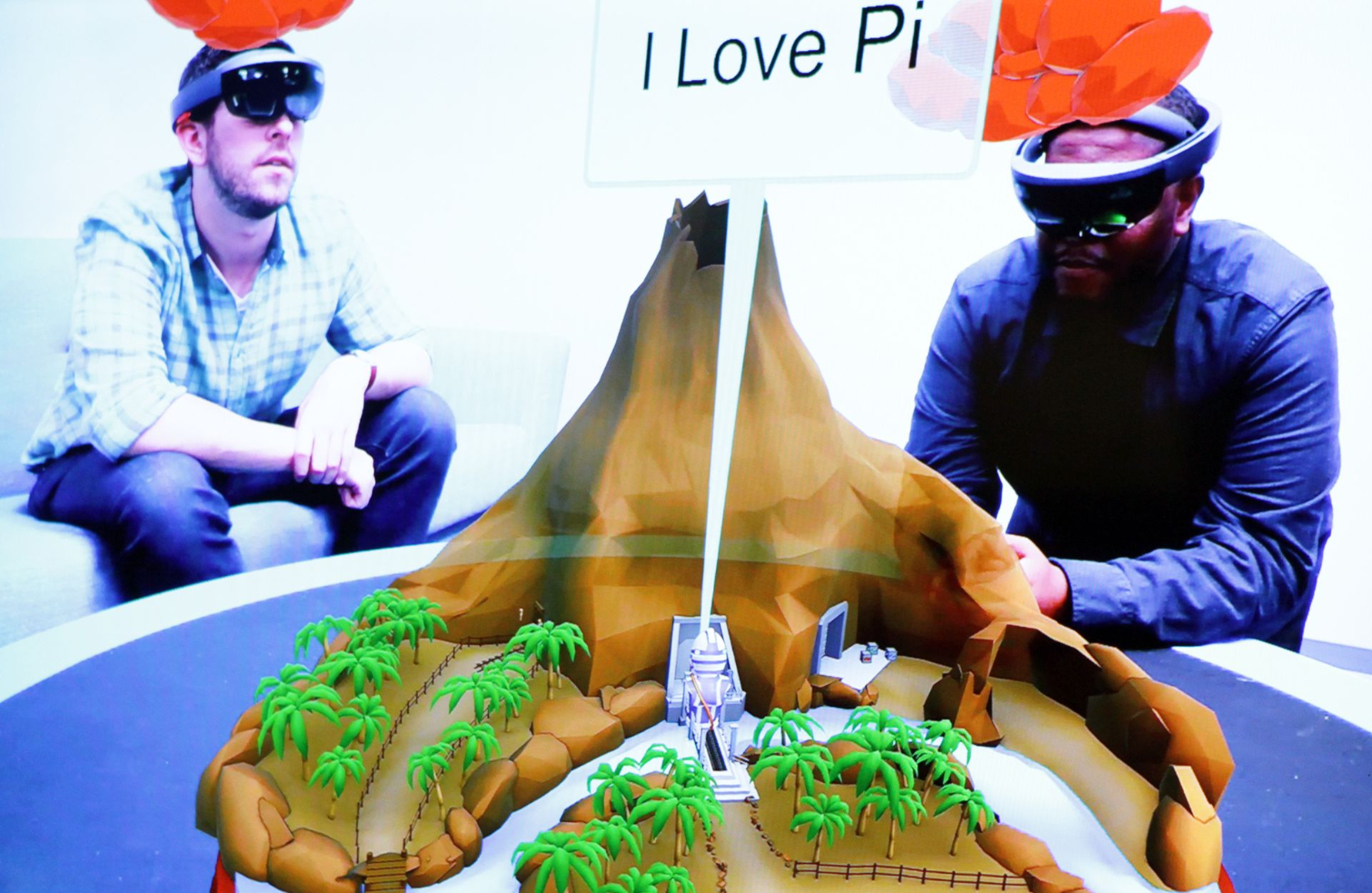

We saw this same demo in two different settings at Build 2017--in a room on the show floor (what Microsoft called “The Hub”) and in a special “Mixed Reality University” event where we were guided through the process of creating the actual demo in Unity.

Dollhouses And Problem-Solving

The demo is fairly simple: Working together, multiple parties have to navigate a few basic problem-solving activities to enter a mine and launch a rocket ship. (We know how bizarre that sounds...just stick with us here.)

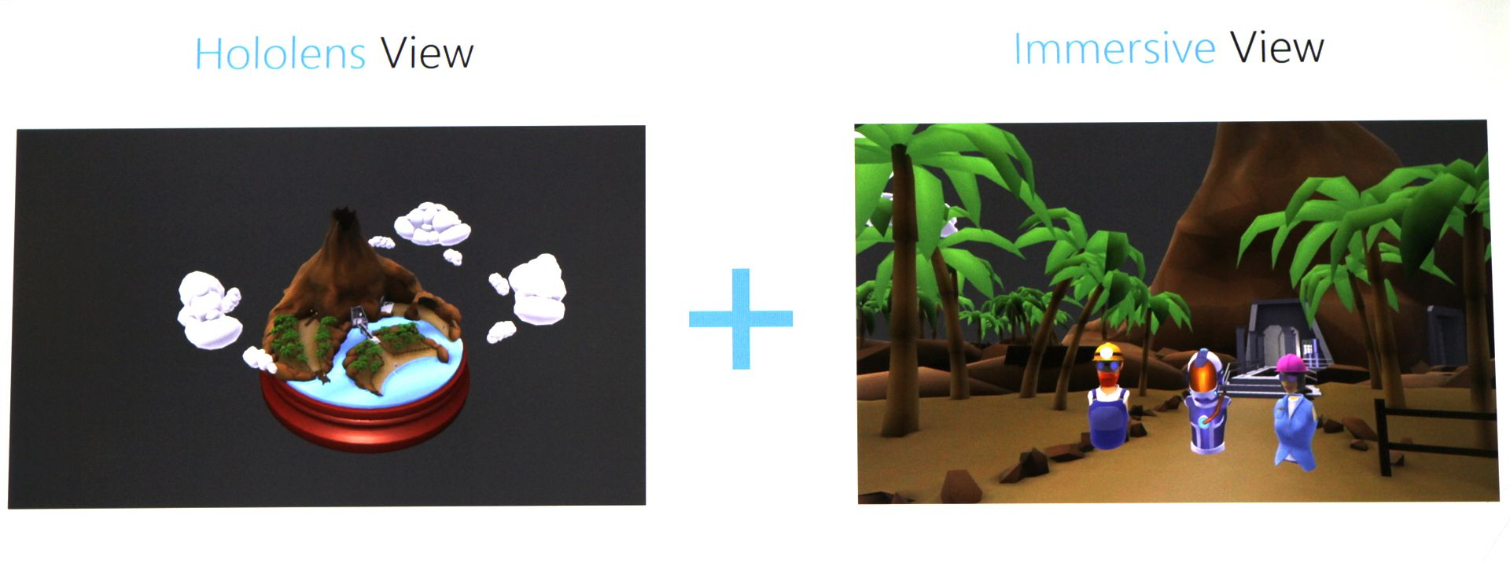

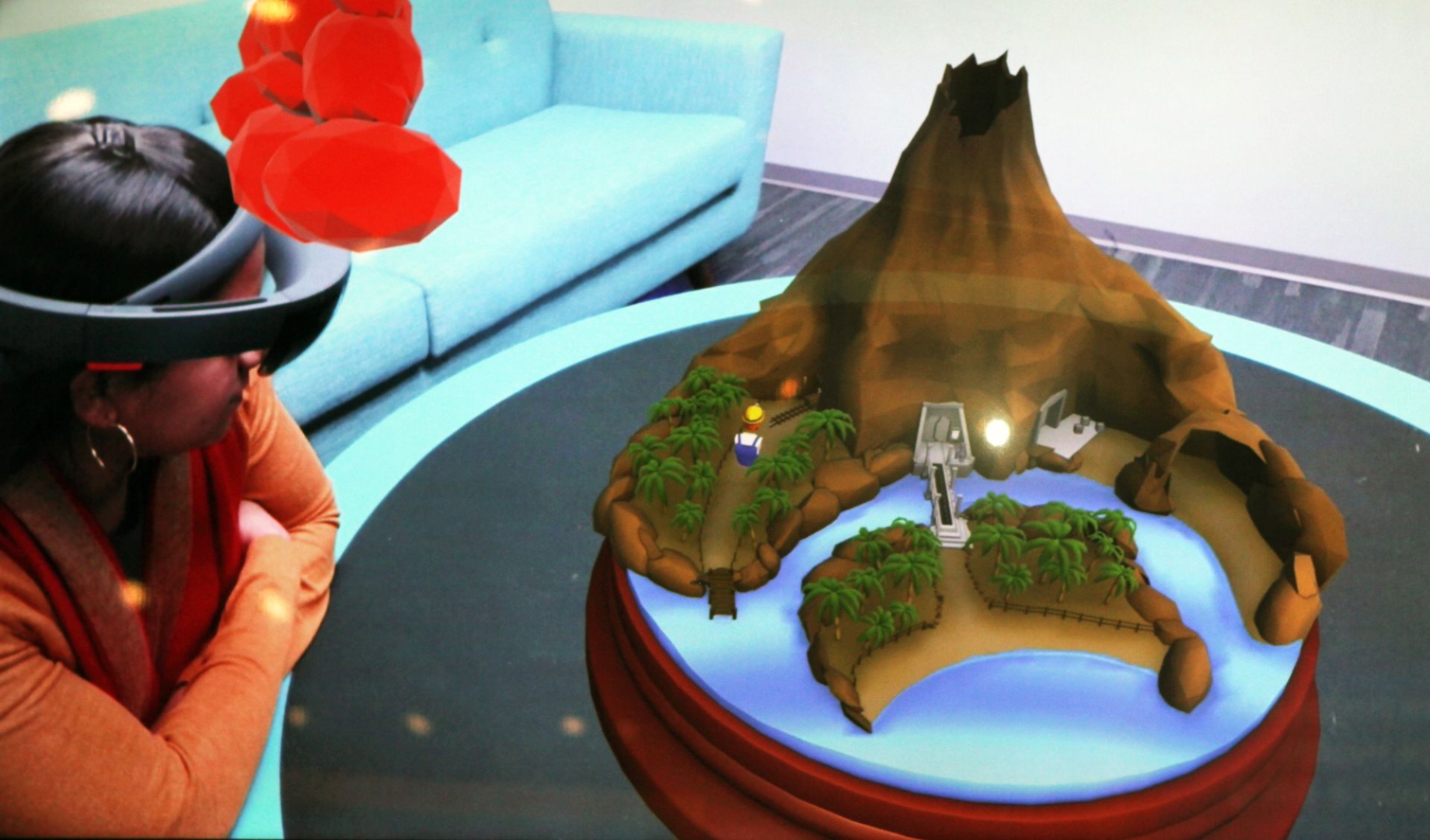

One group is wearing Acer HMDs and is seeing the environment in first-person VR, at human scale. The other group is wearing HoloLens headsets and is seeing the whole thing--an island with a volcano on it--in “dollhouse” form and in mixed reality. That means the island is rendered small enough that it fits onto a large, circular coffee table, and the HoloLens wearers have a god’s-eye view. They can see the island perched on the table, but because the HoloLens has see-through lenses, they can see the real world around it, too.

The HoloLens wearers can see the Acer HMD wearers represented as tiny little characters on the island. The Acer HMD wearers don’t know that the HoloLens wearers are there at all--until the demo’s hosts tell them to look up. There they see that the HoloLens wearers are represented as faceless clouds. The clouds move and flit about, though, as if they’re sentient, because--well, they represent sentient humans.

(Yes, this has an unsettling psychological effect all the way around. We need to talk it out with our therapists.)
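For the technically curious, the relationship between the two views comes down to a change of scale around a fixed point. The following is only a minimal sketch of that idea in Python, not the demo's actual code: the island and table sizes, the `Vec3` type, and both function names are our own assumptions for illustration, and we assume the island's origin sits at the center of the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float


# Hypothetical sizes: the demo's real dimensions were not given.
ISLAND_WIDTH_M = 100.0  # assumed width of the island at human scale
TABLE_WIDTH_M = 1.0     # assumed diameter of the coffee table
DOLLHOUSE_SCALE = TABLE_WIDTH_M / ISLAND_WIDTH_M


def island_to_tabletop(p: Vec3, table_center: Vec3,
                       scale: float = DOLLHOUSE_SCALE) -> Vec3:
    """Shrink a full-size island position onto the miniature on the table."""
    return Vec3(table_center.x + p.x * scale,
                table_center.y + p.y * scale,
                table_center.z + p.z * scale)


def tabletop_to_island(p: Vec3, table_center: Vec3,
                       scale: float = DOLLHOUSE_SCALE) -> Vec3:
    """Inverse mapping: a point above the table, expressed at island scale."""
    return Vec3((p.x - table_center.x) / scale,
                (p.y - table_center.y) / scale,
                (p.z - table_center.z) / scale)


# A VR player standing on the island, and the spot on the table where a
# HoloLens wearer would see that player's tiny avatar.
table = Vec3(0.0, 0.75, 0.0)
vr_player = Vec3(40.0, 1.7, -25.0)
avatar = island_to_tabletop(vr_player, table)
```

The inverse function is what would let a HoloLens wearer's head position be placed high above the VR players as a cloud: a head hovering half a meter over the table ends up tens of meters over the island at these assumed sizes.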

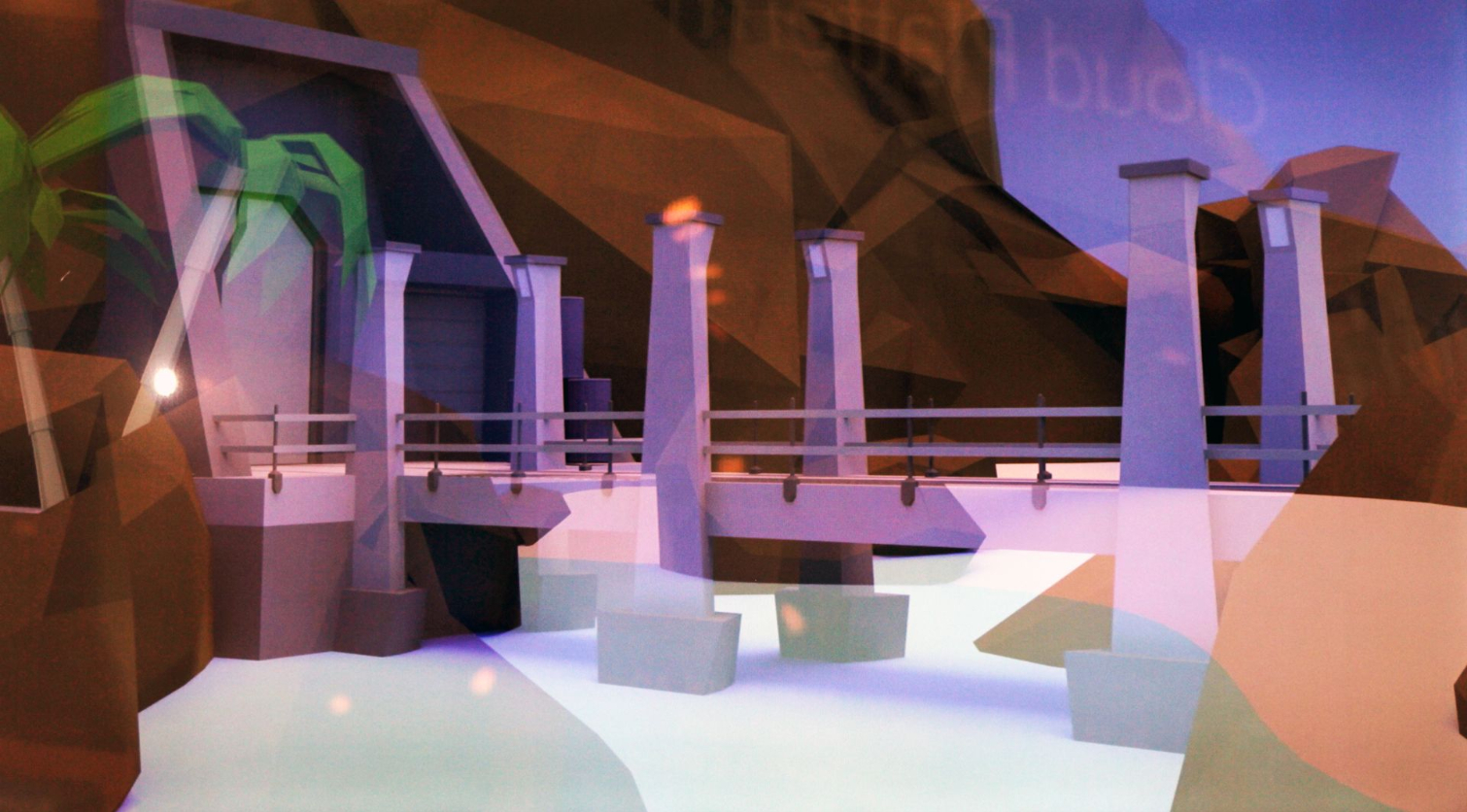

The game is this: The HoloLens folks will see clues pop up over the island. They have to shout the clues to the Acer HMD wearers, who use them to figure out the problem in front of them. For example, one of the clues had to do with pi; the Acer HMD wearers had to punch in 3-1-4 as a code on a door. Another HoloLens-level clue was something about red, green, and blue (RGB, we get it), and the Acer HMD people had to stack three colored blocks in that order to open another door.


You get the gist. There were just a few clues, and then the HoloLens gods watched the Acer HMD mortals enter a volcano. Inside was a rocket. It took off, shot through the top of the volcano, jettisoned its spent fuel tanks, and sailed off through a hole in the ceiling and into outer space.

Fin.

Proving Mixed Reality

Like many tradeshow demos, this one was a little silly and focused more on the fun than the technical details. What it did call attention to, though, and for good reason, is the fact that the experience was 1) running on multiple devices--six to eight or so, depending on how many people were in the demo room at a time--2) running on two different types of devices simultaneously, and 3) offering players two completely different perspectives on the game.

Yet all of the above was perfectly synced up in real time. That is frankly rather astonishing, and more than anything we’ve seen to date, it proves Microsoft’s notion of mixed reality--that mixed reality is the continuum, and that devices with differing functionality exist on different parts of the same continuum, and that those devices can work together because they run on the same platform.

Unity Unity

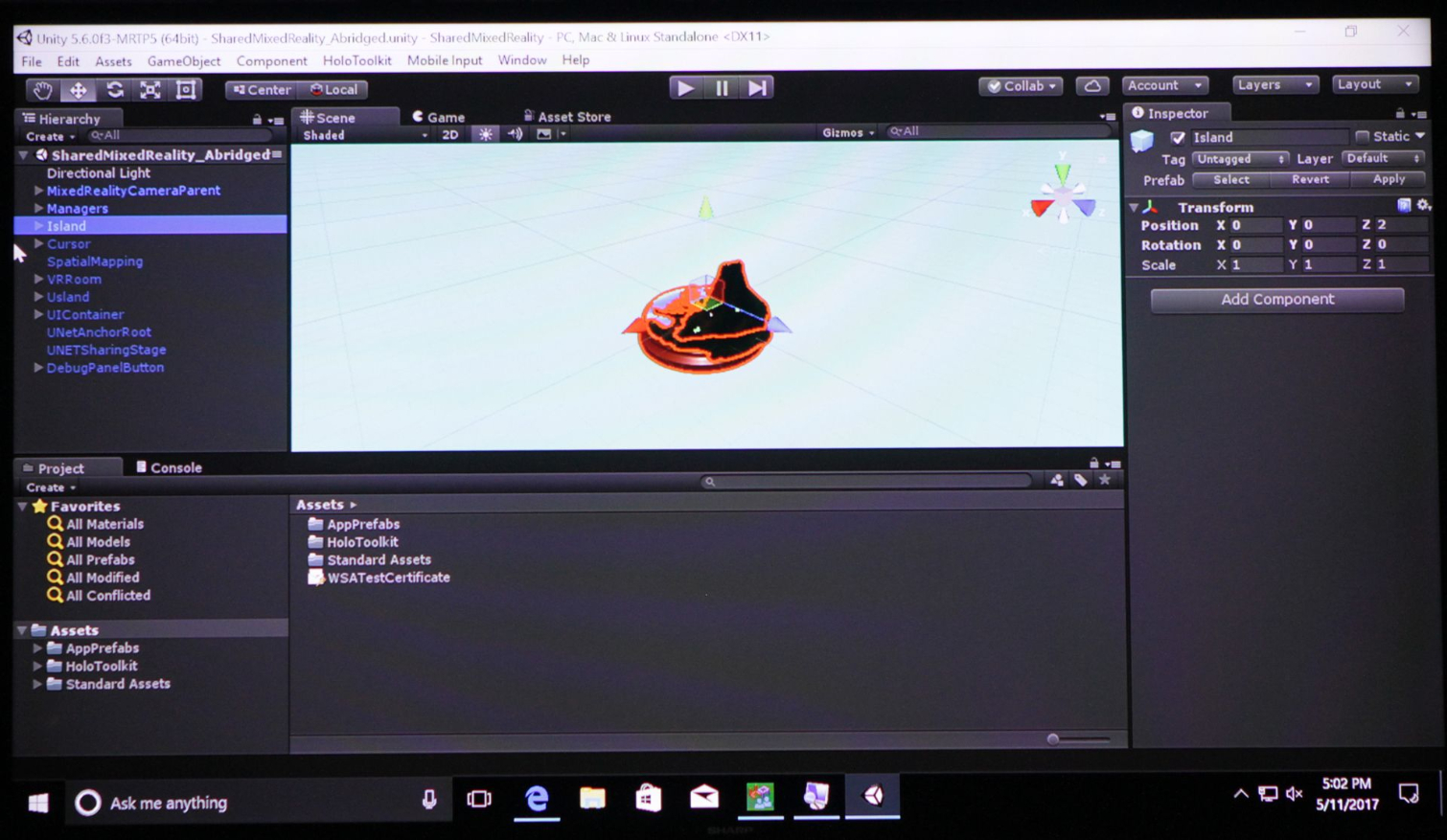

So what manner of devilry was this? To be clear, the happy HoloLens/Windows Mixed Reality (WMR) HMD playtime demo was created with and facilitated by Unity. Presumably, there’s a way to do this in Unreal, too, but at the “Mixed Reality University” event, we were told a bit about how Unity, specifically, made it work.

First, a HoloLens device has to start the session as a host. That device then finds a common reference point for all of the other devices in the session. The first HoloLens sends the location info to a second HoloLens, which must then “find” that same point. Once the two have synced to the same spot, they can share it with the other devices. That spot becomes the origin of the scene, the shared coordinate reference for the HoloLens devices and the Acer HMDs alike.
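To make the idea of a shared reference point a bit more concrete, here is a minimal Python sketch of the underlying math, under some loud assumptions. It is not Unity's or Microsoft's API. The `Pose2D` type and both functions are hypothetical names of ours, and we simplify to a flat floor plane where each device's tracking frame differs from the others only by an offset and a rotation about the vertical axis. Each device records where the shared anchor sits in its own frame; positions are then exchanged relative to the anchor rather than relative to any one device.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Pose2D:
    """Where the shared anchor sits in one device's own tracking frame."""
    x: float    # meters
    z: float    # meters
    yaw: float  # radians, rotation about the vertical axis


def local_to_anchor(anchor: Pose2D, px: float, pz: float) -> Tuple[float, float]:
    """Re-express a point from a device's local frame relative to the anchor."""
    dx, dz = px - anchor.x, pz - anchor.z
    c, s = math.cos(-anchor.yaw), math.sin(-anchor.yaw)
    return (c * dx - s * dz, s * dx + c * dz)


def anchor_to_local(anchor: Pose2D, ax: float, az: float) -> Tuple[float, float]:
    """Re-express an anchor-relative point in a device's local frame."""
    c, s = math.cos(anchor.yaw), math.sin(anchor.yaw)
    return (anchor.x + c * ax - s * az, anchor.z + s * ax + c * az)


# Hypothetical numbers: the host and a second device each "found" the same
# physical spot, but at different coordinates in their own tracking frames.
host_anchor = Pose2D(x=1.0, z=2.0, yaw=0.0)
guest_anchor = Pose2D(x=-3.0, z=0.5, yaw=math.pi / 2)

# The host places an object one meter from the anchor in its own frame...
shared = local_to_anchor(host_anchor, 2.0, 2.0)
# ...and the guest converts the anchor-relative position into its own frame.
on_guest = anchor_to_local(guest_anchor, *shared)
```

Because only anchor-relative coordinates cross the network in this sketch, it does not matter where each headset happened to start tracking. That is one plausible reading of why the second HoloLens has to "find" the same point before anything else can be shared.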

Unity’s Unet (a multiplayer technology first announced back in 2014) underpins the communication aspect of the whole thing.

At the “university” session, we did some more tinkering--enable this, disable that--although, to be honest, game development is a little over our heads. By the end of it, though, we had “created” this island demo, and we were able to run it on all of our group’s HoloLens headsets and Acer HMDs.

Our takeaway from that experience is that it doesn’t seem especially difficult to get these two types of devices working together. When you see “black box” demos (meaning you see the product but get no glimpse under the hood), you can never know if some engineer kludged together a last-minute hack or workaround to make the thing sing. By showing us the simple steps for how this island demo was put together--and mind you, this was done for multiple groups at a time, multiple times per day--Microsoft was reiterating that these are all mixed reality devices, on its continuum, and that they can truly work together.

Seth Colaner previously served as News Director at Tom's Hardware. He covered technology news, focusing on keyboards, virtual reality, and wearables.

-

bit_user Cool story, but that Island demo proves nothing about the devices used.

Seriously, you could've taken either the bird's-eye or on-the-ground viewers and given them a VGA screen + keyboard + mouse with which to do the same task. It might've been a bit more cumbersome, but it would've worked. Importantly, it'd have done so without proving that a VGA screen is some kind of mixed reality device that we hadn't yet noticed.

So, as cool as the demo sounds, it really proves nothing (aside from showing off Unity's nice collaboration feature that probably works just as well with Rift & Vive HMDs).

-

DeadlyDays (replying to bit_user)

Isn't that the point of the demo, that you can interchangeably use any VR headset? Microsoft is a software developer; SDKs being hardware agnostic is a selling point, unless I'm missing something? Them building HoloLens isn't to push hardware sales, it is to push their software?

-

Kahless01 That would be a great way to play Black & White. I think I still have my copy lying around the house.

-

bit_user (replying to DeadlyDays)

DeadlyDays said: Isn't that the point of the demo, that you can interchangeably use any VR headset? Microsoft is a software developer; SDKs being hardware agnostic is a selling point, unless I'm missing something?

The article points out that this is a feature of Unity. Nothing is said about it being enabled by Microsoft, other than the fact that it works with HoloLens and the VR HMDs built to their specification.

DeadlyDays said: Them building HoloLens isn't to push hardware sales, it is to push their software?

Yes, but that case would be undermined if none of MS' APIs were required by the demo. And then there's the point about the demo "proving" the VR HMD is actually MR.

Remember, MS' long-term goal is to lure people into their walled garden. So, to the extent that this demo doesn't depend on MS proprietary technologies, it doesn't directly serve that end.

-

alextheblue (replying to bit_user)

bit_user said: Remember, MS' long-term goal is to lure people into their walled garden.

Yeah those rascals, with the multiplatform releases, multiplatform development tools, encouraging and supporting third-party MR devices, using open multiplatform software like Unity. Working with and for developers, users, and OEMs of all stripes. What are they up to? It can't just be part of their business model, you see - that's too simple. Too obvious! Where's their evil master plan? Mind control? Weather control? Skynet? Oh God it's Skynet isn't it! That's why they originally called their cloud storage "SkyDrive" before changing it to mask their intent!! We're onto you, Microsoft!

-

bit_user (replying to alextheblue)

alextheblue said: Where's their evil master plan? Mind control? Weather control? Skynet? Oh God it's Skynet isn't it! That's why they originally called their cloud storage "SkyDrive" before changing it to mask their intent!! We're onto you, Microsoft!

They want developers to use their APIs and sell apps through their store. It's not much different than Apple or Google. Notice how you can't disable the store, in non-enterprise versions of Windows 10? If your allegiance to MS is so strong you can't even see that, I feel sorry for you. None of these companies is altruistic. IMO, MS is no better than Apple - just weaker.

-

bit_user P.S. The fact that they used Unity for this was probably a fall-back plan. In the future, I expect to see a DirectReality API from them, or something like that.

They want the only path to other platforms to be through them. If you run your app on Android, they want it to use their APIs, sell through their store, and be part of their ecosystem. So, ultimately, you can expect to see them drop Unity.