Intel Delivers 10,000 Aurora Supercomputer Blades, Benchmarks Against Nvidia and AMD

Started in 2015, still not finished.

With a targeted two exaflops of performance, the Intel-powered Aurora supercomputer is expected to overtake the AMD-powered Frontier supercomputer, currently the fastest in the world, and take the lead on the Top500 list of the fastest supercomputers. However, due to Intel's continued delays in delivering the hardware, Aurora has not yet submitted a benchmark to the Top500 committee, so it didn't make the list announced today. Intel shared new details about the system today and announced at the ISC conference that it has delivered 'over' 10,000 operational blades for the Aurora supercomputer, but with the caveat that not all of them contain the final silicon needed for full deployment. We'll cover the details below.

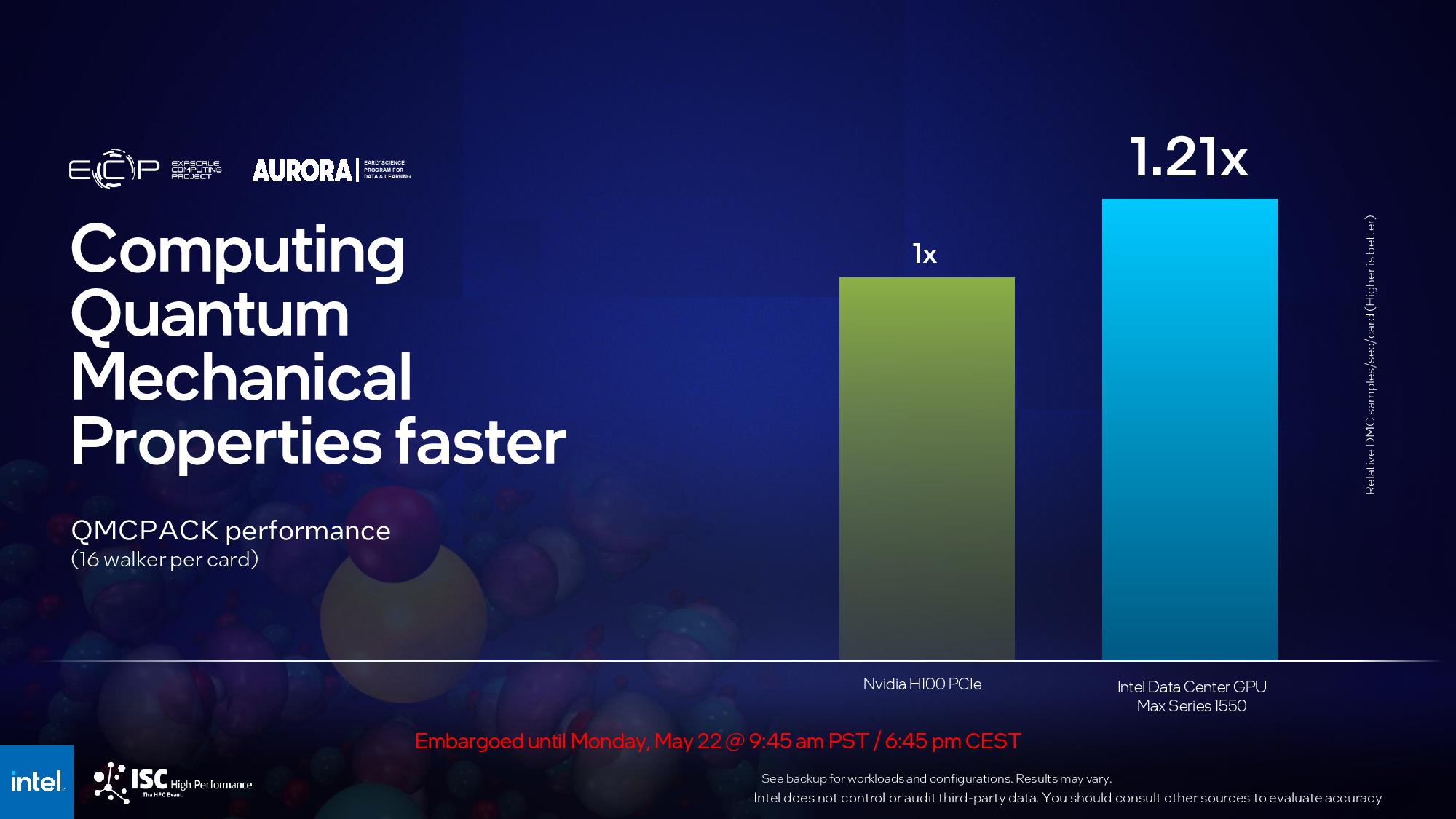

However, Intel says the system will be fully operational later this year, and it shared benchmarks pitting Aurora against AMD- and Nvidia-powered supercomputers, claiming a 2X performance advantage over AMD's MI250X GPUs and a 20% gain over Nvidia's H100 GPUs.

Intel says it has delivered the silicon for 'over' 10,000 blades — both the fourth-gen Sapphire Rapids Xeon chips and Ponte Vecchio GPUs — to the Argonne Leadership Computing Facility (ALCF).

However, Aurora is designed to operate with Intel's HBM-equipped Sapphire Rapids "Xeon Max" chips, which have been perpetually delayed. Due to those delays, Intel initially began shipping ALCF the non-HBM Sapphire Rapids chips, and the facility began populating Aurora with the standard non-HBM Sapphire Rapids as a stop-gap measure.

Intel is now providing the faster HBM-equipped Xeon Max chips to ALCF, but not all of the 10,000 blades it promotes as being delivered have the Max chips under the hood. We inquired with Intel, and company representatives confirmed that not all of the blades are equipped with the final Xeon Max silicon. The company tells us that approximately 75% of the blades contain the final Xeon Max revision of the silicon. Presumably, that is the bottleneck that is holding the system back from submitting a benchmark for the Top500 list.

The system consists of 166 racks with 64 blades per rack, for a total of 10,624 blades, so the 'over' 10,000 delivered blades are likely enough for the system to be operational — just not at full performance.
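As a quick sanity check on those figures, the sketch below multiplies out the rack count and compares it with the delivered blades. It treats Intel's 'over' 10,000 as exactly 10,000, so the delivered share it reports is a lower bound; the variable names are purely illustrative.

```python
# Sanity check of the Aurora blade counts quoted in the article.
# Assumption: "over 10,000" delivered blades is treated as exactly 10,000,
# so the delivered share computed here is a lower bound.
RACKS = 166
BLADES_PER_RACK = 64
DELIVERED_BLADES = 10_000

total_blades = RACKS * BLADES_PER_RACK             # full system size
delivered_share = DELIVERED_BLADES / total_blades   # fraction already shipped
max_outstanding = total_blades - DELIVERED_BLADES   # at most this many still to come

if __name__ == "__main__":
    print(f"Total blades: {total_blades:,}")
    print(f"Delivered: at least {delivered_share:.1%}")
    print(f"Still outstanding: at most {max_outstanding:,}")
```

Run as a script, it prints a total of 10,624 blades, a delivered share of at least 94.1%, and at most 624 blades still outstanding.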

Intel also shared more detailed specs for the Aurora supercomputer, which you can see in the slide above. With 21,248 CPUs and 63,744 Ponte Vecchio GPUs, Intel says Aurora will meet or exceed two exaflops of performance when it comes fully online before the end of the year. The system also features 10.9 petabytes (PB) of DDR5 memory, 1.36 PB of HBM attached to the CPUs, 8.16 PB of GPU memory, and 230 PB of storage capacity that delivers 31 TB/s of bandwidth.
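Dividing those totals by the blade count from the rack figures above shows how the system breaks down per blade and per device. The sketch below does that arithmetic, assuming decimal units (1 PB = 1,000,000 GB) and that memory is spread evenly across identical devices; neither assumption comes from Intel.

```python
# Per-blade and per-device figures implied by Aurora's published totals.
# Assumptions: decimal units (1 PB = 1,000,000 GB) and memory spread evenly
# across devices; the totals themselves are the article's figures.
BLADES = 166 * 64       # 10,624 blades from the rack count
CPUS = 21_248
GPUS = 63_744
DDR5_PB = 10.9
CPU_HBM_PB = 1.36
GPU_MEM_PB = 8.16
GB_PER_PB = 1_000_000

cpus_per_blade = CPUS / BLADES
gpus_per_blade = GPUS / BLADES
ddr5_gb_per_cpu = DDR5_PB * GB_PER_PB / CPUS
hbm_gb_per_cpu = CPU_HBM_PB * GB_PER_PB / CPUS
mem_gb_per_gpu = GPU_MEM_PB * GB_PER_PB / GPUS

if __name__ == "__main__":
    print(f"CPUs per blade: {cpus_per_blade:g}, GPUs per blade: {gpus_per_blade:g}")
    print(f"DDR5 per CPU: ~{ddr5_gb_per_cpu:.0f} GB")
    print(f"HBM per CPU: ~{hbm_gb_per_cpu:.0f} GB")
    print(f"Memory per GPU: ~{mem_gb_per_gpu:.0f} GB")
```

The totals divide evenly into 2 CPUs and 6 GPUs per blade, with roughly 64 GB of HBM per CPU and 128 GB of memory per GPU. DDR5 comes out near 513 GB per CPU, which is within rounding of the 10.9 PB total.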

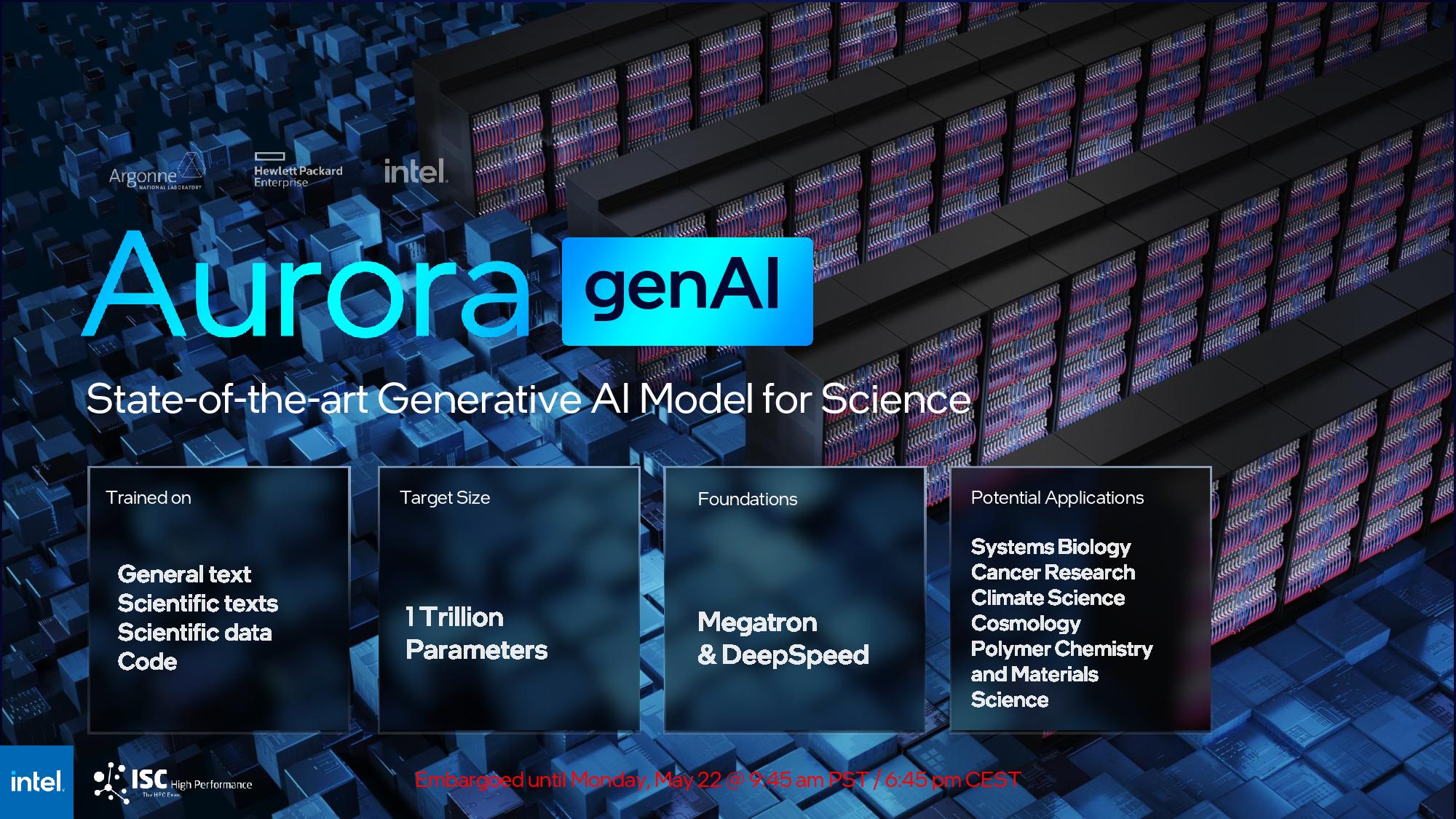

Intel also revealed that Aurora will be used to run generative AI workloads for science. The 'Aurora GPT' large language model will be science-oriented and have 1 trillion parameters, with Megatron and DeepSpeed underpinnings. Intel provided the following summary of the project:

"These generative AI models for science will be trained on general text, code, scientific texts and structured scientific data from biology, chemistry, materials science, physics, medicine and other sources. The resulting models (with as many as 1 trillion parameters) will be used in a variety of scientific applications, from the design of molecules and materials to the synthesis of knowledge across millions of sources to suggest new and interesting experiments in systems biology, polymer chemistry and energy materials, climate science and cosmology. The model will also be used to accelerate the identification of biological processes related to cancer and other diseases and suggest targets for drug design."

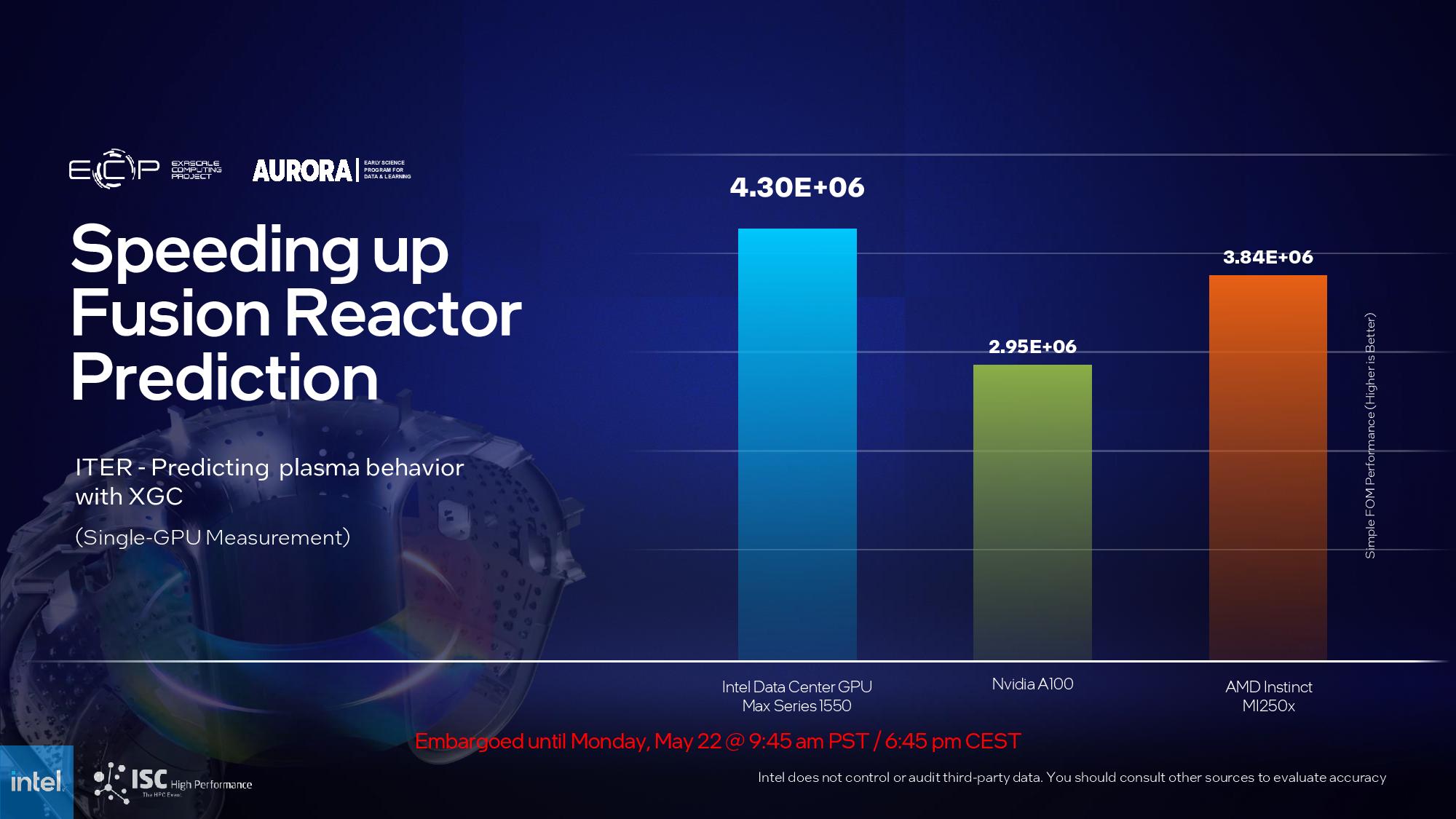

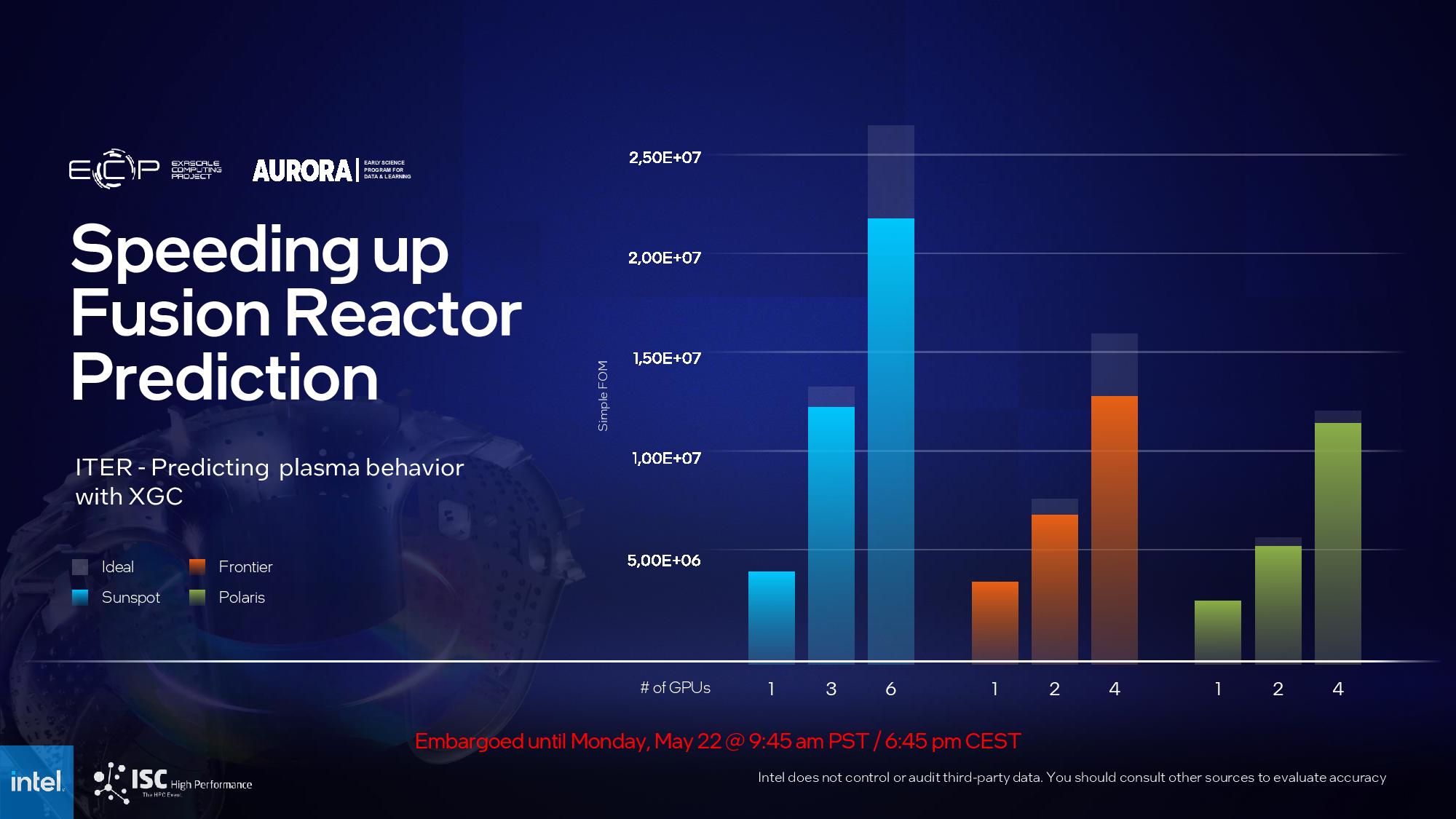

Intel also teased a few benchmarks from the Sunspot system, a smaller two-rack version of Aurora with 128 total nodes. Intel compared Sunspot's performance against extrapolated numbers that represent a 'similarly-sized' Polaris supercomputer with Nvidia A100 GPUs, and the Crusher supercomputer that's powered by AMD's MI250X GPUs. Unfortunately, Intel did not provide test notes or details of these configurations, so take the results with more than the usual grain of salt.

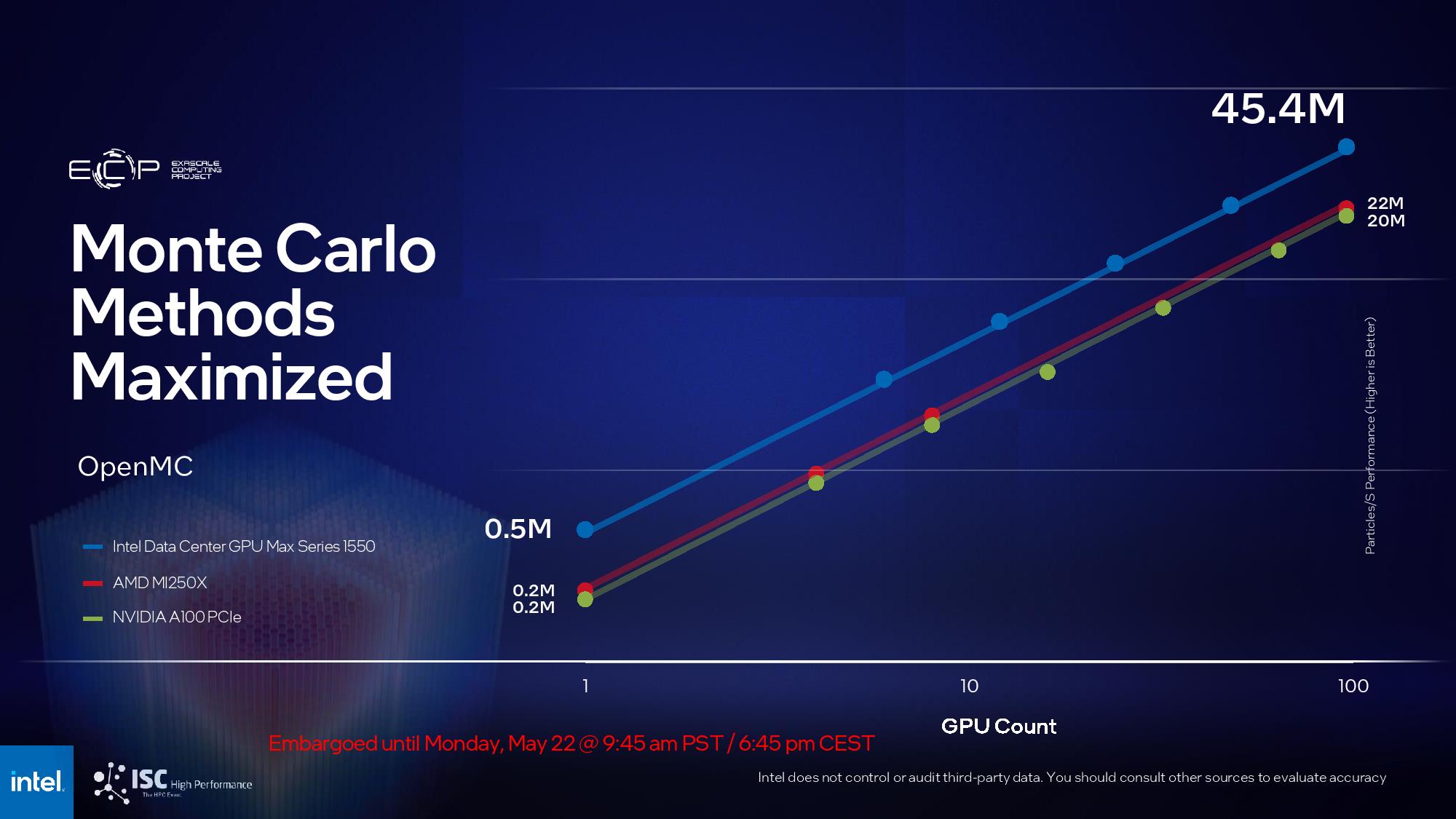

In a single-node test of a reactor prediction workload, Intel claims its system is 45% faster than the Nvidia contender and 12% faster than the AMD system. Turning to scalability metrics, Intel claims that by normalizing the number of total GPUs used in the test systems to 96 GPUs (the AMD and Nvidia nodes have four GPUs apiece, whereas the Intel system has six per node), Sunspot delivers more than twice the performance of both the AMD and Nvidia systems in the Monte Carlo workload. For 90 nodes in the NWChemEx workload, Intel claims it is 72% faster than a 90-node Nvidia-powered Polaris system.
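The normalization Intel describes is simple arithmetic, and it helps to see what it does and does not account for. The sketch below converts the 96-GPU target into node counts for each system, then, purely for illustration, divides the single-node 45% claim by the GPU-count ratio. That second step assumes performance scales linearly per GPU, which is our assumption rather than anything Intel states; the helper `nodes_for` is hypothetical.

```python
# Normalizing a comparison between nodes with different GPU counts.
# GPUs per node come from the article; linear per-GPU scaling is an assumption.
GPUS_PER_NODE = {"Sunspot (Intel)": 6, "Polaris (Nvidia)": 4, "Crusher (AMD)": 4}
TARGET_GPUS = 96


def nodes_for(target_gpus: int, gpus_per_node: int) -> int:
    """Hypothetical helper: nodes needed to reach target_gpus exactly."""
    if target_gpus % gpus_per_node:
        raise ValueError("target GPU count is not a whole number of nodes")
    return target_gpus // gpus_per_node


nodes = {name: nodes_for(TARGET_GPUS, g) for name, g in GPUS_PER_NODE.items()}

# Single-node claim: the Intel node is 45% faster than the Nvidia node.
# Dividing by the GPU ratio (6 / 4) gives a per-GPU figure ONLY if
# performance scales linearly with GPU count.
node_speedup_vs_nvidia = 1.45
gpu_ratio = GPUS_PER_NODE["Sunspot (Intel)"] / GPUS_PER_NODE["Polaris (Nvidia)"]
per_gpu_ratio = node_speedup_vs_nvidia / gpu_ratio

if __name__ == "__main__":
    print(nodes)
    print(f"Naive per-GPU ratio vs. Nvidia: {per_gpu_ratio:.2f}")
```

This prints 16 Sunspot nodes against 24 nodes each for Polaris and Crusher, and a naive per-GPU ratio of 0.97. Under the linear-scaling assumption, the 45% node-level lead is roughly parity per GPU, because the Intel node brings 1.5 times as many GPUs. Real workloads rarely scale perfectly, so treat this as an illustration of why GPU counts matter, not a measurement.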

The Aurora supercomputer was first announced in 2015, with a predicted finish date in 2018. Back then, the system was designed to use the Knights Hill processors that were later canceled. The system has seen numerous redesigns and reschedules in the years since, with the new Aurora being announced in 2019 with one exaflop of performance to be delivered in 2021. Yet another rescheduling in late 2021 claimed the system would deliver two exaflops upon completion, which is now slated for later this year.

The long and winding road continues, but it does finally appear that the end is at least in sight. Intel tells us it will deliver all of the Xeon Max processors to finish the system soon, and that the system will be complete and submit its first Top500 benchmark before the end of the year.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

---

Kamen Rider Blade

Aurora was supposed to be finished in 2018.

It's 2023, and they're promising to finish by the end of the year?

WTF happened with the timetables?

The machine is estimated to consume around 60 MW. For comparison, the fastest computer in the world today, Frontier, uses 21 MW, while Summit uses 13 MW.

So Frontier delivers slightly over 1 ExaFlop for 21 MW of power consumption.

And this Aurora is going to deliver 2 ExaFlops for 60 MW?

Something went horribly wrong here.

thestryker

Seems like it'll be ready for November, so we'll see what the real-world performance looks like. Intel's HPC strategy definitely relies on this being a success, along with OneAPI. I'm looking forward to seeing how the CPU-based HBM is leveraged in the HPC space.

> hotaru251 said: img #2
>
> intel # of gpu; 1, 3, 6,
>
> amd & nvidia # gpu: 1, 2, 4.

They're vendor provided benchmarks so grain of salt, but it's likely based on available configurations. If you look at the numbers Intel's 3 are faster than nvidia's 4 and slightly slower than AMD's 4.

hotaru251

> thestryker said: so grain of salt

Ofc, but when you're comparing specs between stuff, if it isn't 1:1 then you're already doing it wrong.

Not everything scales the same, so even if you do the math based on what's given, that doesn't mean it will actually hold true.

bit_user

> hotaru251 said: img #2
>
> intel # of gpu; 1, 3, 6,
>
> amd & nvidia # gpu: 1, 2, 4.

Good catch. But I think Intel's defense would be that it's a graph intended to show scaling linearity. That's what the shadows are meant to depict. Even then, Nvidia scales better at 4 than Intel does at 3. So that's not really such a great counterpoint.

bit_user

> thestryker said: If you look at the numbers Intel's 3 are faster than nvidia's 4 and slightly slower than AMD's 4.

Do you even know what they're comparing? Polaris is using A100s, which launched 3 years ago and are made on TSMC N7. Ponte Vecchio uses a mix of TSMC N5, N7, and Intel 7.

The other point of note is where I started: cost. It's hard to get pricing details, especially ones not subject to current AI-driven market distortions, but I think you'd find the product cost of each Xe GPU Max is multiple times that of a single A100, even back when it launched.

Today, the only reason you wouldn't buy an H100 is because you can't.