From Opteron to Milan: Crusher Supercomputer Comes Online With New AMD CPUs and MI250X GPUs

AMD's MI250X notches big wins against Nvidia's V100 GPUs

Today, the Oak Ridge Leadership Computing Facility (OLCF) announced that Crusher, a small-scale version of the $600 million Frontier supercomputer that will be the United States' first exascale machine, is now online and generating impressive results. Crusher's 192 HPE Cray EX blades are crammed into 1.5 cabinets that occupy roughly 1/100th the floor space of the previous 4,352-square-foot Titan supercomputer, yet the new system delivers faster overall performance.

Crusher features the same architectural components as the 1.5-exaflop Frontier supercomputer, with each HPE Cray EX blade packing one 64-core AMD EPYC "Trento" 7A53 CPU and four AMD "Aldebaran" MI250X GPUs. Frontier itself, however, won't be available to researchers until January 1, 2023.

In the meantime, researchers are already using Crusher to ready their scientific code for Frontier, with impressive results. Highlights include a 15-fold speedup over the Nvidia- and IBM-powered Summit supercomputer with the Cholla astrophysics code, which has been rewritten for Frontier: a 3-fold gain is chalked up to hardware improvements, and a further 5-fold gain comes from software optimizations. Meanwhile, the NuCCOR nuclear physics code has seen an 8-fold speedup on the MI250X GPUs compared to the Nvidia V100 GPUs used in Summit. The OLCF also announced that the LSMS materials code, which crunches through large-scale simulations of up to 100,000 atoms, has been successfully run on Crusher and will scale to the full Frontier system. Finally, the OLCF touts an 80% performance increase with Transformer deep learning workloads over unspecified previous systems.
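Assuming the hardware and software gains for Cholla are independent, they compound multiplicatively rather than add up, which is how 3x and 5x become 15x. A back-of-the-envelope sketch using only the figures quoted above (the `combined_speedup` helper is our own, purely illustrative):

```python
from math import prod


def combined_speedup(*factors: float) -> float:
    """Independent speedup factors compound by multiplication (assumed independence)."""
    return prod(factors)


hardware_gain = 3.0  # share of Cholla's speedup attributed to Crusher's hardware
software_gain = 5.0  # share attributed to code optimizations for Frontier

total = combined_speedup(hardware_gain, software_gain)
print(f"Cholla speedup vs. Summit: {total:.0f}x")  # Cholla speedup vs. Summit: 15x
```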

It isn't surprising that Crusher's new hardware outperforms the Titan supercomputer: that sprawling older machine came online in 2013 with 200 cabinets housing 18,688 AMD Opteron 6274 16-core CPUs, 18,688 Nvidia Tesla K20X GPUs, and the Gemini interconnect, all of which consumed a total of 8.2 MW of power. The system spanned 4,352 square feet and delivered 17.6 petaFLOPS of sustained Linpack performance and a theoretical peak of 27 petaFLOPS.

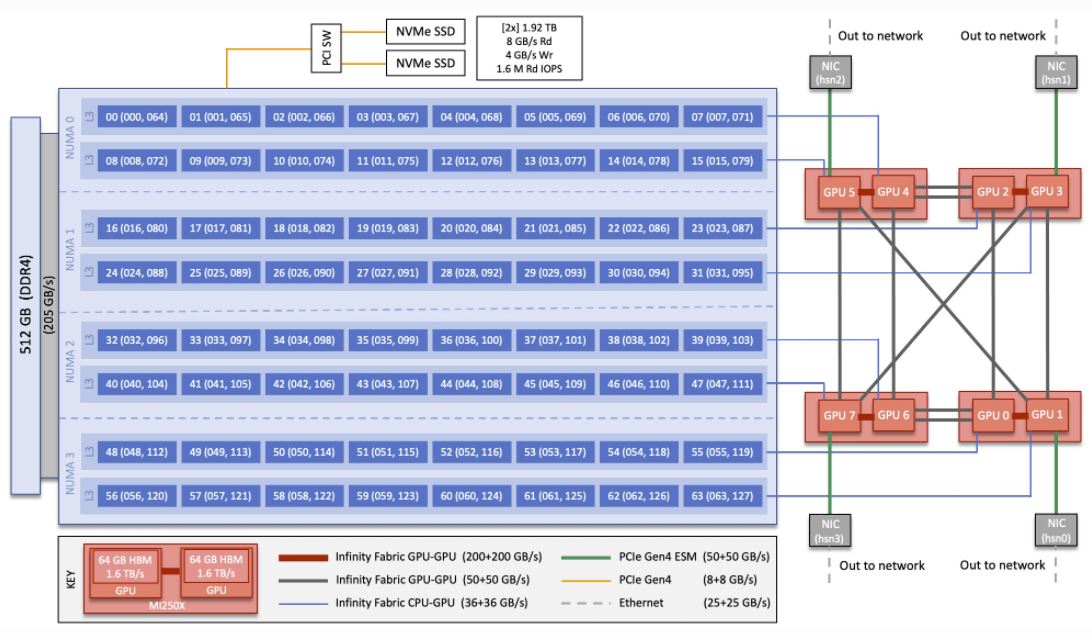

In contrast, Crusher spans only 1.5 cabinets, one with 128 nodes and the other with 64, for a total of 192 nodes that occupy 44 square feet of space. Each water-cooled node comes with a single 64-core custom Zen 3 chip, the EPYC 7A53 "Trento" processor, about which AMD hasn't shared much detail, though we do know it is an EPYC Milan derivative. The chip's I/O die is rumored to employ Infinity Fabric 3.0 to enable a coherent memory interface with the GPUs.
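The footprint claim is easy to sanity-check from the two floor-space figures. A minimal sketch using only the node counts and square footage quoted in the article (the constant names are our own):

```python
TITAN_FLOOR_SQFT = 4_352
CRUSHER_FLOOR_SQFT = 44

# Crusher's 1.5 cabinets: one holds 128 nodes, the other holds 64
nodes_per_cabinet = [128, 64]
crusher_nodes = sum(nodes_per_cabinet)

# Fraction of Titan's floor space that Crusher occupies
floor_ratio = CRUSHER_FLOOR_SQFT / TITAN_FLOOR_SQFT
print(crusher_nodes)         # 192
print(f"{floor_ratio:.2%}")  # 1.01%
```

The result, about 1%, lines up with the "1/100th the size" comparison.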

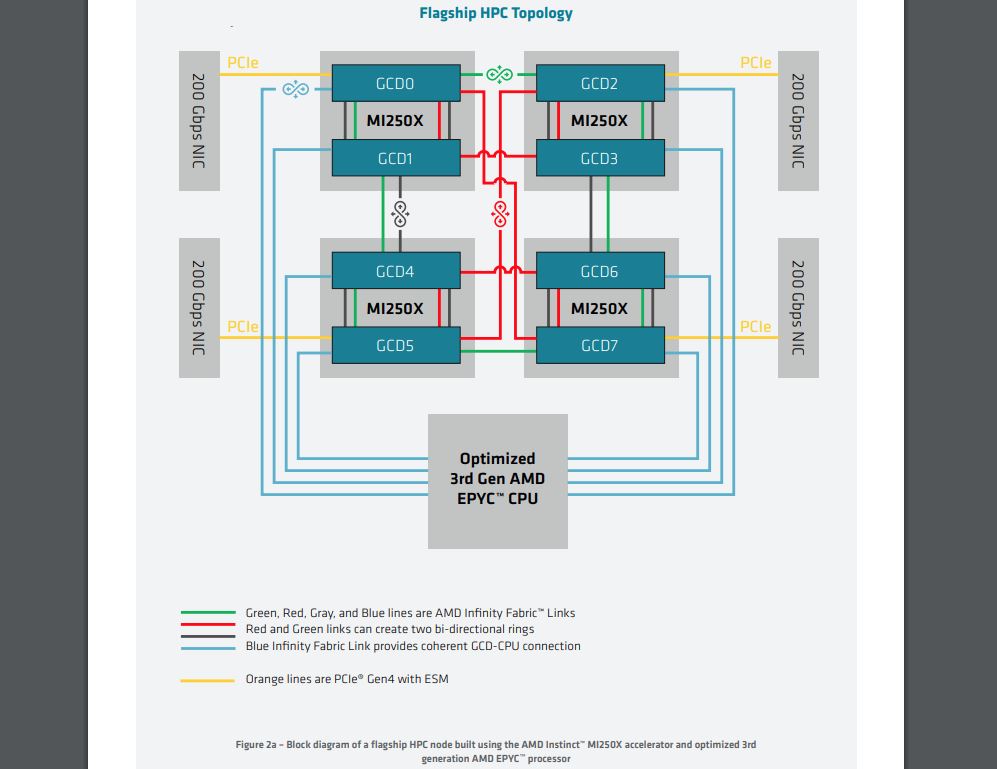

The Trento chip is paired with 512GB of DDR4 memory (205 GB/s of bandwidth) and four AMD MI250X accelerators, each of which is armed with two ~790mm² Graphics Compute Dies (GCDs) that use the CDNA2 architecture and communicate with each other across a 200 GB/s bus. In effect, these four 550W GPUs serve as the equivalent of eight GPUs in each node.

Each Trento CPU is carved up into four NUMA domains. Each domain (and its affiliated two banks of L3 cache) connects to two GCDs (one GPU) with a coherent memory interface at 36+36 GB/s over the Infinity Fabric, yielding 288 GB/s of total CPU-to-GPU bandwidth spread among the eight GCDs in the node.
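The node topology above fits in a few lines of code. This is a sketch under our own reading of the figures: we treat "36+36 GB/s" as 36 GB/s per GCD link in each direction, which is consistent with the 288 GB/s total (eight GCD links at 36 GB/s each). The `CrusherNode` class is hypothetical and illustrative only, not an OLCF or AMD API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CrusherNode:
    numa_domains: int = 4        # Trento CPU is split into four NUMA domains
    gcds_per_numa: int = 2       # each domain links to two GCDs (one MI250X)
    gb_s_per_gcd_link: int = 36  # GB/s per GCD link, per direction (our reading of "36+36")

    @property
    def gcds(self) -> int:
        """Total Graphics Compute Dies visible in the node."""
        return self.numa_domains * self.gcds_per_numa

    def cpu_to_gpu_bandwidth(self) -> int:
        """Aggregate CPU-to-GPU bandwidth in GB/s, summed over all GCD links."""
        return self.gcds * self.gb_s_per_gcd_link


node = CrusherNode()
print(node.gcds)                    # 8
print(node.cpu_to_gpu_bandwidth())  # 288
```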

Meanwhile, each MI250X GPU is connected (via a PCIe root complex) to its own HPE Slingshot 200 Gb/s (25 GB/s) Ethernet NIC that links to the HPE Slingshot network, for 100 GB/s of network bandwidth per node. All of this compute horsepower is connected to a 250 PB storage appliance that uses the IBM Spectrum Scale filesystem and offers a peak of 2.5 TB/s of throughput.
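The per-node network figure comes from a bits-to-bytes conversion: NIC speeds are quoted in gigabits per second, so each 200 Gb/s Slingshot link moves 25 GB/s, and four of them give 100 GB/s. A minimal sketch (constant names are our own):

```python
SLINGSHOT_NIC_GBIT_S = 200  # one 200 Gb/s Slingshot NIC per MI250X
NICS_PER_NODE = 4           # four MI250X GPUs, hence four NICs, per node
BITS_PER_BYTE = 8

per_nic_gbyte_s = SLINGSHOT_NIC_GBIT_S / BITS_PER_BYTE  # gigabits -> gigabytes
node_gbyte_s = per_nic_gbyte_s * NICS_PER_NODE
print(per_nic_gbyte_s, node_gbyte_s)  # 25.0 100.0
```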

The OLCF hasn't yet released power consumption figures or a Linpack result for the Crusher system. However, we know that each of its 768 MI250X GPUs delivers a peak of 53 TFLOPS of double-precision performance, which works out to a theoretical peak of roughly 40.7 petaFLOPS across the GPUs.
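That theoretical peak is simply nodes times GPUs per node times per-GPU FP64 peak. The sketch below reproduces it from the article's figures and compares it with Titan's 27-petaFLOPS theoretical peak. It counts only the GPUs (the Trento CPUs' own FLOPS are ignored), and it is a paper peak, not a Linpack measurement; the constant names are our own.

```python
NODES = 192
GPUS_PER_NODE = 4
FP64_TFLOPS_PER_MI250X = 53  # peak double precision per MI250X, as cited above
TITAN_PEAK_PFLOPS = 27       # Titan's theoretical peak

gpus = NODES * GPUS_PER_NODE
crusher_peak_pflops = gpus * FP64_TFLOPS_PER_MI250X / 1000  # TFLOPS -> PFLOPS
print(gpus, round(crusher_peak_pflops, 1))                 # 768 40.7
print(round(crusher_peak_pflops / TITAN_PEAK_PFLOPS, 2))   # 1.51
```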

Frontier will be the first exascale-class supercomputer in the United States, but only because the oft-delayed, Intel-powered Aurora supercomputer has slipped again, to 2023. However, Intel has raised its performance projection for the Sapphire Rapids- and Ponte Vecchio-powered Aurora from the original 1.5 exaFLOPS to a peak of two exaFLOPS, which would give it the lead over Frontier, at least in peak performance. It would also purportedly tie the AMD-powered, 2-exaFLOP El Capitan system scheduled to come online in 2023.

That means all three US exascale-class systems would be faster than China's two new exascale systems, the Sunway OceanLight and Tianhe-3 supercomputers, both of which have purportedly reached ~1 exaFLOP of performance but haven't been listed on the Top500 for political reasons.


HPE and AMD delivered Frontier on time in 2021, but the system is still undergoing integration and testing, sparking claims that the deployment has run into technical challenges. The timeline is somewhat contested, with the DOE claiming the system is on schedule, but that could simply boil down to semantics ('acceptance' vs. 'available'). Either way, the US Department of Energy says Frontier is on track to be available to researchers in January 2023.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


Soaptrail

I know this is overly simplistic, but they are replacing 18,688 Opteron CPUs with 320 EPYC CPUs. That is a huge reduction!

jeremyj_83

I think it is only 192 EPYCs, since each node has only a single 64-core chip. There are 768 GPUs, though.

Kamen Rider Blade

So, does that mean there's more physical room / cooling capacity at the Titan data center for expansion of the supercomputer?

jeremyj_83

The old Titan took up 4,352 ft² in 200 cabinets and used 8.2 MW of power for a total of 17.6 petaFLOPS of sustained performance (27 petaFLOPS theoretical). The new Crusher needs only 44 ft² in 1.5 cabinets, but power draw isn't given and neither is sustained performance. However, each of the 768 GPUs can theoretically do 53 teraFLOPS, so we have a theoretical 40.7 petaFLOPS. That gives Crusher 1.5x the theoretical performance in 1% of the floor space required for Titan.

We can assume that Crusher will use far less power as well. Each GPU is 550W and each CPU is 280W (assumed, since 64-core Zen 3 parts are usually 280W). If all GPUs and CPUs are running at max draw, that puts consumption at 476kW. Even if that number is doubled for RAM, NICs, and storage, power draw would be around 1MW. In theory, we have a system with 1.5x more performance using 1/8 the power and 1% of the floor space, 10 years later.
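A quick sketch reproducing that estimate, using the same inputs: 550 W per GPU from the article, plus two assumptions that are the commenter's and not OLCF data, namely 280 W per CPU and a flat 2x allowance for RAM, NICs, and storage. Constant names are our own.

```python
GPU_WATTS = 550   # MI250X power figure quoted in the article
CPU_WATTS = 280   # commenter's assumption for a 64-core Zen 3 part
GPUS, CPUS = 768, 192
TITAN_MW = 8.2

compute_kw = (GPUS * GPU_WATTS + CPUS * CPU_WATTS) / 1000  # GPUs + CPUs at max draw
system_kw = 2 * compute_kw  # commenter's rough 2x allowance for everything else
print(round(compute_kw, 1))                   # 476.2
print(round(TITAN_MW * 1000 / system_kw, 1))  # 8.6
```

Titan's 8.2 MW divided by the padded ~0.95 MW estimate lands a bit above 8x, in line with the "1/8 the power" figure.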

Kamen Rider Blade

So I'll take that as a YES! =D