Intel Demos 3D XPoint Optane Memory DIMMs, Cascade Lake Xeons Come In 2018

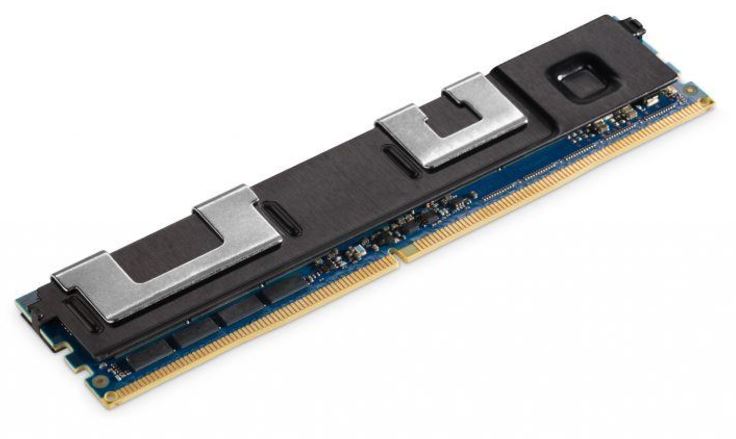

Using 3D XPoint as an intermediary memory layer between DRAM and NAND is one of the most promising aspects of Intel's and Micron's new persistent media, but the speedy new DIMM form factor retreated into the shadows after the Storage Visions 2015 conference. The disappearance fueled many of the rumors that 3D XPoint isn't living up to the initial endurance claims, largely because using the new media as memory requires much more endurance than storage devices need. Aside from Intel CEO Brian Krzanich's casual mention during the company's Q4 2016 earnings call that products were shipping, we haven't seen much new information.

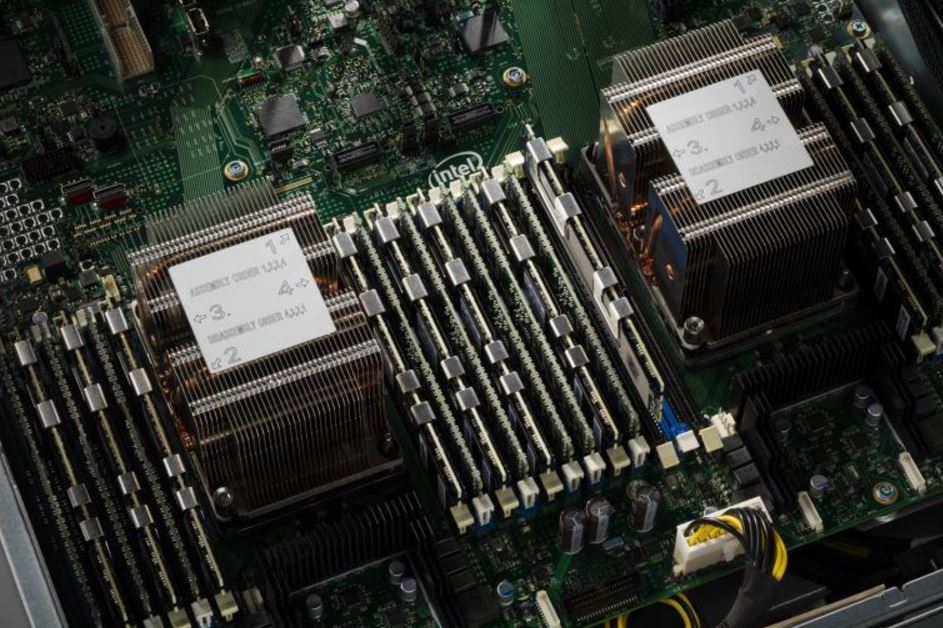

That changed this week as Intel conducted the first public demo of working Optane memory DIMMs at the SAP Sapphire conference. Intel demoed the DIMMs working in SAP's HANA in-memory data analytics platform. Neither company revealed any performance data from the demo, but the reemergence of the Optane DIMMs is encouraging.

3D XPoint-powered Optane DIMMs will deliver more density and a lower price point than DDR4 solutions, which is a boon for the booming in-memory compute space, HPC, virtualization, and public clouds. Intel will ship the DIMMs in 2018 in tandem with the Cascade Lake refresh of the Intel Xeon Scalable family platform.

In the interim, Intel offers its value-add Memory Drive Technology in tandem with its new "Cold Stream" DC P4800X Optane SSDs (which we've tested). The software merges the DC P4800X into the memory subsystem so that it appears as part of a single large memory pool to the host. The software doesn't require any changes to the existing operating system or applications, but it's supported only on Intel Xeon platforms. The Memory Drive Technology is probably one of the most important aspects of the DC P4800X launch, as other methods of enhancing the addressable memory pool tend to be kludgy and require significant optimization of the entire platform at both the hardware and software level, which hinders adoption. A plug-and-play SSD solution will enjoy tremendous uptake, but moving up to the DIMM form factor addressed with memory semantics will provide even more of a benefit. The industry has been hard at work enabling the ecosystem, so servers and applications should greet the new DIMM form factor with open arms.
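The article doesn't describe how Memory Drive Technology decides which data stays in DRAM and which lives on the Optane SSD. As a rough mental model only, the sketch below treats DRAM as an LRU cache of pages sitting in front of a larger, slower tier. The `TwoTierPool` class, the LRU policy, and the latency figures are all hypothetical assumptions for illustration, not Intel's design.

```python
from collections import OrderedDict

# Hypothetical per-access latencies in nanoseconds (illustrative only).
DRAM_NS = 100
SLOW_TIER_NS = 10_000


class TwoTierPool:
    """Toy model: DRAM acts as an LRU cache of pages in front of a slower tier.

    Every page is assumed to live in the slow tier; the most recently used
    `dram_pages` pages are also held in DRAM. This is an assumed policy,
    not how Memory Drive Technology actually works.
    """

    def __init__(self, dram_pages: int) -> None:
        self.dram_pages = dram_pages
        self.dram = OrderedDict()  # page number -> None, oldest first
        self.hits = 0
        self.misses = 0
        self.total_ns = 0

    def access(self, page: int) -> None:
        if page in self.dram:
            # Hit: mark the page as most recently used.
            self.dram.move_to_end(page)
            self.hits += 1
            self.total_ns += DRAM_NS
            return
        # Miss: pay the slow-tier latency and promote the page into DRAM.
        self.misses += 1
        self.total_ns += SLOW_TIER_NS
        self.dram[page] = None
        if len(self.dram) > self.dram_pages:
            # Evict the least recently used page back to the slow tier only.
            self.dram.popitem(last=False)

    def average_ns(self) -> float:
        accesses = self.hits + self.misses
        return self.total_ns / accesses if accesses else 0.0


# Usage: a DRAM tier that holds two pages, with a short access pattern.
pool = TwoTierPool(dram_pages=2)
for page in [1, 2, 1, 3, 1, 2]:
    pool.access(page)
print(pool.hits, pool.misses, pool.average_ns())
```

The point of the model is that the host only sees one pool; the average cost per access depends on how often the working set fits in the fast tier.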

Amazon Web Services recently announced that it's developing new instances that offer up to 16TB of memory for SAP HANA and other in-memory workloads, and new AWS HANA clusters can pack a walloping 34TB of memory spread among 17 nodes. AWS hasn't released an official launch date for the new instances, but in light of the recent SAP HANA demonstration with Optane DIMMs and the fact that the new AWS instances aren't available yet, it's easy to speculate that Intel's finest are a factor in enabling the hefty memory allocation.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

Peter2001 Pardon my ignorance. Does this mean we could have SSDs working as memory as well?

derekullo If you have virtual memory turned on, then you are already using an SSD as memory when you run out of RAM.

derekullo The end goal was to have 3D XPoint as the replacement for both RAM and hard drives/SSDs.

But as the article mentions, the endurance of 3D XPoint isn't up to par with our normal DDR4 RAM.

For example, you may read and write a combined 100 gigabytes a day to RAM loading Windows, playing games, and maybe some work stuff.

But you may only read 30 gigabytes from and write 10 gigabytes to your SSD/hard drive.

Writes are where the endurance factor is applicable.
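The endurance argument boils down to simple arithmetic: a device rated to absorb a given total volume of writes lasts roughly that budget divided by its daily write rate. A minimal sketch, using the comment's hypothetical daily figures; the 1,000TB rating and the assumption that half of the 100GB of RAM traffic is writes are made-up placeholders, not published 3D XPoint or Optane specifications.

```python
def years_until_worn_out(rated_tb_written: float, gb_written_per_day: float) -> float:
    """Years before a device exhausts its rated write budget.

    rated_tb_written is the total terabytes the device is rated to absorb.
    Decimal units are used throughout (1TB = 1,000GB).
    """
    days = rated_tb_written * 1000 / gb_written_per_day
    return days / 365


RATED_TB = 1000  # hypothetical endurance rating, for illustration only

# Storage example from the comment: about 10GB written per day.
print(years_until_worn_out(RATED_TB, 10))
# Hypothetical memory workload: assume half of 100GB/day is writes.
print(years_until_worn_out(RATED_TB, 50))
```

Five times the daily writes means one-fifth the lifetime, which is why write volume rather than read volume drives the endurance question.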

SockPuppet "The end goal was to have 3d Xpoint as the replacement for both ram and hard drives/ssd."

No, it isn't. You've made that up yourself.

"But as the article mentions, the endurance of 3d Xpoint isn't up to par versus our normal ddr4 ram."

No, it didn't. You've made that up yourself.

"For example, you may read and write a combined 100 gigabytes a day to ram loading windows, playing games and maybe some work stuff."

So what?

"But you may only read 30 gigabytes and write 10 gigabytes from your ssd/hard drive."

Good thing Xpoint isn't an SSD or Hard drive, then.

"Writes are where the endurance factor is applicable."

Again, so what? We've established the "endurance factor" came directly from your anus.

urbanj (quoting derekullo): "The end goal was to have 3d Xpoint as the replacement for both ram and hard drives/ssd.

But as the article mentions, the endurance of 3d Xpoint isn't up to par versus our normal ddr4 ram."

Please don't misquote material.

The article had its own assumptions in it, which it seems you are taking as fact.


DerekA_C I don't see how it is going to be more affordable than DDR4. Is anything Intel sells cheap? I mean, really, is there anything that Intel gives an actual deal on? Shoot, they still charge over $110 for a dual-core pile of crap with no Hyper-Threading. And as they sell more of any one unit, they give a sale for a day or two that is no more than $15. Whoopy freaking doody. Price locking and holding a stronghold on the market has always been Intel's strategy; they just find more loopholes and ways of doing it until they get caught and sued again.

derekullo (replying to SockPuppet's post above):

At first I was going to quote where you were wrong, but then I realized you have 212 trolls/responses and no solutions.

My anus is still more helpful than you.

manleysteele This is the usage case presented by Intel. It is not the only usage case that can be imagined, nor is it the best that can be imagined.

I'm not saying that it won't be useful for the people who need to extend their memory space far beyond what is possible or affordable using ram. It obviously would be very useful in this problem domain.

What I want is 1-2TB of bootable storage sitting on the memory bus. I don't know if or when I'll be able to buy that, but unless someone smarter than me (not that steep a hill to climb when it comes to this stuff) comes up with a better usage case that I don't even know I want yet, that's what I'm waiting for.

genz One of the things that people reading this article would realize when they compare the DC P4800X, which is the only device on the market right now, is that it is a completely viable replacement for RAM.

RAM max bandwidth is largely a factor of clock speed. Clock speed is useless in most applications, which is why DDR4-3200 vs. DDR4-1866 vs. DDR4-4000 shows a negligible difference in most games and apps outside of server markets. Latency matters more.
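The bandwidth half of that claim follows from a standard formula: theoretical peak bandwidth per channel is the transfer rate (the "DDR4-xxxx" number, in MT/s) times the 64-bit (8-byte) channel width. A minimal sketch that ignores real-world efficiency losses:

```python
BUS_WIDTH_BYTES = 8  # a standard DDR4 channel is 64 bits wide


def peak_bandwidth_mb_s(transfer_rate_mts: int, channels: int = 1) -> int:
    """Theoretical peak bandwidth in MB/s for DDR memory.

    transfer_rate_mts is the megatransfers-per-second rating. Sustained
    bandwidth in practice is lower than this ceiling.
    """
    return transfer_rate_mts * BUS_WIDTH_BYTES * channels


for rate in (1866, 3200, 4000):
    print(f"DDR4-{rate}: {peak_bandwidth_mb_s(rate)} MB/s per channel")
```

Bandwidth scales linearly with the transfer rate (DDR4-3200 works out to 25,600MB/s per channel), which is the sense in which it is "a factor of clock speed."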

This is also why a 90MB/s-read SSD will perform so much better than a 110MB/s HDD as a system drive. It still takes much longer for data to start coming off the hard drive, as the head has to seek and the disk has to spin into position.
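The SSD-versus-HDD point can be made concrete with a simple model in which every random read pays a fixed access latency plus transfer time. The 90MB/s and 110MB/s figures come from the comment; the 10ms and 0.1ms access latencies and the 4KB read size are ballpark assumptions, not measurements of specific drives.

```python
def read_time_s(num_reads: int, block_kb: float, access_latency_ms: float,
                bandwidth_mb_s: float) -> float:
    """Total seconds to perform num_reads random reads of block_kb each.

    Simple model: each read pays a fixed access latency (seek plus rotation
    for an HDD, lookup overhead for an SSD) plus its transfer time.
    Decimal units are used (1MB = 1,000KB).
    """
    per_read_s = access_latency_ms / 1000 + (block_kb / 1000) / bandwidth_mb_s
    return num_reads * per_read_s


# 10,000 random 4KB reads with assumed access latencies.
hdd = read_time_s(10_000, 4, access_latency_ms=10, bandwidth_mb_s=110)
ssd = read_time_s(10_000, 4, access_latency_ms=0.1, bandwidth_mb_s=90)
print(f"HDD: {hdd:.1f}s  SSD: {ssd:.1f}s")
```

For small reads the transfer term is tiny, so the access latency dominates and the nominally slower SSD finishes far sooner.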

XPoint RAM has 60x lower latency than an SSD: below 10µs, which corresponds to 100,000Hz or 0.1MHz. If you factor in the controller (we are talking about a minimum 375GB device that is an array of 16GB chips in a RAID-like arrangement) and make a standard assumption of a 1GHz ARM CPU running the controller, you need to push the data from about 25 to 32 chips, and defrag, arrange, etc., without ever using more than 100 CPU operations per transfer, minus the clock delay of the actual XPoint chip itself, the controller, the PCIe interface, and the software.
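The latency arithmetic can be checked directly: the frequency equivalent of a latency is its reciprocal, and a controller's cycle budget per access is its clock rate multiplied by that latency. A sketch using the comment's assumptions (a 10µs latency, the value consistent with its 100,000Hz figure; a 1GHz controller; up to 32 chips). The model ignores pipelining and parallel access across chips, which a real controller would use.

```python
def ops_per_second(latency_ns: int) -> float:
    """Back-to-back operations that fit in one second at this latency."""
    return 1_000_000_000 / latency_ns


def cycle_budget(clock_mhz: int, latency_ns: int) -> int:
    """Clock cycles a controller at clock_mhz gets within latency_ns."""
    # 1MHz is one cycle per microsecond, i.e. one cycle per 1,000ns.
    return clock_mhz * latency_ns // 1000


LATENCY_NS = 10_000  # 10 microseconds (assumption from the comment)
CLOCK_MHZ = 1000     # assumed 1GHz ARM controller
CHIPS = 32           # upper end of the 25-32 chip estimate

print(ops_per_second(LATENCY_NS))                    # 100000.0
print(cycle_budget(CLOCK_MHZ, LATENCY_NS))           # 10000
print(cycle_budget(CLOCK_MHZ, LATENCY_NS) // CHIPS)  # 312
```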

Let's compare this to DDR4. The fastest DDR4-4000 comes in at CAS 10 or 11. DDR4-4000 transfers data at 4000MT/s on a 2000MHz clock, so one cycle is 0.5ns, and 10 cycles is 5ns. But any time you actually measure this with SiSoftware Sandra, you get 10 to 30ns, about 2 to 6 times slower. Why? The memory controller's latency isn't counted in the CAS math for RAM, but it is counted in the math for XPoint, since the "memory" controller for that sits on the PCIe card. There are no XPoint memory controllers in CPUs today, so the slowest element is being completely ignored on the DDR side of the race.
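Converting CAS latency from clock cycles to nanoseconds is straightforward, and it also shows why a higher DDR4 rating doesn't automatically mean lower latency. DDR transfers data twice per clock, so the clock in MHz is half the transfer rate. A minimal sketch; the CL16 and CL15 pairings are common example timings chosen for illustration.

```python
def cas_latency_ns(transfer_rate_mts: int, cas_cycles: int) -> float:
    """CAS latency in nanoseconds for DDR memory.

    The clock runs at half the transfer rate, so one cycle lasts
    1000 / (rate / 2) = 2000 / rate nanoseconds.
    """
    return cas_cycles * 2000 / transfer_rate_mts


print(cas_latency_ns(4000, 10))  # 5.0
print(cas_latency_ns(3200, 16))  # 10.0
print(cas_latency_ns(2133, 15))  # about 14.06
```

This is only the CAS component; a full load-to-use measurement like the one Sandra reports also includes time spent in the cache hierarchy, the memory controller, and row activation.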

I would put money down that once the 'controller' becomes an actual XPoint slot and IMC on the board, that will go down to DDR4 levels of latency. I would put more money down that, at that point, swap memory that no longer carries the latency of your SSD on top of your RAM will make your actual user experience far better, even without extra bandwidth. Why? Look at the first 3.5-inch SSDs and how they kicked HDDs' butts with almost the same read/write performance.

derekullo (replying to genz's post above):

The phrase you are looking for is IOPS.

A typical hard drive delivers between 60 and 100 IOPS, depending on queue depth.

http://www.storagereview.com/wd_black_4tb_review_wd4001faex

A high-end SSD such as the Samsung 850 Pro delivers around 10,000 IOPS at a queue depth of 1, with a read speed of about 500 megabytes a second.

http://www.storagereview.com/samsung_ssd_850_pro_review

So even though the drive's total bandwidth is only about 5 times higher (100 megabytes a second versus around 500 megabytes a second), the responsiveness, which is directly related to IOPS, is over 100 times better: 60-100 IOPS versus 10,000 IOPS.

IOPS is closely related to latency, but it is much easier for some people to understand than saying one operation every 10 milliseconds versus one every 0.1 milliseconds.
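At a queue depth of 1, IOPS and latency are two views of the same number: average latency is the reciprocal of IOPS. More generally, Little's law gives average latency as queue depth divided by IOPS. A sketch using the figures quoted in this post:

```python
def avg_latency_ms(iops: float, queue_depth: int = 1) -> float:
    """Average per-operation latency implied by an IOPS figure (Little's law)."""
    return queue_depth / iops * 1000


drives = [("HDD, low end", 60), ("HDD, high end", 100), ("Samsung 850 Pro, QD1", 10_000)]
for name, iops in drives:
    print(f"{name}: {iops} IOPS -> {avg_latency_ms(iops):.2f} ms per operation")

print(f"Ratio: {10_000 / 100:.0f}x to {10_000 / 60:.0f}x")
```

The ratio works out to roughly 100x to 170x between the hard drive and SSD figures, even though their bandwidth differs by only about 5x.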