Intel Launches New Xeon CPU, Announces Deep Learning Inference Accelerator

Intel provided a broad set of updates at the SC16 supercomputing conference. The company continues to be the dominant CPU supplier for supercomputing applications, although both AMD and IBM still have a presence, albeit a declining one. Surprisingly, the biggest threat to Intel's dominance in the segment doesn't stem from the CPU competition; it comes from Nvidia's GPUs. Nvidia's Pascal architecture promises to increase the pressure due to its enhanced mixed-precision capabilities.

Datacenter and HPC applications are quickly adopting AI-centric architectures, and Intel has intensified its focus on its Xeon Phi Knights Landing (KNL) and Knights Mill (KNM) products as workloads become more GPU-friendly. Xeon Phi products provide the benefit of additional capabilities, such as bootability and integrated networking, which Intel hopes will help stave off the GPU competition. Intel announced a new CPU at the show and also brought forth its new FPGA-powered Deep Learning Inference Accelerator.

Intel Xeon E5-2699A

Intel is diversifying its portfolio with Xeon Phi products and Altera-powered FPGAs, but CPUs remain the bread and butter. The company introduced its new Intel Xeon E5-2699A v4, which is a newer, faster version of the existing Broadwell E5-2699 v4. Like its predecessor, it features 22 cores and 55MB of L3 cache, but Intel increased the base clock from 2.2GHz to 2.4GHz. The boost clock also moves forward in lockstep from 3.4GHz to 3.6GHz. The company cited vague improvements to the now-mature 14nm process that eke out a 4.8% gain in LINPACK performance.

The announcement is somewhat underwhelming. The E5-2699A v4 carries an MSRP of $4,938, which is a hefty 20% increase over the $4,115 for its predecessor. A big price increase for a relatively small performance gain may not float all boats, but multiple CPUs in a single server platform magnify the impact (albeit with some loss to scaling). This might move the needle enough to boost application license utilization for "normal" users, which would justify the price. At the bleeding edge of HPC, an additional 4.8% gain will certainly generate some interest, but the Purley platform is on the near horizon, so the window of interest may be short.
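The price-versus-performance trade-off is simple arithmetic. As a rough sketch in Python, using only the list prices, base clocks, and Intel's claimed 4.8% LINPACK gain quoted in this article (the function names are ours, for illustration only):

```python
def percent_change(old, new):
    """Return the change from old to new as a percentage of old."""
    return (new - old) / old * 100.0


def perf_per_dollar_change(perf_gain_pct, price_gain_pct):
    """Return the percentage change in performance per dollar."""
    ratio = (1 + perf_gain_pct / 100.0) / (1 + price_gain_pct / 100.0)
    return (ratio - 1) * 100.0


price_increase = percent_change(4115, 4938)      # MSRPs quoted in the article
base_clock_increase = percent_change(2.2, 2.4)   # base clocks in GHz
linpack_gain = 4.8                               # Intel's claim, in percent

print(f"Price increase:         {price_increase:.1f}%")
print(f"Base clock increase:    {base_clock_increase:.1f}%")
print(f"LINPACK per dollar:     {perf_per_dollar_change(linpack_gain, price_increase):+.1f}%")
```

On list price alone, LINPACK performance per dollar drops by roughly 13%, so any value case has to come from elsewhere in the system budget, such as software licensing.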

Speaking of Purley, Intel also has its Skylake-EP, which is the next-generation Xeon Purley platform, on display at the show. Intel has general availability slated for mid-2017. The new chips boast support for AVX-512 instructions, which accelerate floating-point calculations and encryption algorithms. Intel also confirmed that the new chips will have integrated Omni-Path capabilities (as we suspected), a key part of Intel's strategy to gain a foothold in the lucrative networking market.

Omni-Path Updates

Intel stated that its Omni-Path Architecture (OPA) has become the standard for 100Gbps systems since it began shipping nine months ago. Other networking giants might contest that claim, but Intel says OPA powers 28 of the Top500 supercomputers and accounts for 66% of the overall 100Gbps market.

OPA does bring several advantages. On-package implementations for both Knights Landing/Mill (KNL/KNM) and Xeon Purley processors reduce the amount of hardware required for bleeding-edge networking; for example, fewer switches are needed. Intel claims OPA reduces cost by 37%. According to Intel, OPA also delivers 9% higher performance than competing alternatives, and its error detection and correction features don't add latency.

Intel's current stable of 100 deployed OPA clusters may seem small, but penetration should balloon when it becomes a commonplace addition to the Purley and KNL platforms. Intel is now ramping the -M KNL variant, which features the integrated OPA adapter. Intel also has its silicon photonics in the pipeline, so we expect OPA to continue to gain traction, particularly in the HPC market.

AI Developments

GPUs are an irreplaceable part of today's supercomputer hierarchy, but they require a CPU-driven platform, which is a good thing for Intel. One of Intel's key developments is its 72-core Knights Landing (KNL) platform, which it claims offers 5x the performance and 8x the power efficiency of competing previous-generation GPU solutions. KNL is also bootable, which is a key value proposition. Intel noted that the KNL line is moving along swimmingly and that the 72-core monsters have now found homes in more than 50 large-scale deployments. Nine of those are on the supercomputing Top500 list. The processors have been in high demand and will be widely available by mid-2017.

Knights Mill, which is the next iteration of the Knights Landing platform, is coming in 2017. Few details are known about the AI-centric design, other than it supports mixed precision to improve machine learning and claims to offer higher single precision performance, as well.

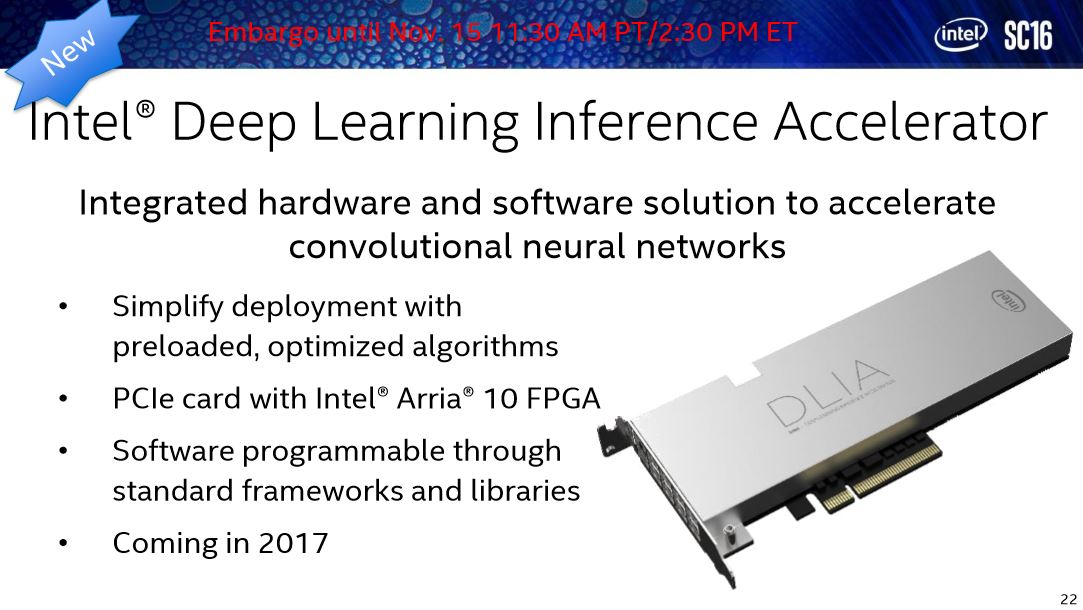

Intel announced its Deep Learning Inference Accelerator, which slots in as a PCIe device. The card houses an Intel Arria 10 FPGA and comes with a set of tools designed to demystify and simplify AI deployments. Intel positions the Xeon Phi KNL/KNM for training and the new accelerator for the inference side of the workload.

The AI ecosystem is experiencing rapid development. For instance, TensorFlow is just celebrating its first birthday as an open source program. As with any new technology enjoying a rapid uptake and development, changes are coming quickly. FPGAs are reprogrammable, so they are well suited for rapid updates. Also, the cards come loaded with optimized algorithms and support standard frameworks and libraries, which is intended to speed adoption. Intel announced the card this week, but it will not be available until 2017.

Details are light (well, non-existent) on the new Knights Mill and Deep Learning Inference Accelerator, but we expect to learn more during Intel's AI Day event November 17.

EDIT 11/17/2016: Clarified Intel test result comparison.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

nutjob2

They should have called it the BrainBurst architecture.

Or maybe just admit it's a bunch of overpriced FPUs? Nah. Intel is desperate to stay relevant and most of their R&D budget is spent in their marketing department.

bit_user

Speaking of Purley, Intel also has its Skylake-EP, which is the next-generation Xeon Purley platform, on display at the show. Intel has general availability slated for mid-2017.

WHY IS THIS TAKING SO @#$!% LONG?!?

The new chips boast support for AVX-512 instructions, which accelerate floating-point calculations and encryption algorithms.

Note that Intel has partitioned AVX-512 instructions into different groups. Xeon Phi v2 is the first product to support AVX-512, but it doesn't support the entire set. The Purley-compatible Skylakes are actually slated to support a different subset. So, it's not strictly a generational division, unlike previous vector instruction set extensions.

https://en.wikipedia.org/wiki/AVX-512#Instruction_set
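To make the subset point concrete, here is a minimal Python sketch that checks which AVX-512 groups a CPU reports. It assumes a Linux system, where /proc/cpuinfo lists feature flags such as avx512f. The two groupings reflect the publicly documented subsets for Knights Landing (F, CD, ER, PF) and the Skylake server parts (F, CD, BW, DQ, VL); the labels and helper names are made up for illustration.

```python
# Flag names as Linux prints them on the "flags" line of /proc/cpuinfo.
# The subset labels are illustrative, not Intel's own terminology.
SUBSETS = {
    "Knights Landing": {"avx512f", "avx512cd", "avx512er", "avx512pf"},
    "Skylake server": {"avx512f", "avx512cd", "avx512bw", "avx512dq", "avx512vl"},
}


def avx512_flags(cpuinfo_text):
    """Return the set of avx512* flags found in /proc/cpuinfo-style text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            _, _, values = line.partition(":")
            found.update(flag for flag in values.split() if flag.startswith("avx512"))
    return found


def matching_subsets(flags):
    """Name each subset that the given flags fully cover."""
    return [name for name, needed in SUBSETS.items() if needed <= flags]


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as handle:
            flags = avx512_flags(handle.read())
    except OSError:
        flags = set()  # not Linux, or the file is unreadable
    print("AVX-512 flags:", sorted(flags) or "none reported")
    print("Covers:", matching_subsets(flags) or "neither subset")
```

Only avx512f and avx512cd appear in both sets, which is why code built for one subset is not guaranteed to run on the other.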

One of Intel's key developments is its 72-core Knights Landing (KNL) platform, which it claims offers 5x the performance and 8x the power efficiency of the competing GPU solutions.

You shouldn't just repeat these specious claims without qualification. As these measurements were against Kepler-generation GPUs, they weren't really "competing GPU solutions".

KNL is also bootable, which is a key value proposition.

I think the bigger value proposition is that they can integrate with legacy codebases, without the sort of rewrite necessary to exploit CUDA. You can program them in any language, and run basically any existing software on them.

But for people who prize FLOPS/$ or FLOPS/W above all else, GPUs (and FPGAs) are still where it's at. ...unless you can afford to make your own ASIC, like Google.

-

Paul Alcorn

Yes, in the article I linked we specify that the claims aren't against newer GPUs (copy/pasted below), and we noted in this piece that those are Intel's claims, but I will add "previous-gen".

It is notable that these results are from internal Intel testing, some of which the company generated with previous-generation GPUs. Intel indicated this is due to limited sample availability.

GPUs and FPGAs are still king from a FLOPS/$ and FLOPS/W perspective, but I'm curious about a comparison to a GPU that also includes the host CPU in the equation, as the GPU still requires one. The GPU would probably still win, but it would be interesting to see a more direct comparison.
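Including the host in that comparison amounts to adding its power draw to the accelerator's before dividing. A rough Python sketch follows; every number in it is a made-up placeholder rather than a measurement of any real product, and it assumes the host contributes power but no useful FLOPS.

```python
def gflops_per_watt(accel_tflops, accel_watts, host_watts=0.0):
    """Efficiency of an accelerator, optionally charging it for its host CPU.

    Assumption: the host only adds power; its own FLOPS are ignored.
    """
    return accel_tflops * 1000.0 / (accel_watts + host_watts)


# Placeholder values chosen only to show the effect, not real specifications.
ACCEL_TFLOPS = 10.0
ACCEL_WATTS = 250.0
HOST_WATTS = 150.0

card_only = gflops_per_watt(ACCEL_TFLOPS, ACCEL_WATTS)
with_host = gflops_per_watt(ACCEL_TFLOPS, ACCEL_WATTS, host_watts=HOST_WATTS)

print(f"Card only: {card_only:.1f} GFLOPS/W")
print(f"With host: {with_host:.1f} GFLOPS/W")
```

A bootable part would be evaluated with host_watts left at zero, which is what makes the system-level comparison closer than the card-level one.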