Marvel's Avengers Gets DLSS 2.1 Support, and We Tested It

4K 60fps at max settings is possible on 2080 Super and above, sort of.

Marvel's Avengers came out last month, and it can be a bit of a beast to run, especially if you want to run it at maximum quality and aren't running one of the best graphics cards from the top of our GPU benchmarks hierarchy. While the GeForce RTX 3080 and GeForce RTX 3090 perform quite well, previous-generation cards like the GeForce RTX 2080 Ti can struggle. All the shadows, volumetric lighting, reflections, ambient occlusion, and other effects can tax even the burliest of PCs, particularly at 4K. But DLSS 2.1 support means the game now has an ultra performance mode, which renders one-ninth of the output pixels (9X upscaling). You'd think that would make the game a piece of cake to run on any RTX GPU, but that's not quite true, at least not in our initial testing.

This isn't a full performance analysis, so I'm only testing at 4K using the very high preset, with the enhanced water simulation and enhanced destruction options set to very high as well (these last two are Intel extras, apparently). For ease of testing, I'm just running around the Chimera, the floating helicarrier that serves as your home base. A few settings aren't quite maxed out with the very high preset, but it's close enough for some quick benchmarks.

The test PC is my usual Core i9-9900K, 32GB DDR4-3600, and M.2 SSD storage. Since DLSS is only available on Nvidia RTX GPUs, that limits GPU options quite a bit. I tested everything from the RTX 2060 up through the RTX 3090, though I skipped the older 2070 and 2080 and only included the Super variants. Let's start with the non-DLSS performance.

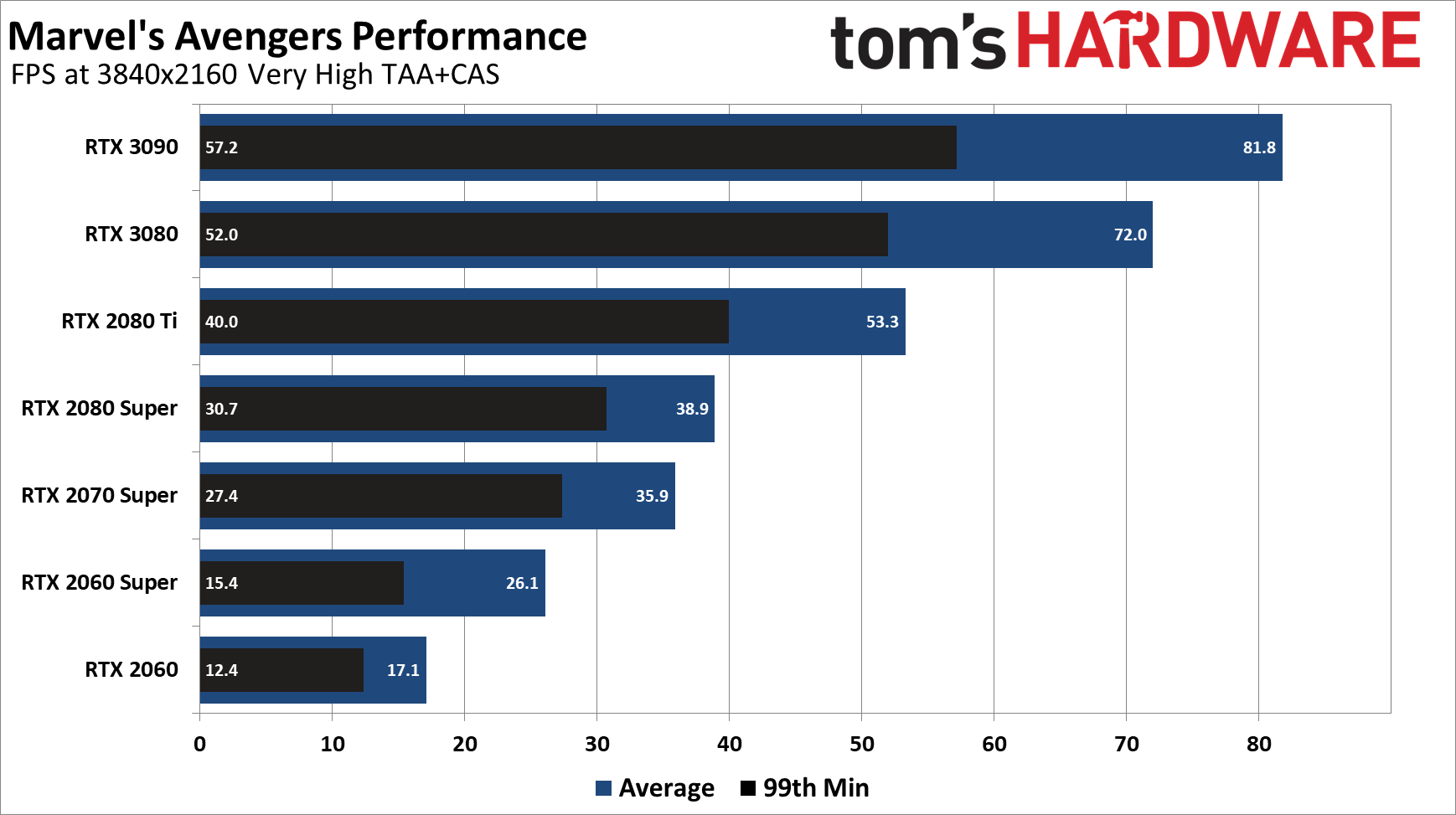

Okay then, RTX 3090 and RTX 3080 handle this pretty well, but everything else comes up well short of 60 fps. It's particularly alarming to see how badly the RTX 2060 does here, and I assume the 6GB VRAM is at least partially to blame. The thing is, the RTX 2060 Super has 8GB VRAM, and it's still coming in below 30 fps. There's a bit more variability between runs than with built-in benchmarks, but I've checked each card with multiple runs, and the results are relatively consistent.

In our usual test suite of nine games, the 2060 Super ends up about 15% faster than the RTX 2060 at 4K ultra. In Marvel's Avengers, it's a 53% advantage for the 2060 Super. That same greater-than-usual gap applies elsewhere as well. The 2070 Super is 38% faster than the 2060 Super, whereas in our normal suite it's only 20% faster. But then the 2080 Super is only 8% faster than the 2070 Super (normally, it's a 15% gap). The 2080 Ti opens things back up, however: it's 37% faster than the 2080 Super, vs. 20% in our regular suite. The RTX 3080 does just about normal, beating the 2080 Ti by 35%, and the RTX 3090 is 14% faster than the 3080.
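To see how those card-to-card gaps stack up, the quoted percentages can be chained into a cumulative figure relative to the RTX 2060. This is only a rough sketch: the step values are the rounded numbers from the paragraph above, and the names `AVENGERS_STEPS` and `cumulative_speedup` are made up for illustration.

```python
# Card-to-card gains quoted in the text for Marvel's Avengers at 4K
# (very high preset, no DLSS). Each value is the fractional speedup
# over the previous card in the list; the baseline is the RTX 2060.
AVENGERS_STEPS = [
    ("RTX 2060 Super", 0.53),
    ("RTX 2070 Super", 0.38),
    ("RTX 2080 Super", 0.08),
    ("RTX 2080 Ti", 0.37),
    ("RTX 3080", 0.35),
    ("RTX 3090", 0.14),
]


def cumulative_speedup(steps, baseline="RTX 2060"):
    """Chain per-step gains into performance relative to the baseline card."""
    relative = {baseline: 1.0}
    running = 1.0
    for card, gain in steps:
        running *= 1.0 + gain  # gains compound multiplicatively
        relative[card] = running
    return relative


if __name__ == "__main__":
    for card, factor in cumulative_speedup(AVENGERS_STEPS).items():
        print(f"{card:15s} {factor:4.2f}x the RTX 2060")
```

Chained this way, the RTX 3090 lands at roughly 4.8 times the RTX 2060 in this test. Because the inputs are rounded percentages, treat the result as approximate.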

That's the baseline performance, with temporal anti-aliasing and AMD FidelityFX CAS (contrast aware sharpening), both of which have a relatively low impact on performance. Enabling DLSS disables both TAA and CAS, and there are four modes available, so let's see what happens with the higher quality DLSS modes first.

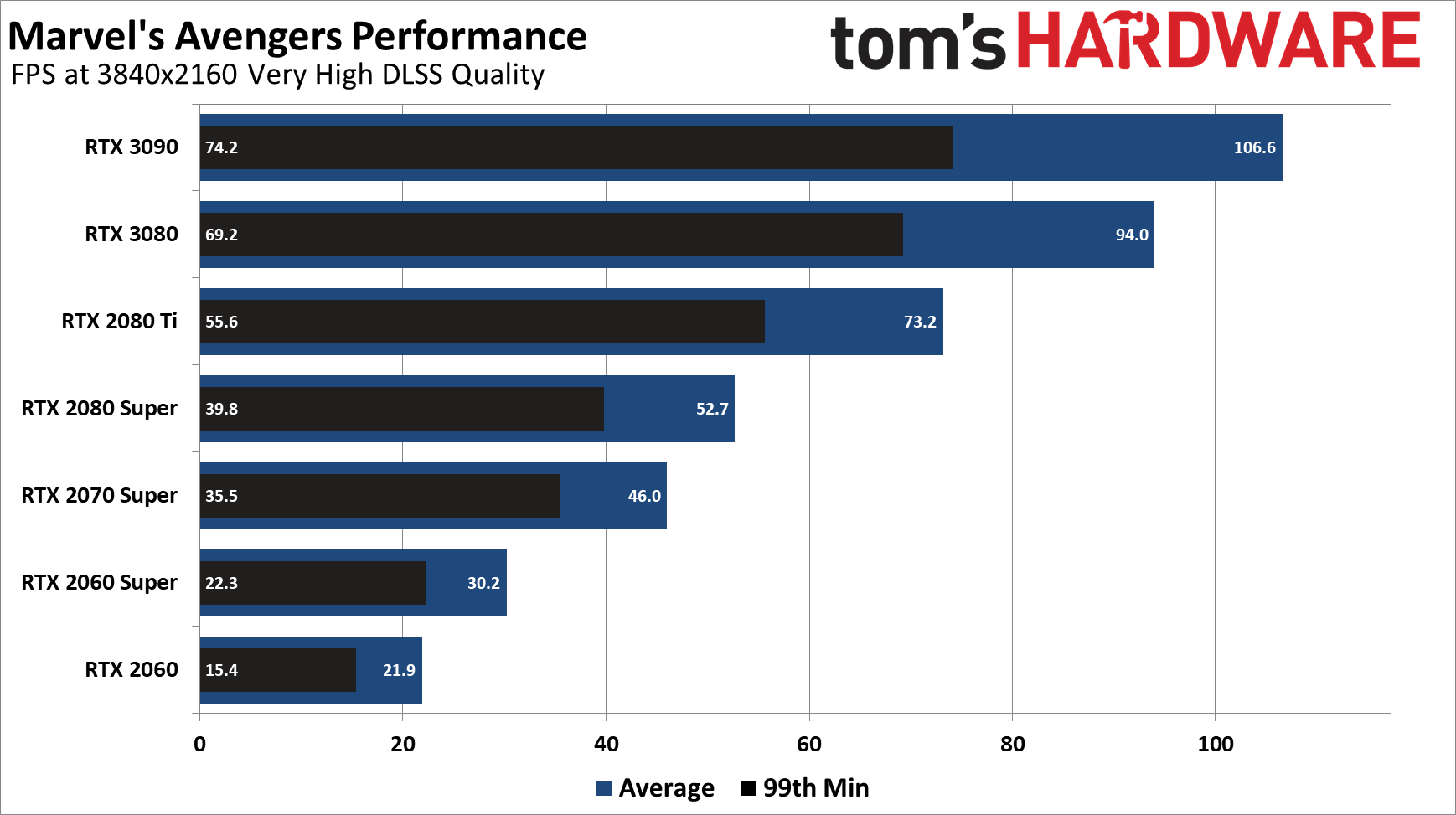

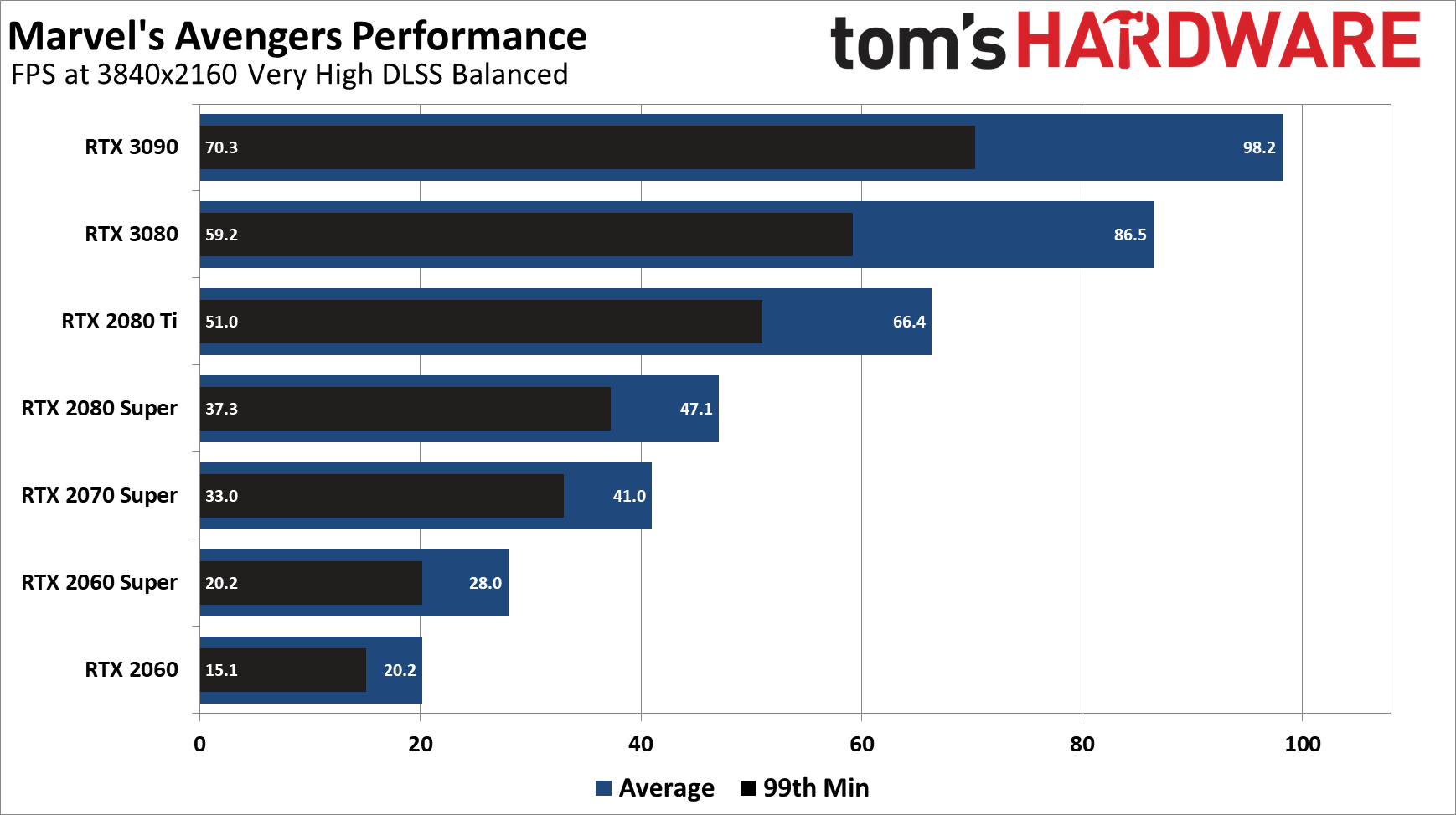

Theoretically, DLSS Quality mode does 2x upscaling, and DLSS Balanced mode does 3x upscaling, but performance for the two modes seems to be swapped. Perhaps the initial release of DLSS support in Marvel's Avengers switched the two modes, or somehow the 3x upscaling results in worse performance. Whatever the case, DLSS Balanced performance is worse than DLSS Quality performance on every GPU we tested, and for these tests we've used the in-game labels.


We mentioned this to Nvidia and were informed that the odd performance comes down to the way Marvel's Avengers currently works. The game engine's post processing is being run at a different resolution in each mode: Quality Mode = 1280x720, Balanced Mode = 2232x1256, Performance Mode = 1920x1080. I'm not quite sure why this would be the case (other than perhaps it's just a bug), but I was told Nvidia and Nixxes are working together on the implementation, so things may change in the near future.

Right now, DLSS Balanced improves performance by 7-25% relative to non-DLSS, with the 2060 Super falling short of where we'd expect it to land. There might be some bug right now that's only affecting the 2060 Super performance, but we retested that card multiple times and couldn't get better results. The other GPUs are in the 15-25% improvement range, with the 3080 and 3090 both showing 20% higher performance.

DLSS Quality mode meanwhile shows a 15-39% improvement compared to non-DLSS. Again, the 2060 Super is at the low end of that range (16% faster), with the other six cards showing 28-37% gains. Whether this is 2X or 3X upscaling, the overall visual appearance looks quite good, but it's still only the 2080 Ti, 3080, and 3090 breaking the 60 fps mark. That's a bit surprising, considering this is likely upscaling 2217x1247 to 3840x2160. Native 1440p performance is much better than what we're showing here.
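The "2X", "3X", and "9X" figures are ratios of pixel counts, not of width or height. As a quick sanity check, here is a small sketch that computes the ratio to a 3840x2160 output for each resolution quoted in this article. The helper names are illustrative only and have nothing to do with how the game or DLSS is configured.

```python
TARGET = (3840, 2160)  # 4K output resolution

# Resolutions quoted in the article (render or post-processing resolutions).
QUOTED_RESOLUTIONS = [
    (1280, 720),
    (1920, 1080),
    (2217, 1247),
    (2232, 1256),
]


def upscale_factor(render, target=TARGET):
    """Ratio of output pixels to rendered pixels."""
    return (target[0] * target[1]) / (render[0] * render[1])


def per_axis_scale(render, target=TARGET):
    """Rendered width as a fraction of the output width."""
    return render[0] / target[0]


if __name__ == "__main__":
    for res in QUOTED_RESOLUTIONS:
        print(
            f"{res[0]}x{res[1]}: {upscale_factor(res):.2f}x pixels, "
            f"{per_axis_scale(res):.3f} per axis"
        )
```

By this measure 1280x720 is exactly 9x and 1920x1080 is exactly 4x, while both 2217x1247 and 2232x1256 come out at very nearly 3x.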

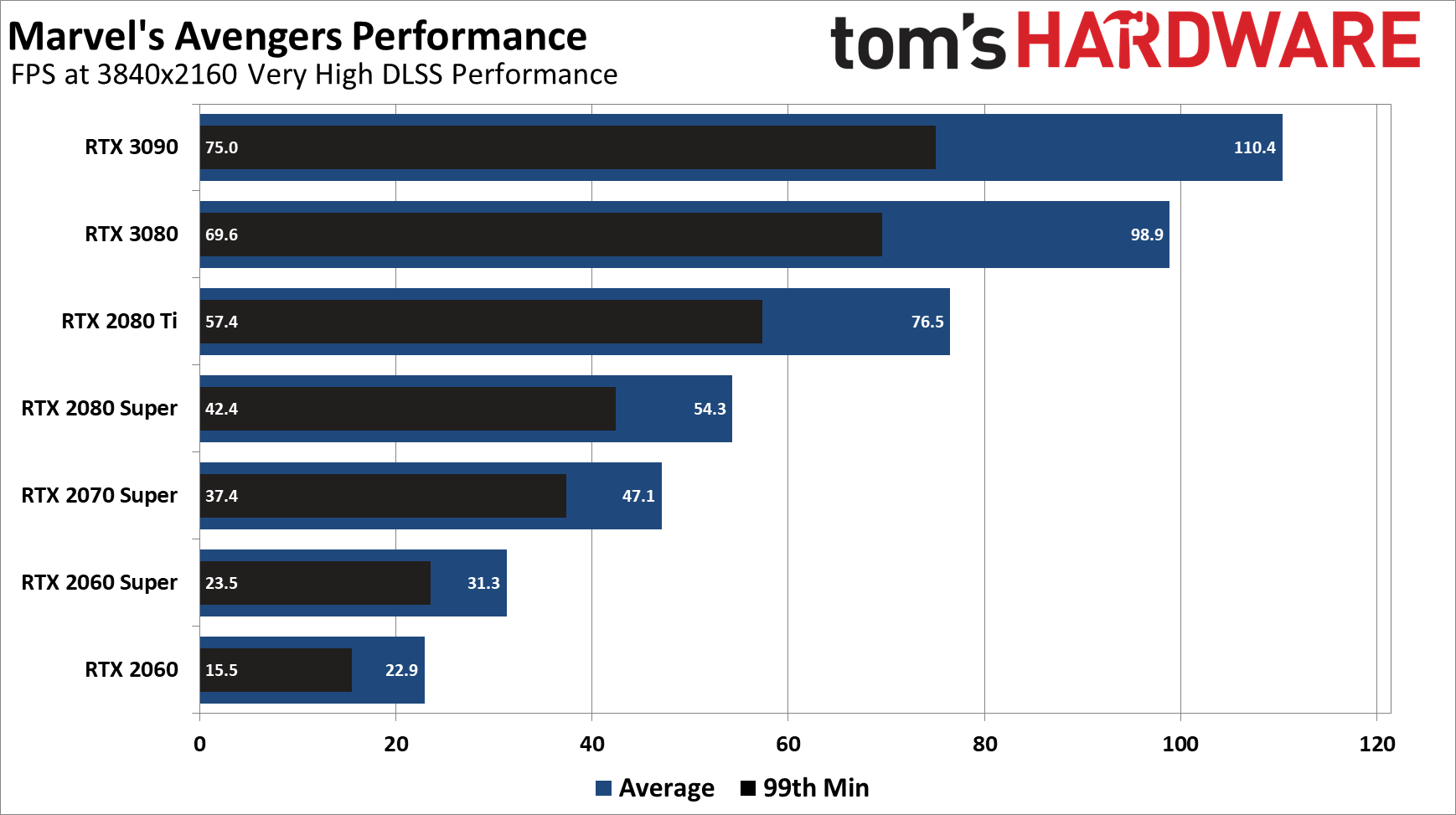

Next up, DLSS Performance mode upscales from 1080p to 4K — one-fourth of the pixels are rendered before running the DLSS algorithm. The difference between DLSS Performance and DLSS Quality isn't very large in Marvel's Avengers, either in visuals or performance. Basically, framerates are only about 5-6% higher than DLSS Quality.

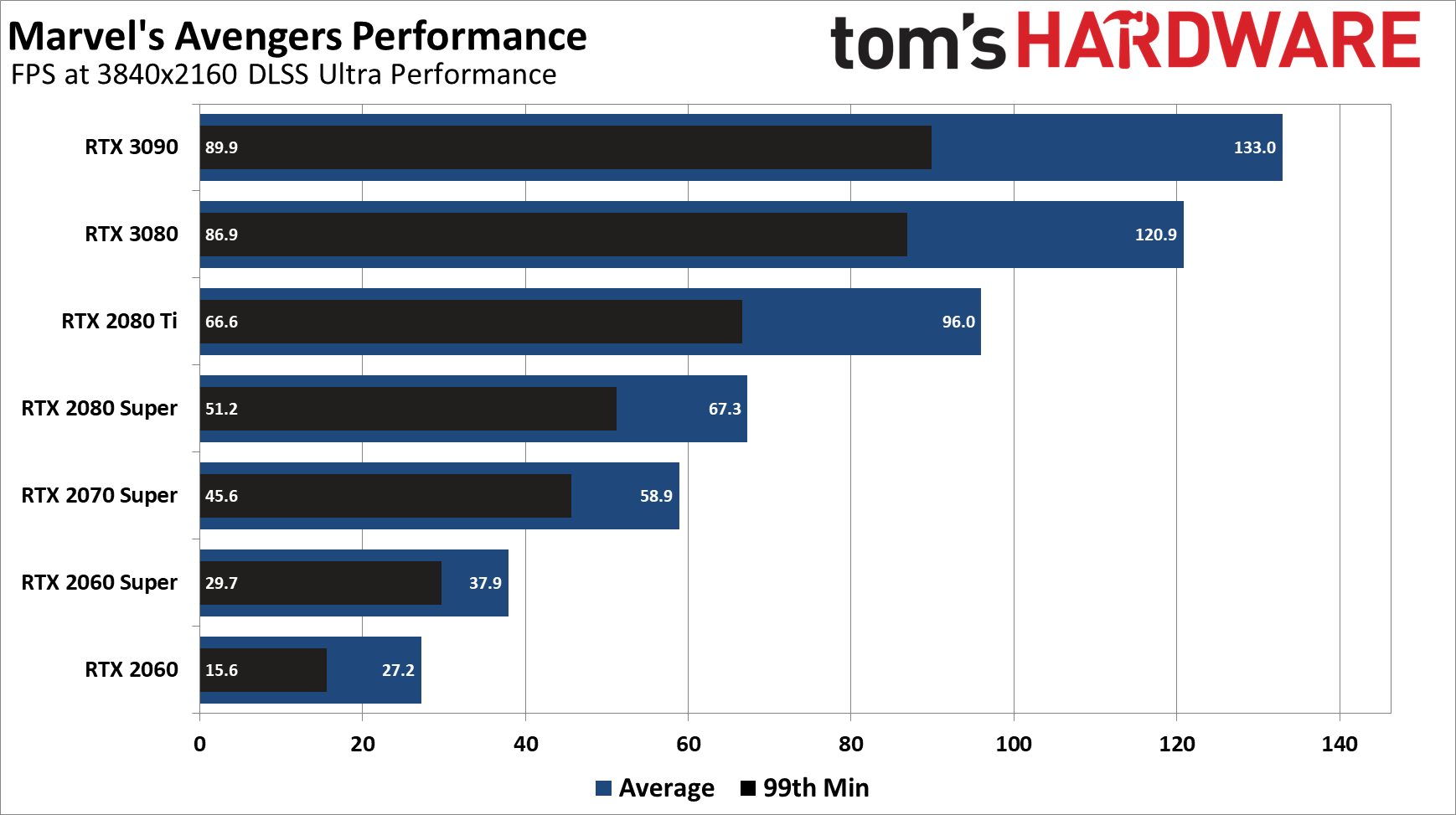

Last, we have the new DLSS Ultra Performance mode. First, it's important to note that this is mostly intended for 8K gaming — or at least, that's what Nvidia says. Still, we could run the setting with 4K, and we wanted to see how it looked. Not surprisingly, upscaling from 720p to 4K definitely doesn't look as crisp as native 4K rendering, or really even native 1080p, I'd say. But performance does improve.

Considering the difference between the rendered resolution and the DLSS upscaled resolution, the performance gains are relatively limited. Ultra Performance mode runs about 28-35% faster than Performance mode (with the RTX 2060 Super exception again). Or put another way, performance is about 60-70% better than native rendering.
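One way to put "relatively limited" in numbers is to convert the fps gain into the share of frame time that remains, and compare that with the share of pixels still being rendered. The sketch below uses only the 60-70% range and the 720p-to-4K figures quoted above; the function name is hypothetical.

```python
def frame_time_fraction(fps_gain):
    """Fraction of the original frame time left after a fractional fps gain.

    A gain of 0.60 means fps is multiplied by 1.60, so each frame takes
    1 / 1.60 of the time it took before.
    """
    return 1.0 / (1.0 + fps_gain)


# Share of 4K pixels actually rendered when upscaling from 720p.
PIXEL_FRACTION = (1280 * 720) / (3840 * 2160)

if __name__ == "__main__":
    print(f"pixels rendered: {PIXEL_FRACTION:.1%} of native 4K")
    for gain in (0.60, 0.70):
        print(
            f"{gain:.0%} higher fps -> frame time is "
            f"{frame_time_fraction(gain):.1%} of native"
        )
```

In other words, the GPU renders about 11% of the pixels, yet each frame still takes roughly 59-63% as long as it does at native 4K.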

My guess is that the Tensor computations become more of a bottleneck as the rendered resolution shrinks, so Ultra Performance mode ends up not helping as much as you'd expect. For comparison, the RTX 2060 Super (which again underperformed in my testing) delivered 110 fps at native 720p with TAA and CAS enabled, 77 fps at 1080p, and 55 fps at 1440p. It's nowhere near those figures with the various DLSS modes.
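Those three native results also hint at why dropping the resolution has diminishing returns. As a back-of-the-envelope sketch, assume frame time is a fixed per-frame cost plus a cost proportional to the pixel count. That linear model is an assumption for illustration, not something measured here, and it says nothing about where DLSS itself spends its time. Fitting it through the 720p and 1440p results (taking 1440p to mean 2560x1440) and then predicting 1080p looks like this:

```python
# Native RTX 2060 Super results quoted above (TAA + CAS enabled).
# 1440p is assumed to mean 2560x1440.
NATIVE_FPS = {
    (1280, 720): 110.0,
    (1920, 1080): 77.0,
    (2560, 1440): 55.0,
}


def to_point(resolution, fps):
    """Convert (resolution, fps) to (megapixels, frame time in ms)."""
    width, height = resolution
    return width * height / 1e6, 1000.0 / fps


def fit_two_point(low, high):
    """Solve frame_ms = fixed_ms + ms_per_mpix * megapixels through two points."""
    (mp_lo, ms_lo), (mp_hi, ms_hi) = low, high
    ms_per_mpix = (ms_hi - ms_lo) / (mp_hi - mp_lo)
    fixed_ms = ms_lo - ms_per_mpix * mp_lo
    return fixed_ms, ms_per_mpix


def predict_fps(resolution, fixed_ms, ms_per_mpix):
    """Predicted fps at a resolution under the assumed linear model."""
    megapixels = resolution[0] * resolution[1] / 1e6
    return 1000.0 / (fixed_ms + ms_per_mpix * megapixels)


if __name__ == "__main__":
    low = to_point((1280, 720), NATIVE_FPS[(1280, 720)])
    high = to_point((2560, 1440), NATIVE_FPS[(2560, 1440)])
    fixed_ms, ms_per_mpix = fit_two_point(low, high)
    print(f"fixed cost: {fixed_ms:.2f} ms, per megapixel: {ms_per_mpix:.2f} ms")
    print(f"predicted 1080p: {predict_fps((1920, 1080), fixed_ms, ms_per_mpix):.1f} fps")
```

Under that assumption, about 6 ms of every frame does not shrink with resolution, and the model's 1080p prediction lands within 1 fps of the measured 77 fps. A fixed cost of that size would limit how much any reduction in rendered pixels can help.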

Marvel's Avengers DLSS Image Quality Comparisons

Let's quickly wrap up with a look at image quality from the four DLSS modes compared to native rendering with TAA and CAS enabled. Unfortunately, there's camera sway even when standing still, so the images don't line up perfectly for this first gallery. Still, in general, you can see how DLSS does really well with certain aspects of the image, but there's a clear loss in fidelity at higher DLSS levels.

Look at the computer servers (to the right of the Avengers 'A' on the wall) to see an area where DLSS helps quite a bit. There are a bunch of extra lights that are only visible with DLSS enabled from this distance. The window up and to the right of Thor, meanwhile, shows a clear loss in image quality. Oddly, it looks as though the Quality mode is better than the Balanced mode in terms of image quality, even though performance is also higher with DLSS Quality, which is all the more reason to choose the Quality mode.

This second gallery is with all the camera shaking and repositioning turned off, running on the 2060 Super with the FPS overlay showing. You can see the performance is quite low, and image quality differences aren't as readily apparent. Ultra Performance mode shows some clear loss of detail, but even that result is pretty impressive considering the 720p source material. Here, Balanced may actually look a bit better than the Quality mode, though the differences are extremely slight.

Bottom line: DLSS continues to impress with its improved performance and often comparable or even better image quality relative to native rendering. There are still a few kinks to work out with Marvel's Avengers — or maybe I just exceeded the capabilities of the 2060 and 2060 Super — but overall, the addition of DLSS is a welcome option.

I've played the game quite a bit at 4K running on an RTX 3090 (yeah, my job is pretty sweet), and it just couldn't quite maintain a steady 60 fps in some areas. DLSS, even in Quality mode, provides more than enough headroom to smoothly sail past 60. With DLSS, locking in 60 fps is also possible on the RTX 2080 Ti and RTX 3080 — and a few tweaks to the settings should get the 2080 Super and 2070 Super there as well. As for the RTX 2060 and 2060 Super, maybe an update to the game will improve their performance. Right now, they're better off sticking to 1440p or 1080p.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


kristoffe, replying to nofanneeded ("Still no 8K testing even with DLSS2.1 ??"):

why? who is going to game at 8k atm? 4 people?

nofanneeded, replying to kristoffe:

And who games on LN2 OC ? but we still see it here ...

It is cool to see how much Technology we reached.