Nvidia Jetson Xavier NX Developer Kit: Powerful A.I., Tiny Size

The Xavier NX Developer Kit has enough performance to run at least four A.I. apps at once.


If you’re developing A.I. applications, including intelligent robots, Nvidia’s Jetson Xavier platform is one of the best choices. Announced in late 2019 but just starting to hit the market, the Xavier NX combines the power of a 384-core Volta GPU with a 6-core Arm v8 CPU to deliver 21 TOPS (trillion operations per second) of inference goodness in a package the size and shape of a SODIMM (laptop memory module).

The Xavier NX by itself is just a module, and it requires you to build your own I/O board to attach it to. But what if you just want to start using the NX to program and test? Available today for $399, the Nvidia Xavier NX Developer Kit provides everything you need to start developing your own A.I. applications: the Xavier NX module, a powerful fan and an I/O board that’s filled with ports and pins.

I had the chance to spend some time testing the Nvidia Xavier NX Developer Kit and came away impressed with both its inference capability and the flexibility it provides. If you want to get into professional A.I. development, this board and platform are compact and relatively affordable. Makers and hobbyists are clearly not the target audience; they would be better served by a Raspberry Pi with a Coral USB Accelerator for A.I. or even Nvidia’s own $99 Jetson Nano Developer Kit.

Nvidia Xavier NX Developer Kit Specifications

| Component | Specification |
|---|---|
| GPU | Nvidia Volta with 384 CUDA cores, 48 Tensor Cores |
| CPU | 6-core Nvidia Carmel Arm v8 CPU with 6MB L2 cache and 4MB L3 cache |
| RAM | 8GB LPDDR4 (on-board) |
| Storage | microSD / M.2 80mm slot |
| Display Output | 1x HDMI / 1x DisplayPort |
| USB | 4x USB 3.1 (Type-A), 1x micro-USB 2.0 |
| Connectivity | 802.11ac Wi-Fi, Gigabit Ethernet |
| Camera Ports | 2x CSI-2 ports |
| GPIO | 40-pin GPIO |
| TDP | 15W, 10W (low power) |
| Size | 4.1 x 3.6 x 1.2 inches (103 x 90.5 x 31 mm) |

Design and Ports on Nvidia Xavier NX Developer Kit

At 4.1 x 3.6 x 1.2 inches, the Xavier NX Developer Kit is a little bit larger than two Raspberry Pi 3Bs next to each other. A fan comes attached and, considering the 15-watt TDP of the chip, I don’t think passive cooling would be a good idea.

All of that board real estate leaves room for a slew of useful ports and connectivity options. On the back side, you’ll find four USB Type-A 3.1 ports, gigabit Ethernet, a full-size HDMI port and a full-size DisplayPort. If you have dual monitors, you can indeed connect one to each port and enjoy the added screen real estate.

The Xavier NX Developer Kit also has a micro USB port but, unlike the one on a Raspberry Pi, it’s used for data transfer, not power. The Developer Kit is powered by a proprietary power brick with a barrel connector. Our review unit came with a 65-watt brick, but Nvidia said that later revisions of the product could ship with a 45-watt model that’s presumably a little smaller.

The board has two interesting features that allow it to be compatible with some key Raspberry Pi accessories. Two CSI camera connectors will work with any Raspberry Pi camera module, including the new Raspberry Pi High Quality Camera. There’s also a 40-pin GPIO header which Nvidia says will work with Raspberry Pi HATs if you use the proper Python library when programming.


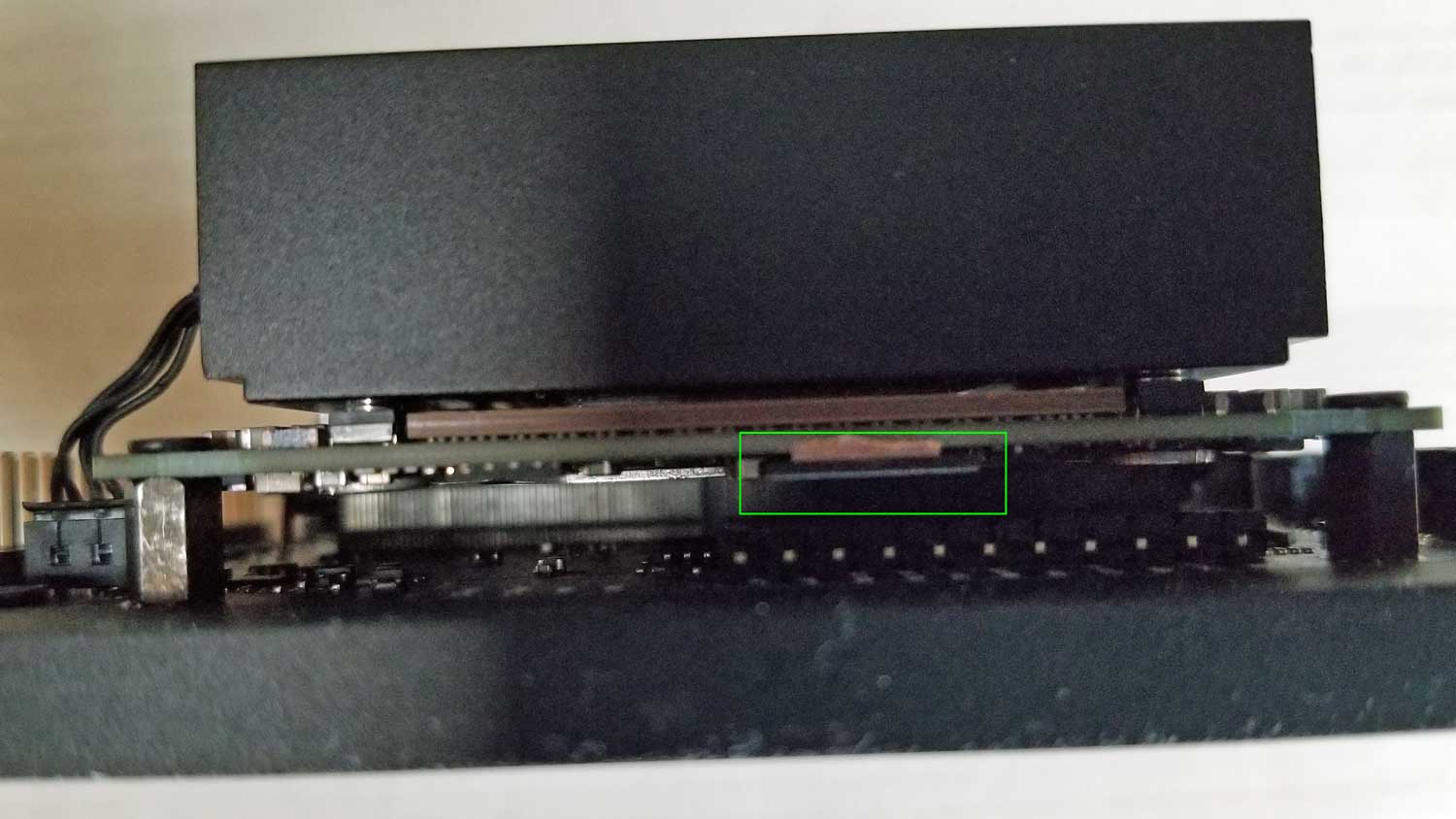

Like a Raspberry Pi, the Jetson Xavier NX Developer Kit boots from a microSD card. That’s very convenient: if you have several different OS images for different projects and want to switch among them, you can just pop one card out and put the next one in. Unfortunately, accessing the microSD card slot is a pain, because it’s located on the underside of the NX module which, when plugged in (as it is out of the box), sits just a few millimeters above the board, leaving no room for adult-sized fingers (tweezers did the trick).

On the underside of the board, you’ll find an M.2 slot for installing an SSD, along with the built-in Wi-Fi card. An SSD can be necessary, particularly when you need to load large models from high-speed storage. And built-in Wi-Fi is a huge improvement over the Jetson Nano Developer Kit, which only had Ethernet, on the assumption, I guess, that everyone does their coding right next to their router.

Software for Xavier NX Developer Board

The Jetson Xavier NX runs a modified version of Ubuntu called Linux for Tegra. The look and feel are exactly like regular Ubuntu but, at least in our case, there was special Nvidia wallpaper.

Also, in the upper right corner of the screen, there’s a menu for changing the power / performance profile to use 2, 4 or all 6 cores. If you are running lightly threaded apps, as in the performance test we ran (see below), you’ll want the 2-core profile, but if you are running several different kinds of inference at once, the 6-core profile makes more sense. You can also choose a 10-watt mode with 2 or 4 cores if you want to save power.
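To make that rule of thumb concrete, here is a minimal Python sketch that encodes it. `PowerProfile` and `choose_profile` are hypothetical names for illustration, and the sketch assumes a single app is lightly threaded; it only picks one of the five profiles listed above and does not change any setting on the board, which you still do from the desktop menu.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PowerProfile:
    watts: int
    cores: int


# The five profiles described above: 15 W with 2, 4 or 6 cores,
# and 10 W with 2 or 4 cores.
PROFILES = (
    PowerProfile(15, 2),
    PowerProfile(15, 4),
    PowerProfile(15, 6),
    PowerProfile(10, 2),
    PowerProfile(10, 4),
)


def choose_profile(concurrent_workloads: int, save_power: bool = False) -> PowerProfile:
    """Hypothetical helper that encodes the review's rule of thumb."""
    if concurrent_workloads < 1:
        raise ValueError("need at least one workload")
    budget = 10 if save_power else 15
    candidates = [p for p in PROFILES if p.watts == budget]
    if concurrent_workloads == 1:
        # A single, lightly threaded app: use the fewest cores.
        return min(candidates, key=lambda p: p.cores)
    # Several inference jobs at once: use every core the budget allows.
    return max(candidates, key=lambda p: p.cores)


print(choose_profile(1))                   # PowerProfile(watts=15, cores=2)
print(choose_profile(4))                   # PowerProfile(watts=15, cores=6)
print(choose_profile(4, save_power=True))  # PowerProfile(watts=10, cores=4)
```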

Programming the Xavier NX Developer Board

Nvidia’s Jetson platform offers a number of A.I. SDKs and models that are optimized for different kinds of machine learning tasks. The DeepStream SDK, for example, is used for developing Intelligent Video Analytics (IVA) applications such as face and people detection, while the Isaac SDK is designed specifically for robotics.

There is also a slew of pre-trained models available, including BERT (Bidirectional Encoder Representations from Transformers) for natural-language understanding, ResNet-18 for detecting objects and Nvidia’s facial landmarks and gaze models for mapping human expressions. You can program for these models in a variety of languages, including Python.

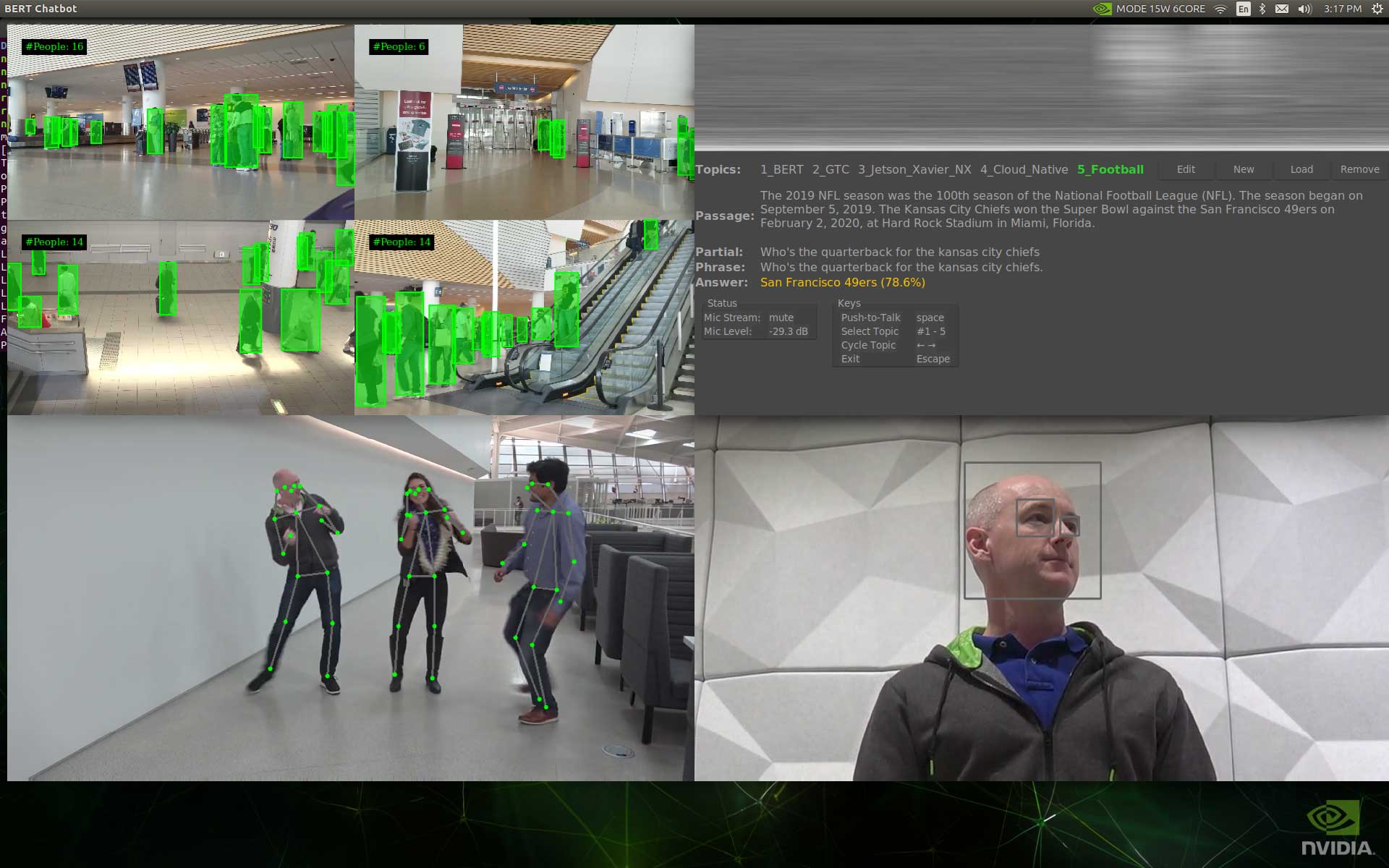

Launching Nvidia’s preloaded container demo, I saw a screen that was running four different inference applications at once. In the upper left corner, an application detected people walking by in various videos by drawing green boxes around them.

The lower left corner had a different application that detected people’s poses, meaning the way they were standing and moving. The lower right corner displayed a gaze detection app, which can tell what a person is looking at by identifying and tracking their eyes.

The most interesting part of the demo appeared in the upper right quadrant: a natural language interpretation app with five different topics I could ask about. I chose the NFL 2019 season topic and used the microphone to ask questions such as “who won the Super Bowl?” The system converted my speech to text and gave me an answer based on the text it had about the topic. Unfortunately, the A.I. here is only as good as the text you feed it; it doesn’t do much in the way of interpreting you. Since there was only one paragraph of text about the NFL season, I got weird answers when I asked for information that wasn’t in that paragraph, such as “how many regular season games were played?”
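Why the answers are bounded by the passage can be shown with a toy. Assuming the demo uses BERT in the usual extractive question-answering setup, where the model predicts where an answer starts and ends inside the supplied text, every answer it gives is a piece of that text. The Python sketch below is NOT BERT: `toy_answer` is a hypothetical word-overlap stand-in, and the sample passage is made up for illustration. It does share the same limitation, though.

```python
from __future__ import annotations

import re


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens, keeping apostrophes (e.g. "tom's")."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def toy_answer(question: str, context: str) -> str:
    """Return the context sentence that shares the most words with the question.

    A stand-in for extractive QA: whatever you ask, the answer is always
    copied from the context, even when the context holds no real answer.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", context.strip()) if s]
    question_words = tokenize(question)
    # max() keeps the first sentence when several tie (including at zero overlap).
    return max(sentences, key=lambda s: len(question_words & tokenize(s)))


CONTEXT = "Tom's Hardware was founded in 1996. The site publishes reviews of PC hardware."

print(toy_answer("When was Tom's Hardware founded?", CONTEXT))
print(toy_answer("How many regular season games were played?", CONTEXT))
```

Both calls return "Tom's Hardware was founded in 1996." The second question has no answer in the passage, but the function still hands back a piece of it, which is the same kind of odd answer described above.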

I was also able to create my own topic, based on the history of Tom’s Hardware. I wrote that Tom’s Hardware was founded in 1996, so the system gave me the right answer when I asked “when was Tom’s Hardware founded?” However, when I asked “how many years has Tom’s Hardware been around,” the system spit back 1996 at me, rather than doing the math to say “24 years.”
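Returning "1996" is what you would expect from a model that copies answers out of the passage. Any arithmetic has to happen in a separate step. Here is a hypothetical Python sketch of such a post-processing step. `years_since` and `answer_with_math` are illustrative names, the keyword check is deliberately naive, and nothing here is part of Nvidia’s demo. The current year is passed in as 2020, the year implied by the “24 years” above.

```python
import re
from typing import Optional

# Matches a four-digit year from 1800 to 2099 (an assumption for this sketch).
YEAR = re.compile(r"\b(18\d\d|19\d\d|20\d\d)\b")


def years_since(answer_span: str, current_year: int) -> Optional[int]:
    """Hypothetical post-processing: turn an extracted year into elapsed years."""
    match = YEAR.search(answer_span)
    if match is None:
        return None
    return current_year - int(match.group(1))


def answer_with_math(question: str, answer_span: str, current_year: int) -> str:
    """Do the subtraction only for "how many years" questions; otherwise echo the span."""
    if "how many years" in question.lower():
        elapsed = years_since(answer_span, current_year)
        if elapsed is not None:
            return f"{elapsed} years"
    return answer_span


print(answer_with_math("When was Tom's Hardware founded?", "1996", 2020))
print(answer_with_math("How many years has Tom's Hardware been around?", "1996", 2020))
```

The first call echoes "1996" and the second returns "24 years". A real system would need a much more robust way to recognize questions that call for arithmetic.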

Watching the inference engine identify poses and people in preloaded videos is one thing, but testing it live is another. I hooked up a USB webcam and watched as the software correctly identified my features as I struck a pose.

Why would you care about identifying someone’s gaze or how they are posed? If you were a robot, it would matter because it would allow you to know whether a person was trying to interact with you. For example, if there’s a hospitality robot at a hotel and I’m standing next to it and talking to my friend, I don’t want the robot interrupting our conversation to ask me if I’d like directions to the elevator. However, if the robot knows that I am gazing right at it, it can initiate a conversation without becoming an annoying disruption.
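One way to act on that idea is to require sustained eye contact before the robot speaks up. The sketch below is a hypothetical Python illustration. The per-frame `looking_at_robot` flag would have to come from a gaze model like the one in the demo. The default of 15 frames, about half a second at an assumed 30 fps, is an arbitrary choice.

```python
class GazeGate:
    """Hypothetical gate: allow a robot to speak up only after a person has
    looked at it for `required_frames` consecutive video frames."""

    def __init__(self, required_frames: int = 15) -> None:
        if required_frames < 1:
            raise ValueError("required_frames must be at least 1")
        self.required_frames = required_frames
        self.streak = 0  # consecutive frames with gaze on the robot

    def update(self, looking_at_robot: bool) -> bool:
        """Feed one frame's gaze result; return True while the streak is long enough."""
        # Any glance away resets the count, so a passing look never triggers it.
        self.streak = self.streak + 1 if looking_at_robot else 0
        return self.streak >= self.required_frames


gate = GazeGate(required_frames=3)
frames = [True, True, False, True, True, True]
print([gate.update(f) for f in frames])  # [False, False, False, False, False, True]
```

Requiring consecutive frames trades a little responsiveness for fewer interruptions. A person chatting next to the robot and glancing at it occasionally never builds up a long enough streak.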

Performance of Nvidia Xavier NX Developer Kit

The point of the Nvidia Jetson Xavier NX board is to do A.I. on the edge, not in the cloud. So performance really matters, and you’re not going to be able to do something like run four applications at once with a lesser processor.

Nvidia claims that the Xavier NX is ten times faster than the $99 Jetson Nano, which has a modest 128-core Maxwell GPU, along with a quad-core Arm Cortex-A57 CPU and 4GB of RAM. That’s believable considering the higher core count and improved architecture of the Xavier NX.

We ran some of Nvidia’s recommended benchmarks on the Xavier NX and compared them to results from the Jetson Nano. Note that the Nano results come from Nvidia’s March 2019 press materials on the Nano, as we were not able to test the Nano ourselves; those numbers were published long before the Xavier NX was announced.

| A.I. Test Model | Jetson Xavier NX (fps) | Jetson Nano (fps) |
|---|---|---|
| ResNet-50 | 871.6 | 36 |
| Inception v4 | 317.1 | 11 |
| OpenPose | 231.6 | 14 |
| VGG-19 | 63.4 | 10 |
| Unet | 142.4 | 18 |
| Tiny Yolo | 568.9 | 25 |
| Super Resolution | 157.2 | 15 |

As you can see from the table above, the Xavier NX is more than 20 times faster than the Nano on some of these models, and nearly 30 times faster on Inception v4. This added performance is what allows it not only to react more quickly to incoming streams but also to handle multiple applications at once, which is necessary when you want to create a robot that can move, talk and interpret human body language / speech at the same time.
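The speedups can be checked directly from the benchmark table. This short Python sketch divides the Xavier NX frame rate by the Nano frame rate for each model. `RESULTS` and `speedups` are illustrative names. Keep in mind that the Nano figures come from Nvidia’s press materials and were not measured alongside the Xavier NX, so the ratios are only approximate.

```python
from __future__ import annotations

# Frames per second from the benchmark table: (Jetson Xavier NX, Jetson Nano).
# The Nano figures are Nvidia's published numbers, not our own measurements.
RESULTS = {
    "ResNet-50": (871.6, 36),
    "Inception v4": (317.1, 11),
    "OpenPose": (231.6, 14),
    "VGG-19": (63.4, 10),
    "Unet": (142.4, 18),
    "Tiny Yolo": (568.9, 25),
    "Super Resolution": (157.2, 15),
}


def speedups(results: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Ratio of Xavier NX fps to Nano fps for each model."""
    return {model: nx / nano for model, (nx, nano) in results.items()}


ratios = speedups(RESULTS)
# Print models from the largest speedup to the smallest.
for model, ratio in sorted(ratios.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{model:<17} {ratio:5.1f}x")
```

The ratios range from about 6.3x (VGG-19) to about 28.8x (Inception v4), with ResNet-50 and Tiny Yolo also above 20x.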

Dev Kit vs Xavier NX Production Module

Somewhat strangely, the developer kit is actually a tad less expensive than the bare Xavier NX module, even though both are similarly specced. The module by itself currently sells for $459, which is $60 more than you pay to get the same thing plus the I/O board you need for development. However, Nvidia explains that the standalone module uses “production-rated components” and is built for a 5- to 10-year operating life, while the dev kit board is not. And if you’re building a series of robots for your factory, you care about reliability more than $60.

Another difference between the dev kit and the production module is that the production module comes with 16GB of on-board eMMC storage rather than requiring you to bring your own microSD card. For embedded systems, it’s probably safer not to add a microSD card as a point of failure.

Bottom Line

If you’re looking to build serious, professional A.I.-powered robots, Nvidia’s Jetson Xavier NX is a strong choice. And, before you start building those robots, you’re going to need the Xavier NX Developer Kit.

Makers may have some fun using the developer kit for one-off projects; the fact that you can use Raspberry Pi cameras and HATs with it is a real plus. However, for this kind of money, you’ll want to do more than just have some fun.

Avram Piltch is Managing Editor: Special Projects. When he's not playing with the latest gadgets at work or putting on VR helmets at trade shows, you'll find him rooting his phone, taking apart his PC, or coding plugins. With his technical knowledge and passion for testing, Avram developed many real-world benchmarks, including our laptop battery test.


bit_user: Cool review.

I just have to point out one thing:

If you want to get into professional A.I. development, this board and platform are compact and relatively affordable.

It's actually not a good deal for AI development, since it only delivers 20 TOPS of int8 inferencing performance, whereas a $400 RTX 2060 Super can do over 100 TOPS of same. And the RTX card has a lot more training horsepower, as well.

This is definitely for AI-powered robots, where you need portability and (relatively) low power.

GOBish: How did you get these frame rates for the Xavier NX? I am trying YOLO tiny and get frame rates similar to the ones you quote for the Nano.

I did verify that the GPU is being utilized.

bit_user:

GOBish said: How did you get these frame rates for the Xavier NX? I am trying YOLO tiny and get frame rates similar to the ones you quote for the Nano. I did verify that the GPU is being utilized.

Using what software?

I don't have experience with the Xavier NX, but I'm sure that to get those frame rates, you have to make sure you're using the Tensor cores. That probably means using TensorRT and ensuring everything is built and run with the correct options to use the Tensor cores.