Nvidia Arm Superchips to Power $160 Million Supercomputer in Barcelona

Nvidia leverages Arm to nip at Intel and AMD's supercomputing heels.

Nvidia's Grace superchip made waves when introduced earlier this year, as the company promised a supercharged Arm-based product that could take on Intel and AMD's x86 dominance in the High-Performance Computing (HPC) space. Now, as reported by HPC Wire, the company has snagged a $160 million contract (~€151 million) to provide the brains and brawn of supercomputing hardware for one of EuroHPC's supercomputing projects. MareNostrum 5 (MareNostrum roughly translates to "our sea") will be installed at the Barcelona Supercomputing Center (BSC) in Spain and could be operational as early as 2023.

MareNostrum 5 is being built under the EuroHPC Joint Undertaking (EuroHPC JU) and is expected to offer a peak of 314 petaflops of FP64 compute across its CPUs and GPU accelerators, with 200 petabytes of storage for frequently accessed data and a further 400 petabytes of cold storage. Following trends in HPC architecture design and in other EuroHPC systems, the 200-petabyte tier is expected to sit on a fast, NAND-based storage subsystem, while the cold storage tier (also called active storage, referring to data that's crucial but not frequently accessed) will likely use more cost-effective, conventional hard drives.

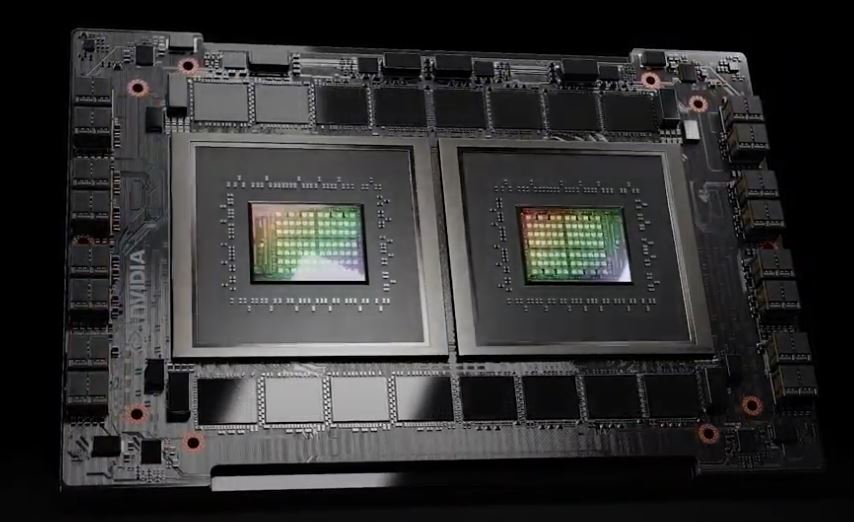

The system will employ Nvidia's 144-core, Arm-based Grace "superchips" in dual-chip configurations, paired with the company's H100 (Hopper) discrete GPU accelerators (which feature 80 billion transistors apiece, with 80 GB of HBM3 memory and 3.2 TB/s of bandwidth). As a result, MareNostrum 5 is projected to deliver more than 18 exaflops of AI performance (typically measured in FP8, or 8-bit floating-point, operations), making it the fastest AI supercomputer in the European Union. Alongside Nvidia's chips, the system will use the company's Quantum-2 (aka NDR) InfiniBand software-defined networking, which leverages the company's BlueField data processing units (DPUs) to keep all components talking at low latency and a high throughput of 400 Gb/s - not unlike the performance achieved by Cray's Slingshot interconnect.

Educated speculation from The Next Platform estimates that MareNostrum 5 could deploy as many as 4,500 "Hopper" H100 accelerators, which would be good for around 270 petaflops of FP64 oomph thanks to the chip's Tensor Cores. The remaining 44 petaflops of FP64 performance (314 minus 270) are expected to come from the dual-Grace CPU systems, which the publication estimates at 3.84 teraflops per Grace chip - amounting to a likely total of around 5,730 dual Grace modules.
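The arithmetic behind those estimates is easy to reproduce. The sketch below is only a back-of-the-envelope check: every input is a figure quoted in this article (the projected system totals and The Next Platform's estimates), not an official Nvidia or BSC specification, and the function and variable names are made up for illustration.

```python
# Back-of-the-envelope check of the estimates quoted in the article.
# All inputs are projections or third-party estimates, not official specs.

PEAK_FP64_PFLOPS = 314.0        # projected system peak, CPUs + GPUs
H100_COUNT = 4_500              # The Next Platform's estimated GPU count
GPU_FP64_PFLOPS = 270.0         # estimated FP64 contribution of the GPUs
GRACE_CHIP_FP64_TFLOPS = 3.84   # estimated FP64 throughput per Grace chip
CHIPS_PER_MODULE = 2            # a "dual Grace" module holds two chips
AI_EXAFLOPS = 18.0              # projected AI (FP8) performance


def estimate_breakdown():
    """Derive the per-device figures implied by the quoted totals."""
    # FP64 throughput each H100 would need to contribute (teraflops).
    per_gpu_fp64_tflops = GPU_FP64_PFLOPS * 1_000 / H100_COUNT
    # Whatever the GPUs do not cover is attributed to the CPUs (petaflops).
    cpu_fp64_pflops = PEAK_FP64_PFLOPS - GPU_FP64_PFLOPS
    # Number of Grace chips needed to supply the CPU share.
    grace_chips = cpu_fp64_pflops * 1_000 / GRACE_CHIP_FP64_TFLOPS
    dual_modules = grace_chips / CHIPS_PER_MODULE
    # AI throughput per GPU if all 18 exaflops came from the H100s (petaflops).
    per_gpu_ai_pflops = AI_EXAFLOPS * 1_000 / H100_COUNT
    return {
        "per_gpu_fp64_tflops": per_gpu_fp64_tflops,
        "cpu_fp64_pflops": cpu_fp64_pflops,
        "grace_chips": grace_chips,
        "dual_modules": dual_modules,
        "per_gpu_ai_pflops": per_gpu_ai_pflops,
    }


if __name__ == "__main__":
    for name, value in estimate_breakdown().items():
        print(f"{name}: {value:,.2f}")
```

Under these assumptions the numbers line up with the article: about 60 teraflops of FP64 per H100, 44 petaflops left for the CPUs, and roughly 5,729 dual Grace modules, which rounds to the quoted "around 5,730". The same division implies about 4 petaflops of AI throughput per GPU, assuming the GPUs deliver all 18 exaflops.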

MareNostrum 5 is aimed primarily at medical research, chemistry simulations and drug development, while also supporting applications like climate science and environmental engineering. Nvidia's Omniverse software package will power the development of digital twins for these applications - essentially large-scale, physically accurate simulations of industrial-scale assets and processes. Digital twin tech integrates autonomous systems with real-world, real-time data streams, creating a feedback loop of simulation, output, and on-the-fly updates to the simulated models.

"The acquisition of MareNostrum 5 will enable world-changing scientific breakthroughs such as the creation of digital twins to help solve global challenges like climate change and the advancement of precision medicine," said Mateo Valero, director of BSC. "In addition, [BSC] is committed to developing European hardware to be used in future generations of supercomputers and helping to achieve technological sovereignty for the EU's member states."

Furthermore, like the other latest-generation supercomputers being installed across Europe, MareNostrum 5 will be powered entirely by renewable energy, with excess heat being repurposed rather than simply vented.

It's currently unclear exactly how the excess heat will be repurposed (and in what proportion). It will likely follow the same design principles as Europe's current leader in the supercomputing space, the all-AMD-powered LUMI supercomputer installed in Kajaani, Finland, which redirects 20% of its waste heat to the surrounding district, cutting the energy otherwise spent on heating.

Francisco Pires is a freelance news writer for Tom's Hardware with a soft side for quantum computing.