Nvidia Lowers DGX Station Pricing By 25%

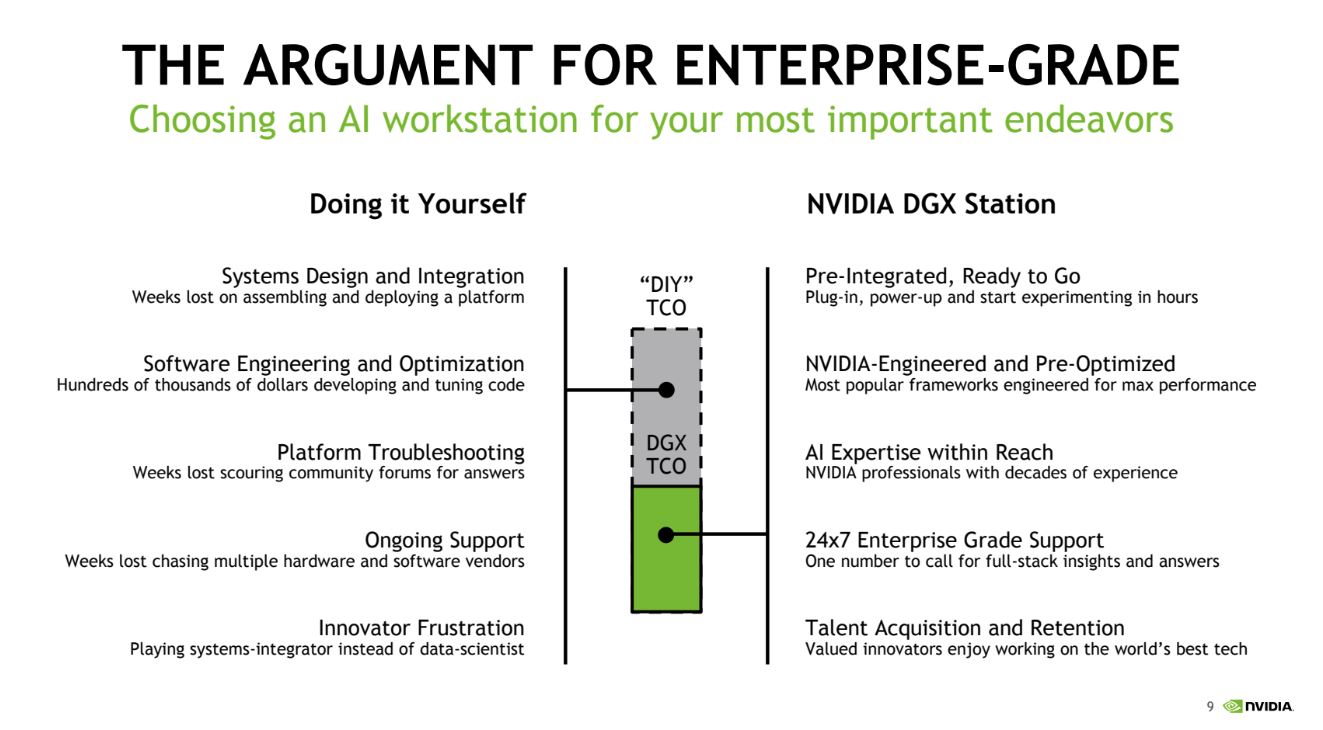

Nvidia introduced a new Jump Start program for its DGX Station that cuts 25% off the price of the first system you purchase. Even amid the soul-crushing GPU shortage, the resulting $49,900 price may seem steep for a system with four GPUs, but these aren't garden-variety cards. The workstation features Nvidia's Volta V100 GPUs, which are designed for professional applications, and the DGX Station itself is built specifically for deep learning developers.

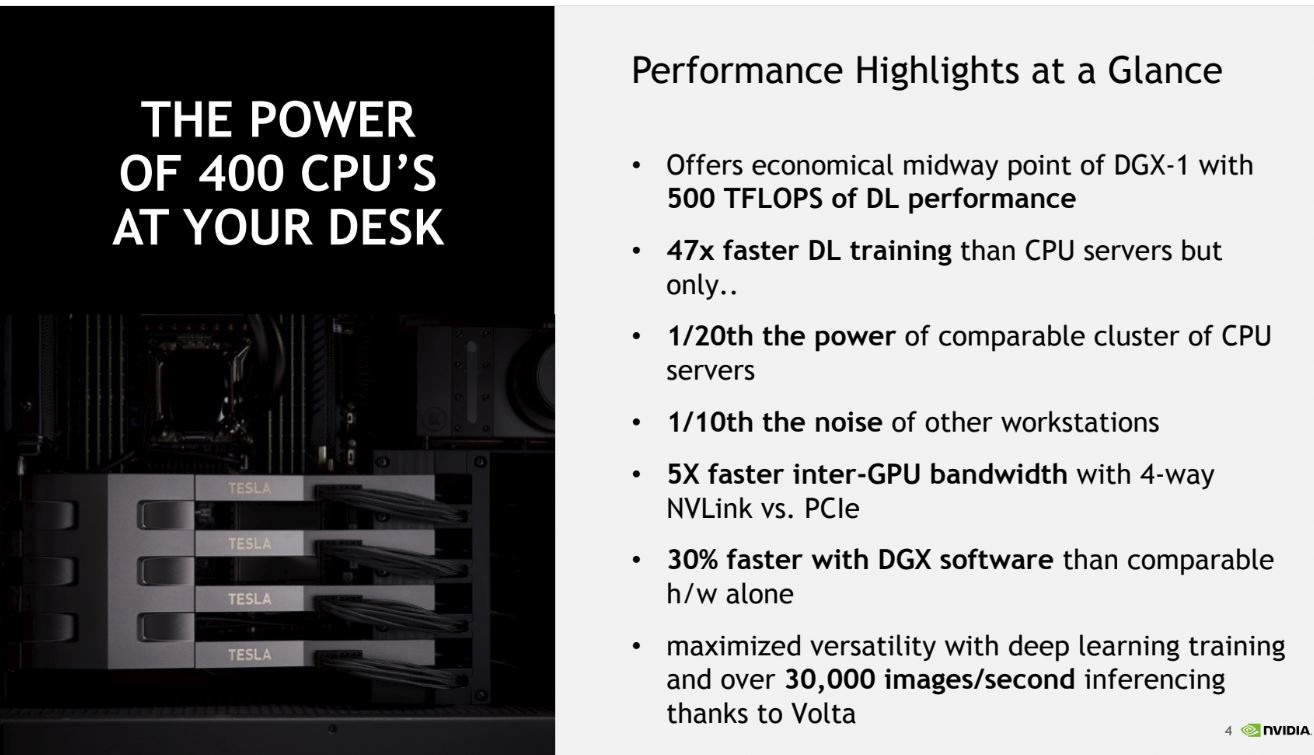

Nvidia designed its DGX Station as a smaller, workstation-class version of its DGX-1 server. The four water-cooled Tesla V100s deliver up to 500 TFLOPS of mixed-precision deep learning performance, courtesy of the GPUs' tensor cores, inside a full-tower chassis. Together, the GPUs carry 64GB of HBM2 memory and 20,480 CUDA cores.
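The headline totals are simply four times the per-GPU figures. The short Python sketch below assumes Nvidia's published specs for the Tesla V100 (SXM2, 16GB): 5,120 CUDA cores, 640 tensor cores, 16GB of HBM2, 125 TFLOPS of tensor-core throughput and 15.7 TFLOPS of FP32. The dictionary keys and function name are our own, not any Nvidia API.

```python
# Per-GPU figures for the Tesla V100 (SXM2, 16GB) as published by Nvidia.
# The key names are our own labels, not an Nvidia API.
V100_SXM2_16GB = {
    "cuda_cores": 5120,
    "tensor_cores": 640,
    "hbm2_gb": 16,
    "tensor_tflops": 125.0,  # mixed-precision tensor-core peak
    "fp32_tflops": 15.7,     # general-purpose single-precision peak
}
GPU_COUNT = 4


def station_totals(per_gpu: dict, gpus: int) -> dict:
    """Scale every per-GPU spec by the number of GPUs in the system."""
    return {name: value * gpus for name, value in per_gpu.items()}


totals = station_totals(V100_SXM2_16GB, GPU_COUNT)
for name, value in totals.items():
    print(f"{name}: {value:g}")
```

The 500 TFLOPS headline is the tensor-core figure; for ordinary FP32 work the same four GPUs peak at roughly 63 TFLOPS, so the larger number only applies to workloads, such as deep learning, that can use the tensor cores.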

The DGX Station also packs 256GB of DDR4 memory and three 1.92TB SSDs in RAID 0 for data, plus a separate 1.92TB SSD for the operating system and dual 10Gb Ethernet ports.
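RAID 0 stripes data across drives in fixed-size chunks, so large sequential transfers hit all three SSDs at once and the usable capacity is the sum of the drives (about 5.76TB here). There is no redundancy, though: losing any one drive loses the whole array. A minimal Python sketch of the address mapping, assuming a hypothetical 128 KiB stripe size (the article doesn't give the DGX Station's actual setting); `raid0_locate` is an illustrative helper, not a real tool:

```python
# Three data SSDs as in the DGX Station; the 128 KiB stripe (chunk) size is a
# hypothetical value chosen for illustration.
DATA_DRIVES = 3
DRIVE_TB = 1.92
STRIPE_BYTES = 128 * 1024


def raid0_locate(offset: int, drives: int = DATA_DRIVES, stripe: int = STRIPE_BYTES) -> tuple:
    """Map a logical byte offset on a RAID 0 volume to (drive index, byte offset on that drive)."""
    chunk, within = divmod(offset, stripe)  # which chunk, and position inside it
    drive = chunk % drives                  # chunks rotate round-robin across drives
    row = chunk // drives                   # full rows of chunks that precede it
    return drive, row * stripe + within


capacity_tb = DATA_DRIVES * DRIVE_TB  # no parity in RAID 0, so capacities simply add
print(f"usable capacity: {capacity_tb:.2f} TB")
print(raid0_locate(0), raid0_locate(STRIPE_BYTES), raid0_locate(3 * STRIPE_BYTES + 5))
```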

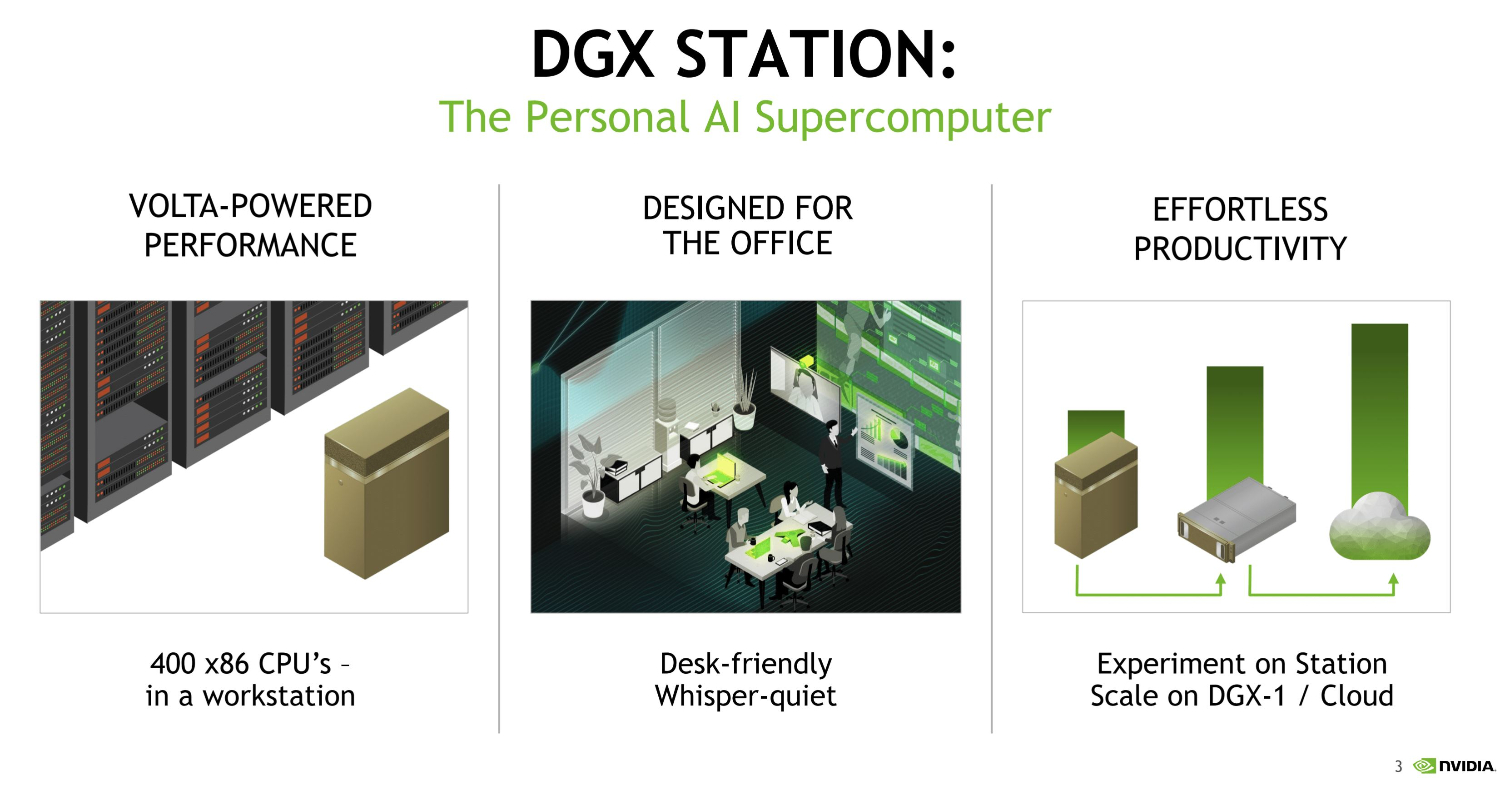

Nvidia claimed the system can outperform 400 CPUs (it didn't specify which CPUs) while using a fraction of the power. Granted, the system still draws 1,500W under full load, but the company says the water cooling keeps it quiet enough to sit under your desk during operation. That means deep learning developers can use the system in their offices, which often isn't possible with bulkier servers.

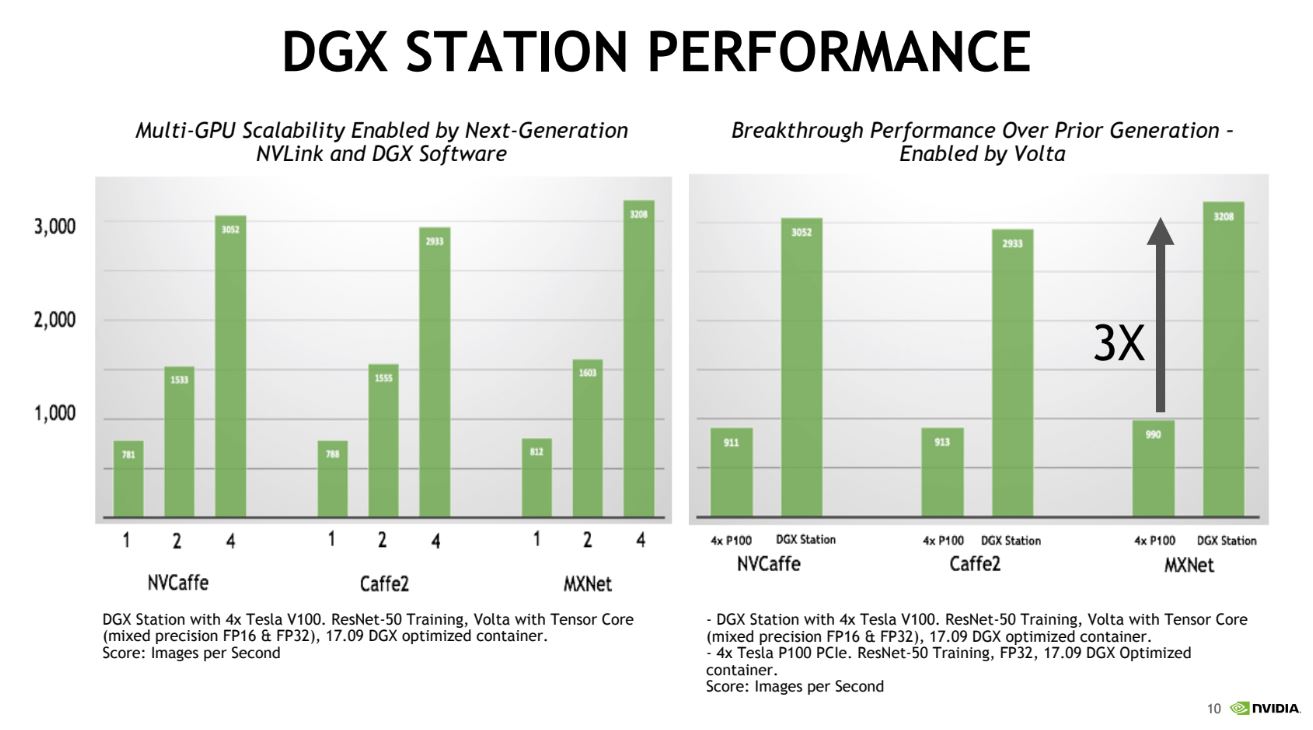

The price tag may seem extreme, but the system has exclusive capabilities. For now, it is the only workstation available that can run four V100s together over NVLink; other workstations, including those individual developers build themselves, are limited to two-way NVLink configurations. NVLink boosts performance by providing wider data paths between the GPUs, and Nvidia says the four-way NVLink arrangement offers 5x the inter-GPU bandwidth of a standard PCIe connection.
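To put the 5x figure in perspective, a bandwidth-bound transfer takes roughly its size divided by the link bandwidth. The Python sketch below assumes about 16 GB/s per direction for a PCIe 3.0 x16 link and simply applies Nvidia's 5x multiplier; the 1GB payload is a hypothetical example, and the model ignores latency and protocol overhead.

```python
# Assumed baseline: PCIe 3.0 x16 moves roughly 16 GB/s in each direction.
PCIE3_X16_GBPS = 16.0
NVLINK_FACTOR = 5.0  # Nvidia's claimed inter-GPU bandwidth advantage


def transfer_ms(size_gb: float, bandwidth_gbps: float) -> float:
    """Idealized time in milliseconds to move size_gb at a sustained bandwidth (no latency)."""
    return size_gb / bandwidth_gbps * 1000.0


payload_gb = 1.0  # hypothetical buffer exchanged between GPUs, e.g. gradients
pcie = transfer_ms(payload_gb, PCIE3_X16_GBPS)
nvlink = transfer_ms(payload_gb, PCIE3_X16_GBPS * NVLINK_FACTOR)
print(f"PCIe: {pcie:.1f} ms, NVLink: {nvlink:.1f} ms")
```

Because the time scales inversely with bandwidth, any multi-GPU job that spends much of its time exchanging data between GPUs benefits roughly in proportion to that 5x, while compute-bound work sees little change.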

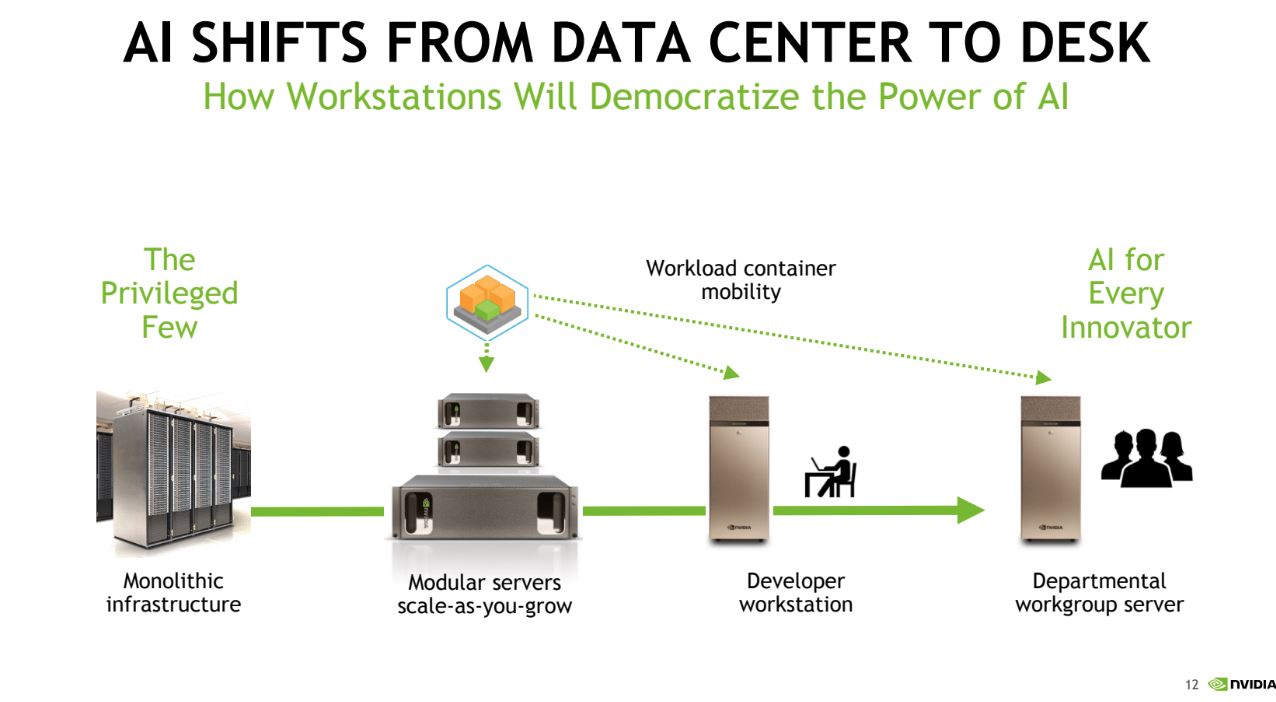

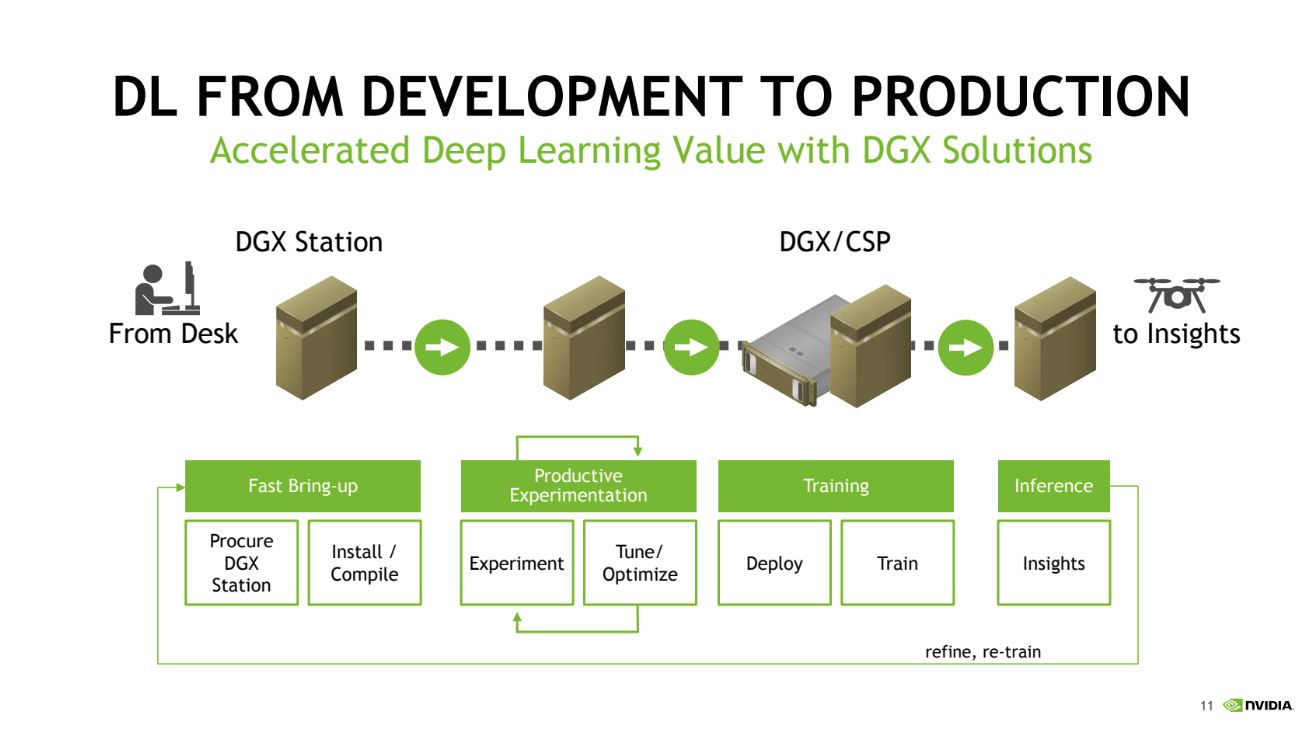

Nvidia also includes its software suite, which comes pre-qualified and preloaded on the system; the company claims the optimized stack offers up to a 30% performance advantage. Developers can simply load pre-optimized containers, develop their software, and then migrate it to production-class servers in remote data centers. Of course, Nvidia envisions these systems working in tandem with its DGX-1 servers (pictured above), a pairing that lets developers migrate code without recompiling.

Frank Wu, Director of Machine Learning at SAP, explained that one of the immediate benefits of the DGX Station was a drastic reduction in the time it took to train deep learning models. SAP had previously used a CPU-based system in its lab, but the DGX Station's compact, quiet design allowed the company to use it in a typical office setting. The team designs models in the office, then migrates them directly from the DGX Station to the DGX-1 server in a remote data center. SAP also found that the system has enough horsepower to support multiple simultaneous users, which is ideal for its deep learning group because the system is shared by teams in both the U.S. and Germany. SAP's HANA group, for instance, also uses the system to develop its GPU-accelerated database software.


The DGX Station's 25% discount is available through resellers and applies only to the first system purchase; follow-on purchases carry the normal $69,000 price tag. The Jump Start program runs until April 29, 2018.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

Comments

popatim

Wow, that makes the Volta card about 10x (TFLOPS) better than a 1080 Ti and Titan Xp. Amazing.

TJ Hooker

Quoting popatim: "Wow, that makes the Volta card about 10x (TFLOPS) better than a 1080 Ti and Titan Xp. Amazing."

The 500 TFLOPS number in the article only applies to specific applications that can make use of the tensor cores, e.g. deep learning. For general-purpose single-precision compute, the V100 is around 50% faster than a 1080 Ti (~15 vs ~10 TFLOPS).

bit_user

Quoting another reader: "So, um... Can it run Crysis?"

https://www.anandtech.com/show/12170/nvidia-titan-v-preview-titanomachy/8

Though I'd bet it would only use one of the GPUs.

bit_user

Quoting TJ Hooker: "The 500 TFLOPS number in the article only applies to specific applications that can make use of the tensor cores, e.g. deep learning."

Somewhere, I ran across a paper on how to refactor some classical HPC problem to exploit the tensor cores. Of course, there were some efficiency losses from mitigating the lower precision, but the net effect was better performance than simply using its double-precision units.
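The paper isn't named, so we can't say which method it used. One well-known way to get high-precision answers out of low-precision hardware is mixed-precision iterative refinement: factor and solve the linear system Ax = b cheaply in FP16, then repeatedly compute the residual in double precision and solve for a correction with the same FP16 factors. The Python sketch below is our own illustration, not code from any paper. It emulates FP16 by rounding every intermediate result through `struct`'s half-precision `'e'` format (real tensor cores multiply in FP16 but accumulate in FP32, so this is more pessimistic), and the 3×3 matrix is an arbitrary, well-conditioned example.

```python
import struct
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision value (emulated FP16)."""
    return struct.unpack("e", struct.pack("e", x))[0]


def lu_fp16(a: Matrix) -> Tuple[Matrix, List[int]]:
    """LU factorization with partial pivoting, rounding every result to FP16."""
    n = len(a)
    lu = [[fp16(v) for v in row] for row in a]
    perm = list(range(n))
    for k in range(n):
        # Partial pivoting: move the largest remaining entry in column k to the diagonal.
        p = max(range(k, n), key=lambda i: abs(lu[i][k]))
        lu[k], lu[p] = lu[p], lu[k]
        perm[k], perm[p] = perm[p], perm[k]
        for i in range(k + 1, n):
            lu[i][k] = fp16(lu[i][k] / lu[k][k])  # multiplier, stored in L
            for j in range(k + 1, n):
                lu[i][j] = fp16(lu[i][j] - fp16(lu[i][k] * lu[k][j]))
    return lu, perm


def solve_fp16(lu: Matrix, perm: List[int], b: Vector) -> Vector:
    """Solve A x = b with the FP16 LU factors (forward, then back substitution)."""
    n = len(lu)
    y = [0.0] * n
    for i in range(n):
        s = fp16(b[perm[i]])
        for j in range(i):
            s = fp16(s - fp16(lu[i][j] * y[j]))
        y[i] = s
    x = [0.0] * n
    for i in reversed(range(n)):
        s = y[i]
        for j in range(i + 1, n):
            s = fp16(s - fp16(lu[i][j] * x[j]))
        x[i] = fp16(s / lu[i][i])
    return x


def refine(a: Matrix, b: Vector, iters: int = 5) -> Vector:
    """Mixed-precision iterative refinement: FP16 factor/solve, FP64 residual and update."""
    n = len(a)
    lu, perm = lu_fp16(a)
    x = solve_fp16(lu, perm, b)
    for _ in range(iters):
        # Residual r = b - A x in double precision (ordinary Python floats).
        r = [b[i] - sum(a[i][j] * x[j] for j in range(n)) for i in range(n)]
        scale = max(abs(v) for v in r)
        if scale == 0.0:
            break
        # Normalize r so the FP16 solve doesn't underflow, then undo the scaling.
        d = solve_fp16(lu, perm, [v / scale for v in r])
        x = [x[i] + scale * d[i] for i in range(n)]
    return x


A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
b = [6.0, 10.0, 8.0]  # chosen so the exact solution is [1, 2, 3]
x_fp16 = solve_fp16(*lu_fp16(A), b)
x_refined = refine(A, b)
print("FP16 only:", x_fp16)
print("refined:  ", x_refined)
```

The expensive O(n³) factorization runs entirely in low precision, while each refinement step costs only O(n²), which is where the speedup comes from. The trade-off is that refinement only converges when the matrix is well enough conditioned for FP16 arithmetic, so practical solvers check convergence and fall back to a higher-precision factorization when it fails.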


TJ Hooker

Quoting another reader: "What about hash power?"

What about it? Are you asking what the cryptocurrency hash rate of this workstation would be? If so, it depends on what you're mining; if you google "tesla v100 hashrate" you can find some numbers.