The refresh that wasn’t — AMD announces ‘Hawk Point’ Ryzen 8040 Series, teases Strix Point

A not-so-fresh refresh.

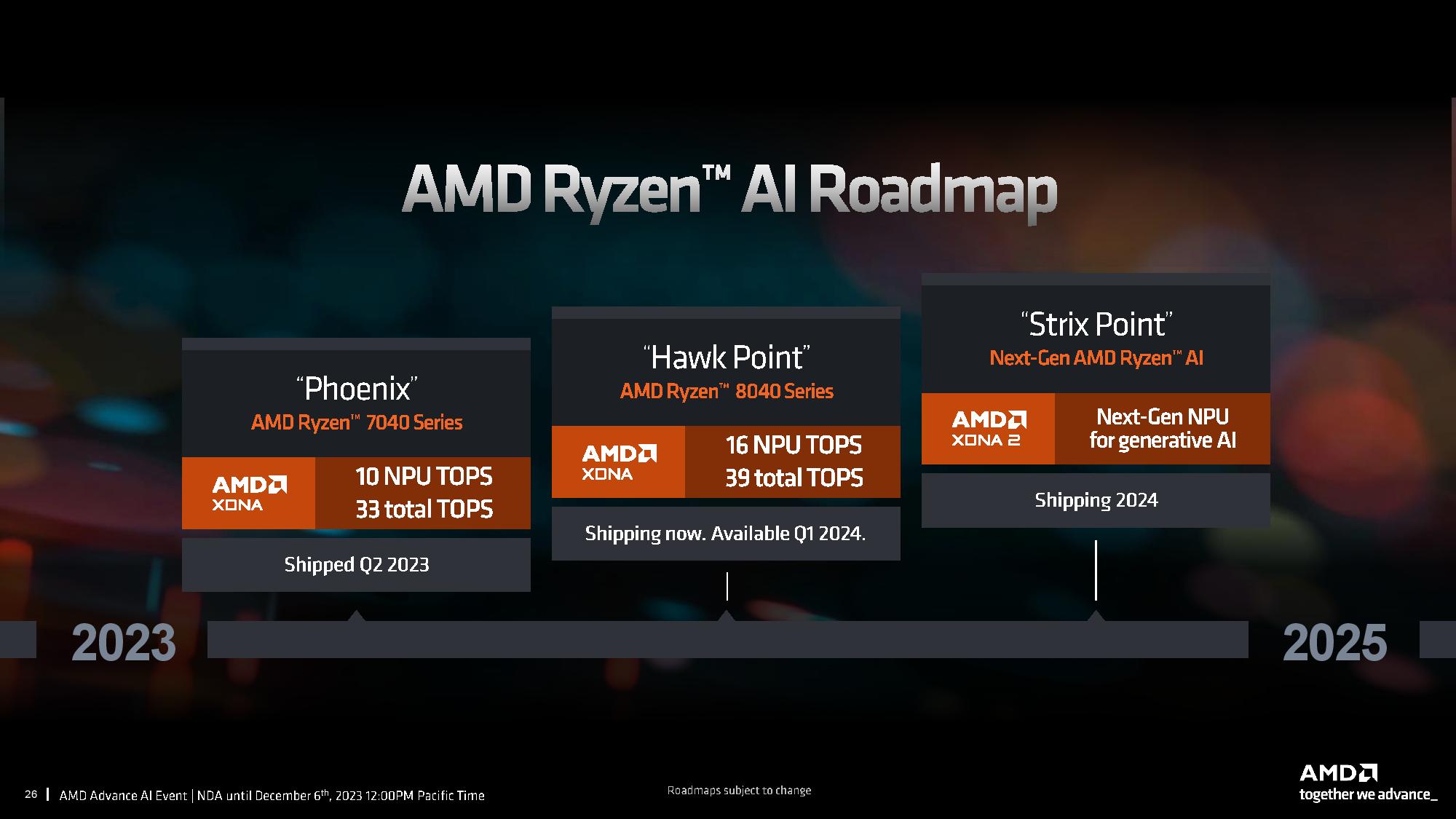

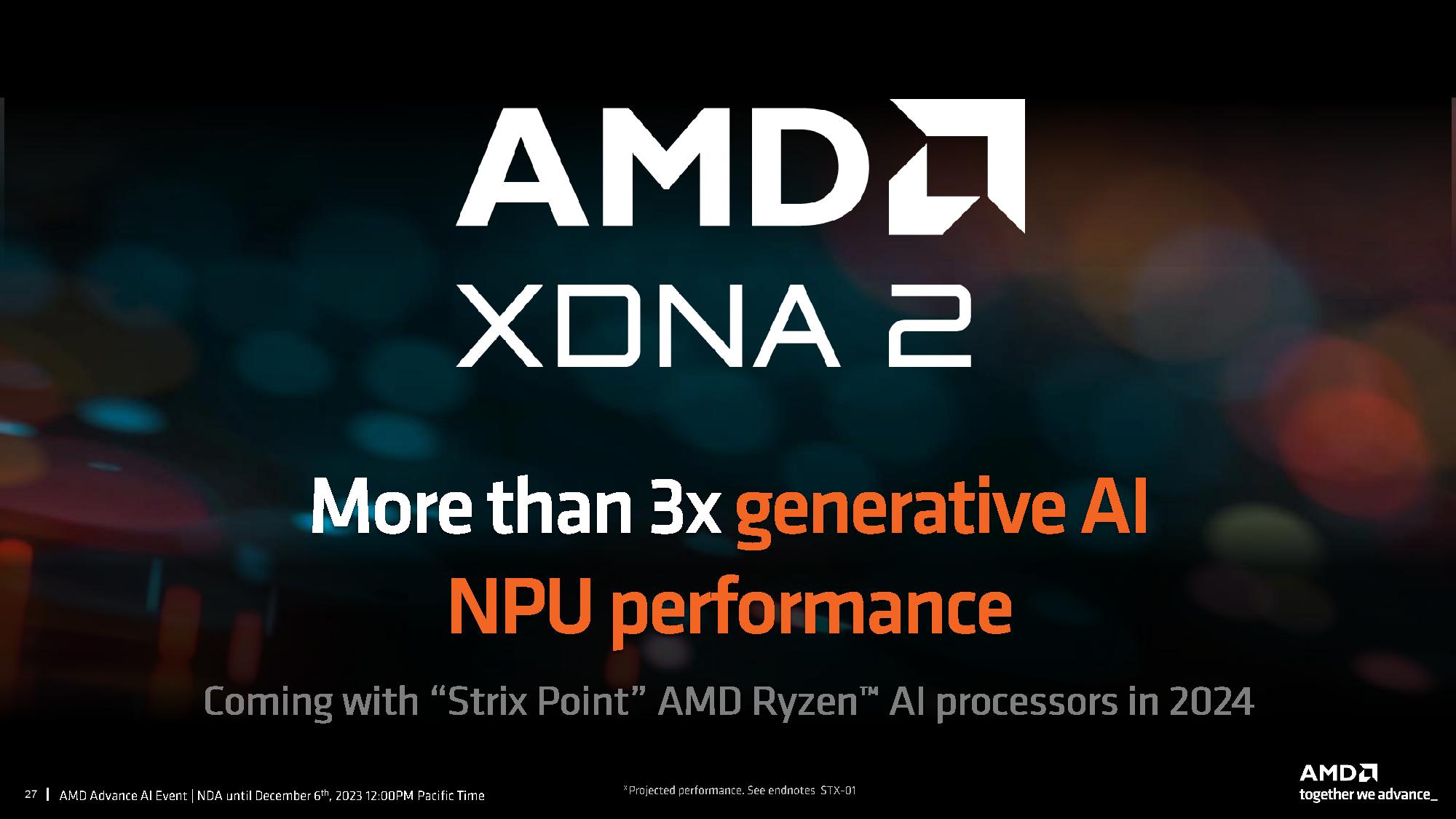

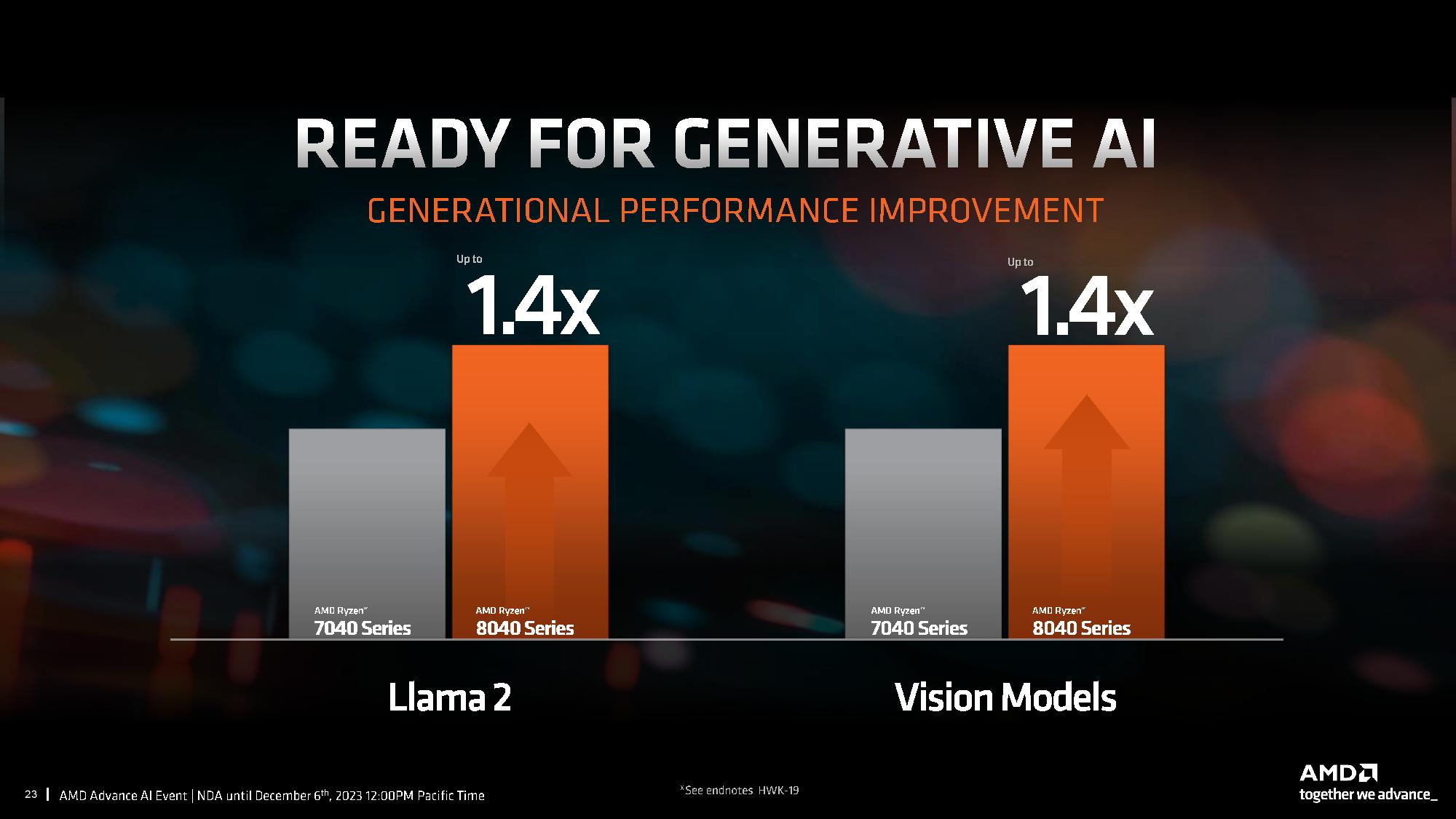

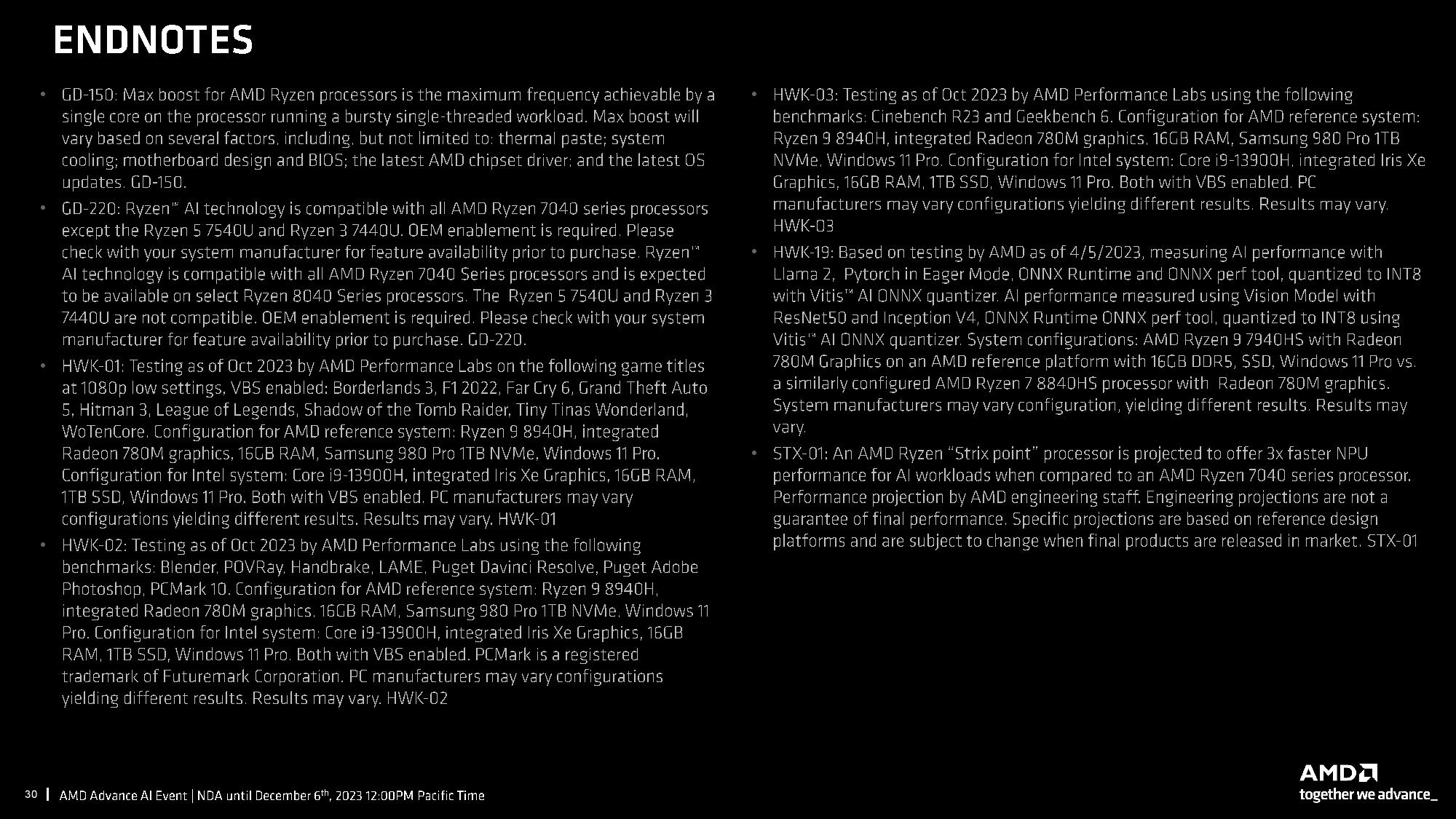

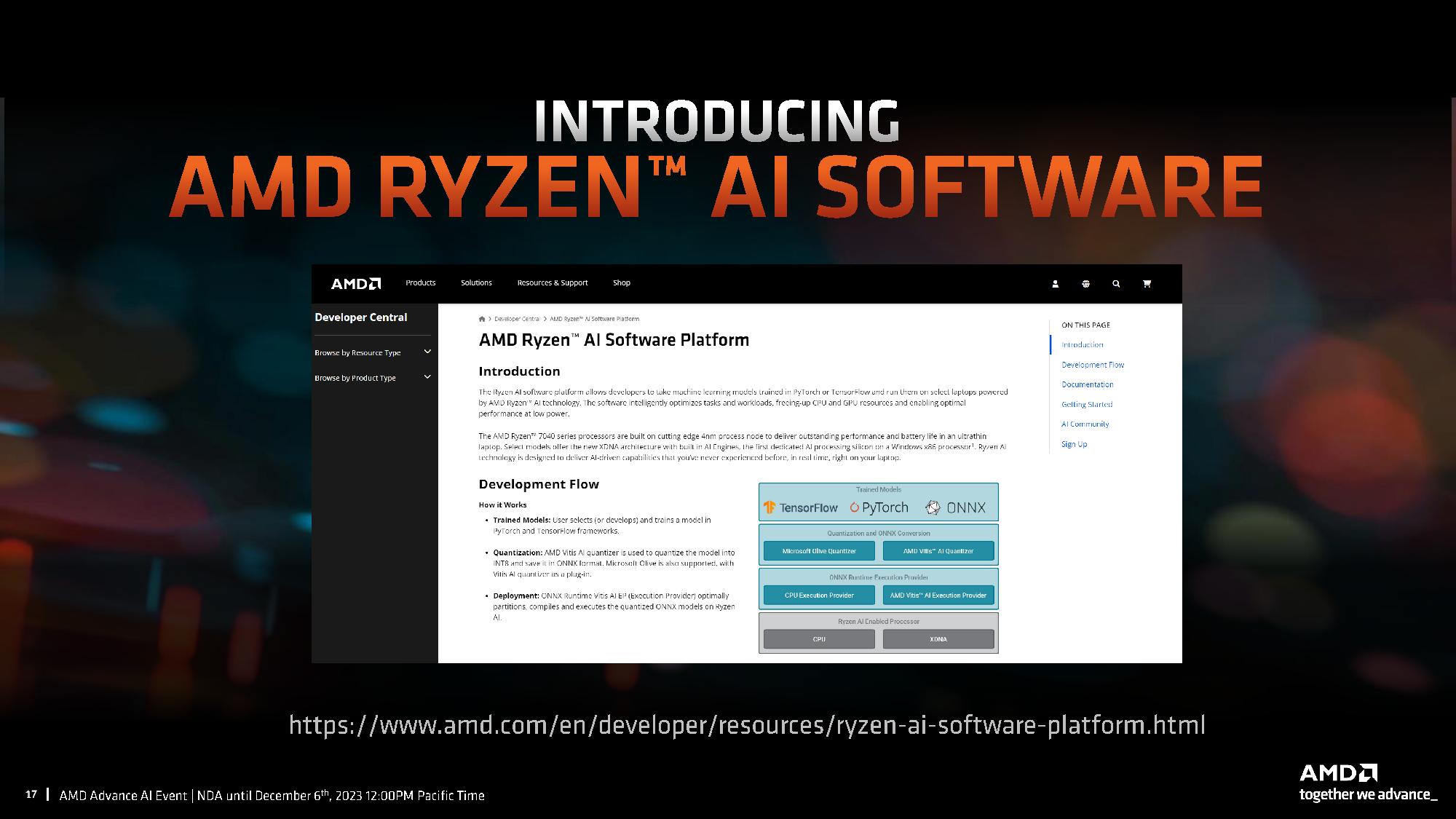

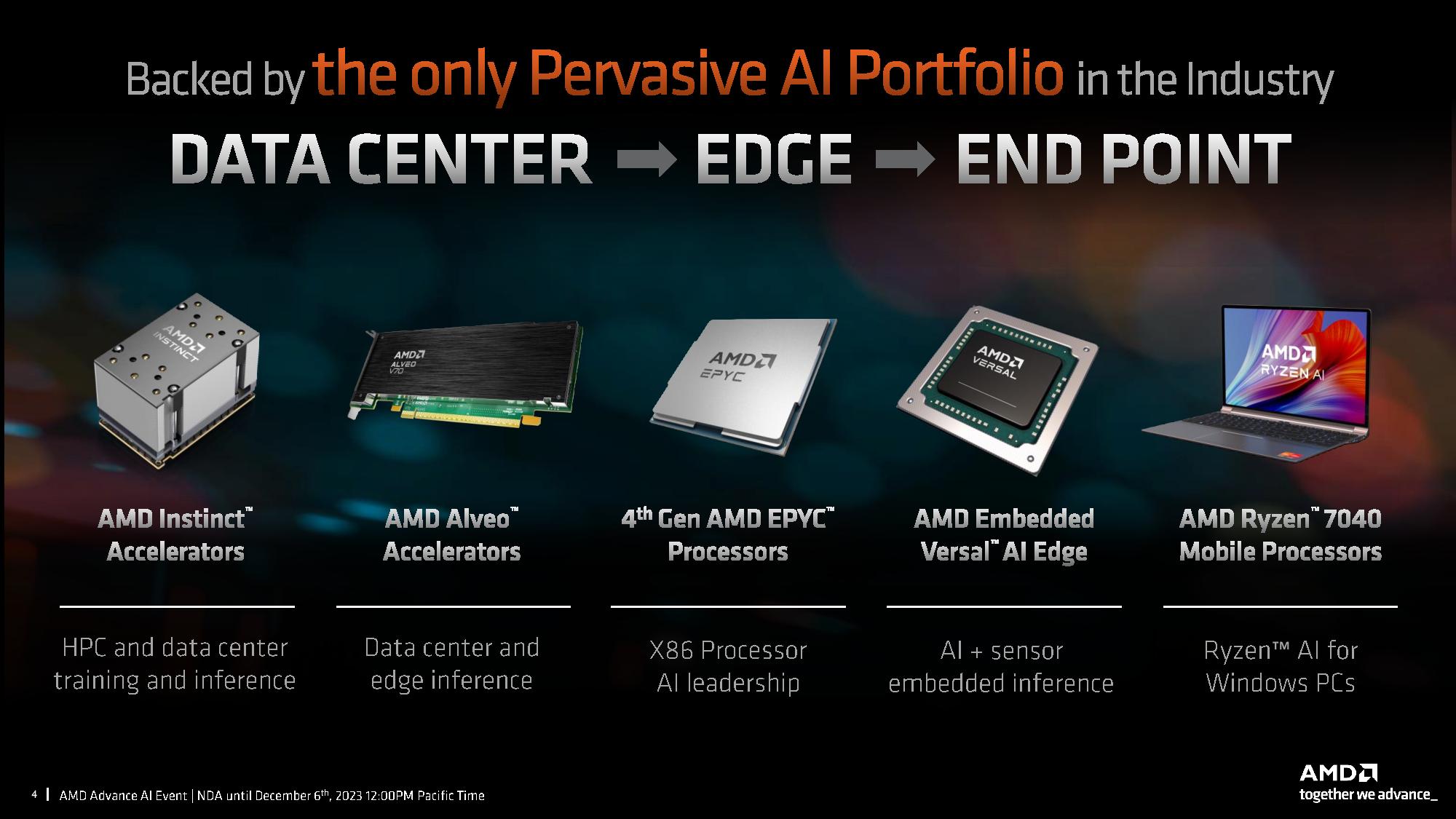

AMD announced its Ryzen 8040 series of mobile processors, codenamed “Hawk Point,” here at its Advancing AI event in San Jose, California, claiming the new processors deliver up to a 1.4X increase in some generative AI workloads. AMD also teased its future “Strix Point” processors that will arrive in 2024 with more than three times the AI performance of their predecessors, which comes courtesy of a new and improved XDNA 2 NPU engine. AMD also revealed its new Ryzen AI Software, a single-click application that allows enthusiasts and developers to deploy pre-trained AI models that work with the XDNA AI engine.

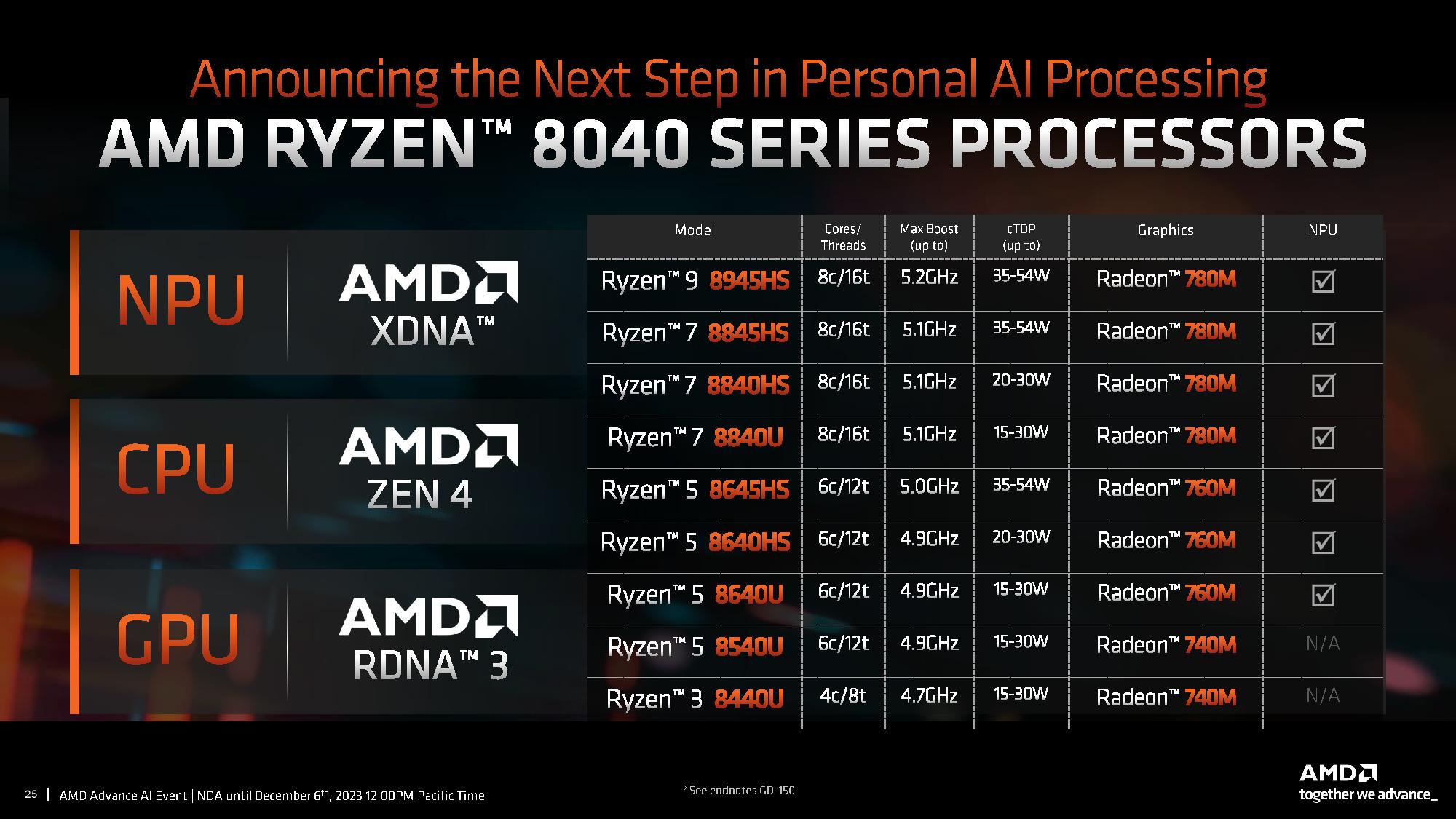

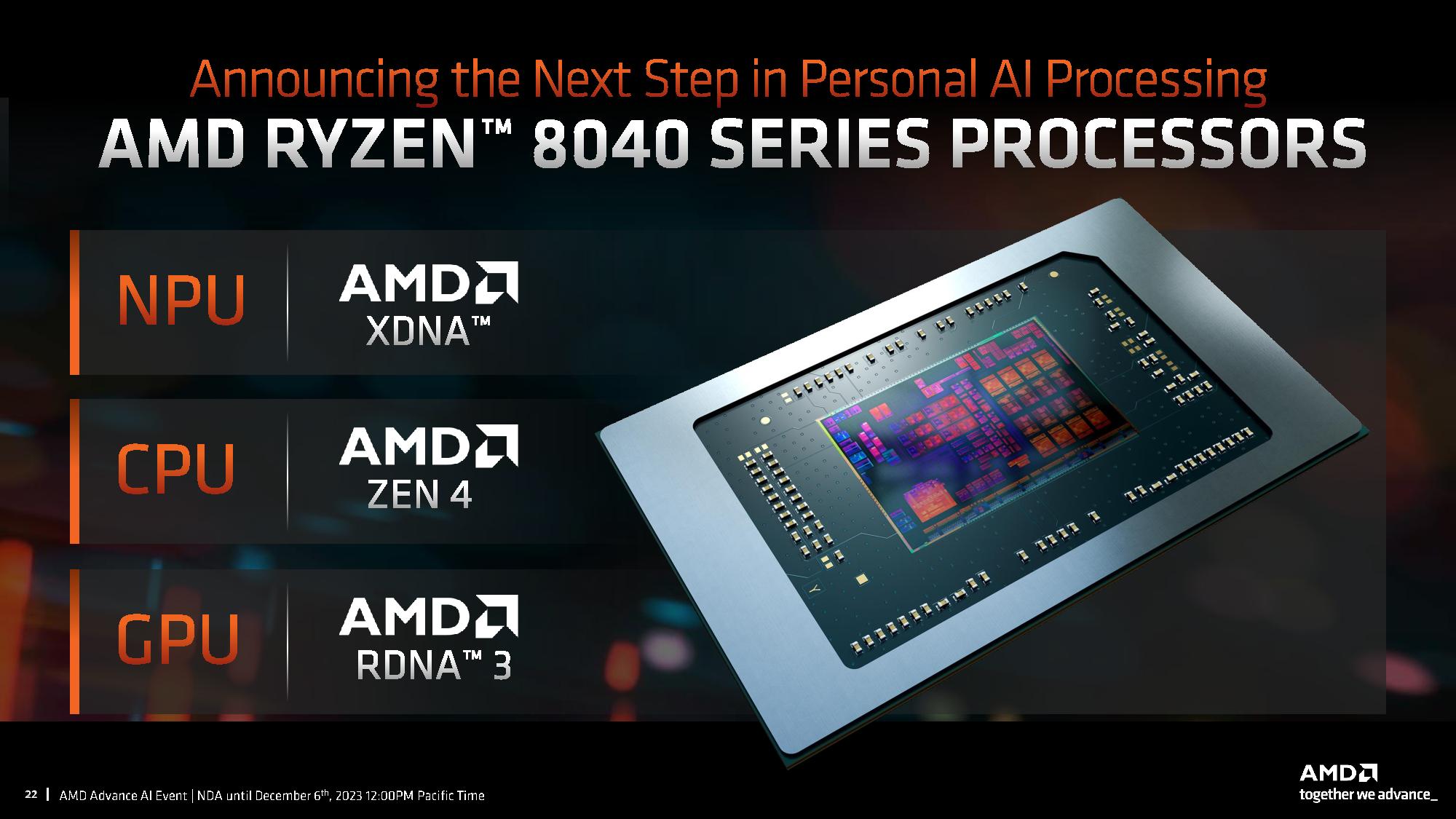

The new Hawk Point lineup is the first to carry Ryzen 8000 branding, but the chips feature the same 4nm Zen 4 cores, RDNA 3 graphics, and XDNA AI engine as the existing Ryzen 7040 “Phoenix” processors. As we typically see with refresh generations, AMD has introduced these models to give OEMs a new lineup of refreshed processors for updated laptop designs. However, aside from a few models with reduced specs, many of the new Ryzen 8040 processors appear to be near carbon copies of their predecessors: core counts and frequencies remain the same across most of the lineup.

That makes for what appears on the surface to be an underwhelming launch — even considering the typical lackluster improvements we expect from a refresh generation. Still, AMD has improved the performance of its in-built AI engine, and it also has plenty of other exciting developments in flight. AMD says the Ryzen 8040 series is already shipping to its partners, with devices expected to be on the market in the first quarter of next year. Let’s dive in.

AMD teases Strix Point

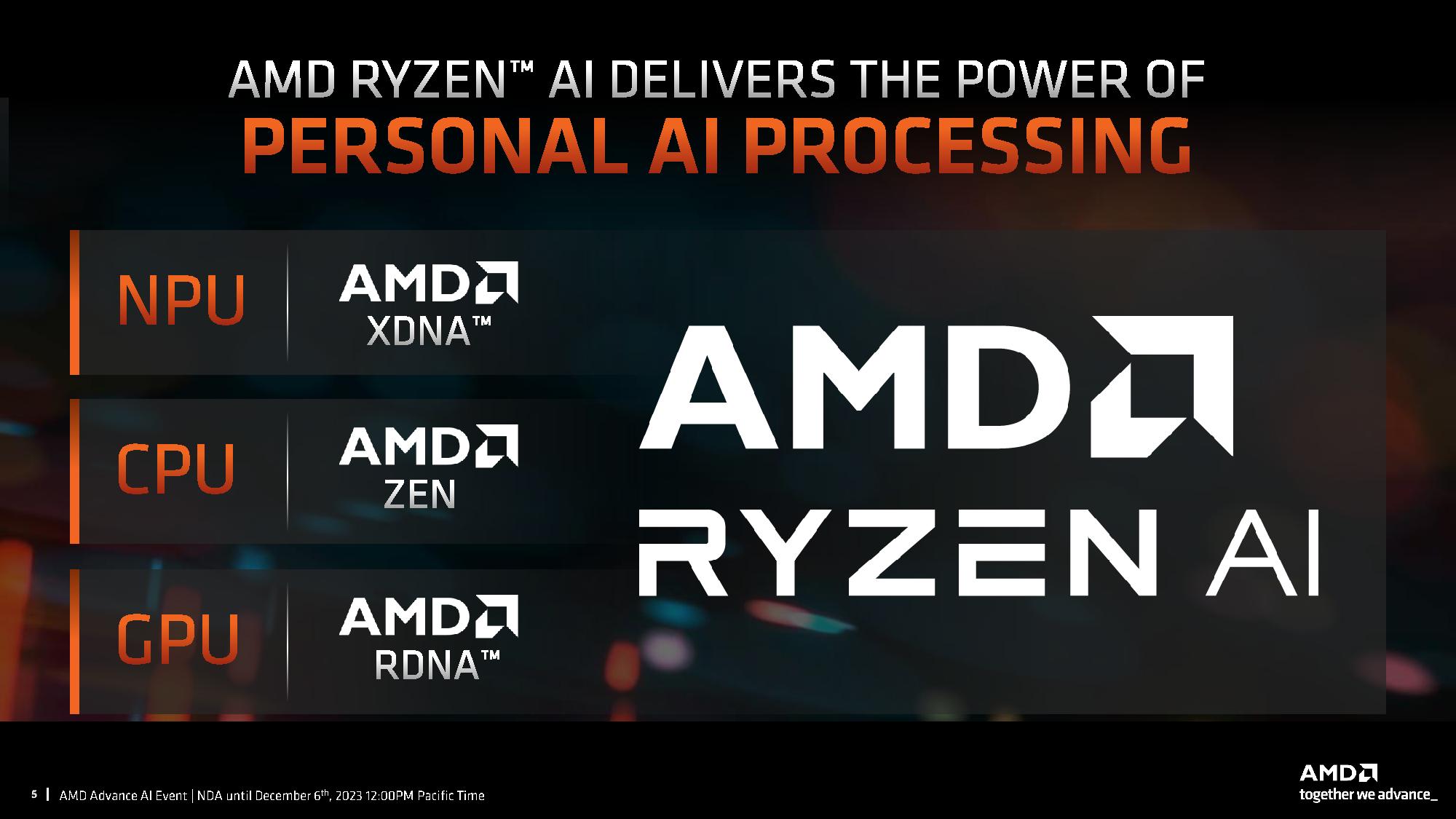

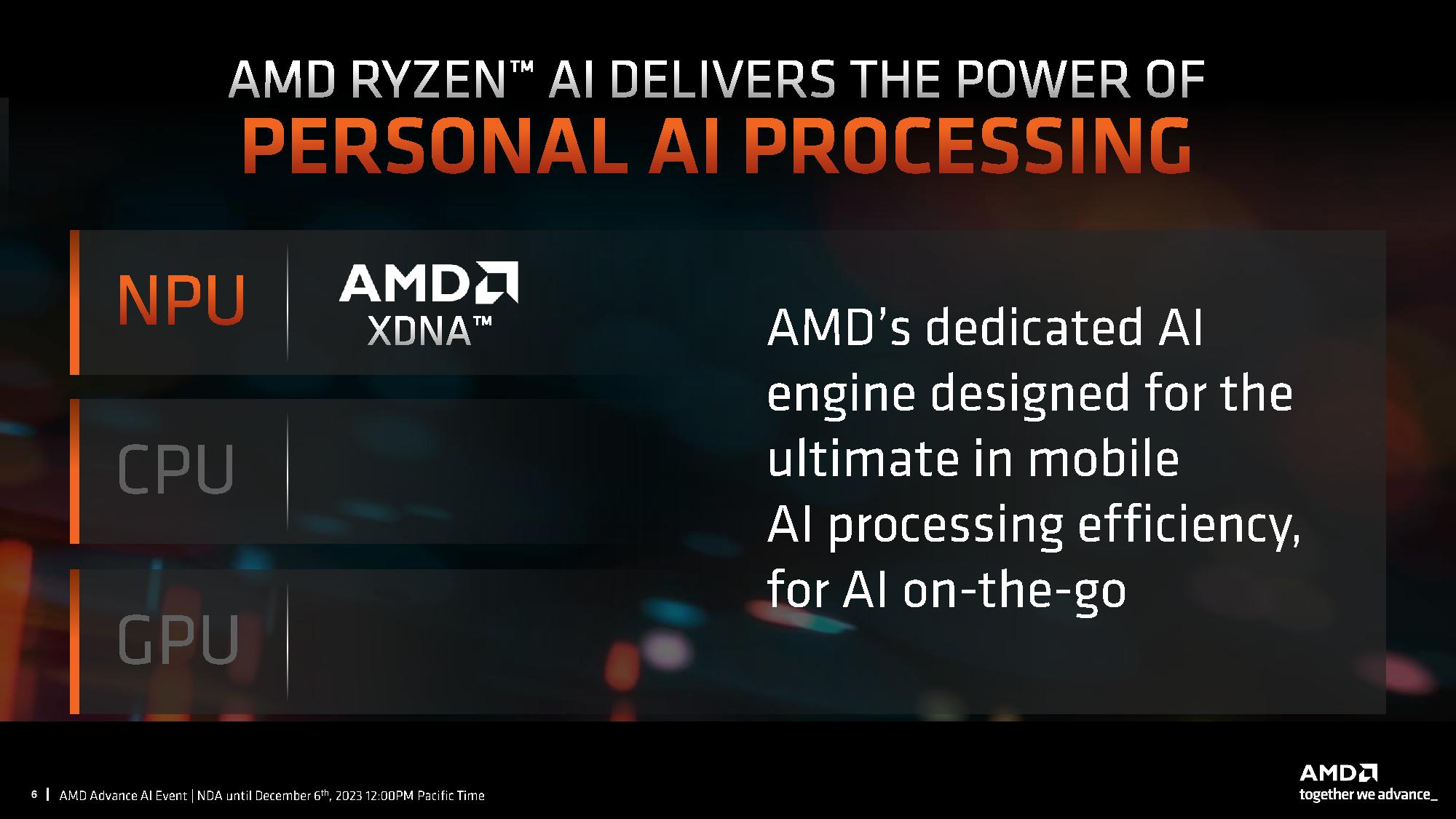

AMD’s previous-gen Ryzen 7040 series was the first to feature an NPU (Neural Processing Unit) for AI workloads. The NPU, built around the XDNA AI engine, is a low-power dedicated accelerator that resides on the same die as the CPU cores. It is designed to execute lower-intensity AI inference workloads, like photo, audio, and video processing, using INT8 instructions at lower power than the CPU or GPU cores. Because inference runs locally, it can deliver faster response times than online services, and offloading this work from the CPU and GPU boosts performance and saves battery power.

AMD teased Strix Point, its next-generation processor lineup that will arrive later in 2024. The company says these processors will feature a next-gen XDNA 2 engine that delivers up to 3X the performance of its first-gen XDNA 1 NPU. AMD also provided performance figures for XDNA 1, saying the NPU alone delivers 10 TOPS (trillions of INT8 operations per second) in the Phoenix 7040 series, rising to 16 TOPS in the Hawk Point 8040 series. AMD didn’t divulge the test methodology behind these metrics in its deck, but we do know that the total TOPS figure in the slide represents the CPU, GPU, and NPU all working in concert.
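AMD's NPU figures invite some quick arithmetic. The minimal Python sketch below uses only the 10 and 16 TOPS numbers AMD quoted. Because AMD's deck doesn't say whether the 3X Strix Point claim is measured against Phoenix or Hawk Point (both use XDNA 1), it computes both readings; the constant and function names are our own, not AMD's.

```python
# NPU-only INT8 throughput figures quoted by AMD, in TOPS.
PHOENIX_NPU_TOPS = 10     # Ryzen 7040 "Phoenix", XDNA 1
HAWK_POINT_NPU_TOPS = 16  # Ryzen 8040 "Hawk Point", XDNA 1
STRIX_MULTIPLIER = 3      # "up to 3X" for XDNA 2; AMD didn't name the baseline


def uplift(new_tops: float, old_tops: float) -> float:
    """Return a generational speedup as a simple ratio (new / old)."""
    return new_tops / old_tops


hawk_vs_phoenix = uplift(HAWK_POINT_NPU_TOPS, PHOENIX_NPU_TOPS)

# Two readings of the Strix Point claim, depending on which XDNA 1 part is the baseline.
strix_if_phoenix_base = STRIX_MULTIPLIER * PHOENIX_NPU_TOPS   # 30 TOPS
strix_if_hawk_base = STRIX_MULTIPLIER * HAWK_POINT_NPU_TOPS   # 48 TOPS

if __name__ == "__main__":
    print(f"Hawk Point vs. Phoenix NPU: {hawk_vs_phoenix:.1f}x")
    print(f"Strix Point, 3X Phoenix:    {strix_if_phoenix_base} TOPS")
    print(f"Strix Point, 3X Hawk Point: {strix_if_hawk_base} TOPS "
          f"({uplift(strix_if_hawk_base, PHOENIX_NPU_TOPS):.1f}x Phoenix)")
```

Run as a script, it reports a 1.6x Hawk Point uplift and a Strix Point NPU estimate of either 30 or 48 TOPS; the 48 TOPS reading works out to 4.8x Phoenix. These are paper numbers only, since AMD hasn't disclosed how it measured them.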

Ryzen Hawk Point 8040 mobile processors

The 8040 series continues to focus heavily on integrated NPUs, with all but the two lowest-end models equipped with the engine. AMD points to improved NPU performance as one of the key selling points for the 8040 series, saying the XDNA engine's throughput rises from 10 TOPS in the 7040 models to 16 TOPS in the new 8040 series. AMD attributes the gain to higher NPU frequencies and improved efficiency. You’ll have to take AMD at its word, though: it doesn’t publish NPU clock speeds for either the inaugural 7040 lineup or the new 8040 series, so we can’t make direct comparisons.

AMD has reshuffled its product branding to adhere to its new naming convention, with three of the “HS” models now having a ‘5’ in the product name to identify them as upper-tier models within the HS product stack. Yes, it’s more than a bit confusing.

The eight-core, 16-thread Ryzen 9 8945HS has the same 45W TDP as its direct predecessor, the 7940HS, as well as the same 5.2 GHz peak boost and 4.0 GHz base frequencies. All the other specs appear identical too, and the same holds for the 8845HS and 8645HS: we see no significant changes beyond the new product naming to merit the step up to Ryzen 8000 branding. We asked AMD what exactly precipitated the move to denote these as Ryzen 8000 models, and the company indicated that improved AI performance is the primary motivation; this refresh is designed first and foremost to boost performance in AI workloads.

We spotted a few down-clocked models in the lineup as well. These two Ryzen 8x40HS models are 28W processors designed for ultrathin laptops, but their direct predecessors used to fit into the 45W swim lane. As a result, we see the ‘0’ in the product name to denote these are now lower-tier models within the HS lineup, and we also see the reduced specs that reflect AMD’s tweaks to enable the lower power budget.

The Ryzen 7 8840HS has the same 5.1 GHz peak boost clock as its predecessor, the 7840HS, but AMD reduced the base clock by 500 MHz to accommodate the new 28W TDP. Meanwhile, the Ryzen 5 8640HS gets a substantial 800 MHz reduction in base clock compared to the previous-gen Ryzen 5 7640HS, and AMD also shaved 100 MHz off its boost frequency.

The U-series Ryzen 5 and 7 models also slot into the 28W category. These four processors all feature the same CPU and GPU clock speeds as their predecessors, except for a 300 MHz clock rate increase for the Radeon 760M graphics engine in the Ryzen 5 8540U. Aside from AMD’s unspecified changes to NPU frequencies, these also appear to largely be the same processors as their predecessors, but with new branding.

Notably, the 'new' Ryzen 5 8540U and Ryzen 3 8440U are remarkably quick refreshes of the previously announced Ryzen 5 7545U and Ryzen 3 7440U, both of which employ AMD's Zen 4c cores. The 8540U comes with two standard Zen 4 cores and four Zen 4c cores, whereas the Ryzen 3 8440U comes with one standard Zen 4 core and three Zen 4c cores.

Ryzen 8000 Series performance

As always, we should take vendor performance claims with a grain of salt. We’ve included the test setup notes at the end of the above album.

AMD provided the above benchmarks, highlighting a 1.4X increase in the Llama 2 and Vision Maker generative AI benchmarks over the Ryzen 7040 series. Again, AMD cites improved NPU frequencies and efficiency as the source of these improvements, but it hasn’t provided the clock rates for the NPU.

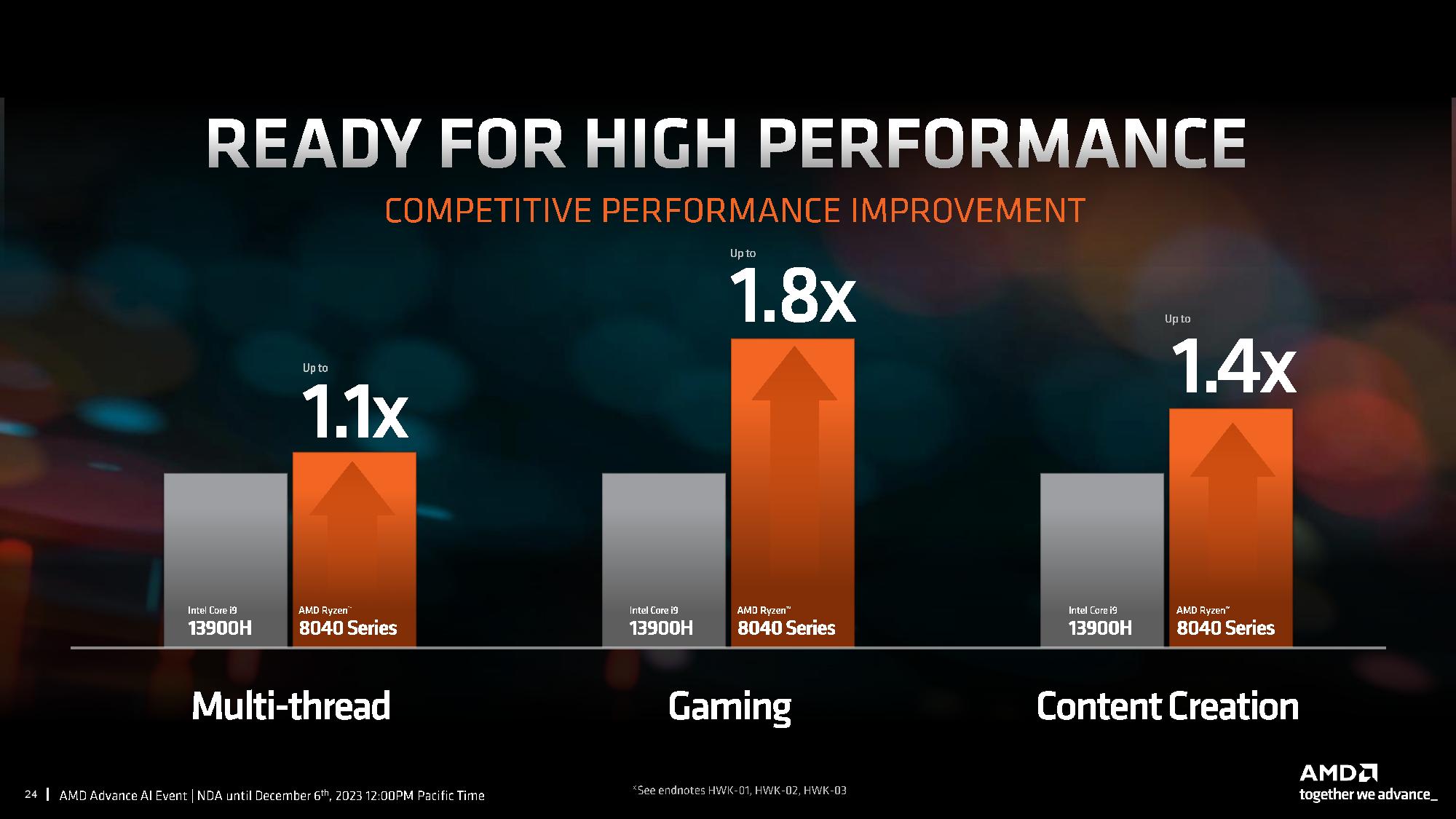

AMD didn’t provide gen-on-gen comparisons for gaming and productivity workloads, which isn’t surprising given that the Ryzen 8000 models either match their prior-gen counterparts or have reduced specs. Here, we can see AMD pitting what it lists as the Ryzen 9 8940H (this SKU doesn’t exist, but we think it's supposed to be the 8945HS) and its Radeon 780M integrated graphics against the Intel Core i9-13900H with integrated Xe graphics.

AMD ran nine different gaming benchmarks, including titles like Borderlands 3, Far Cry 6, and Hitman 3, among others, to derive one metric to quantify gaming performance. Overall, AMD says its processor is 1.8X faster than the Core i9-13900H in 1080p gaming with low fidelity settings. AMD also claims a 1.1X improvement over the 13900H in the Cinebench R23 and Geekbench 6 multi-threaded benchmarks. Finally, AMD says its silicon is up to 1.4X faster than the 13900H in a spate of content creation workloads, including Blender, POV-Ray, and PCMark 10.


AMD Ryzen AI Software

The age of AI is upon us, and AMD has already shipped over a million processors with an in-built XDNA NPU to enable the software ecosystem. However, deploying AI models for local use, which confers performance, security, cost, and efficiency benefits, can be a daunting task.

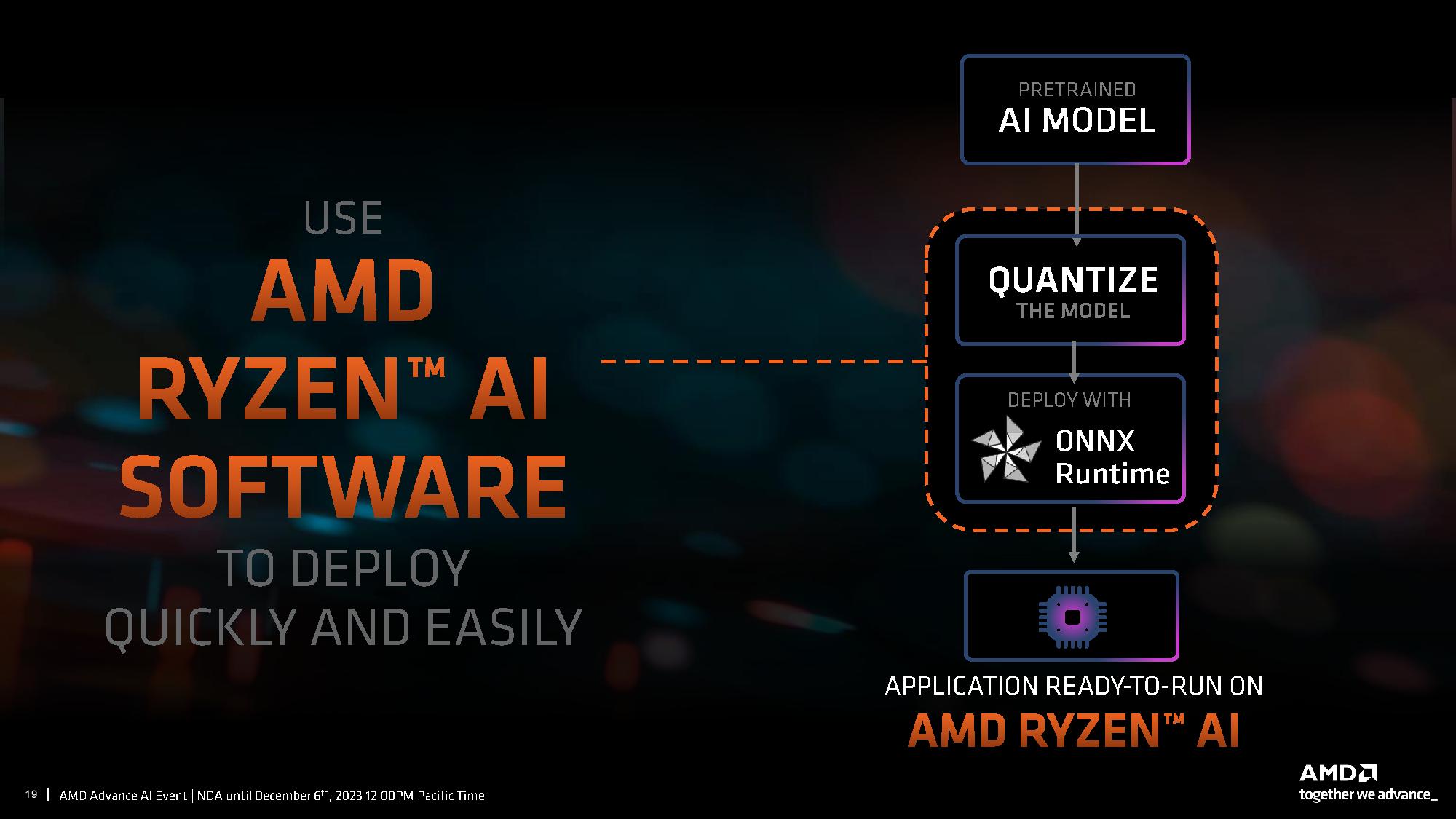

AMD’s Ryzen AI Software suite is designed to let both enthusiasts and developers deploy pre-trained AI models on its silicon with a one-click approach that greatly simplifies the process. Users select a machine learning model trained in a framework like PyTorch or TensorFlow and use AMD’s Vitis AI quantizer to quantize it and convert it to the ONNX format. The software then partitions and compiles the model so that it runs on the Ryzen NPU.
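To illustrate what the quantization step accomplishes, the Python sketch below shows generic symmetric, per-tensor INT8 quantization, which maps floating-point weights onto the 8-bit integers the NPU executes. This is an assumption-laden illustration of the general technique, not how AMD's Vitis AI quantizer actually works (AMD doesn't detail that here), and the function names are hypothetical.

```python
from __future__ import annotations


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor INT8 quantization (generic sketch, not Vitis AI).

    Every value shares one scale factor, chosen so the largest magnitude
    maps to 127; results are clamped to the range [-127, 127].
    """
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero tensor, which would otherwise divide by zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized: list[int], scale: float) -> list[float]:
    """Approximately recover the original floats from the INT8 codes."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.01, 1.0]  # hypothetical FP32 weights
    codes, scale = quantize_int8(weights)
    print(codes, scale)
    print(dequantize_int8(codes, scale))
```

Each restored value lands within half a scale step of the original. That small rounding error is the accuracy trade-off INT8 inference accepts in exchange for cheaper, lower-power math.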

The Ryzen AI Software is available now for free, and AMD also has a pre-optimized model zoo on Hugging Face available for users. The software only works on Windows for now, but we’re told that a Linux version will be made available in the coming quarters.

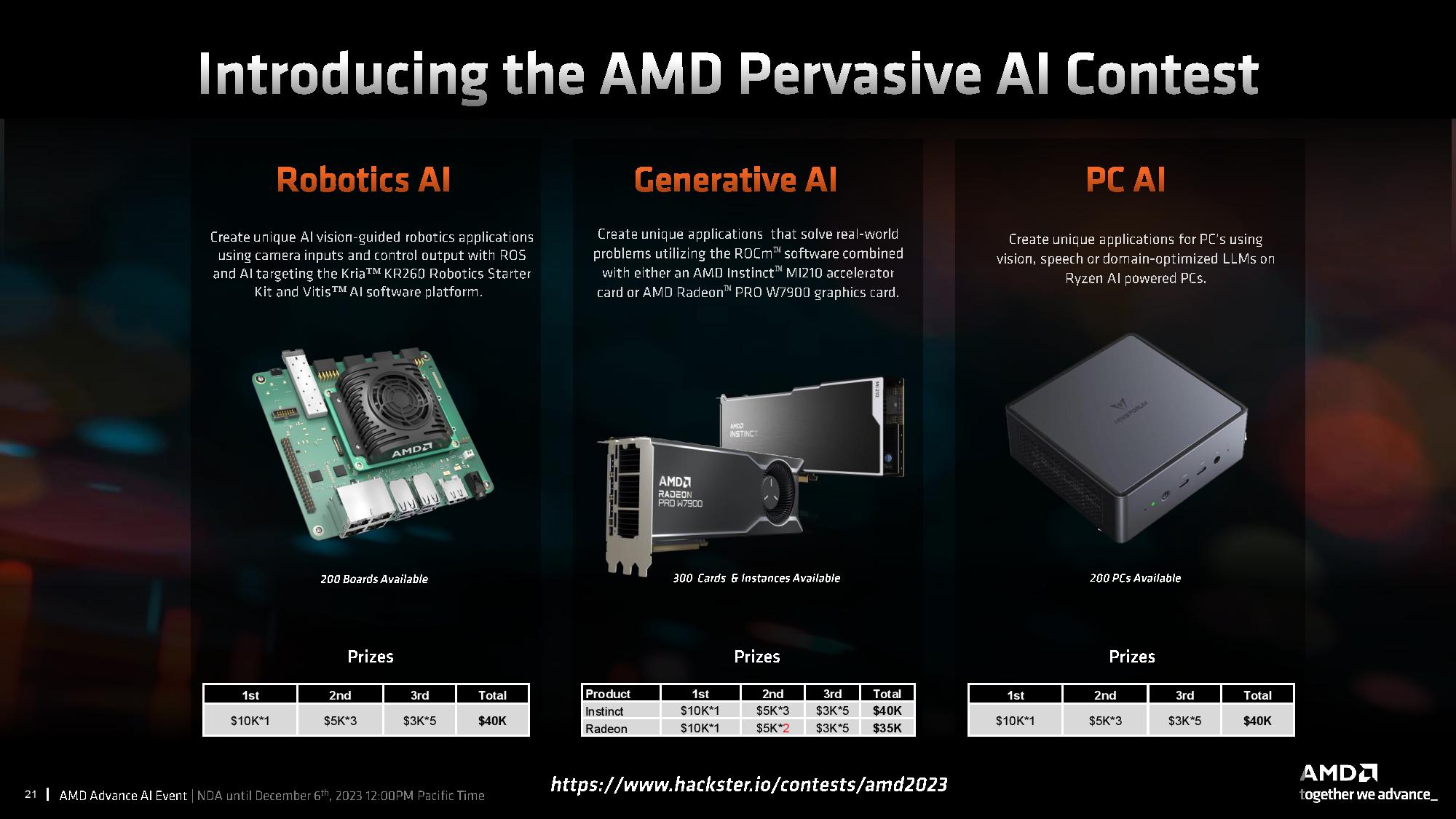

AMD also recently announced its Pervasive AI contest, which provides developers with hardware and awards cash prizes of up to $10,000 for winning AI applications in robotics, generative AI, and PC AI platforms.

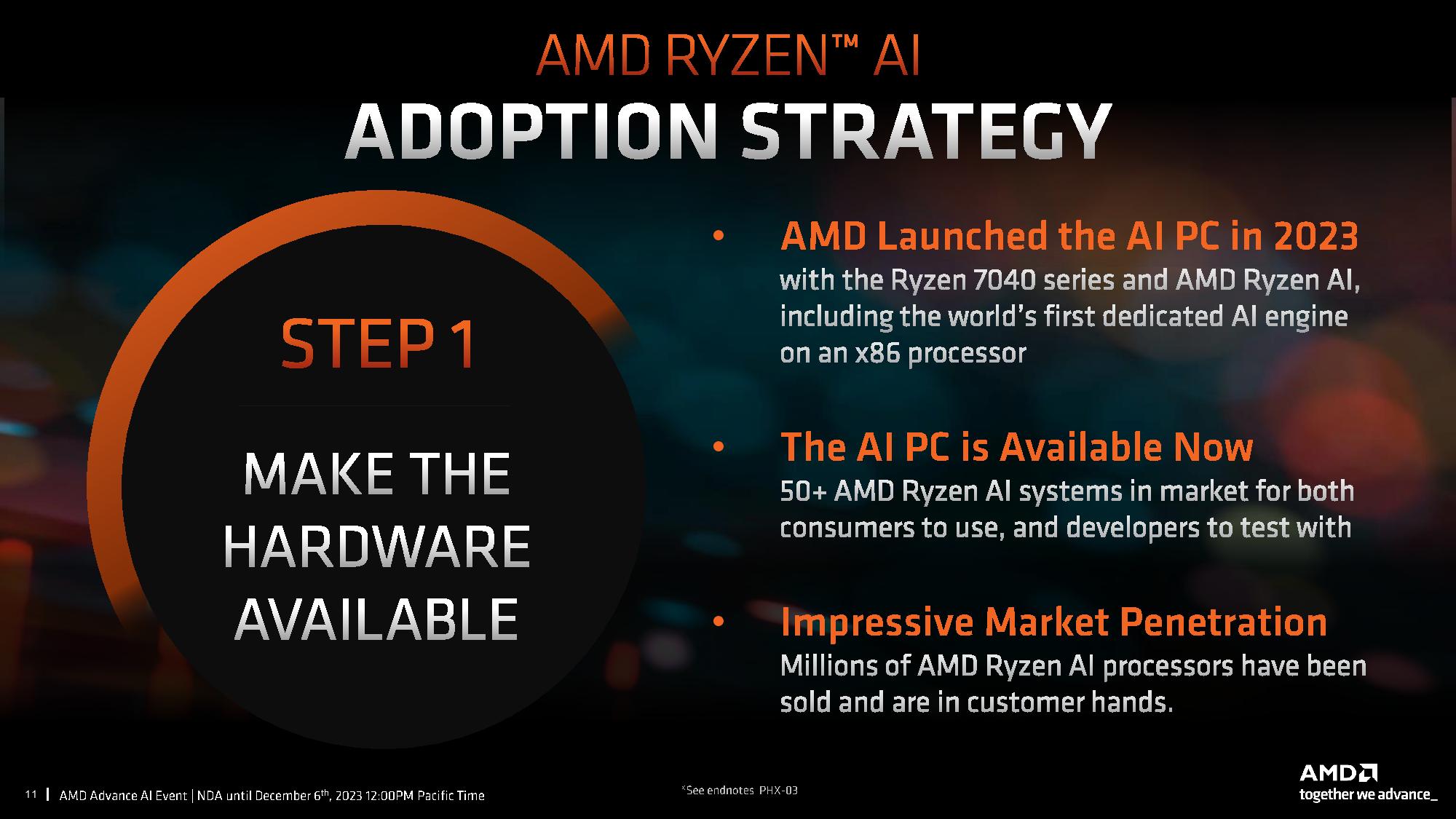

AMD outlined its approach to broadening the ecosystem of applications that leverage AI. As we’ve covered before, AMD’s general strategy has been to enable the hardware first and get it into the market, to the tune of over 1 million XDNA-enabled products so far, thus providing developers with both the motivation and the hardware to develop new applications.

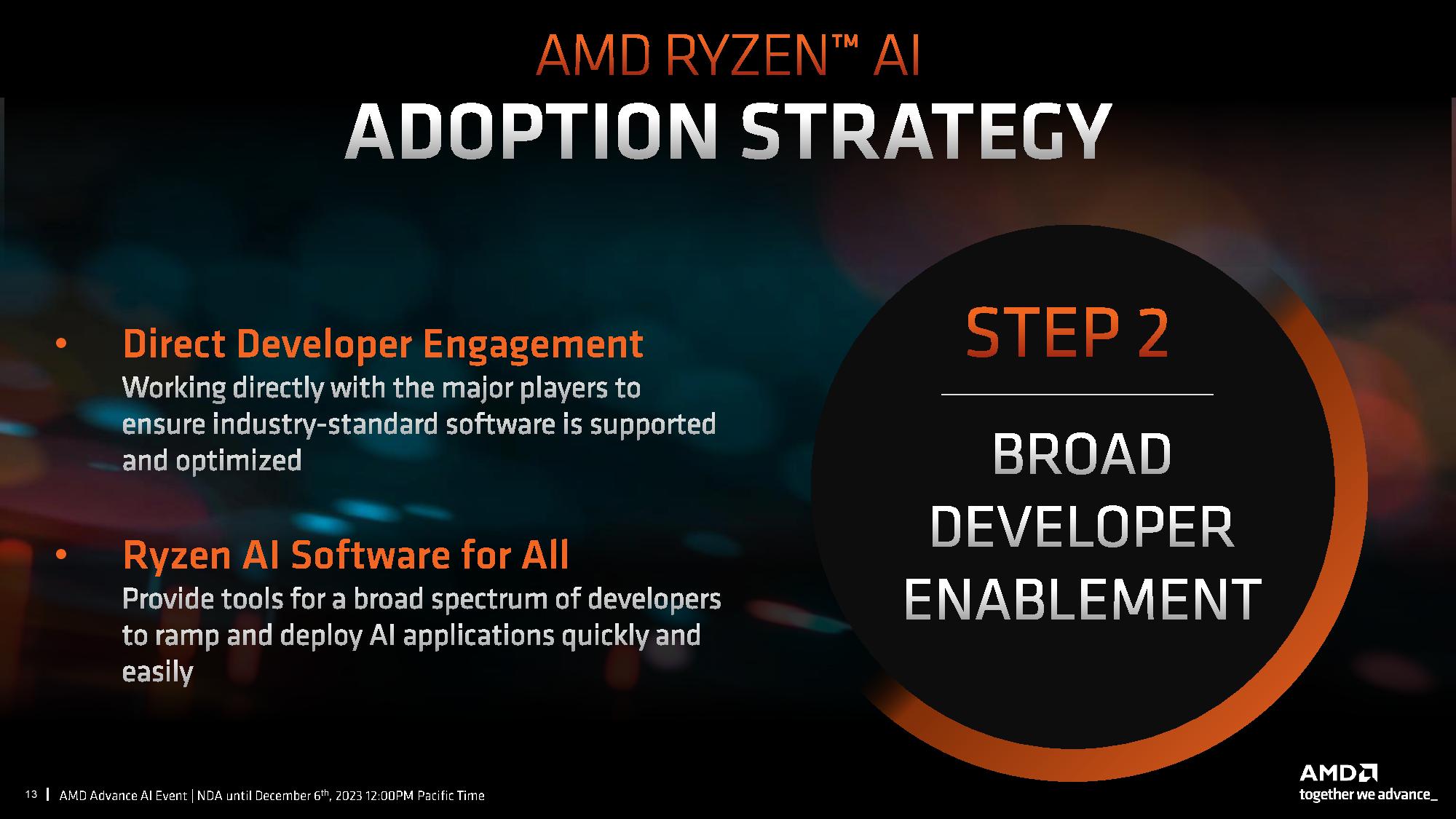

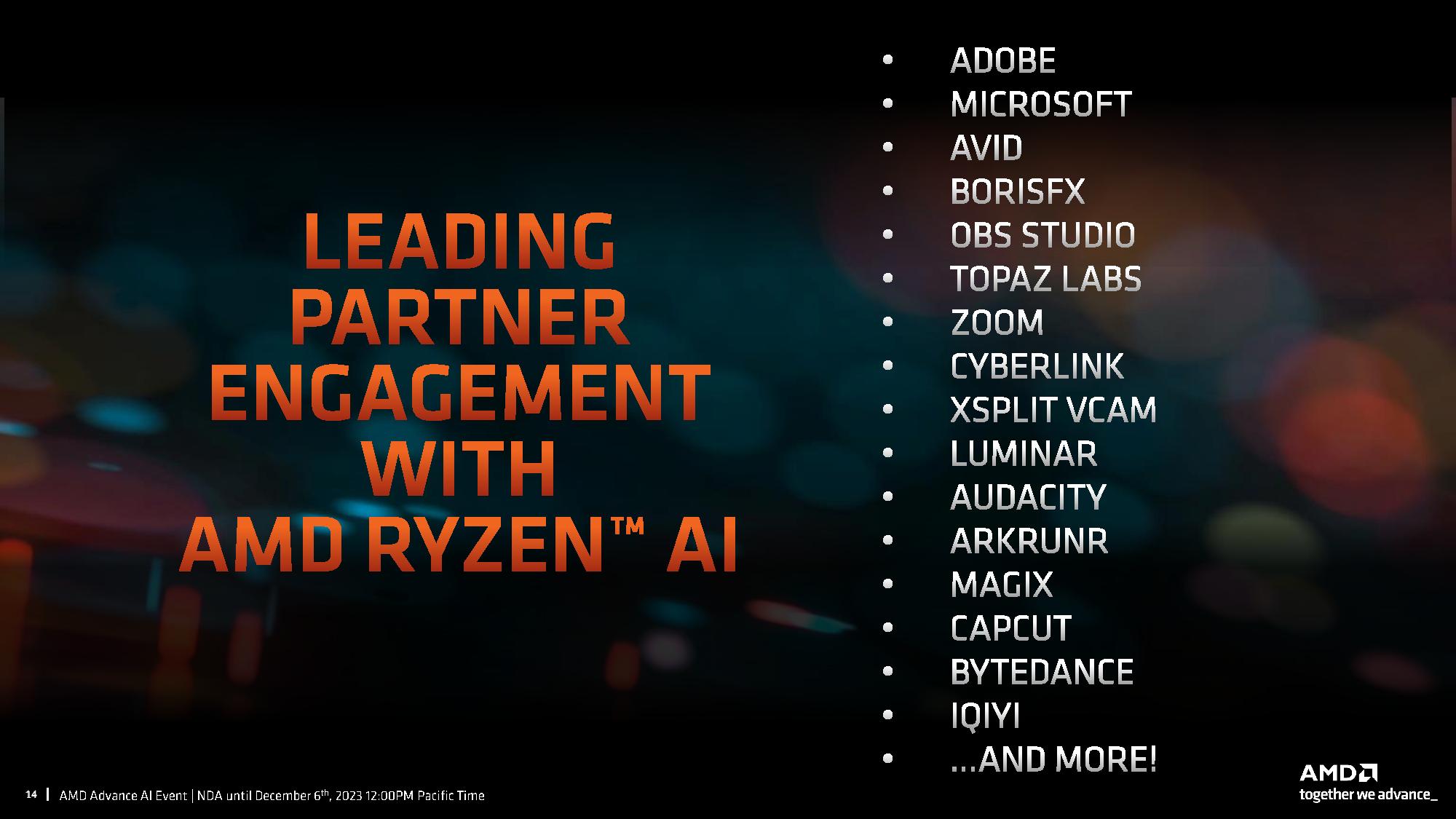

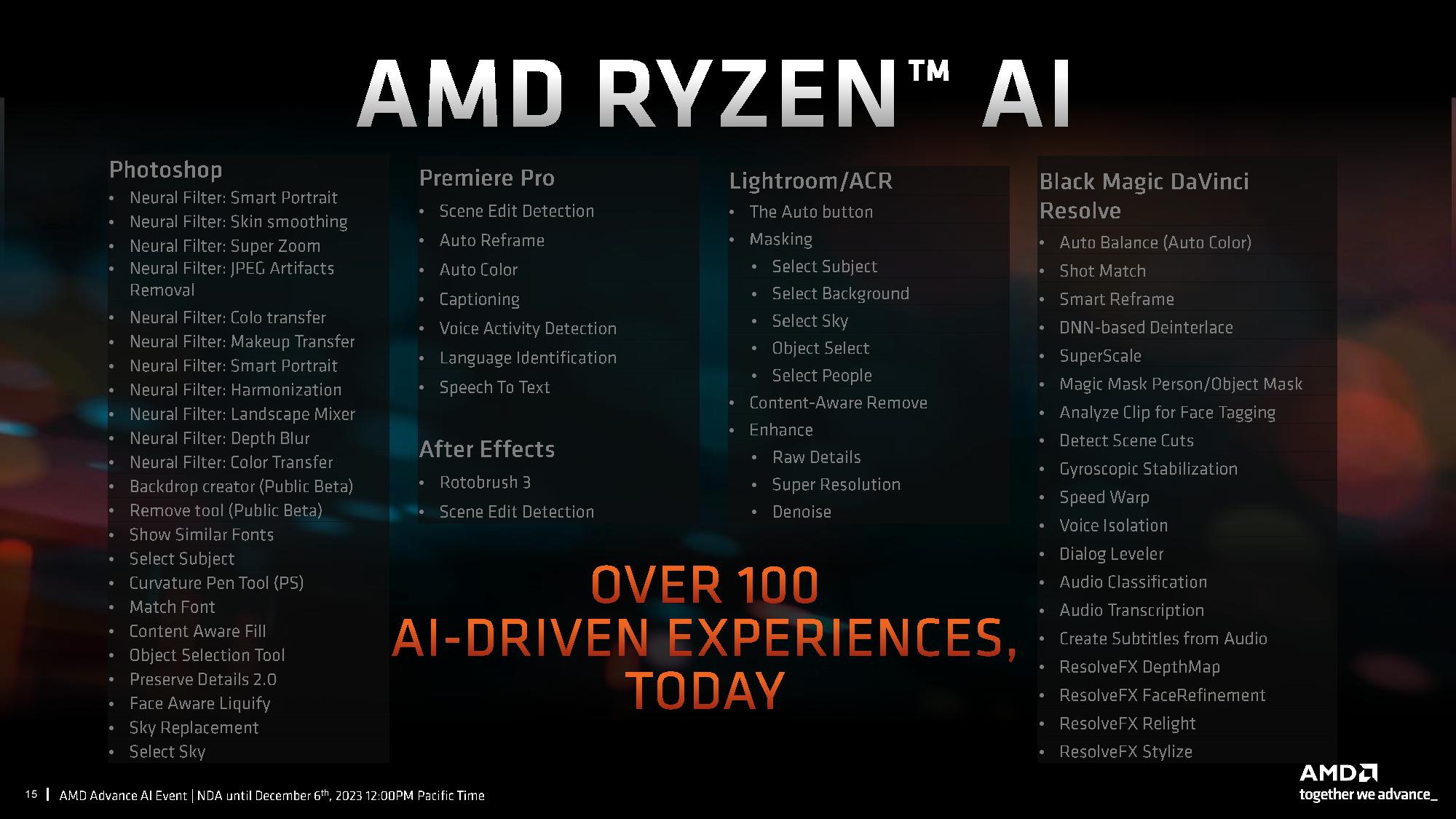

The next step is perhaps the most critical: enabling the development community to leverage the in-built AI acceleration. AMD already has a host of top-tier partners working to tap the NPU, like Adobe, Microsoft (there are rumors that Copilot will use the NPU in the future), and OBS Studio, among many others. Those efforts will eventually result in more applications that fully leverage the NPU, joining the more than 100 AI-driven applications already available to PC users.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


thestryker

So this is basically just a rebrand with some new SKUs available. Got to ride the AI wave and have a "new" release to compete with Intel 14th gen mobile, I suppose. Was really hoping we'd see something a little more substantial when AMD moved to the 8xxx branding.

Giroro

I still have absolutely no idea how local AI performance is supposed to help me, or anybody, when all the commercially available AI apps require expensive cloud-compute subscriptions.

Very, very few people have the time, motivation, and knowledge to build and train these models locally. So why waste the die space?

newtechldtech

> Giroro said: I still have absolutely no idea how local AI performance is supposed to help me, or anybody, when all the commercially available AI apps require expensive cloud-compute subscriptions.
>
> Very, very few people have the time, motivation, and knowledge to build and train these models locally. So why waste the die space?

Games!

bit_user

> Giroro said: I still have absolutely no idea how local AI performance is supposed to help me, or anybody, when all the commercially available AI apps require expensive cloud-compute subscriptions.

As their slides say, the NPU is intended to provide efficient inferencing. It's not a powerhouse on par with either the CPU cores or GPU.

Low-power AI is useful for things like video background removal (i.e. for video conferencing), background noise removal, presence detection, voice recognition, and AI-based quality enhancement of video content (i.e. upscaling of low-res content). I'd imagine we'll see new use cases emerge, as the compute resources to do inferencing become more ubiquitous.

The fact that this is a laptop processor is a key detail, here! Again, its value proposition is to make these AI inferencing features usable, even on battery power.

> Giroro said: Very, very few people have the time, motivation, and knowledge to build and train these models locally. So why waste the die space?

Indeed, they did start small. I've seen an annotated Phoenix die shot showing it's only something like 5% of the SoC's area, but I'm having trouble finding it.

To the extent you feel this way, don't overlook the fact that Intel also has a new NPU in Meteor Lake (which they call their "VPU"). Call it specsmanship, if you're cynical, but maybe both companies identified a real market need. Time will tell, based on how essential they become.

kealii123

> bit_user said: As their slides say, the NPU is intended to provide efficient inferencing. It's not a powerhouse on par with either the CPU cores or GPU.

Hit the nail on the head. People wonder why doing things like video chat, image sharing, computational photography, heck, even video editing in some circumstances is so much easier, smoother, and frankly a better experience on a 7-watt phone than on a laptop or desktop. It's because ARM chips have had neural engines running at the hardware level for years. It's why Apple Silicon chips can be so freaking fast at video encoding when their raw performance barely matches an RTX 3070 laptop.


But more to @Giroro's original point: development. I program every day with Copilot and GPT-4, but I can run the 13B-parameter Llama 2 locally on my 32GB MacBook Pro M1, and it's actually really good. The issue is that it takes up all of my RAM, and I can't use the computer for anything else. Network latency for auto-complete sucks. Building an AI-powered, customer-facing app is terrifying when you are 100% held hostage by OpenAI. There is no _consumer_ need for a locally running AI yet, but all the AI-powered products of tomorrow need it today.

bit_user

BTW, what of Phoenix 2, with its mix of Zen 4 and 4C cores? Any news about that (or similar)?

I had high hopes for the efficiency of Zen 4C running on 4nm. Any data on this would be nice to see.

usertests

> bit_user said: As their slides say, the NPU is intended to provide efficient inferencing. It's not a powerhouse on par with either the CPU cores or GPU.

Based on the "AI Roadmap" AMD provided, XDNA 2.0 in Strix Point at ~50 TOPS could easily be more performant than using the CPU+GPU. And I doubt they can get there with clock speeds alone. It needs a better design or more silicon. People will have to get used to x86 including the accelerator that hundreds of millions of mobile devices have.

ThomasKinsley

> Giroro said: I still have absolutely no idea how local AI performance is supposed to help me, or anybody, when all the commercially available AI apps require expensive cloud-compute subscriptions.
>
> Very, very few people have the time, motivation, and knowledge to build and train these models locally. So why waste the die space?

The only AI I care about are offline models that can be trained. ChatGPT's inadequacies have become apparent as it gives safe answers that agree with its parent company's beliefs. Other times it issues lectures to questions that are quite mundane. One-size models don't work well when the company fears being sued into oblivion for the AI's responses.

Personalizing the AI will let people use it to its fullest extent as a real assistant. That's what people really want. Trainable models are the best way to get there.

bit_user

> usertests said: Based on the "AI Roadmap" AMD provided, XDNA 2.0 in Strix Point at ~50 TOPS could easily be more performant than using the CPU+GPU. And I doubt they can get there with clock speeds alone. It needs a better design or more silicon.

Yes, I was only talking about the current 10-16 TOPS models.

As for those claims and whether Strix Point's NPU could change the balance, pay close attention to the numbers in this slide:

This tells us several things. First, the only compute-impacting change in Hawk Point will be their tweaks to the Ryzen AI block. Second, even at 30 TOPS (because they did say 3x of the 1st gen, presumably referring to Phoenix), it would merely draw on-par with the CPU + GPU cores, as those stand today - still a good improvement, but not totally game-changing.

Finally, if Strix Point indeed increases its NPU to only 30 TOPS, that seems achievable at probably just 2.5x the area of the original. You're not going to run a block 2x the size at the same clocks as Hawk Point's NPU, but they seem to have found some more headroom for better clocks, so I'm not expecting 3x the size and the same clocks as Phoenix's.

usertests

> bit_user said: Second, even at 30 TOPS (because they did say 3x of the 1st gen, presumably referring to Phoenix), it would merely draw on-par with the CPU + GPU cores, as those stand today - still a good improvement, but not totally game-changing.

MLID leaked in advance that Hawk Point would have 16 TOPS and Strix Point/Halo would have 45-50 TOPS. 16*3=48 aligns exactly. So if that's correct, XDNA 2.0 will be an impressive 5x uplift over Phoenix.

It's also consistent with Snapdragon X Elite having a 45 TOPS NPU. This seems to be the level of performance that Microsoft wants for "Windows 12".

Source: https://videocardz.com/newz/amd-ryzen-8000-9000-apu-roadmap-leaks-out-strix-point-to-launch-mid-2024-fire-range-and-strix-halo-in-2025

https://cdn.videocardz.com/1/2023/11/AMD-RYZEN-ZEN4-ZEN5-ROADMAP.jpg