Leak suggests that DDR6 development has already started, aiming for 21 GT/s

So early?

The transition to DDR5 started back in 2021, but the move from DDR4 to DDR5 is still in progress, and many new DDR4-based systems are still shipping every day. Yet according to a slide published by @DarkMontTech, the industry has already begun developing DDR6, the next generation of the mainstream DRAM standard, and it may arrive a bit earlier than we expect. That said, we have reasonable doubts about the slide, so take it with the appropriate dose of salt.

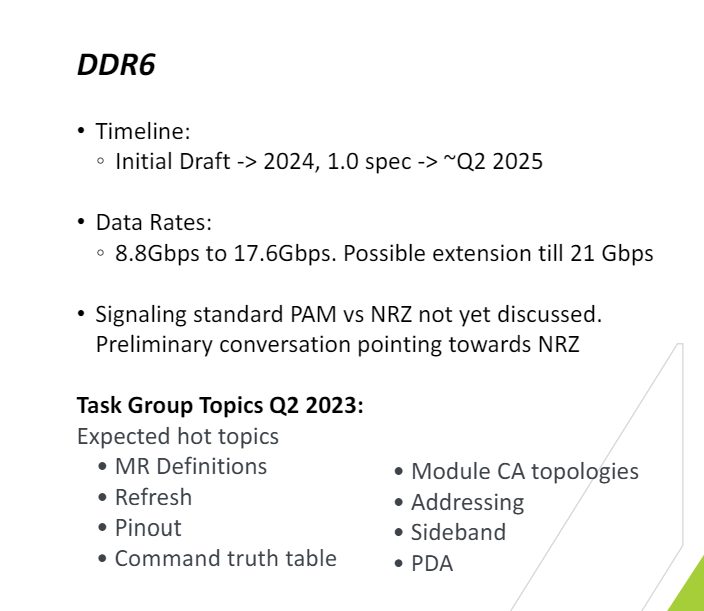

The slide, whose origin is unknown (though it resembles slides from one of the major high-tech companies), indicates that DDR6 data transfer rates will improve significantly over DDR5, starting at 8.8 GT/s and going up to 17.6 GT/s. There is also a possibility of extending these rates further, potentially reaching up to 21 GT/s.
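To put these figures in perspective, peak module bandwidth is simply the transfer rate multiplied by the data width. The sketch below assumes a 64-bit data path per module, as on a DDR5 DIMM (two 32-bit sub-channels); the slide does not describe DDR6's channel organization, so the width (`ASSUMED_DIMM_DATA_BITS`, a hypothetical name) is only a placeholder, and the results ignore refresh and protocol overhead.

```python
# Peak theoretical bandwidth of a memory module:
#   bandwidth (GB/s) = transfer rate (GT/s) * data width (bits) / 8
# ASSUMPTION: a 64-bit data path per module, as on a DDR5 DIMM (two 32-bit
# sub-channels). The leaked slide does not describe DDR6's organization.

ASSUMED_DIMM_DATA_BITS = 64  # hypothetical placeholder, not from the slide


def peak_bandwidth_gbs(rate_gts: float, width_bits: int = ASSUMED_DIMM_DATA_BITS) -> float:
    """Return peak bandwidth in GB/s, ignoring refresh and protocol overhead."""
    return rate_gts * width_bits / 8


if __name__ == "__main__":
    rates = {"DDR5-6400": 6.4, "DDR6 start": 8.8, "DDR6 top": 17.6, "DDR6 extended": 21.0}
    for label, rate in rates.items():
        print(f"{label:<14} {rate:>5} GT/s -> {peak_bandwidth_gbs(rate):6.1f} GB/s")
```

Under that assumption, DDR6 at 8.8, 17.6 and 21 GT/s would peak at 70.4, 140.8 and 168 GB/s per module, versus 51.2 GB/s for DDR5-6400.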

Unfortunately, the slide does not describe how these transfer rates are going to be achieved, as even the signalling scheme (PAM vs. NRZ) has not been decided yet. Preliminary conversations reportedly suggest a preference for NRZ, which seems like a stretch for data rates of 17.6 GT/s to 21 GT/s.
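Why NRZ looks like a stretch can be seen from the frequencies involved. NRZ carries one bit per symbol, while PAM4 carries two, so at the same per-pin data rate PAM4 halves the symbol rate and therefore the Nyquist frequency (half the symbol rate) the channel has to pass. The sketch below treats each GT/s as 1 Gb/s per pin and uses PAM4 purely as an illustrative multi-level option, since the slide only says "PAM"; the function name is hypothetical.

```python
# Rough comparison of NRZ and PAM4 signalling at DDR6-class per-pin data rates.
# Assumption: 1 GT/s == 1 Gb/s per pin; PAM4 is only an illustrative stand-in
# for "PAM", and any line-coding overhead is ignored.

BITS_PER_SYMBOL = {"NRZ": 1, "PAM4": 2}


def nyquist_ghz(data_rate_gbps: float, scheme: str) -> float:
    """Nyquist frequency in GHz = symbol rate (GBd) / 2."""
    symbol_rate_gbd = data_rate_gbps / BITS_PER_SYMBOL[scheme]
    return symbol_rate_gbd / 2


if __name__ == "__main__":
    for rate in (8.8, 17.6, 21.0):
        nrz = nyquist_ghz(rate, "NRZ")
        pam4 = nyquist_ghz(rate, "PAM4")
        print(f"{rate:>5} Gb/s/pin: NRZ Nyquist {nrz:.2f} GHz, PAM4 Nyquist {pam4:.2f} GHz")
```

At 21 Gb/s per pin, NRZ puts the Nyquist frequency at 10.5 GHz versus 5.25 GHz for PAM4. The trade-off is that PAM4 squeezes four voltage levels into the same swing, leaving smaller margins between them, which is one reason NRZ may still be preferred if the channel can cope.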

As of Q2 2023, one or more task groups were reportedly formed to focus on several key aspects of DDR6 development. These include defining mode register parameters, refresh mechanisms, and pinout configurations, as well as creating a command truth table. The task groups also explored module command/address topologies and addressing schemes (how command and address signals are routed and arranged within memory modules, which affects performance, signal integrity, and power consumption), which are vital for the physical and logical organization of memory modules. Other topics for discussion included sideband signalling and post-package repair (PPR). Sideband signalling deals with additional signal paths alongside the main data bus for improved communication, while PPR provides mechanisms to replace faulty rows after the device has been packaged, enhancing the durability and lifespan of memory modules. Each of these areas is critical for ensuring the reliability and efficiency of DDR6 memory.

It is unclear whether the task groups developing DDR6 have finalized their work. However, the slide outlines the DDR6 development timeline fairly clearly: the initial draft is expected to be ready in 2024, and the 1.0 specification is projected to be completed by approximately Q2 2025.

The slide does not disclose when its authors expect the transition to DDR6 to start, though the transition to DDR5 began in 2021, about a year after the final specification of that standard was published. Assuming the DDR6 spec is finalized in Q2 2025, the transition to this technology could start as early as the second half of 2026. Yet this may be a bit too early: in the coming years, both AMD and Intel plan to adopt rather sophisticated DDR5-based MRDIMM and MCRDIMM memory for their server platforms, so they will not need next-gen DRAM this early.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-


The slide, the origin of which is unknown (yet it resembles slides from one of the major high-tech companies), indicates that data transfer rates for DDR6 are set to significantly improve compared to DDR5 as it claims to start at 8.8 GT/s and going up to 17.6 GT/s.

The slide was taken from Synopsys.

-

Here are all the LPDDR6 memory slides as well. :)

Synopsys has laid out up to 14.4 Gbps as the highest defined data rate, with an introductory rate of 10.667 Gbps.

LPDDR6 will also use a 24-bit wide channel composed of two 12-bit sub-channels, offering an introductory bandwidth of up to 28 GB/s and up to 38.4 GB/s with the fastest 14.4 Gbps dies.
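The two bandwidth figures can be reproduced from the pin speed and the 24-bit channel width, but only if part of each burst is set aside for non-payload bits. The sketch below assumes each 288-bit burst per sub-channel (12 pins x 24 beats) carries 256 payload bits, with the remainder used for metadata/ECC. That ratio is an assumption chosen because it matches the quoted 28 GB/s and 38.4 GB/s; it is not stated in the material quoted here.

```python
# LPDDR6 per-channel bandwidth estimate.
# Channel: 24 data pins (two 12-bit sub-channels), as described above.
# ASSUMPTION: 256 of every 288 transferred bits are payload (12 pins x 24 beats
# per burst); chosen because it reproduces the quoted 28 GB/s and 38.4 GB/s.

CHANNEL_PINS = 24
PAYLOAD_FRACTION = 256 / 288  # assumed, not from the source


def lpddr6_bandwidth_gbs(pin_rate_gbps: float) -> tuple[float, float]:
    """Return (raw, payload) bandwidth in GB/s for one 24-bit channel."""
    raw = pin_rate_gbps * CHANNEL_PINS / 8
    return raw, raw * PAYLOAD_FRACTION


if __name__ == "__main__":
    for rate in (10.667, 14.4):
        raw, payload = lpddr6_bandwidth_gbs(rate)
        print(f"{rate} Gb/s/pin: raw {raw:.1f} GB/s, payload {payload:.1f} GB/s")
```

Under this assumption the payload bandwidth comes out at roughly 28.4 GB/s for 10.667 Gb/s dies and 38.4 GB/s for 14.4 Gb/s dies; without the overhead, the raw figures would be 32.0 and 43.2 GB/s.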

https://cdn.wccftech.com/wp-content/uploads/2024/05/LPDDR6-Memory-_1.jpeg

https://cdn.wccftech.com/wp-content/uploads/2024/05/LPDDR6-Memory-_2-1456x765.jpeg

https://cdn.wccftech.com/wp-content/uploads/2024/05/LPDDR6-Memory-_3-1456x770.jpeg

https://cdn.wccftech.com/wp-content/uploads/2024/05/LPDDR6-Memory-_4-1456x768.jpeg

https://cdn.wccftech.com/wp-content/uploads/2024/05/LPDDR6-Memory-_5-1456x765.jpeg

https://cdn.wccftech.com/wp-content/uploads/2024/05/LPDDR6-Memory-_6-1456x820.png

https://cdn.wccftech.com/wp-content/uploads/2024/05/LPDDR6-Memory-_7-1456x822.jpeg

https://cdn.wccftech.com/wp-content/uploads/2024/05/LPDDR6-Memory-_8-1456x819.jpeg

https://cdn.wccftech.com/wp-content/uploads/2024/05/LPDDR6-Memory-_9-1456x822.jpeg

https://cdn.wccftech.com/wp-content/uploads/2024/05/LPDDR6-Memory-_10-1456x815.jpeg

https://cdn.wccftech.com/wp-content/uploads/2024/05/LPDDR6-Memory-_12-1456x816.jpeg

https://cdn.wccftech.com/wp-content/uploads/2024/05/LPDDR6-Memory-_13-1456x816.jpeg

https://cdn.wccftech.com/wp-content/uploads/2024/05/LPDDR6-Memory-_14-1456x820.jpeg

https://cdn.wccftech.com/wp-content/uploads/2024/05/LPDDR6-Memory-_15-1456x815.png

https://cdn.wccftech.com/wp-content/uploads/2024/05/LPDDR6-Memory-_16-1456x816.jpeg

-

TechyIT223 We need more details on next-gen GDDR7 GPU memory specs. Rumors point to 28 Gbps as the minimum starting speed.

But it's uncertain whether such high speeds will be used by Nvidia, let alone AMD.

-

Yes. The first iteration of next-gen GDDR7 GPUs will very likely use the 28 Gbps dies, with only a few high-end flagship variants or their refreshes getting 32 Gbps pin speeds.

But I think only Nvidia would go for this, since AMD might stick with GDDR6 memory for its mainstream Navi 48 and 44 GPUs (speculation).

Still, this would be a huge improvement over GDDR6 which peaks out at 24 Gbps, and we have only seen up to 22.5 Gbps speeds on certain GPUs such as the RTX 4080 SUPER.

However, the GDDR7 standard allows memory bandwidths of up to 192 GB/s per chip while keeping a 32-bit data width per chip, so GDDR7 could eventually run at up to 48 Gb/s per pin, often quoted as an "effective" clock speed of 48 GHz.
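The per-pin figure follows directly from the per-chip bandwidth, the 32-bit interface, and 8 bits per byte. Note that "48 GHz" is shorthand for the per-pin data rate rather than an actual clock frequency.

```latex
\text{per-pin rate} = \frac{192\,\text{GB/s} \times 8\,\text{bit/byte}}{32\,\text{pins}} = \frac{1536\,\text{Gb/s}}{32} = 48\,\text{Gb/s per pin}
```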

So here is a 'pipe dream' based on this future "48 GHz" effective speed, since it is unlikely to happen on consumer gaming GPUs, at least initially. :sleep:

A graphics card with a narrow 64-bit bus, typical of low-end GPUs (think RX 6500 XT), could achieve 384 GB/s of bandwidth.

A mainstream GPU with 128-bit memory would already have a bandwidth of 768 GB/s.

And some high-performance GPUs with 256-bit and 384-bit buses could sport bandwidths of 1536 GB/s and 2304 GB/s, respectively!

-
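The bus-width figures above all come from one formula: bandwidth (GB/s) = per-pin rate (Gb/s) x bus width (bits) / 8. The sketch below reproduces them for the hypothetical 48 Gb/s case and, for comparison, for the 28 Gb/s dies rumored for the first GDDR7 cards. `gpu_bandwidth_gbs` is an illustrative helper, and the results are theoretical peaks.

```python
# Theoretical peak GPU memory bandwidth from pin speed and bus width.
# 48 Gb/s is the GDDR7 "pipe dream" ceiling; 28 Gb/s is the rumored launch speed.


def gpu_bandwidth_gbs(pin_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s = Gb/s per pin * number of pins / 8 bits per byte."""
    return pin_rate_gbps * bus_width_bits / 8


if __name__ == "__main__":
    for bus in (64, 128, 256, 384):
        fast = gpu_bandwidth_gbs(48, bus)
        launch = gpu_bandwidth_gbs(28, bus)
        print(f"{bus:>3}-bit bus: {fast:>6.0f} GB/s at 48 Gb/s, {launch:>6.0f} GB/s at 28 Gb/s")
```

At 28 Gb/s, the same 64-, 128-, 256- and 384-bit buses would deliver 224, 448, 896 and 1344 GB/s.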

TechyIT223 What really worries me is the pricing of next-gen GDDR7 GPUs.

I would skip the whole next generation unless AMD has something better than Nvidia.

In fact, there's no way I'd pay over $1000 for a graphics card, so that basically rules out the 5000 series at this point anyway. 🙁

If my current Nvidia 3080 GPU fails before Radeon 8000 launches, I'll probably pick up a cheap 6900 XT, because frankly, after 2 years of having "good" ray tracing, I haven't found it that memorable.

-

I won't be upgrading to any discrete GPU anytime soon. I would go directly for an iGPU setup like the upcoming Strix Halo APU lineup, Intel's next-gen Panther Lake series, or whichever lineup offers higher iGPU performance.

Given how powerful integrated graphics have become in recent years, and the type of games I usually play, an APU with a high CU/Xe-core count should be more than enough for my casual gaming needs.

Currently rocking an RTX 4060 8GB GPU, so this will keep me busy for the next few years or so, unless of course it dies (*touches wood*). :cautious:

Sorry, this is kind of OFF TOPIC:

But the current AMD Radeon 780M iGPU can already deliver over 60 FPS at 1080p in games such as Cyberpunk 2077. This is a huge leap for integrated graphics as they are getting close to the point where they can replace entry-level discrete solutions.

There's a reason why NVIDIA isn't expected to invest any more in its MX line of GPUs since both AMD & Intel are prepping some powerful integrated chips for future laptop designs.

Vanilla Skyrim (1080P / High) - 120 FPS Average

Crysis Remastered (1080P / High) - 71 FPS Average

World of Warcraft (1080P / Max) - 98 FPS Average

Genshin Impact (1080P / High) - 60 FPS Average

Spiderman Miles Morales (1080P / Low) - 74 FPS Average

Dirt 5 (1080P / Low) - 71 FPS Average

Ghostwire Tokyo (1080P / Low) - 58 FPS Average

Borderlands 3 (1080P / Med) - 73 FPS Average

God of War (1080P / Original / FSR Balanced) - 68 FPS Average

God of War (1080P / Original / Native Res) - 58 FPS Average

Mortal Kombat 11 (1080P / High) - 60 FPS Average

Red Dead Redemption 2 (1080P / Low / FSR Performance) - 71 FPS Average

The previous gaming benchmark results from ETA Prime's last video:

CSGO (1080P / High) - 138 FPS (Average)

GTA V (1080P / Very High) - 81 FPS (Average)

Forza Horizon 5 (1080P / High) - 86 FPS (Average)

Fortnite (1080P / Medium) - 78 FPS (Average)

Doom Eternal (1080P / Medium) - 83 FPS (Average)

Horizon Zero Dawn (1080P / Perf) - 69 FPS (Average)

COD: MW2 (1080P / Recommended / FSR 1) - 106 FPS (Average)

Cyberpunk 2077 (1080P / Med+Low) - 65 FPS (Average)

View: https://www.youtube.com/watch?v=KKaoxe5dd3M

-

TechyIT223

Well, I can't blame you for going the iGPU route, and it actually makes sense if you are into casual gaming.

Metal Messiah said: I won't be upgrading to any discrete GPU anytime soon. Would directly go for an iGPU setup like the upcoming Strix HALO APU lineup, or Intel's next-gen Panther Lake series, or whichever lineup offers higher igpu performance.


Pretty impressive performance numbers for the 780M iGPU, though.

A next-gen iGPU would surely be much better: more efficient and faster. Imagine a 40 CU iGPU 😜