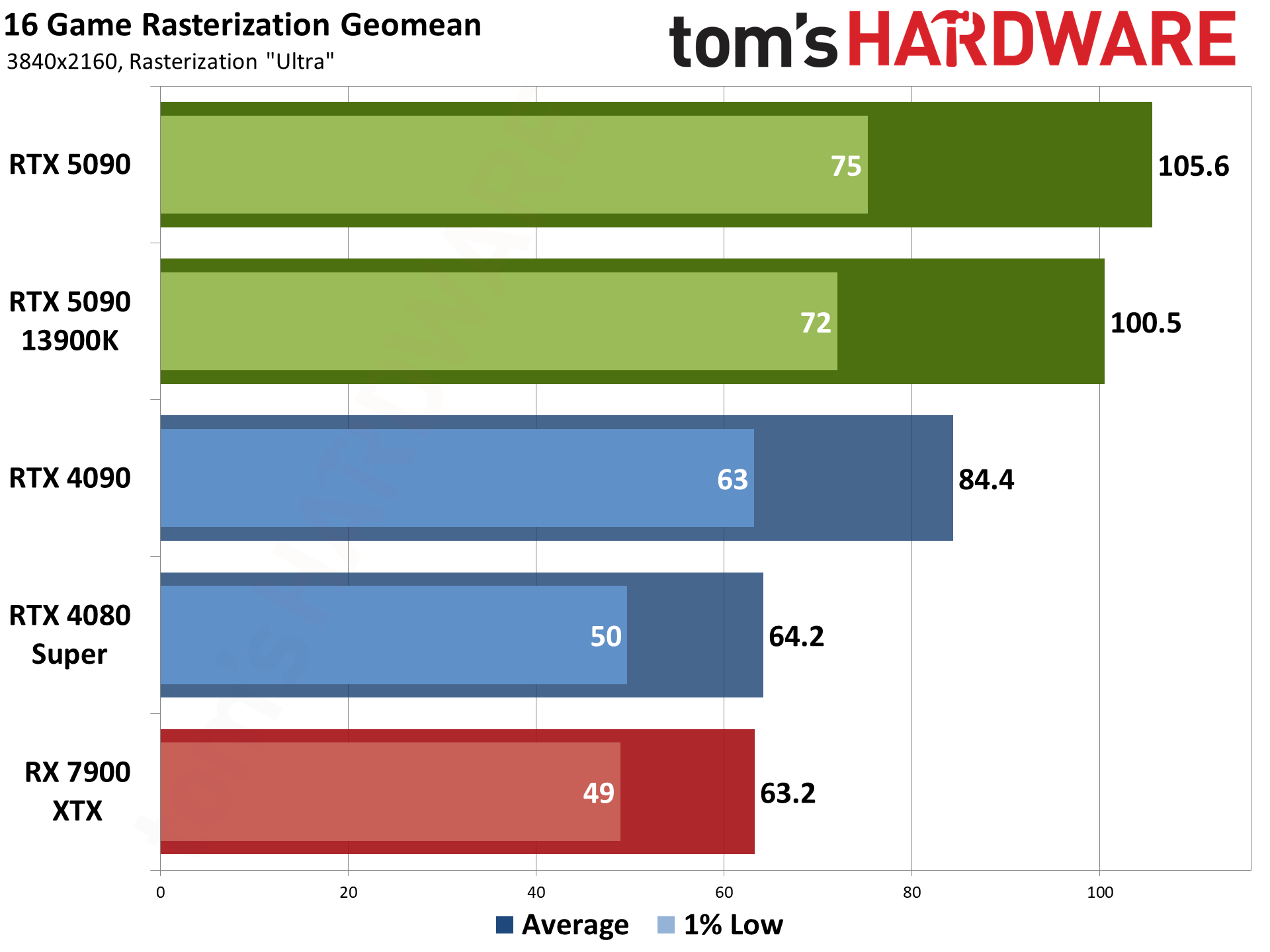

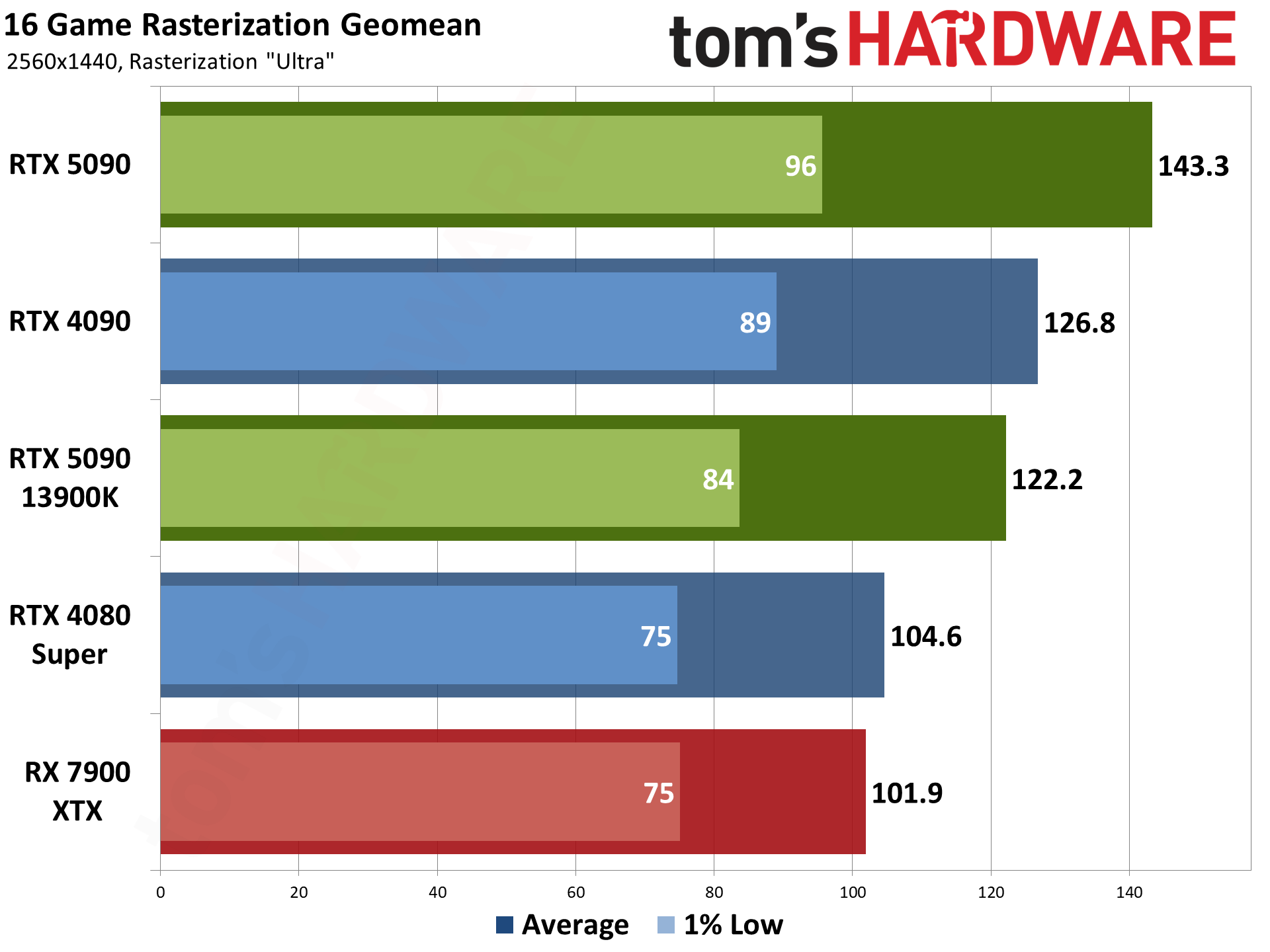

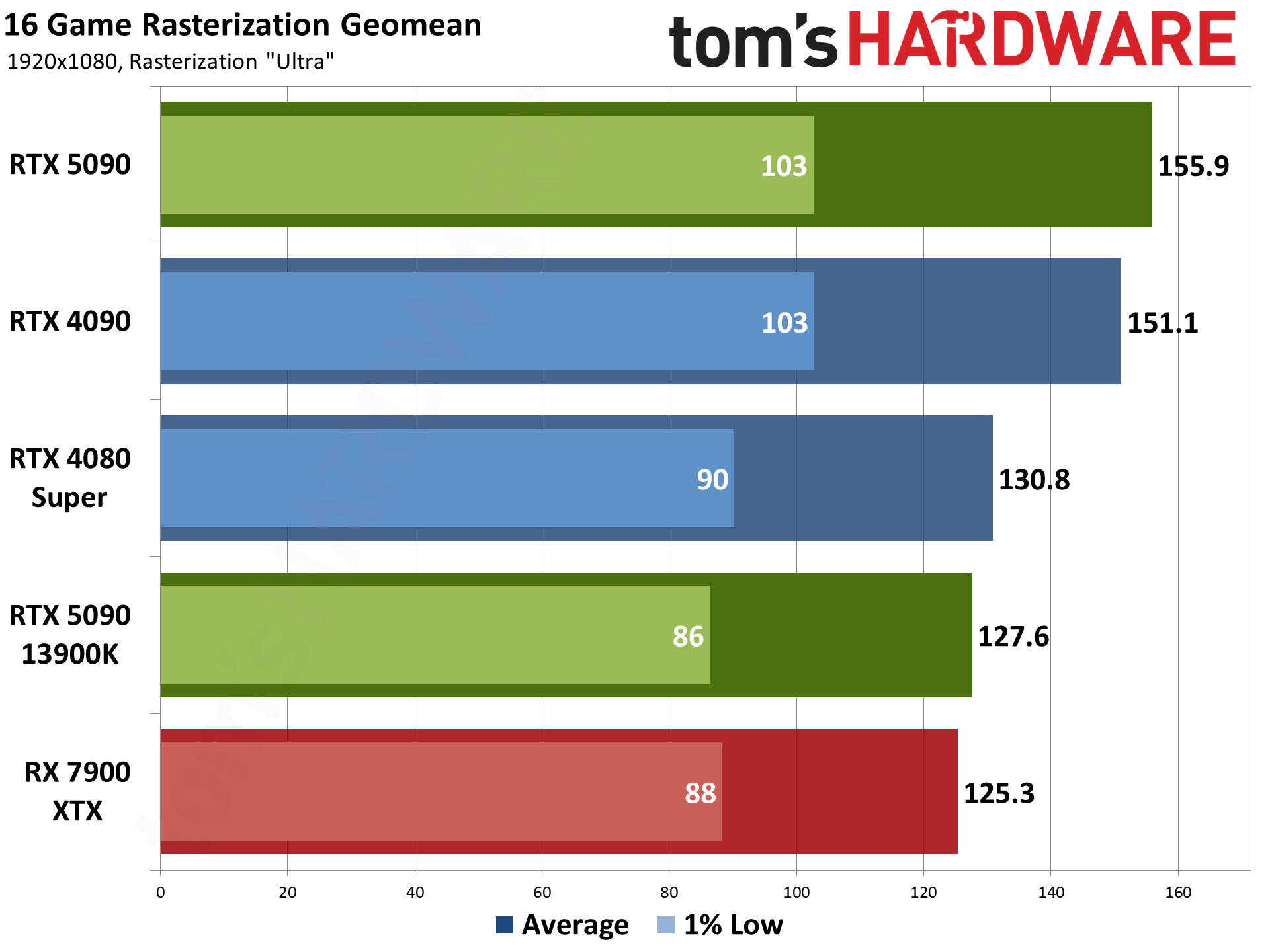

We're breaking down gaming performance into two categories: traditional rasterization games and ray tracing games. Each game has four test settings, though for the RTX 5090 we're mostly interested in the 4K ultra results, less so in 1440p ultra, and 1080p ultra/medium are mostly just for reference (and to check for any odd behavior). We also have the overall performance geomean, the rasterization geomean, and the ray tracing geomean.
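For readers unfamiliar with the term, a geomean (geometric mean) summarizes a set of per-game framerates so that no single very high fps title dominates the average. The snippet below is a minimal sketch of how such a figure is typically computed. The exact calculation behind the charts isn't spelled out here, so treat it as an assumption, and note that the game names and fps values are hypothetical, not measured results.

```python
import math


def geomean(values):
    """Geometric mean of positive numbers, computed via logs for stability."""
    values = list(values)
    if not values:
        raise ValueError("need at least one value")
    if any(v <= 0 for v in values):
        raise ValueError("geometric mean requires positive values")
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical average fps for one GPU in three games (illustrative only).
fps = {"Game A": 60.0, "Game B": 120.0, "Game C": 240.0}

overall = geomean(fps.values())
print(f"geomean: {overall:.1f} fps")
```

Compared with a plain arithmetic average, the geomean treats a doubling of performance in any one game the same way, regardless of whether that game runs at 40 fps or 400 fps.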

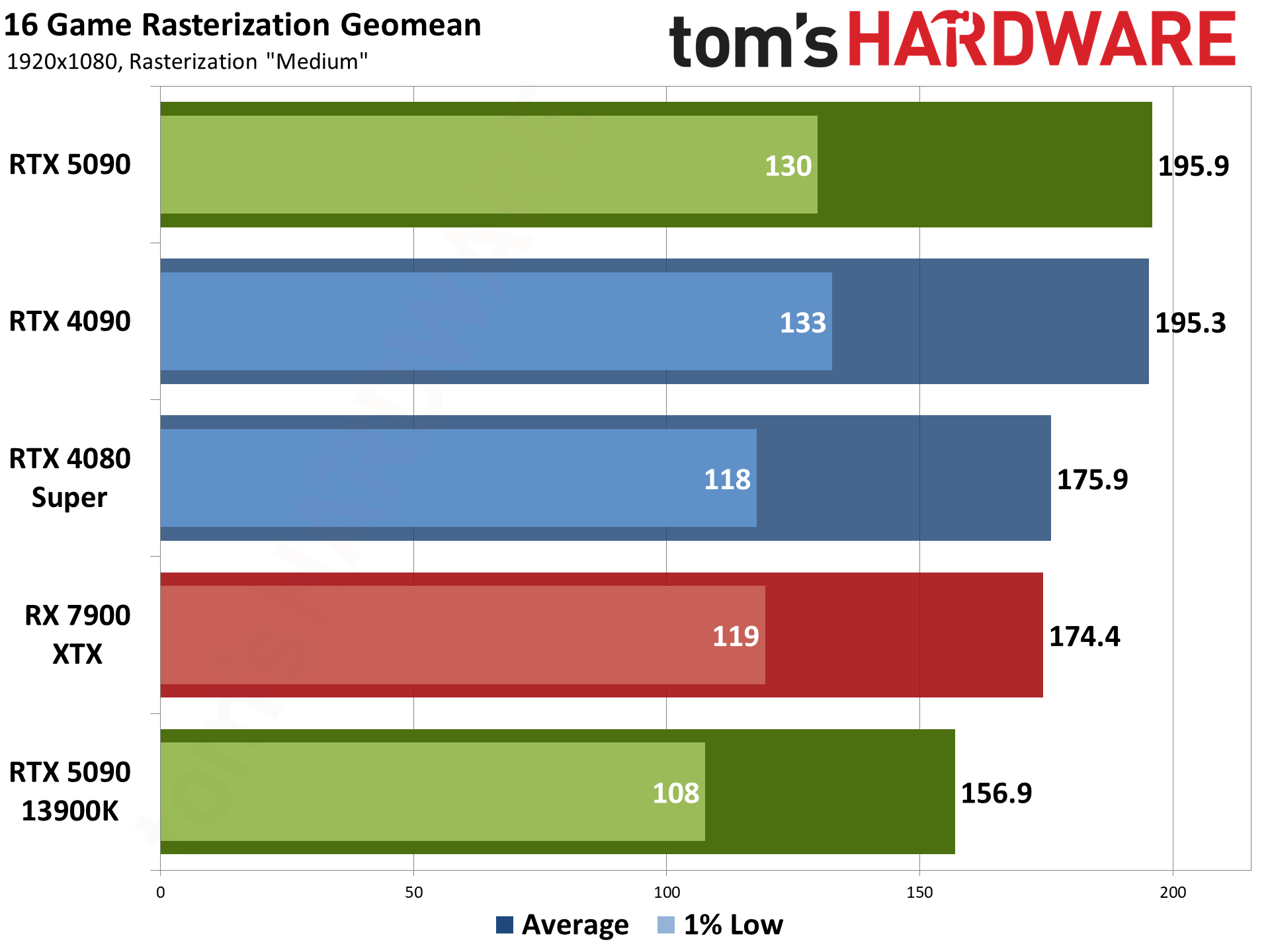

We'll start with the rasterization suite of 16 games, as that's arguably still the most useful measurement of gaming performance. Granted, an extreme GPU like the 5090 can be used with full RT enabled even at 4K, but that's something we're still working to test (on multiple GPUs so that we can actually compare the 5090 with something else).

We're providing the initial launch RTX 5090 charts now with limited commentary, letting the numbers mostly speak for themselves. We'll be retesting (and retesting some more) in the coming months, and we expect to see quite a few improvements over time, as things are definitely a bit raw and undercooked in a subset of games right now.

Our overall rasterization results help provide our baseline impressions of any new GPU. Sure, the 5090 has even better ray tracing, AI, and frame generation technology compared to the previous generation, but rasterization is used in virtually every game, while RT and DLSS 3/4 are only in a subset of games. Of course, any games that don't use RT will probably run as fast as your CPU can go for now.

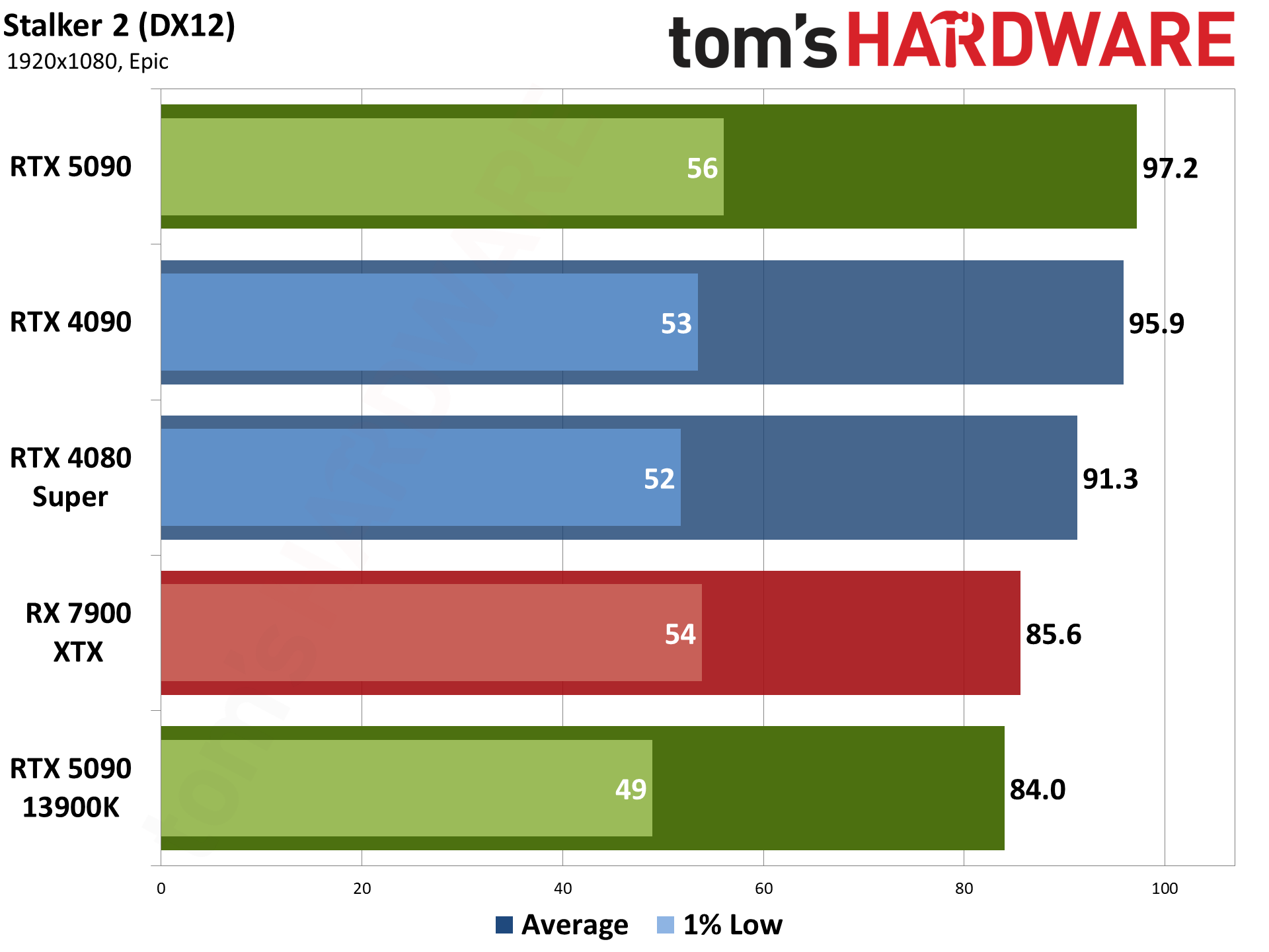

We tested the RTX 5090 Founders Edition on our Ryzen 7 9800X3D and Core i9-13900K PCs. The results are at times interesting and/or strange. The 13900K often wins at 4K ultra, but there are also games where that CPU performs very poorly. This is part of the difficulty in reviewing the 5090 at launch. The 9800X3D also has a few oddities at times, and we can only assume that the combination of test platforms and drivers needs a bit more work. That will happen in the coming days and weeks.

As it stands, right now, the RTX 5090 ends up 25% faster than the RTX 4090 across our rasterization suite at 4K ultra. In the individual games, shown below, it ranges from 6% faster to 43% faster. But drop the resolution and things get a bit wonky. It's 13% faster at 1440p ultra, with a range of -3% to +36%. For 1080p ultra, it's 3% faster overall with a range of -17% to +25% — obviously there are some problem games if the 5090 is more than a few percent slower than the 4090. And finally, at 1080p medium it's tied in overall performance with a range of -20% to +17%, again clearly problematic.
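The "X% faster" figures and the ranges quoted above are relative comparisons of framerates. As a rough sketch of that arithmetic (assuming a simple ratio of average fps, which is our reading rather than a documented formula), the following uses made-up fps pairs, not data from the charts:

```python
def uplift_percent(new_fps, old_fps):
    """Percent by which new_fps exceeds old_fps (negative means slower)."""
    if old_fps <= 0:
        raise ValueError("old_fps must be positive")
    return (new_fps / old_fps - 1.0) * 100.0


# Hypothetical (new GPU fps, old GPU fps) pairs; illustrative values only.
results = {
    "Game A": (110.0, 100.0),
    "Game B": (143.0, 100.0),
    "Game C": (97.0, 100.0),
}

uplifts = {game: uplift_percent(new, old) for game, (new, old) in results.items()}
low, high = min(uplifts.values()), max(uplifts.values())

for game, pct in uplifts.items():
    print(f"{game}: {pct:+.0f}%")
print(f"range: {low:+.0f}% to {high:+.0f}%")
```

A negative value in the range means the newer card was slower than the older one in at least one game, which is the behavior flagged as problematic at 1080p.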

For computer algorithms, one of the difficulties in scaling often ends up being the task of splitting up and recombining chunks of data. We called this "divide and conquer" in my CS classes. With the 5090, if we're looking at just the SM level, it has 170 units to fill up compared to 128 units on the 4090. That means in some situations (e.g. CPU limited scenarios), it takes more effort to distribute all of that work across the additional SMs than the benefit of having more SMs provides.
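To put the SM counts in perspective, here's a back-of-the-envelope sketch comparing the increase in SMs with the 25% rasterization uplift at 4K ultra quoted earlier. It deliberately ignores clock speeds, memory bandwidth, and architectural changes, so the "scaling efficiency" it prints is only a rough illustration under that assumption, not a measured property of the hardware.

```python
# SM counts and the 4K ultra rasterization uplift are taken from the text.
SM_5090 = 170
SM_4090 = 128
MEASURED_UPLIFT_4K = 0.25  # 5090 vs. 4090, rasterization geomean at 4K ultra

# Fractional increase in SM count going from the 4090 to the 5090.
sm_increase = SM_5090 / SM_4090 - 1.0

# Share of the extra SMs that shows up as extra performance, assuming
# (simplistically) that performance would otherwise scale with SM count.
scaling_efficiency = MEASURED_UPLIFT_4K / sm_increase

print(f"SM increase: {sm_increase:.1%}")
print(f"Measured 4K uplift: {MEASURED_UPLIFT_4K:.0%}")
print(f"Rough scaling efficiency: {scaling_efficiency:.0%}")
```

Under these assumptions, roughly a third more SMs yields about a quarter more performance at 4K, and the gap widens at lower resolutions where the CPU becomes the limit.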

We've seen this in the past with the 40-series GPUs, where in some cases a "slower" part can beat a "faster" part at 1080p. But it's usually at most around a 2~3 percent difference. When we're getting up to 20% lower performance from the 5090 compared to the 4090 in certain tests, that indicates a lack of optimizations and driver maturity. It makes sense to see this on a brand-new architecture, and we expect the negative performance deltas will diminish and potentially even reverse over time.

Given the $2,000 price tag, 4K ultra is clearly the target for the RTX 5090 — or maybe even higher resolutions if you have an appropriate display. We've put the 4K charts first, then 1440p, and 1080p will be at the end. Below are the individual rasterization results, in alphabetical order with limited commentary.

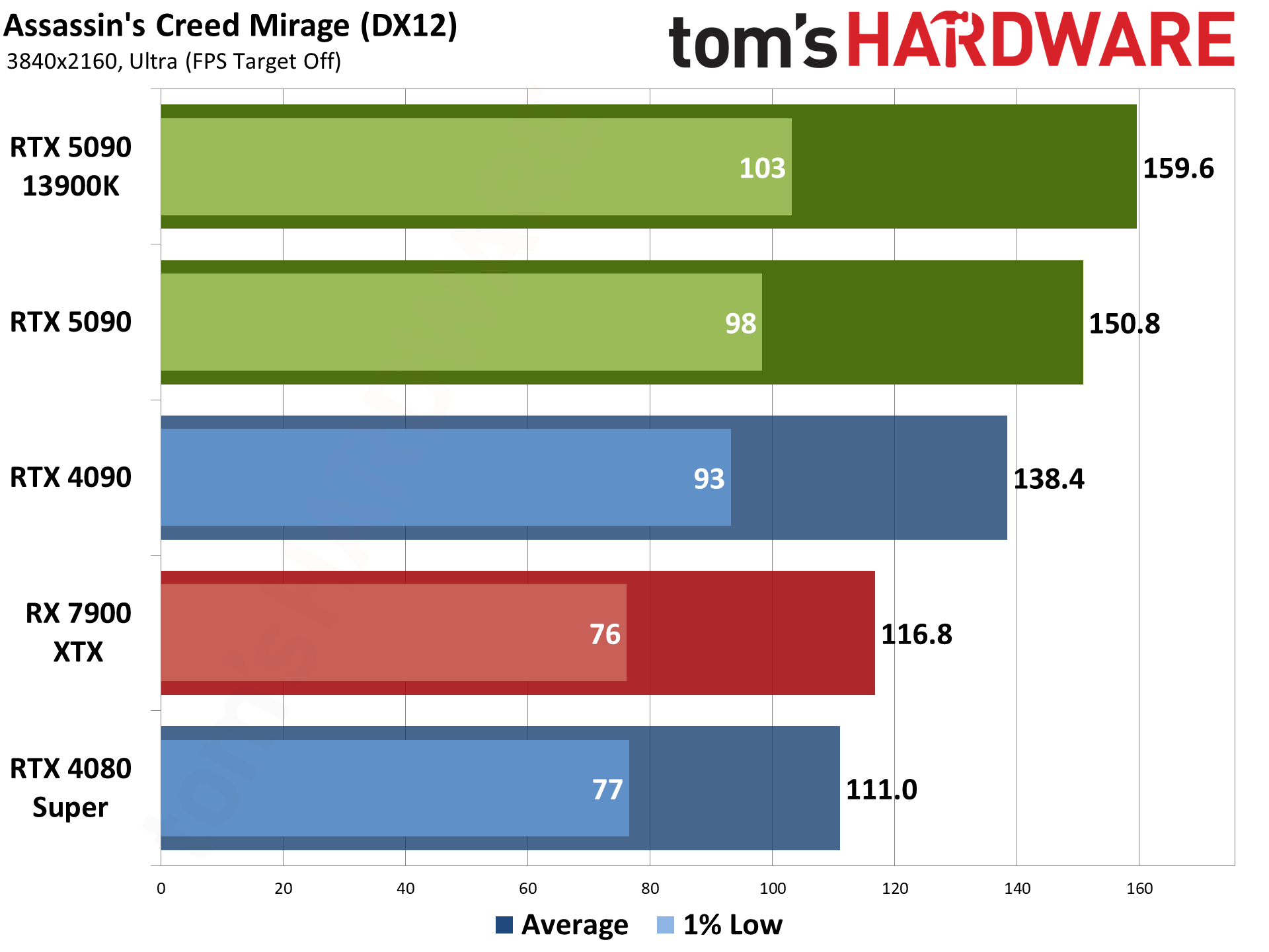

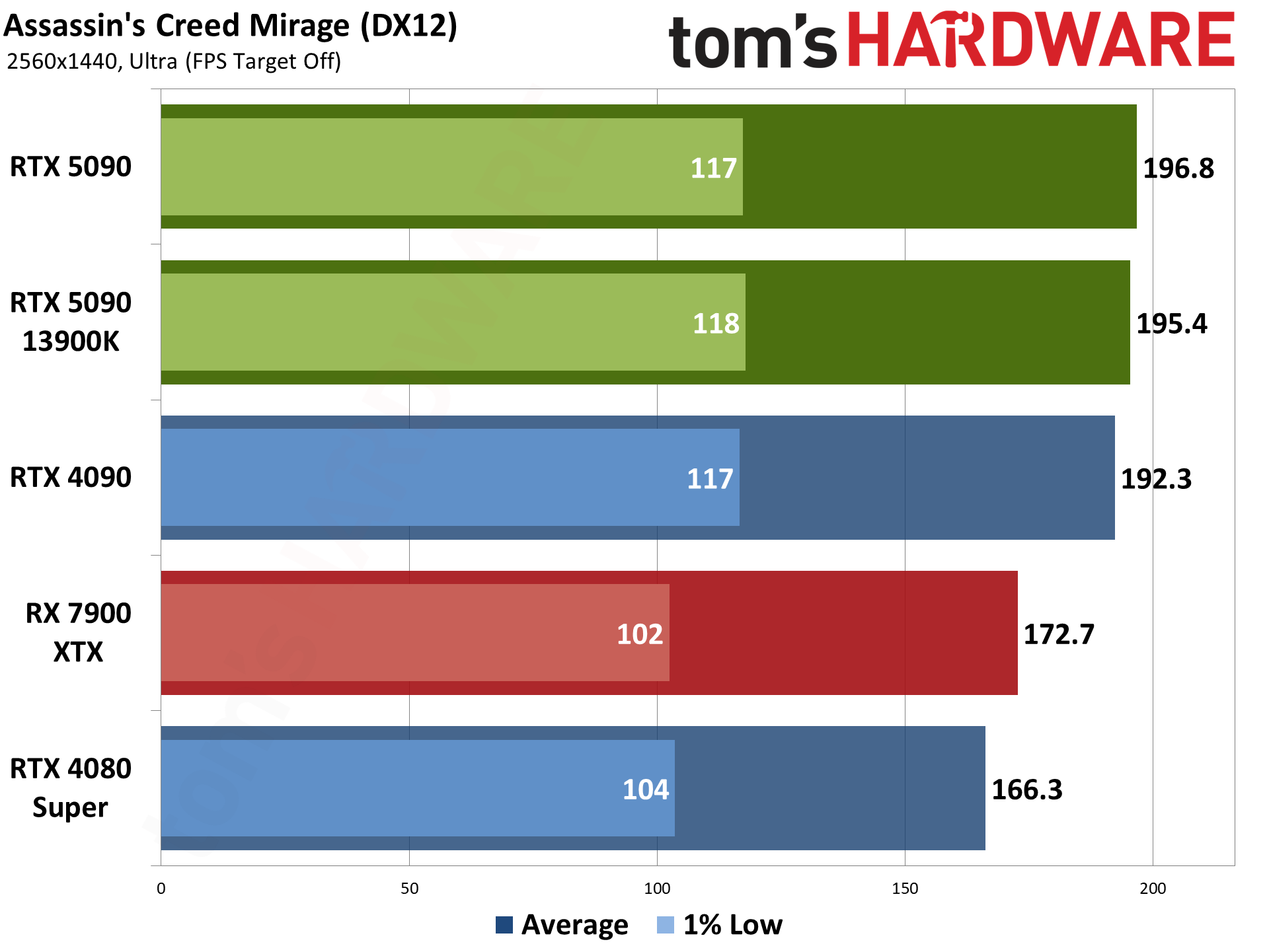

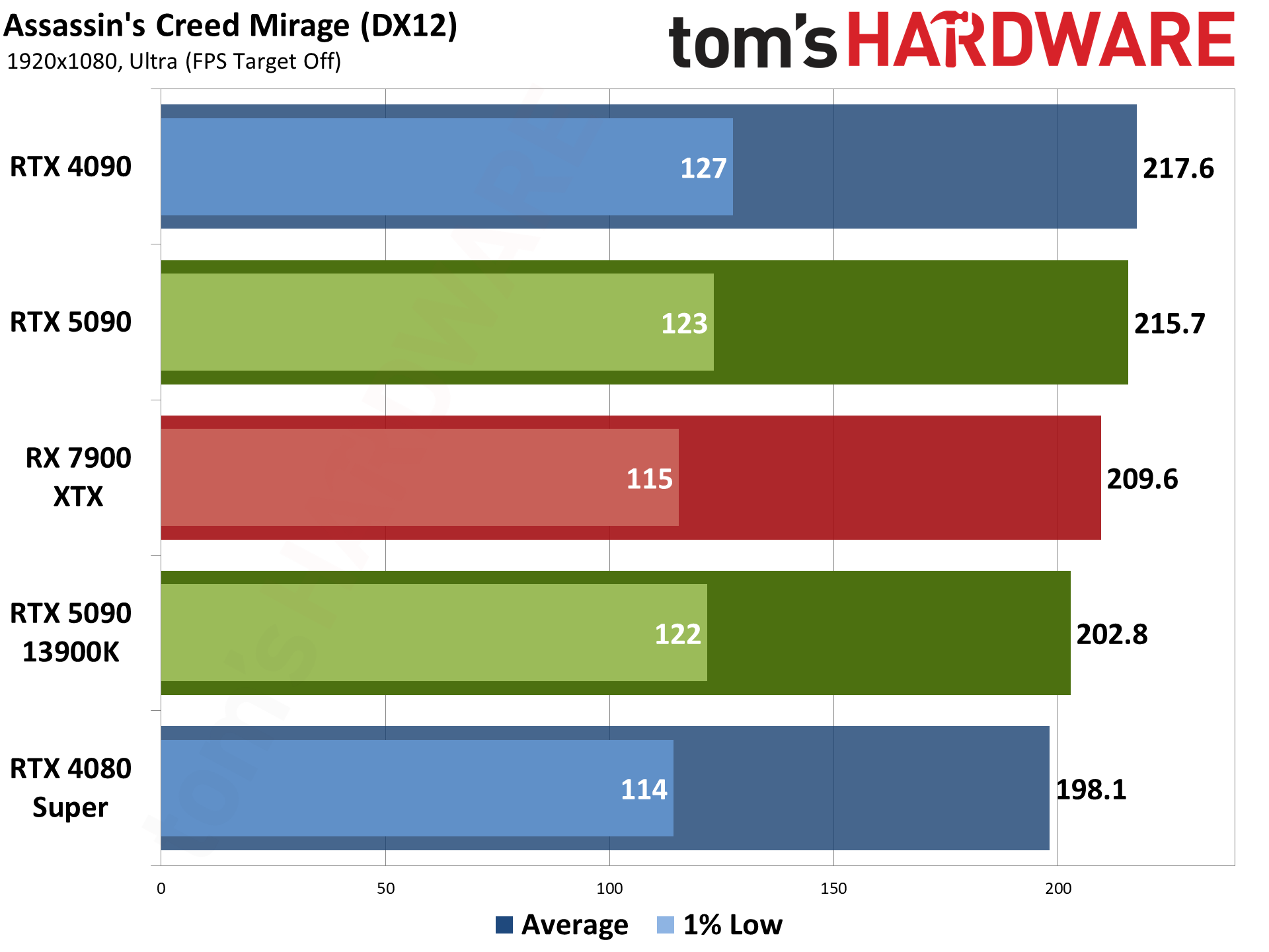

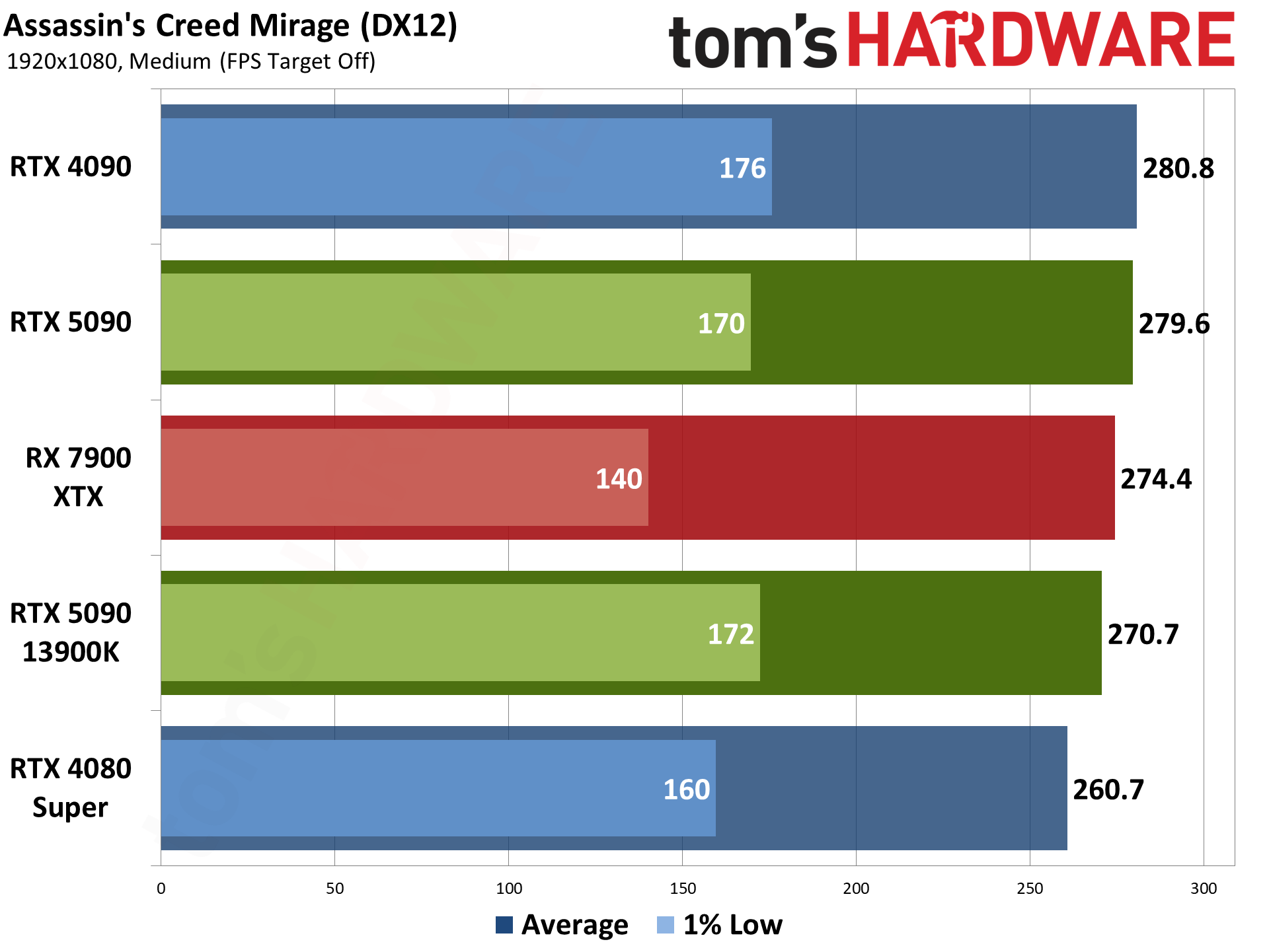

Assassin's Creed Mirage uses the Ubisoft Anvil engine and DirectX 12. It's an AMD-promoted game as well, though these days that doesn't necessarily mean it always runs better on AMD GPUs. It could be CPU optimizations for Ryzen, or more often it just means a game has FSR2 or FSR3 support — FSR2 in this case. It also supports DLSS and XeSS upscaling.

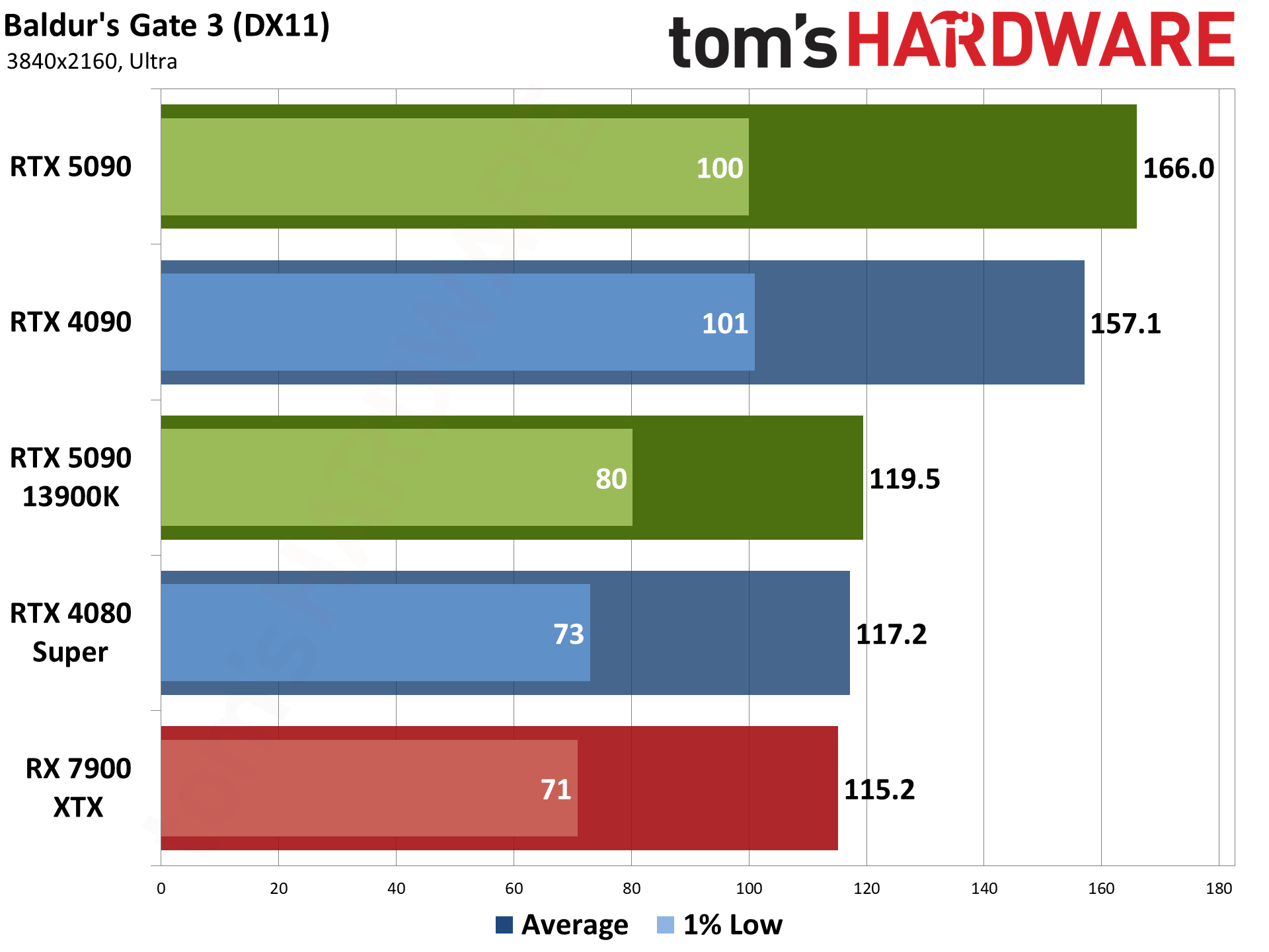

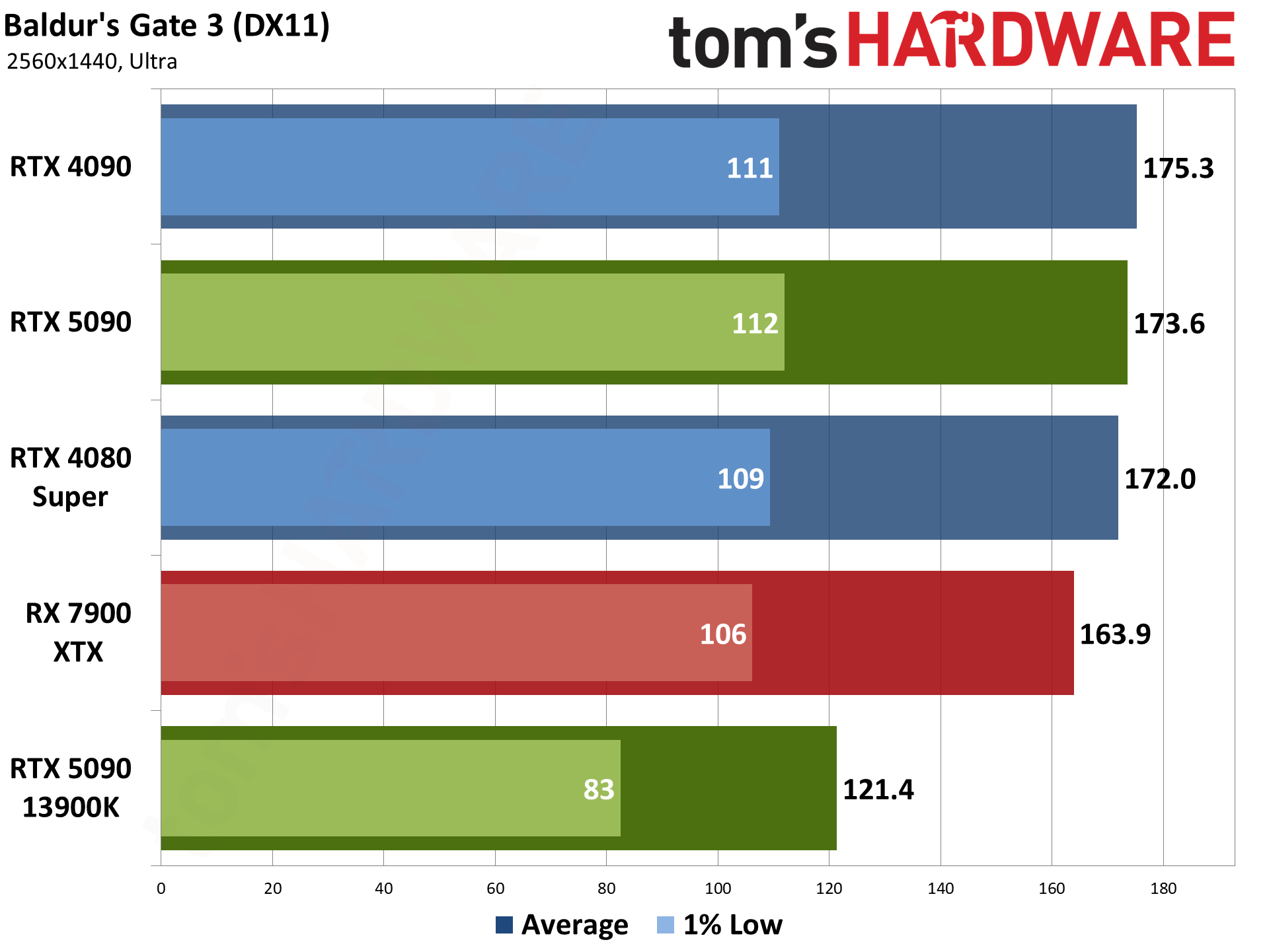

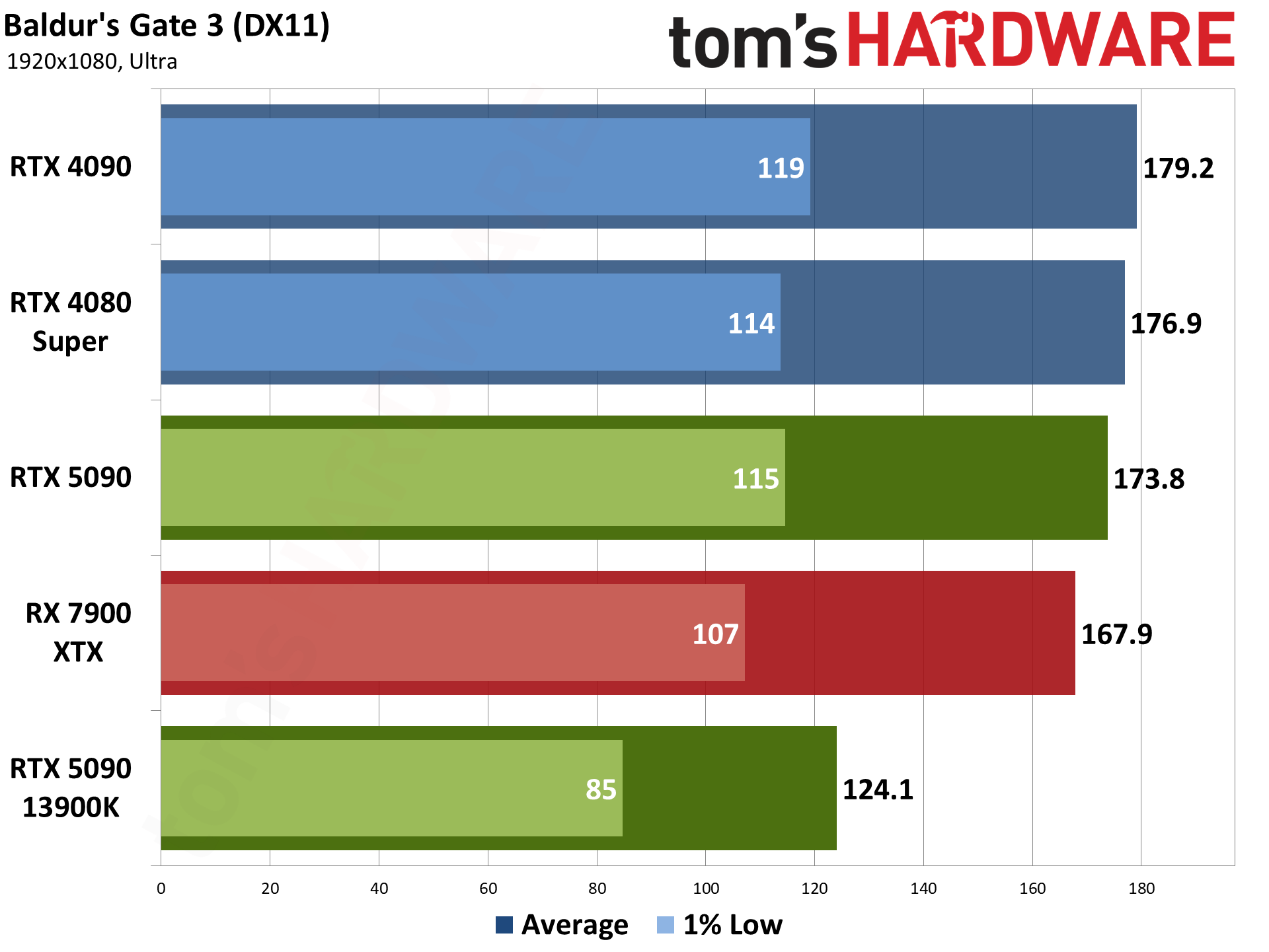

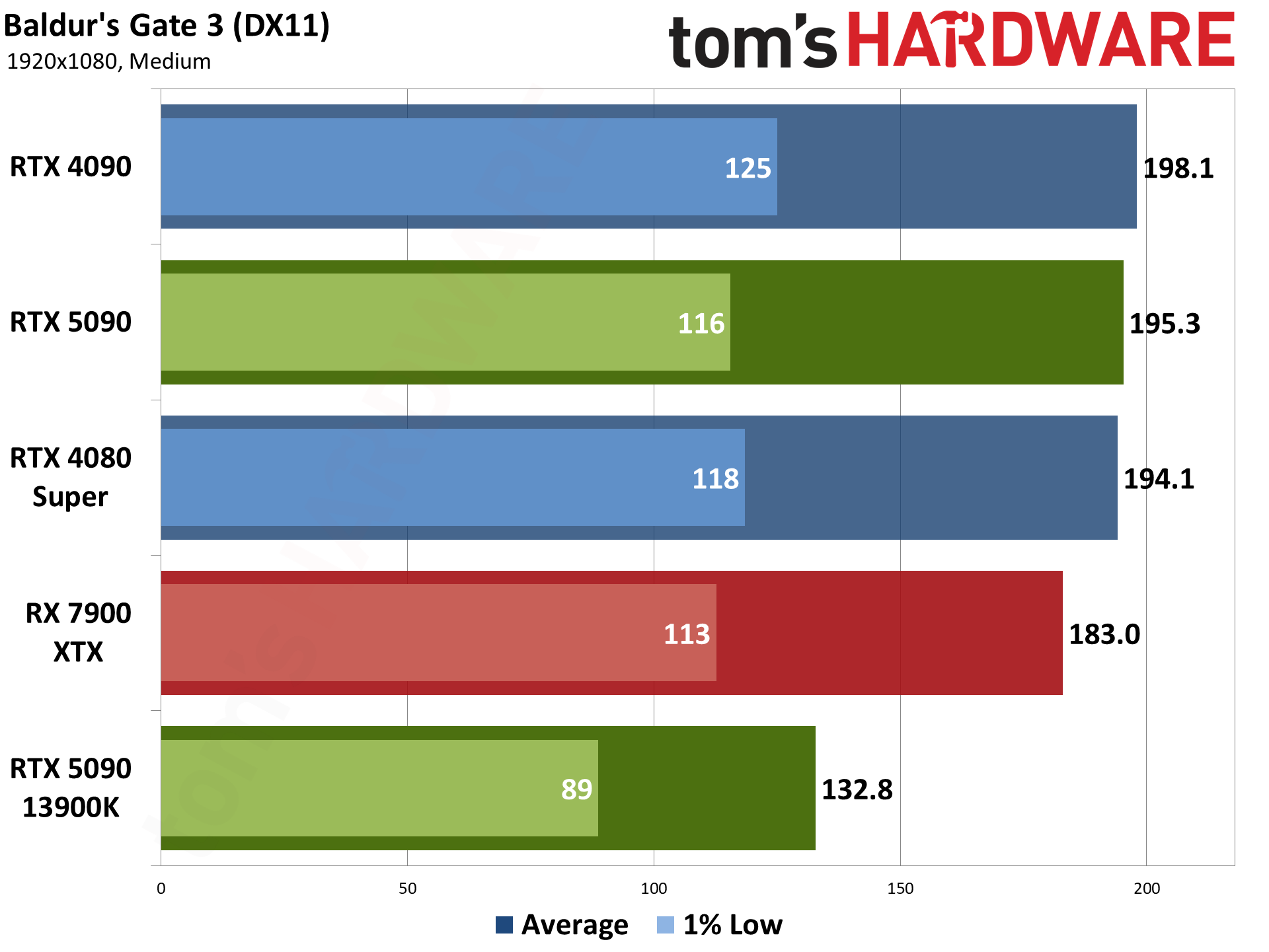

Baldur's Gate 3 is our sole DirectX 11 holdout — it also supports Vulkan, but that performed worse on the GPUs we checked, so we opted to stick with DX11. Built on Larian Studios' Divinity Engine, it's a top-down perspective game, which is a nice change of pace from the many first person games in our test suite.

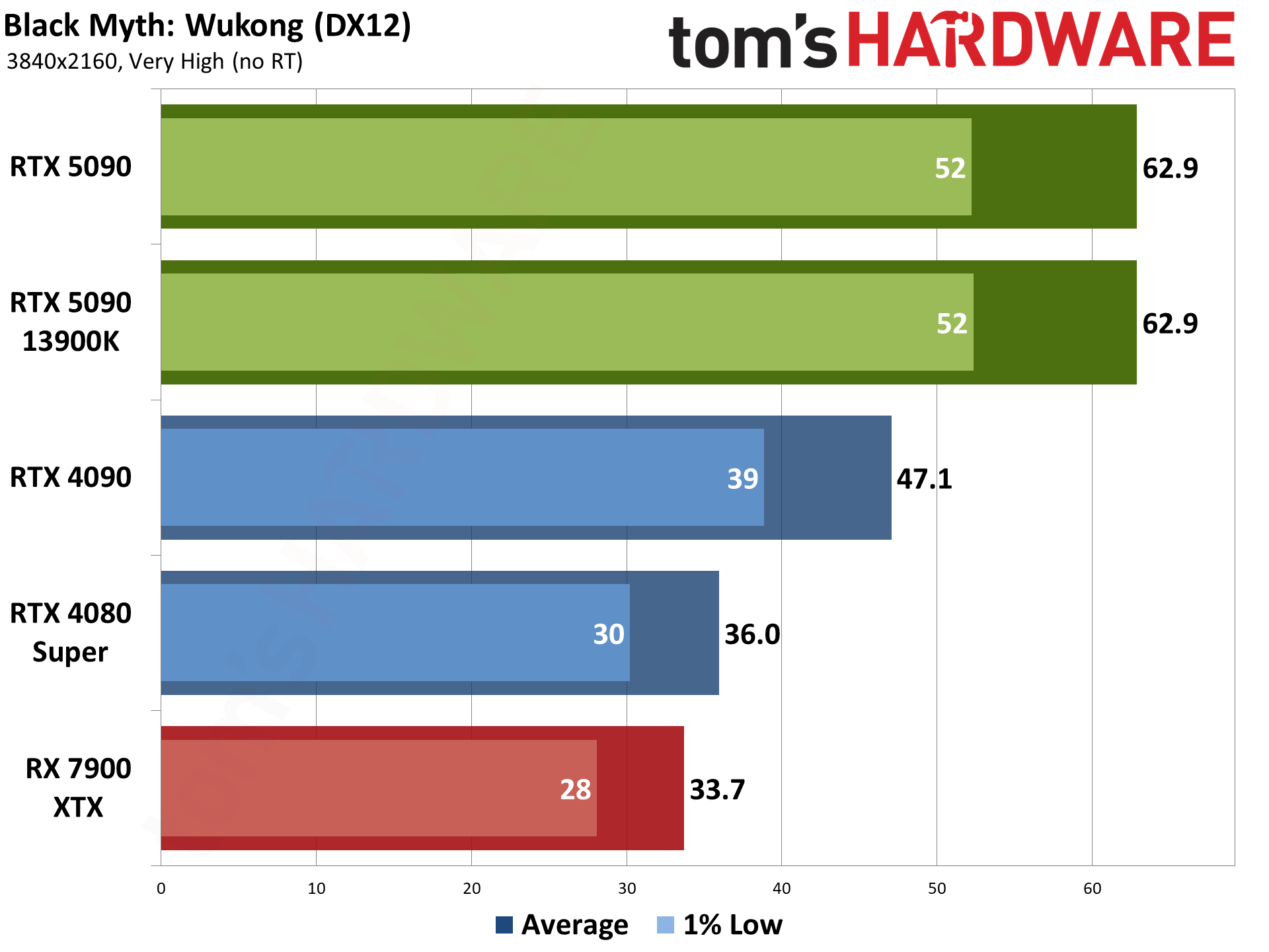

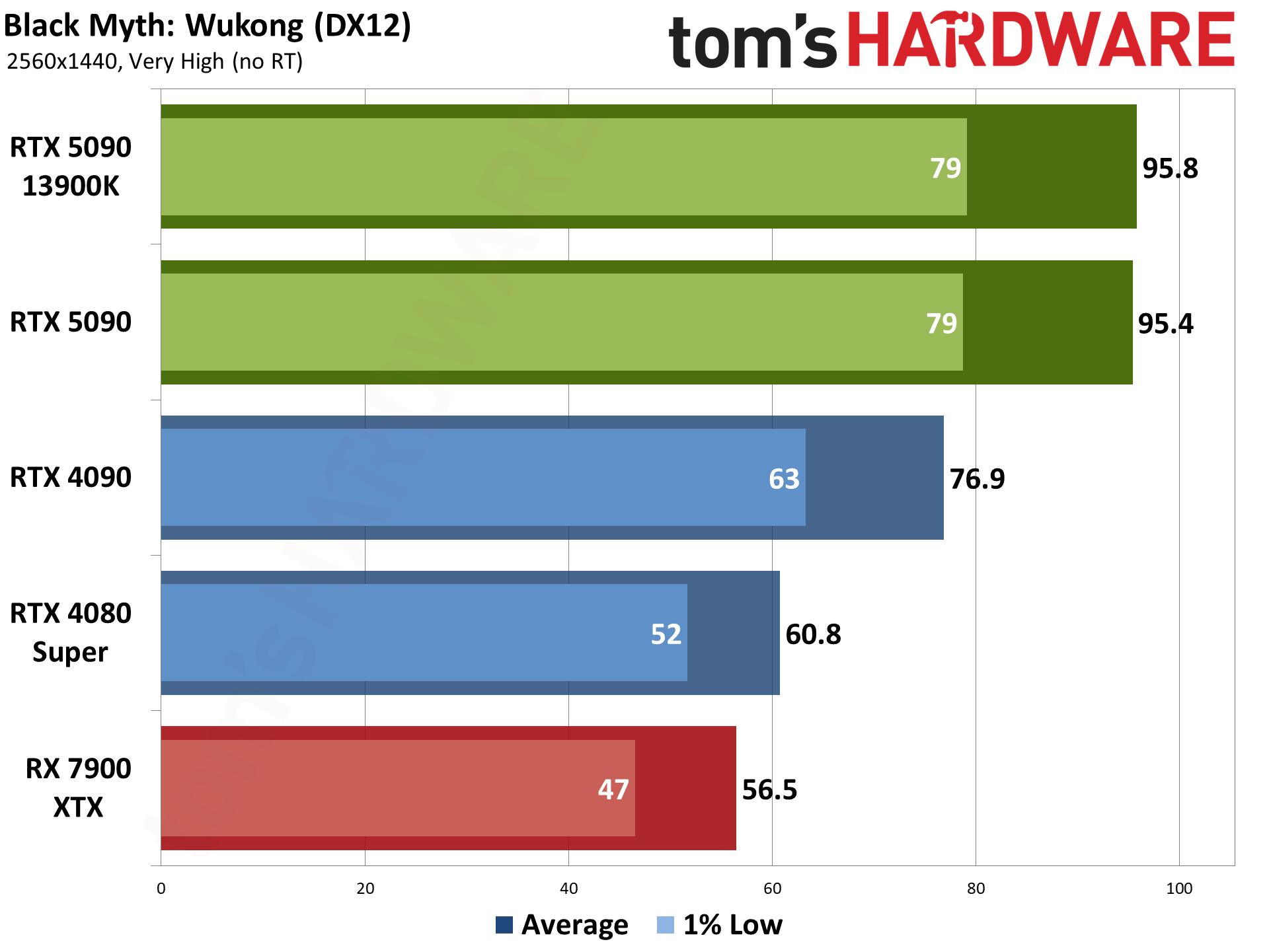

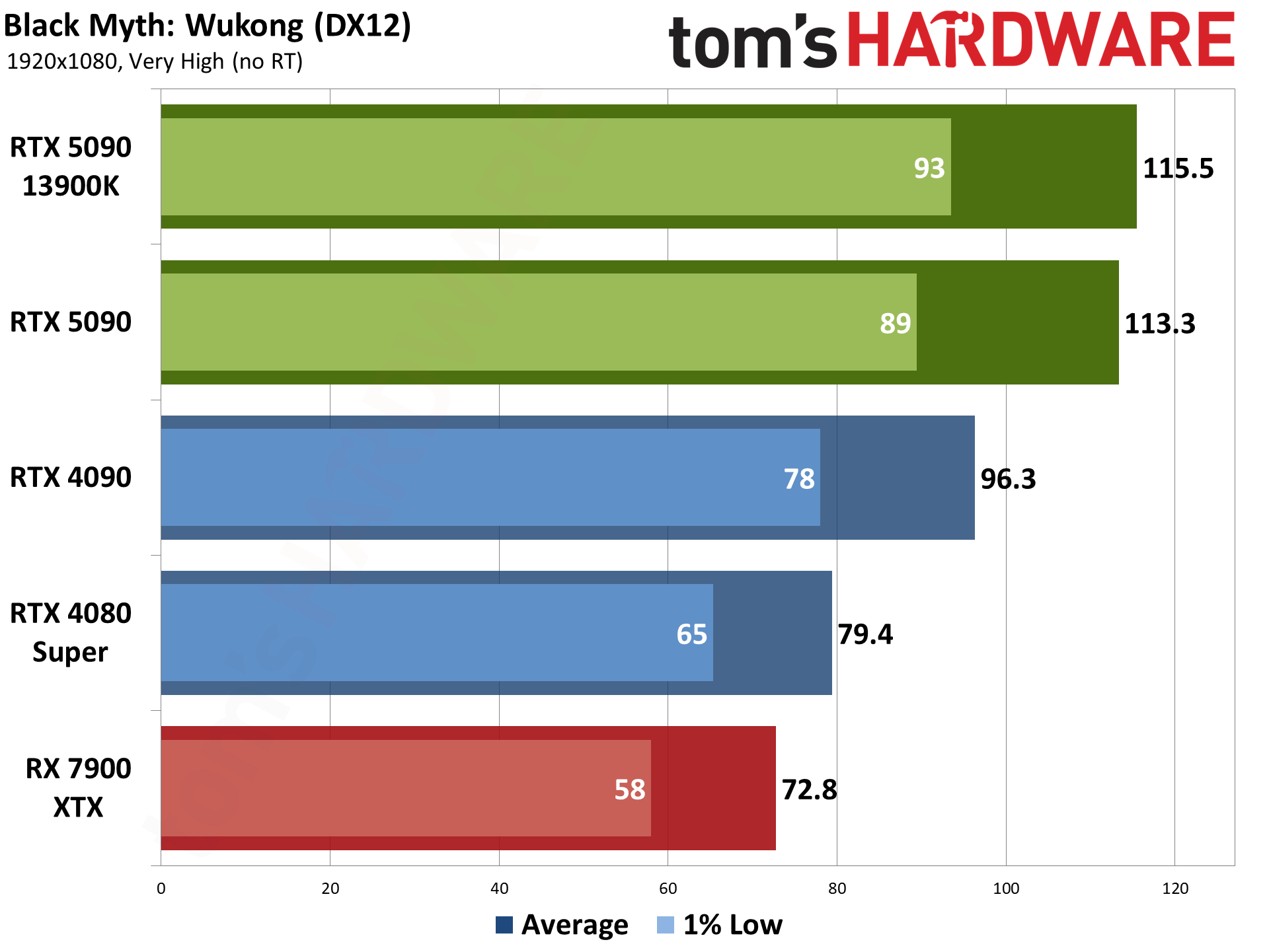

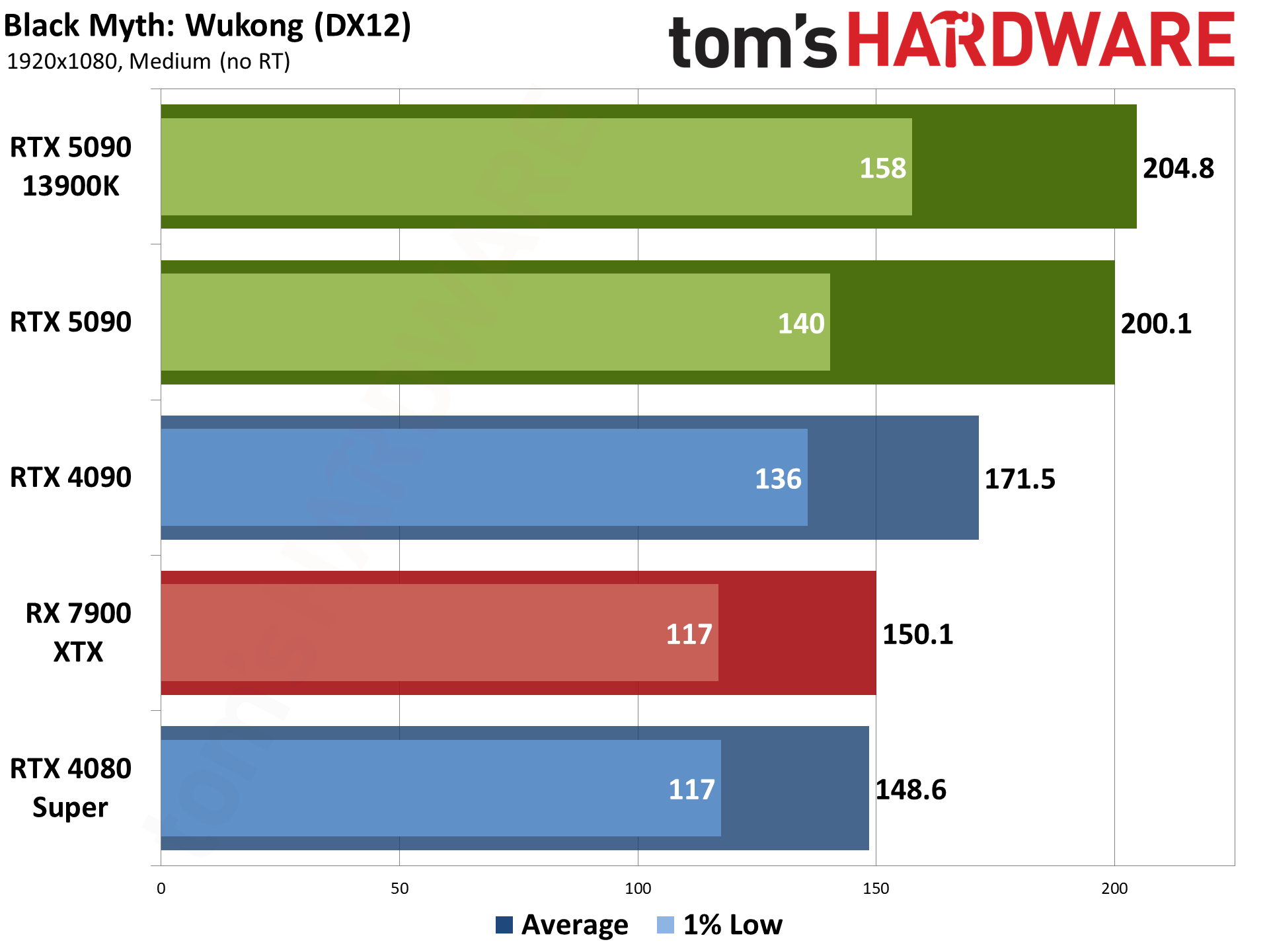

Black Myth: Wukong is one of the newer games in our test suite. Built on Unreal Engine 5, with support for full ray tracing as a high-end option, we opted to test using pure rasterization mode. Full RT may look a bit nicer, but the performance hit is quite severe. (Check our linked article for our initial launch benchmarks if you want to see how it runs with full RT enabled. We'll do supplemental testing on the 5090 as soon as we're able to find the time!)

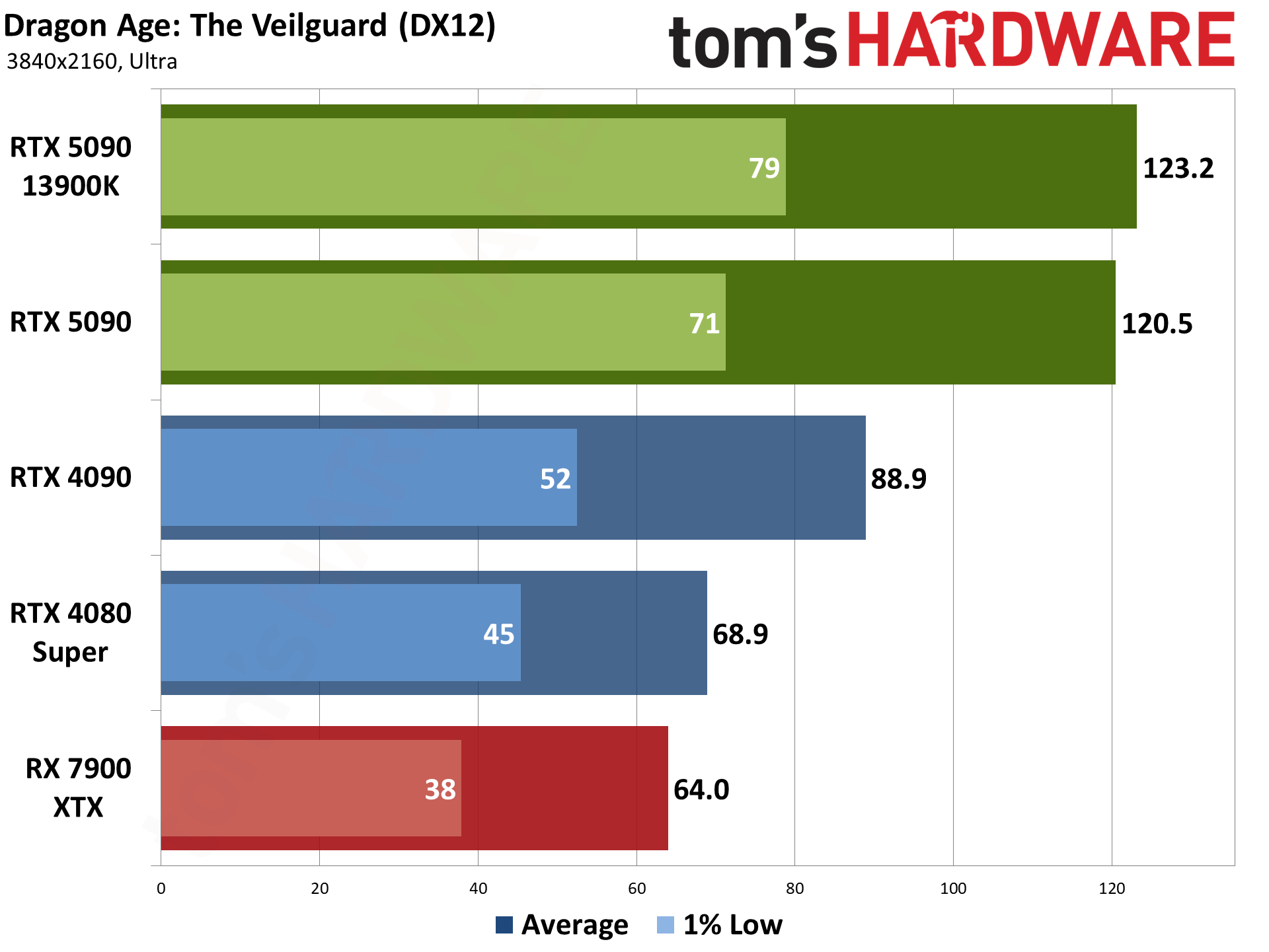

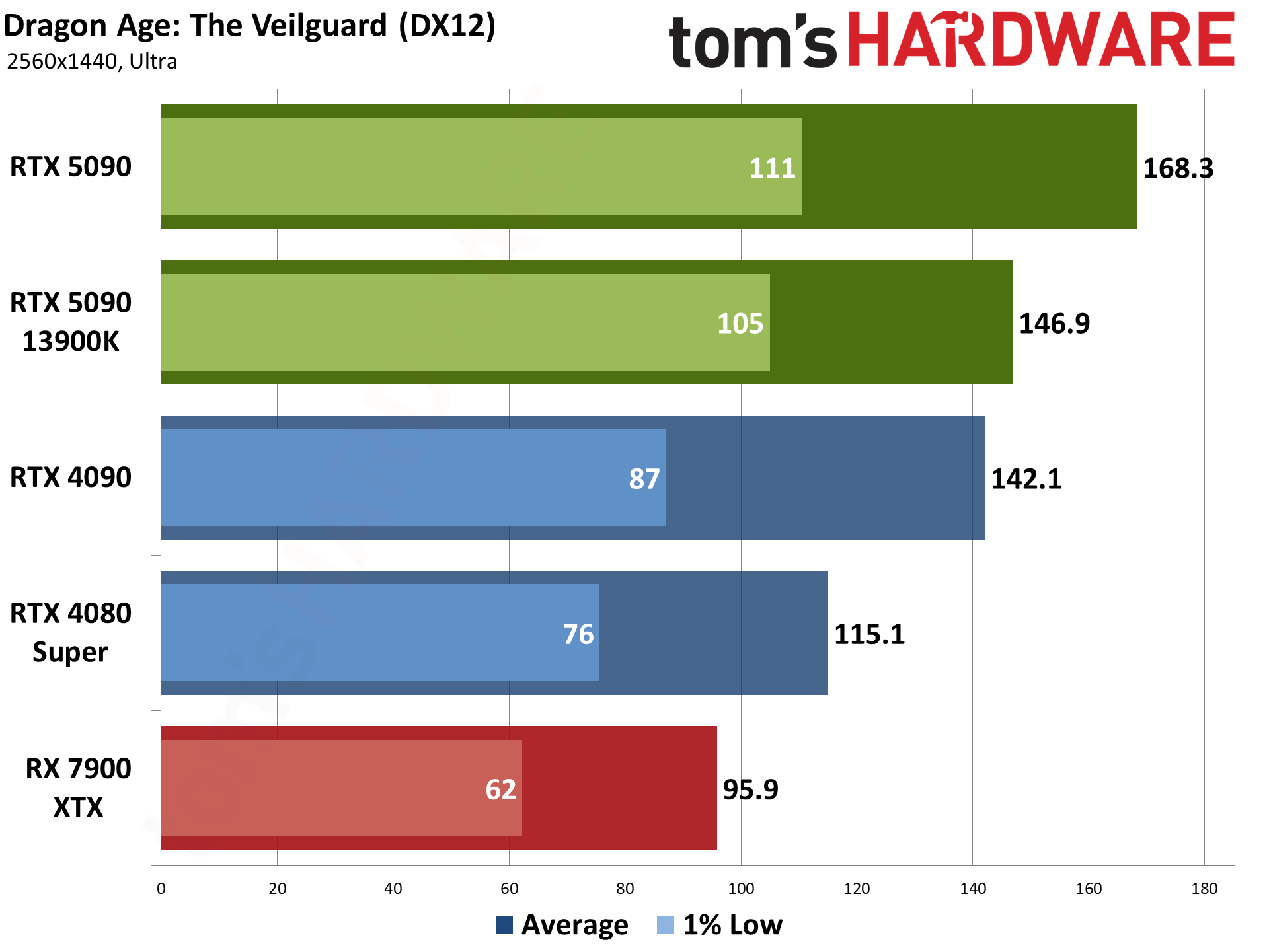

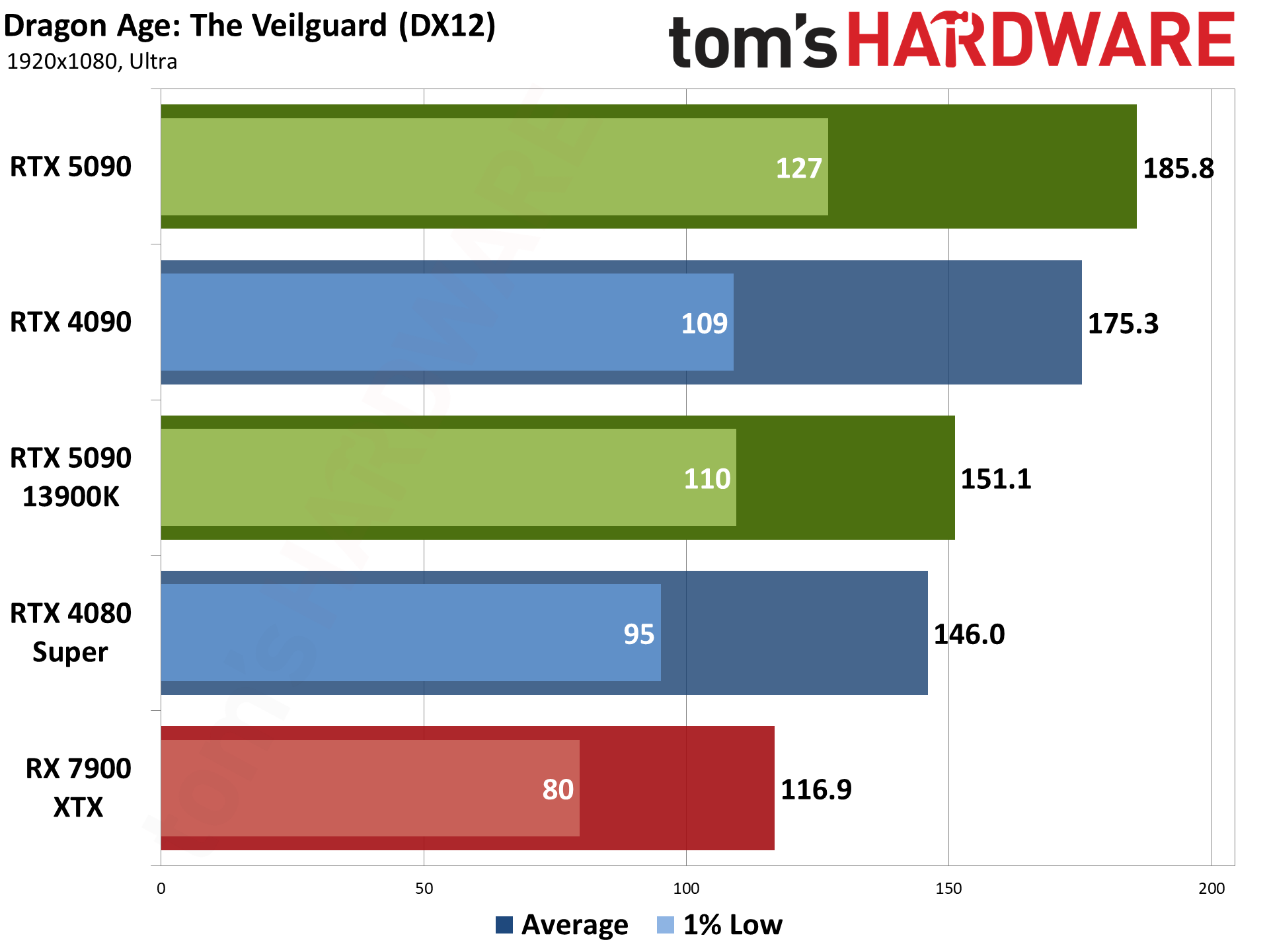

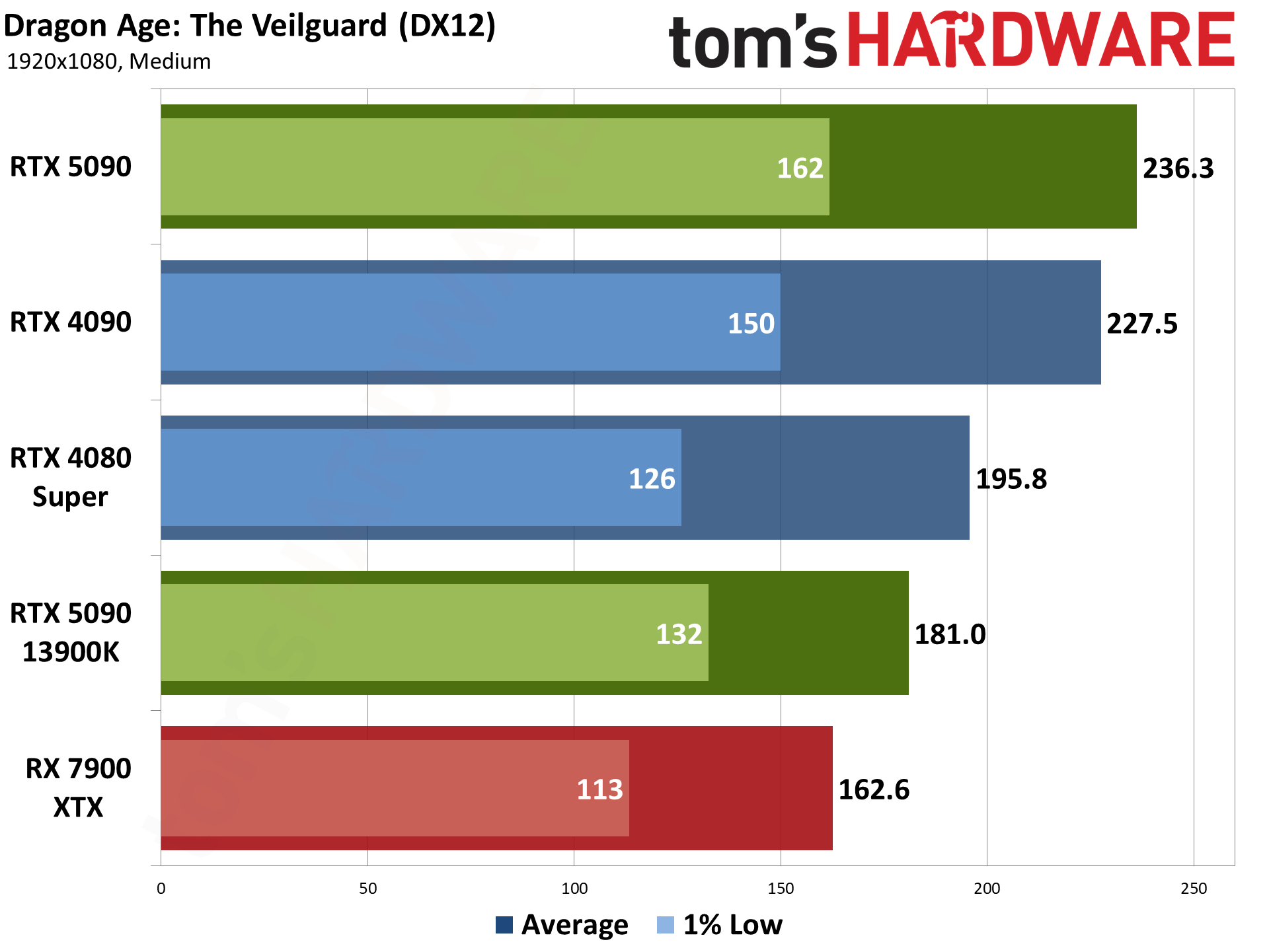

Dragon Age: The Veilguard uses the Frostbite engine and runs via the DX12 API. It's one of the newest games in my test suite, having launched this past Halloween. It's been received quite well, though, and in terms of visuals I'd put it right up there with Unreal Engine 5 games — without some of the LOD pop-in that happens so frequently with UE5.

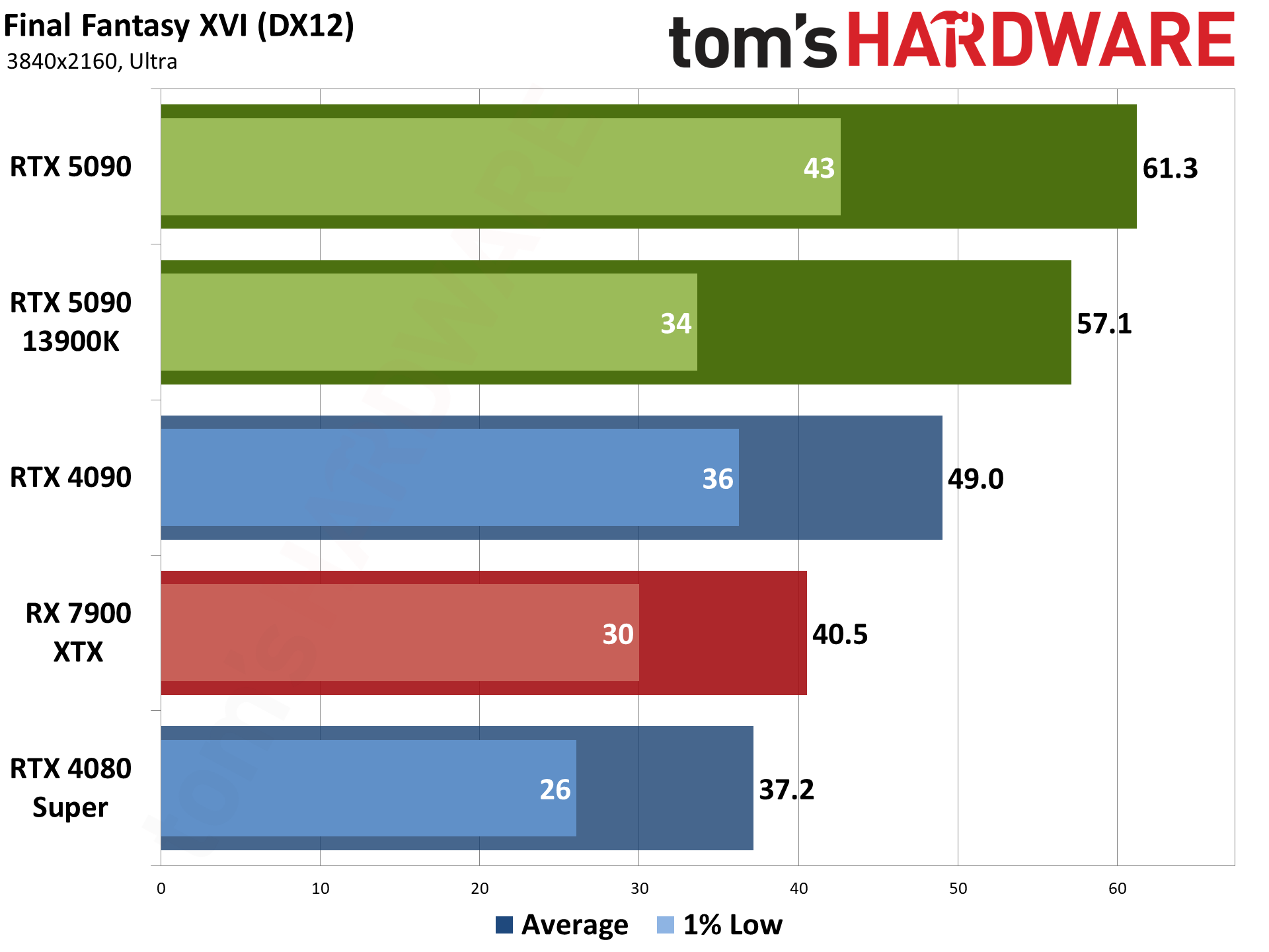

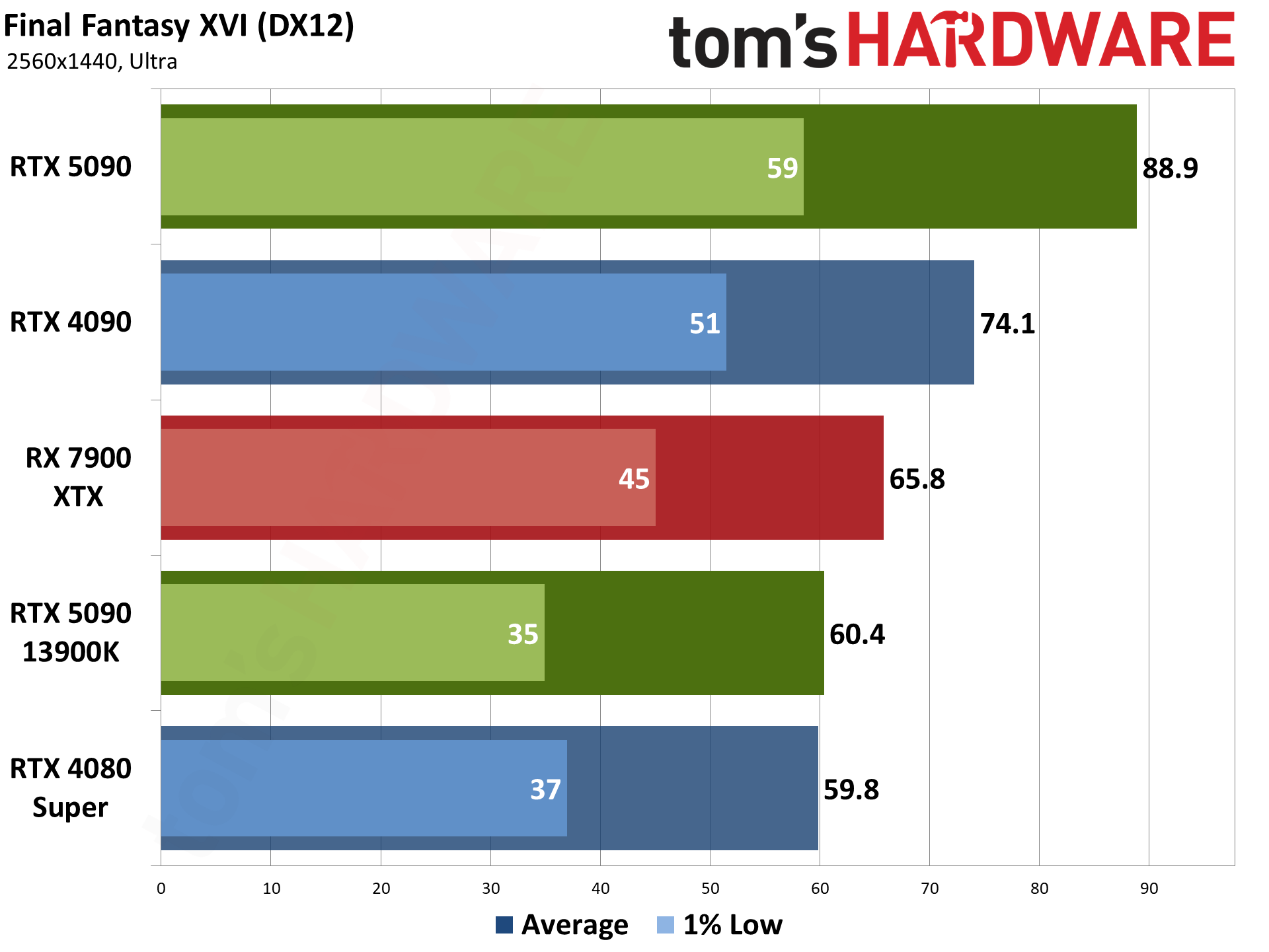

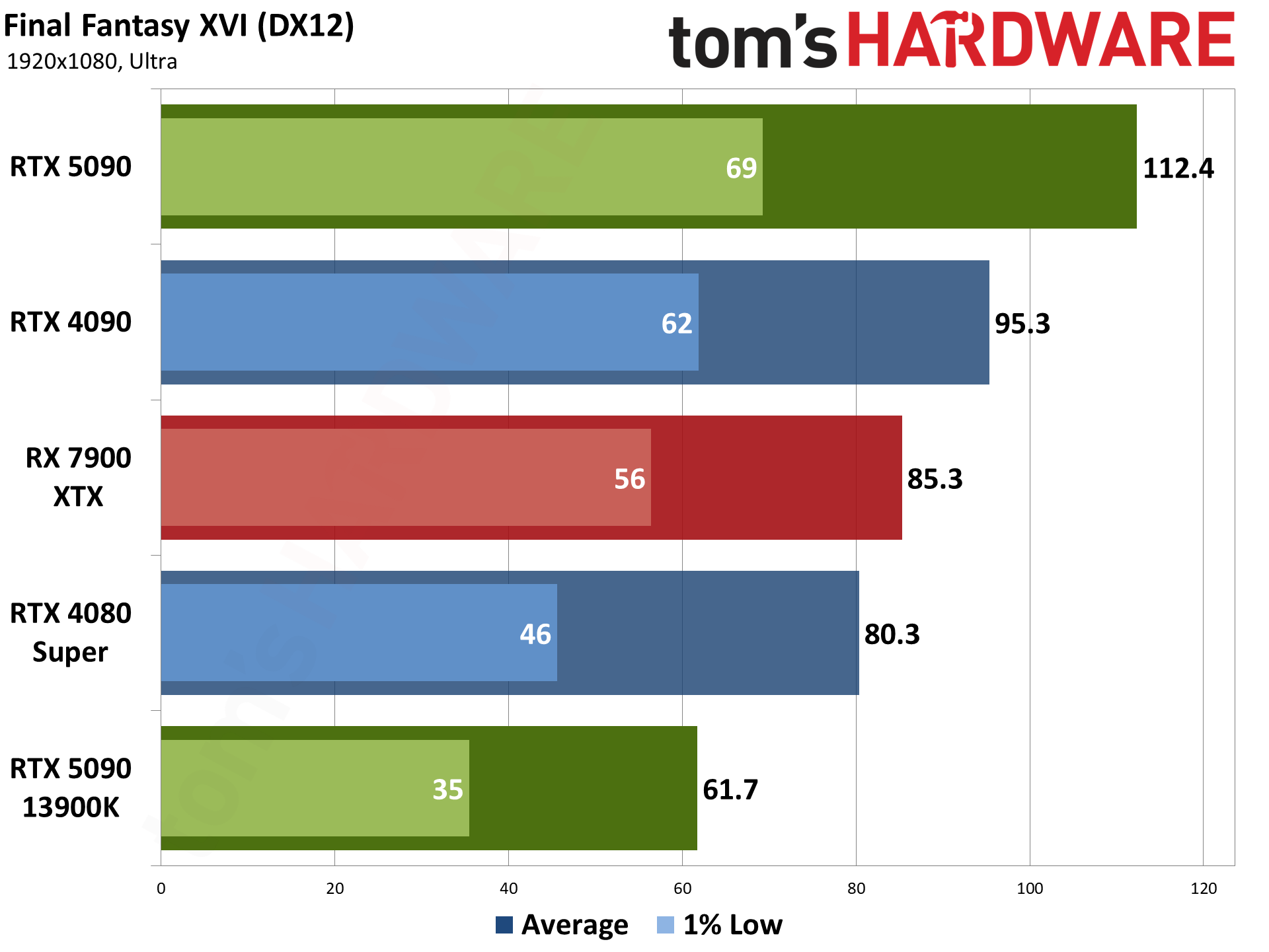

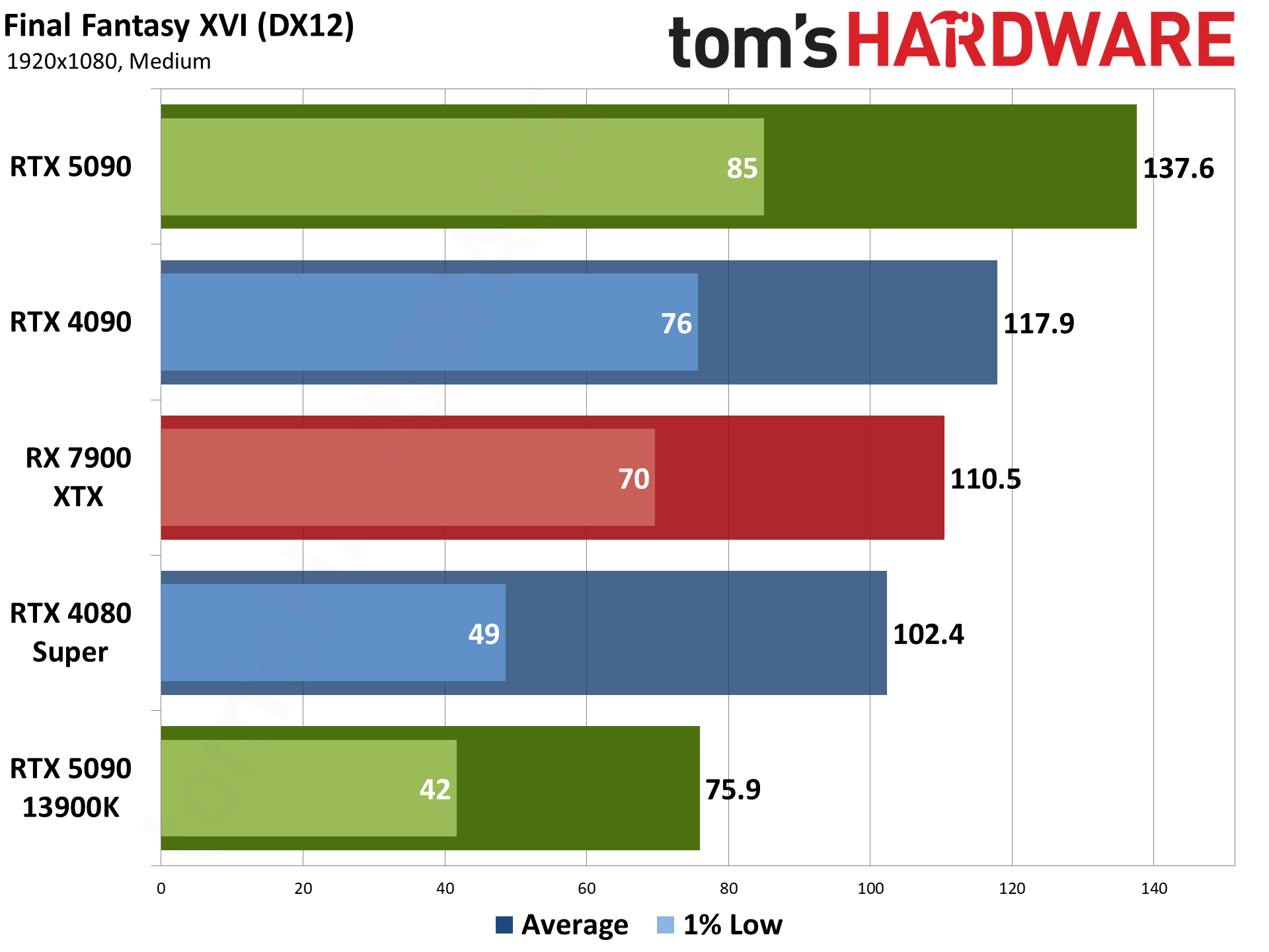

Final Fantasy XVI came out for the PS5 last year, but it only recently saw a Windows release. It's also either incredibly demanding or quite poorly optimized, but it does tend to be very GPU limited. Our test sequence consists of running a path around the town of Lost Wing.

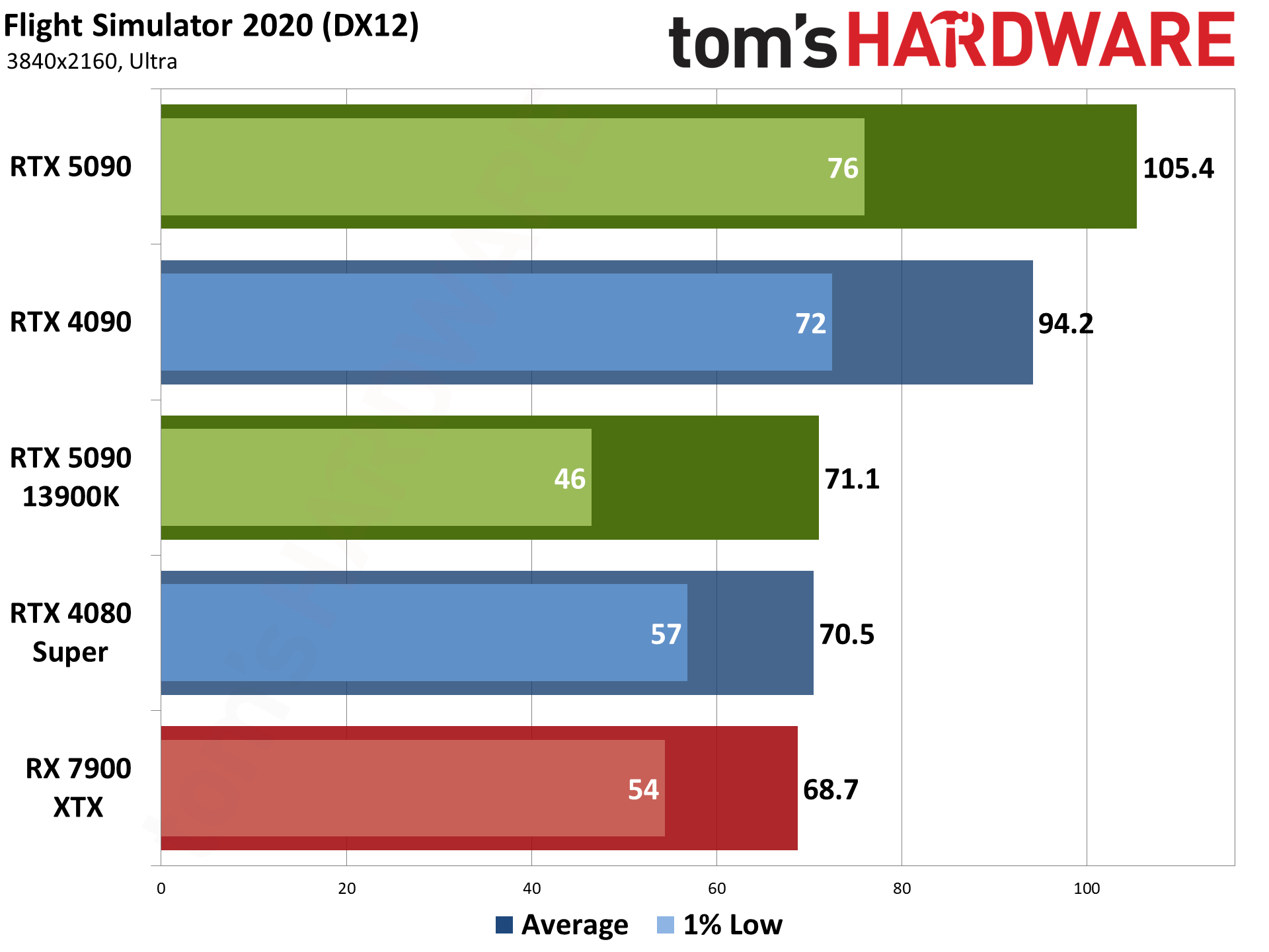

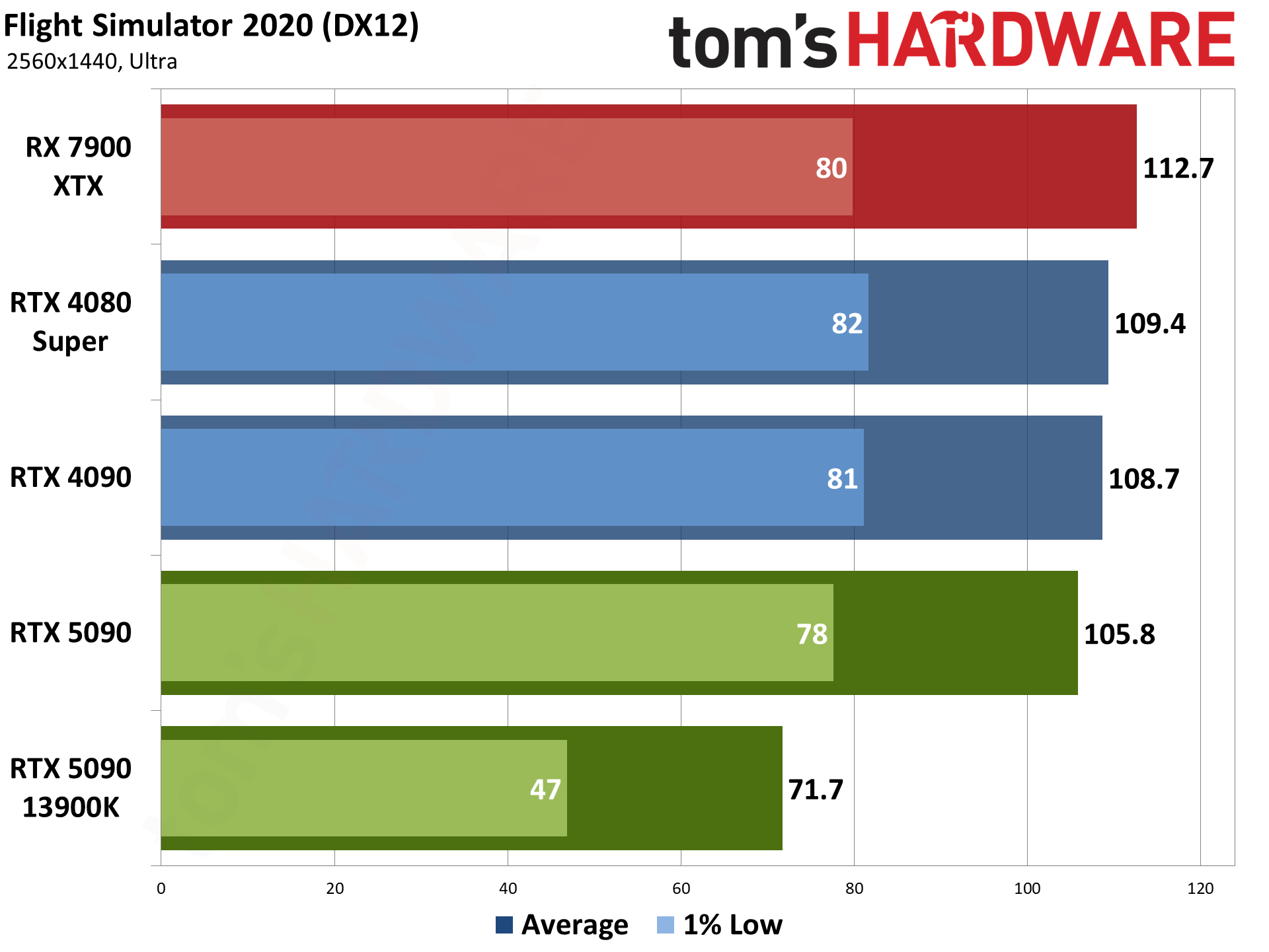

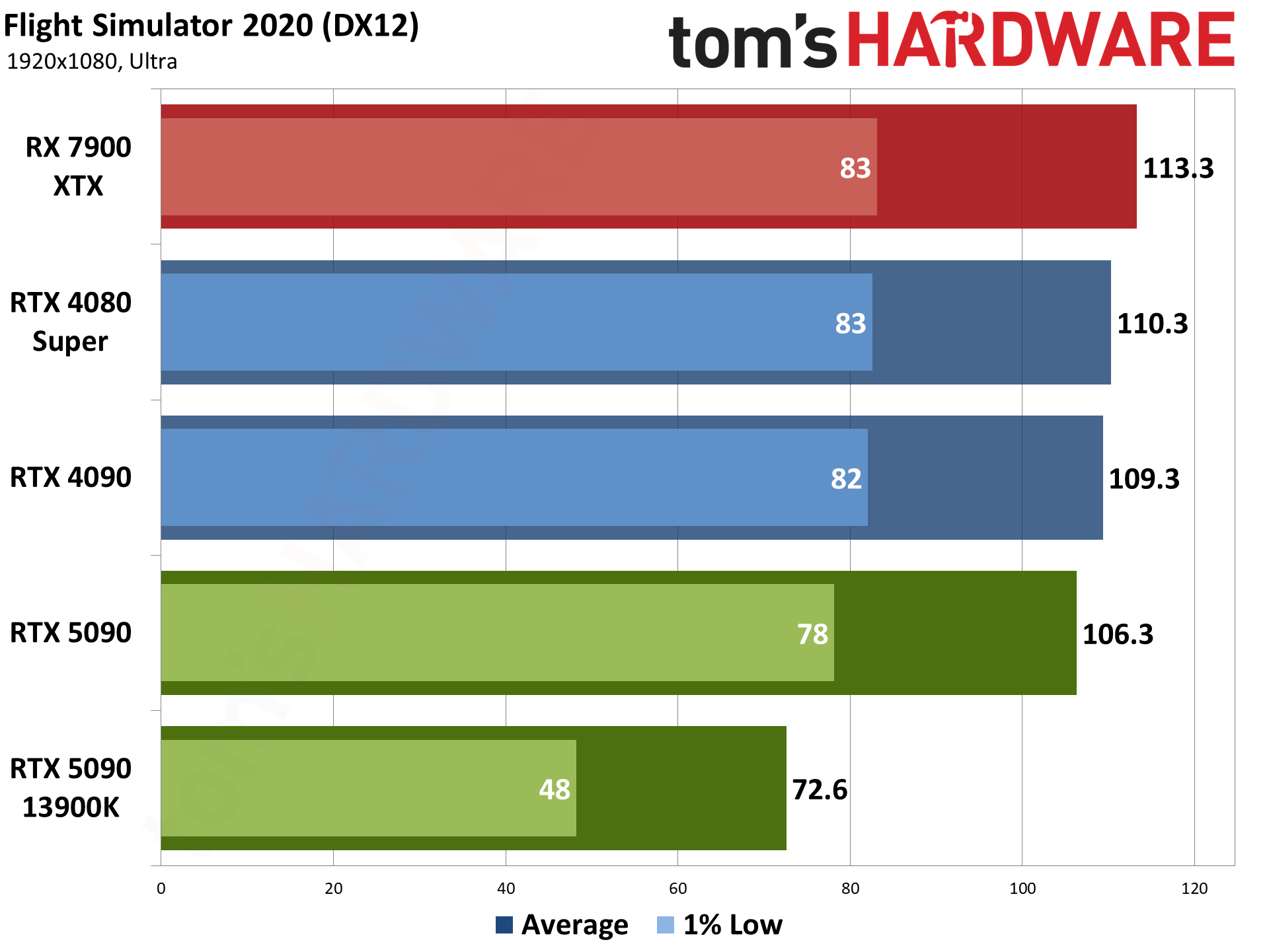

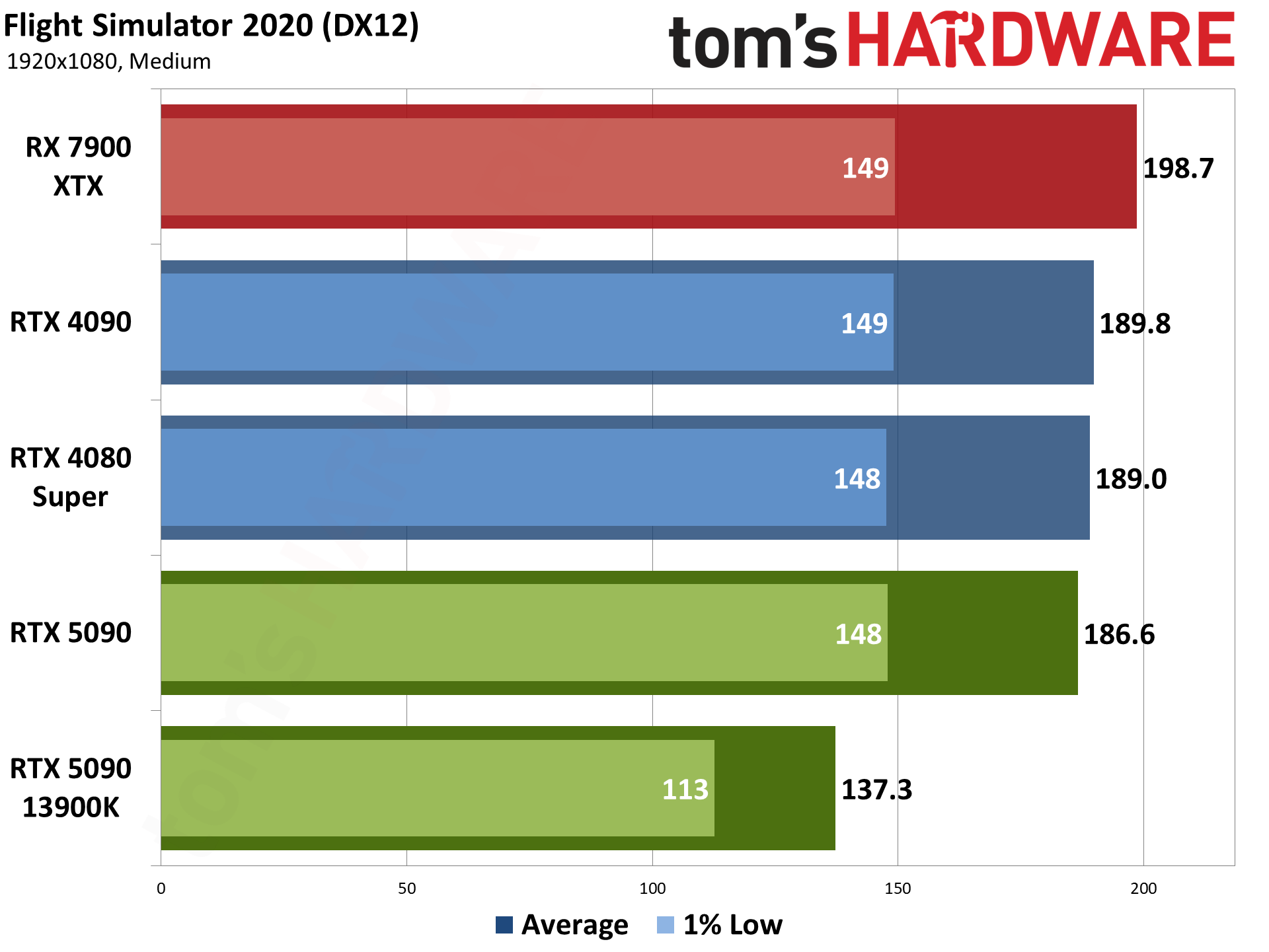

We've been using Flight Simulator 2020 for several years, and there's a new release below. But it's so new that we also wanted to keep the original around a bit longer as a point of reference. We've switched to using the 'beta' (eternal beta) DX12 path for our testing now, as it's required for DLSS frame generation even if it runs a bit slower on Nvidia GPUs.

What's interesting here is just how massive the gap is between the 13900K and the 9800X3D. Even at 4K ultra, the AMD CPU delivers 48% more performance than Intel's (slightly older) 13900K. But the 14900K would only narrow the gap by about 5%, and the Core Ultra 9 285K often performs worse than the 14900K. Flight Simulator 2020 is known to love AMD's X3D chips, though the new version isn't nearly so cache friendly.

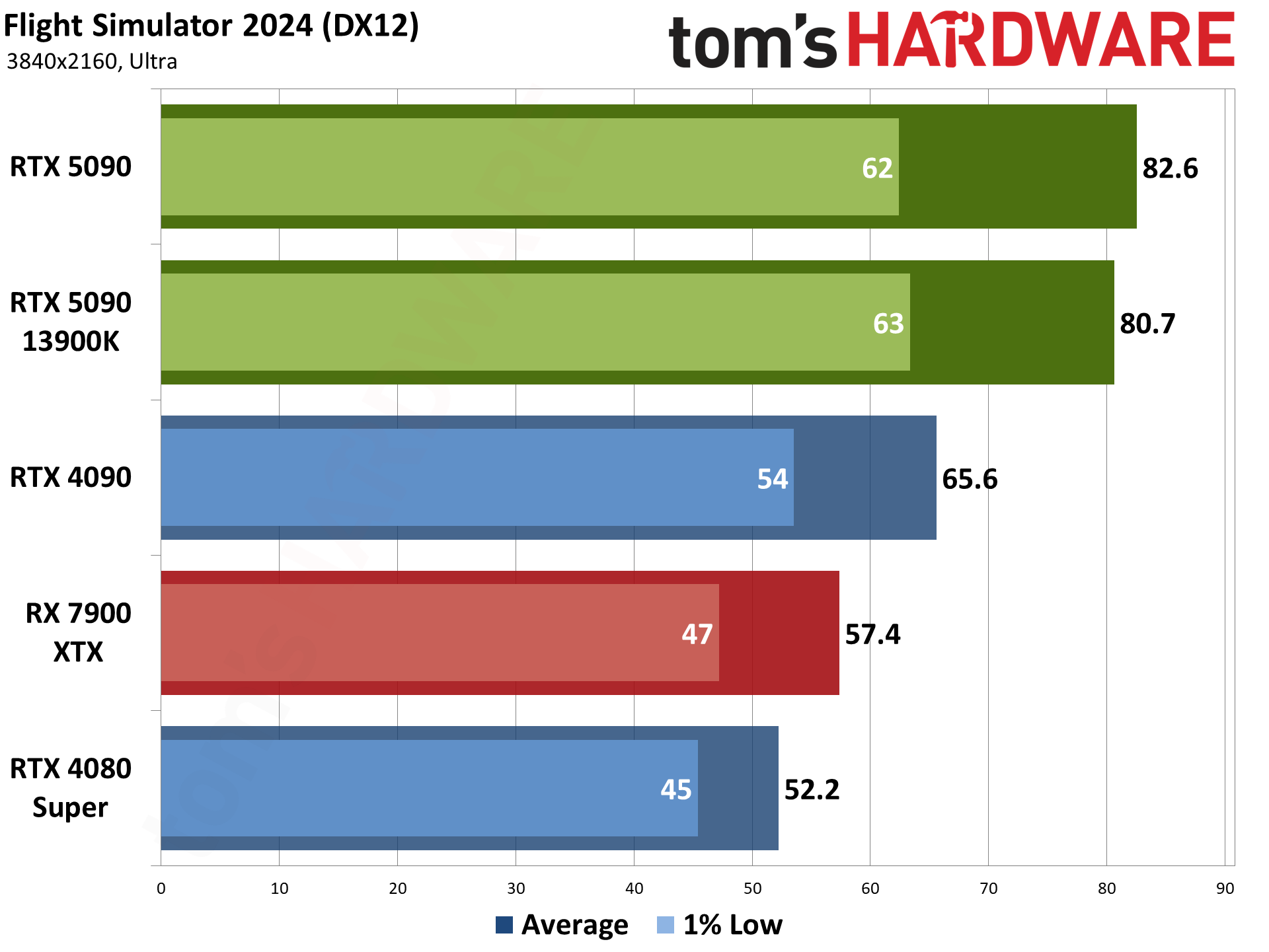

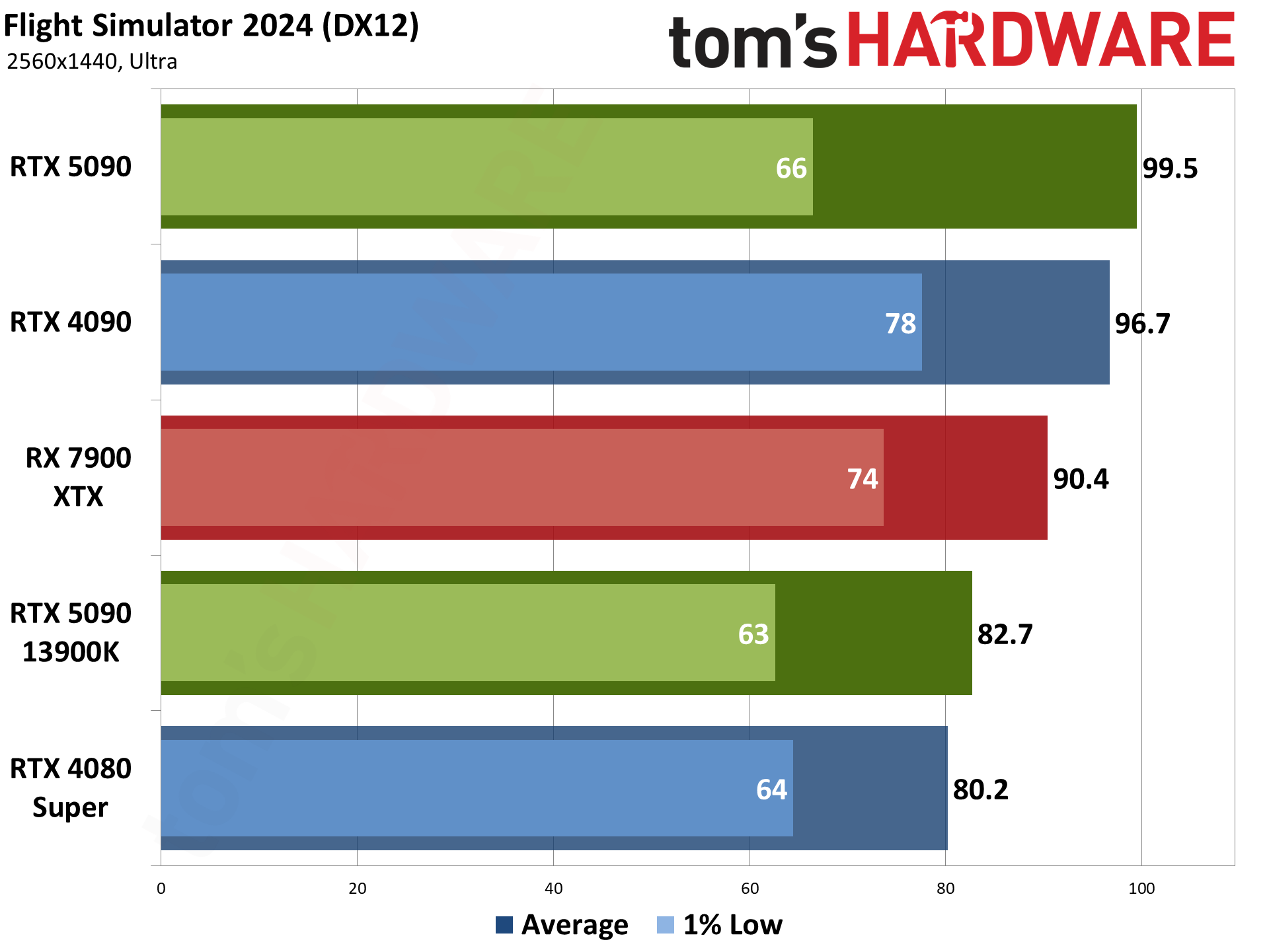

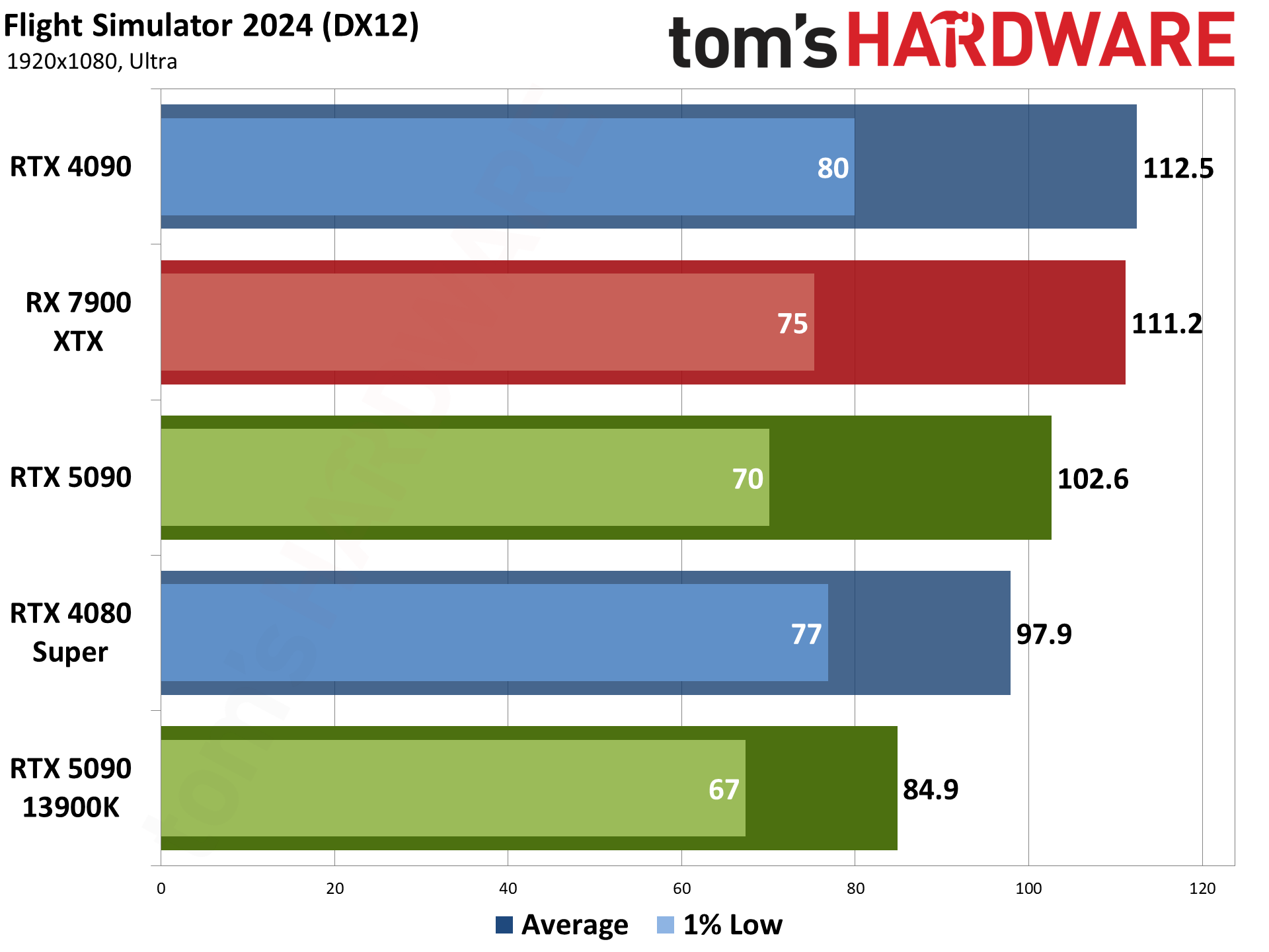

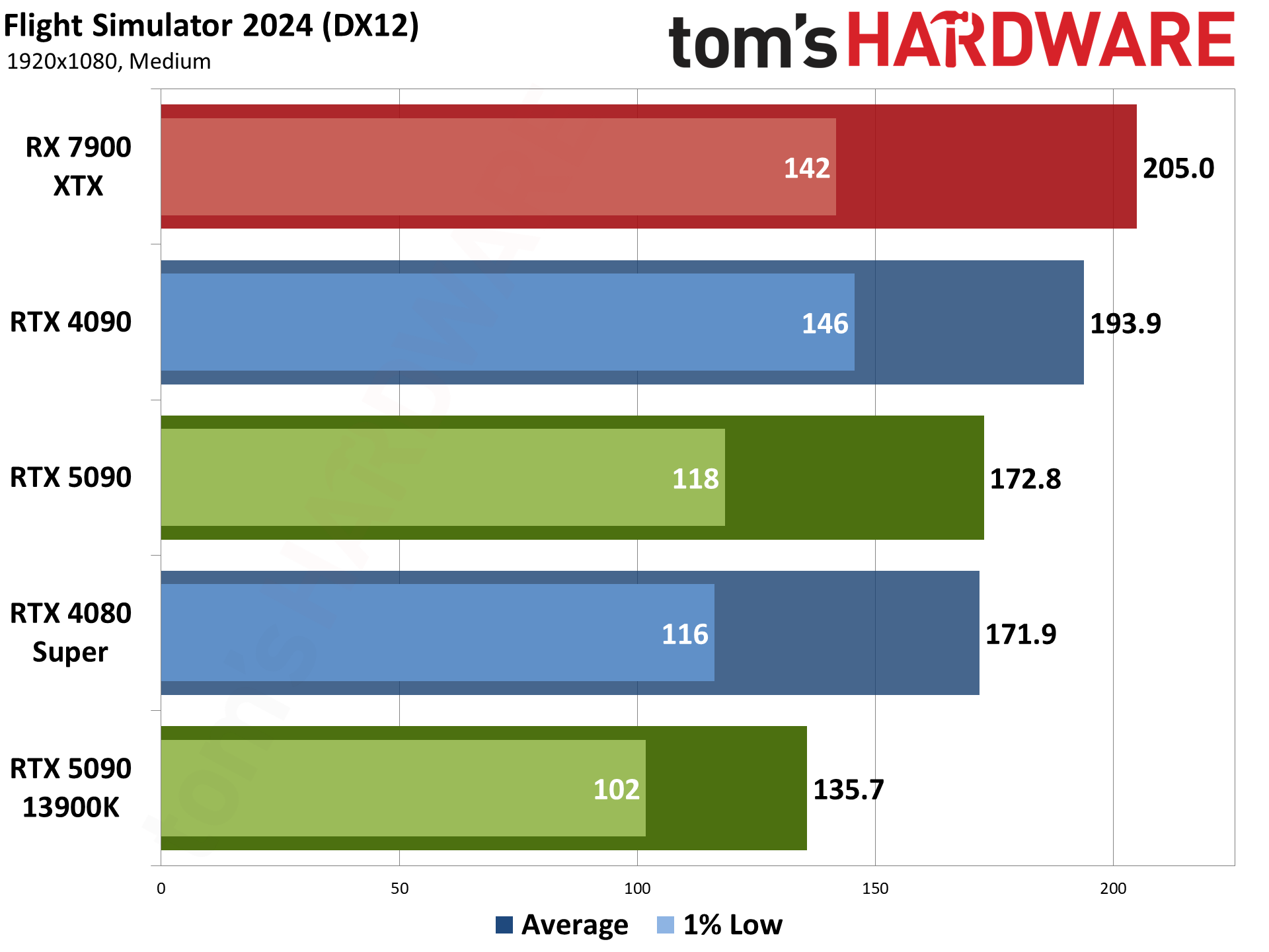

Flight Simulator 2024 is the latest release of the storied franchise, and it's even more demanding than the above 2020 release — with some differences in what sort of hardware it seems to like best. Where the 2020 version really appreciated AMD's X3D processors, the 2024 release tends to be more forgiving to Intel CPUs, thanks to improved DirectX 12 code (DX11 is no longer supported).

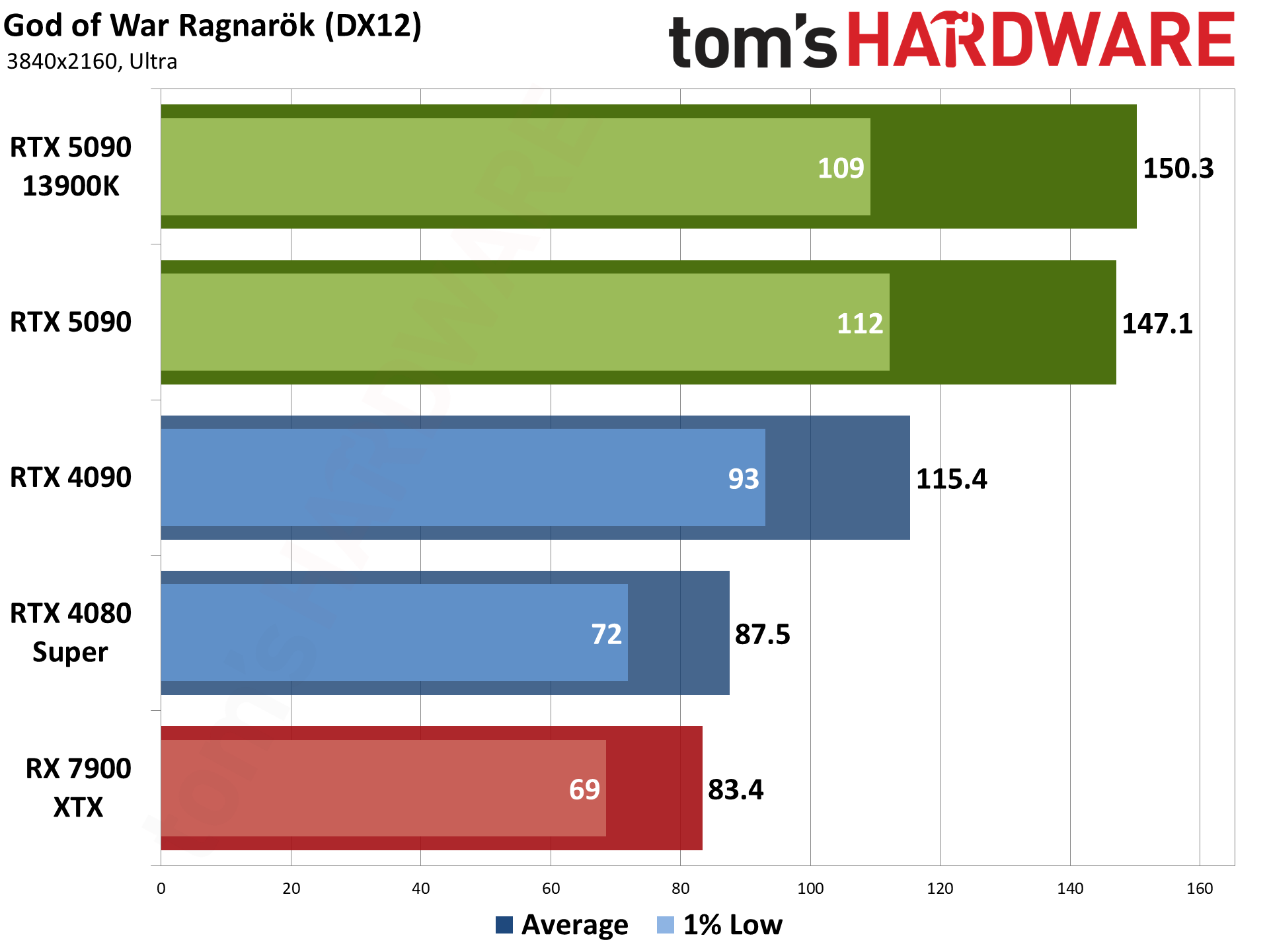

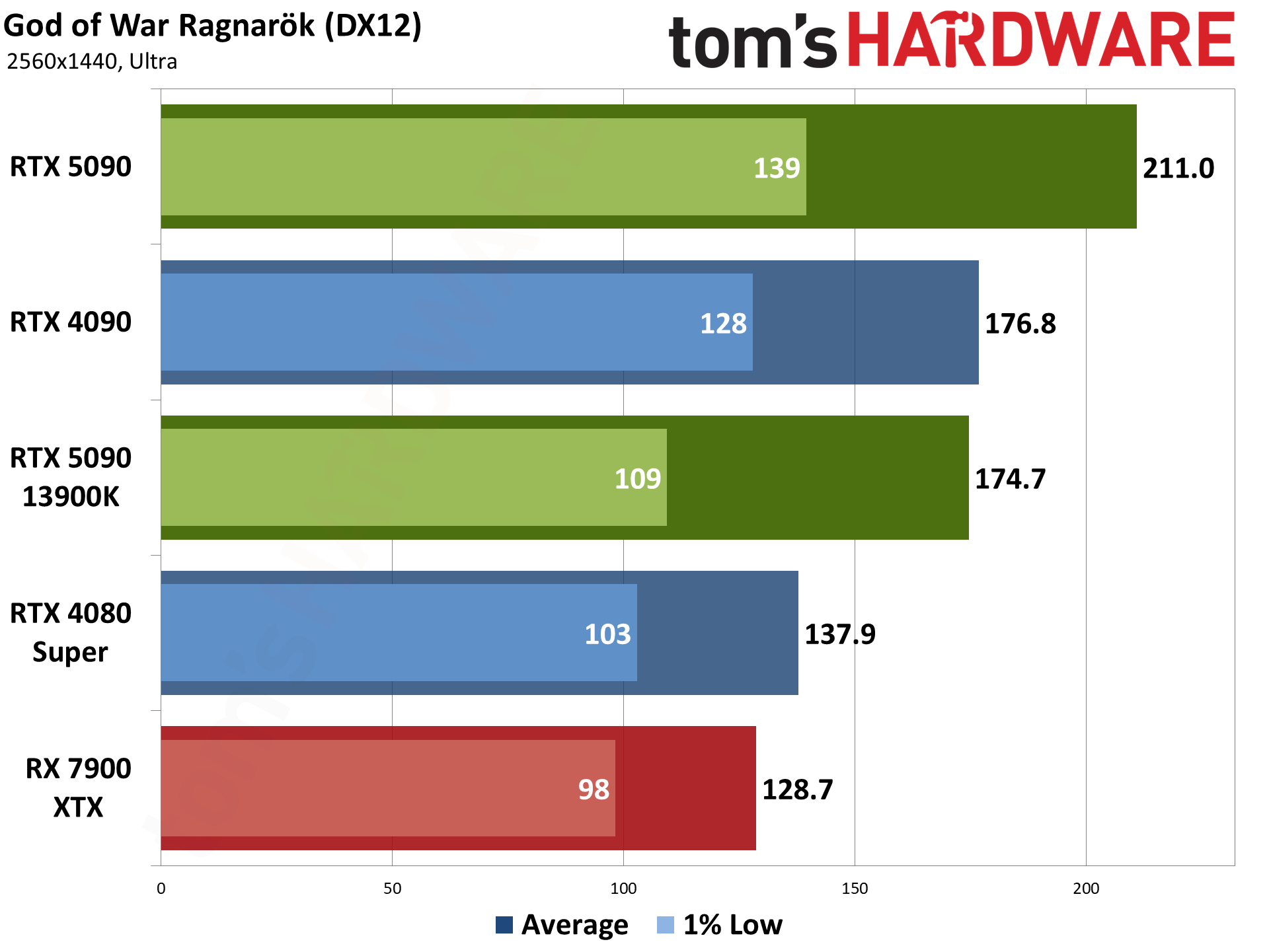

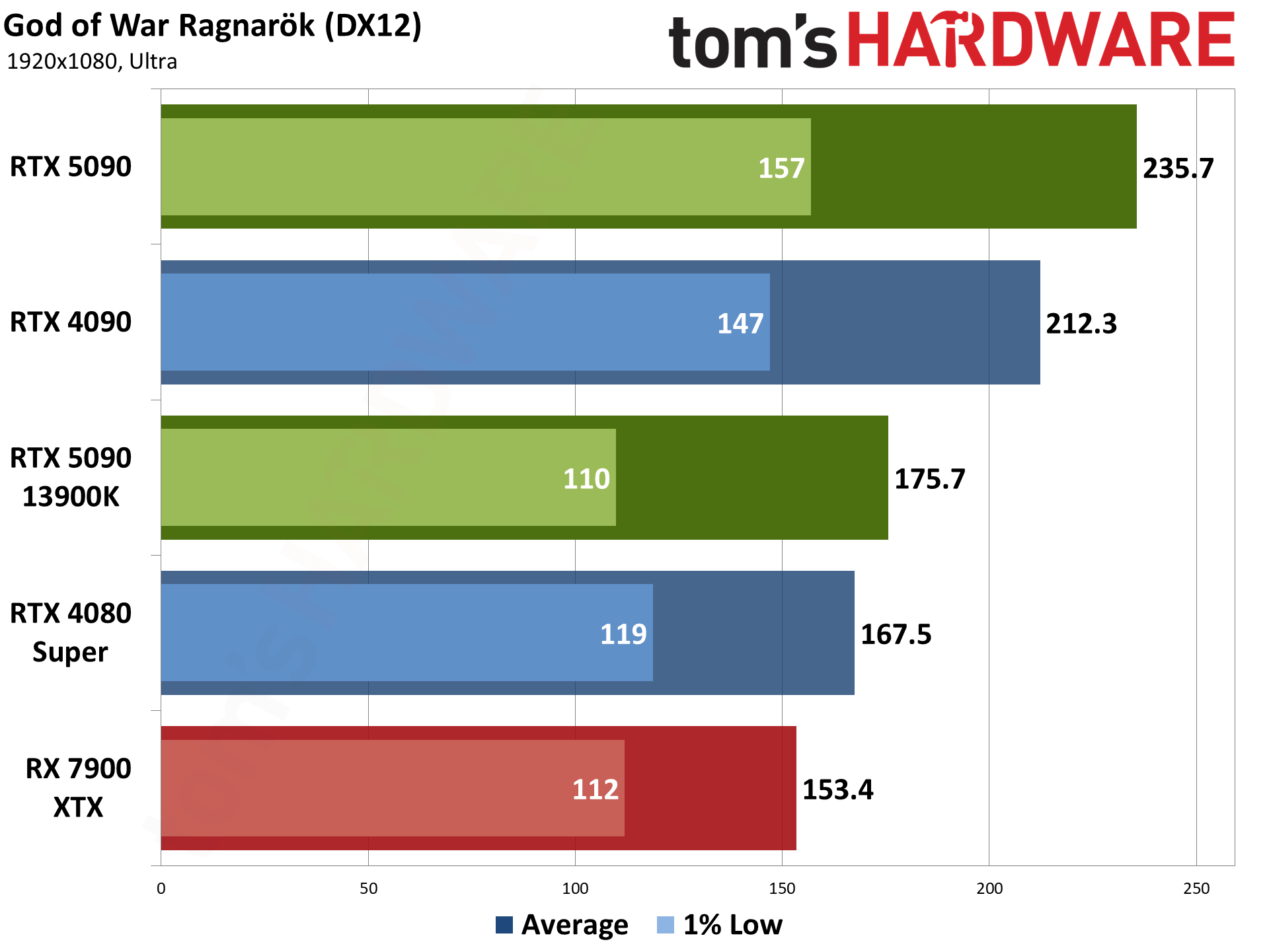

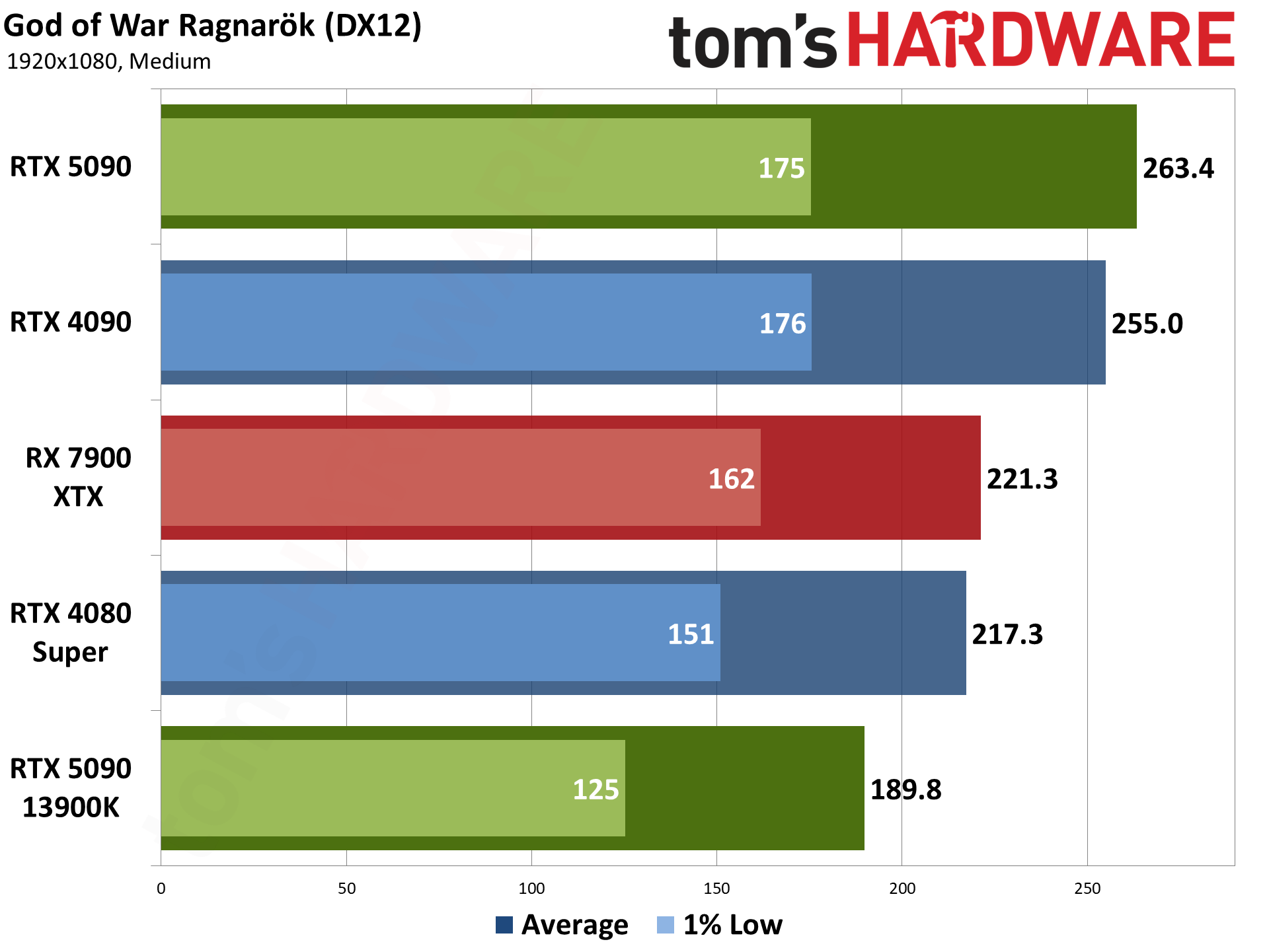

God of War Ragnarök released for the PlayStation two years ago and only recently saw a Windows version. It's AMD promoted, but it also supports DLSS and XeSS alongside FSR3. We ran around the village of Svartalfheim, which is one of the most demanding areas in the game that we've encountered.

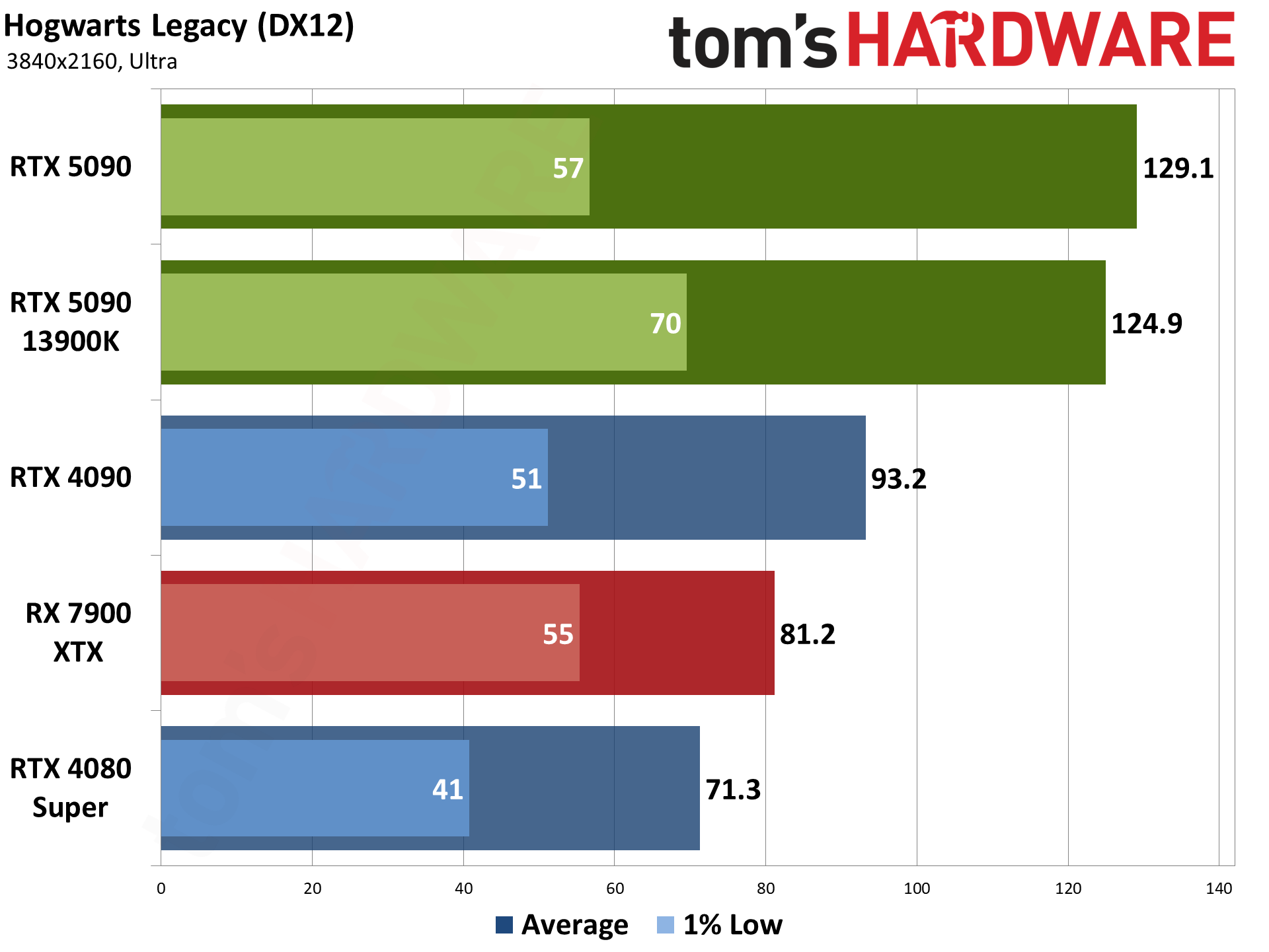

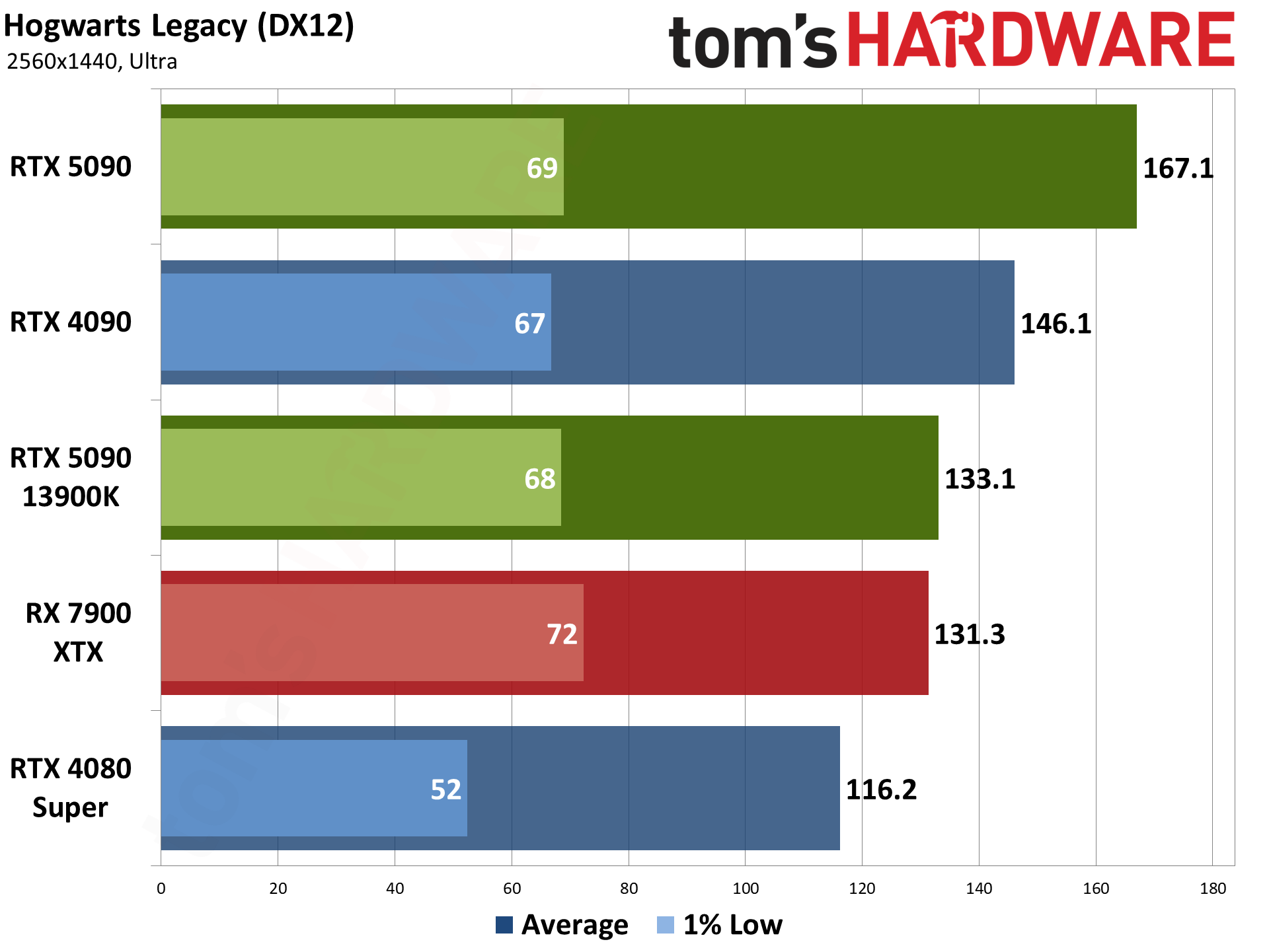

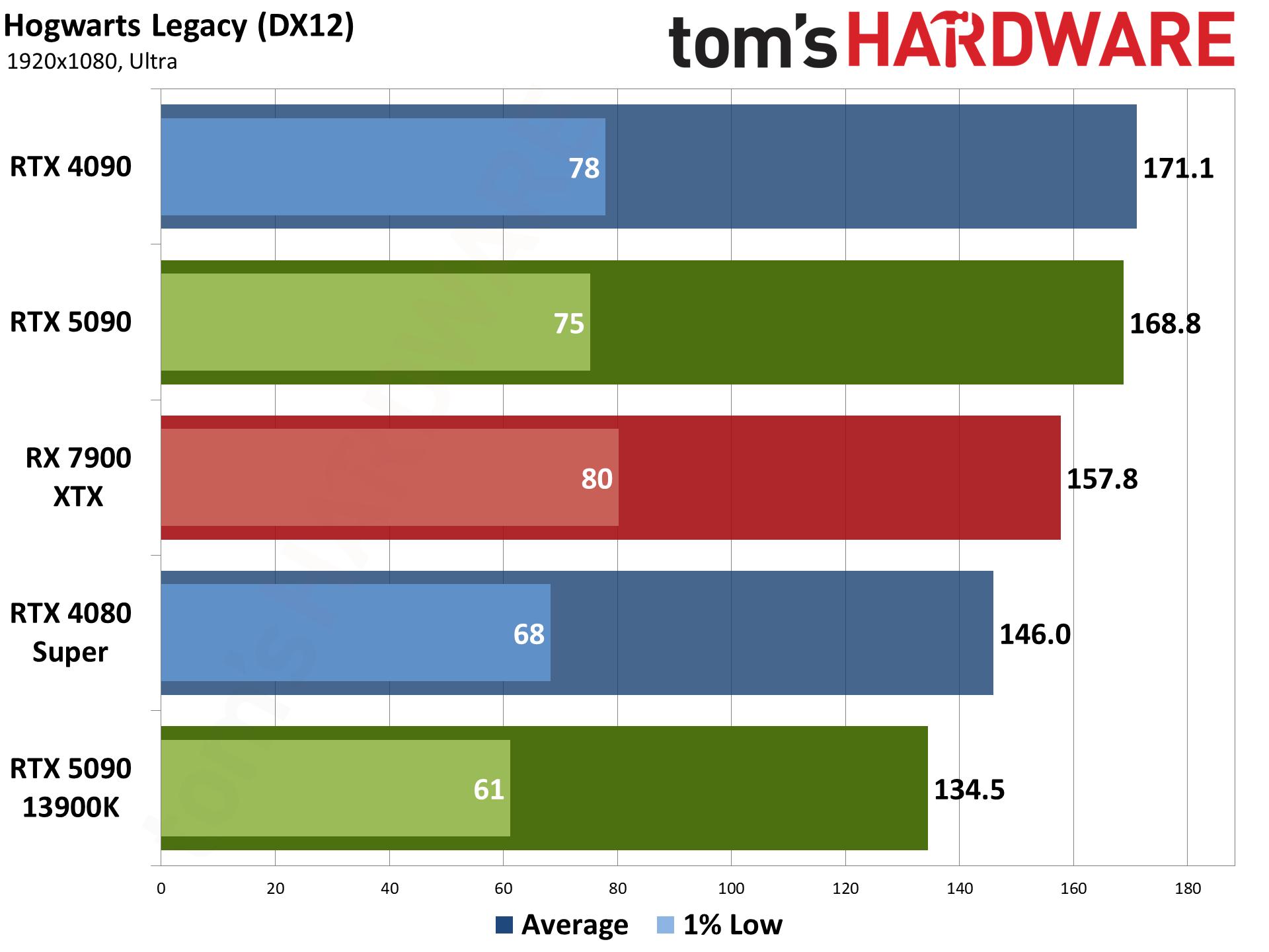

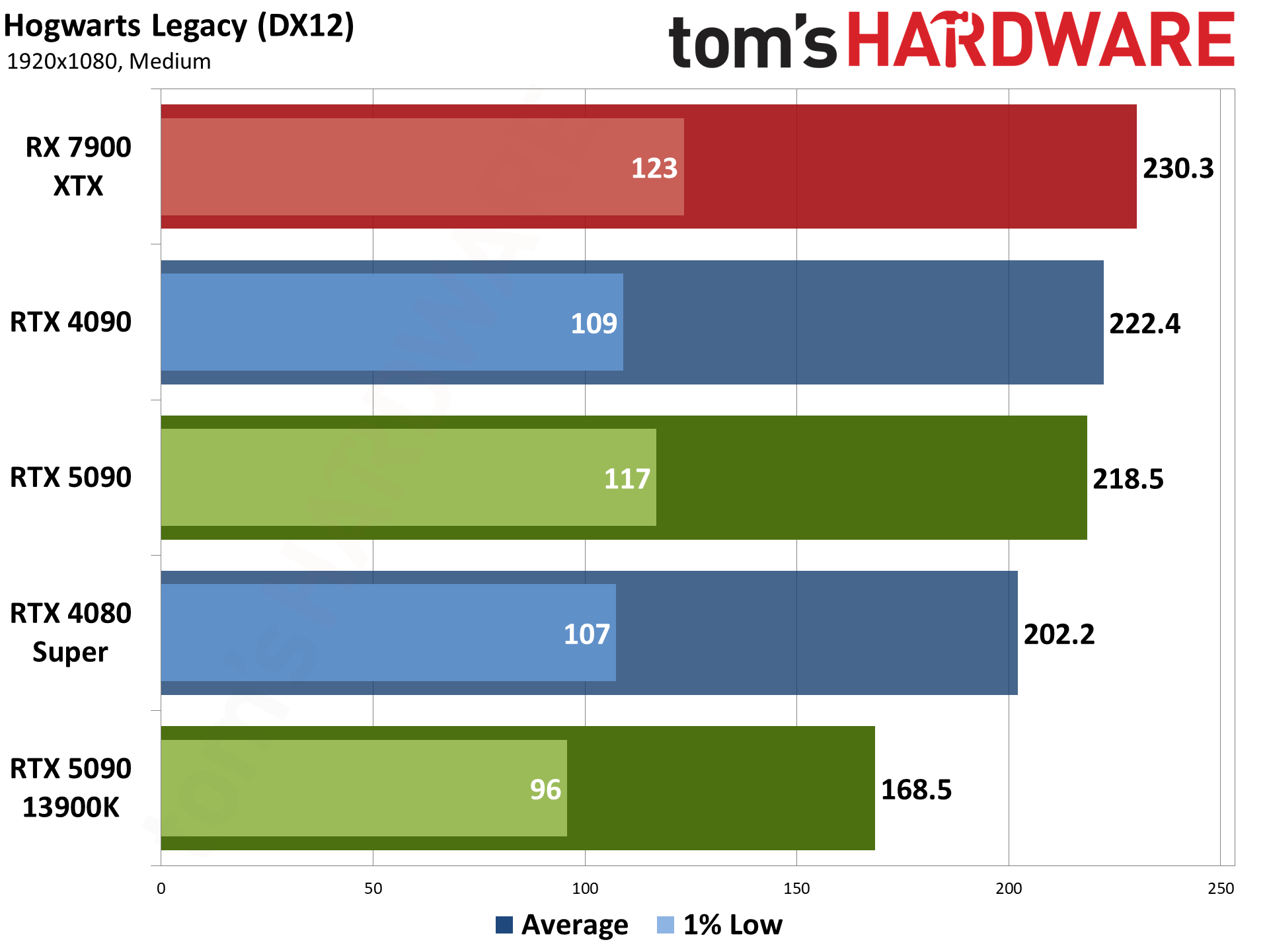

Hogwarts Legacy came out in early 2023, and it uses Unreal Engine 4. Like so many Unreal Engine games, it can look quite nice but also has some performance issues with certain settings. Ray tracing in particular can bloat memory use and tank framerates, and also causes hitching, so we've opted to test without ray tracing. (We'll do some supplemental RT tests with this one on page six.)

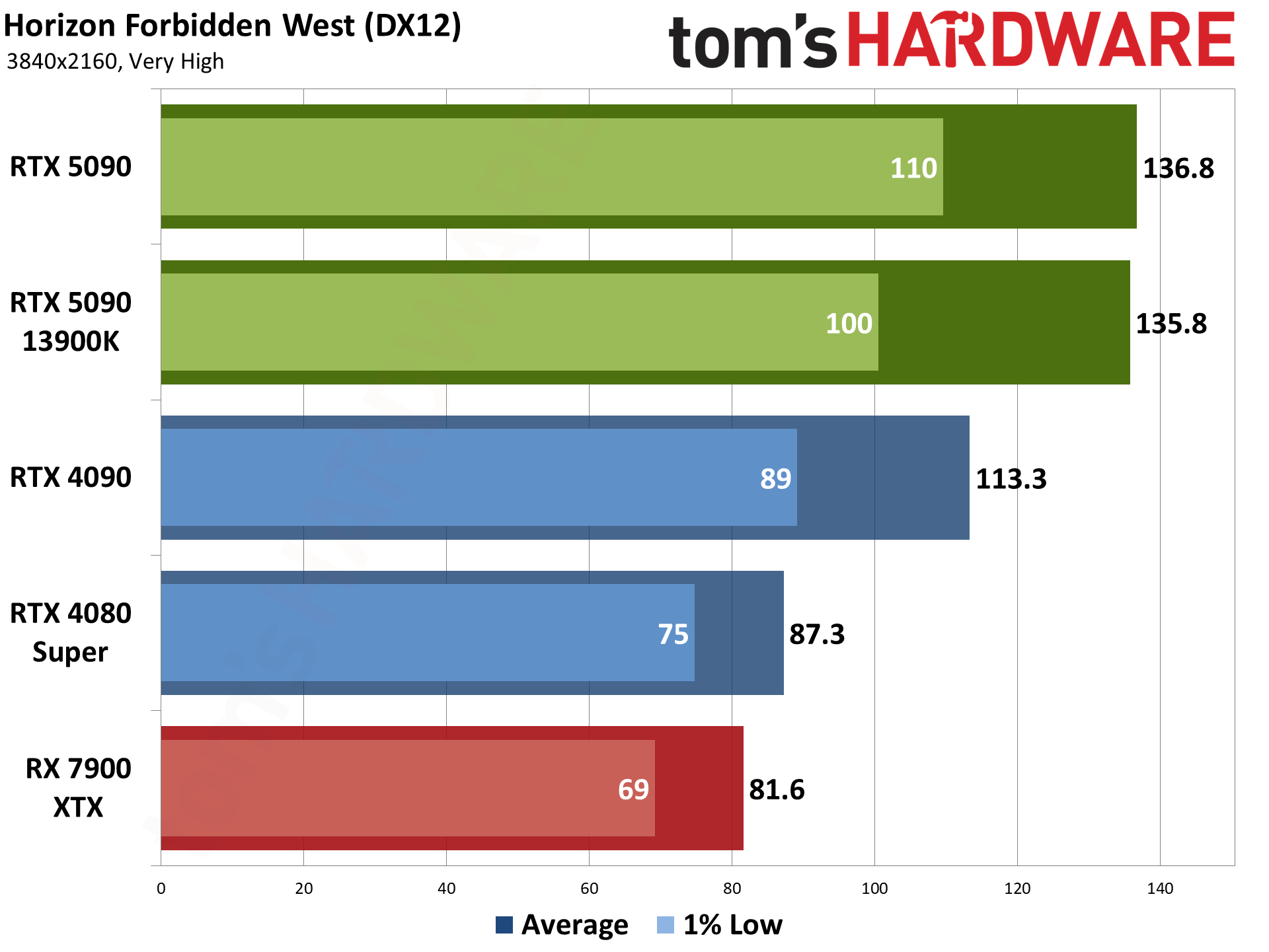

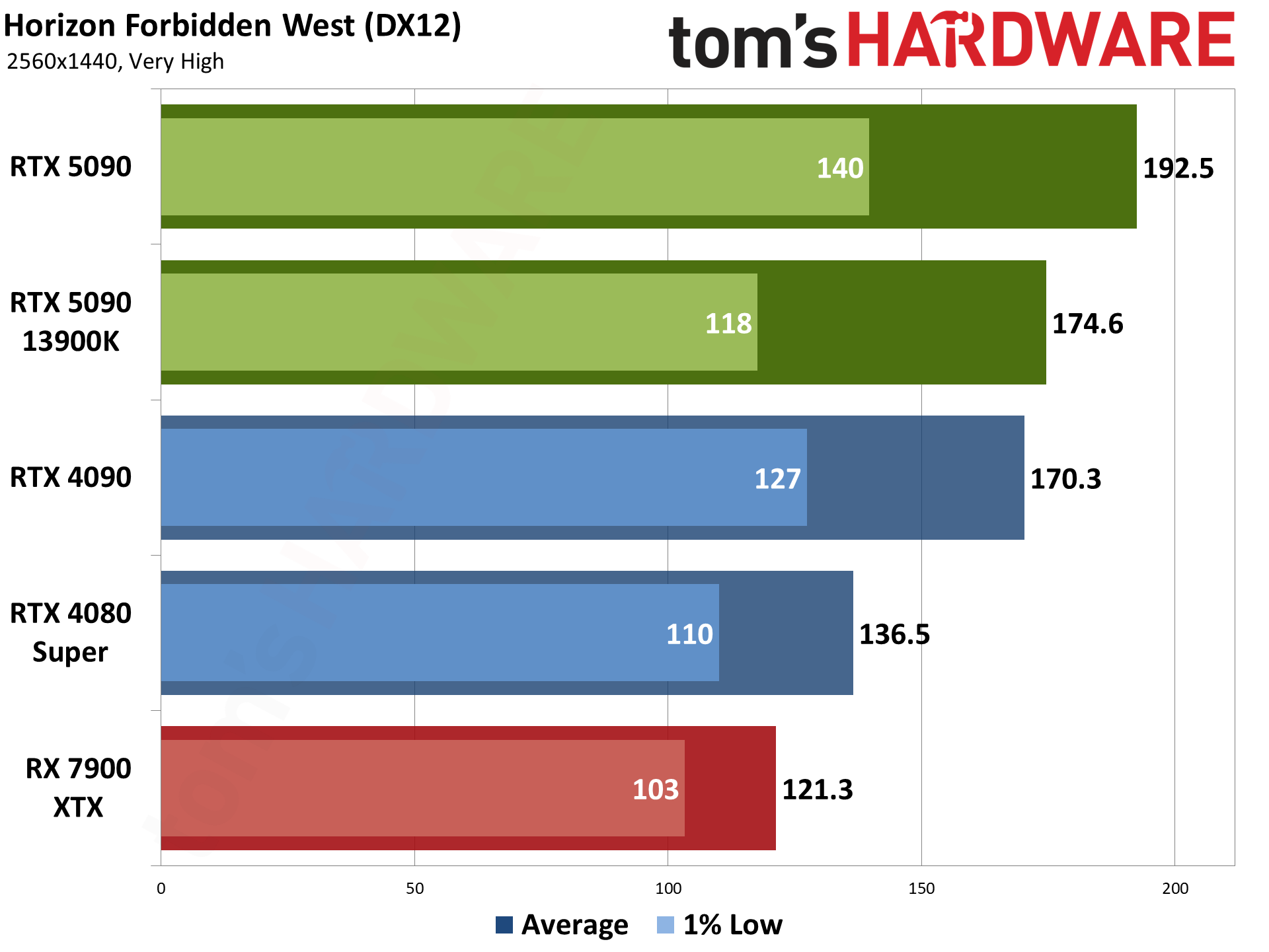

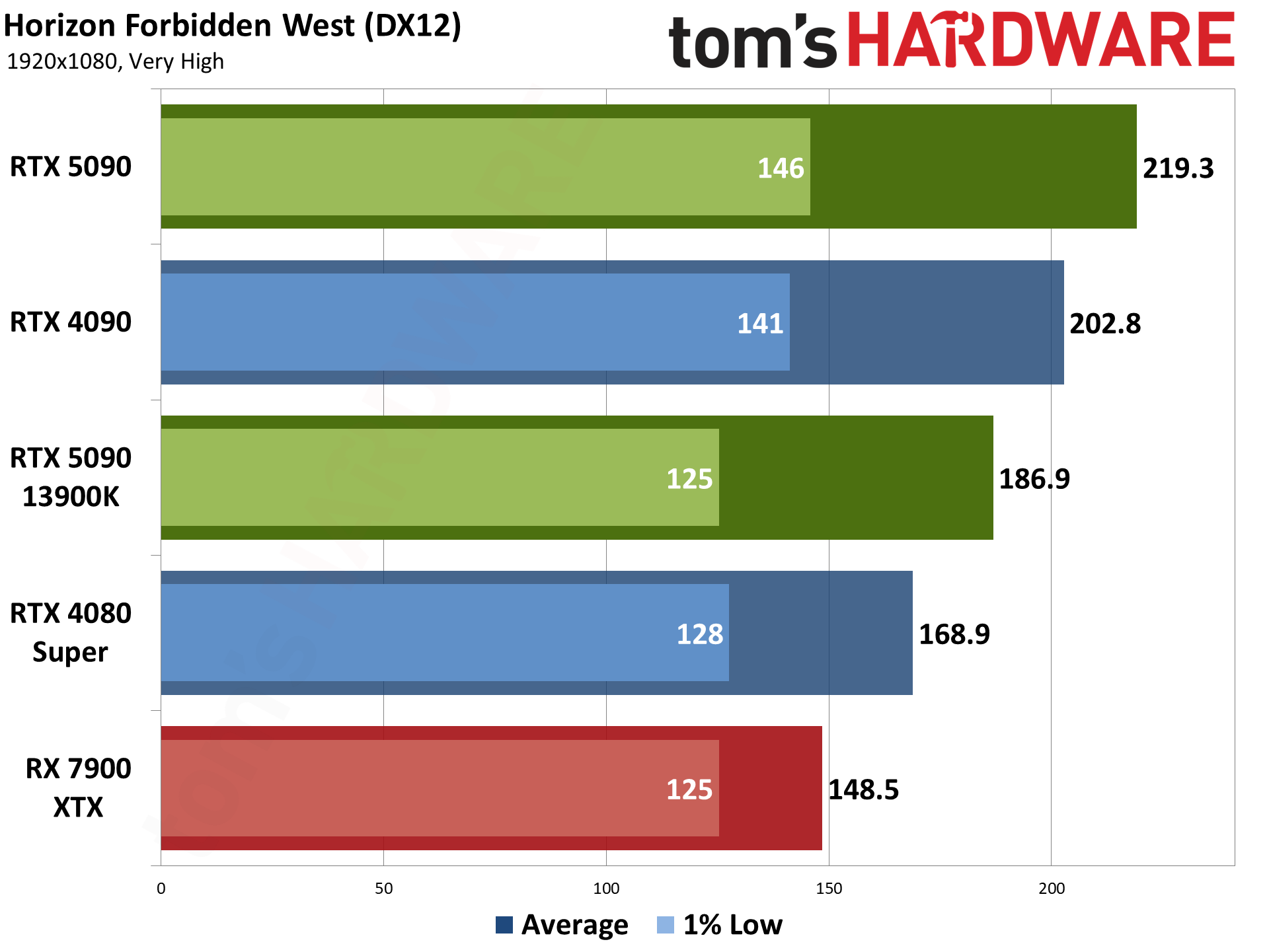

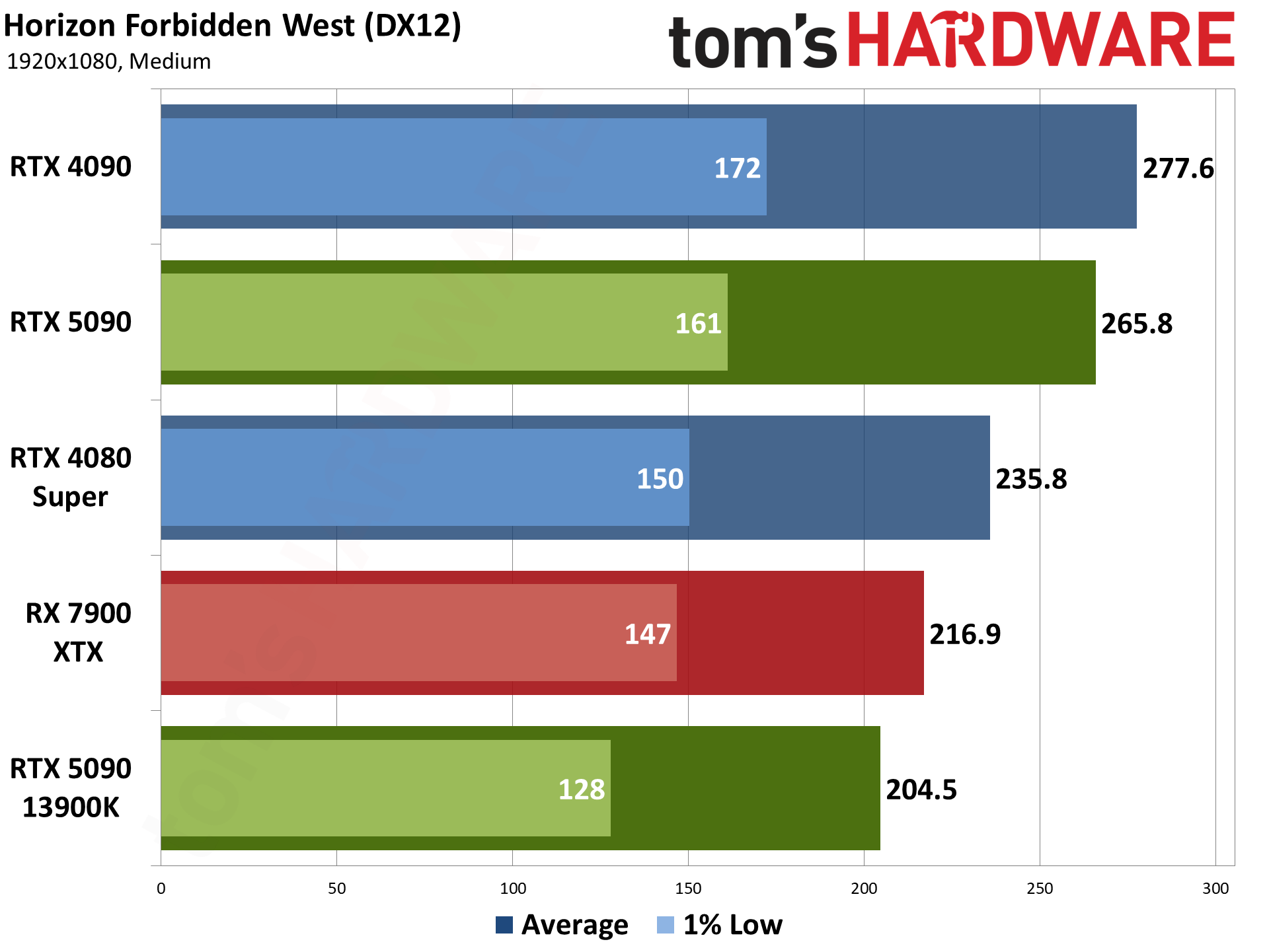

Horizon Forbidden West is another two-year-old PlayStation port, using the Decima engine. The graphics are good, though I've heard from at least a few people who think it looks worse than its predecessor, with excessive blurriness being a key complaint. But after using Horizon Zero Dawn for a few years, it felt like a good time to replace it.

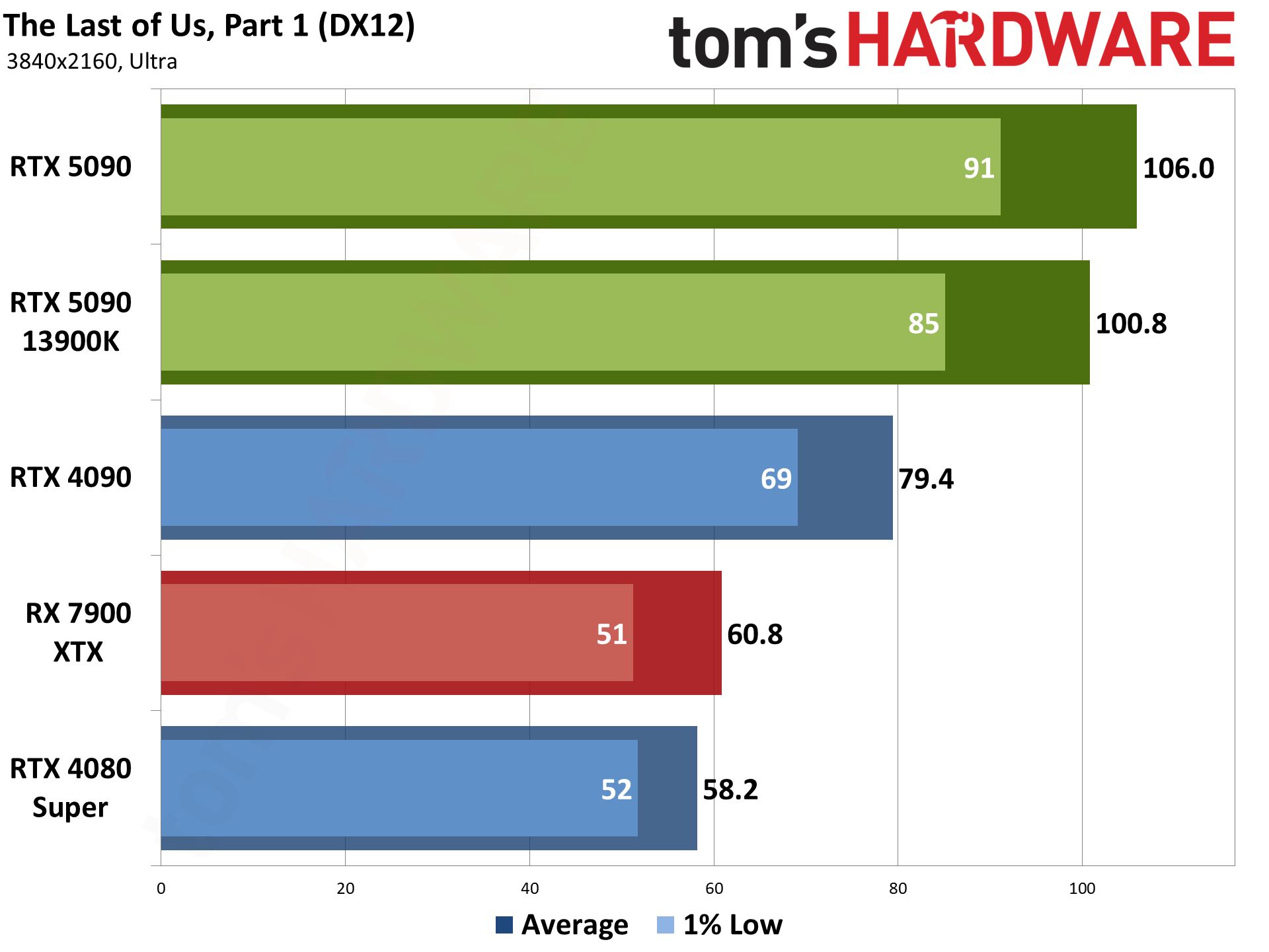

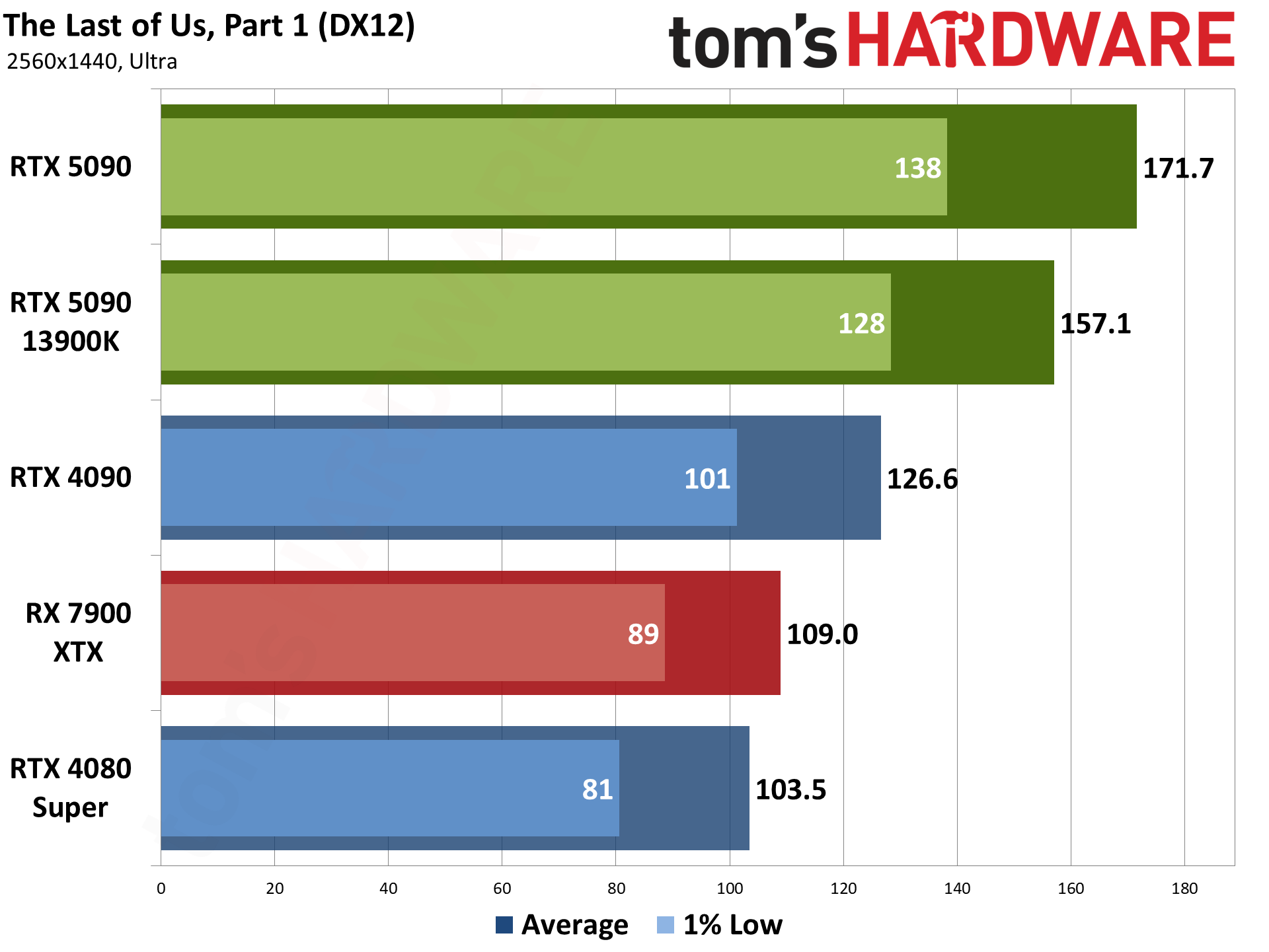

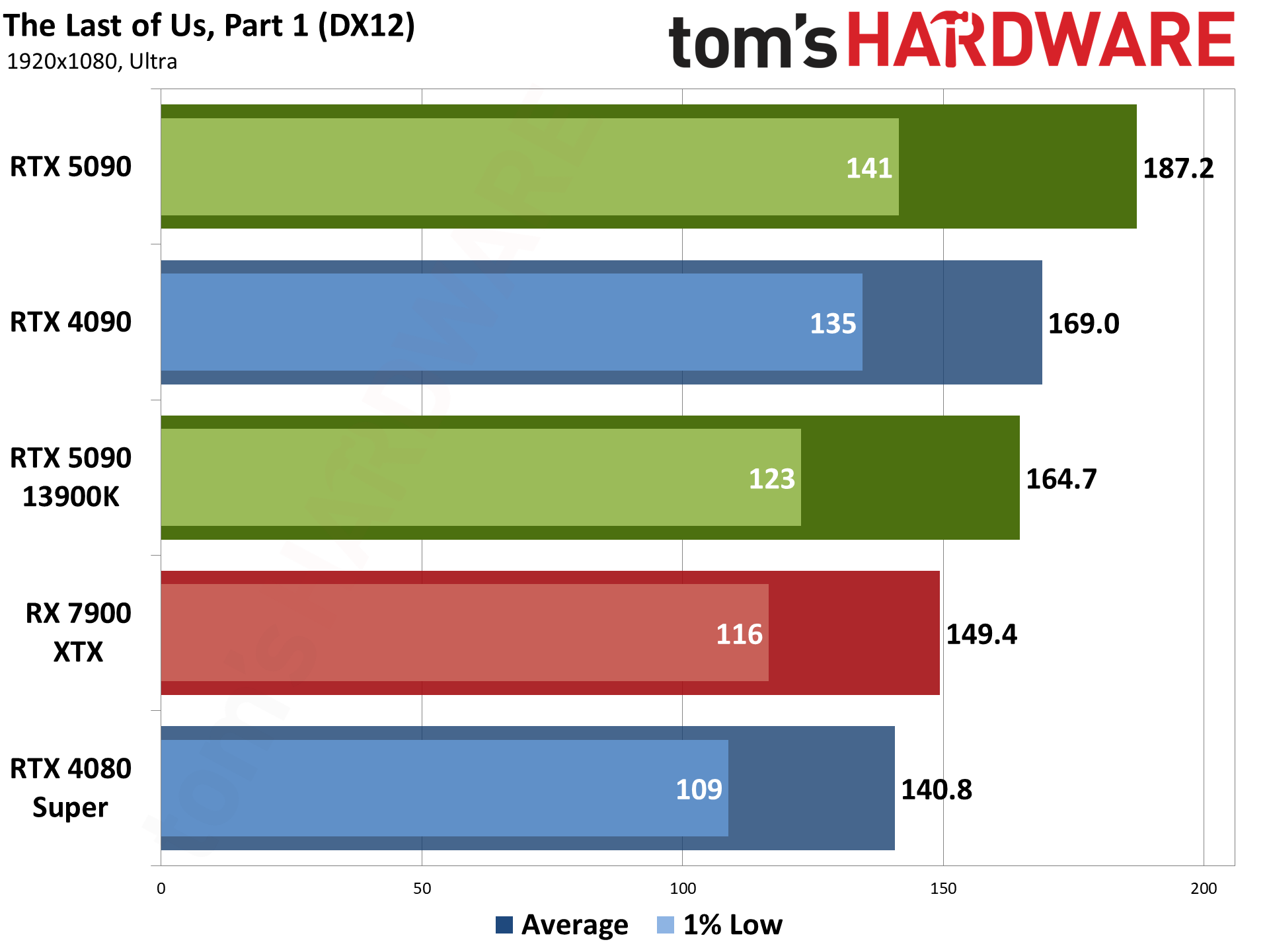

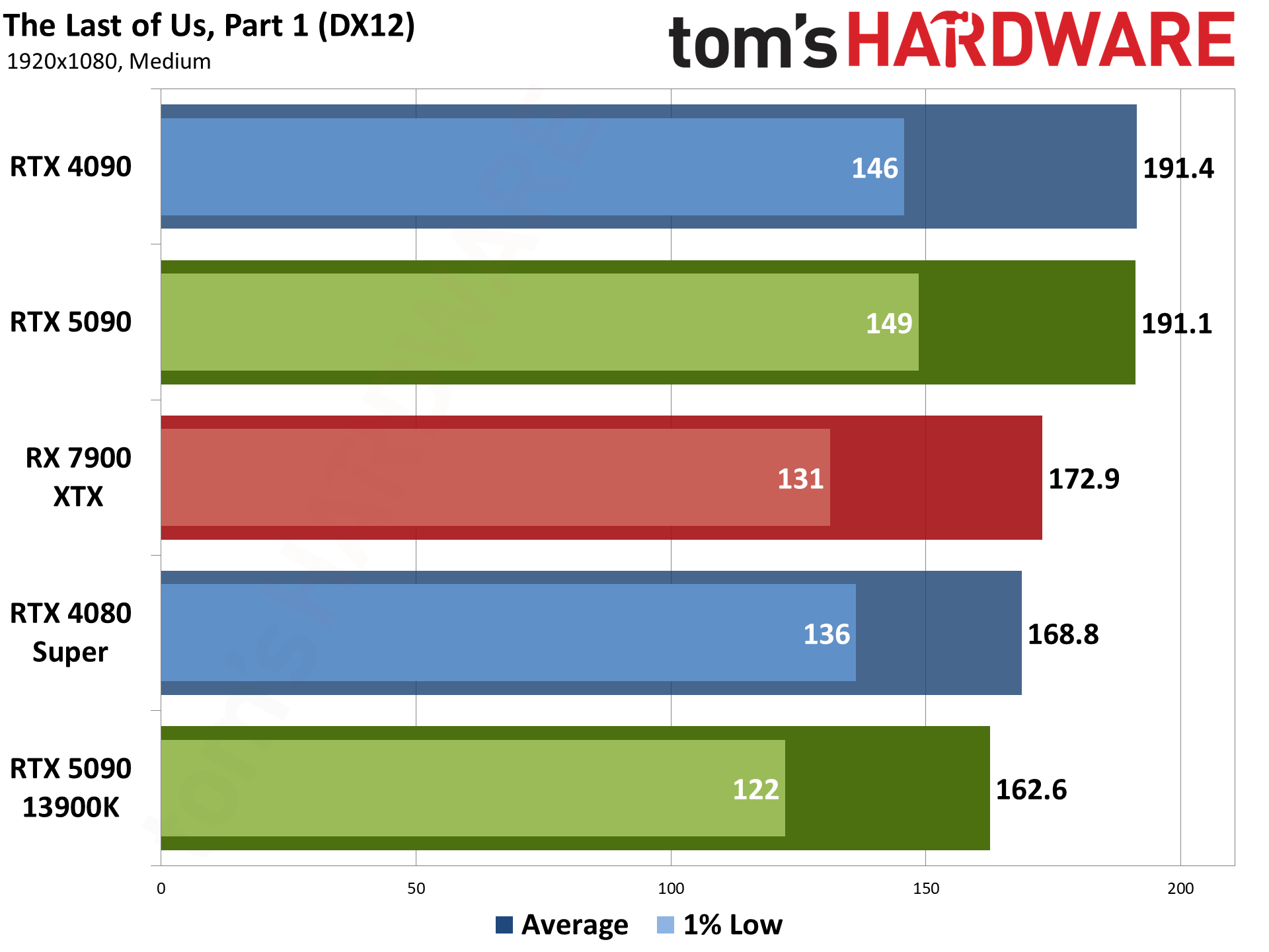

The Last of Us, Part 1 is another PlayStation port, though it's been out on PC for about 20 months now. It's also an AMD promoted game, and really hits the VRAM hard at higher quality settings. But if you have 32GB like the RTX 5090, it's not a problem.

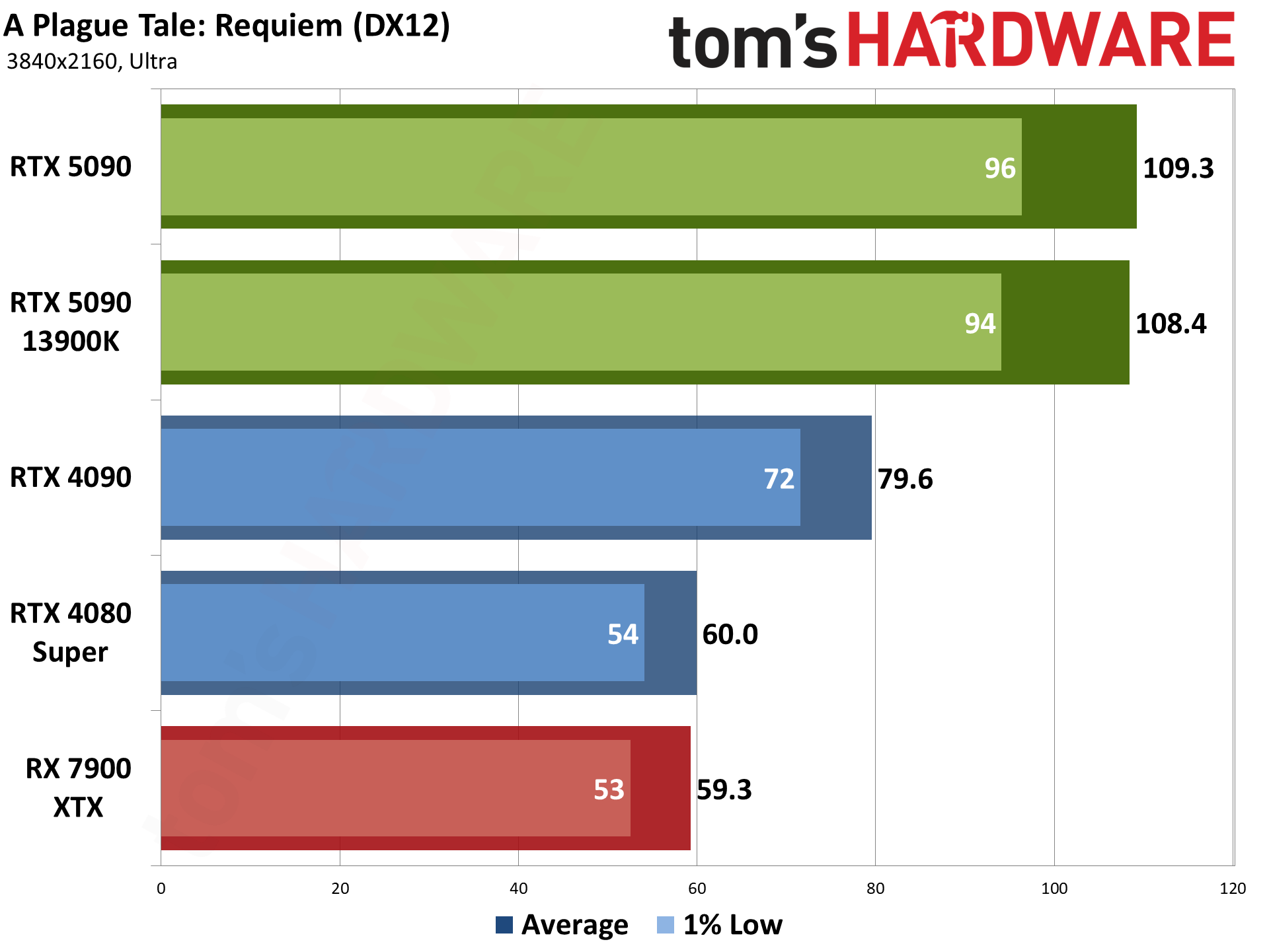

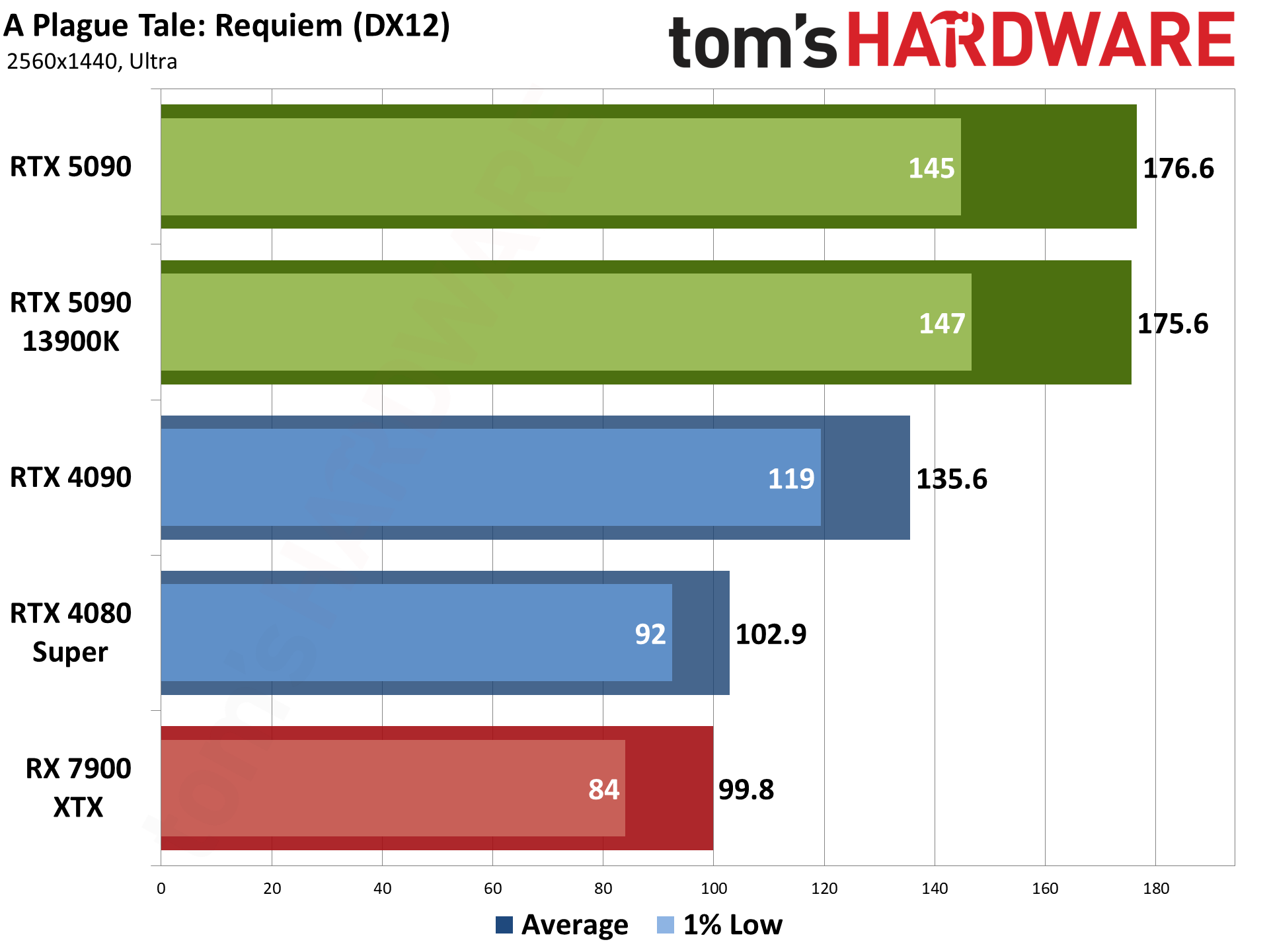

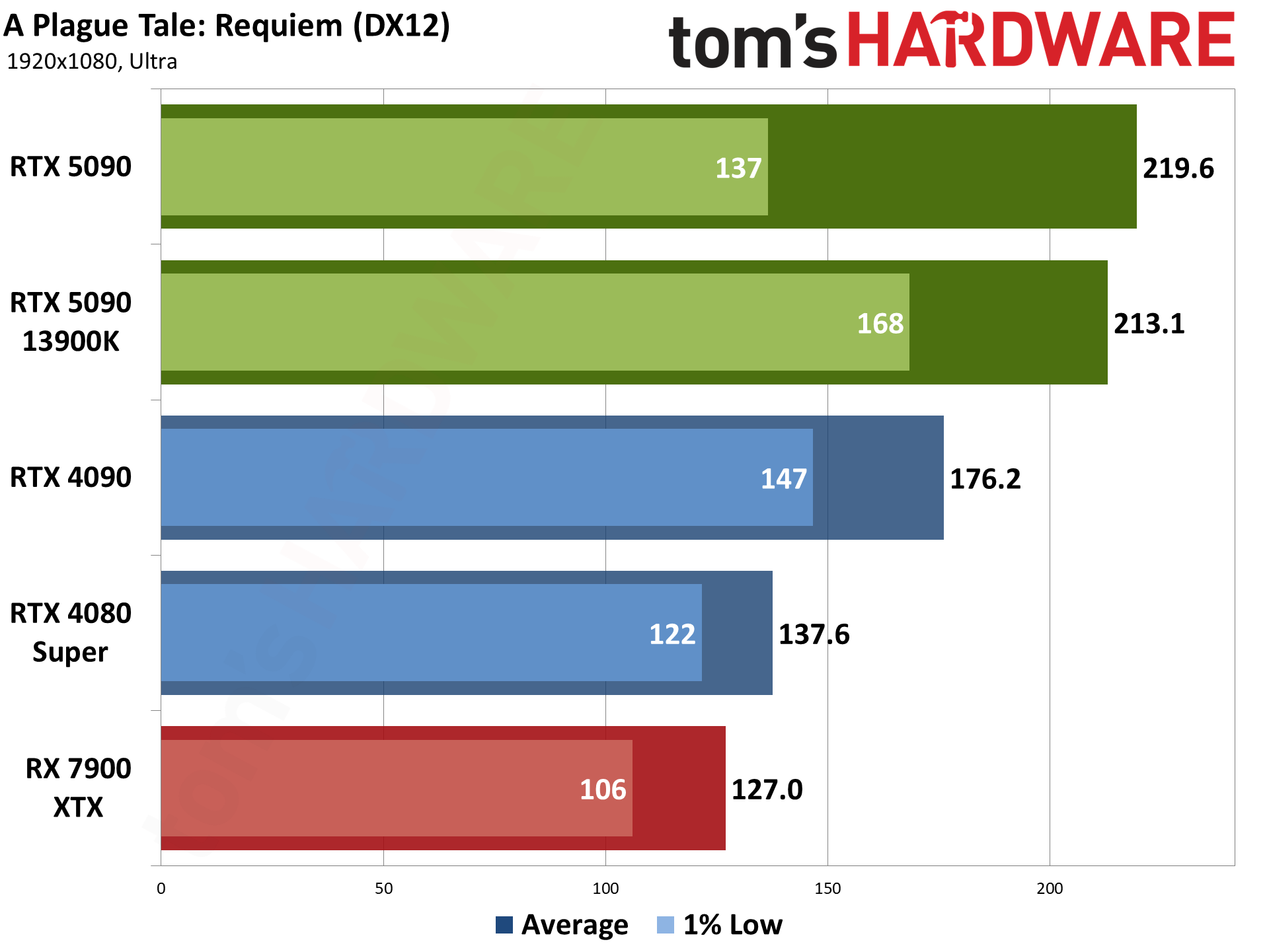

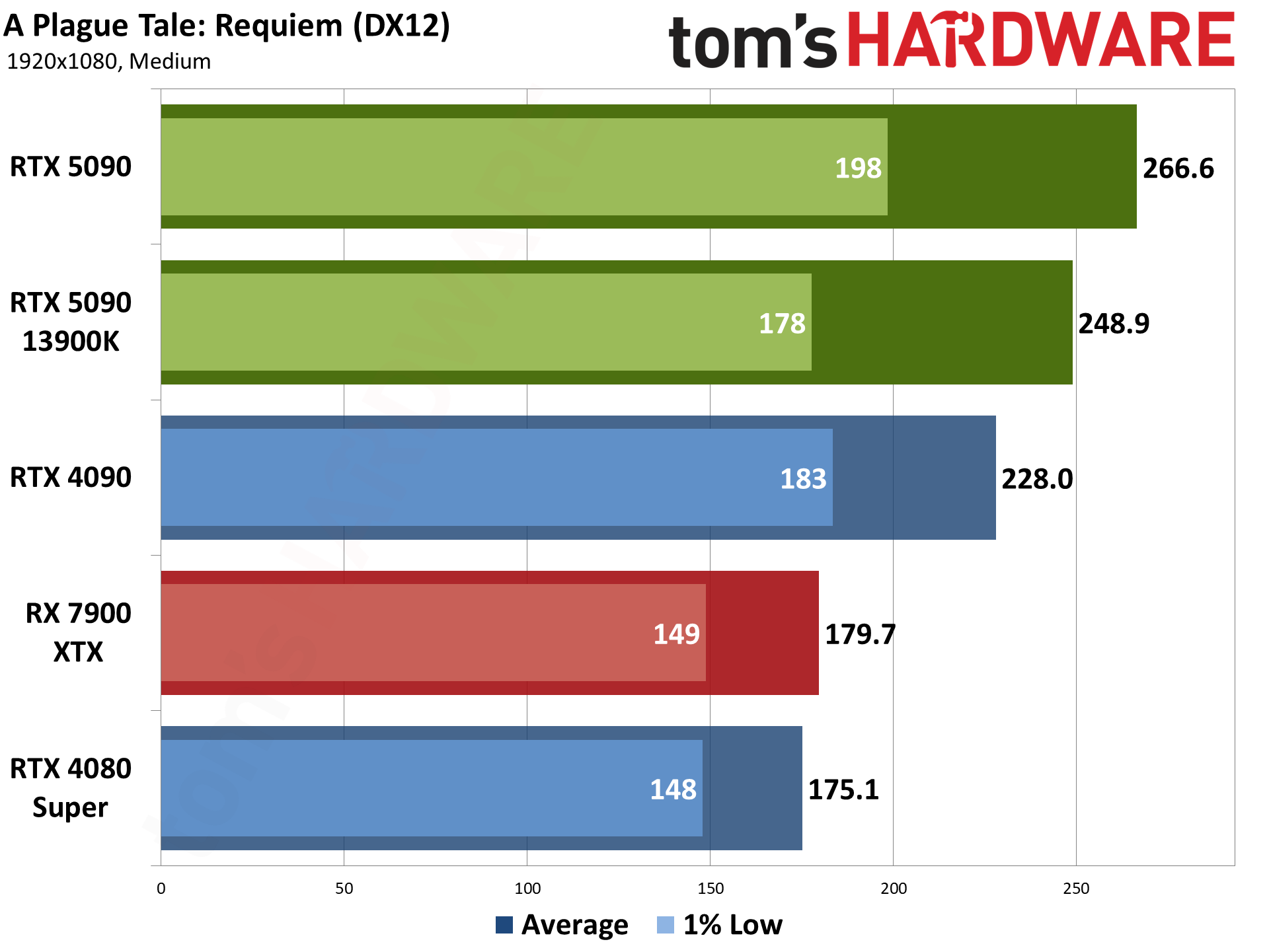

A Plague Tale: Requiem uses the Zouna engine and runs on the DirectX 12 API. It's an Nvidia promoted game that supports DLSS 3, but neither FSR nor XeSS. (It was one of the first DLSS 3 enabled games as well.) It has RT effects, but only for shadows, so enabling them doesn't really improve the look of the game and tanks performance.

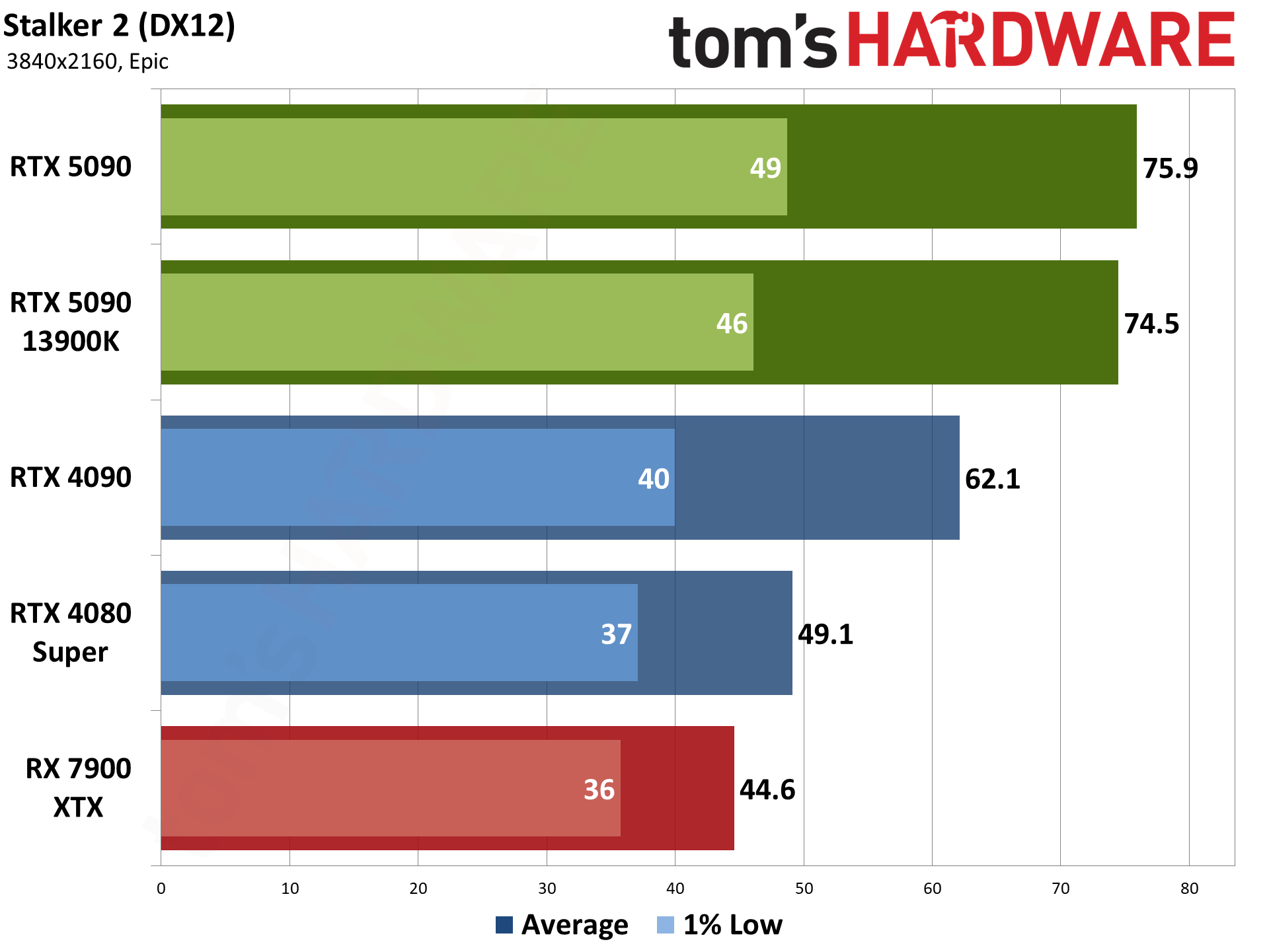

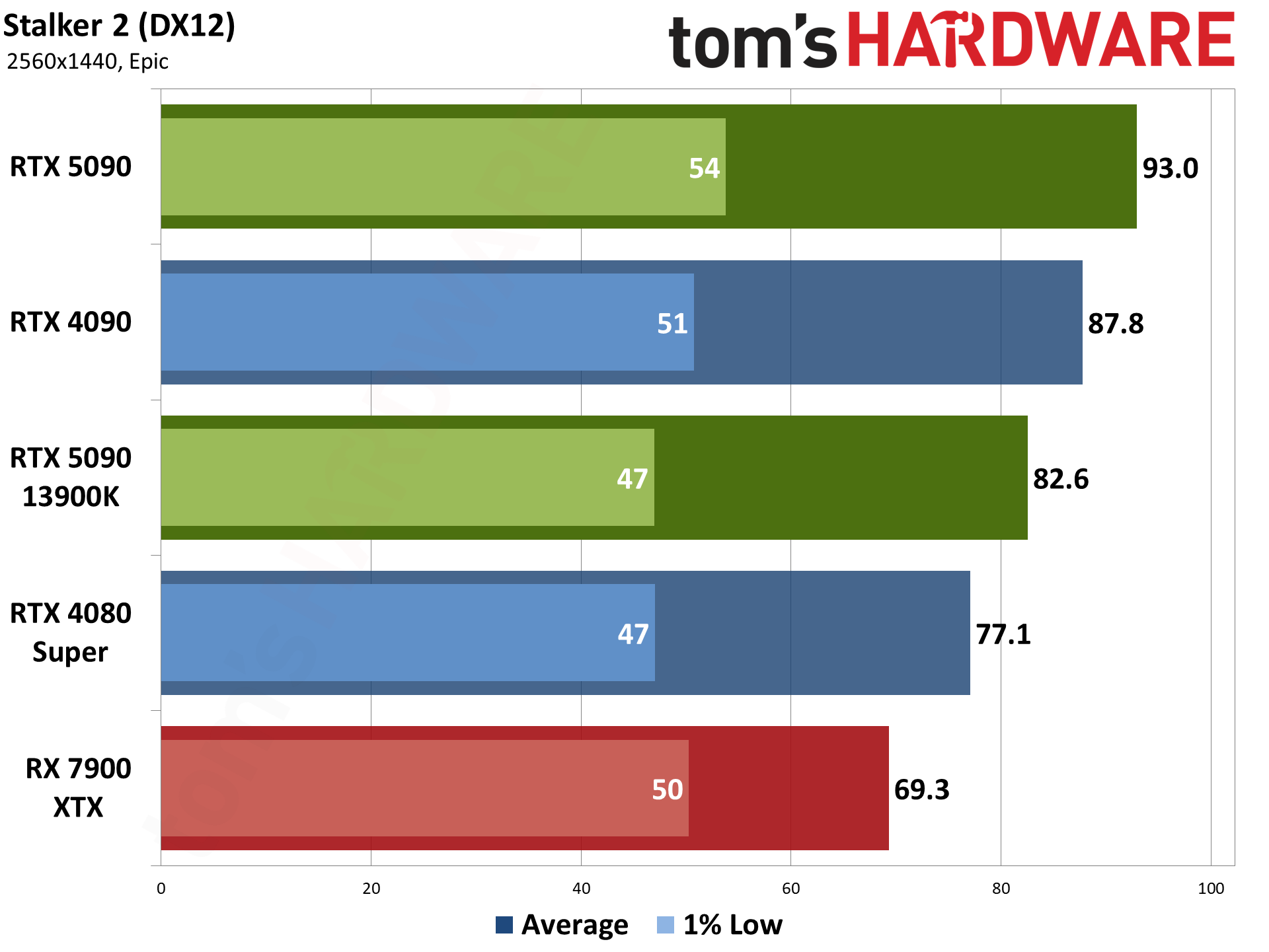

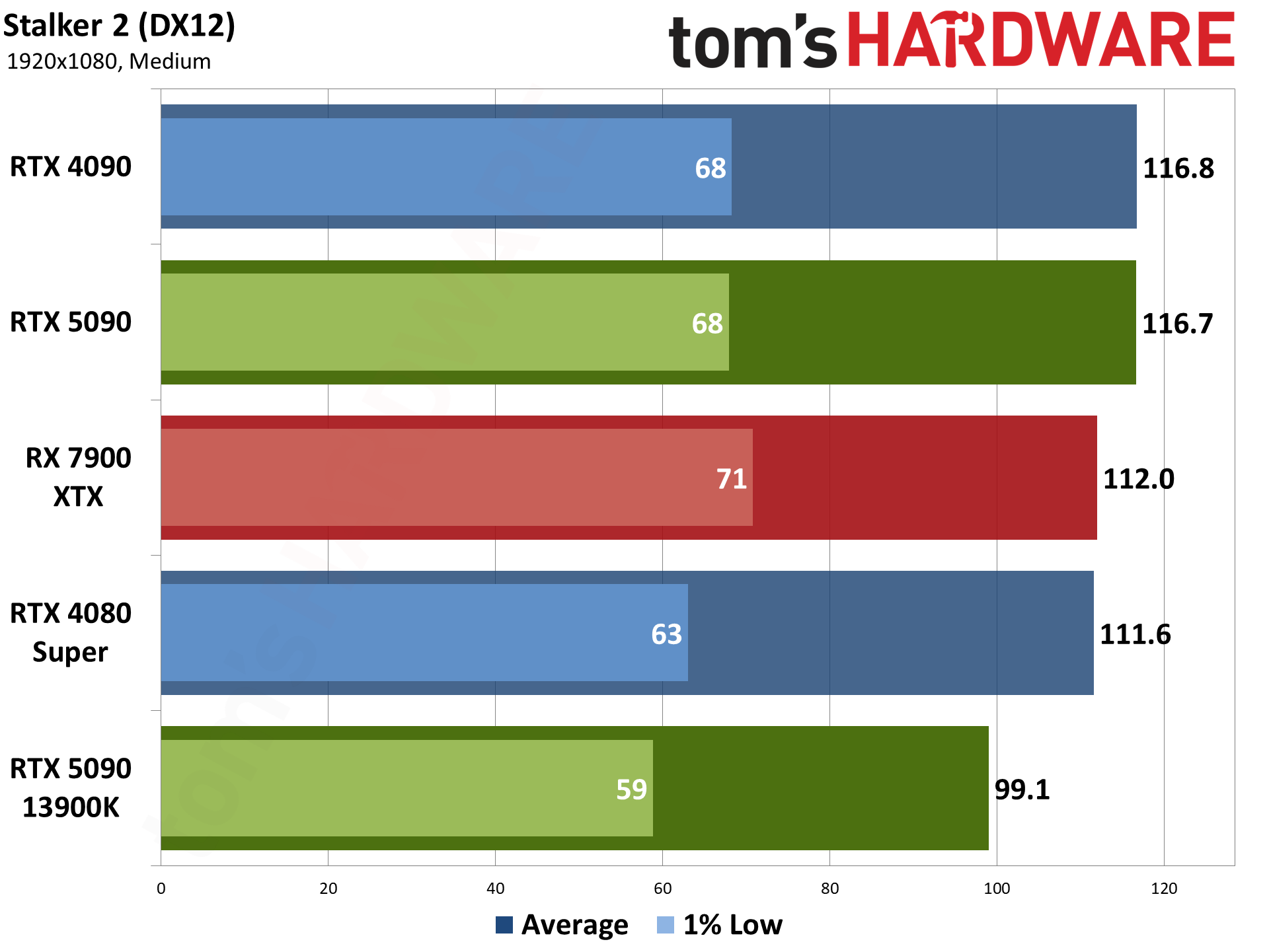

Stalker 2 is another Unreal Engine 5 game, but without any hardware ray tracing support — the Lumen engine also does "software RT" that's basically just fancy rasterization as far as the visuals are concerned, though it's still quite taxing. VRAM can also be a serious problem when trying to run the epic preset, with 8GB cards struggling at most resolutions.

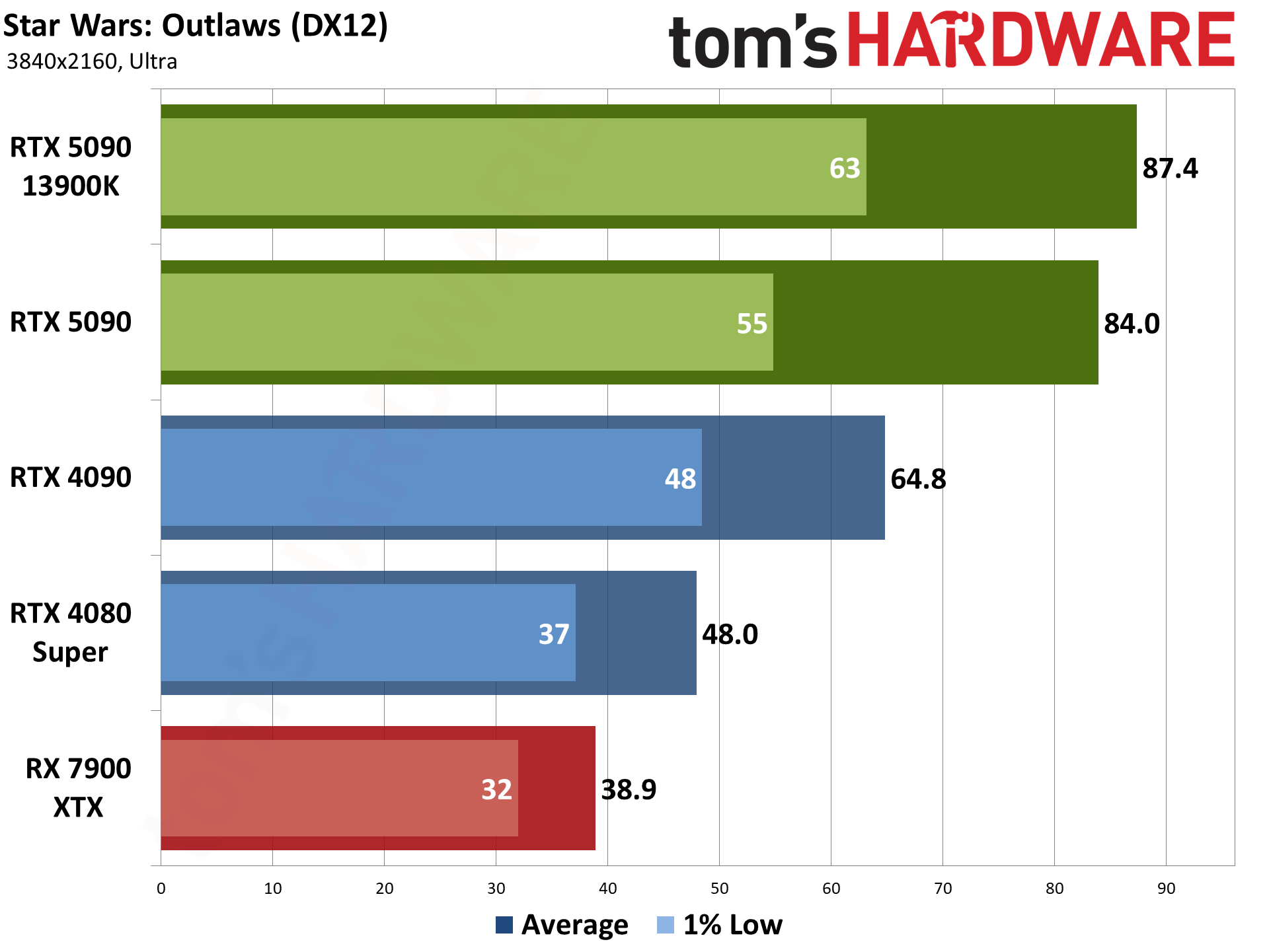

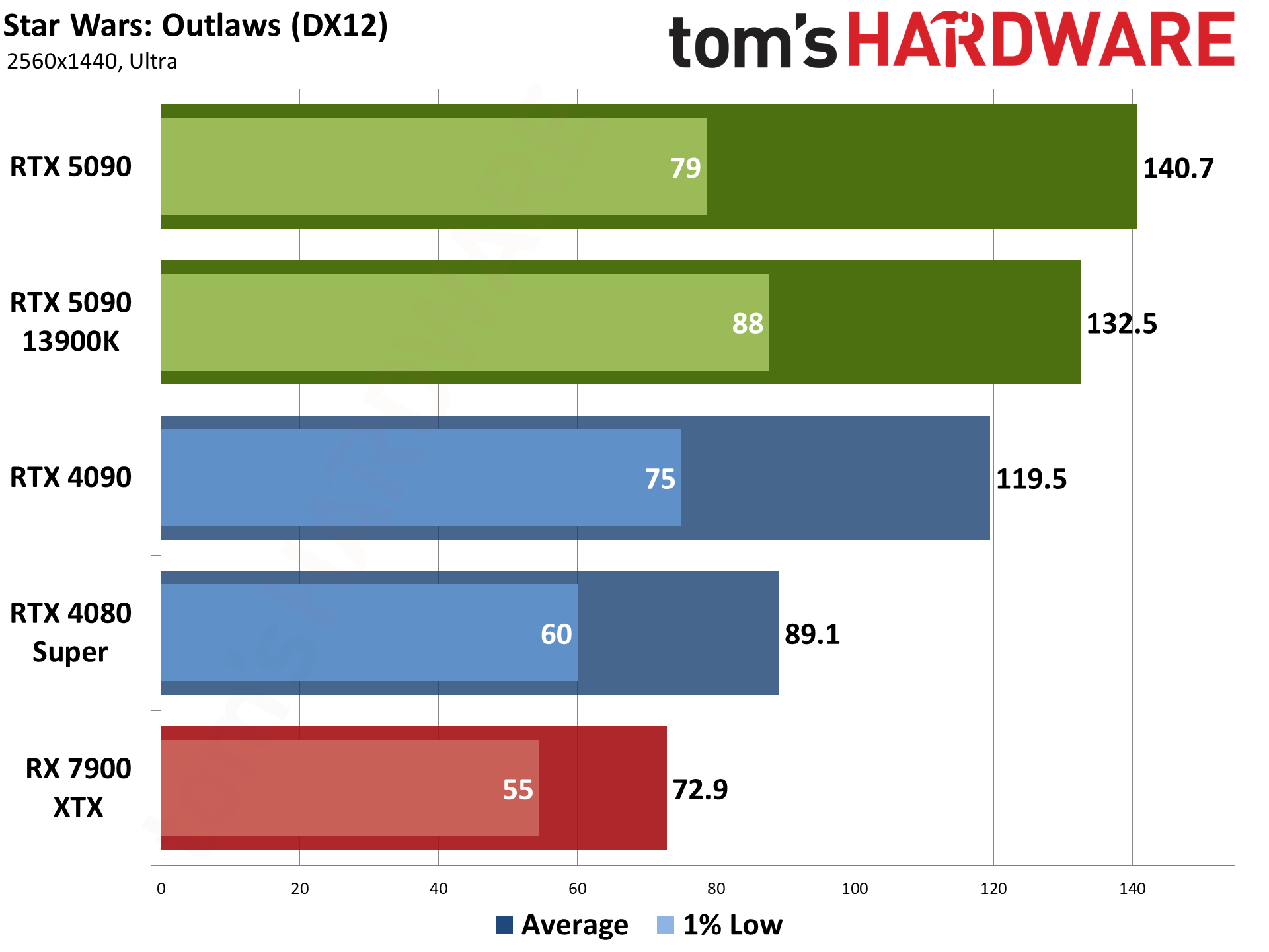

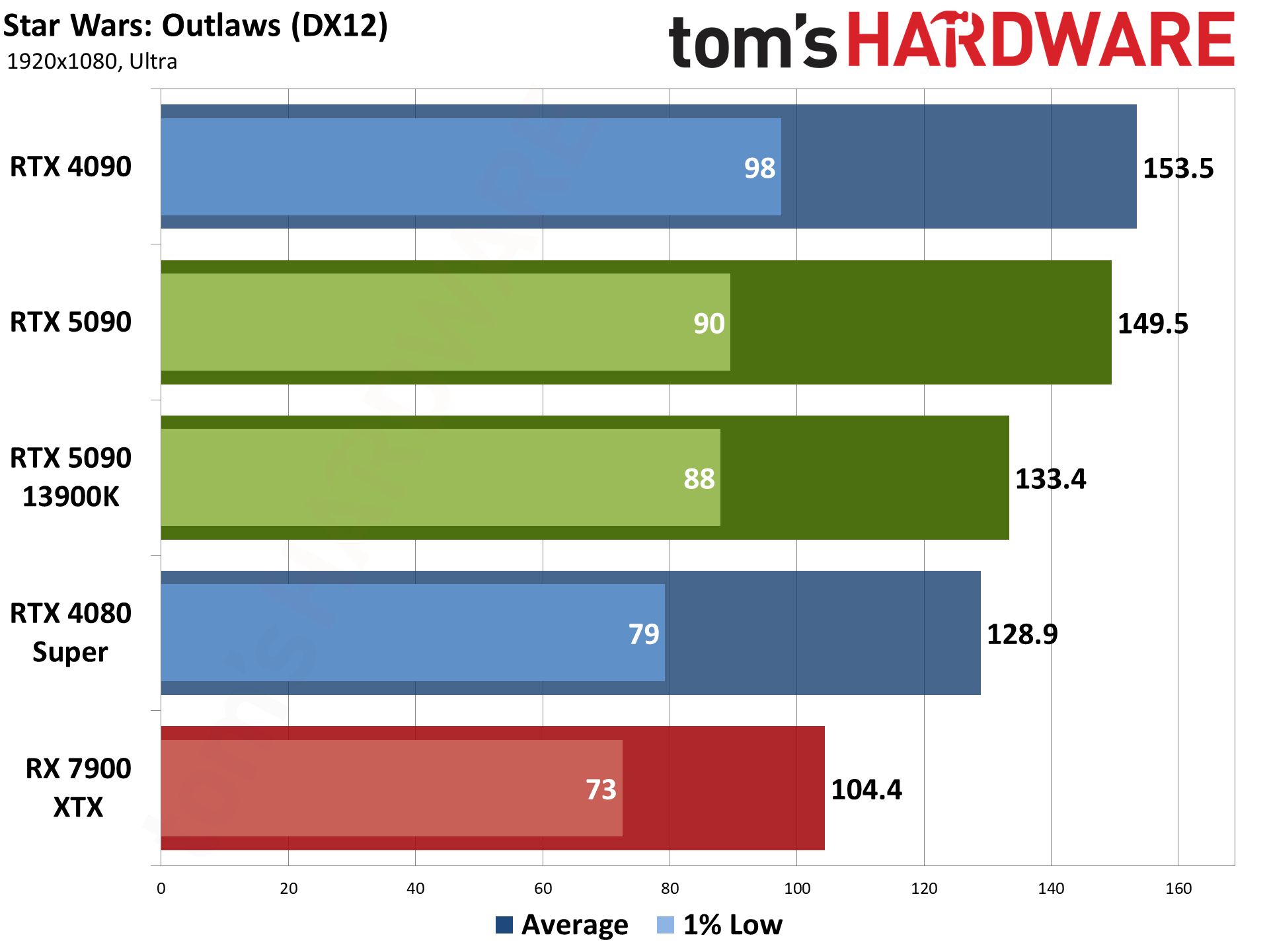

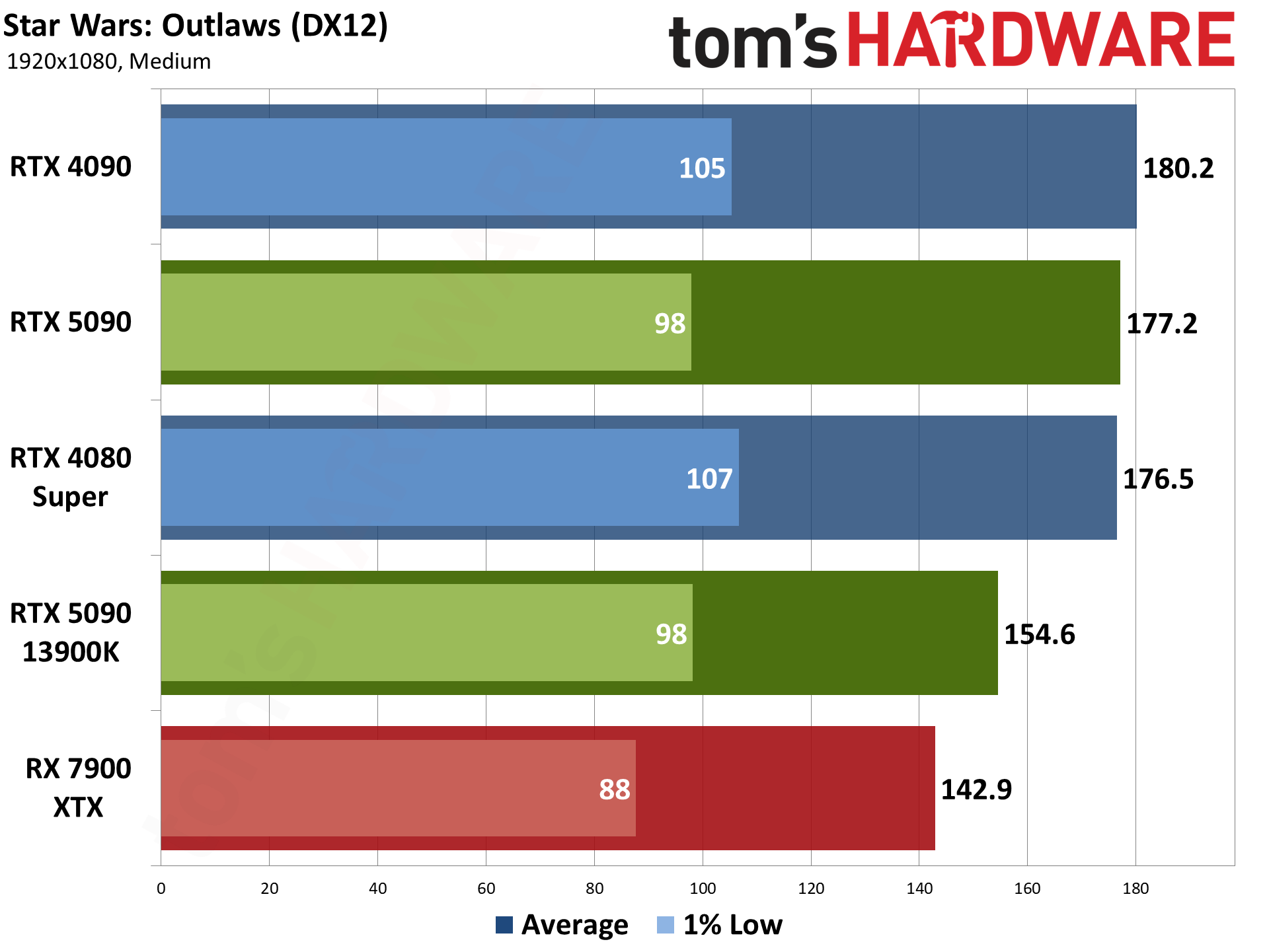

Star Wars Outlaws uses the Snowdrop engine and we wanted to include a mix of options. It also has a bunch of RT options, though our baseline tests don't enable ray tracing. We'll look at RT performance in our supplemental testing.

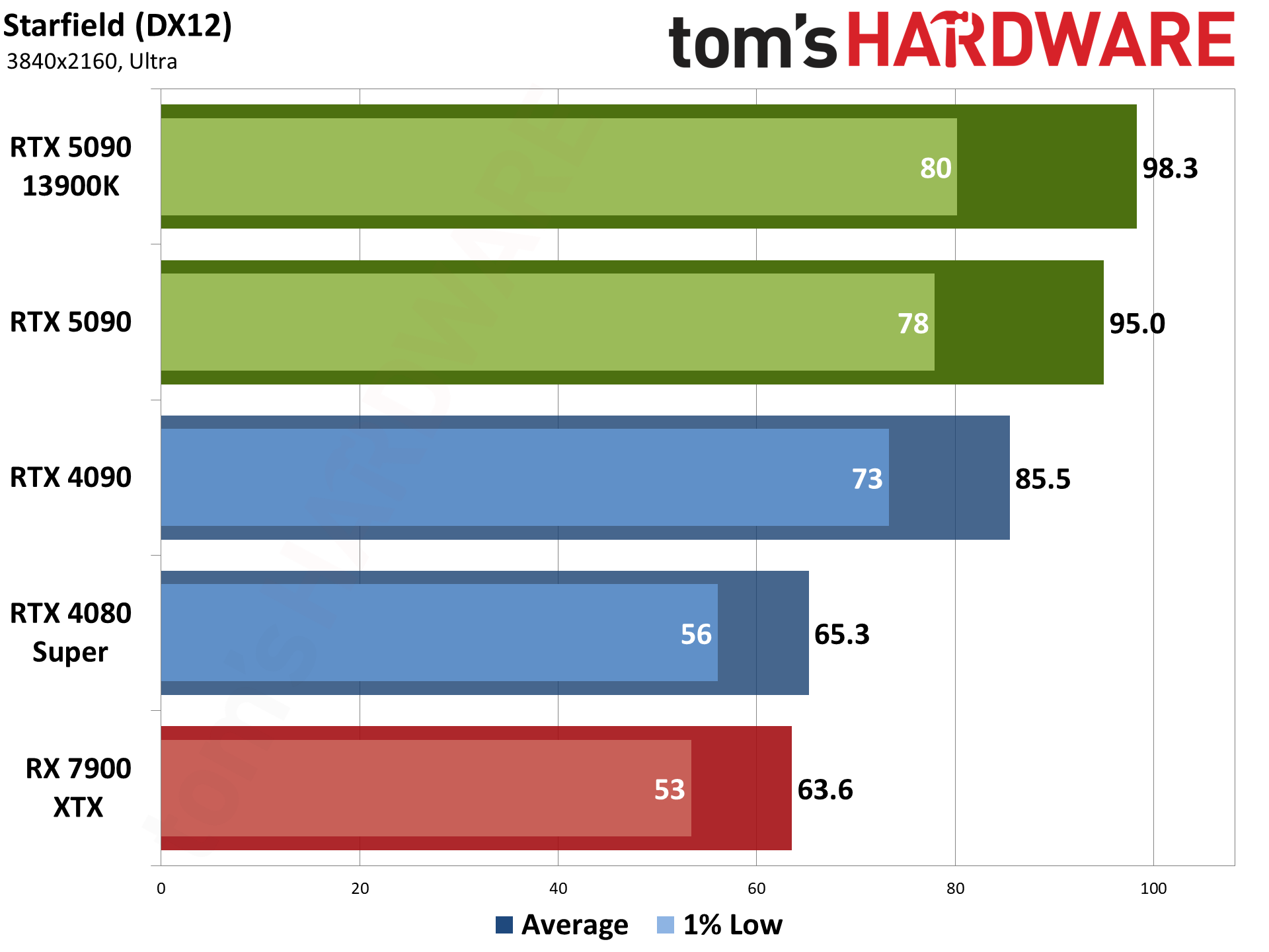

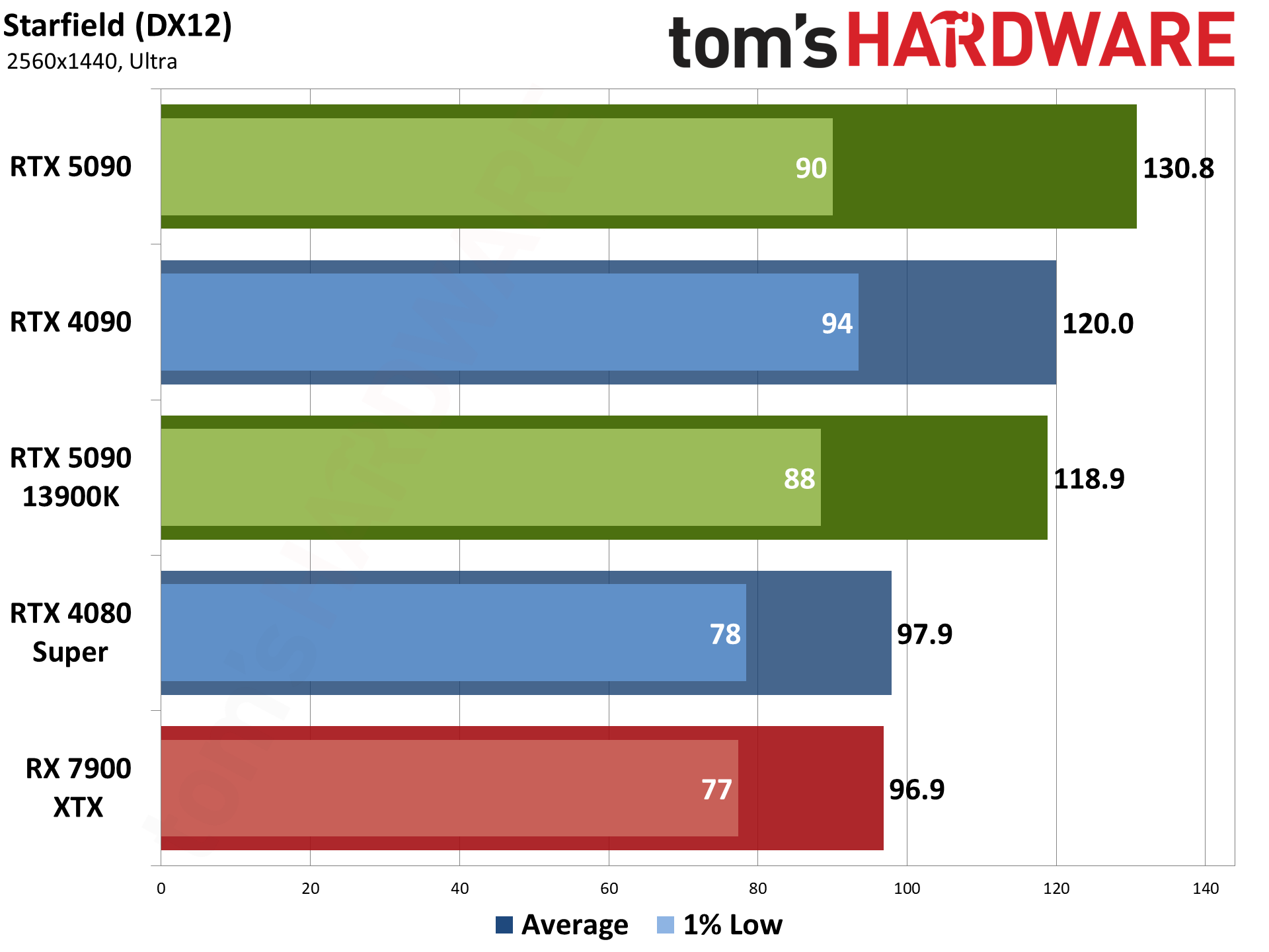

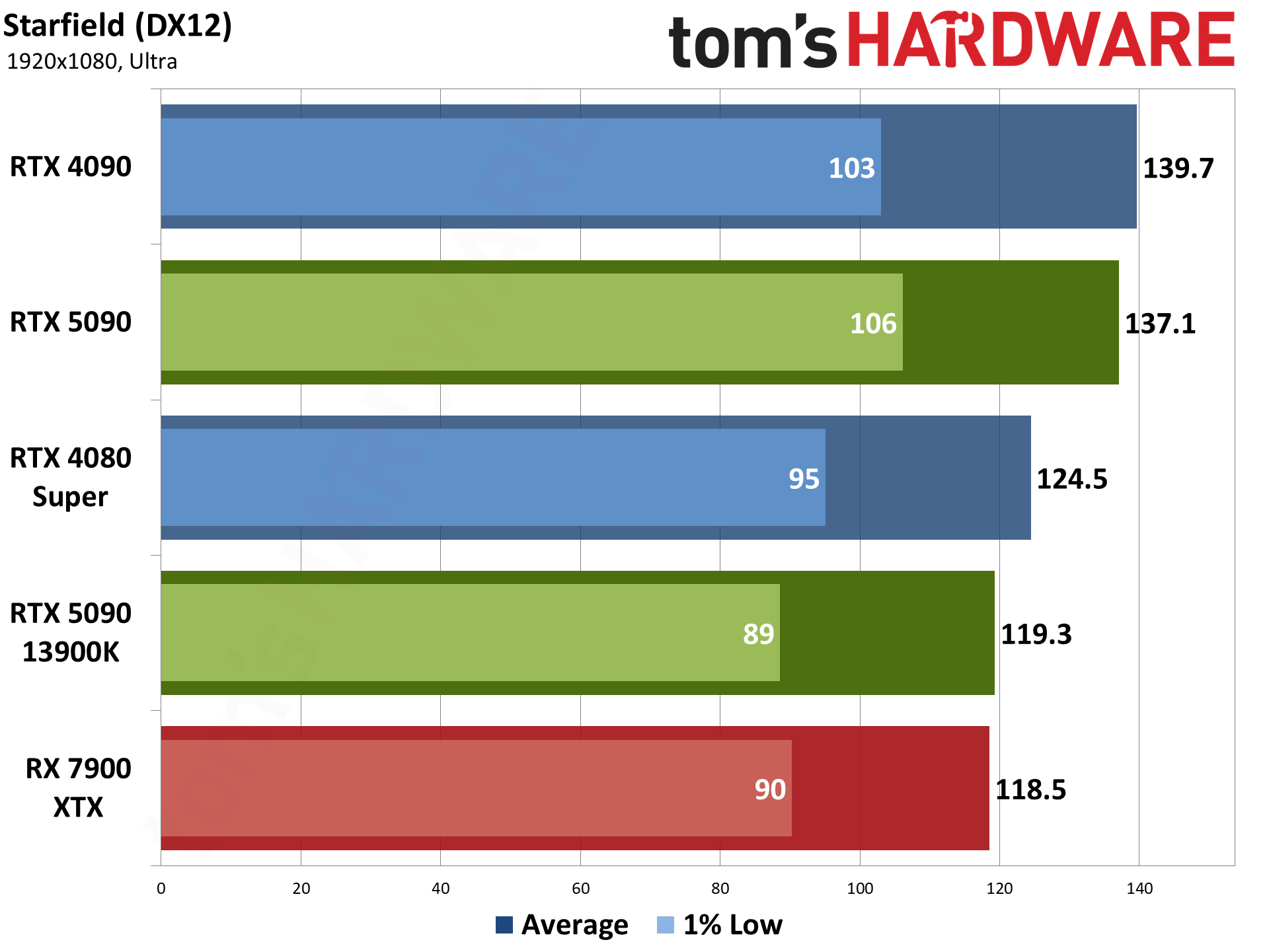

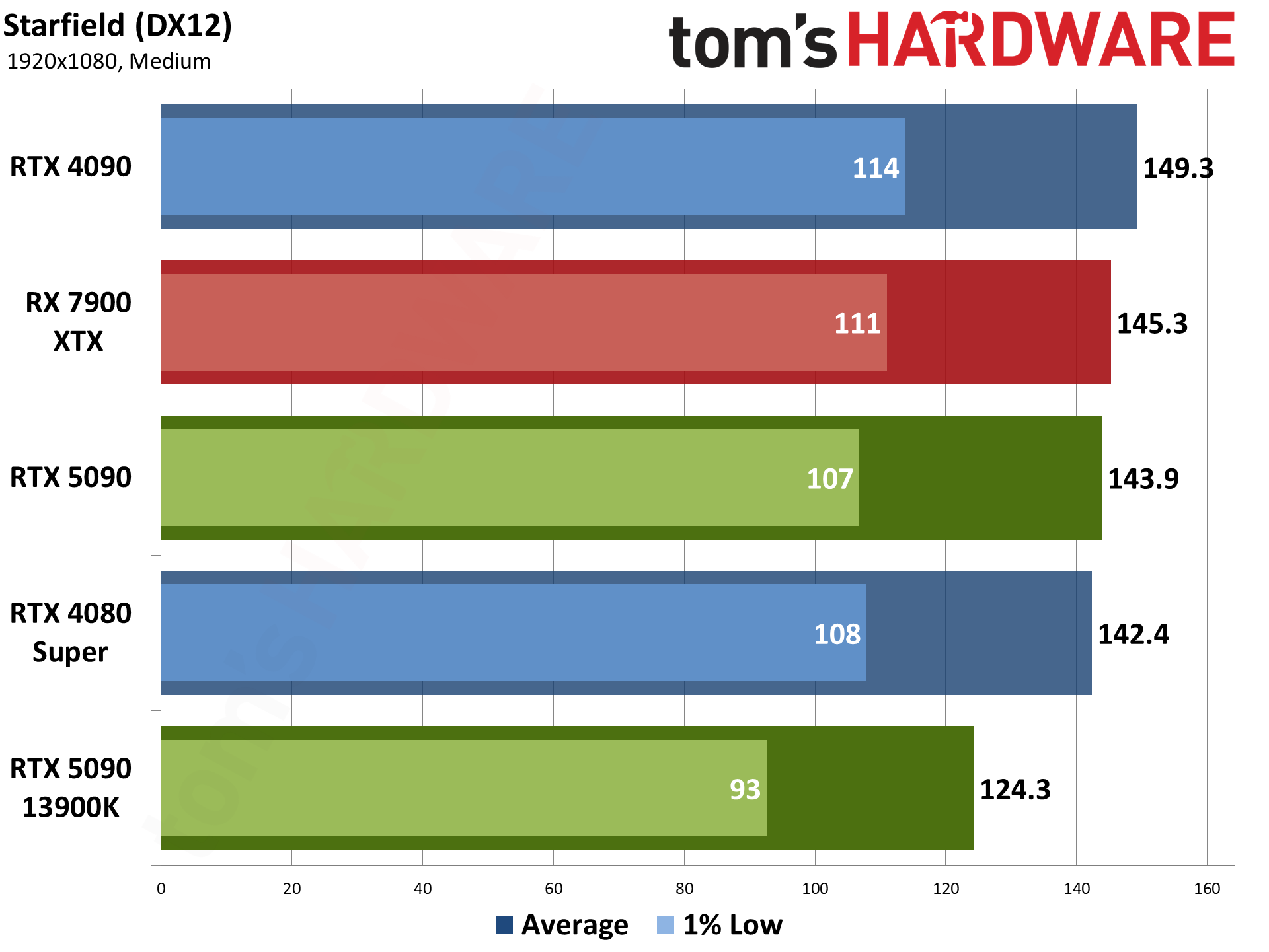

Starfield uses the Creation Engine 2, an updated version of the Bethesda engine that previously powered the Fallout and Elder Scrolls games. It's another fairly demanding game, and we run around the city of Akila, which is one of the more taxing locations in the game.

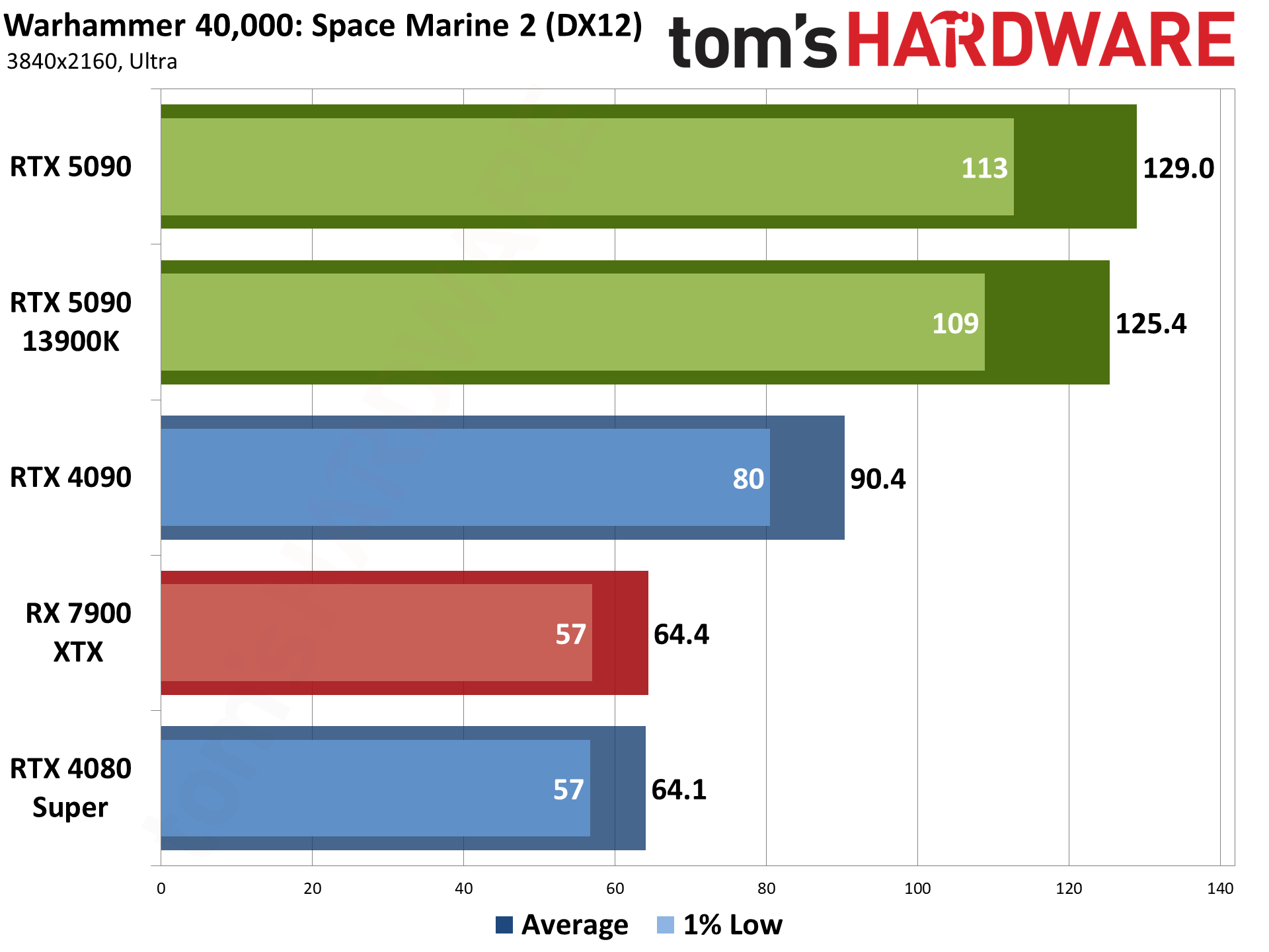

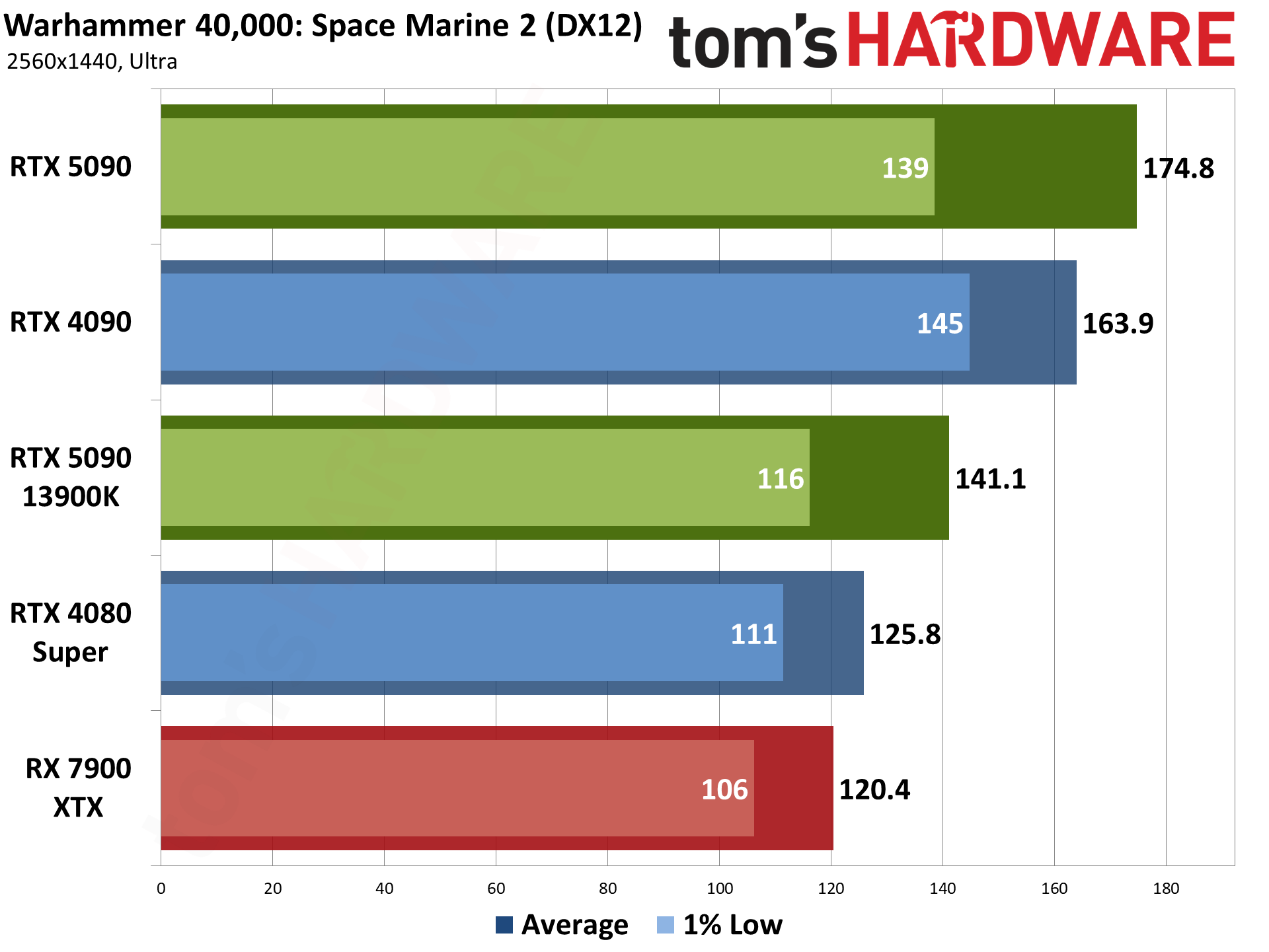

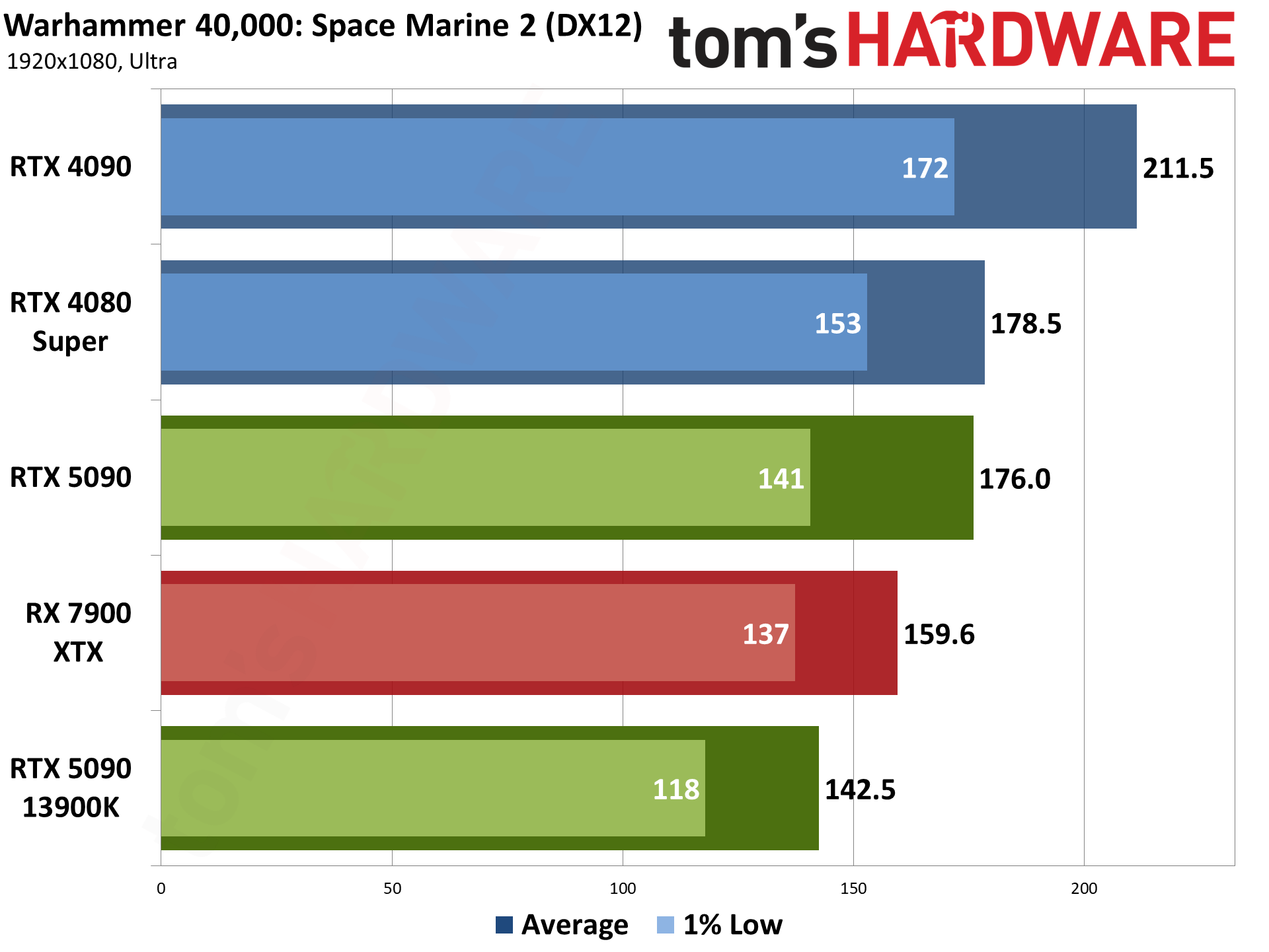

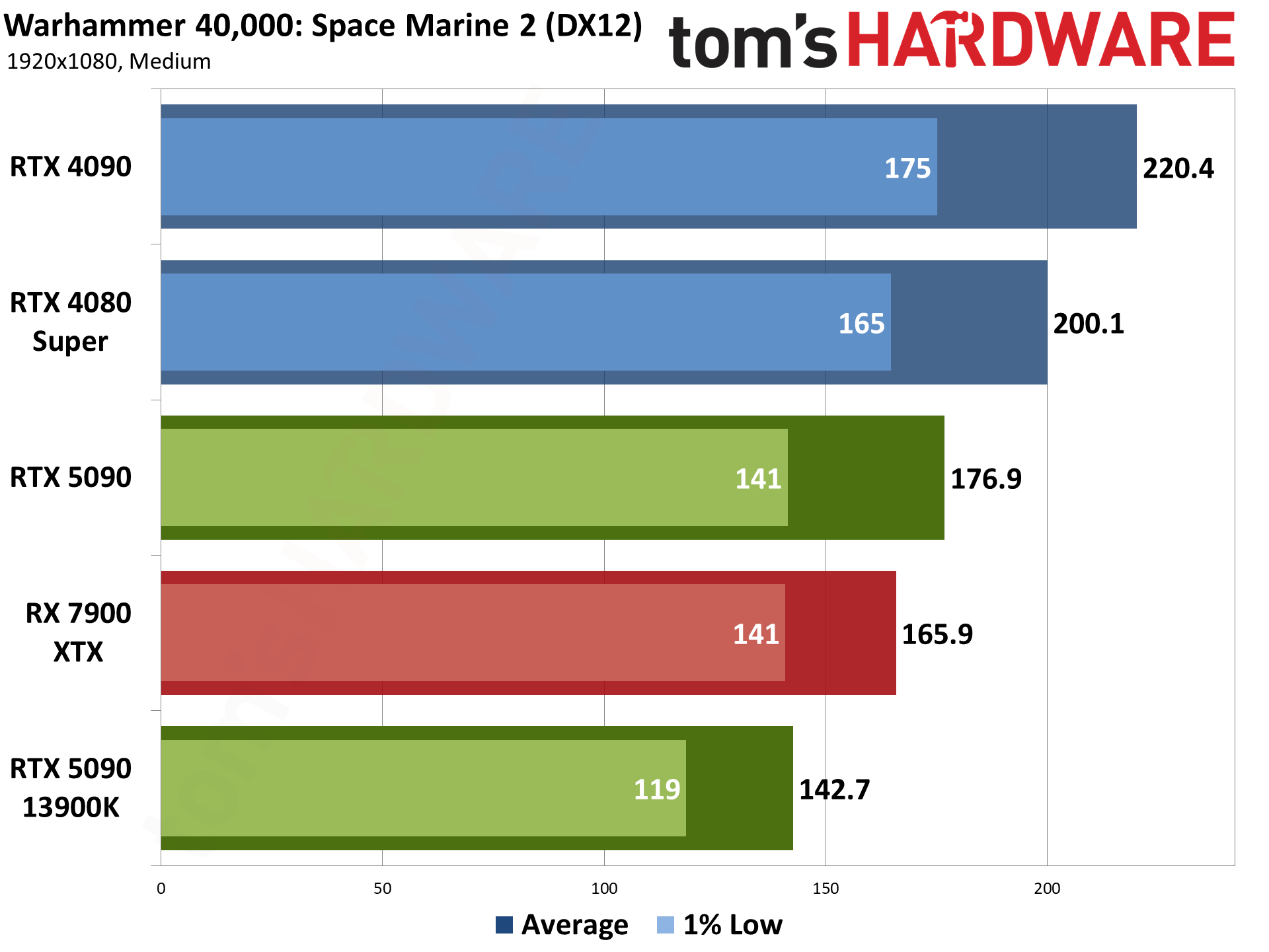

Wrapping things up, Warhammer 40,000: Space Marine 2 is yet another AMD promoted game. It runs on the Swarm engine and uses DirectX 12, without any support for ray tracing hardware. We use a sequence from the introduction, which is generally less demanding than the various missions you get to later in the game but has the advantage of being repeatable and not having enemies everywhere.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

- Crazyy8: Quick look, raster performance seems a bit underwhelming. Weird that the RTX 5090 can be slower than the 4090 (in niche cases). Wasn't going to buy the 5090 anyway, too expensive for a plebe like me. Looking forward to DLSS 4 and how amazing (or not) it'll be.

- Gururu: Amazing but expected as far as I am concerned. I don't think there is a lot of need to compare against anything including AMDs new cards. It was brilliant to pair with the 13900 with crazy interesting results. Will read a few more times to glean more details. (y)

- Admin said: "We also tested the RTX 5090 on our old 13900K test bed, with some at times interesting results. Some games still seem to run faster on Raptor Lake, though overall the 9800X3D delivers higher performance. The margins are of course quite small at 4K ultra."

  For me, as a 13900K owner, that's a consolation :cool:

- Gaidax: Okay, that IS a sick cooler that actually manages to do the job.

  I bet aftermarket 4 slot monstrocities will do better, but for 2 slots 600w that's insane.

- m3city: Products like this should receive 3stars max. Great performance but at what cost? Is it the right direction that power draw increases at each iteration? Is it worth to chase max perf each time? For me it would be perfect if 5000 series stayed at same TDP as previous ones - meaning better design, better gpu - with understandably lower increase of perf compared to 4000. And then, 6000 series to have even reversed direction: higher perf with drop of TDP.

  And secondary, how come 500W gpu can be air cooled, but nerds on forums will claim you absolutely NEED water cooling for 125W ryzen, cause "highend"?. Yeah, i know 125W means more actual draw.

- redgarl: Okay Jarred... you are shilling at this point.

  4.5 / 5 for a 2000$ GPU that barely get 27% more performances?

  While consuming 100W more than a 4090?

  And offering the same cost per frame value as a 4090 from 2 years ago?

  Flagship or not, this is horrible.

  Not to mention the worst uplift from an Nvidia GPU ever achieved... 27%...

  https://i.ibb.co/4fks6Gt/reality.jpg

- redgarl: Did you bench into an open bench or a PC case? I am asking because there is some major concerns of overheating because the CPU coolers is choked by the 575W of heat dissipation inside a closed PC Case. If you have an HSF for your CPU, then you are screwed.

  https://uploads.disquscdn.com/images/7a5bf4d586b20ffe0aa6281c57d419012a32cbdabd43b3e8050d2aa9a00d6cc1.png

- oofdragon: 20% better at 4K and 15% better at 2K, all that having 30% more cores and etc............... got it. Oh boy the 5080 and 5070 are sure going to disappoint a lot of people.

  The good news is the RX 9070 will bring 4070 Ti Super performance to the table around $500 including the VRAM, ray tracing and dlss image quality. AMD will prolly counter the multi frame gen nonsense with something like the LSFG 3.0 is doing and smart buyers will finally have a good GPU to replace their 3080 or 6700 XT.

- vanadiel007: They should have given it code name sasquatch, because that's the chance you will be seeing these sell for $2,000 in the coming months.

  More like $3,000 and a lot of luck needed to find one in stock.

  I pass on it.

- YSCCC: a real space heater inside the case, and extremely expensive with not great raw performance increase... sounds like built for those with more money than brain or logic..