Nvidia RTX Pro 6000 up close: Blackwell RTX Workstation, Max-Q Workstation, and Server variants shown

300W to 600W GPU, depending on the model.

Nvidia announced the Blackwell RTX Pro 6000 GPUs during the GTC 2025 keynote. They use the same GB202 die that goes into Nvidia's RTX 5090 graphics card, but with significant changes elsewhere. There will be three variants of the RTX Pro 6000: the Blackwell Workstation Edition, Max-Q Workstation Edition, and Blackwell Server Edition.

The core specifications for the RTX Pro 6000 are the same across all three models. You get 188 SMs enabled, out of a potential 192 maximum from GB202. That's 10.6% more SMs, shader cores, tensor cores, RT cores, etc., relative to the RTX 5090. Clock speeds weren't given, but Nvidia does list up to 125 TFLOPS of FP32 compute via the shaders, and 4000 AI TOPS from the tensor cores. That works out to a boost clock of around 2.6 GHz, but that won't be the same for all three variants.
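
The clock estimate above can be reproduced with a quick back-of-the-envelope calculation. This is only a sketch: it assumes 128 FP32 shaders per SM (consistent with 24,064 shaders across 188 SMs), two floating-point operations per shader per clock (one fused multiply-add), and 170 enabled SMs on the RTX 5090. The function names are made up for illustration.

```python
# Rough check of the SM and boost clock figures.
# Assumptions (not stated in the article): 128 FP32 shaders per SM,
# 2 FLOPs per shader per clock (one FMA), and 170 SMs on the RTX 5090.

SHADERS_PER_SM = 128
FLOPS_PER_SHADER_PER_CLOCK = 2


def implied_boost_ghz(tflops_fp32: float, sm_count: int) -> float:
    """Clock speed (GHz) needed to reach the given FP32 throughput."""
    shaders = sm_count * SHADERS_PER_SM
    flops_per_clock = shaders * FLOPS_PER_SHADER_PER_CLOCK
    return tflops_fp32 * 1e12 / flops_per_clock / 1e9


def percent_more(new: int, old: int) -> float:
    """How much larger `new` is than `old`, in percent."""
    return (new / old - 1) * 100


PRO_6000_SMS = 188
RTX_5090_SMS = 170  # assumption, see lead-in

print(f"SM advantage over RTX 5090: {percent_more(PRO_6000_SMS, RTX_5090_SMS):.1f}%")
print(f"Implied boost clock: {implied_boost_ghz(125, PRO_6000_SMS):.2f} GHz")
```

With those assumptions, the script prints a 10.6% SM advantage and an implied boost clock of about 2.60 GHz, in line with the figures quoted above.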

The RTX Pro 6000 features the full 128MB L2 cache of GB202, along with four NVENC and four NVDEC video blocks. The RTX 5090 only has 96MB of L2 cache, with three NVENC and two NVDEC blocks. The RTX Pro 6000 is very close to a fully enabled chip, with only about 2% of the SMs (4 of 192) disabled.

The memory configuration is the same for all three variants. As discussed in the initial RTX Pro 6000 announcement, Nvidia uses 24Gb (3GB) GDDR7 chips rather than the 2GB chips used on the consumer GeForce RTX 50-series cards. That increases the memory capacity to 48GB per PCB side, and with chips on both sides of the PCB in 'clamshell' mode, there's 96GB total. The memory has the same 28 Gbps clocks as most of the 50-series parts, with 1792 GB/s of total bandwidth.
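
The capacity and bandwidth numbers follow from the bus width and chip size. The sketch below assumes a 32-bit interface per GDDR7 chip position (an assumption, not something Nvidia stated here) and takes the 512-bit bus width from the spec table.

```python
# Memory capacity and bandwidth for the RTX Pro 6000, from first principles.
# Assumption: each GDDR7 chip position uses a 32-bit slice of the bus.
# In clamshell mode two chips share each slice, so the bus width is unchanged.

BUS_WIDTH_BITS = 512       # from the spec table
CHIP_INTERFACE_BITS = 32   # assumption
CHIP_CAPACITY_GB = 3       # 24Gb GDDR7 chips
DATA_RATE_GBPS = 28        # per-pin data rate

chips_per_side = BUS_WIDTH_BITS // CHIP_INTERFACE_BITS
capacity_per_side_gb = chips_per_side * CHIP_CAPACITY_GB
total_capacity_gb = capacity_per_side_gb * 2          # chips on both PCB sides
bandwidth_gb_s = BUS_WIDTH_BITS * DATA_RATE_GBPS / 8  # bits -> bytes

print(f"Chips per side: {chips_per_side}")
print(f"Capacity: {capacity_per_side_gb} GB per side, {total_capacity_gb} GB total")
print(f"Bandwidth: {bandwidth_gb_s:.0f} GB/s")
```

Clamshell mode doubles the capacity but not the bandwidth, which is why the 96GB card has the same 1792 GB/s as a 512-bit, 28 Gbps configuration with chips on one side only.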

| Graphics Card | RTX Pro 6000 | RTX Pro 5000 | RTX Pro 4500 | RTX Pro 4000 |
|---|---|---|---|---|
| Architecture | GB202 | GB202 | GB203 | GB203 |
| Process Technology | TSMC 4N | TSMC 4N | TSMC 4N | TSMC 4N |
| Transistors (Billion) | 92.2 | 92.2 | 45.6 | 45.6 |
| Die size (mm^2) | 750 | 750 | 378 | 378 |
| SMs | 188 | 110 | 82 | 70 |
| GPU Shaders (ALUs) | 24064 | 14080 | 10496 | 8960 |
| Tensor Cores | 752 | 440 | 328 | 280 |
| Ray Tracing Cores | 188 | 110 | 82 | 70 |
| Boost Clock (MHz) | 2600 | 2500? | 2500? | 2500? |
| VRAM Speed (Gbps) | 28 | 28 | 28 | 28? |
| VRAM (GB) | 96 | 48 | 32 | 24 |
| VRAM Bus Width (bits) | 512 | 384 | 256 | 192 |
| L2 Cache (MB) | 128 | 96? | 64? | 48? |
| Render Output Units | 192 | 144? | 96? | 80? |
| Texture Mapping Units | 752 | 440 | 328 | 280 |
| TFLOPS FP32 (Boost) | 125.1 | 70.4? | 52.5? | 44.8? |
| TFLOPS FP16 (FP4/FP8 TFLOPS) | 1001 (4004) | 563 (2253) ? | 420 (1679) ? | 358 (1434) ? |
| Bandwidth (GB/s) | 1792 | 1344 | 896 | 672? |
| TBP (watts) | 600 | 300 | 200 | 140 |
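
The compute rows in the table can be reproduced from the shader counts and boost clocks. The sketch below assumes two FP32 operations per shader per clock, and it uses the ratios the table appears to follow (FP16 tensor throughput at 8x FP32, and the bracketed figure at 4x FP16). Those ratios are inferred from the listed numbers rather than taken from an Nvidia specification, and the entries marked with a question mark remain estimates.

```python
# Recompute the FP32 / FP16 / bracketed tensor rows of the spec table.
# Assumptions: 2 FLOPs per shader per clock; FP16 = 8 x FP32 and the
# bracketed figure = 4 x FP16 (ratios inferred from the table itself).

# name: (shaders, boost MHz, listed FP32, listed FP16, listed bracketed figure)
CARDS = {
    "RTX Pro 6000": (24064, 2600, 125.1, 1001, 4004),
    "RTX Pro 5000": (14080, 2500, 70.4, 563, 2253),
    "RTX Pro 4500": (10496, 2500, 52.5, 420, 1679),
    "RTX Pro 4000": (8960, 2500, 44.8, 358, 1434),
}


def derived_rows(shaders: int, boost_mhz: int) -> tuple[float, float, float]:
    """Return (FP32, FP16, bracketed) throughput in TFLOPS."""
    fp32 = shaders * 2 * boost_mhz * 1e6 / 1e12
    fp16 = fp32 * 8   # inferred ratio
    low_precision = fp16 * 4  # inferred ratio
    return fp32, fp16, low_precision


for name, (shaders, mhz, listed32, listed16, listed_lp) in CARDS.items():
    fp32, fp16, low_precision = derived_rows(shaders, mhz)
    print(
        f"{name}: FP32 {fp32:.1f} (listed {listed32}), "
        f"FP16 {fp16:.0f} (listed {listed16}), "
        f"bracketed {low_precision:.0f} (listed {listed_lp})"
    )
```

Every derived value rounds to the listed one, which suggests the question-marked entries were calculated from an assumed 2500 MHz boost clock rather than taken from published specifications.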

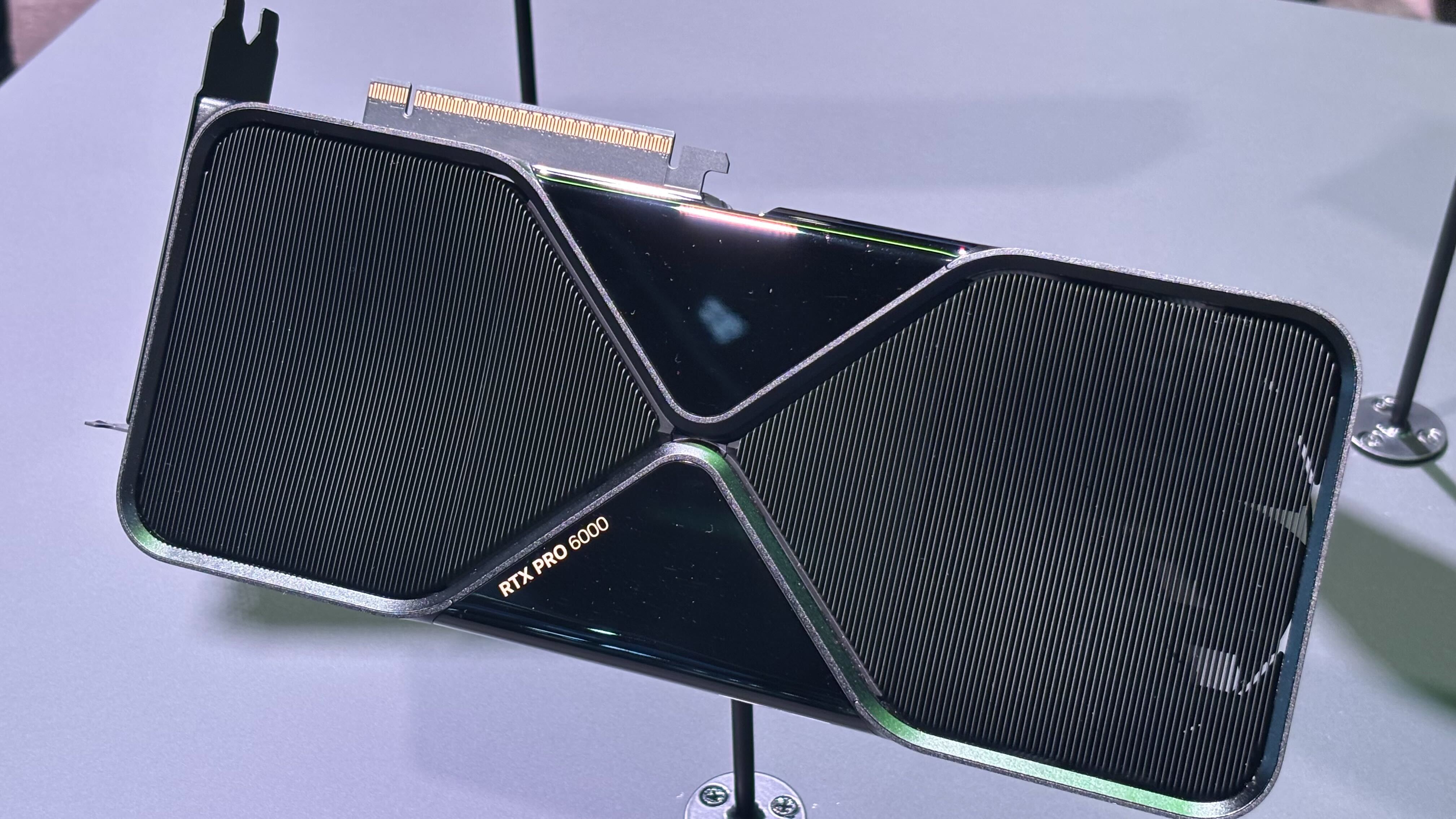

The Blackwell Workstation Edition looks basically the same as the RTX 5090, except with a glossy black finish in places rather than matte black. TGP for the card is 600W, 25W higher than the 5090's 575W. You also get four DisplayPort 2.1b outputs, whereas the 5090 typically offers at least one HDMI 2.1b output.

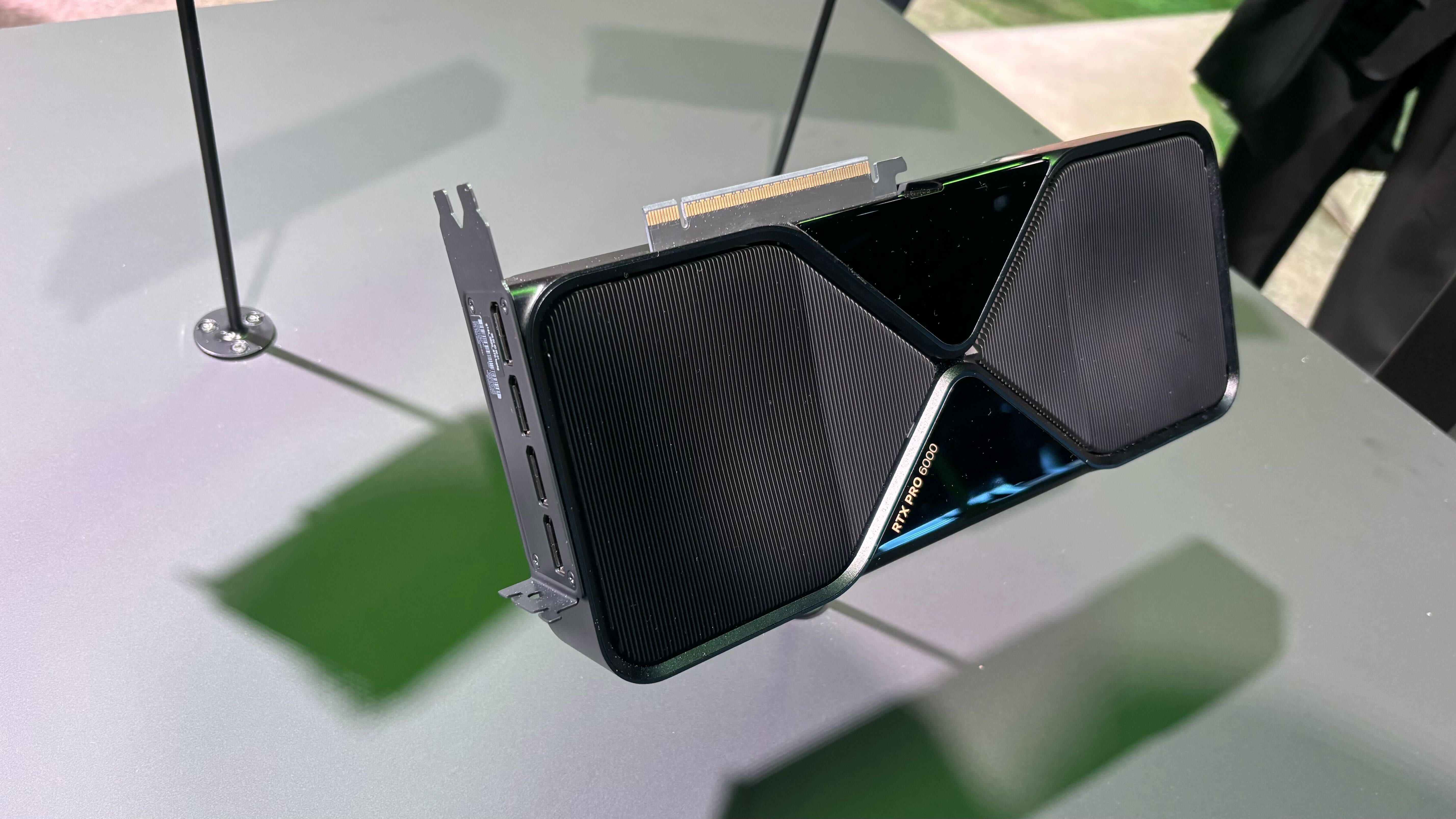

For the Max-Q Workstation Edition, the TGP gets capped at 300W. Half the power will naturally mean lower typical boost clocks for a lot of workloads, though there will undoubtedly be cases where it still runs nearly as fast as the 600W card. It uses a standard FHFL (full-height, full-length) dual-slot form factor with dual-blower fans at the back of the card and, like the Workstation Edition, has four DP2.1b outputs.

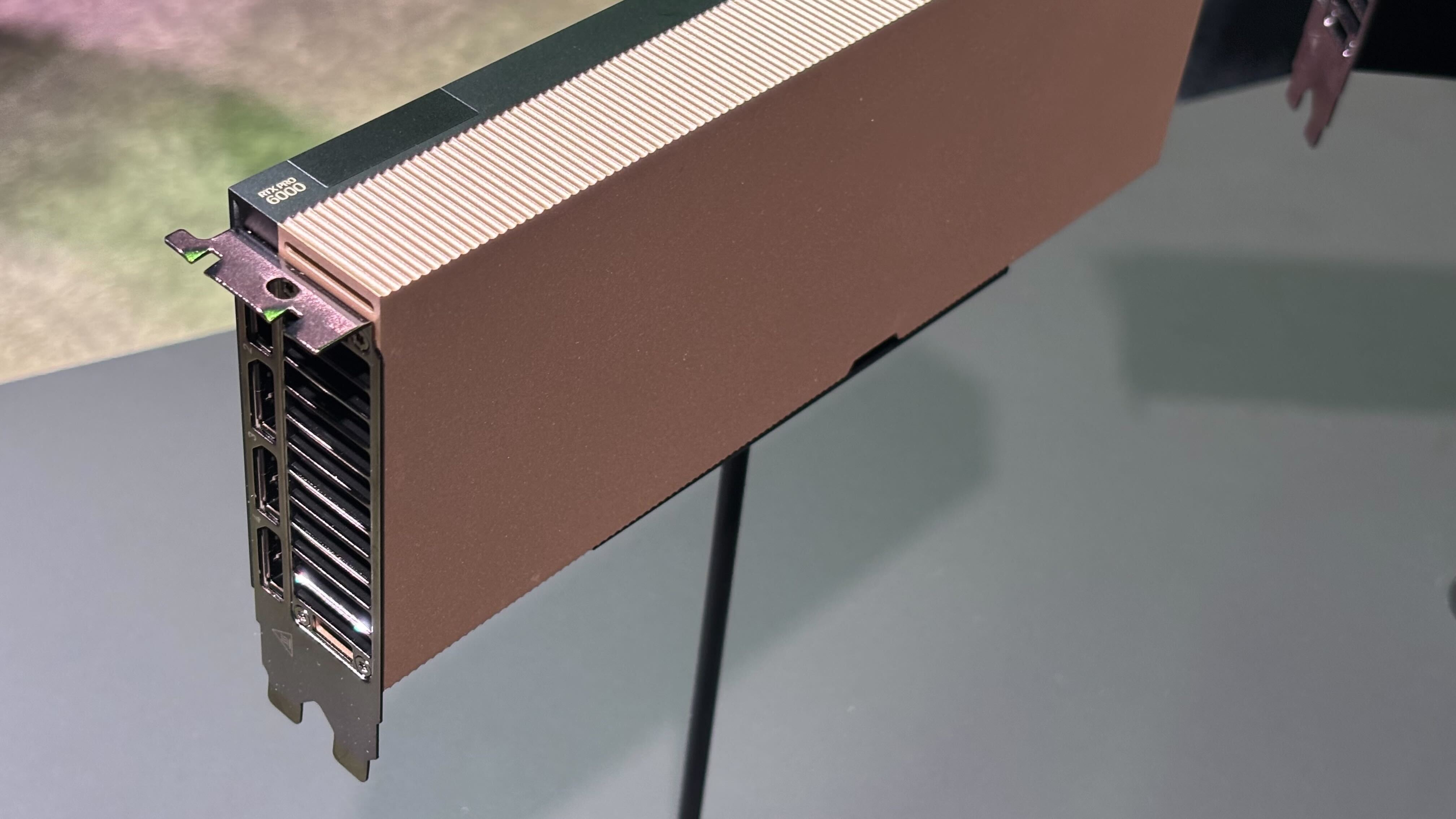

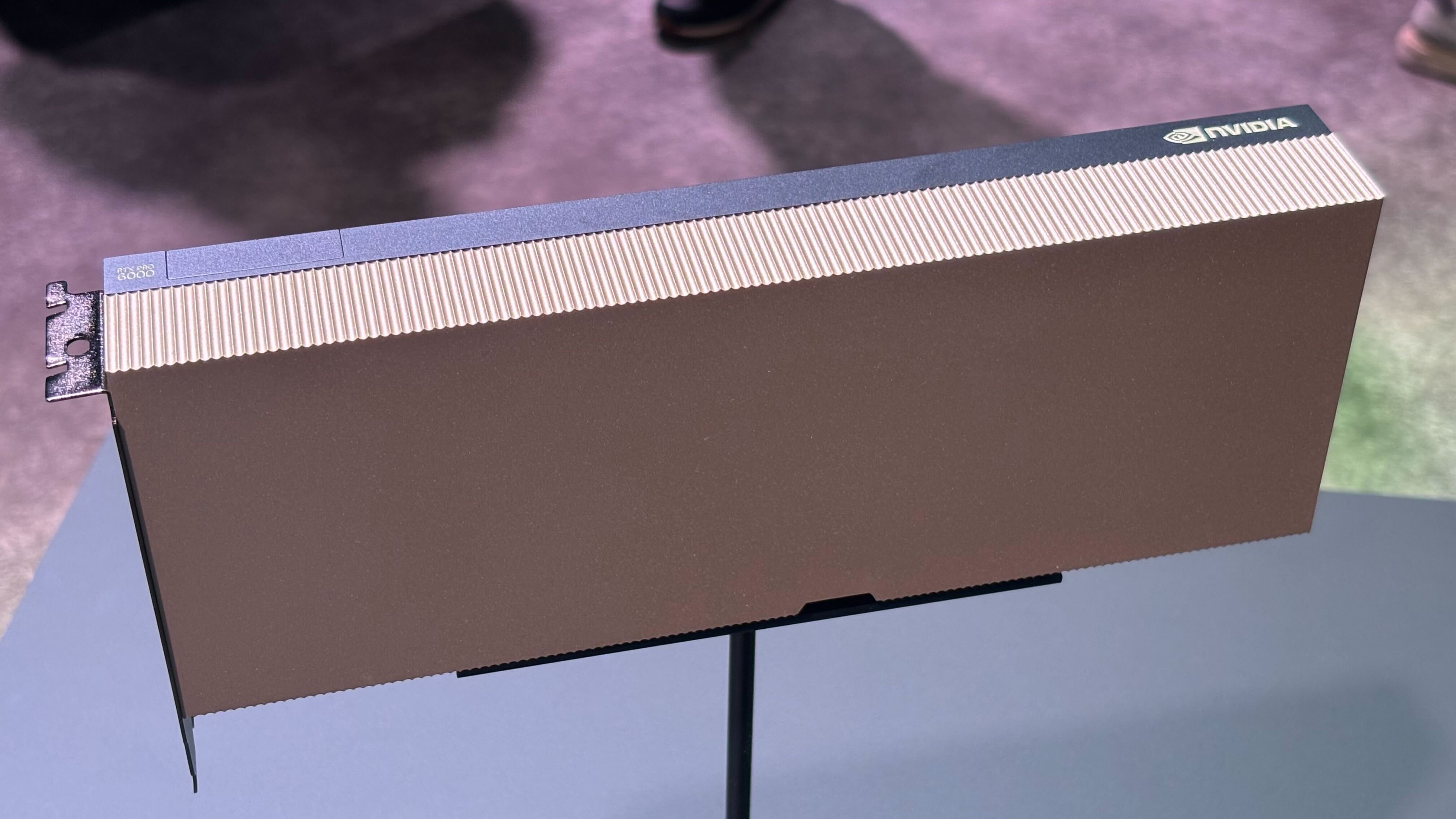

Finally, the Blackwell Server Edition has a similar form factor to the Max-Q card but ditches the fans, instead relying on the server fans to provide airflow and cooling. Airflow is usually in ample supply for servers, and noise levels are less of a concern: you get high-RPM fans moving lots of air in a regulated environment to keep everything sufficiently cool. The power on the Server Edition is configurable up to 600W, so power-limited installations might opt for a lower setting to improve efficiency.

All three models use the same 16-pin connector found on desktop RTX cards. Servers and workstations tend to be built to much tighter specifications, and so far there haven't been any widespread reports of servers or workstations with melting connectors. That suggests the biggest issues with 16-pin connectors are component quality and proper installation. Companies are less likely to cheap out on the cables in a server or workstation, so impurities that cause hot spots and melting are less likely to be present.

Pricing hasn't been discussed, but we typically see professional and server solutions like the RTX Pro 6000 selling for 4X~5X more than the equivalent consumer GPUs. It wouldn't be surprising if the various RTX Pro 6000 cards cost $10,000 or more. We'll find out exactly where they fall in the coming days.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


JarredWaltonGPU

> klatte42 said: Is there no information about memory, or is it just the same as a 5090?

There's a whole bunch of information about the memory... that I only put in the initial announcement (which is linked in the first sentence). I've repeated that here now, with some additional information. Cheers!

bit_user

Probably the most interesting change vs. previous generations is going above the 300 W barrier. To my knowledge, that's a first for their workstation cards.

Li Ken-un

> bit_user said: Probably the most interesting change vs. previous generations is going above the 300 W barrier.

I’m going to stick with 300 W since that is still an option.

> For the Max-Q Workstation Edition, the TGP gets capped at 300W. Half the power will naturally mean lower typical boost clocks for a lot of workloads, though

…that also means not rolling the 🎲 die on potential connector meltage.

JarredWaltonGPU

> Li Ken-un said: I’m going to stick with 300 W since that is still an option.
>
> …that also means not rolling the 🎲 die on potential connector meltage.

Workstations don't typically skimp on materials quality. I suspect a large number of melting connectors have been more about contamination of the metal connections (along with some incorrectly inserted connectors). Have there been any reports of melted 16-pin connectors from servers? Because they're using the same connector there as the consumer cards, but I've heard basically nothing about problems from that sector.

Crazy to think about the servers shown where there are eight RTX Pro 6000 cards lined up. 4800W right there! LOL

jp7189

In servers and workstations, cable routing and airflow tend to be more engineered/controlled. Technically, the 16AWG wire used on the 16-pin connectors can go up to 18 amps if you're willing to have a 90C tolerance. That's 1296 watts, and plenty of safety factor IF all parts of the path are designed for it (high-temp insulation, good cable routing, airflow over every part of it).

Loadedaxe

> Li Ken-un said: …that also means not rolling the 🎲 die on potential connector meltage.

In the server market these issues don't arise. You have IT people that actually know what they are doing and take the time to install things properly and have the proper power and cooling.

The cable melting issues were due to user error and power supplies that were not adequate.

Li Ken-un

> Loadedaxe said: In the server market these issues dont arise. You have IT people that actually know what they are doing and take the time to install things properly and have the proper power and cooling.

You see though… I’m not an IT team. I’m another individual installing a GPU in a typical desktop tower form factor, who likes neatly organized cables, and so has a collection of customized cables that are snipped to just the right length.

bit_user

> Li Ken-un said: I’m another individual installing a GPU in a typical desktop tower form factor, who likes neatly organized cables, and so has a collection of customized cables that are snipped to just the right length.

Wow, I'm impressed!

I once bought a cable sleeving kit to put sleeves over my PSU cables (before this was standard, on higher-end PSUs), but it was such a PITA that I vowed never to do it again. Luckily, the industry embraced the style and I didn't have to.

In that machine, I also tried installing special noise-dampening foam on the inside of the case (like the DynaMat stuff sold to car stereo enthusiasts), but I can't say it made much difference. It's better to simply start with a case that's designed to be quiet. Also, I positioned my PC farther away from where I sit.

Loadedaxe

> Li Ken-un said: You see though… I’m not an IT team. I’m another individual installing a GPU in a typical desktop tower form factor, who likes neatly organized cables, and so has a collection of customized cables that are snipped to just the right length.

And there are a lot of people just like you. I used to be one, but it takes too much time and I lost interest, as no one saw my PC except me and I don't need to impress myself.

But most who do it usually do it correctly. Then there are some who are not so technically inclined. That isn't the fault of Nvidia. And they definitely need a crack in the knees to knock them down a notch, but this isn't it.

Still, your original comment was about "rolling the dice" on a server GPU that the average consumer is not going to buy. Those that do buy know how to RTFM. ;)