AMD A8-3850 Review: Llano Rocks Entry-Level Desktops

Earlier this month we previewed AMD's Llano architecture in a notebook environment. Now we have the desktop version with a 100 W TDP. How much additional performance can the company procure with a loftier thermal ceiling and higher clocks?

Dual Graphics: How Does It Perform?

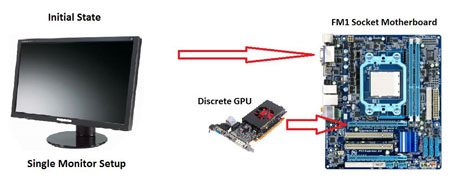

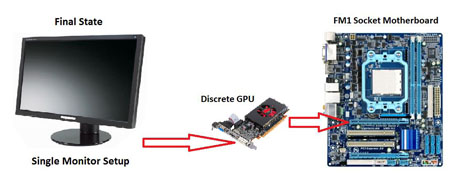

Setting Up Dual-Graphics

Don covered AMD’s Dual Graphics technology in his preview of Llano earlier this month. Now we’re able to test it in a desktop environment.

In his earlier piece, Don found that, in some cases, when Dual Graphics wasn’t working, the system would default to the performance of the slower installed GPU. AMD said that wasn’t supposed to be the case; you’re actually supposed to get the speed of the faster graphics engine. We think the company is getting around that unexpected behavior by suggesting you use the discrete card as the primary output when two GPUs live in the same machine. The unfortunate consequence is a messy setup routine.

By default, Llano wants to be the primary output. So, when you configure your BIOS, set up Windows, and install drivers, AMD says to use the APU’s outputs. If there’s a discrete card installed, CrossFire gets enabled automatically. At that point, you have to install the latest Application Profiles patch, disable CrossFire, shut the system down, and switch over to the discrete card’s outputs. From then on, you won’t see a video signal until Windows loads. Enable CrossFire, reboot again, and then you should be able to use Dual Graphics. Whew.

Once you get there, at least, a Dual Graphics-enabled configuration makes Eyefinity a possibility. Using display outputs from each graphics processor, you can connect three or more displays in an extended desktop configuration. Just be careful with the outputs you choose. Some motherboard vendors are implementing single-link DVI connectors, while others use dual-link. All of the boards we have here also come with VGA and HDMI; only Gigabyte gives you DisplayPort connectivity, too.

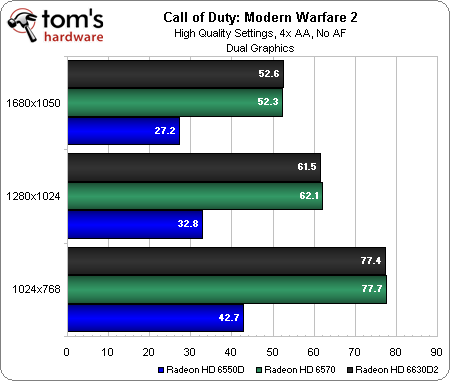

Now, What Equivalent GPU Do I Have?

Don also explained in his preview piece that AMD uses a specific naming system to indicate the equivalent performance of two GPUs in the same machine. The Radeon HD 6550D on AMD’s A8-3850 plus the Radeon HD 6570 add-in card we’re testing with, for example, is referred to as a Radeon HD 6630D2. That designator indicates, first, that performance should be higher than that of either component GPU on its own; second, that this is a desktop configuration (as opposed to a more thermally-constrained all-in-one system, specified with an A suffix); and third, that there are two graphics processors installed.
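To make the naming scheme concrete, here is a minimal Python sketch that splits a designator such as Radeon HD 6630D2 into the three pieces described above. It assumes only what the article states (a four-digit model number, a D or A suffix, and a trailing GPU count); the function name, the `DualGraphicsName` type, and the regular expression are hypothetical and not part of any AMD tool.

```python
import re
from dataclasses import dataclass

# Assumption: suffix meanings are taken from the article's description only.
# "D" = desktop, "A" = all-in-one; the final digit is the number of GPUs.
_FORM_FACTORS = {"D": "desktop", "A": "all-in-one"}

# Accepts "HD 6630D2" or "Radeon HD 6630D2" (hypothetical pattern).
_PATTERN = re.compile(r"^(?:Radeon\s+)?HD\s+(\d{4})([DA])(\d)$")


@dataclass(frozen=True)
class DualGraphicsName:
    model: int          # e.g. 6630
    form_factor: str    # "desktop" or "all-in-one"
    gpu_count: int      # e.g. 2


def parse_dual_graphics_name(name: str) -> DualGraphicsName:
    """Split a Dual Graphics designator into model, form factor, and GPU count."""
    match = _PATTERN.match(name.strip())
    if match is None:
        raise ValueError(f"not a recognised Dual Graphics designator: {name!r}")
    model, suffix, count = match.groups()
    return DualGraphicsName(int(model), _FORM_FACTORS[suffix], int(count))
```

Calling `parse_dual_graphics_name("Radeon HD 6630D2")` yields a model of 6630, a desktop form factor, and a GPU count of two. A single-GPU name such as HD 6550D has no trailing count, so the sketch rejects it with a `ValueError` rather than guessing.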


Dual-Graphics Branding

| Radeon HD Discrete Graphics Card | Paired with Radeon HD 6550D (APU) | Paired with Radeon HD 6530D (APU) |
|---|---|---|
| Desktop | | |
| HD 6670 | HD 6690D2 | HD 6690D2 |
| HD 6570 | HD 6630D2 | HD 6610D2 |
| HD 6450 | HD 6550D2 | HD 6550D2 |
| HD 6350 | - | - |
| All-in-One | | |
| HD 6670A | HD 6730A2 | HD 6710A2 |
| HD 6650A | HD 6690A2 | HD 6670A2 |
| HD 6550A | HD 6610A2 | HD 6590A2 |
| HD 6450A | HD 6550A2 | HD 6550 S2 |
| HD 6350A | - | - |
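The branding table above is effectively a lookup keyed on the discrete card and the APU's graphics engine. As a rough illustration, the desktop rows can be sketched in Python as follows. The dictionary and function names are hypothetical, the values are transcribed from the table, and reading a dash as "no Dual Graphics name listed" is our interpretation.

```python
from typing import Optional

# Desktop rows transcribed from the branding table.
# Key: (discrete card, APU graphics engine). Value: combined brand name,
# or None where the table shows a dash (assumed to mean no name is listed).
DESKTOP_BRANDING = {
    ("HD 6670", "HD 6550D"): "HD 6690D2",
    ("HD 6670", "HD 6530D"): "HD 6690D2",
    ("HD 6570", "HD 6550D"): "HD 6630D2",
    ("HD 6570", "HD 6530D"): "HD 6610D2",
    ("HD 6450", "HD 6550D"): "HD 6550D2",
    ("HD 6450", "HD 6530D"): "HD 6550D2",
    ("HD 6350", "HD 6550D"): None,
    ("HD 6350", "HD 6530D"): None,
}


def dual_graphics_brand(discrete: str, apu_gpu: str) -> Optional[str]:
    """Return the combined brand name, or None if the table lists none."""
    return DESKTOP_BRANDING.get((discrete, apu_gpu))
```

For the configuration tested here, `dual_graphics_brand("HD 6570", "HD 6550D")` returns "HD 6630D2". Pairings that are absent from the table also come back as `None`, so the sketch does not distinguish between a dash in the table and an unknown card.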

While we like the descriptiveness of this system, there’s still room for confusion. Dual Graphics isn’t universal. It supports only DirectX 10 and 11 apps, and performance is at the mercy of driver optimization. You see where I’m going with this: while you know a Radeon HD 6850 will perform a certain way, the results from a Radeon HD 6630D2 can be expected to vary a lot more.

And How Does It Do?

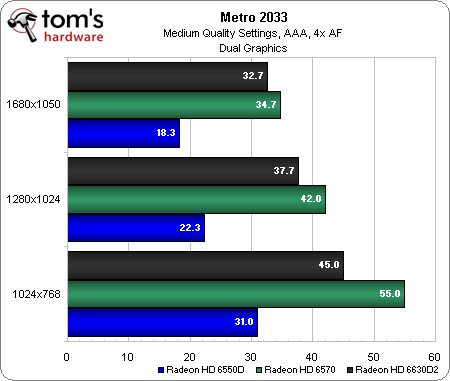

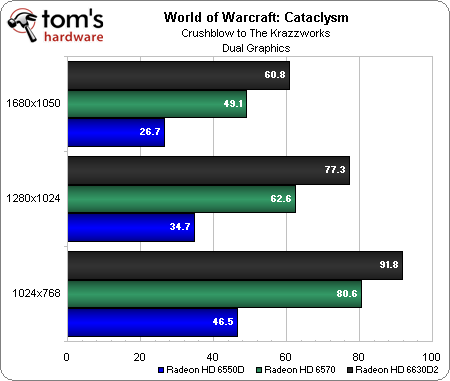

In order to test Dual Graphics, we ran three different games: Call of Duty: Modern Warfare 2 in DirectX 9, Metro 2033 in DirectX 10, and World of Warcraft: Cataclysm in DirectX 11.

Testing a DirectX 9-based game gives us a good control. As expected, Dual Graphics doesn’t work. And, as AMD claims, the setup defaults to the speed of the faster GPU rather than the slower one, as happened in the mobile Llano preview.

The gains in Metro start large and then gradually shrink to a two-frame-per-second speed-up at 1680x1050. The implication is that, as the load increases, the platform cannot keep up, and the gap narrows. Incidentally, this is the opposite of what we’d expect from adding graphics muscle: normally the platform limits performance at low resolutions, and the extra graphics power pays off as the bottleneck shifts to the GPU at higher ones. Keep this in mind on the next page, where I test a theory using Core i3.

WoW is less intense than Metro 2033, and DirectX 11 rendering helps boost performance. The gains are pretty minor given already-playable performance through 1680x1050, but there’s definitely a quantifiable frame rate increase when a Radeon HD 6570 is dropped into an A8-3850-based platform.


SteelCity1981: So then what's the point of getting the Turbo Core versions when they are going to be Turbo Clocked slower then the none Turbo Clocked versions...

cangelini:

> SteelCity1981: So then what's the point of getting the Turbo Core versions when they are going to be Turbo Clocked slower then the none Turbo Clocked versions...

They don't want you to see better performance from a cheaper APU in single-threaded apps by pushing Turbo Core further ;-)

Known2Bone: i really wanted see some amazing gains in the content creation department what with all that gpu power on chip... oh well games are fun too!

ivan_chess: I think this would be good for a young kid's PC. It would be enough to run educational software and a web browser. When he grows up to be a gamer it would be time to replace the whole machine anyway.

DjEaZy: ... it's may be not the greatest APU for desktop... but it will be a powerful thingy in a laptop... the review was nice... but in the gaming department... would be nice to see a standard 15,x'' laptop resolution tests @ 1366x768... or something like that...

Mathos: Actually if you want good DDR3 1600 with aggressive timings, the Ripjaws X series memory that I have does DDR3 1600 at 7-8-7-24 at 1.5v, not all that expensive when it comes down to it either.

Stardude82: This makes little sense. An Athlon II X3 445 ($75) and a HD 5570 ($60, on a good day you can get a 5670 for the same price) would provide better performance for the same price ($135) and not have to worry about the RAM you use.

So is AM3+ going to be retired in favor of FM1 in the near future? Why are there chipset at all? Why isn't everything SOC by now?

Otherwise this is a very good CPU. If AMD has used 1 MB level 2 caches in their quads when they came out with the Deneb Propus die, they would be much more competitive.

crisan_tiberiu:

> Stardude82: This makes little sense. An Athlon II X3 445 ($75) and a HD 5570 ($60, on a good day you can get a 5670 for the same price) would provide better performance for the same price ($135) and not have to worry about the RAM you use.

what about power consumption?