
Comparison Products

Update 8/16/21 5:30am PT: Crucial has swapped out the TLC flash that powered the initial P2 SSD we tested with QLC flash, severely reducing performance. We've written an investigation into that matter, which you can read here.

We have also inserted new testing in each test category below to reflect the real performance you'll get when purchasing this drive today.

Original Article:

Today, we put the 500GB Crucial P2 up against a bunch of the best SSDs on the market. We include performance leaders like the Samsung 970 EVO Plus, Adata XPG SX8200 Pro, and Seagate BarraCuda 510. We also threw in Silicon Power’s P34A60 and Crucial’s P1, which are two direct competitors on the pricing front. Also, we included a Crucial MX500 and WD Black HDD for good measure.

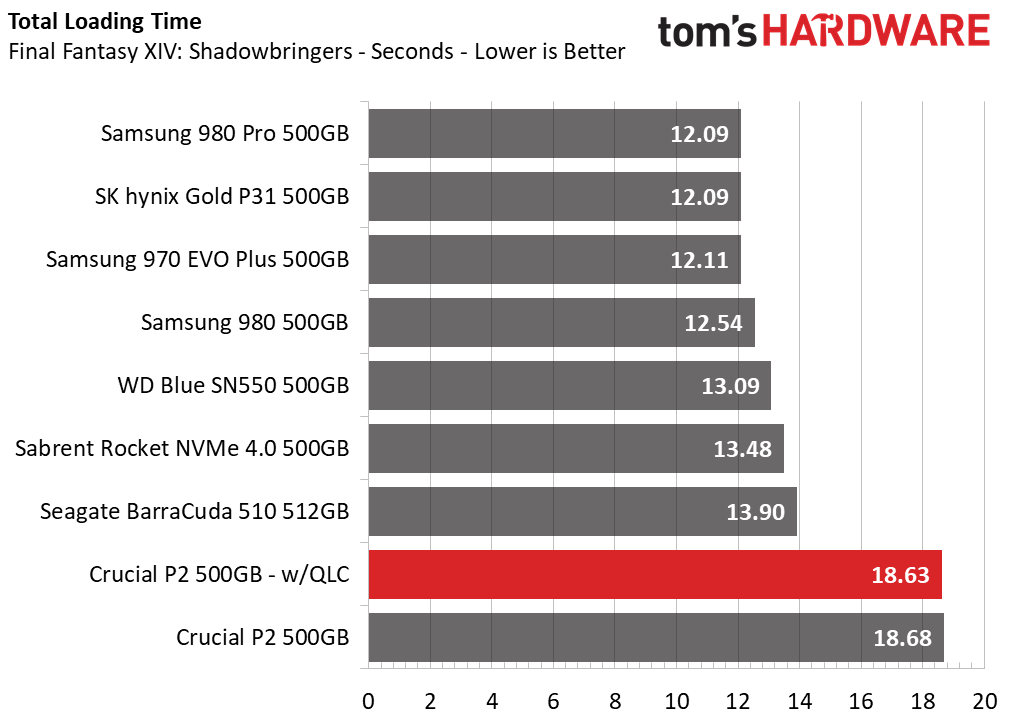

Game Scene Loading - Final Fantasy XIV

Final Fantasy XIV Stormbringer is a free real-world game benchmark that easily and accurately compares game load times without the inaccuracy of using a stopwatch.

Game load performance is one of the few metrics in which the new QLC variant improved. However, the difference is so minuscule (just 0.05 seconds between the two drives) that newer, more optimized firmware or simple run-to-run variance could explain the TLC variant’s loss.
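To put a gap that small in perspective, one quick sanity check is to compare it against the spread between repeated runs on the same drive. The Python sketch below is a rule of thumb, not a formal statistical test and not our test procedure. It flags a difference as "within noise" when the difference is smaller than `k` times the larger run-to-run standard deviation. The function name, the `k=2.0` threshold, and the load times are hypothetical placeholders, not measured results.

```python
from statistics import mean, stdev


def difference_within_noise(runs_a, runs_b, k=2.0):
    """Heuristic check: is the gap between two drives' mean load times smaller
    than k times the larger run-to-run standard deviation? (Not a formal test.)"""
    gap = abs(mean(runs_a) - mean(runs_b))
    noise = max(stdev(runs_a), stdev(runs_b))  # stdev needs at least two runs each
    return gap < k * noise


# Hypothetical load times in seconds, for illustration only (not measured data).
tlc_runs = [15.42, 15.31, 15.50, 15.38]
qlc_runs = [15.36, 15.29, 15.41, 15.33]
print(difference_within_noise(tlc_runs, qlc_runs))
```

With these placeholder numbers, the roughly 0.05-second gap sits well inside the run-to-run spread, so the check returns `True`.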

In either case, both P2 variants trail the comparison pool with the slowest times by a large margin, making them both subpar for game loading.


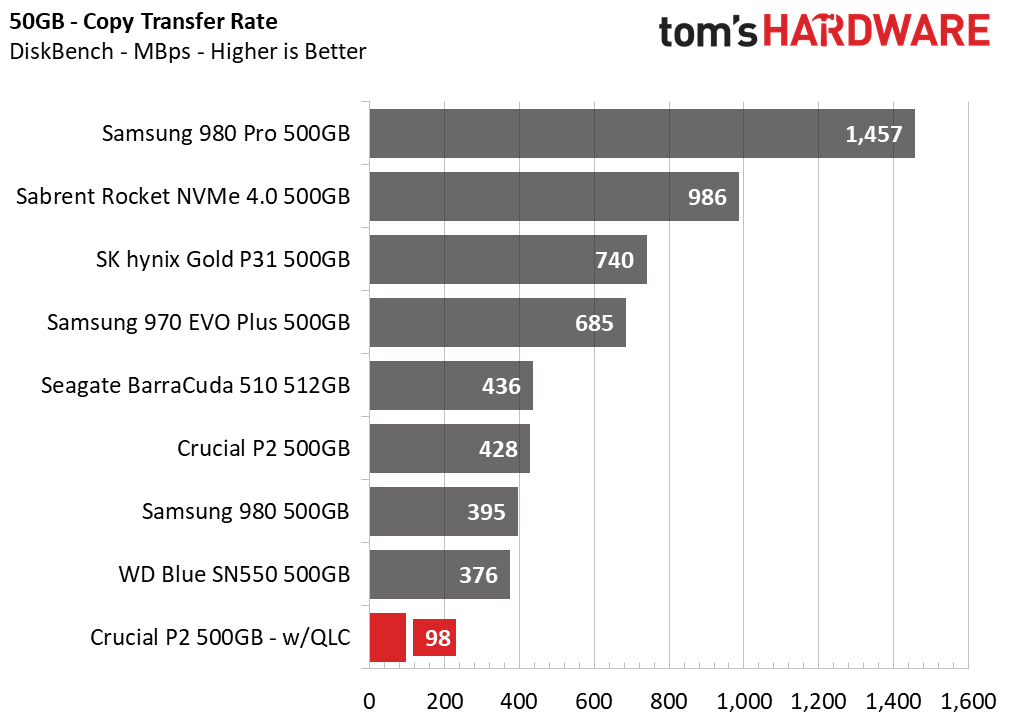

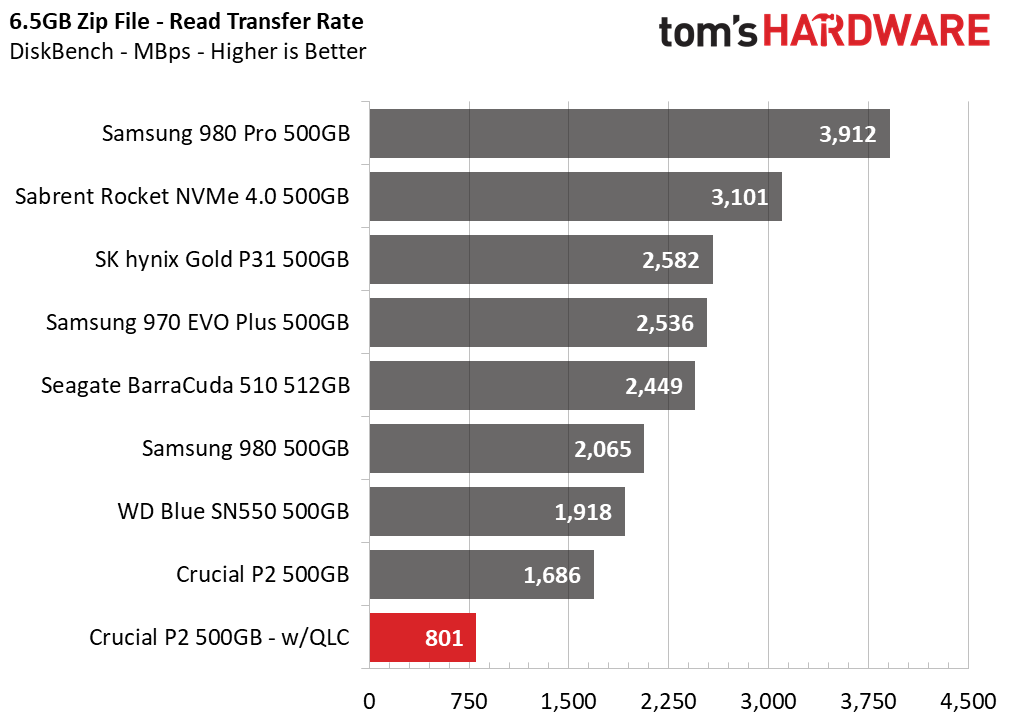

Transfer Rates – DiskBench

We use the DiskBench storage benchmarking tool to test file transfer performance with our own custom blocks of data. Our 50GB data set includes 31,227 files of various types, like pictures, PDFs, and videos. Our 100GB data set includes 22,579 files, 50GB of which are large movies. We copy the data sets to new folders and then follow up with a read test of a newly written 6.5GB zip file and a 15GB movie file.

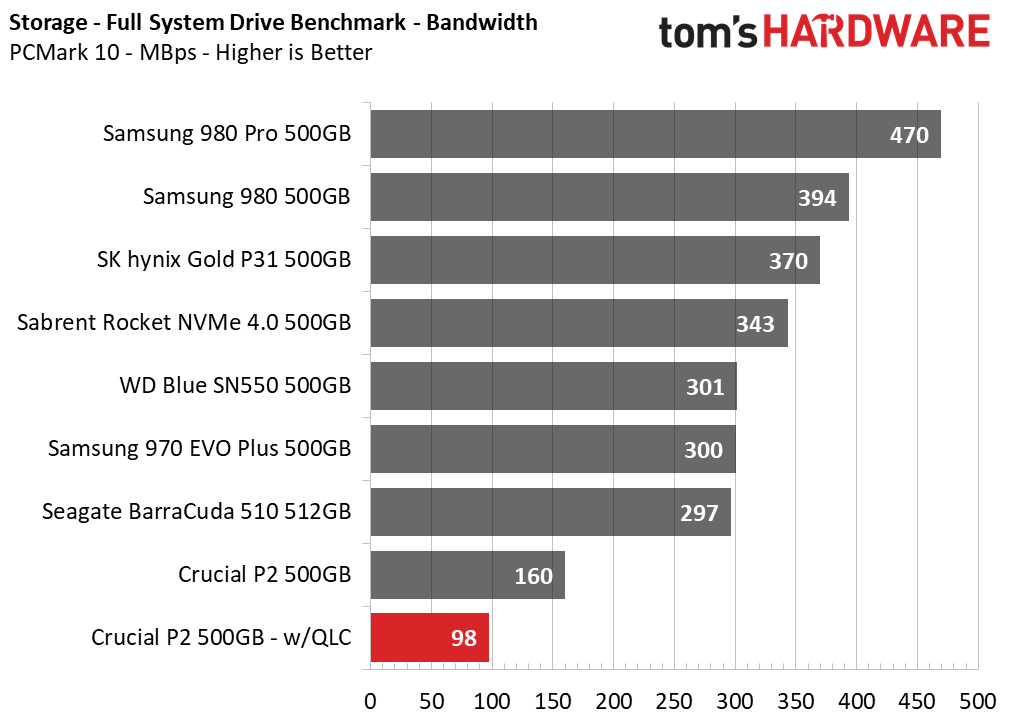

The QLC-based P2 delivered terrible performance during our real-world transfer test, which involves copying a 50GB file folder and reading back the test file. These are the types of real-world activities that are ubiquitous on PCs the world over, but you’ll take a significant performance haircut with the QLC P2.

The SSD could only muster enough speed to copy the folder at 98 MBps, nearly four times slower than the original TLC-powered model. It also reads back large files at half the speed of the TLC variant.
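For a sense of what 98 MBps means in wall-clock time, here is the arithmetic for the 50GB folder, sketched in Python. It assumes decimal units (1GB = 1,000MB) and a constant average rate over the whole copy, which real copies don't strictly follow. The `transfer_seconds` name is our own illustrative helper.

```python
def transfer_seconds(size_gb: float, rate_mbps: float) -> float:
    """Time to move size_gb at a constant average rate, in decimal units (1 GB = 1,000 MB)."""
    return size_gb * 1000 / rate_mbps


seconds = transfer_seconds(50, 98)
print(f"{seconds:.0f} s (~{seconds / 60:.1f} minutes)")
```

At 98 MBps, the 50GB copy takes roughly 510 seconds, or about eight and a half minutes.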

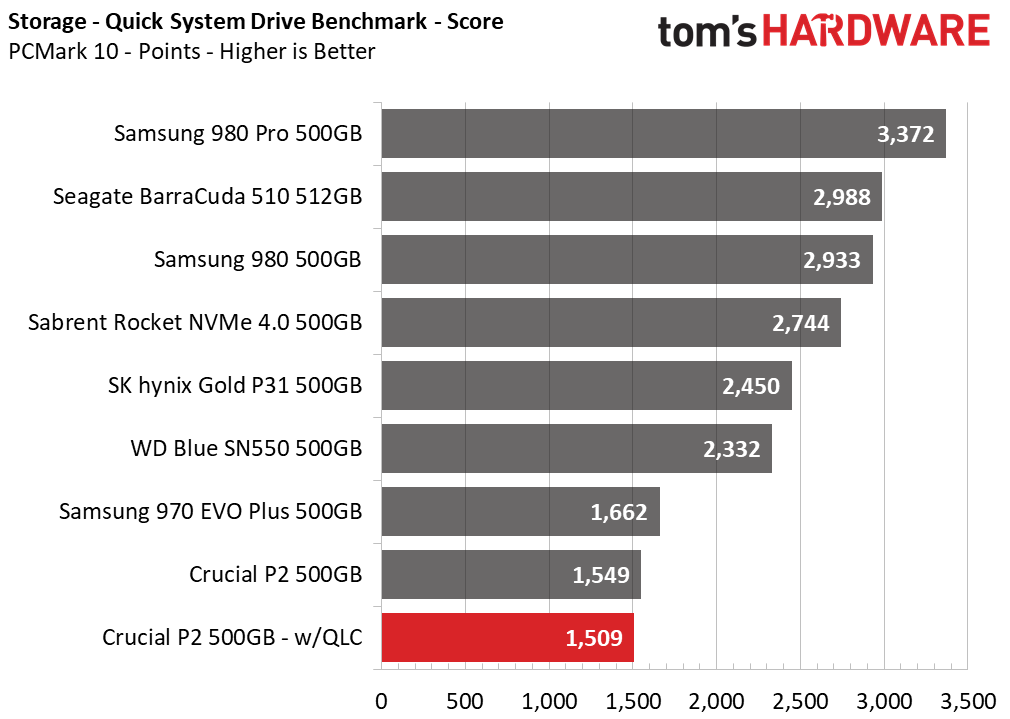

Trace Testing – PCMark 10 Storage Tests

PCMark 10 is a trace-based benchmark that uses a wide-ranging set of real-world traces from popular applications and common tasks to measure the performance of storage devices. The quick benchmark is more relatable to those who use their PCs lightly, while the full benchmark relates more to power users. If you are using the device as a secondary drive, the data test will be of most relevance.
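The core idea of trace-based testing can be illustrated in a few lines. A fixed sequence of I/O operations is recorded once and then replayed identically on every drive, so any difference in elapsed time comes from the storage. The Python toy below replays a hypothetical four-operation trace against a file. It is a sketch under stated assumptions, not how PCMark 10 works internally. `TRACE` and `replay()` are made-up names, `os.pread`/`os.pwrite` are Unix-only, and reads will mostly hit the operating system's page cache rather than the SSD.

```python
import os
import tempfile
import time

# Hypothetical trace of (operation, byte offset, length) records.
# It only illustrates the idea; it is not a PCMark 10 trace.
TRACE = [
    ("write", 0, 4096),
    ("read", 0, 4096),
    ("write", 1 << 20, 64 * 1024),
    ("read", 1 << 20, 64 * 1024),
]


def replay(trace, path):
    """Replay the same I/O sequence against a file; return elapsed seconds."""
    # Pre-generate payloads so data generation is not part of the timed section.
    payloads = [os.urandom(length) if op == "write" else None for op, _, length in trace]
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o644)
    try:
        start = time.perf_counter()
        for (op, offset, length), payload in zip(trace, payloads):
            if op == "write":
                os.pwrite(fd, payload, offset)
            else:
                os.pread(fd, length, offset)
        os.fsync(fd)  # ask the OS to flush the written data to the device
        return time.perf_counter() - start
    finally:
        os.close(fd)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        elapsed = replay(TRACE, os.path.join(tmp, "trace.bin"))
        print(f"replayed {len(TRACE)} I/Os in {elapsed * 1000:.2f} ms")
```

Because the trace never changes, running `replay()` on two different drives compares only how each device services the same requests.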

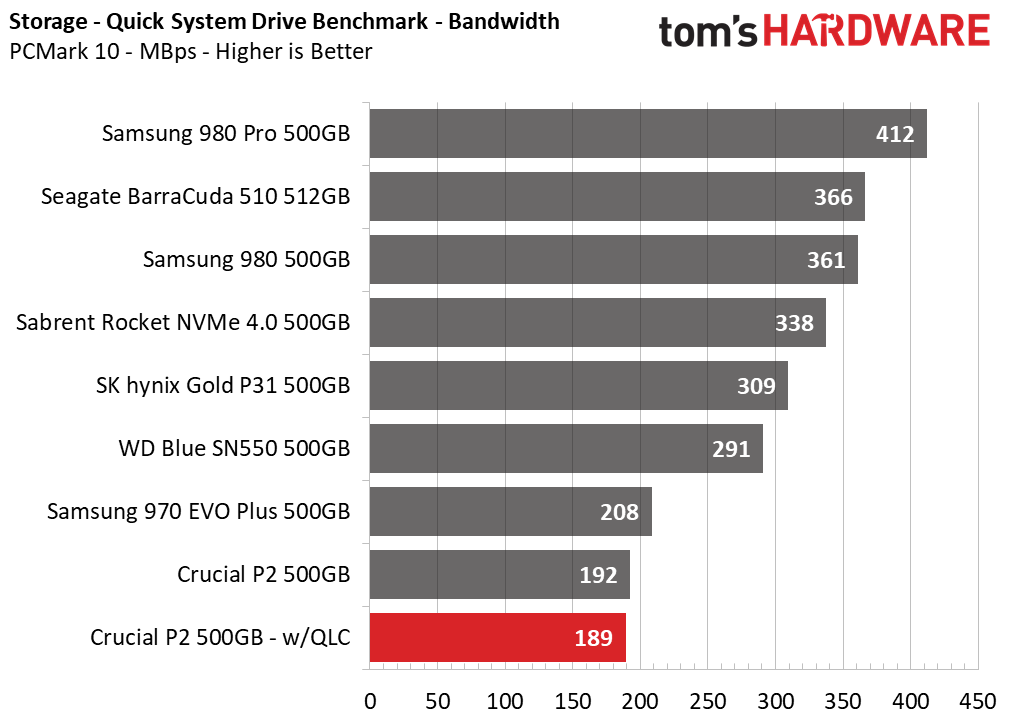

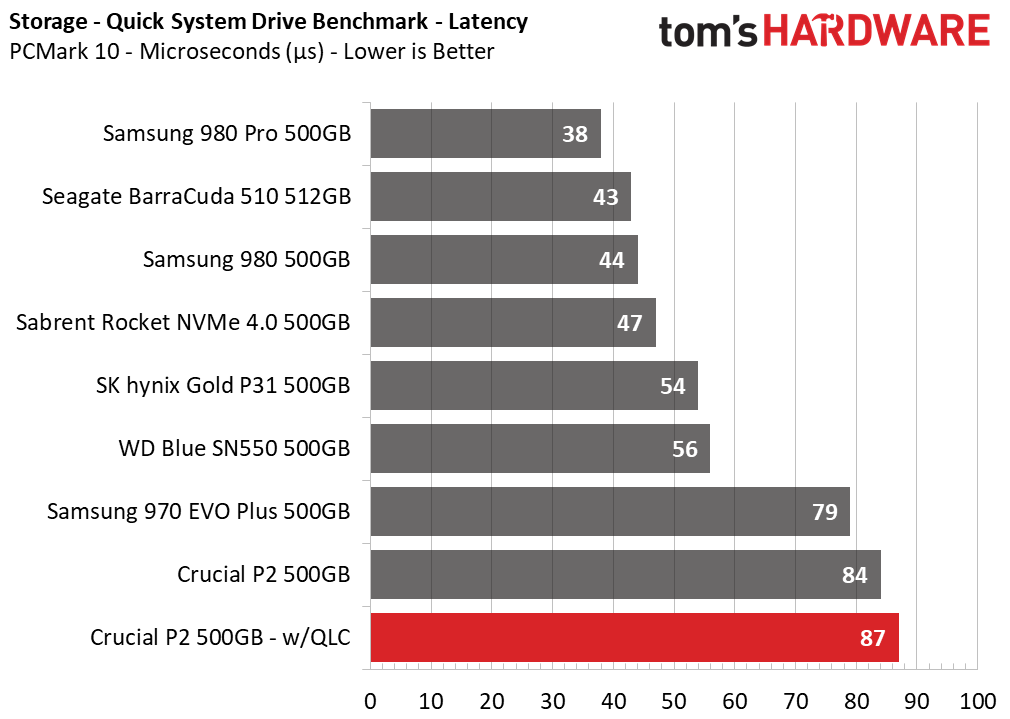

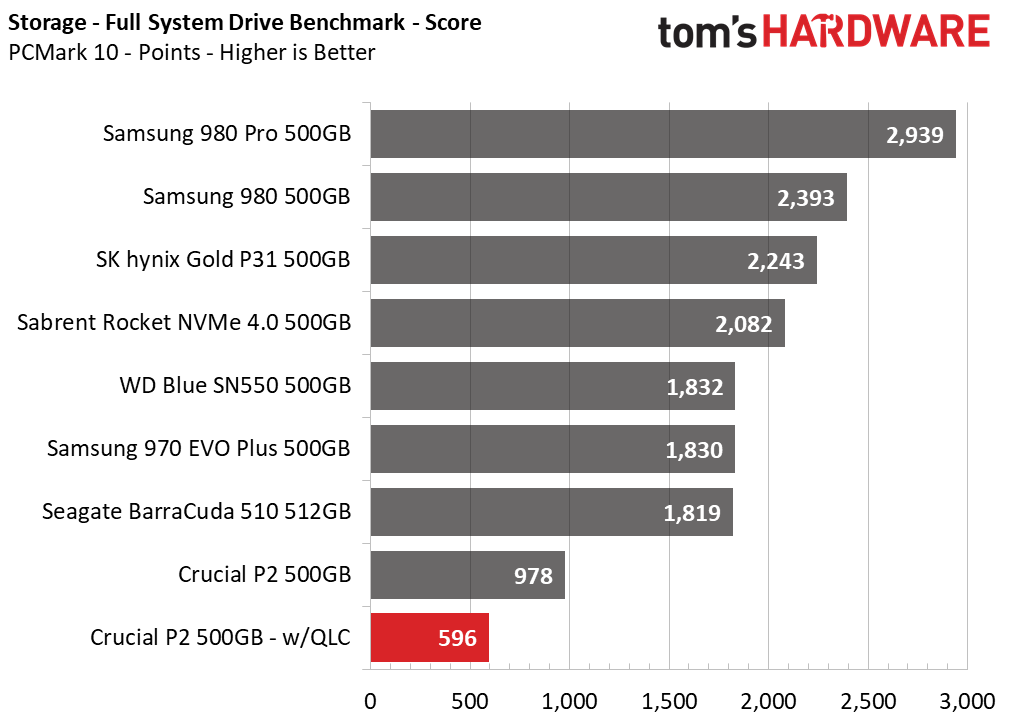

When tasked with light loads, the new QLC variant of the P2 delivers similar performance to its predecessor, scoring just a few points below in the Quick System Drive Benchmark.

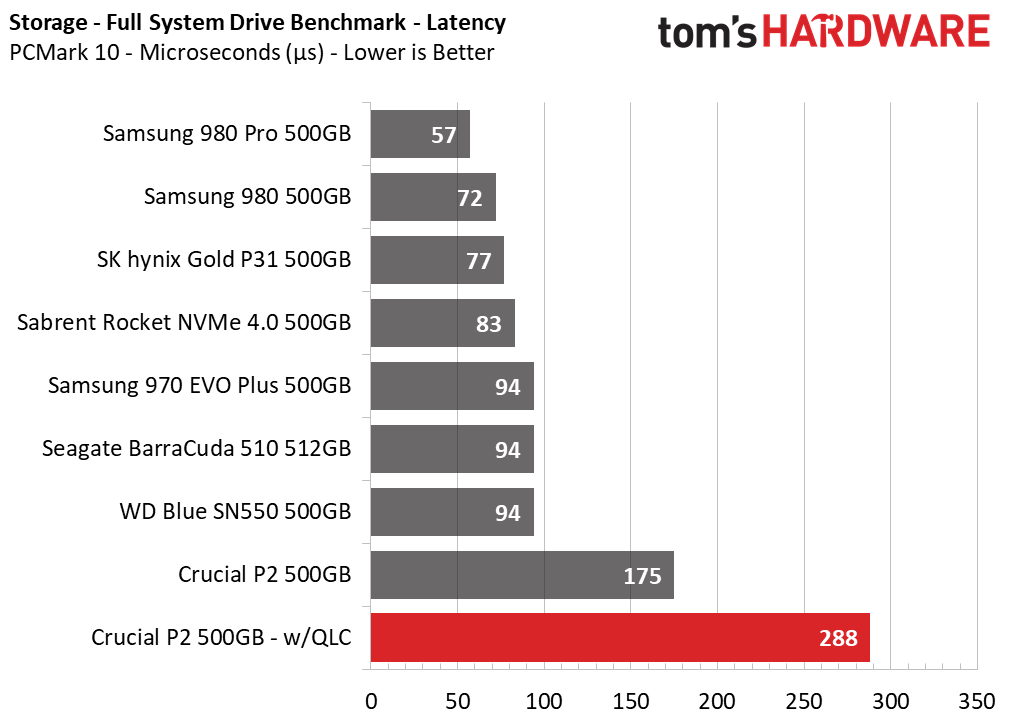

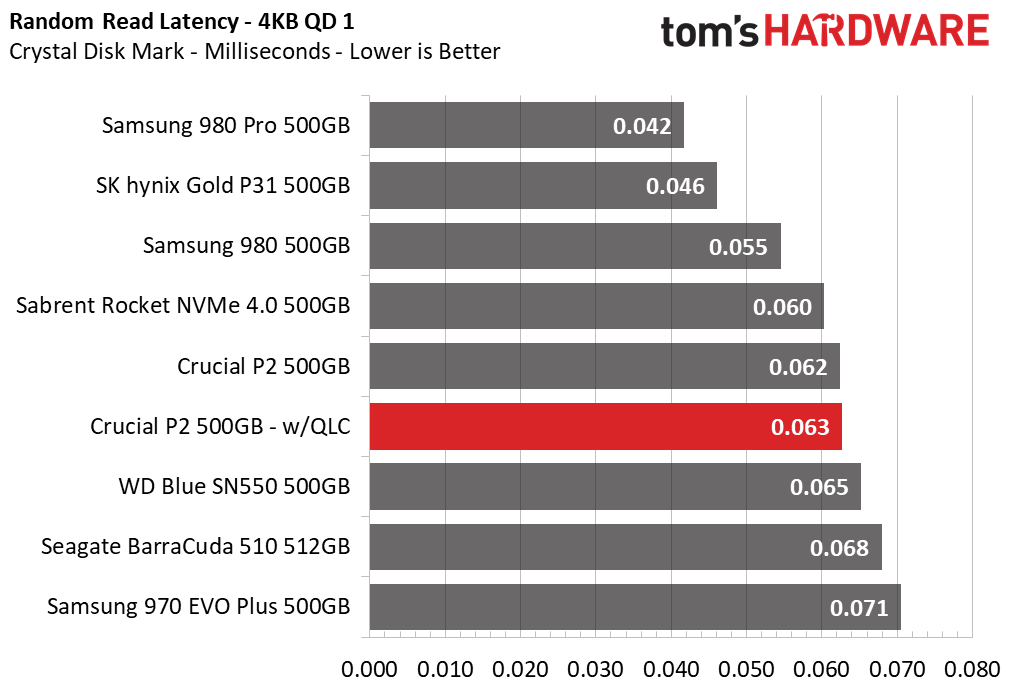

However, in the Full System Drive Benchmark, the QLC variant fell flat with roughly 40% lower performance. Latency, the most important metric for an SSD, was also three to four times higher than that of any other competitor.

Synthetics - ATTO

ATTO is a simple and free application that SSD vendors commonly use to assign sequential performance specifications to their products. It also gives us insight into how the device handles different file sizes.

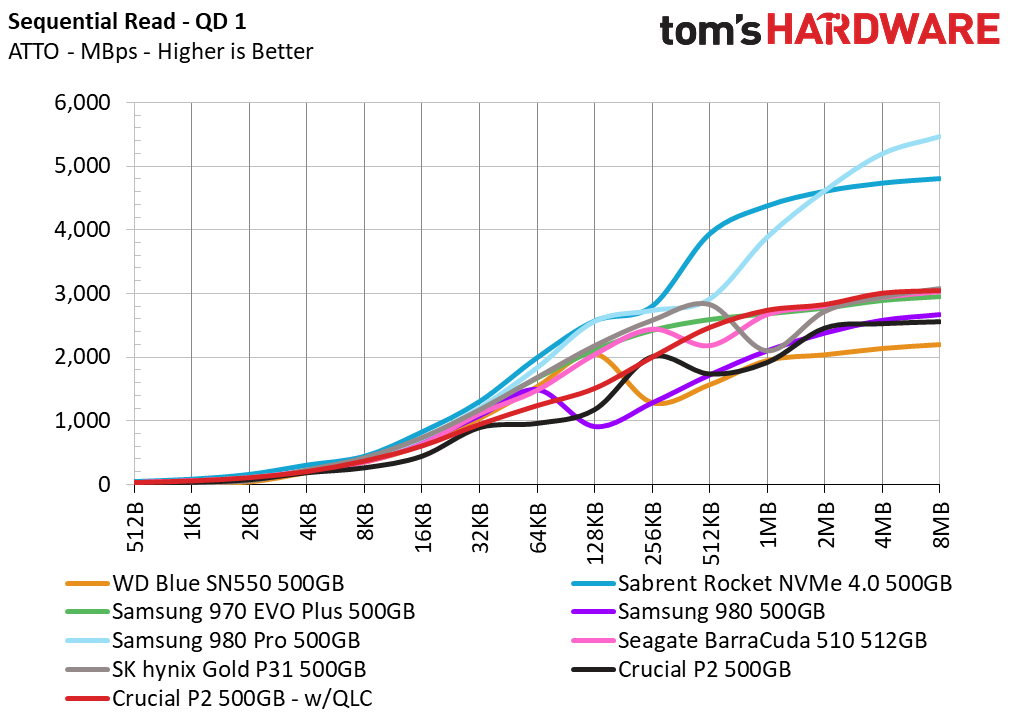

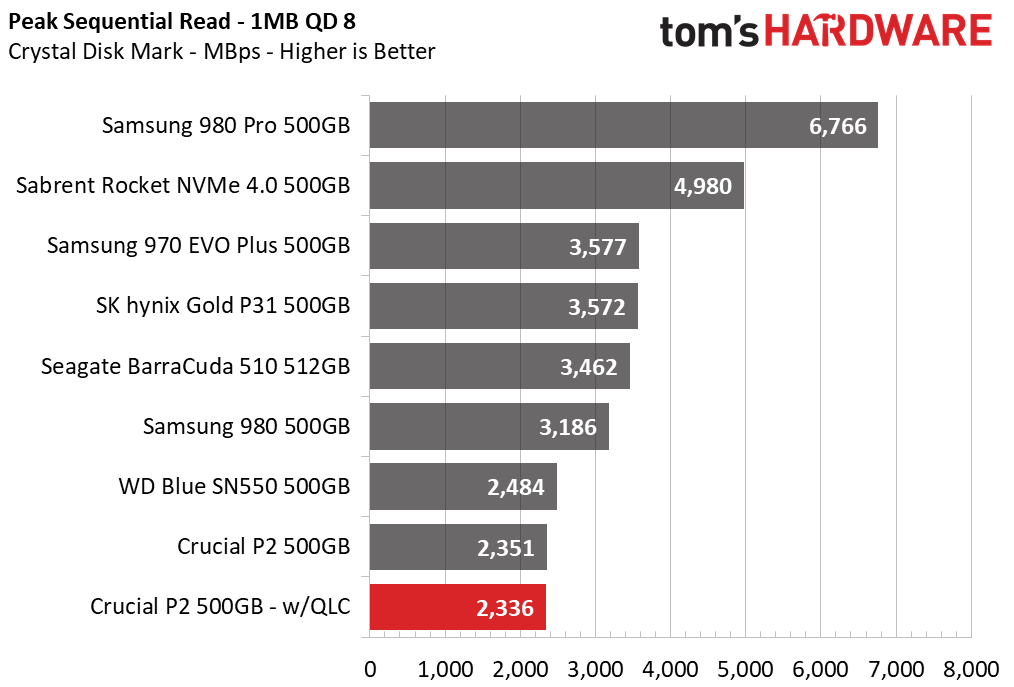

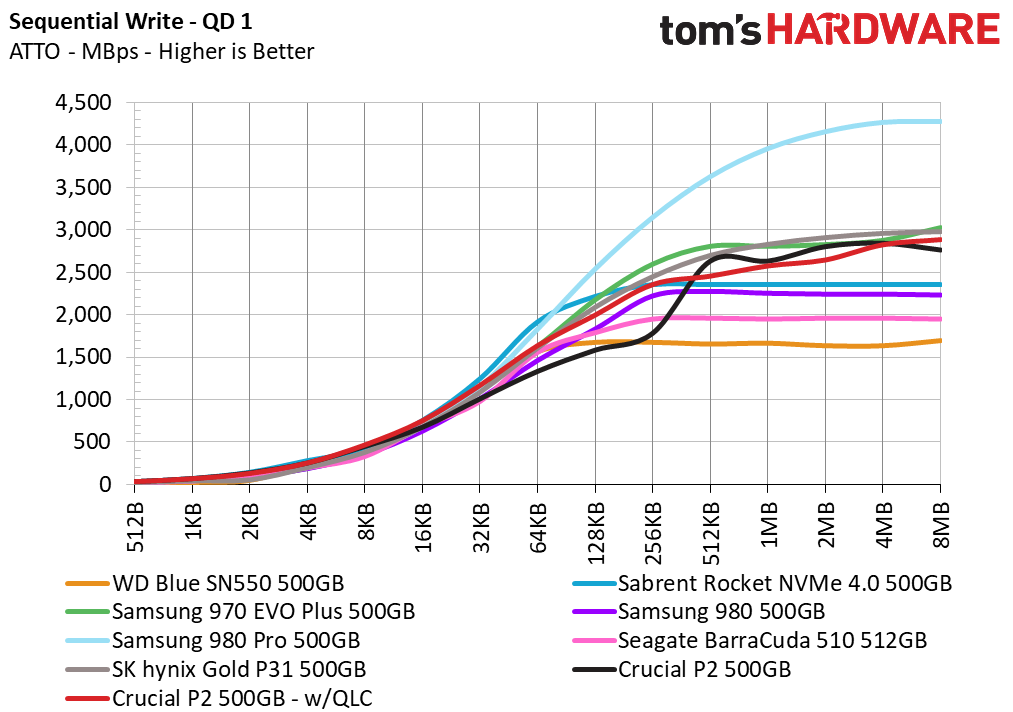

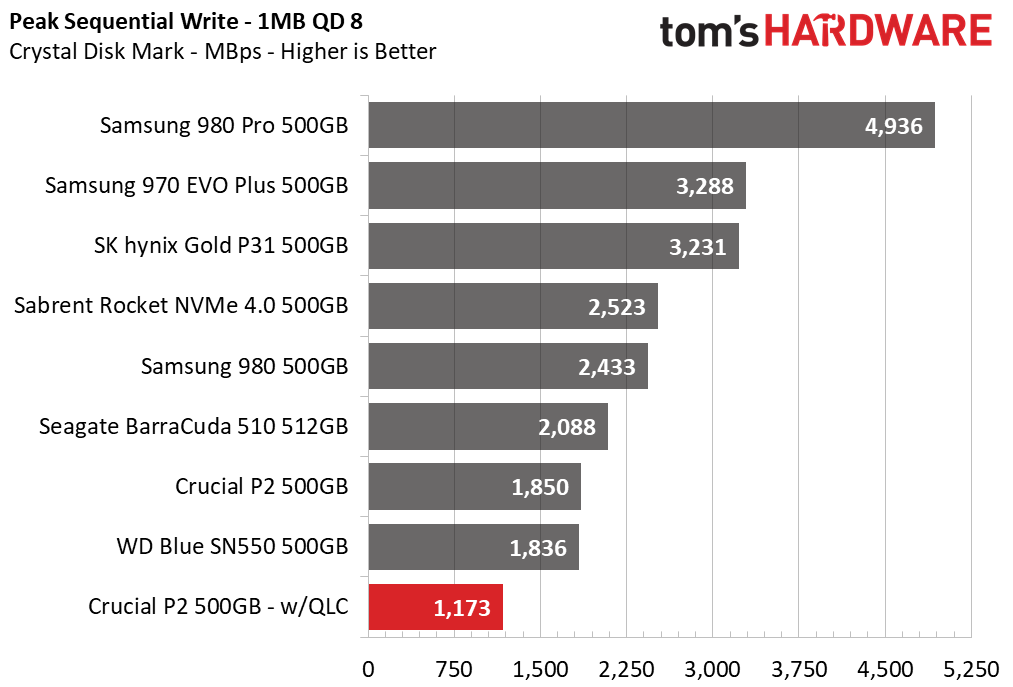

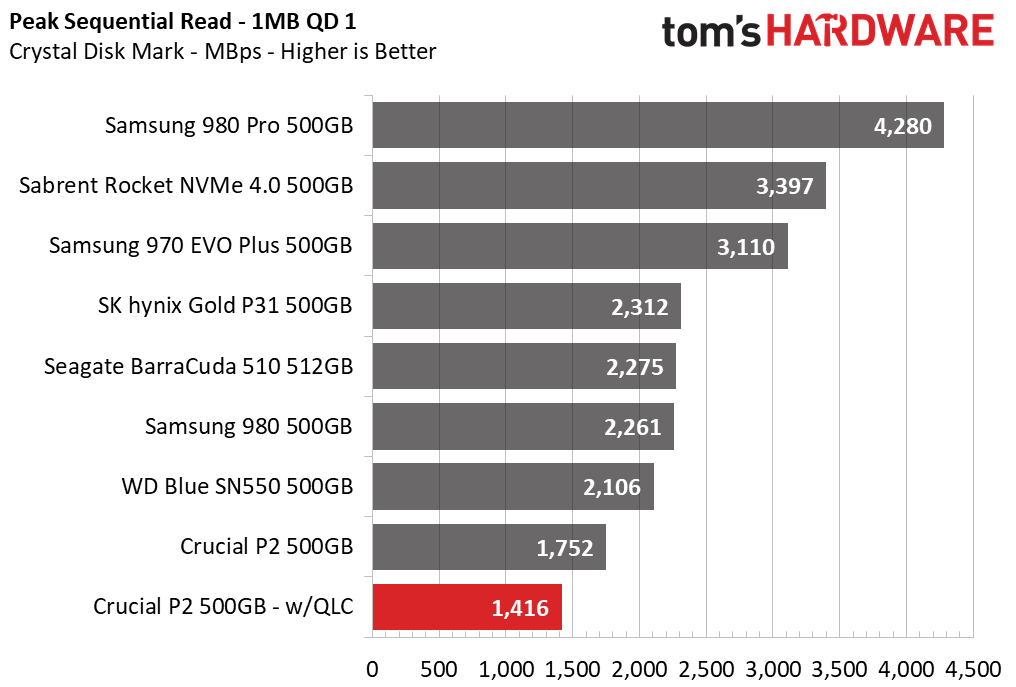

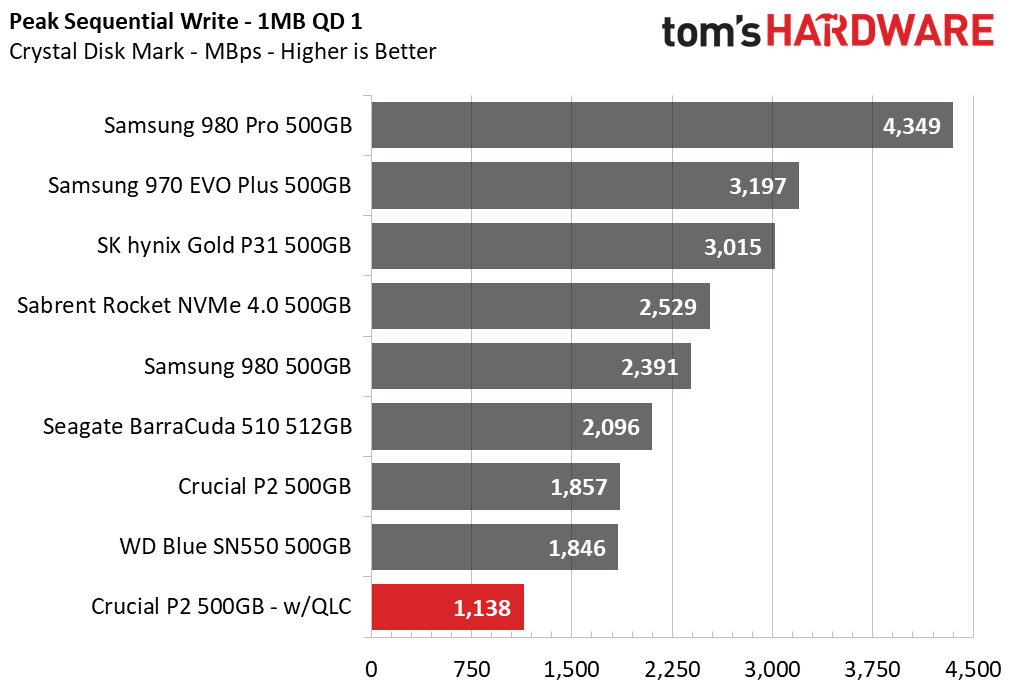

Based on the ATTO benchmark alone, the new P2 looks like it should outperform its predecessor. Unfortunately, that advantage didn’t carry over to other tests, because ATTO tests with fully compressible data, which is mostly unrealistic. CrystalDiskMark’s results, on the other hand, show the new P2 delivers slower sequential performance with incompressible data, which more closely resembles the data most people store on their PCs.
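To show how different the two kinds of test data are, the Python sketch below builds a fully compressible buffer (all zeros) and an incompressible one (random bytes) and measures how well `zlib` shrinks each. `zlib` is only a convenient stand-in for "how compressible is this data"; whether and how a particular SSD controller benefits from compressible data is not something this sketch models. The helper name and buffer size are our own choices.

```python
import os
import zlib


def compression_ratio(buf: bytes) -> float:
    """Original size divided by zlib-compressed size; about 1.0 means incompressible."""
    return len(buf) / len(zlib.compress(buf))


BLOCK = 1024 * 1024  # 1 MiB test buffer

compressible = bytes(BLOCK)         # all zeros: a fully compressible pattern
incompressible = os.urandom(BLOCK)  # random bytes: similar to media or archive files

print(f"zeros:  {compression_ratio(compressible):.0f}x")
print(f"random: {compression_ratio(incompressible):.3f}x")
```

The zero-filled buffer collapses to a tiny fraction of its size, while the random buffer doesn't compress at all (zlib's framing makes it marginally larger).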

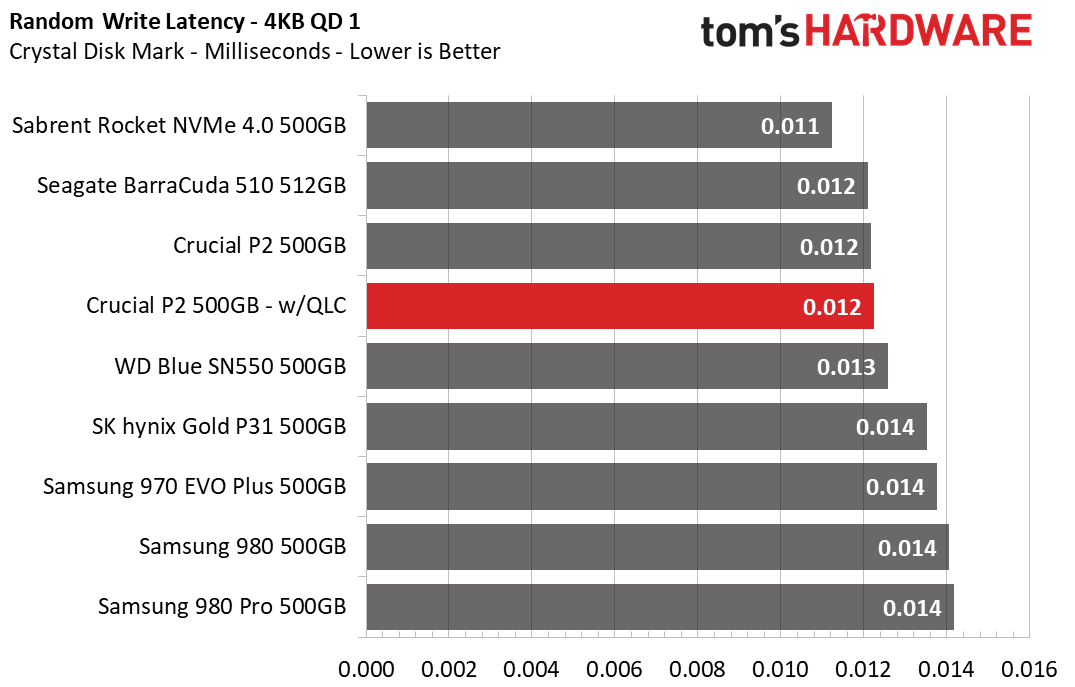

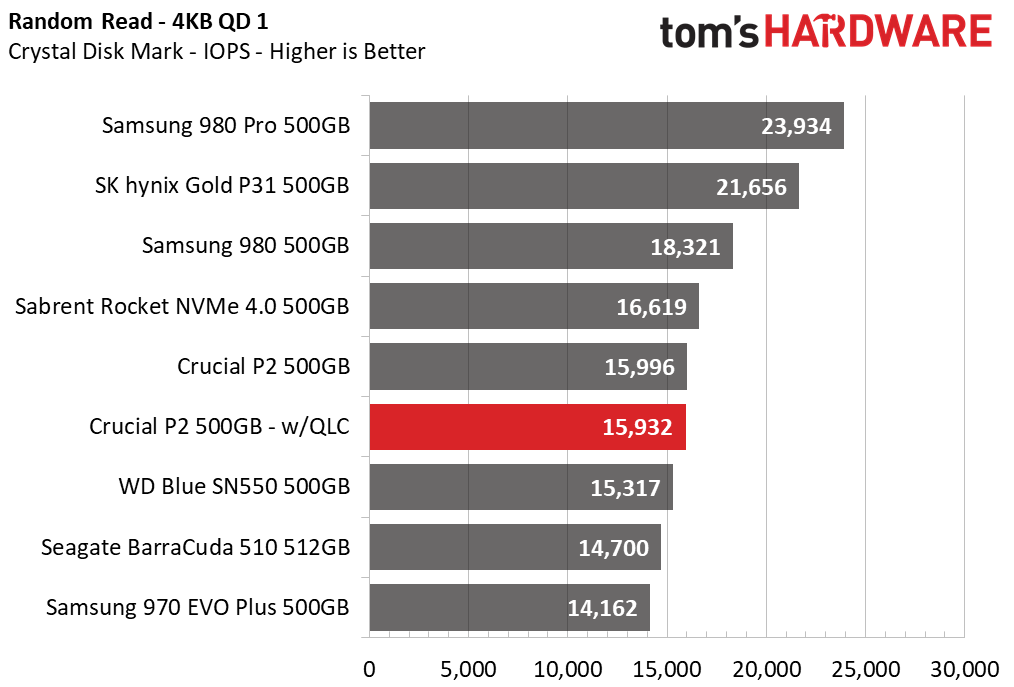

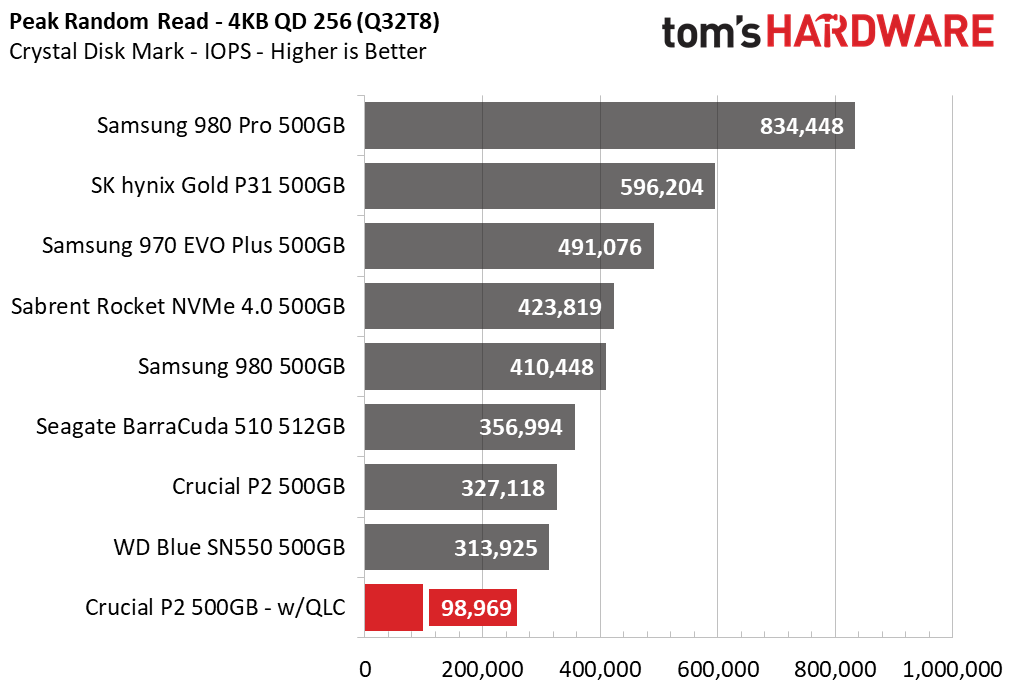

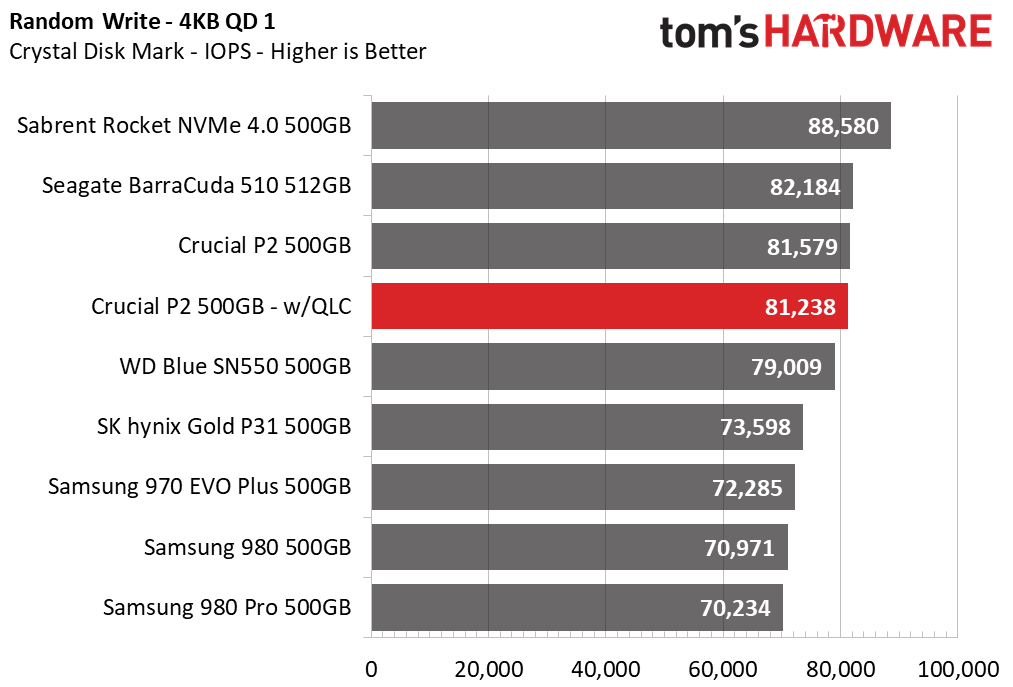

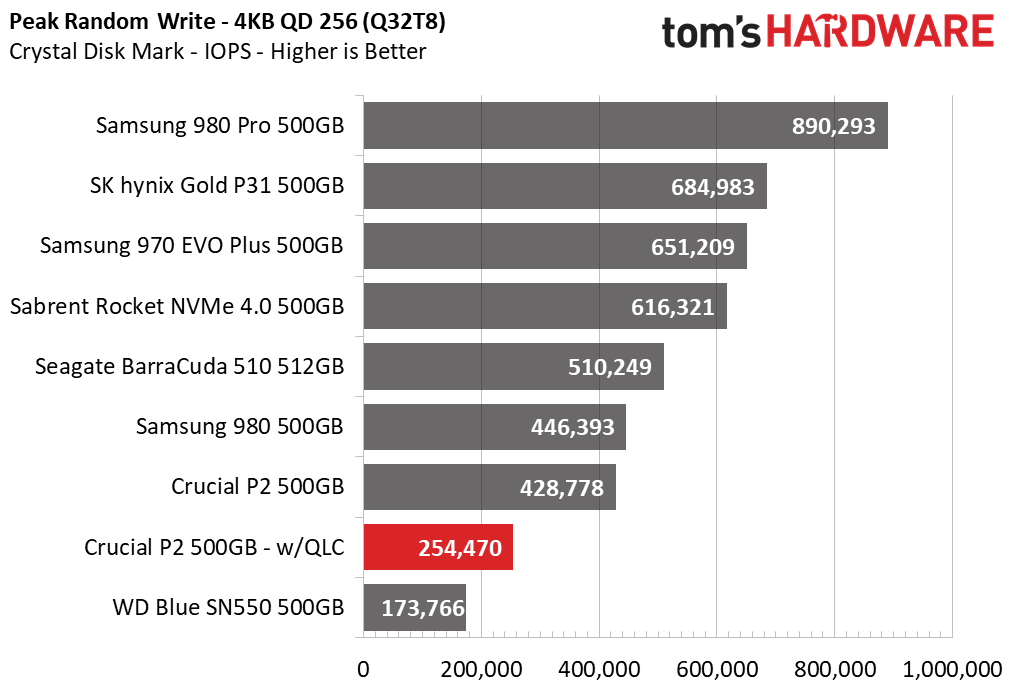

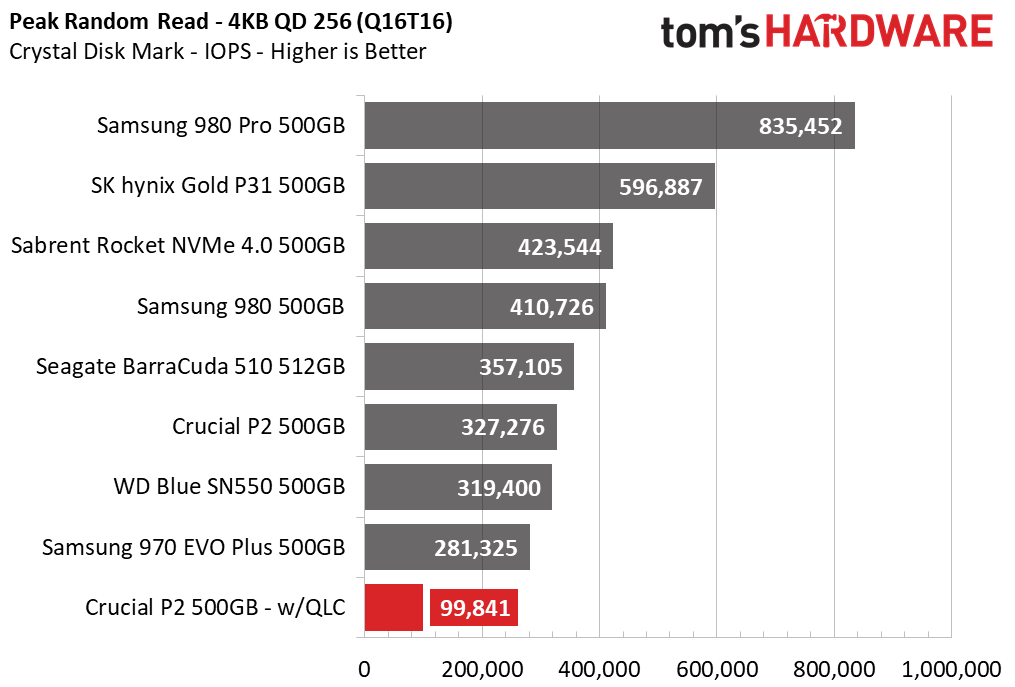

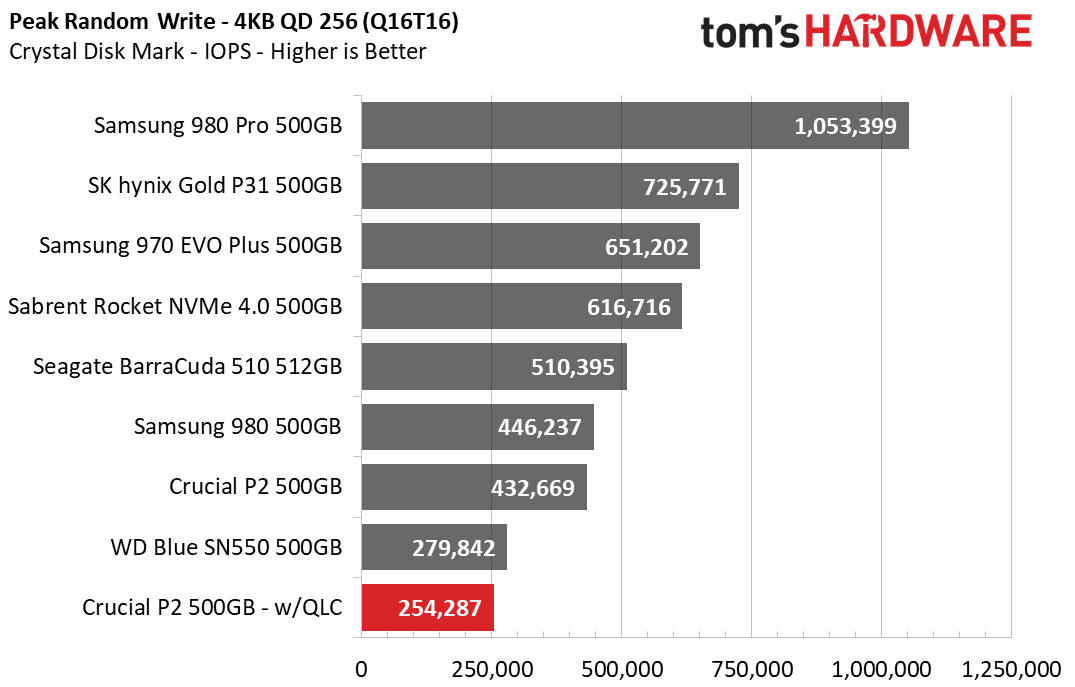

Additionally, the QLC-powered P2’s random performance at a queue depth of 1 (QD1) remains close to the TLC variant, but the QLC model severely lags at higher queue depths, delivering one-third to half the speed.
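Why queue depth matters can be sketched with Little's law, which in an idealized steady state ties the number of requests in flight to throughput and latency: IOPS = queue depth / latency. The Python below is a back-of-the-envelope model, not a measurement. The latencies are hypothetical, and the 4KiB block size is an assumption matching typical random-I/O tests.

```python
def iops_from_latency(queue_depth: int, latency_us: float) -> float:
    """Little's law: requests in flight = IOPS x latency, so IOPS = QD / latency."""
    return queue_depth / (latency_us / 1_000_000)


def mbps_from_iops(iops: float, block_bytes: int = 4096) -> float:
    """Convert IOPS at a fixed block size into decimal MBps."""
    return iops * block_bytes / 1_000_000


# Hypothetical per-request latencies in microseconds (not measured values).
for qd, latency_us in [(1, 80.0), (4, 100.0), (32, 400.0)]:
    iops = iops_from_latency(qd, latency_us)
    print(f"QD{qd:<2} {iops:>9,.0f} IOPS  ~{mbps_from_iops(iops):6.1f} MBps")
```

A drive scales well at higher queue depths only if it can service more requests in parallel without latency climbing in step. If latency rises nearly as fast as the queue deepens, extra outstanding requests buy little additional throughput.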

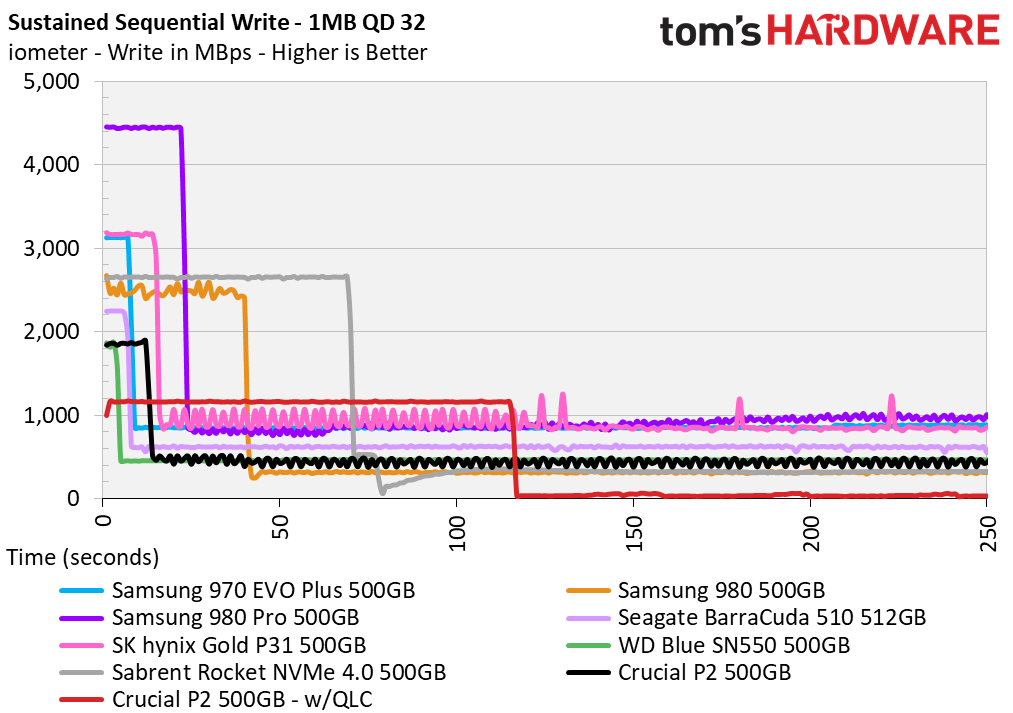

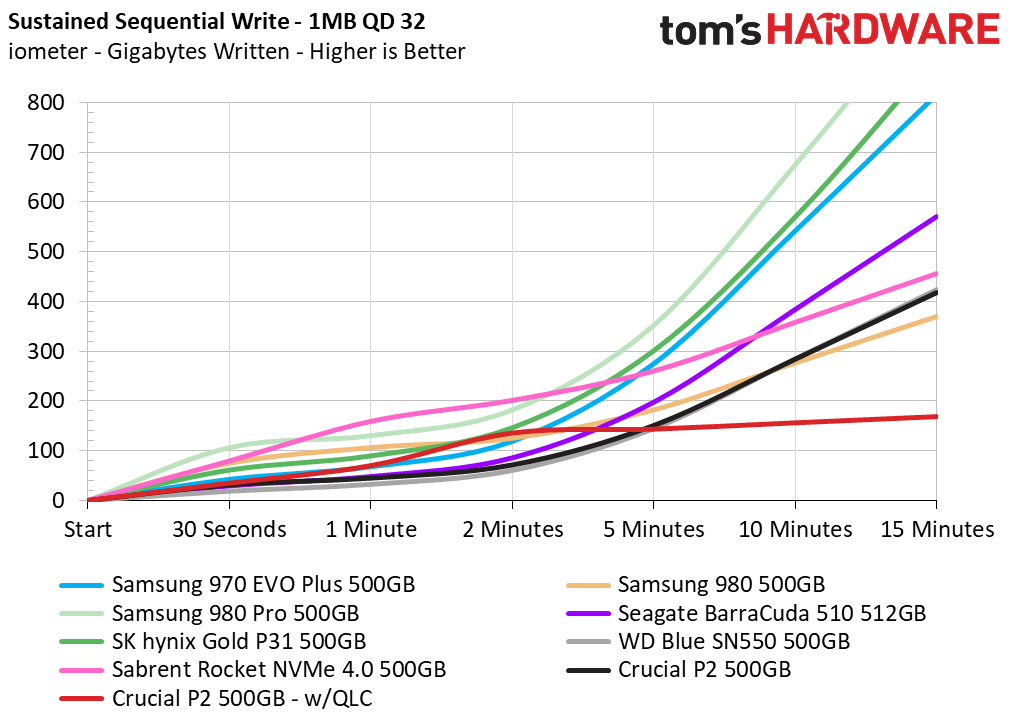

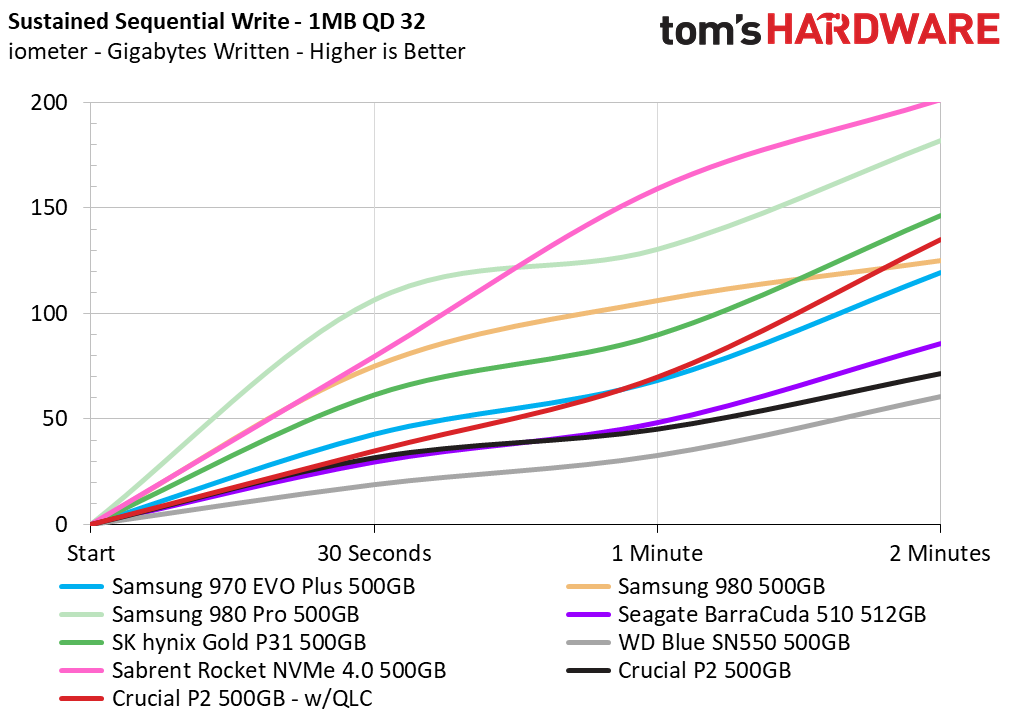

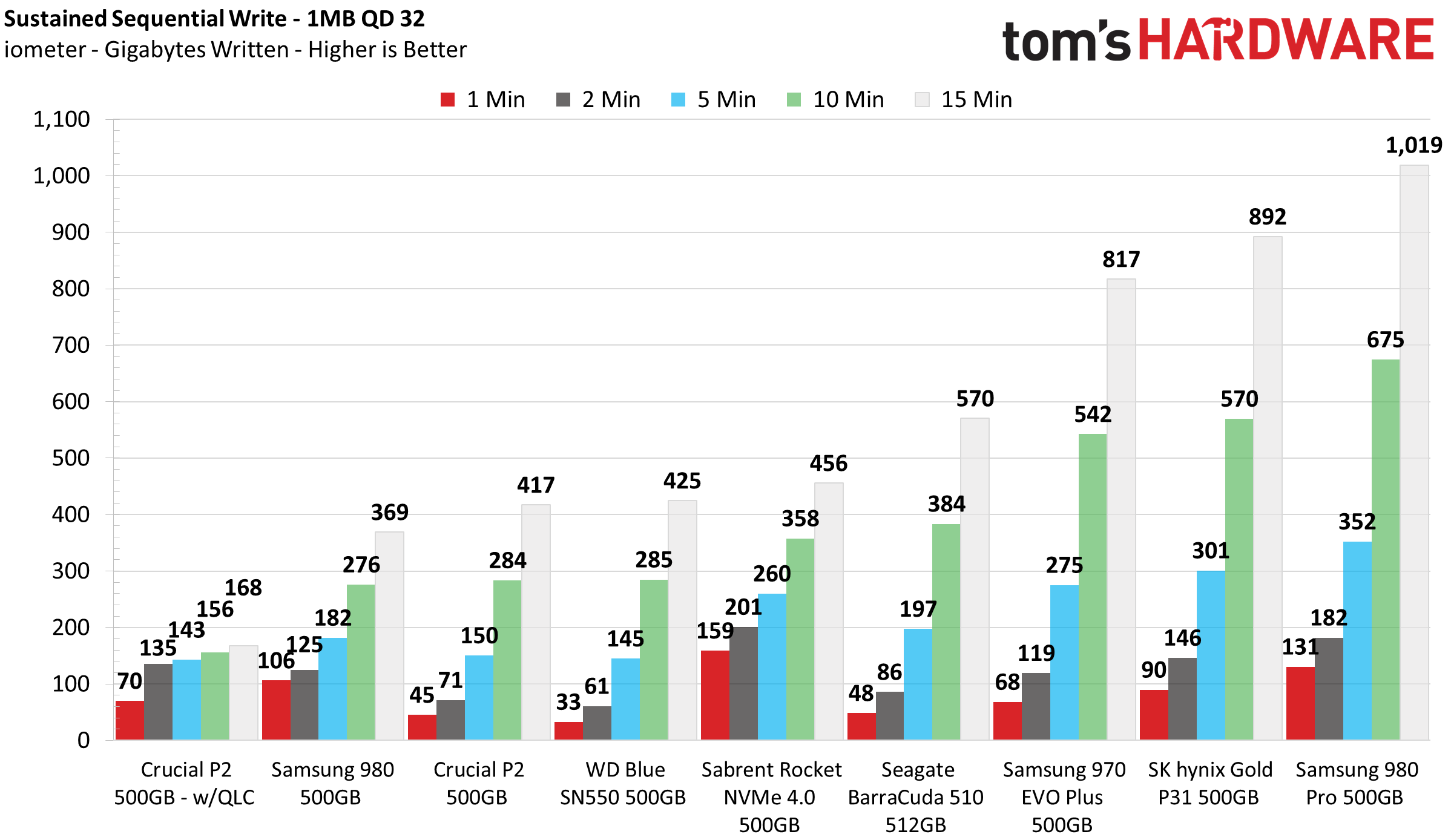

Sustained Write Performance and Cache Recovery

Official write specifications are only part of the performance picture. Most SSD makers implement a write cache, which is a fast area of (usually) pseudo-SLC programmed flash that absorbs incoming data. Sustained write speeds can suffer tremendously once the workload spills outside of the cache and into the "native" TLC or QLC flash. We use iometer to hammer the SSD with sequential writes for 15 minutes to measure both the size of the write cache and performance after the cache is saturated. We also monitor cache recovery via multiple idle rounds.
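One simple way to turn a throughput-over-time log into a cache-size estimate is to add up how much data was written before throughput first falls off a cliff. The Python sketch below assumes a hypothetical per-second log in MBps. The `warmup` window and the 50% `drop_fraction` threshold are arbitrary assumptions, and the code is not how our iometer results are actually analyzed.

```python
from statistics import median
from typing import Optional, Sequence


def estimate_cache_gb(samples_mbps: Sequence[float], interval_s: float = 1.0,
                      warmup: int = 5, drop_fraction: float = 0.5) -> Optional[float]:
    """Estimate data written (decimal GB) before throughput first falls below
    drop_fraction of the early, in-cache median. Returns None if it never drops."""
    baseline = median(samples_mbps[:warmup])
    written_mb = 0.0
    for rate in samples_mbps:
        if rate < drop_fraction * baseline:
            return written_mb / 1000
        written_mb += rate * interval_s
    return None


# Synthetic log: 20 s at 1,000 MBps in cache, then 40 MBps after it fills.
log = [1000.0] * 20 + [40.0] * 10
print(estimate_cache_gb(log))
```

Returning `None` when throughput never drops signals that the cache was larger than the amount of data the test wrote, rather than guessing a size.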

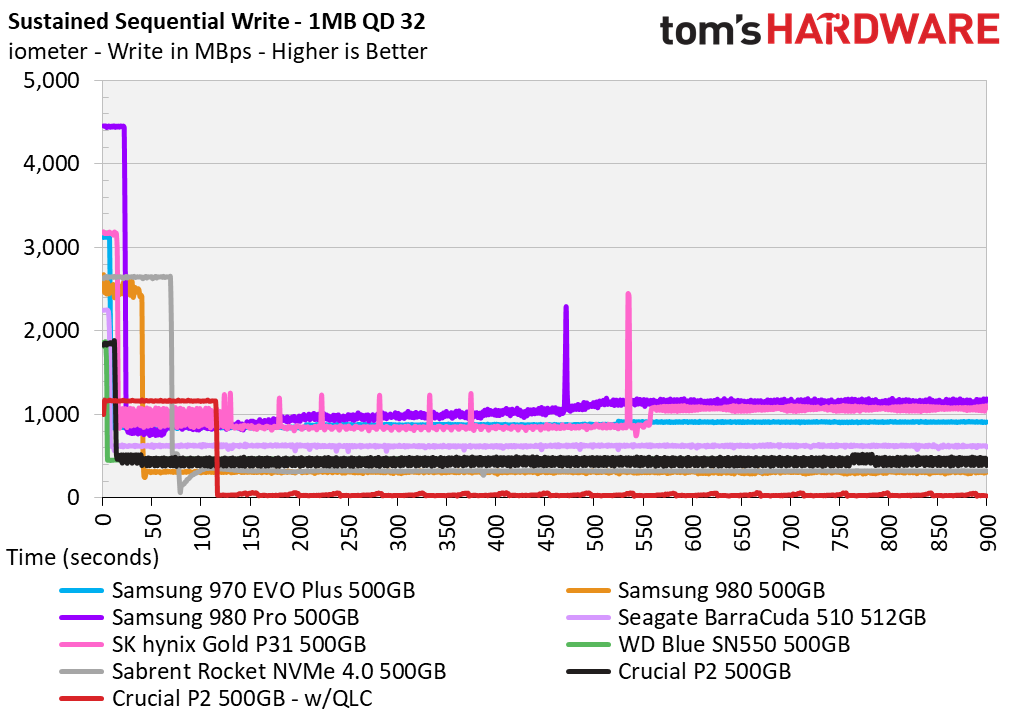

One improvement, at least to some degree, is the larger SLC cache. The TLC variant’s SLC cache measured only 24GB, while the QLC replacement’s cache measured roughly 135GB. Still, even though the cache grew in an attempt to offset the much slower QLC flash, the P2’s write speed degraded severely both inside and outside of the SLC cache. Some of this comes down to the reduced number of flash packages, which hampers interleaving and thus parallelism.

Our TLC-based P2 wrote at roughly 1.85 GBps while the QLC-based P2 wrote at 1.16 GBps before degrading. Once degraded, the TLC variant's sustained write speeds measured roughly 450 MBps, which isn’t great, but acceptable. However, the QLC variant averaged USB 2.0-like speeds of just 40 MBps after the SLC cache was full.
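To see how these figures play out for a single large write, the Python sketch below uses a deliberately simple two-phase model: data first lands in the SLC cache at the in-cache rate, and everything beyond the cache is written at the post-cache rate. The cache sizes and speeds are the ones measured above. The model is our own simplification, and it ignores cache recovery during the write and the way dynamic caches shrink as the drive fills.

```python
def write_time_s(total_gb: float, cache_gb: float, cache_gbps: float,
                 post_cache_gbps: float) -> float:
    """Seconds to write total_gb: first into the SLC cache, then at the post-cache rate.
    Assumes constant rates and no cache recovery during the write."""
    in_cache = min(total_gb, cache_gb)
    spill = max(0.0, total_gb - cache_gb)
    return in_cache / cache_gbps + spill / post_cache_gbps


# Cache sizes and speeds measured in this review for the 500GB P2.
tlc = dict(cache_gb=24, cache_gbps=1.85, post_cache_gbps=0.45)
qlc = dict(cache_gb=135, cache_gbps=1.16, post_cache_gbps=0.040)

for size_gb in (20, 100, 200):
    print(f"{size_gb:>3}GB  TLC {write_time_s(size_gb, **tlc):6.0f} s"
          f"  QLC {write_time_s(size_gb, **qlc):6.0f} s")
```

Under this model, a 100GB write actually finishes sooner on the QLC drive because it fits entirely in the larger cache (about 86 seconds versus about 182). A 200GB write, however, takes more than four times as long (about 1,741 seconds versus about 404).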

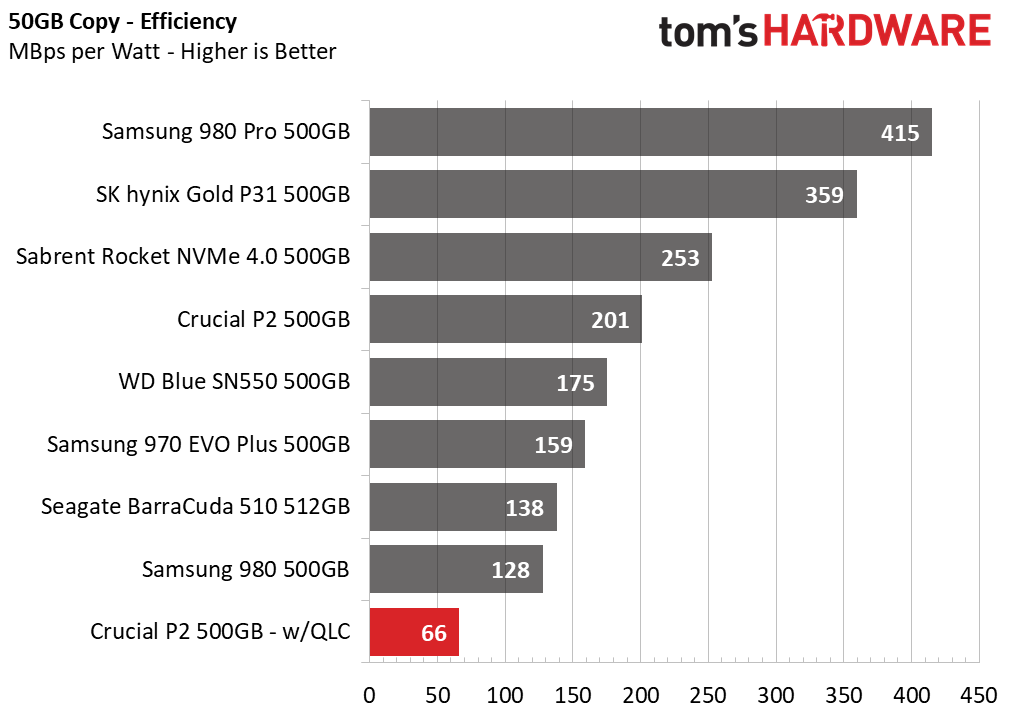

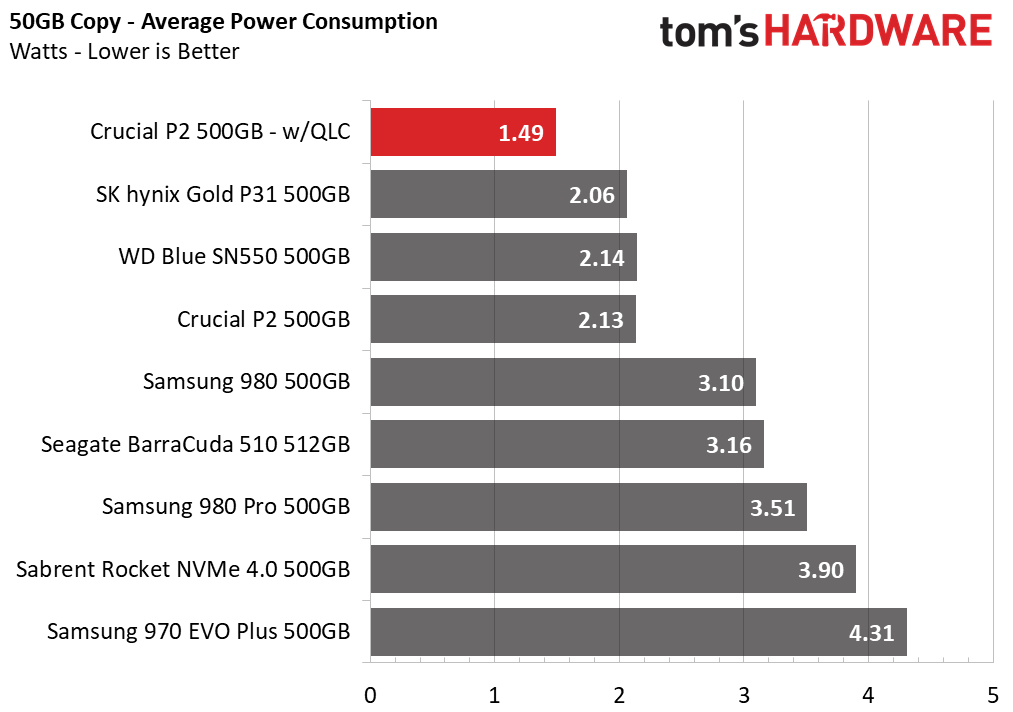

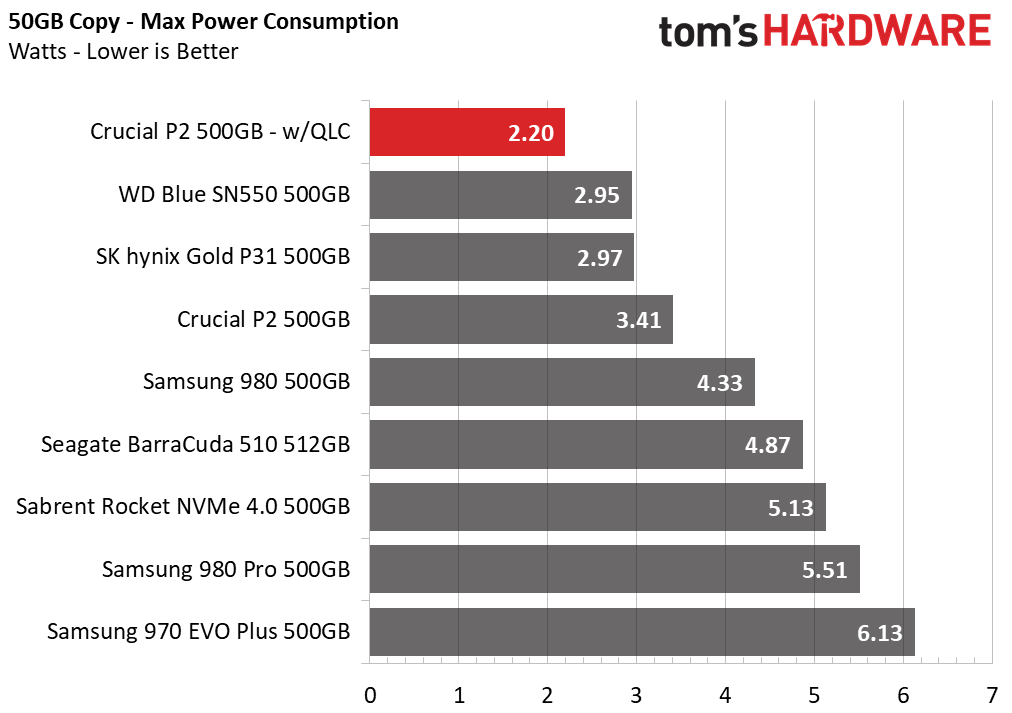

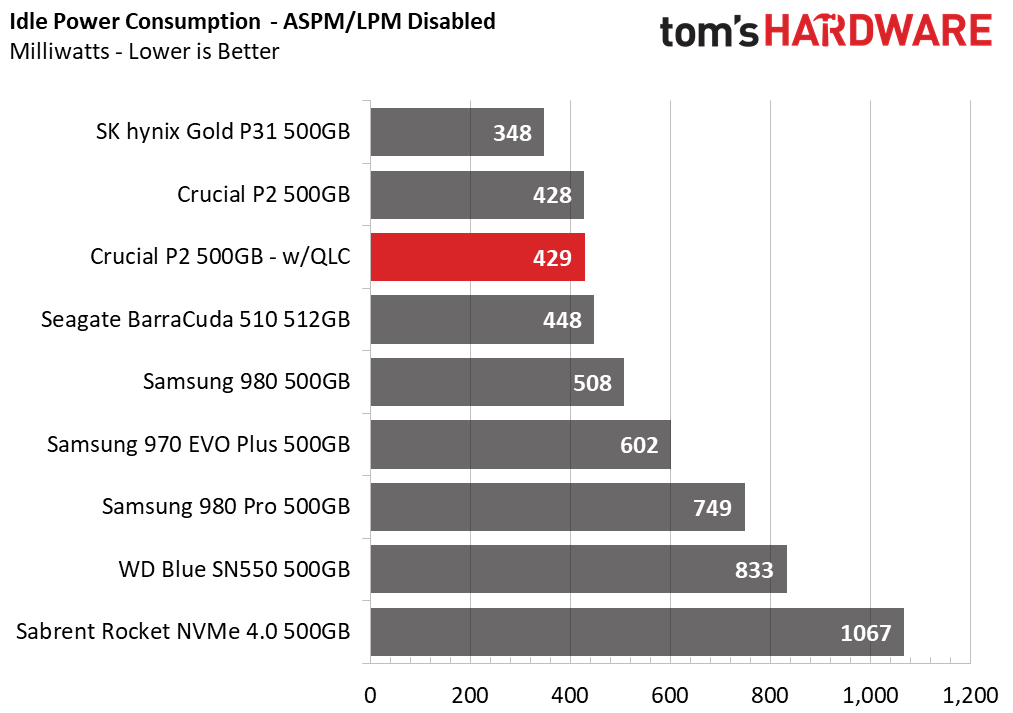

Power Consumption and Temperature

We use the Quarch HD Programmable Power Module to gain a deeper understanding of power characteristics. Idle power consumption is an important aspect to consider, especially if you're looking for a laptop upgrade. Some SSDs can consume watts of power at idle while better-suited ones sip just milliwatts. Average workload power consumption and max consumption are two other aspects of power consumption, but performance-per-watt is more important. A drive might consume more power during any given workload, but accomplishing a task faster allows the drive to drop into an idle state faster, which ultimately saves power.

When possible, we also log the temperature of the drive via the S.M.A.R.T. data to see when (or if) thermal throttling kicks in and how it impacts performance. Bear in mind that results will vary based on the workload and ambient air temperature.

Not only did the P2’s performance go down the drain, but so did its power efficiency. Although its average power consumption during our 50GB file copy test dropped compared to its predecessor, the new QLC-based P2 posts the lowest efficiency in the test pool.
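The trade-off between average power and efficiency can be made concrete with a small energy calculation over a fixed time window. A drive that finishes a job quickly and then idles can spend less total energy than one that draws less power but works longer. The Python sketch below uses two hypothetical drives and made-up wattages, not our measurements. The function names are illustrative.

```python
def energy_joules(active_w: float, active_s: float, idle_w: float, window_s: float) -> float:
    """Energy over a fixed window: the task runs at active_w, then the drive idles."""
    if active_s > window_s:
        raise ValueError("the task must finish inside the window")
    return active_w * active_s + idle_w * (window_s - active_s)


def mb_per_joule(data_mb: float, active_w: float, active_s: float) -> float:
    """Task efficiency: megabytes moved per joule spent while active."""
    return data_mb / (active_w * active_s)


# Hypothetical drives copying 50,000 MB inside a 300 s window (not measured data).
fast = dict(active_w=4.0, active_s=100.0)  # higher power, finishes quickly
slow = dict(active_w=2.5, active_s=250.0)  # lower average power, runs longer
for name, d in (("fast", fast), ("slow", slow)):
    print(name, energy_joules(idle_w=0.05, window_s=300.0, **d),
          mb_per_joule(50_000, **d))
```

Although the slower hypothetical drive draws less power on average, it spends more energy on the same job. That is the same pattern as the QLC P2’s lower average power paired with last-place efficiency.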


Sean is a Contributing Editor at Tom’s Hardware US, covering storage hardware.

-

cryoburner The performance doesn't look particularly good, generally being at the bottom of the barrel among the NVMe drives, and even seeing a 23% longer load time than the SATA-based MX500 at the Final Fantasy test. If it's 23% slower than a SATA drive at actually loading things, and 30% slower than the previous P1 model, then what's the point? Higher sequential transfers in some synthetic benchmarks and file copy tests don't mean much if it's going to perform worse at common real-world operations. I certainly wouldn't say that the drive "beats SATA dollar for dollar" or "delivers SATA-breaking speed, even without DRAM" based on those results.

The indications that QLC flash may be replacing the drive's TLC in a future revision also means the P2 might perform substantially worse than the results shown here, so I hope to see some follow up testing if that ends up being the case. The review says the BX500 did that, and mentions "keeping us up to date" about it, but if I search for BX500 reviews, including the one from Tom's Hardware, they all appear to describe the drive as using TLC and performing better than the drive's specifications would indicate, and no update appears to have been made about the switch to QLC. Sending out faster TLC drives for review, then releasing versions with QLC under the same product name at a later date seems rather shifty.

Under light operation, Crucial’s P2 offers a snappy and responsive user experience that will surpass any SATA SSD. It trades blows with Silicon Power’s P34A60 and even keeps up with the Samsung 970 EVO Plus, yet gets beat in both the Quick and Full System benchmarks by both of the drives. Crucial’s P1, with its DRAM-based architecture, outperforms both the P2 and P34A60. That proves that DRAM-based designs provide the most responsive user experience, even with slower QLC flash.

As far as a "responsive user experience" goes, something tells me that these differences of a few millionths of a second are not going to be perceptible. The difference in latency between the fastest and slowest SSD tested here only amounts to around one ten-thousandth of a second, so I don't see how anyone would notice that when it will take a typical monitor around a hundred times as long to update the image to display the output. Maybe a bunch of these operations added together could make some difference, but then more of the drive's performance characteristics will come into play than just latency, so I don't see how that synthetic benchmark would bear much direct relation to the actual real-world experience. It's fine to show those latency benchmark results, but I don't think direct relations to the user experience can really be drawn from them.

I'd like to see more real-world load time results in these reviews, as that's what these drives will typically be getting used for most of the time. As the Final Fantasy test shows, just because one drive appears multiple times as fast in some synthetic benchmarks or file copy tests, that doesn't necessarily translate to better performance at actually loading things. Practically all of the synthetic benchmarks show the P2 being substantially faster than an MX500 SATA drive, but when it comes to loading a game's files, it ends up being noticeably slower by a few seconds. Is that result a fluke, or are these synthetic tests really that out-of-touch with the drive's real-world performance? These reviews should measure other load times of common applications, games and so on, and not just rely primarily on pre-canned and synthetic benchmarks that seem to be at odds with the one real-world loading test.

Also, I'd like to see test results for drives that are mostly full. Does the real-world performance tank if the drive is 75% or 90% full, and less space is dedicated to the SLC cache? These benchmark results don't really provide any good indication of that. The graphs showing how much performance drops once the cache is filled are nice, but the size of that cache will typically change as the drive is filled. A mostly-full drive may only have a handful of gigabytes of cache that gets filled even with moderately-sized write operations.

The P2 lacks DRAM, meaning that it doesn’t have a fast buffer space for the FTL mapping tables. Instead, the P2, like most modern DRAMless NVMe SSDs, uses NVMe’s Host Memory Buffer feature. With it, the SSD can use a few MB of the host system’s memory to provide snappier FTL access, which improves the feeling of ‘snappiness’ when you use the drive.

A "few MB" is kind of vague. How much system RAM is it actually using? The Crucial P1 had 1GB of DRAM onboard for each 1TB of storage capacity. If the P2 is using 1GB of system RAM for the same purpose, then that's a hidden cost not reflected by the price of the drive itself. This might be especially relevant if one were adding such a drive to a system with just 8GB of RAM. And even on a system with 16GB, that could become more of a concern within a few years as RAM requirements rise for things like games. If one ends up needing to upgrade their RAM sooner due to DRAMless drives consuming a chunk of it, then the cost savings of cutting that out of the drive itself seems questionable, especially given the effects on performance.

And for that matter, it seems like the performance of system RAM could affect test results more than it does on drives with their own onboard RAM. I'm curious whether running system RAM at a lower speed, or perhaps on a Ryzen system with different memory latency characteristics could affect the standings for these DRAMless drives. The use of system RAM also undoubtedly affects the power test results as well. This drive appears to be among the most efficient models, but is the system RAM seeing higher power draw during file operations in its place?

seanwebster

Alvar Miles Udell said: The Intel 660p 512GB drive should have been included as well...

Unfortunately, I don't have one. But the P1 is very close in performance, so close that it is almost a substitute if you want to see how the 660p 512GB may perform.

cryoburner said: The performance doesn't look particularly good, generally being at the bottom of the barrel among the NVMe drives, and even seeing a 23% longer load time than the SATA-based MX500 at the Final Fantasy test. If it's 23% slower than a SATA drive at actually loading things, and 30% slower than the previous P1 model, then what's the point? Higher sequential transfers in some synthetic benchmarks and file copy tests don't mean much if it's going to perform worse at common real-world operations. I certainly wouldn't say that the drive "beats SATA dollar for dollar" or "delivers SATA-breaking speed, even without DRAM" based on those results.

The indications that QLC flash may be replacing the drive's TLC in a future revision also means the P2 might perform substantially worse than the results shown here, so I hope to see some follow up testing if that ends up being the case. The review says the BX500 did that, and mentions "keeping us up to date" about it, but if I search for BX500 reviews, including the one from Tom's Hardware, they all appear to describe the drive as using TLC and performing better than the drive's specifications would indicate, and no update appears to have been made about the switch to QLC. Sending out faster TLC drives for review, then releasing versions with QLC under the same product name at a later date seems rather shifty.

The updates I'm referring to typically come in news posts, or, if I'm sampled the updated device, in an update to the review. I wasn't aware of the BX500 QLC swap until recently, the week I wrote this review, and I hadn't had a moment to notify the team about it until the other day, though I think I saw a post about it somewhere at one point.

As far as a "responsive user experience" goes, something tells me that these differences of a few millionths of a second are not going to be perceptible. The difference in latency between the fastest and slowest SSD tested here only amounts to around one ten-thousandth of a second, so I don't see how anyone would notice that when it will take a typical monitor around a hundred times as long to update the image to display the output. Maybe a bunch of these operations added together could make some difference, but then more of the drive's performance characteristics will come into play than just latency, so I don't see how that synthetic benchmark would bear much direct relation to the actual real-world experience. It's fine to show those latency benchmark results, but I don't think direct relations to the user experience can really be drawn from them.

After toying with hundreds of SSDs, I notice the difference in responsiveness between SATA and PCIe SSDs in day-to-day use. It's slight but noticeable, especially when launching apps after boot and moving a bunch of files around. You may not be able to draw conclusions by looking only at synthetics, but that doesn't mean one can't.

The iometer and ATTO synthetic data are just a few of the data points I look at when analyzing performance, but some relationships and patterns in these metrics do carry over to real-world performance. After analyzing the strengths and weaknesses of many SSD architectures, their performance scores, and their behavior in the same system, one can start linking synthetic differences between devices to differences in real-world experience. These results are also included to validate manufacturer performance ratings. Real-world benchmarks can't do that, which is why I include the synthetics as supporting evidence to complement the real-world data.

I'd like to see more real-world load time results in these reviews, as that's what these drives will typically be getting used for most of the time. As the Final Fantasy test shows, just because one drive appears multiple times as fast in some synthetic benchmarks or file copy tests, that doesn't necessarily translate to better performance at actually loading things. Practically all of the synthetic benchmarks show the P2 being substantially faster than an MX500 SATA drive, but when it comes to loading a game's files, it ends up being noticeably slower by a few seconds. Is that result a fluke, or are these synthetic tests really that out-of-touch with the drive's real-world performance? These reviews should measure other load times of common applications, games and so on, and not just rely primarily on pre-canned and synthetic benchmarks that seem to be at odds with the one real-world loading test.

The only synthetic tests that I use are iometer and ATTO. PCMark 10 and SPECworkstation 3 are trace-based benchmarks that test the SSD directly against multiple real-world workloads that cater to their respective consumer and prosumer market segments.

Final Fantasy shows just a second or two of difference for a few reasons. Most SSDs are similarly responsive in this one workload simply because it isn't a demanding one; it is really a rather light read test. Overall, game data loading is so well optimized for HDDs that when you replace the HDD with an SSD, most SSDs will load the few hundred MB of data per game scene in roughly the same time, since it's such a small transfer.

The fastest SSDs respond faster to random and sequential requests thanks to lower read request latency, so they rank ahead of slower ones here; those few hundredths of a millisecond add up to show the difference. Ideally, I need a larger, more graphically demanding game benchmark, a better GPU, and a 4K monitor to get a larger performance delta between drives. Different games and resolution settings will perform differently. I use Final Fantasy's benchmark because it is the only one I know of that saves load time data. I wish more developers would include load times in their game benchmarks. If you have any recommendations, I'm all ears!

Also, I'd like to see test results for drives that are mostly full. Does the real-world performance tank if the drive is 75% or 90% full, and less space is dedicated to the SLC cache? These benchmark results don't really provide any good indication of that. The graphs showing how much performance drops once the cache is filled are nice, but the size of that cache will typically change as the drive is filled. A mostly-full drive may only have a handful of gigabytes of cache that gets filled even with moderately-sized write operations.

Ah yes, more write cache testing, my favorite! I could do more, and it would be cool to include, but it isn't worth doing at this time. Most dynamic SLC write caches shrink as the drive fills up, but most still perform well. I already perform all my testing on SSDs that are 50% full and running the current OS (except the write cache testing, which is done on an empty drive after a secure erase when possible). Most of the time, even though the cache shrinks, the drive is still as responsive as when it was empty; because the write cache is smaller, only larger transfers are impacted.

A "few MB" is kind of vague. How much system RAM is it actually using? The Crucial P1 had 1GB of DRAM onboard for each 1TB of storage capacity. If the P2 is using 1GB of system RAM for the same purpose, then that's a hidden cost not reflected by the price of the drive itself. This might be especially relevant if one were adding such a drive to a system with just 8GB of RAM. And even on a system with 16GB, that could become more of a concern within a few years as RAM requirements rise for things like games. If one ends up needing to upgrade their RAM sooner due to DRAMless drives consuming a chunk of it, then the cost savings of cutting that out of the drive itself seems questionable, especially given the effects on performance.

Unfortunately, I don't have tools that tell me exactly how much memory each drive uses, and manufacturers won't always disclose specifics... well, I might have a tool, but I haven't had a chance to explore it yet. On the drives I have tested with HMB where the RAM usage was disclosed to me, it has been set to around 32-128MB. However, based on some discussion with a friend, we think it could be up to 2GB-4GB based on the spec's data.

And for that matter, it seems like the performance of system RAM could affect test results more than it does on drives with their own onboard RAM. I'm curious whether running system RAM at a lower speed, or perhaps on a Ryzen system with different memory latency characteristics could affect the standings for these DRAMless drives. The use of system RAM also undoubtedly affects the power test results as well. This drive appears to be among the most efficient models, but is the system RAM seeing higher power draw during file operations in its place?

All testing is currently done on an Asus ROG Crosshair VIII Hero (Wi-Fi) X570 platform with a Ryzen 5 3600X running at 4.2GHz all-core and a kit of DDR4-3600 CL18. I actually share that suspicion and have been planning to get a faster kit of RAM to test how it influences both DRAMless and DRAM-based SSD performance.

MeeLee Price looks good. But I prefer to keep PCIe lanes open for things that really need them, like GPUs, and run an SSD from a SATA port.

cryoburner

seanwebster said: The only synthetic tests that I use are iometer and ATTO. PCMark 10 and SPECworkstation three are trace-based that test the SSD directly against multiple real-world workloads that cater to their respective consumer and prosumer market segments.

Yeah, I know those test suites are based on real-world software, but it's a bit vague exactly what operations each test is based on and how those apply to different workloads in the real world, so they might as well be synthetics. With CPU and graphics card reviews, you tend to see much more of a focus on testing actual software in ways that make it relatively clear what each test is comprised of.

seanwebster said: Final fantasy shows just a second or two difference because of a few reasons. Most SSDs are similarly responsive to this one workload simply because it isn't a demanding one, it is a rather light read test really. Overall, the game data loading process is so well optimized for HDD usage that when you replace the HDD with the SSD, most will load the few hundred GB of data per game scene at relatively the same time since its such a small transfer.

The Final Fantasy test usually seems to be relatively in line with typical measurements of NVMe vs SATA vs HDD performance as far as game loading is concerned, but the results for this drive seem a bit off, with it not even matching the MX500, despite practically all the other benchmarks in the review saying it should perform better. Maybe it's a result of being DRAMless? If the game is heavily utilizing the CPU and RAM during the loading process, perhaps it's fighting with the drive for access to system memory? It might be something worth investigating further.

seanwebster said: I use Final Fantasy's benchmark because it is the only one I know of that saves load time data. I hope more devs could include load times in their game benchmarks. If you have any recommendations, I'm all ears!

I'm not really sure about any demanding games that include load time readouts. However, there could be other methods. For example, recording video of games loading with an external capture device and using the resulting video file to check how long loading took, though I can see how that might make testing a bit inconvenient. Or maybe just using a graph of disk access to check that, which would likely work reasonably well for at least some titles. There wouldn't necessarily need to be a lot of tests, but having a few might prevent any one from making a particular drive appear better or worse than might typically be the case.

seanwebster

cryoburner said: Yeah, I know those test suites are based on real-world software, but it's a bit vague exactly what operations each test is based on and how those apply to different workloads in the real world, so they might as well be synthetics. With CPU and graphics card reviews, you tend to see much more of a focus on testing actual software in ways that make it relatively clear what each test is comprised of.

They are based on real-world system interaction, and the traces are exactly the same on each SSD. It is up to the storage device to differentiate itself when running those identical workloads. There is more detailed data in the results, but it isn't worth the time to graph out all the details, and most of that data follows the rankings already graphed anyway. To learn more, you can read the benchmark's technical guide for detailed information on how the tests run and how scoring is calculated. The storage tests are near the bottom of the guide.

https://s3.amazonaws.com/download-aws.futuremark.com/pcmark10-technical-guide.pdf

The Final Fantasy test usually seems to be relatively in line with typical measurements of NVMe vs SATA vs HDD performance as far as game loading is concerned, but the results for this drive seem a bit off, with it not even matching the MX500, despite practically all the other benchmarks in the review saying it should perform better. Maybe its a result of being DRAMless? If the game is heavily utilizing the CPU and RAM during the loading process, perhaps it's fighting with the drive for access to system memory? It might be something worth investigating further.

Yes, entirely so. The firmware and HMB implementation on Phison's E13T are optimized for write performance, while Silicon Motion's SM2263XT firmware and HMB implementation are optimized for reading. Going from the SSD controller through the PCIe lanes to host memory for the buffer, and then back to the controller, adds a good deal of latency. That is why the write-optimized E13T can't keep up in read tasks: it is better prepared to respond to write requests than to read requests.

logainofhades

MeeLee said: Price looks good. But I prefer to keep PCIe lanes open for things that really need it, like GPUs, and run an SSD from the SATA port.

An M.2 PCI-E drive is not going to affect your top GPU slot unless the board has a very weird configuration. Typically, an M.2 drive will disable SATA ports or PCI-E slots that most people never really use much anyway. SLI/CF is dead as well.

MeeLee

logainofhades said: An M.2 PCI-E drive are not going to affect your top GPU slot, unless said board has a very weird configuration. Typically an M.2 will disable sata ports, or PCI-E slots that most people never really use much anyway. SLI/CF is dead, as well.

I run multiple GPUs, so PCIe lanes are still in short supply.

For my purposes, I prefer PCIe 3.0 x8 slots, but I can make do with x4 lanes too.

I use 3 GPUs, the max the motherboard supports.

On an MSI MPG board I have, that means an x8/x8/x4 configuration, or 20 of the 24 lanes.

On other boards, I can only do x8/x4/x4 (or 16 lanes).

In both cases, that leaves either zero or four lanes for (M.2) PCIe SSDs.

logainofhades The top M.2 slot gets its lanes from the CPU, at least on the AMD side of things. You get 16 lanes for the GPU, 4 for M.2 NVMe storage, and 4 that connect to the chipset. Any other M.2 slot is going to get its PCI-E lanes from the chipset. I am not aware of any current board that has a PLX chip for more lanes. Beyond the top GPU slot and top M.2 slot, which lanes feed which M.2 and GPU slots depends on the chipset and on how the board manufacturer decided to allocate the lanes they have to work with.

occational_gamer Buyer beware: Crucial is now using slower QLC flash without any change in model numbers. Tom's re-reviewed the drive and changed its verdict to "do not recommend."

Updated Review: https://www.tomshardware.com/features/crucial-p2-ssd-qlc-flash-swap-downgrade