Nvidia GeForce GTX 650 And 660 Review: Kepler At $110 And $230

We have two new graphics cards in the lab today: Nvidia's GeForce GTX 650 and 660, filling the gap between its GeForce GT 640 and GTX 660 Ti with Kepler derivatives. Are these GK107- and GK106-based boards able to challenge the Radeon HD 7750 and 7850?

Memory Bandwidth: Analysis And Summary

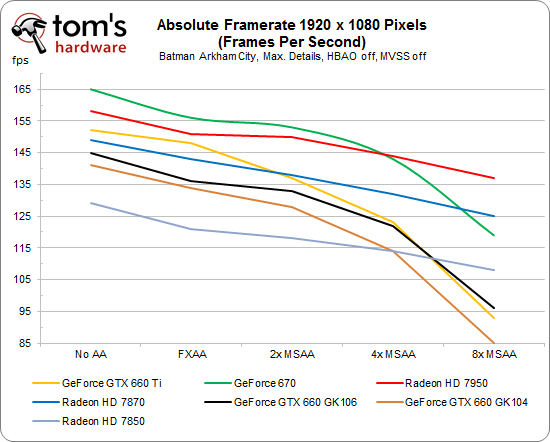

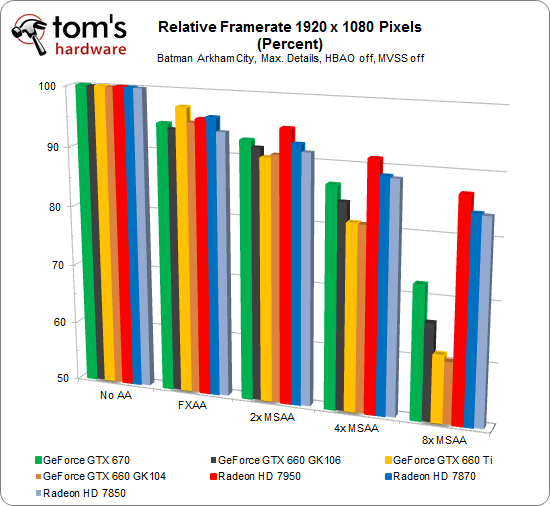

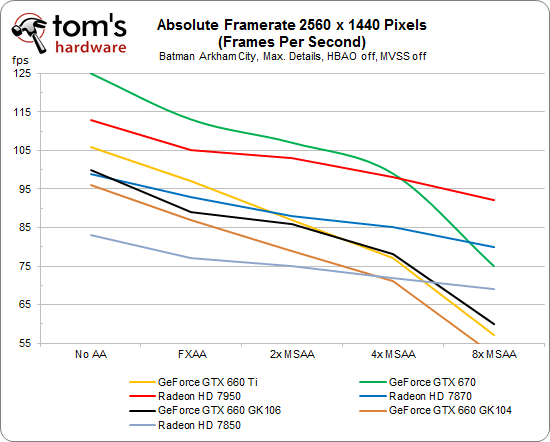

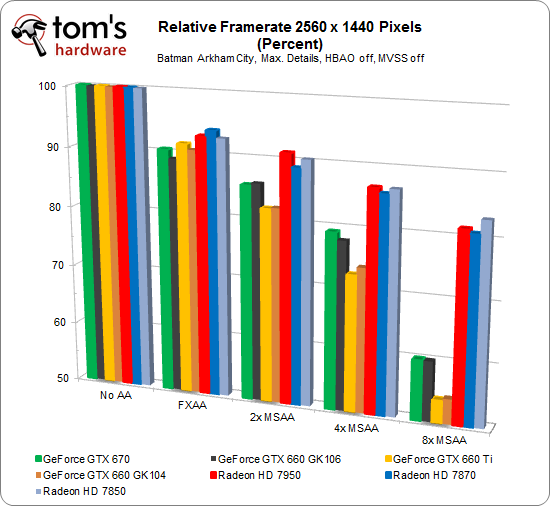

First, we plotted the frame rate achieved by each card. Then we used each card's result without anti-aliasing as its 100% reference point and expressed its results at the other settings as a percentage of that figure. This shows how much of their original performance the cards lose when anti-aliasing is applied and no CPU or GPU limitation is in play.
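The normalization described above is simple arithmetic: each setting's frame rate divided by the same card's no-AA frame rate, times 100. The minimal Python sketch below illustrates it. The function name and the frame rates in the usage example are hypothetical, not measured results from this review.

```python
def relative_performance(fps_by_setting: dict[str, float],
                         baseline: str = "No AA") -> dict[str, float]:
    """Express each setting's frame rate as a percentage of the baseline setting."""
    base = fps_by_setting[baseline]
    if base <= 0:
        raise ValueError("baseline frame rate must be positive")
    # The baseline itself always maps to 100%; other settings scale relative to it.
    return {setting: 100.0 * fps / base for setting, fps in fps_by_setting.items()}


# Hypothetical frame rates for one card, NOT measured results from this review
example = {"No AA": 80.0, "4x MSAA": 60.0, "8x MSAA": 40.0}
print(relative_performance(example))
```

A card whose percentages drop steeply at 4x and 8x MSAA is losing more of its original performance than one whose percentages stay close to 100%.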

Performance at 1920x1080

Performance at 2560x1440

The varied benchmark results published by different sites show that, in many games, more demanding presets largely conceal the bandwidth disadvantage of the GeForce GTX 660 and 660 Ti. Because its GPU is inherently less powerful, though, the GeForce GTX 660 is less likely than the 660 Ti to run into its memory bottleneck.
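Theoretical peak memory bandwidth follows from the width of the memory interface and its effective data rate: bytes per transfer (bus width divided by 8) times transfers per second. The sketch below assumes reference memory specifications as we understand them (a 192-bit bus at 6,008 MT/s for the GeForce GTX 660 and 660 Ti, and a 256-bit bus at 4,800 MT/s for the Radeon HD 7850 and 7870). Partner cards may ship with different memory clocks, so treat these inputs as assumptions to check against vendor spec sheets.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_mts: float) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal, 10**9 bytes/s).

    bus_width_bits: memory interface width, e.g. 192 or 256
    data_rate_mts:  effective transfer rate in megatransfers per second
    """
    bytes_per_transfer = bus_width_bits / 8
    # bytes/transfer * MT/s = MB/s; divide by 1000 to get GB/s
    return bytes_per_transfer * data_rate_mts / 1000


# Assumed reference specs (bus width in bits, effective MT/s); verify per card
cards = {
    "GeForce GTX 660 / 660 Ti": (192, 6008),
    "Radeon HD 7850 / 7870": (256, 4800),
}

for name, (width, rate) in cards.items():
    print(f"{name}: {peak_bandwidth_gbs(width, rate):.1f} GB/s")
```

Under these assumptions, the narrower 192-bit interface ends up with less peak bandwidth despite its faster memory, which is the gap that heavier anti-aliasing exposes.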

What about the relationship between the GK104- and GK106-based GeForce GTX 660s? Both slot in somewhere behind AMD's Radeon HD 7870, and the gap between the two 660s widens as anti-aliasing becomes more demanding.

Four observations surprised us. First, AMD's Radeon boards scaled very linearly as we increased AA settings. Second, it's impressive to see the GeForce cards hold their own, even at higher resolutions, provided they're not forced to contend with 4x MSAA or more. Third, two Radeon HD 7750s in CrossFire were able to outpace the GeForce GTX 660 and 660 Ti. Finally, we were surprised to dig up an OEM card based on a pared-down GK104 GPU, although we weren't pleased with what we found: a higher shader count doesn't compensate for a lower core clock. So, without overclocking, the board Nvidia sells to OEMs turns out to be slower than retail cards with the same name.

So what does this tell us?


Nvidia's GeForce GTX 660 is most competitive when its GPU is taxed, deemphasizing its narrower memory interface. At stock settings, the OEM-only GK104-based GeForce GTX 660 is considerably slower than the GK106-based retail model. Not surprisingly, then, we have to take serious issue with Nvidia’s naming scheme, since buying a tier-one box with a GeForce GTX 660 is going to stick you with a much less powerful product and no intuitive way to see that.

Does the same handicap apply in compute-oriented workloads?


Don Woligroski is a former senior hardware editor for Tom's Hardware. He has covered a wide range of PC hardware topics, including CPUs, GPUs, system building, and emerging technologies.

EDVINASM Was waiting for GTX 650 to see if it can beat the old GTX 550 Ti, but it seems that other than power draw, it's no match. Might as well keep my GPU until next NVidia lineup. GTX 660 on the other hand is only €50 cheaper than GTX 660 Ti, meaning it's not much of a budget saver to buy the non-Ti version. Fail...

mikenygmail Buy a 7770 or 7870 instead.

Wait for sales on whichever one is needed and then grab one.

AMD 7770 can be had for just over $100.

AMD 7870 can be had for about $220.

lahawzel Goddamn Mike NY Gmail or whatever the hell your name is supposed to be, here's proper commenting etiquette:

1. Read the article.

2. Understand what the article is talking about.

3. If you find an urge to comment about "______ sucks" or "_______ wins again", especially when the article says the opposite of what you want to post, chances are your comment will look dumb as hell when it's posted and earn you 20 downvotes. Therefore, don't post that goddamn poor excuse of a "comment".

tomfreak How "nice" of u tomshardware. By only comparing 7750/7770 vs 650 in high detail but not comparing 7750/7770 on the Ultra detail, then when u pull out a 460 SE/9800GT for benchmark, u are taking away 650 (why?).

Is it because 650 performance is too poor to show off on benchmark? It doesn't take a genius to figure out the huge diff between 6870 vs 650. 7770 = 6850 speed. So I guess even the 7750/460SE are putting shame on 650 on those high quality detail? Too shy to show off 460SE/9800GT up against 650?

I dare u put on a detailed benchmark with 650 up against 7770/7750/GTS450/550ti/460/9800GT/9800GTX on all conditions. Not a selective benchmark.

Why are there giant gaps in both lineups? AMD has the 7770 at $130 and 7850 at $230; nvidia has the 650 and 660 at similar price points. Ideal for my budget would be something in the $150-170 price range, but I either have to compromise or shell out more. It seems like an obvious market gap.

mikenygmail (replying to LaHawzel: "God damn Mike NY") Thanks for the attempted compliment, but call me Mike. I'm glad you've been paying attention.

It was more of a joke than anything else to simply write "AMD wins again!" and it was actually pretty funny! I try to balance things out so that no one company is viewed too favorably.

For example, I recently bought an Nvidia GTX 460 1 GB 256 bit card for $70, new, with a 3 month warranty for a friend to upgrade his gaming computer. Unusual? Yes. Great deal? You better believe it! Of course, if an equivalent AMD card was available at a cheaper price, that's the one I would've bought.

Now, relax and try to control yourself. Refrain from the use of profanity in future posts. Thanks.

EzioAs Nice article to be honest. I'm really glad you tested the Radeon cards with the new driver, compared to other review sites.

I've got nothing else to say on the GTX650 but to just point out that it's a weak card.

On the other hand, the GTX660 is probably the only Kepler card (besides the 670) that impresses me. I don't know about everyone else, though. To point out one thing, most Radeon 7870s can be found at $240 or lower without MIR. The GTX660 is priced well for a release MSRP and makes the 660 Ti offer less value, kind of like the 670 vs. 680. At 8xMSAA, performance does take a big hit, but I think at this price point, most people are going to stay with 4xAA or possibly lower.

mikenygmail (replying to EzioAs)

Exactly - Savvy TH readers will wait for sales on whichever one is needed and then grab one!

AMD 7770 can be had for just over $100 on sale.

AMD 7870 can be had for about $220 on sale.