GeForce GTX Titan X Review: Can One GPU Handle 4K?

Results: Temperature, GPU Boost And Noise

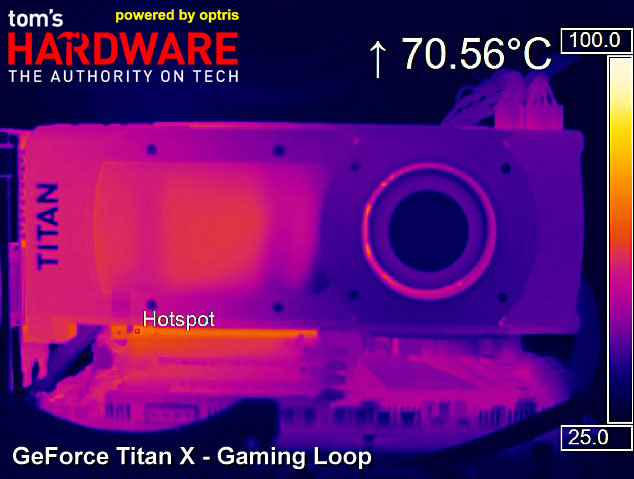

Temperatures and Infrared Measurements

To get precise infrared thermal measurements, we use either a special tape or a lacquer with known emissivity. The transparent paint that board manufacturers use for so-called tropicalizing (conformal coating) is well-suited for analyzing the backs of PCBs and other surfaces with unknown emissivity. The largest measurement errors occur with ordinary infrared thermometers, whose measurement spots are invariably too large to capture individual VRM pins. This is doubly true if the emissivity can't be set exactly.

This is why we use an Optris PI450 thermal imaging camera. It not only provides high-resolution images in real time but also lets us define every measurement point exactly.
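To show why a known emissivity matters so much, here is a minimal sketch of the textbook gray-body correction. It assumes a simplified total-radiation (Stefan-Boltzmann) model and a reading taken with the instrument's emissivity left at 1.0. Real cameras such as the PI450 measure in a limited spectral band, so this is an approximation rather than the camera's actual algorithm, and the function name and the 25 °C reflected temperature are illustrative assumptions.

```python
"""Simplified gray-body emissivity correction (illustrative only)."""


def true_temp_c(apparent_c: float, emissivity: float, reflected_c: float = 25.0) -> float:
    """Recover surface temperature from a reading taken with emissivity set to 1.0.

    Assumes a broadband Stefan-Boltzmann model: the sensor sees
    eps * T_obj^4 + (1 - eps) * T_refl^4 and interprets it as a blackbody.
    reflected_c (temperature of the surroundings) is an assumed default.
    """
    if not 0.0 < emissivity <= 1.0:
        raise ValueError("emissivity must be in (0, 1]")
    t_app = apparent_c + 273.15   # apparent temperature in kelvin
    t_refl = reflected_c + 273.15  # reflected ambient temperature in kelvin
    # Remove the reflected share of the radiation, then undo the emissivity scaling.
    t_obj4 = (t_app ** 4 - (1.0 - emissivity) * t_refl ** 4) / emissivity
    return t_obj4 ** 0.25 - 273.15


if __name__ == "__main__":
    # Same 60 °C reading, interpreted for a hypothetical surface with emissivity 0.9.
    print(round(true_temp_c(60.0, 0.9), 1))
```

In this toy model, a 60 °C reading on a surface whose real emissivity is 0.9 corresponds to roughly 63 °C, so an unknown emissivity alone can hide several degrees, before the spot-size problem even comes into play.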

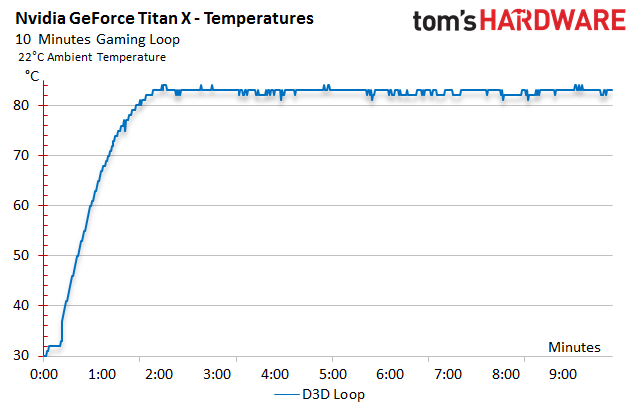

First, we look at the GeForce Titan X's temperatures in a closed PC case during a gaming workload. The temperature target of 83 degrees Celsius is reached quickly but never exceeded.

GPU Boost Frequency

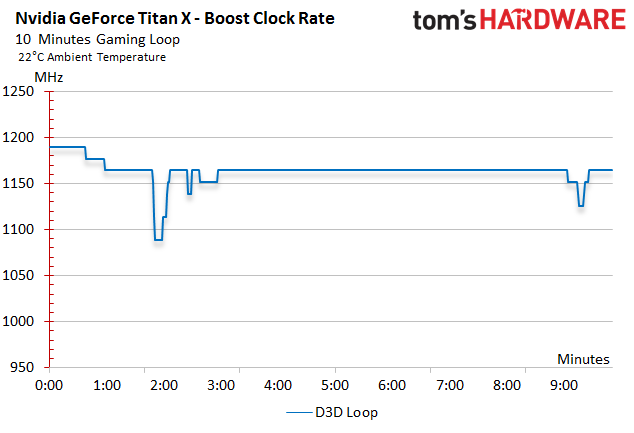

Plotting frequency and temperature over time shows that the GPU Boost clock of 1190MHz drops as soon as the thermal target is reached, stepping down several times before stabilizing at 1164MHz. Shorter, shallower dips occur whenever the graphics card's workload rises enough to threaten pushing the temperature past the target.
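The stepping behavior can be illustrated with a toy model of thermal-target throttling: the clock steps down one boost bin at a time until the modeled steady-state temperature no longer exceeds the target. This is not Nvidia's GPU Boost algorithm. The 13MHz bin size is an assumption (two such bins match the 26MHz gap between 1190 and 1164MHz), and the linear temperature model, its parameters, and all function names are hypothetical, picked only so the sketch lands where our measurement did.

```python
"""Toy model of thermal-target clock throttling (illustrative only)."""
from __future__ import annotations

BIN_MHZ = 13          # assumed boost-bin size; not stated in the article
MAX_BOOST_MHZ = 1190  # highest GPU Boost clock observed in the article
TARGET_C = 83.0       # GM200 temperature target from the article


def steady_state_temp(clock_mhz: int, load: float = 1.0,
                      ambient_c: float = 40.0, c_per_mhz: float = 0.0367) -> float:
    """Hypothetical linear model: equilibrium temperature rises with clock and load.

    ambient_c and c_per_mhz are made-up parameters, not measured values.
    """
    return ambient_c + c_per_mhz * clock_mhz * load


def throttle_steps(load: float = 1.0, min_clock_mhz: int = 1000) -> list[int]:
    """Step down one bin at a time until the modeled temperature fits the target."""
    clock = MAX_BOOST_MHZ
    steps = [clock]
    while steady_state_temp(clock, load) > TARGET_C and clock - BIN_MHZ >= min_clock_mhz:
        clock -= BIN_MHZ
        steps.append(clock)
    return steps


if __name__ == "__main__":
    print(throttle_steps())           # sustained gaming load: [1190, 1177, 1164]
    print(throttle_steps(load=1.02))  # heavier load settles at a lower bin
```

A heavier load in this model settles at a lower bin, which loosely mirrors the brief extra dips seen when the workload spikes; the real controller also reacts to power and voltage limits that the sketch ignores.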

Temperatures after Prolonged Operation

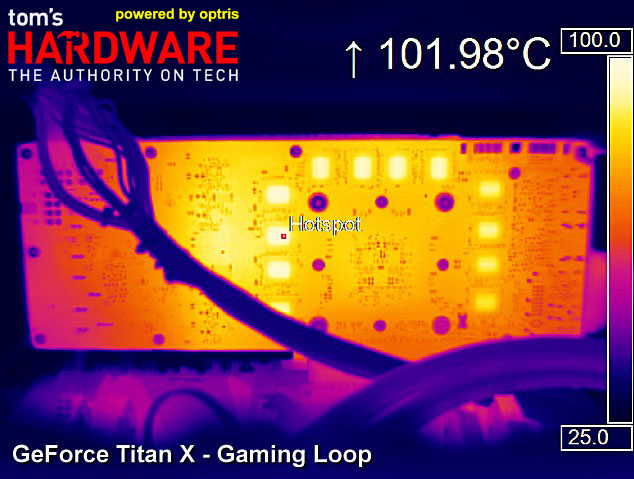

No backplate, you ask? Most backplates on today's gaming graphics cards are useless eye candy anyway, unless they are specifically engineered to help with cooling. To improve airflow in multi-card configurations, Nvidia doesn't equip its GeForce Titan X with one. So what happens to the memory modules on the back of the board, which receive no cooling whatsoever?

There's no question that 102 degrees Celsius is more than you'd want to see over the long term. Although we understand Nvidia's rationale, a backplate might still have been a smart choice for this particular card.

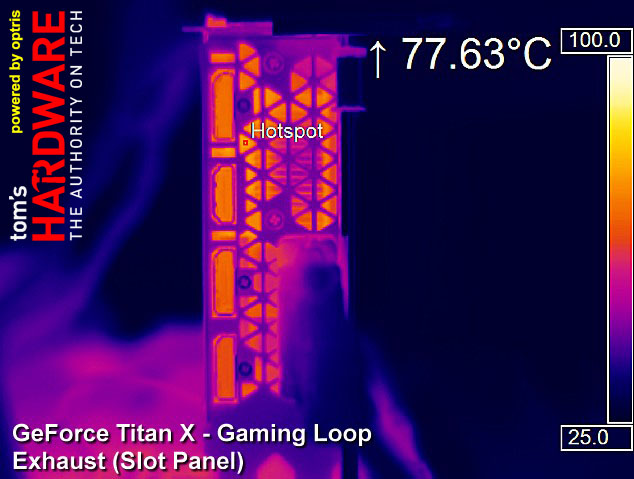

Nvidia's approach of exhausting waste heat through the graphics card's I/O panel is well-executed. Where the heated air leaves the card, we measure between 78 and 80 degrees Celsius, with the reading fluctuating slightly within that range.


The GeForce Titan X’s front doesn’t have any thermal problems. Its hottest spot is the bare PCB, which is only visible around the PCI Express interface.

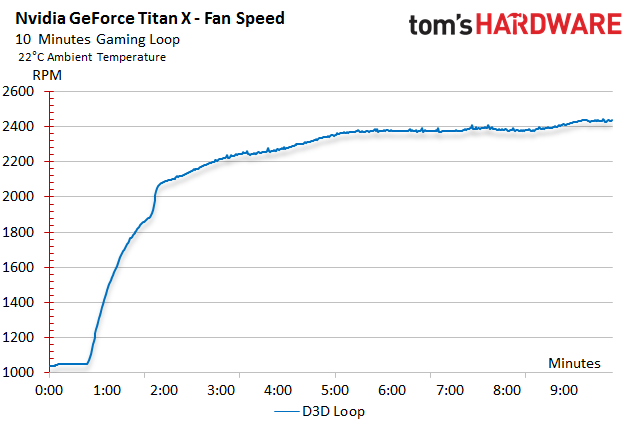

Fan Speed

The fan reaches its peak speed after only nine minutes in our closed be quiet! Silent Base 800 PC case. After that, its rotational speed stays essentially constant until the graphics load lightens. Nvidia's thermal solution is tasked with keeping GM200 at or below 83 degrees Celsius, which pushes the fan to 2460 RPM during gaming at an average power consumption of 224W.

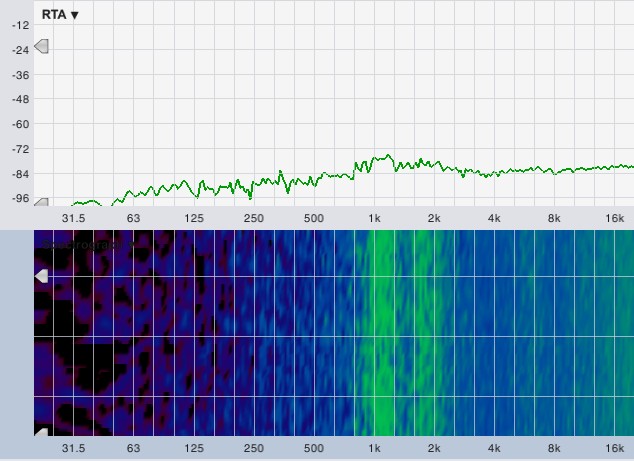

Noise

As always, we use a high-quality microphone placed perpendicular to the center of the graphics card at a distance of 50 cm, and we analyze the results with Smaart 7. Ambient noise never rose above 26 dB(A) while we recorded our readings; we noted it and accounted for it separately in each measurement.
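Sound levels add on an energy basis, not arithmetically, so a background level can't simply be subtracted in decibels. The article doesn't say exactly how the ambient noise was accounted for; the sketch below assumes the common energetic subtraction, and the function name is hypothetical.

```python
import math


def subtract_background(total_dba: float, background_dba: float) -> float:
    """Energetically remove a background level from a combined sound level (dB)."""
    if total_dba <= background_dba:
        raise ValueError("measured level must exceed the background level")
    # Convert both levels to relative intensities, subtract, convert back to dB.
    source_intensity = 10 ** (total_dba / 10.0) - 10 ** (background_dba / 10.0)
    return 10.0 * math.log10(source_intensity)


if __name__ == "__main__":
    # Illustrative inputs: a gaming-load reading and the 26 dB(A) ambient ceiling.
    print(round(subtract_background(44.2, 26.0), 2))
    # Near the background level the correction becomes much larger.
    print(round(subtract_background(31.2, 26.0), 2))
```

With a 26 dB(A) background, a reading around 44 dB(A) changes by less than 0.1 dB, while a reading around 31 dB(A) drops by more than 1.5 dB, which is why ambient noise matters most for idle measurements.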

Let's first look at the frequency spectrum while Nvidia's fan keeps GM200 at 83 degrees Celsius in a gaming workload.

We don't hear any of the low-frequency motor noise that we sometimes notice from the fans used by many of Nvidia's board partners. What we do hear is a pleasant whooshing sound spanning a relatively wide band of the spectrum, starting just above two somewhat more pronounced peaks at 1.2 and 1.9kHz. Coil whine, which usually shows up between 6 and 7kHz, is completely absent. Everything from 10kHz upward is pure air movement.

The following table summarizes the results a little differently. First, we determine the fan speed in a closed case. We then dial in that speed manually on an open test bench. This works around the fact that you simply can't measure a graphics card's noise on its own in a closed case while the rest of the system is running.

| Workload | Open Case (Test Bench) | Open Case (Fixed RPM, Case Simulation) | Closed Case (Full System) |
|---|---|---|---|
| Idle | 31.2 dB(A) | N/A | N/A |
| Gaming Loop | 44.2 dB(A) | 44.5 dB(A) | 39.7 dB(A) |

Apart from the fact that the GeForce Titan X can't hold its maximum GPU Boost frequency over prolonged periods, Nvidia's reference cooler remains the benchmark against which all other direct heat exhaust (DHE) coolers are measured.
