GeForce GTX Titan X Review: Can One GPU Handle 4K?

Results: Power Consumption

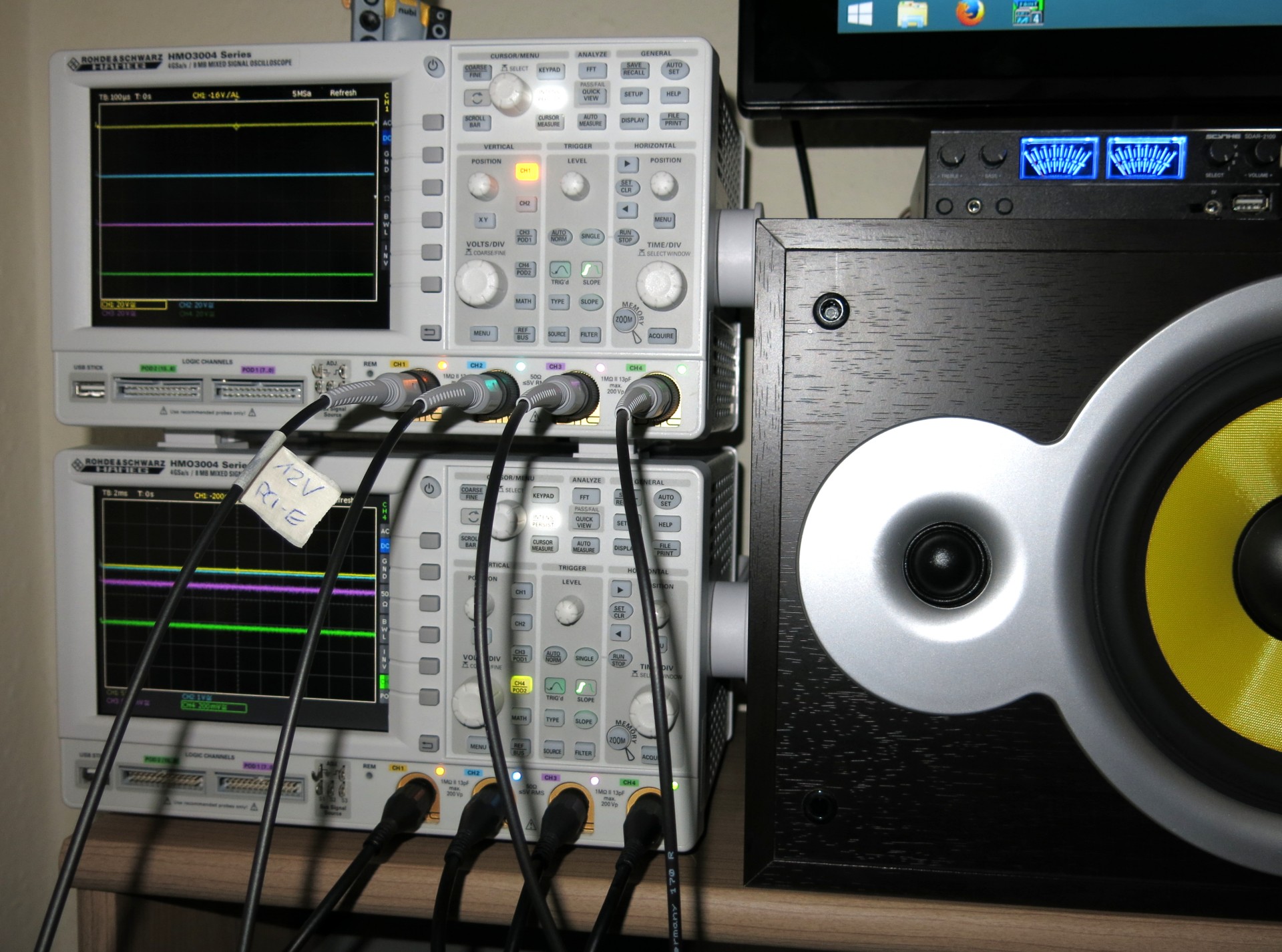

We measure the power consumption of these graphics cards as described in The Math Behind GPU Power Consumption And PSUs. It's the only way we can achieve readings that facilitate sound conclusions about efficiency.

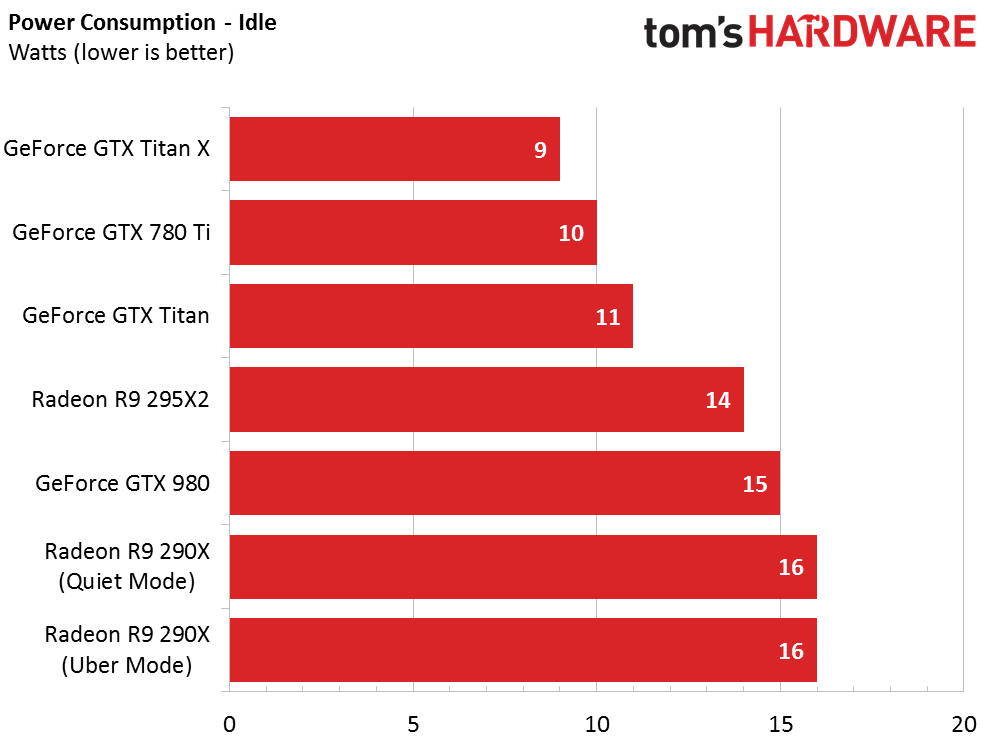

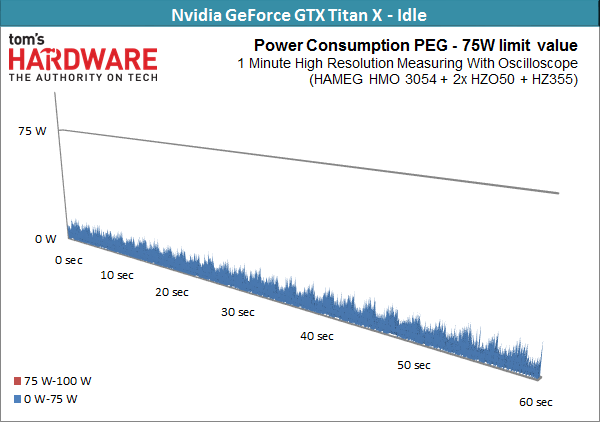

Idle And 2D Desktop

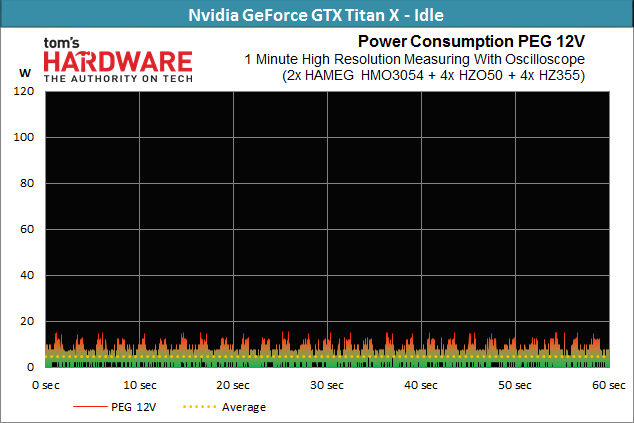

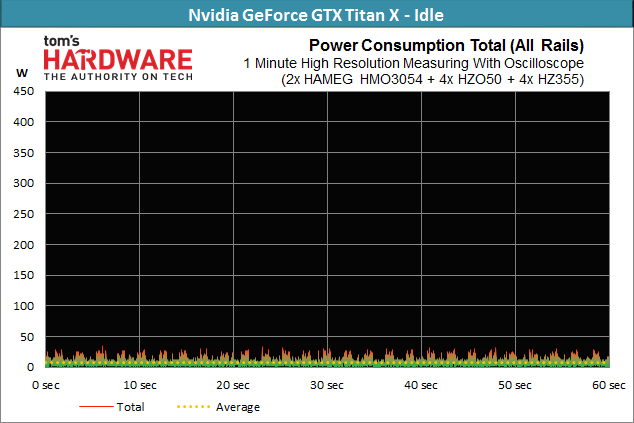

An 8W result is downright impressive, though we're puzzled as to how GeForce GTX Titan X uses less power than the GeForce GTX 980 in spite of having three times as much memory and a far more complex GM200 GPU.

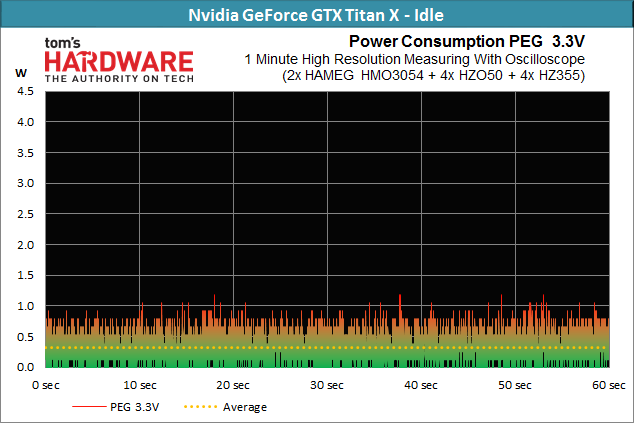

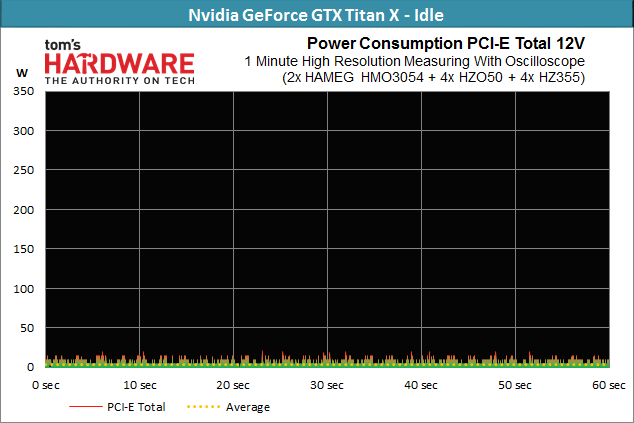

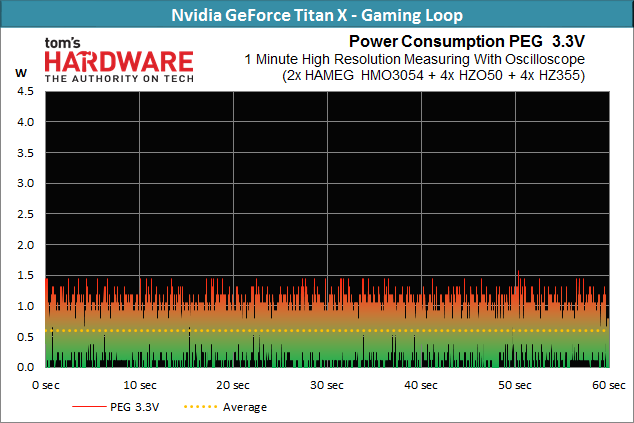

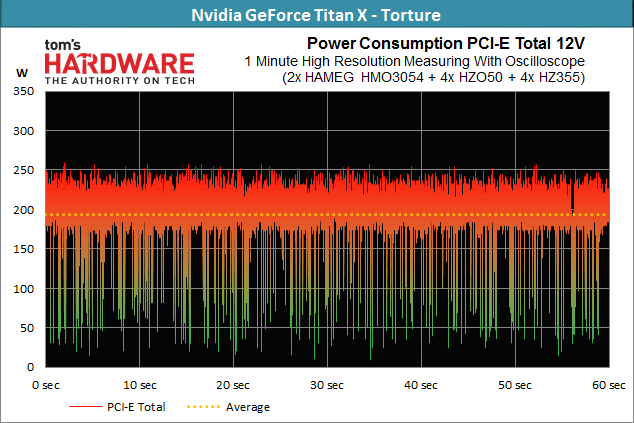

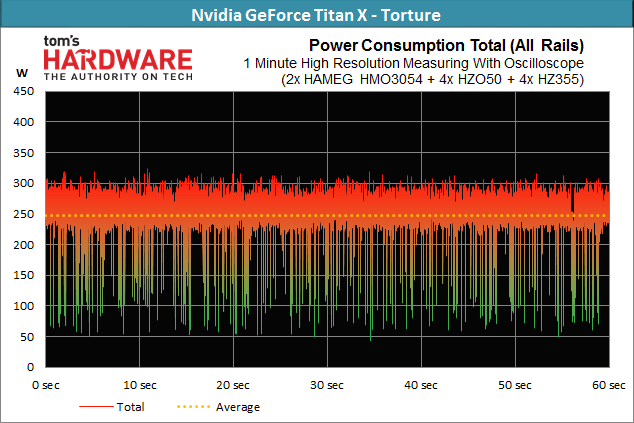

A closer look at the individual rails shows that the loads fluctuate periodically; the overall result represents an average of many ups and downs.
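To illustrate how one number can stand in for a fluctuating load, here is a minimal sketch that averages instantaneous power per rail and then sums the rails. The rail names and sample values are invented for illustration; they are not our measurements, and this is not our actual measurement tooling.

```python
from statistics import mean

# Hypothetical (voltage in V, current in A) samples per rail; illustrative only.
samples = {
    "slot_12v": [(12.0, 0.30), (12.0, 0.50), (12.0, 0.40)],
    "pcie_8pin": [(12.0, 0.20), (12.0, 0.10), (12.0, 0.30)],
}

def average_power(rail_samples):
    """Mean of instantaneous power (V * I) over all samples of one rail."""
    return mean(v * i for v, i in rail_samples)

def total_average_power(all_samples):
    """Sum of the per-rail averages, in watts."""
    return sum(average_power(s) for s in all_samples.values())

per_rail = {name: average_power(s) for name, s in samples.items()}
total = total_average_power(samples)
print(per_rail, total)
```

Averaging the product of voltage and current sample by sample, rather than multiplying an average voltage by an average current, keeps the result correct when both values move at the same time.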

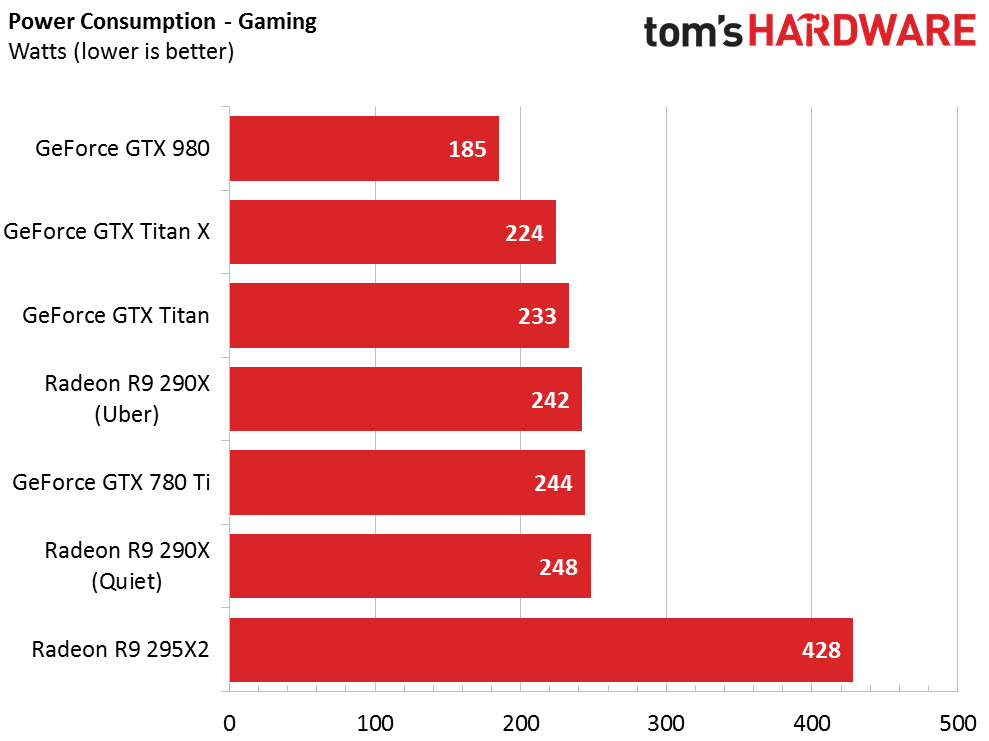

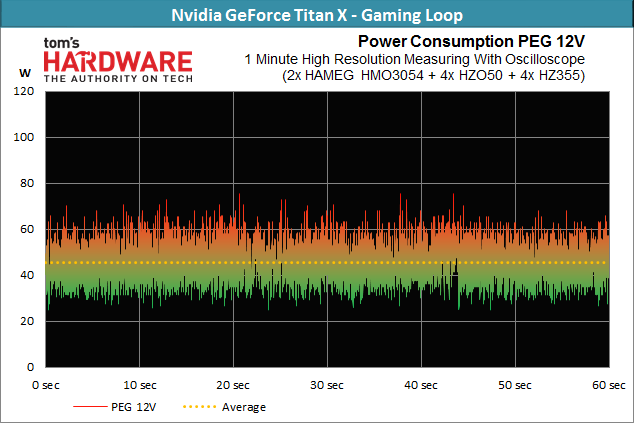

Gaming Loop

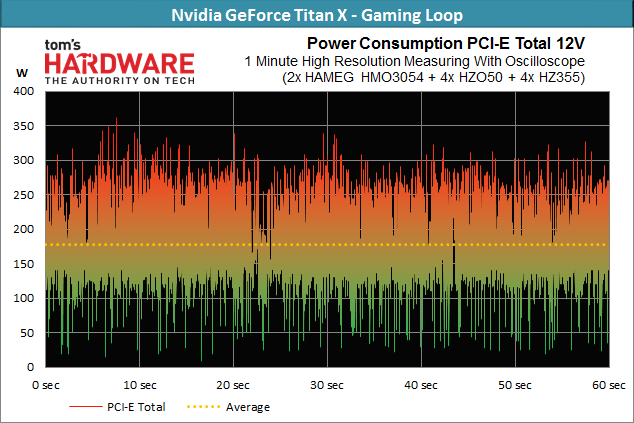

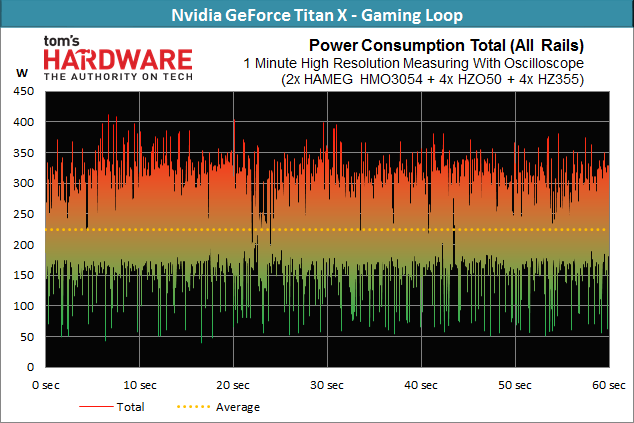

Ignoring professional applications and torture test scenarios, gaming is where we see the highest power consumption. The Titan X measures an average of 224W in this scenario.

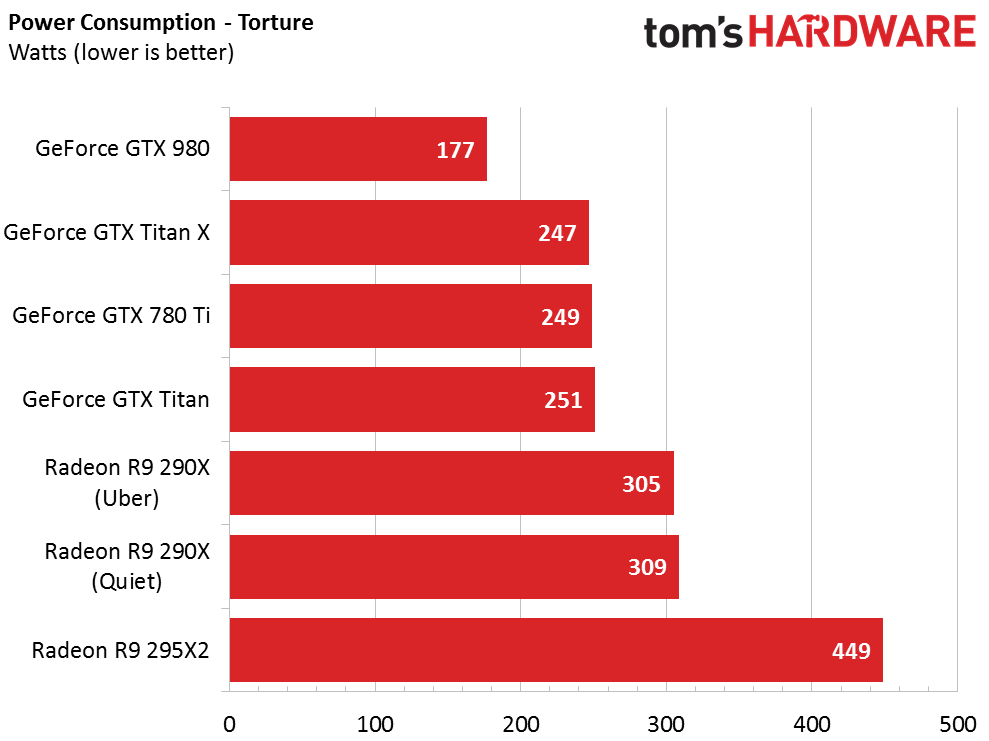

Torture – Full Load

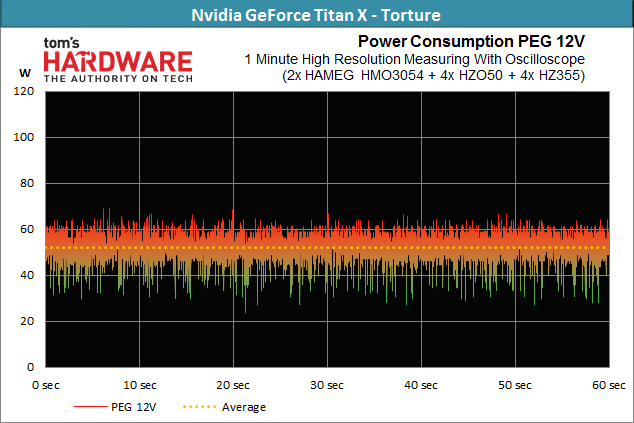

While Nvidia reports the GeForce GTX Titan X’s TDP at 250W, the card never actually reaches this figure under normal circumstances. In fact, not one of the usual stress test applications pushes the card past 247W. With the gaming loop averaging around 224W, this typically leaves about 25W for further overclocking. Sure, that doesn't sound like much, but we are working in the realm of the hypothetical here.

The 250W TDP stated in Nvidia’s technical documentation is difficult to reach, even if you deliberately try to max out Titan X's power consumption. In normal usage scenarios, you'll see this board way under our observed ceiling. Arming it with one 8- and one 6-pin PCIe power connector seems like overkill, and this certainly leaves a lot of room for speculation about possible cooling solutions from Nvidia’s partners.
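The arithmetic behind these statements is simple enough to sketch. The snippet below uses the figures quoted above (250W TDP, 247W stress-test ceiling, 224W gaming average) together with the nominal PCI Express limits of 75W for the slot, 75W for a 6-pin connector and 150W for an 8-pin connector. The variable and function names are our own illustrative choices.

```python
# Nominal PCI Express power limits, in watts.
SLOT_W, SIX_PIN_W, EIGHT_PIN_W = 75, 75, 150

TDP_W = 250           # Nvidia's stated TDP
TORTURE_PEAK_W = 247  # highest stress-test reading quoted in the text
GAMING_AVG_W = 224    # gaming loop average quoted in the text

def headroom(limit_w, draw_w):
    """Watts left between a limit and an observed draw."""
    return limit_w - draw_w

# Total the slot plus one 6-pin and one 8-pin connector can nominally deliver.
available_w = SLOT_W + SIX_PIN_W + EIGHT_PIN_W

print("Gaming headroom to TDP:", headroom(TDP_W, GAMING_AVG_W), "W")
print("Torture headroom to TDP:", headroom(TDP_W, TORTURE_PEAK_W), "W")
print("Connector budget beyond TDP:", headroom(available_w, TDP_W), "W")
```

The connector budget works out to 300W against a 250W TDP, which is why the 8-pin plus 6-pin combination looks generous for this board.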

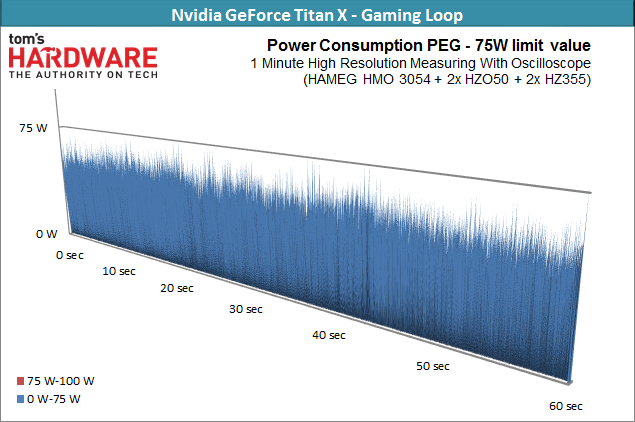

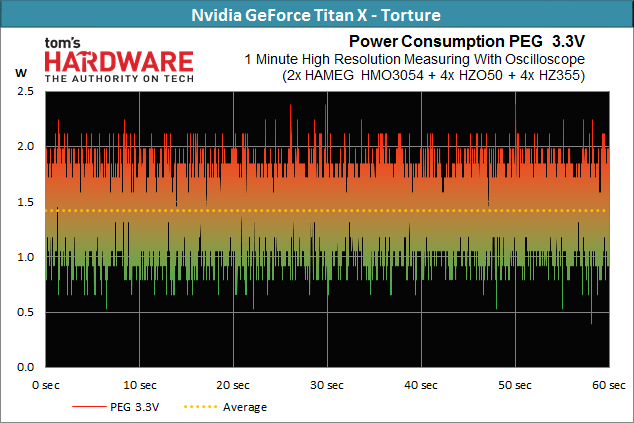

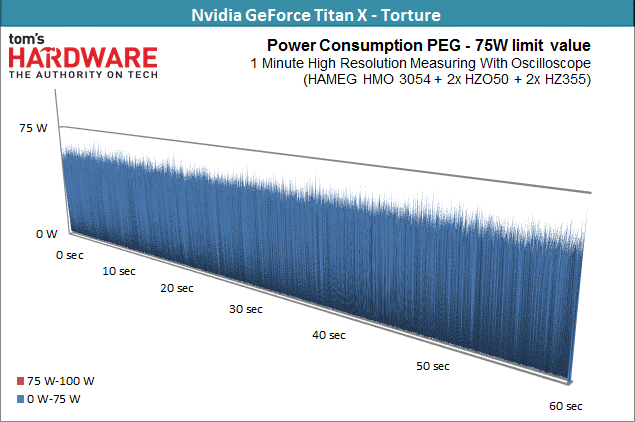

Last, but not least, Nvidia should be commended for limiting GeForce Titan X to 75W from the motherboard slot. Higher spikes, which we've seen from more mainstream graphics cards, don't show up at all. The dynamic load distribution across the rails works flawlessly.
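Checking a slot trace for spikes amounts to comparing each reading against the 75W limit. Here is a rough sketch of that check; both traces are made-up numbers for illustration, not data from our measurements, and the function name is hypothetical.

```python
SLOT_LIMIT_W = 75.0  # motherboard slot limit cited in the text

def spikes_over_limit(readings_w, limit_w=SLOT_LIMIT_W):
    """Return (index, watts) for every reading above the limit."""
    return [(i, w) for i, w in enumerate(readings_w) if w > limit_w]

# Hypothetical slot readings in watts; not measured data.
well_behaved = [48.0, 61.5, 55.2, 70.3]
spiky = [48.0, 82.4, 55.2, 91.0]

print(spikes_over_limit(well_behaved))
print(spikes_over_limit(spiky))
```

An empty result for a full trace corresponds to the behavior described above, where no reading from the slot exceeds the limit.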


Power Consumption Rankings

Reporting in at 9W, GeForce GTX Titan X’s idle power consumption is surprisingly low, despite the 12GB of GDDR5 onboard. The reference GeForce GTX 980 surpasses it by more than half.

The GeForce GTX Titan X’s efficiency in gaming loads is really impressive. Also, something every car aficionado knows holds true here as well: there's no replacement for displacement. GM200 performs exceptionally well at a relatively low clock rate, which does wonders for its power consumption. As we know from many previous tests, Maxwell-based graphics cards overclock well but lose efficiency quickly, since gaming performance doesn’t necessarily scale well with frequency and power consumption at the high end.

Once again, we see the restrictive power target at work during our stress test. This is really only relevant to targeted torture tests, though. It's simply a value we want to know, if only to better appreciate where the graphics card's limits are.


-Fran- Interesting move by nVidia to send a G-Sync monitor... So to trade off the lackluster performance over the GTX980, they wanted to cover it up with a "smooth experience", huh? hahaha.

I'm impressed by their shenanigans. They up themselves each time.

In any case, at least this card looks fine for compute.

Cheers! -

chiefpiggy The R9 295x2 beats the Titan in almost every benchmark, and it's almost half the price.. I know the Titan X is just one gpu but the numbers don't lie nvidia. And nvidia fanboys can just let the salt flow through your veins that a previous generation card(s) can beat their newest and most powerful card. Can't wait for the 3xx series to smash the nvidia 9xx series -

chiefpiggy [quoting -Fran-: Interesting move by nVidia to send a G-Sync monitor... So to trade off the lackluster performance over the GTX980, they wanted to cover it up with a "smooth experience", huh? hahaha. I'm impressed by their shenanigans. They up themselves each time. In any case, at least this card looks fine for compute. Cheers!]

Paying almost double for a 30% increase in performance??? Shenanigans alright xD -

rolli59 Would be interesting to see a comparison with cards like 970 and R9 290 in dual card setups, basically performance for money. -

esrever Performance is pretty much expected from the leaked specs. Not bad performance but terrible price, as with all titans. -

dstarr3 I don't know. I have a GTX770 right now, and I really don't think there's any reason to upgrade until we have cards that can average 60fps at 4K. And... that's unfortunately not this. -

hannibal Well this is actually cheaper than I expected. Interesting card and would really benefit from less heat... The throttling is really the limiting factor here.

But yeah, this is expensive for its power as Titans always have been, but it is not out of reach either. We need 14 to 16nm FinFET GPUs to make really good 4K graphics cards!

Maybe in the next year...

-

cst1992 People go on comparing a dual GPU 295x2 to a single-GPU TitanX. What about games where there is no Crossfire profile? It's effectively a TitanX vs 290X comparison.

Personally, I think a fair comparison would be the GTX Titan X vs the R9 390X. Although I heard NVIDIA's card will be slower then.

Alternatively, we could go for 295X2 vs TitanX SLI or 1080SLI(Assuming a 1080 is a Titan X with a few SMMs disabled, and half the VRAM, kind of like the Titan and 780). -

skit75 [quoting chiefpiggy: Paying almost double for a 30% increase in performance??? Shenanigans alright xD]

You're surprised? Early adopters always pay the premium. I find it interesting you mention "almost every benchmark" when comparing this GPU to a dual GPU of last generation. Sounds impressive on a purely performance measure. I am not a fan of SLI but I suspect two of these would trounce anything around.

Either way the card is way out of my market but now that another card has taken top honors, maybe it will bleed the 970/980 prices down a little into my cheapskate hands. -

negevasaf IGN said that the R9 390x (8.6 TF) is 38% more powerful than the Titan X (6.2 TF), is that true? http://www.ign.com/articles/2015/03/17/rumored-specs-of-amd-radeon-r9-390x-leaked