GeForce GTX Titan X Review: Can One GPU Handle 4K?

GeForce GTX Titan X And G-Sync At 4K

I co-authored our first look at G-Sync alongside Filippo Scognamiglio in G-Sync Technology Preview: Quite Literally A Game Changer. In that piece, we discussed the issues with conventional v-sync, introduced Nvidia’s approach to variable refresh and shared our experiences with the first G-Sync-capable monitor. During the initial barrage of questions Filippo and I lobbed at Nvidia, we debated the technology’s value in 120 and 144Hz monitors. G-Sync would shine brightest, we determined, between 30 and 60 FPS, where you might want v-sync turned on to mitigate tearing, but then be subject to stuttering as the output shifted between 60 and 30Hz.

Incidentally, that’s the range most of our GeForce GTX Titan X benchmarks fell into at 3840x2160 with detail settings as high as they’d go. Could G-Sync be the technology needed for this specific card at that exact resolution?

Nvidia sent over Acer’s XB280HK bprz, currently the only G-Sync-enabled 4K screen you can buy. The 28” display is on sale for $750 over at Newegg, making it a reasonable (or even affordable) pairing for the equally niche GeForce GTX Titan X. Christian Eberle will handle our review of the XB280HK. But I do want to mention that this particular sample showed up with a dead pixel. Then again, so did our Asus PQ321, which sold for more than four times as much.

G-Sync And 4K, In Practice

As we clarified in the previously linked preview, G-Sync is a quality feature; it doesn’t affect performance. So the benchmark results you just saw carry through this experiment, since we’re leaving v-sync off and enabling G-Sync.

Far Cry 4 is the first title I wanted to look at. Its lush outdoor environment makes tearing especially obvious with v-sync off (the trees are particularly susceptible). Our performance data shows frame rates between 33 and 51 FPS, averaging just under 40. Were you to turn on v-sync, you’d see 30 FPS and experience some degree of latency. With v-sync off, the tearing between frames is unmistakable. G-Sync eliminates the tearing and gives you back the performance above 30 FPS that v-sync would otherwise throw away. Awesome. But while the technology sounds like a magic bullet, you’re still looking at dips down to 33 FPS. G-Sync doesn’t add or interpolate frames. It simply improves perceived smoothness. Because of the way it plays, Far Cry 4 would benefit from a less demanding detail level or some additional rendering horsepower.
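To make the 30 FPS figure concrete, here is a rough sketch of why v-sync flattens everything in this range. It assumes strict double-buffered v-sync on a fixed 60Hz panel, where a finished frame waits for the next refresh boundary; triple buffering and adaptive v-sync behave differently, and G-Sync’s behavior below the panel’s minimum refresh is not modeled. The function names are hypothetical and only for illustration.

```python
import math

REFRESH_HZ = 60.0  # assumption: conventional fixed-refresh 60Hz panel


def vsync_fps(render_fps: float, refresh_hz: float = REFRESH_HZ) -> float:
    """Displayed rate under strict double-buffered v-sync.

    Each frame is held for a whole number of refresh intervals,
    so the displayed rate snaps to refresh_hz / n (60, 30, 20, ...).
    """
    # Small epsilon keeps an exact 60 FPS (or 30, 20, ...) from rounding up.
    intervals = math.ceil(refresh_hz / render_fps - 1e-9)
    return refresh_hz / max(intervals, 1)


def variable_refresh_fps(render_fps: float, max_hz: float = REFRESH_HZ) -> float:
    """Displayed rate when the panel refreshes as soon as a frame is ready.

    Simplified: only the panel's maximum refresh rate caps the output.
    """
    return min(render_fps, max_hz)


if __name__ == "__main__":
    # Far Cry 4 minimum, approximate average, and maximum from the text above.
    for fps in (33.0, 40.0, 51.0):
        print(f"{fps:.0f} FPS rendered: "
              f"v-sync shows {vsync_fps(fps):.0f}, "
              f"variable refresh shows {variable_refresh_fps(fps):.0f}")
```

Under those assumptions, the 33, 40 and 51 FPS cases all land on 30 FPS with v-sync, while variable refresh displays them as rendered. Neither path creates frames the GPU never drew, which is why the dips to 33 FPS remain.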

How about Battlefield 4? We reported between 27 and 48 FPS through our benchmark, averaging a similar 39 frames per second on one GeForce GTX Titan X at 3840x2160. Even though this title is also fairly fast-paced, the action feels much smoother with G-Sync enabled than our frame rates suggest.

The same goes for Metro Last Light, one of the games I was most excited about when we applied G-Sync to it back in 2013. The technology irons out the severe tearing you’d normally see strafing through narrow halls, even at an average of 39 FPS. Middle-earth, Thief, Tomb Raider and Crysis 3 all benefit as well: they run at higher frame rates than they would with v-sync on, without the tearing you’d see with v-sync off.


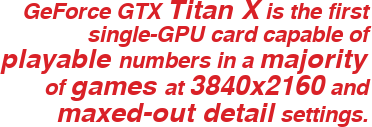

Whether or not you consider the resulting experience enjoyable, though, is a matter of personal opinion. My own take is this: GeForce GTX Titan X is the first single-GPU card capable of playable numbers in a majority of games at 3840x2160 and maxed-out detail settings. Adding G-Sync neutralizes the artifacts that crop up as you choose between enabling or disabling v-sync in Titan X’s performance range. Really, the technology couldn’t have become available in a 4K panel at a better time.


-Fran- Interesting move by nVidia to send a G-Sync monitor... So to trade off the lackluster performance over the GTX980, they wanted to cover it up with a "smooth experience", huh? hahaha.

I'm impressed by their shenanigans. They up themselves each time.

In any case, at least this card looks fine for compute.

Cheers!

chiefpiggy The R9 295x2 beats the Titan in almost every benchmark, and it's almost half the price.. I know the Titan X is just one gpu but the numbers don't lie nvidia. And nvidia fanboys can just let the salt flow through your veins that a previous generation card(s) can beat their newest and most powerful card. Can't wait for the 3xx series to smash the nvidia 9xx series

chiefpiggy (quoting -Fran-) Paying almost double for a 30% increase in performance??? Shenanigans alright xD

rolli59 Would be interesting to see a comparison with cards like the 970 and R9 290 in dual-card setups, basically performance for money.

esrever Performance is pretty much expected from the leaked specs. Not bad performance but terrible price, as with all titans.

dstarr3 I don't know. I have a GTX770 right now, and I really don't think there's any reason to upgrade until we have cards that can average 60fps at 4K. And... that's unfortunately not this.

hannibal Well this is actually cheaper than I expected. Interesting card and would really benefit from less heat... The throttling is really the limiting factor here.

But yeah, this is expensive for its power as Titans always have been, but it is not out of reach either. We need 14 to 16nm FinFET GPUs to make really good 4K graphics cards!

Maybe in the next year...

cst1992 People go on comparing a dual GPU 295x2 to a single-GPU TitanX. What about games where there is no Crossfire profile? It's effectively a TitanX vs 290X comparison.

Personally, I think a fair comparison would be the GTX Titan X vs the R9 390X. Although I heard NVIDIA's card will be slower then.

Alternatively, we could go for 295X2 vs TitanX SLI or 1080 SLI (assuming a 1080 is a Titan X with a few SMMs disabled, and half the VRAM, kind of like the Titan and 780).

skit75 (quoting -Fran- and chiefpiggy) You're surprised? Early adopters always pay the premium. I find it interesting you mention "almost every benchmark" when comparing this GPU to a dual GPU of last generation. Sounds impressive on a purely performance measure. I am not a fan of SLI but I suspect two of these would trounce anything around.

Either way the card is way out of my market but now that another card has taken top honors, maybe it will bleed the 970/980 prices down a little into my cheapskate hands.

negevasaf IGN said that the R9 390x (8.6 TF) is 38% more powerful than the Titan X (6.2 TF), is that true? http://www.ign.com/articles/2015/03/17/rumored-specs-of-amd-radeon-r9-390x-leaked