
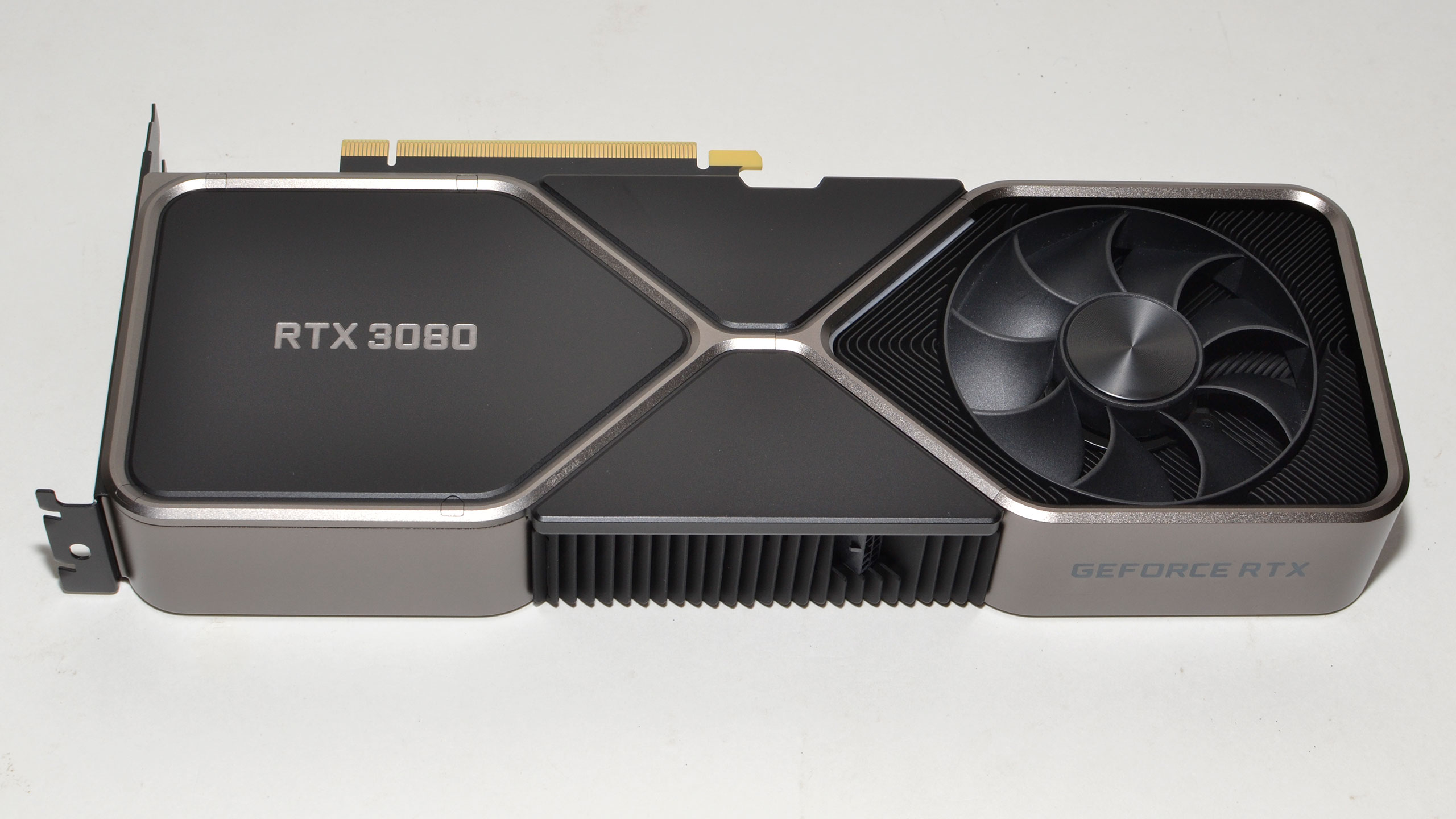

If you've followed the world of CPU overclocking, you've probably noticed how AMD and Intel are both pushing their CPUs closer and closer to the practical limits. I still have fond memories of 50% overclocks on some old CPUs, like the Celeron 300A. These days, the fastest CPUs often only have a few hundred MHz of headroom available — and then only with substantial cooling. I begin with that preface because the RTX 3080 very much feels like it's also running close to the limit.

For the GPU core, while Nvidia specs the nominal boost clock at 1710 MHz, in practice, the GPU boosts quite a bit higher. Depending on the game, we saw sustained boost clocks of at least 1830 MHz, and in some cases, clocks were as high as 1950 MHz. That's not that different from the Turing GPUs, or even Pascal. The real question is how far we were able to push clocks.

The answer: Not far. I started with a modest 50 MHz bump to clock speed, which seemed to go fine. Then I pushed it to 100 MHz and crashed. Through a bit of trial and error, I ended up at +75 MHz as the best stable speed I could hit. That's after increasing the voltage by 100 mV using EVGA Precision X1 and ramping up fan speeds to keep the GPU cool. The result was boost clocks in the 1950-2070 MHz range, typically settling right around the 2 GHz mark.

Memory overclocking ended up being far more promising. I started with 250 MHz increments. 250, 500, 750, and even 1000 MHz went by without a problem before my test (Unigine Heaven) crashed at 1250 MHz. Stepping back to 1200 MHz, everything seemed okay. And then I ran some benchmarks.

Remember that bit about EDR (Error Detection and Replay) we mentioned earlier? It works. At +1200 MHz the card appeared stable, but performance was worse than at stock memory clocks, presumably because the memory was spending time retrying failed transfers instead of crashing. I started stepping down the memory overclock and eventually ended up at +750 MHz, yielding an effective speed of 20.5 Gbps. I was really hoping to sustain 21 Gbps, in honor of the 21st anniversary of the GeForce 256, but it was not meant to be. We'll include the RTX 3080 overclocked results in the ultra quality charts (we didn't bother testing the overclock at medium quality).
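The takeaway is that with EDR a memory overclock has to be judged by measured performance, not by whether the test crashes. A minimal sketch of that selection step is below. It assumes a hypothetical `run_benchmark` callable that you supply yourself (for example, something that applies an offset and returns an average frame rate); it is not part of any overclocking tool, and this is not necessarily the exact procedure used for this review.

```python
from typing import Callable, Iterable


def best_memory_offset(
    offsets_mhz: Iterable[int],
    run_benchmark: Callable[[int], float],
) -> int:
    """Return the memory offset (in MHz) with the highest benchmark score.

    run_benchmark is a hypothetical, user-supplied function that applies the
    given offset and returns a score where higher is better. Stock (offset 0)
    is always included as the baseline, so an overclock that "works" but
    scores below stock is never chosen.
    """
    candidates = sorted(set(offsets_mhz) | {0})
    scores = {offset: run_benchmark(offset) for offset in candidates}
    # max() keeps the first maximum it sees; because candidates are sorted
    # in ascending order, ties go to the lower (safer) offset.
    return max(candidates, key=lambda offset: scores[offset])
```

Calling it with the offsets that survived the stability test, such as `[250, 500, 750, 1000, 1200]`, would pick whichever one actually benchmarks fastest rather than the highest one that doesn't crash.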

Combined, we ended up with stable performance using the +75 MHz core overclock and +750 MHz GDDR6X overclock. That's a relatively small 4% overclock on the GPU, and a slightly more significant 8% memory overclock, but neither one is going to make a huge difference in gaming performance. Overall performance at 4K ultra improved by about 6%.
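For anyone who wants to check the percentages, here is the arithmetic as a short sketch. The 1710 MHz boost clock, +75 MHz offset, and 20.5 Gbps result come from the text above; the 19 Gbps stock memory speed is an assumption taken from the RTX 3080's published spec rather than from this page.

```python
def percent_gain(base: float, new: float) -> float:
    """Relative increase of new over base, in percent."""
    return (new - base) / base * 100.0


STOCK_BOOST_MHZ = 1710   # nominal boost clock quoted above
CORE_OFFSET_MHZ = 75     # best stable core offset from the text
STOCK_MEM_GBPS = 19.0    # assumption: stock GDDR6X data rate per the spec sheet
OC_MEM_GBPS = 20.5       # effective speed reached with the +750 MHz offset

core_pct = percent_gain(STOCK_BOOST_MHZ, STOCK_BOOST_MHZ + CORE_OFFSET_MHZ)
mem_pct = percent_gain(STOCK_MEM_GBPS, OC_MEM_GBPS)

print(f"core:   +{core_pct:.1f}%")   # core:   +4.4%
print(f"memory: +{mem_pct:.1f}%")    # memory: +7.9%
```

Measured against the roughly 1900 MHz clocks the card actually sustains, the core gain is closer to 4%, which matches the rounded figures quoted above.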

GeForce RTX 3080: Test Setup

We're using our standard GPU test bed for this review. However, the Core i9-9900K CPU is now approaching its second birthday, and the Core i9-10900K might be a bit faster. Never fear! While we didn't have nearly enough time to retest all of the graphics cards on a new test bed, we did run a series of RTX 3080 CPU Scaling benchmarks on five other processors. Check that article out for the full results, but the short summary is that the 9900K and 10900K are effectively tied at 1440p and 4K.


You can see the full specs of our GPU test PC to the right. We've equipped it with some top-tier hardware, including 32GB of DDR4-3200 CL16 memory, a potent AIO liquid cooler, and a 2TB M.2 NVMe SSD. We actually have two nearly identical test PCs, only one of which is equipped for testing power use — that's the OpenBenchTable PC. Our second PC has a Phanteks Enthoo Pro M case, which we test with the side removed because of all the GPU swapping. (We've checked with the side installed as well, and we have sufficient fans that temperatures for the GPU don't really change with the panel installed.)

Our current gaming test suite consists of nine games, some of which are getting a bit long in the tooth, and none with ray tracing or DLSS effects enabled. We're adding 14 additional 'bonus' graphics tests, all at 4K ultra with DLSS enabled (where possible) on a limited selection of GPUs to show how pushing the RTX 3080 to the limit changes things … or doesn't, in this case.

Also, Microsoft enabled GPU hardware scheduling in Windows 10 a few months back, and Nvidia's latest drivers support the feature. We tested the RTX 3080 with HW scheduling both enabled and disabled; all of our other GPU results were run with the feature disabled. It's not that the feature doesn't help, but overall the difference ends up within the margin of error at most settings. We'll include the HW scheduling enabled results in our charts as well.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


chickenballs

chalabam said: This article lacks a tensorflow benchmark

I thought the 3080 was released in September.

Maybe it's considered a "paper-launch"

Nvidia won't like that :D

JarredWaltonGPU

Zeecoder said: This article is outdated.

FYI, it's the same review that we posted at the launch, just reformatted and paginated now. If I wasn't on break for the holidays, I'd maybe do a bunch of new charts, but I've got other things I'll be doing in that area soon enough.

MagicPants

I still think the shortage is the big news for the 3080.

I might be paranoid, but I think there be something to the fact that the top ebay 3080 scalper "occomputerparts" has sold 97 RTX 3080s from EVGA specifically and shipped them from Brea, California.

Anyone want to guess where EVGA is headquartered??? Brea, California.

Now it could be that some scalper wrote a bot and has been buying them off Amazon, BestBuy, and Newegg. But then why only sell EVGA cards? They'd have to program their bot to ignore cards from other vendors, which wouldn't make any sense.

TEAMSWITCHER

MagicPants said: I still think the shortage is the big news for the 3080.

I might be paranoid, but I think there be something to the fact that the top ebay 3080 scalper "occomputerparts" has sold 97 RTX 3080s from EVGA specifically and shipped them from Brea, California.

Anyone want to guess where EVGA is headquartered??? Brea, California.

Now it could be that some scalper wrote a bot and has been buying them off Amazon, BestBuy, and Newegg. But then why only sell EVGA cards? They'd have to program their bot to ignore cards from other vendors, which wouldn't make any sense.

It's probably an inside job. Demand for RTX 3000 series is off the charts! I don't see any good reason to buy an AMD GPU other than for a pity-purchase. AMD is pricing their cards less, because they are less. In sheer performance it's just a bit less, but in next generation capabilities .. it's a lot less. I will hold out for an RTX 3080. AMD won't catch up, before Nvidia has filled the demand of the market. Patience will be rewarded.

LeszekSol

What is the point of discussing items (namely - Nvidia RTX3080 Founders Edition) which do not exist in the free market? Cards have been unavailable since the first day of "hitting the market" and even Nvidia has no idea (or perhaps plans) when it will become available again. There are other cards based on similar architecture - but actually a little different - so.... WHAT FOR guys are you losing time to discuss this? Let's forget about NVidia, or let's put it on the shelf with MobyDick books.....

PureMist

Hello,

I have a question about the settings used in the testing for FFXIV. The chart says Medium or Ultra but the game itself only has Maximum and two PC and Laptop settings. So how do these correlate to the actual in game settings? Or are “Medium” and “Ultra” two custom presets you made for testing purposes?

Thank you for your time.

JarredWaltonGPU

PureMist said: Hello,

I have a question about the settings used in the testing for FFXIV. The chart says Medium or Ultra but the game itself only has Maximum and two PC and Laptop settings. So how do these correlate to the actual in game settings? Or are “Medium” and “Ultra” two custom presets you made for testing purposes?

Thank you for your time.

Sorry, my chart generation script defaults to "medium" and "ultra" and I didn't think about this particular game. You're right, the actual settings are different in FFXIV Benchmark. I use the "high" and "maximum" settings instead of medium/ultra for that test. I'll see about updating the script to put in the correct names, though I intend to drop the FFXIV benchmark at some point regardless.

acsmith1972

The cheapest I see this card for right now is $1800. I really wish these manufacturers wouldn't be pricing these cards based on bitcoin mining. It's once again going to price the rest of us out of getting any decent video cards till BTC drops again.