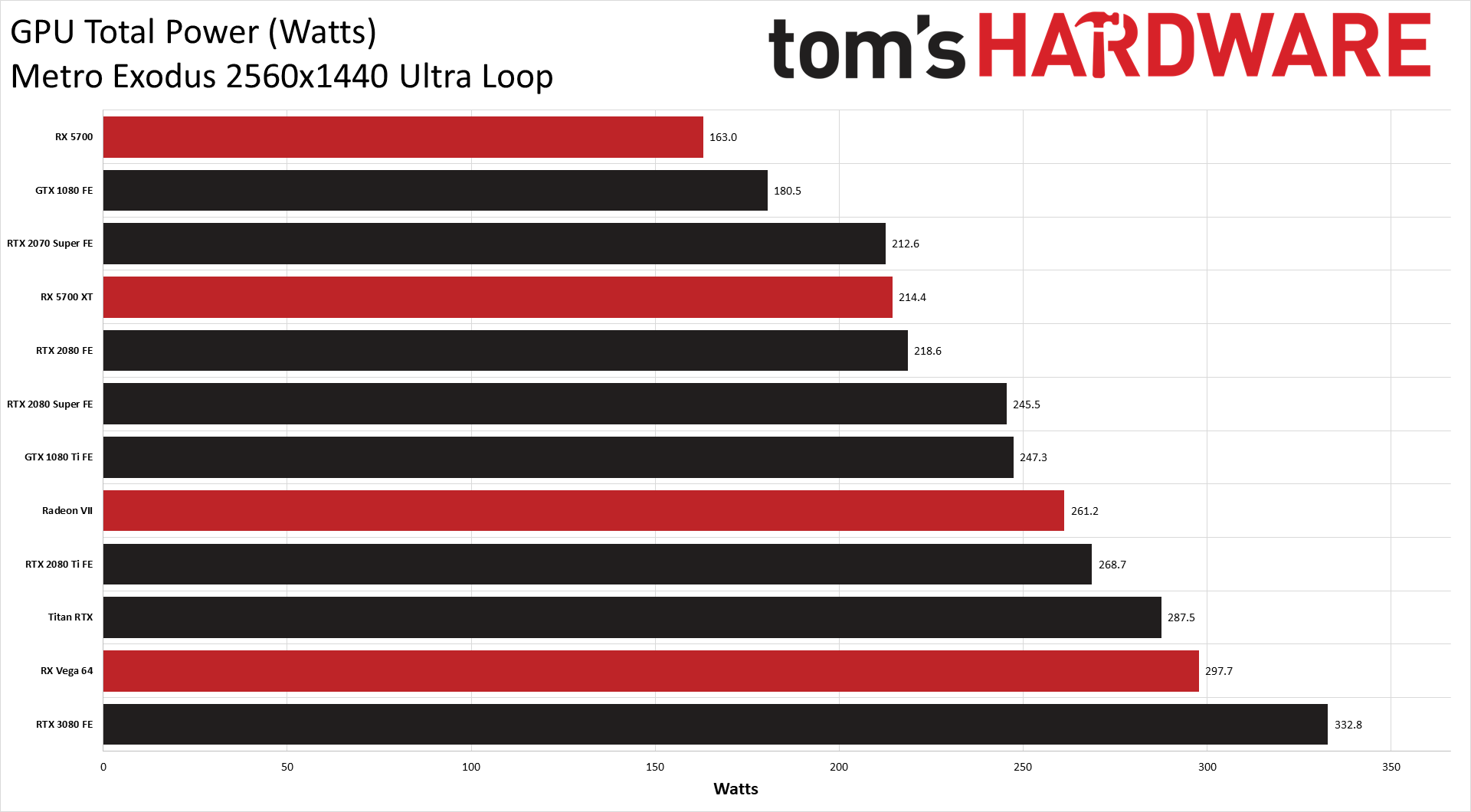

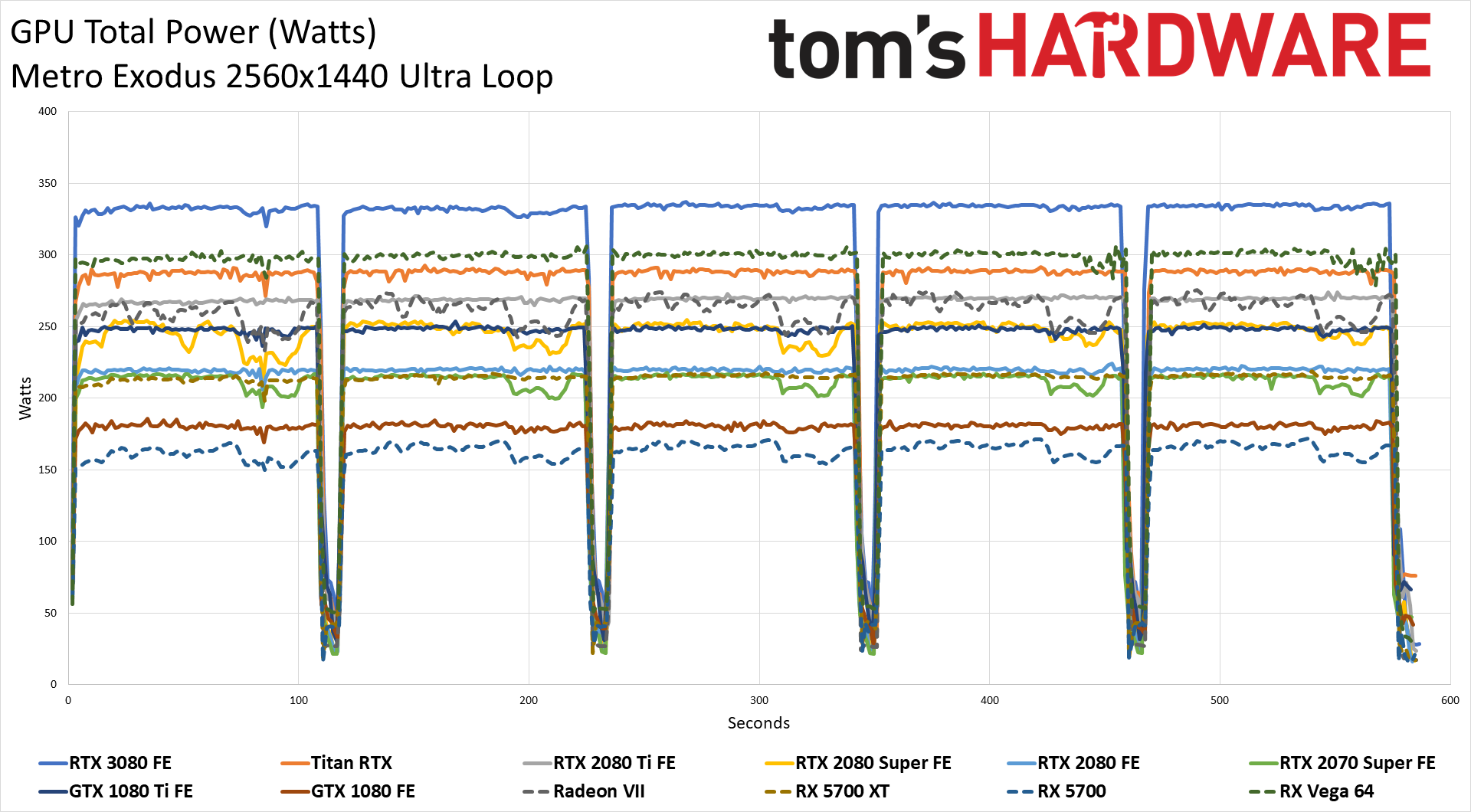

We mentioned the significantly higher TGP rating of the RTX 3080 already, but now let's put it to the test. We use Powenetics in-line power monitoring hardware and software so that we can report the real power use of the graphics card. You can read more about our approach to GPU power testing, but the takeaway is that we're not dependent on AMD or Nvidia (or Intel, once dedicated Xe GPUs arrive) to report to various software utilities exactly how much power the GPUs use.

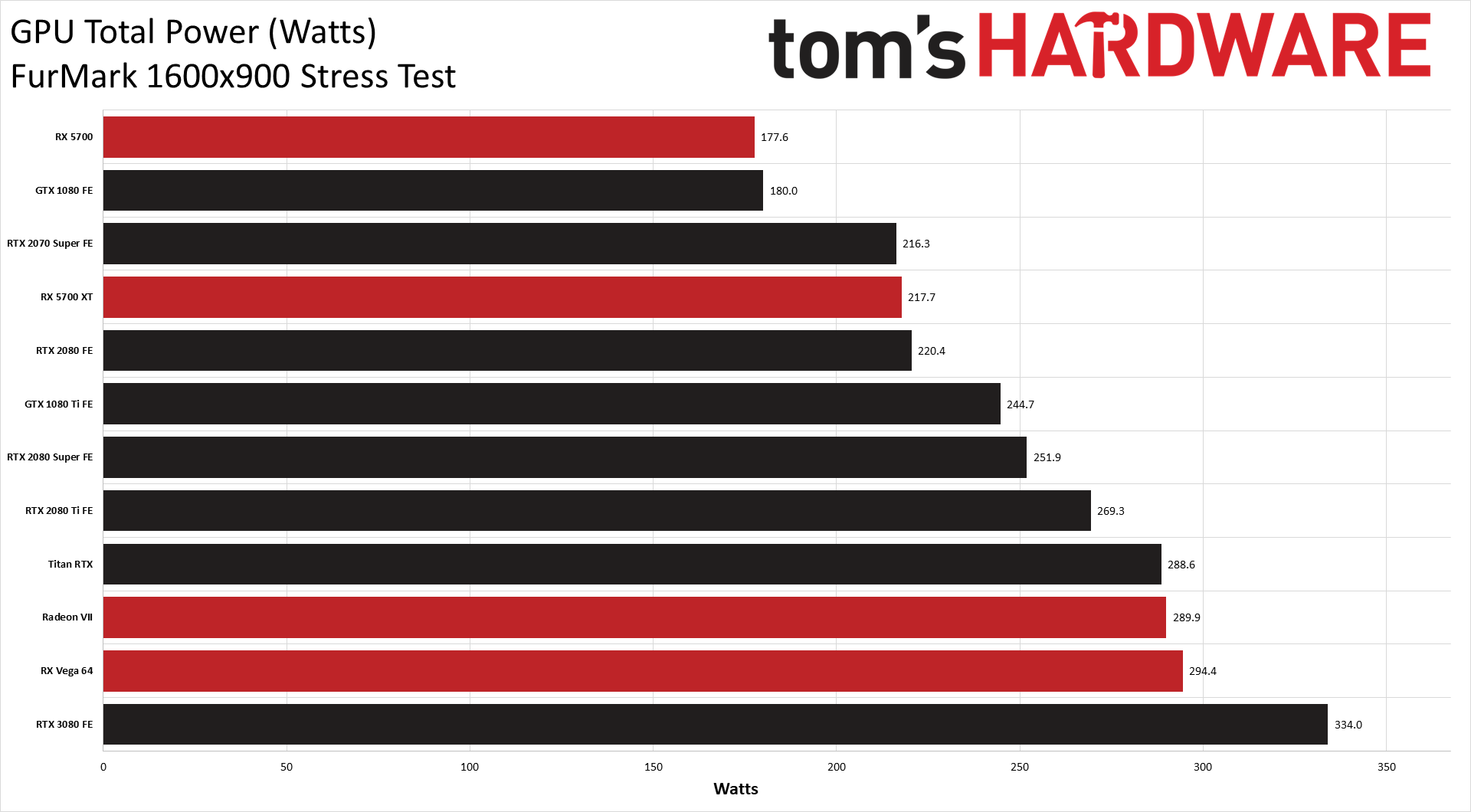

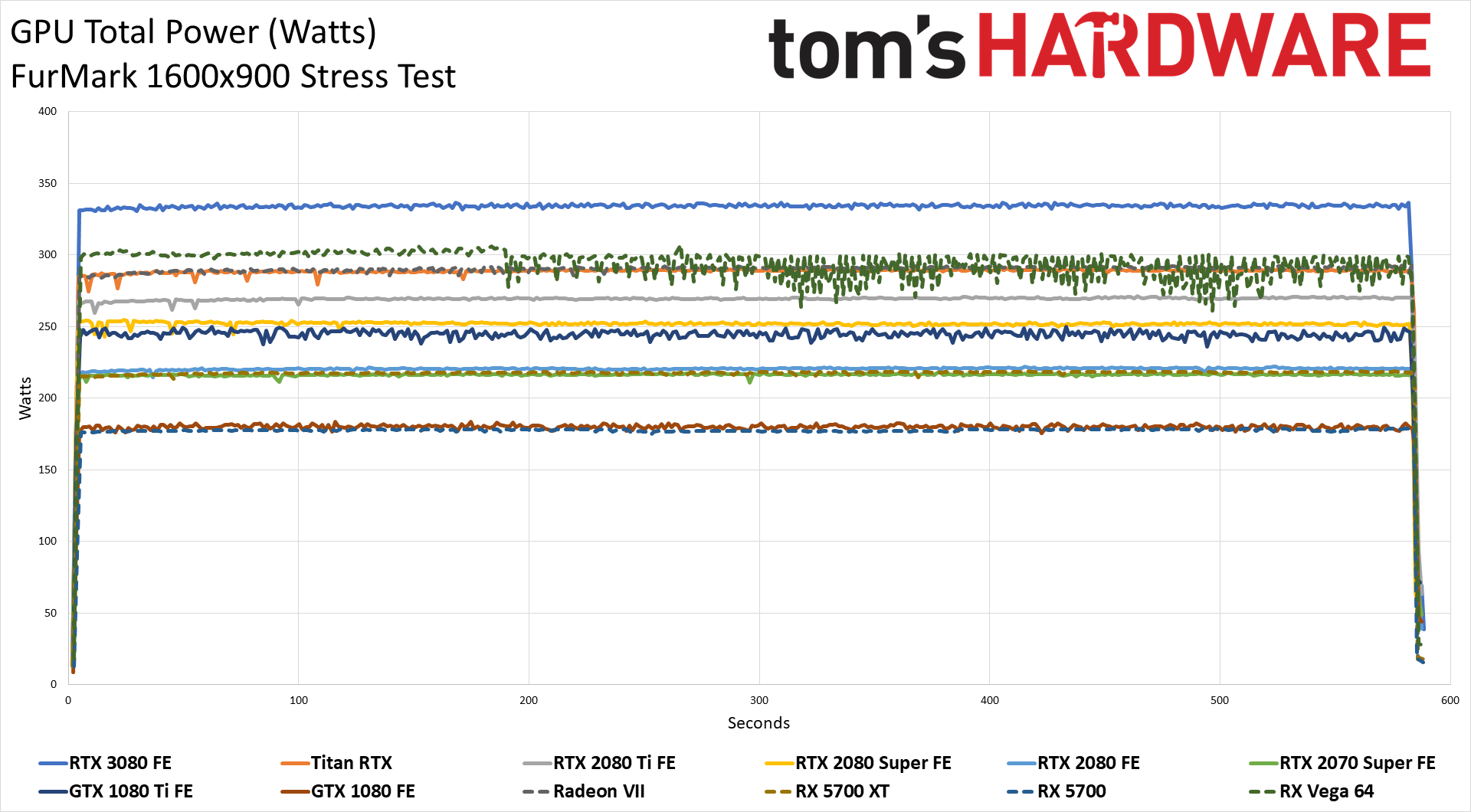

So how much power does the RTX 3080 use? It turns out the 320W rating is perhaps slightly conservative: We hit 335W averages in both Metro Exodus and FurMark.
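To put that overshoot in perspective, here is a quick back-of-the-envelope calculation using only the two figures quoted above (the 320W rating and the 335W measured average). The function and variable names are made up for illustration; this is a sketch of the arithmetic, not part of the test tooling.

```python
# Figures taken from the paragraph above, in watts.
RATED_TGP_W = 320.0
MEASURED_AVG_W = 335.0


def percent_over(rated_w: float, measured_w: float) -> float:
    """Return how far the measured draw exceeds the rating, in percent."""
    return (measured_w - rated_w) / rated_w * 100.0


over = percent_over(RATED_TGP_W, MEASURED_AVG_W)
print(f"{MEASURED_AVG_W - RATED_TGP_W:.0f} W over, {over:.1f}% above rated TGP")
```

That works out to 15W, or a bit under 5% above the official rating.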

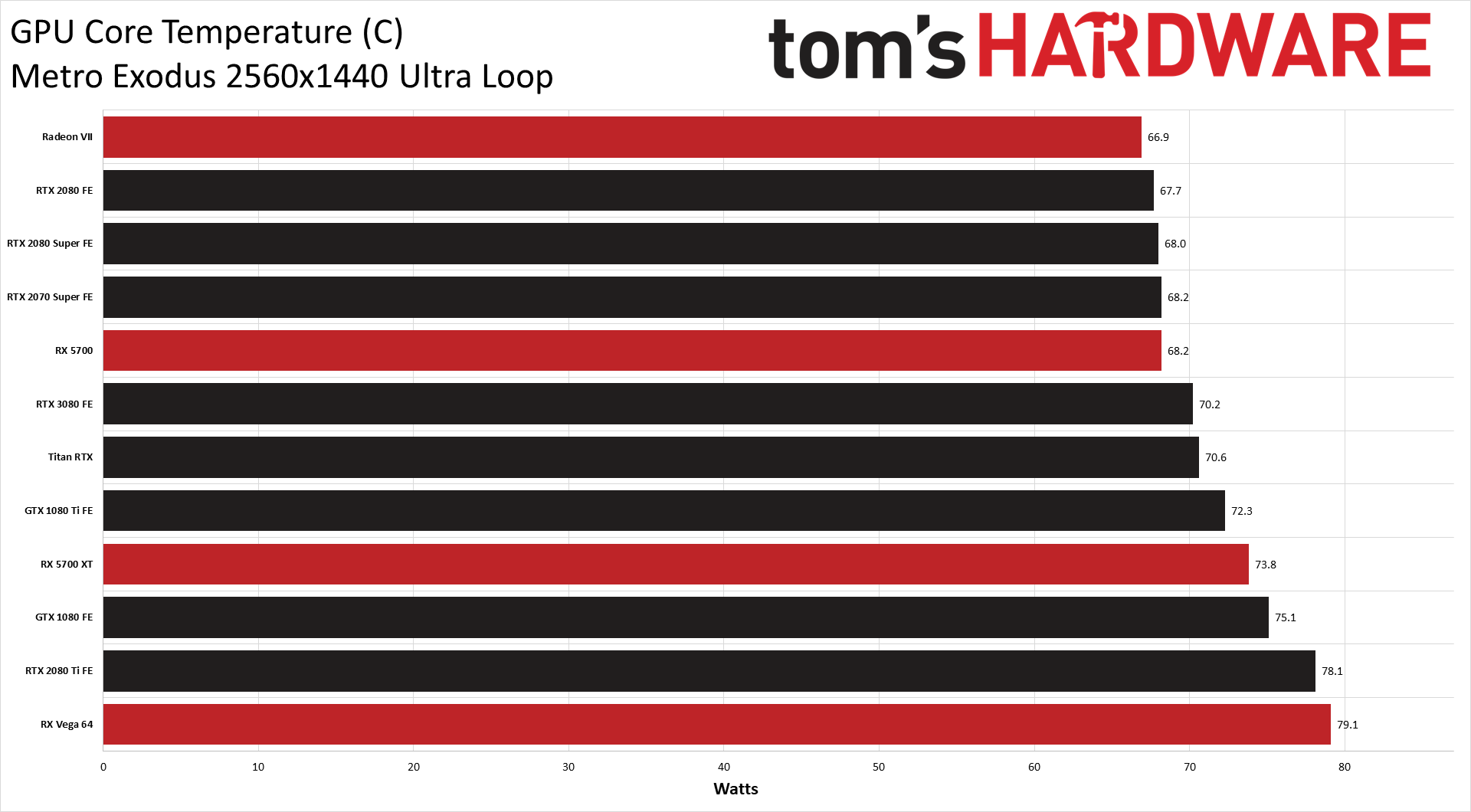

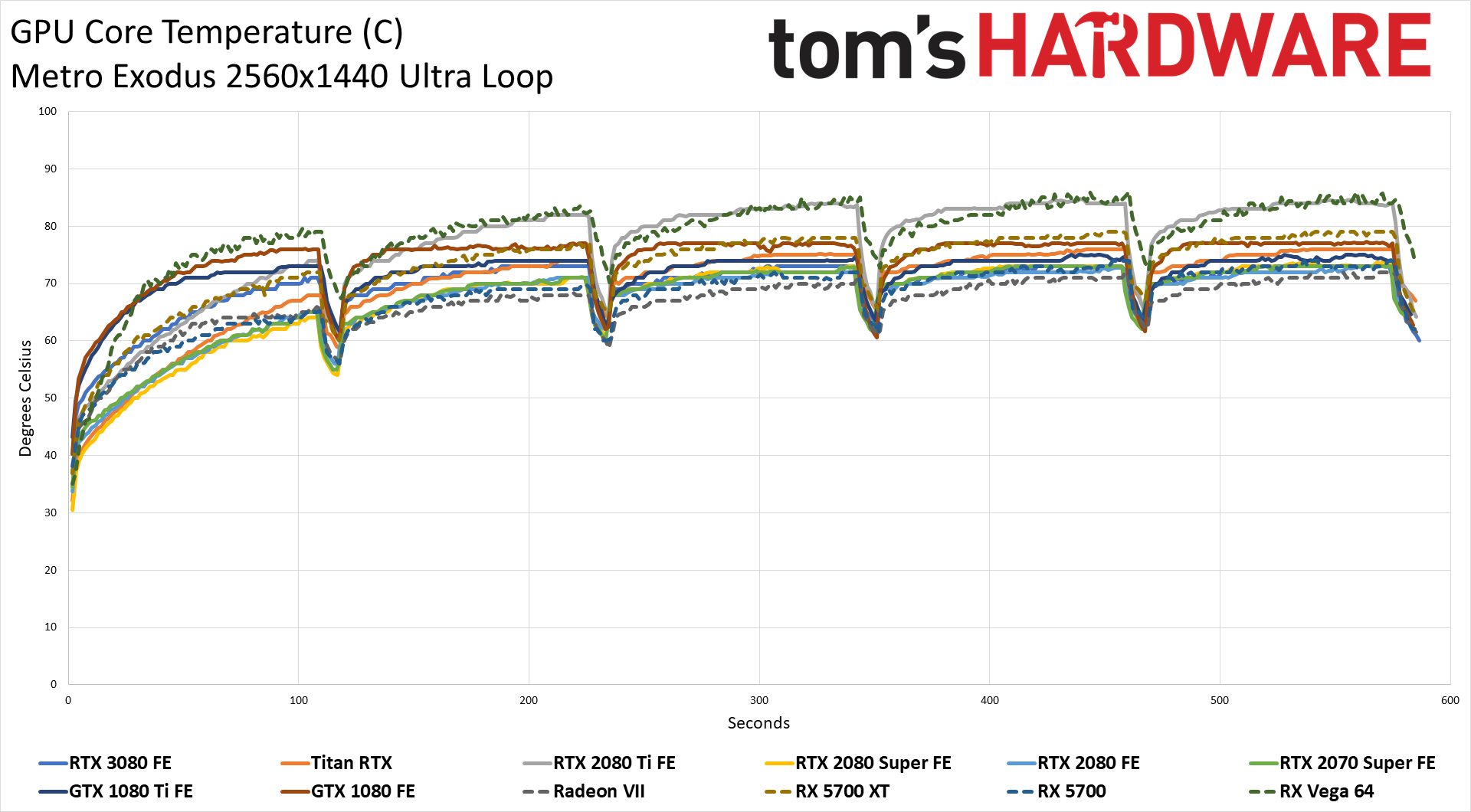

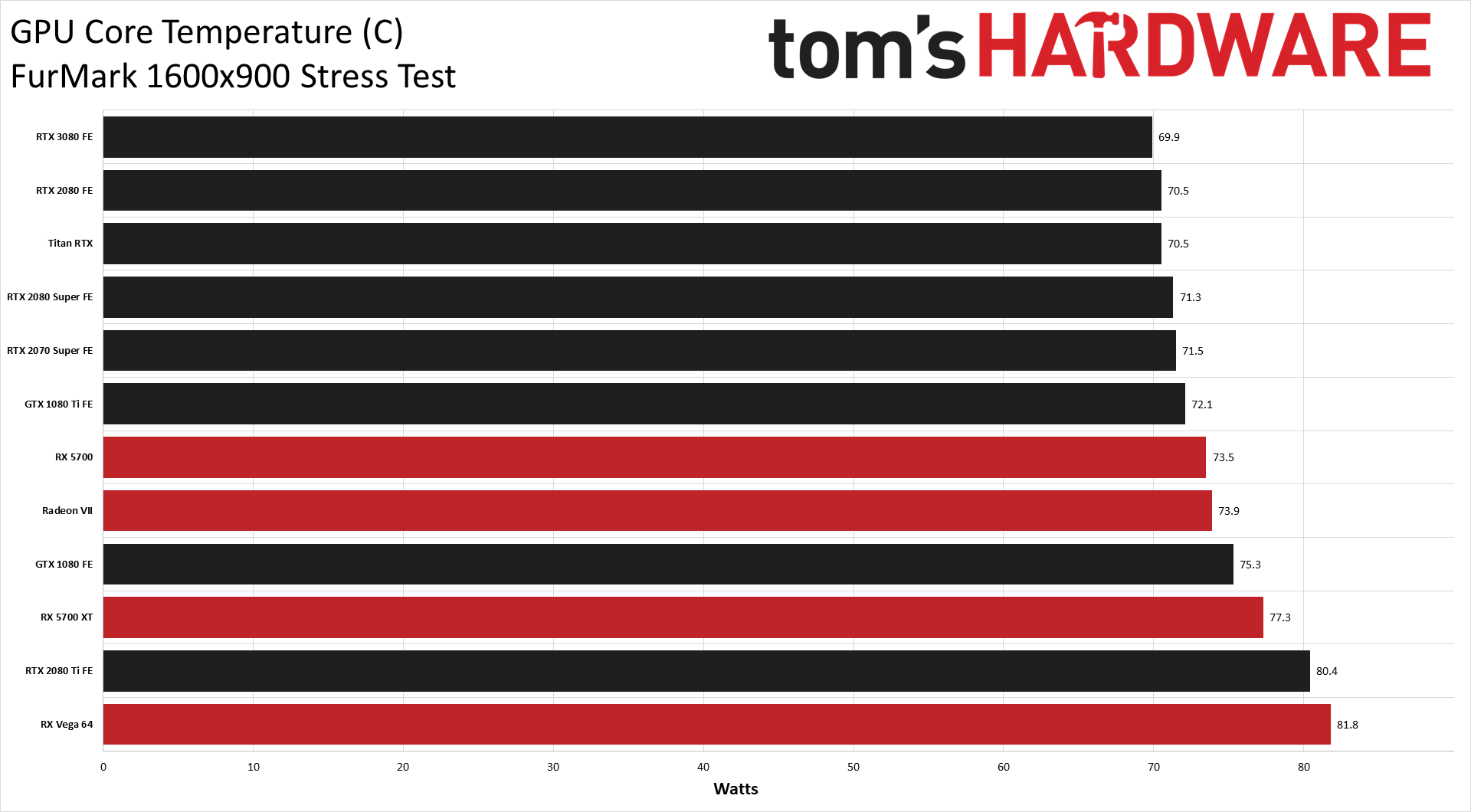

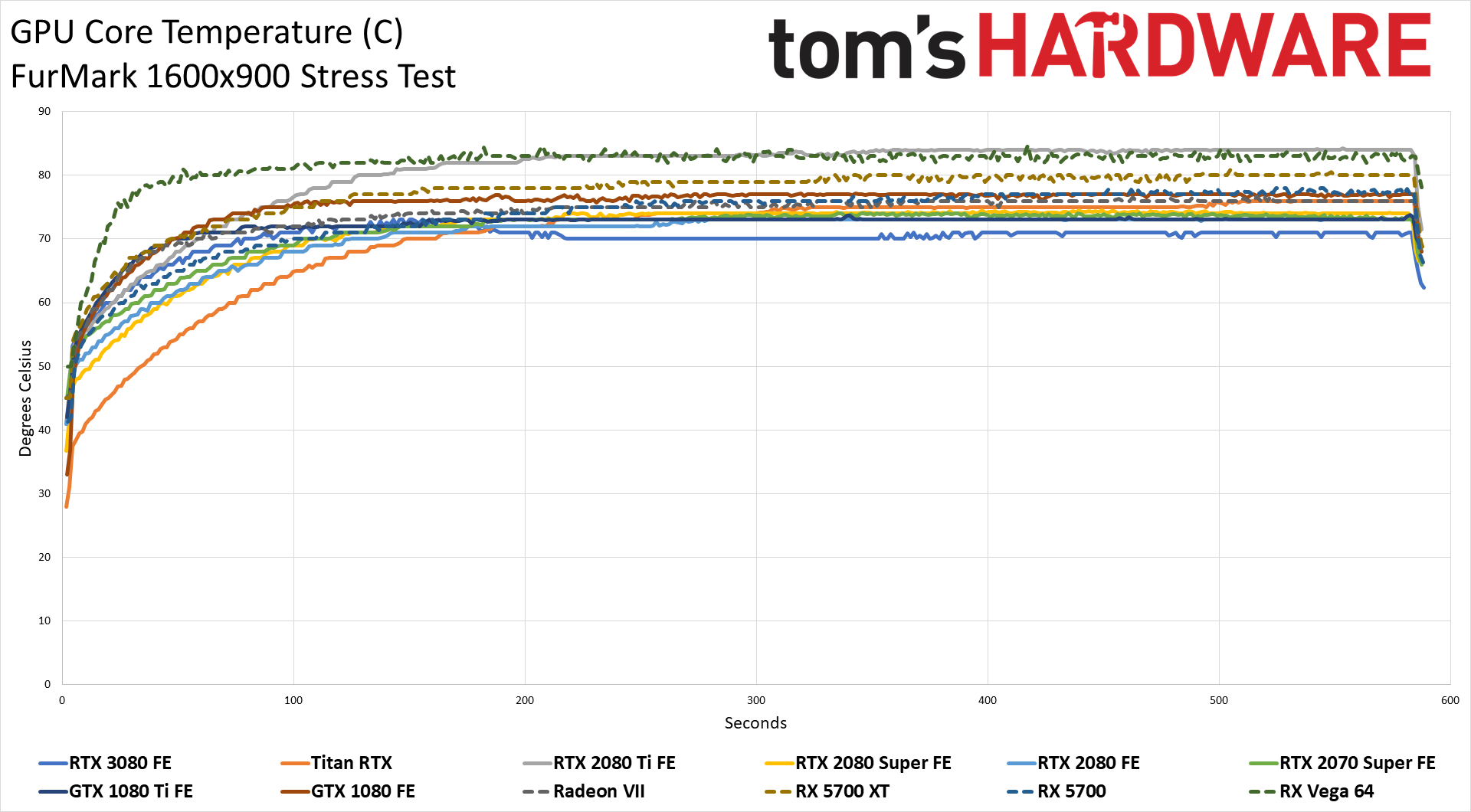

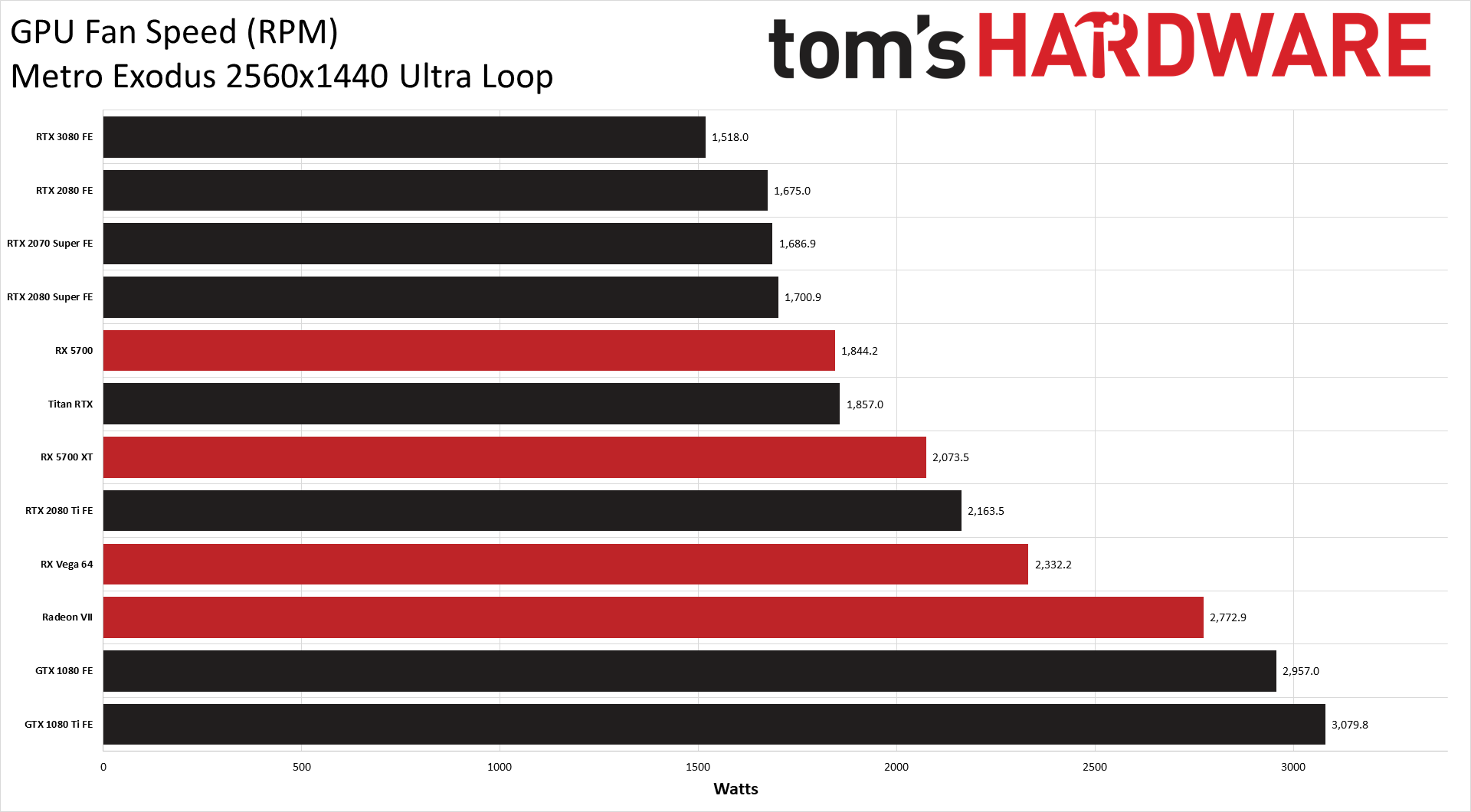

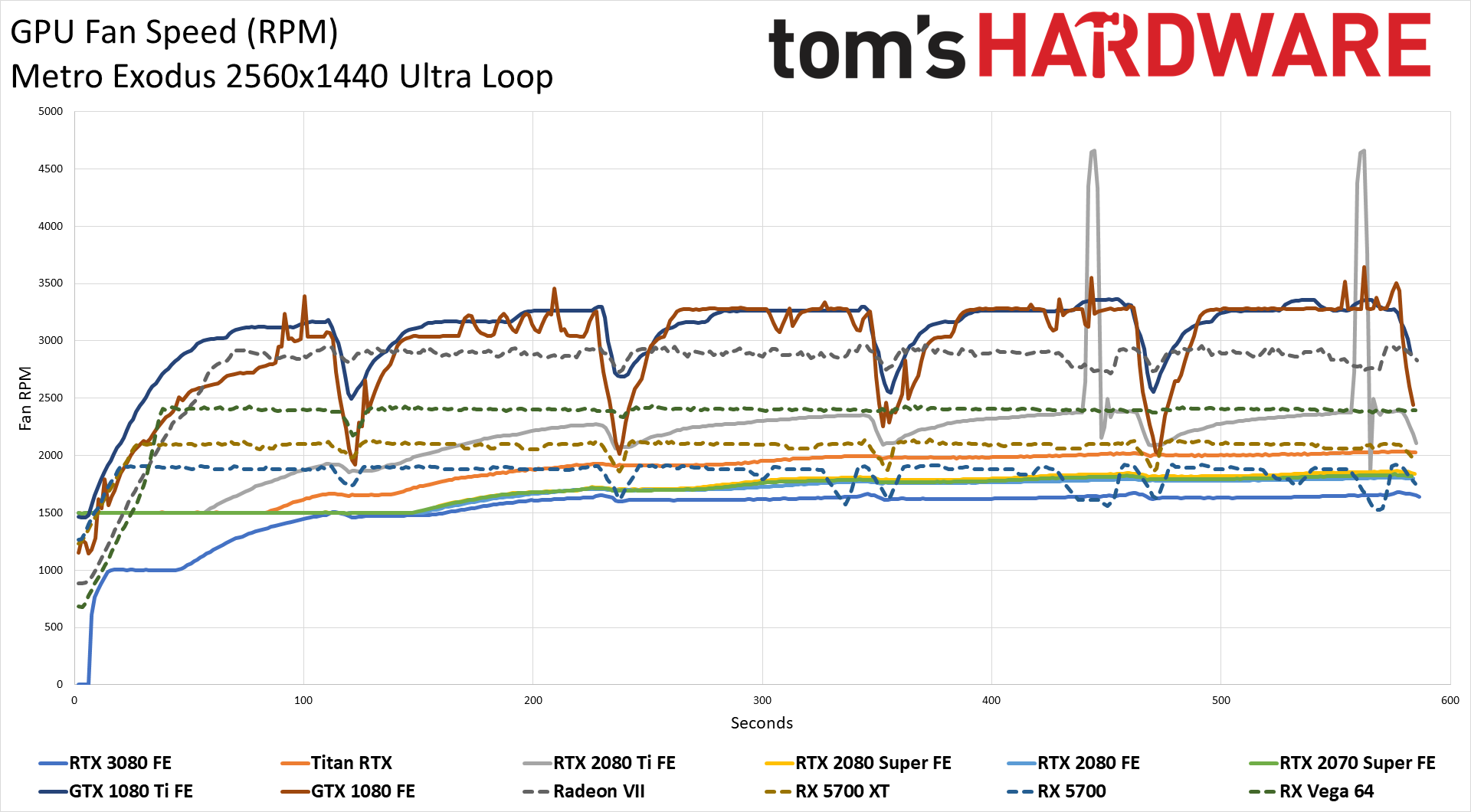

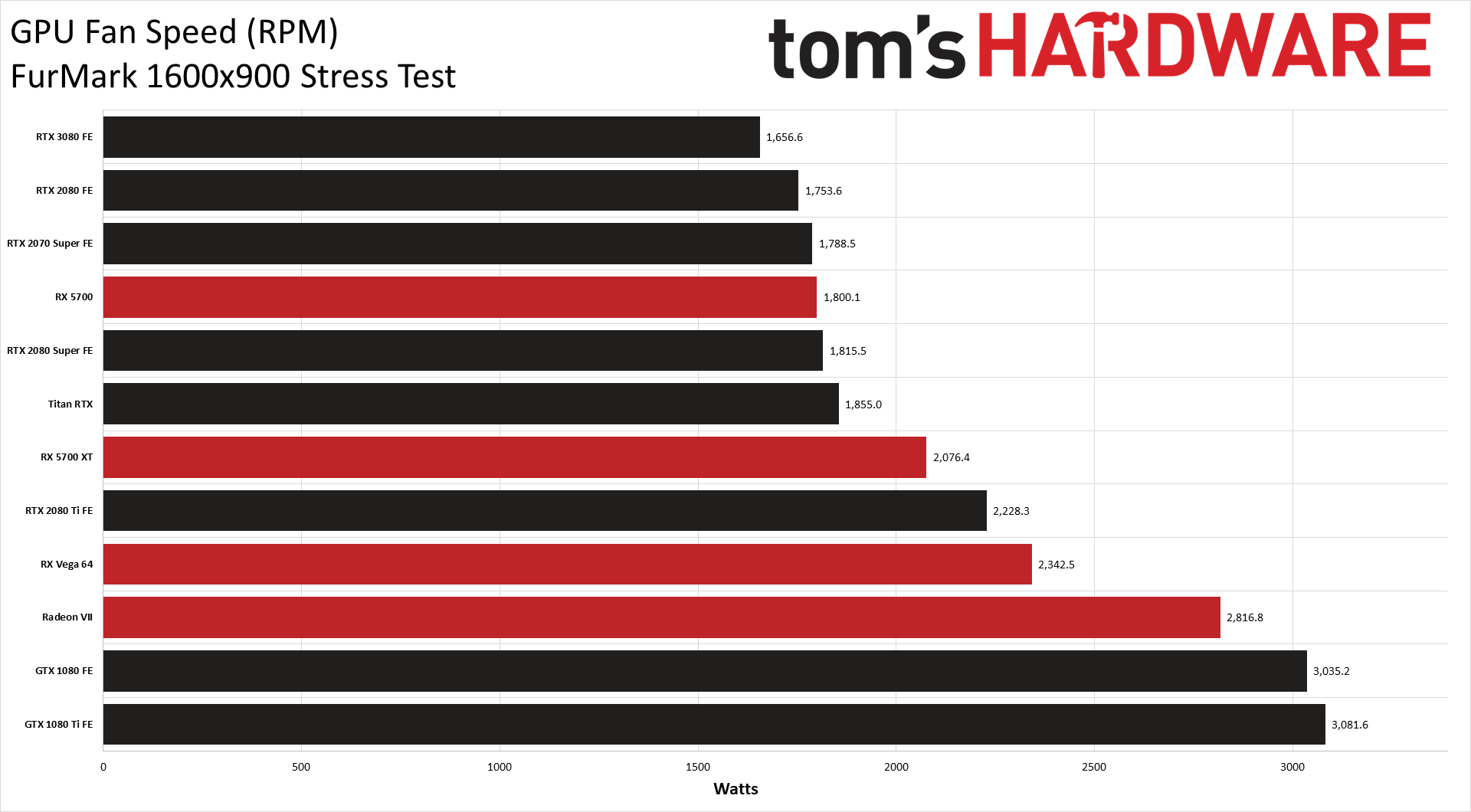

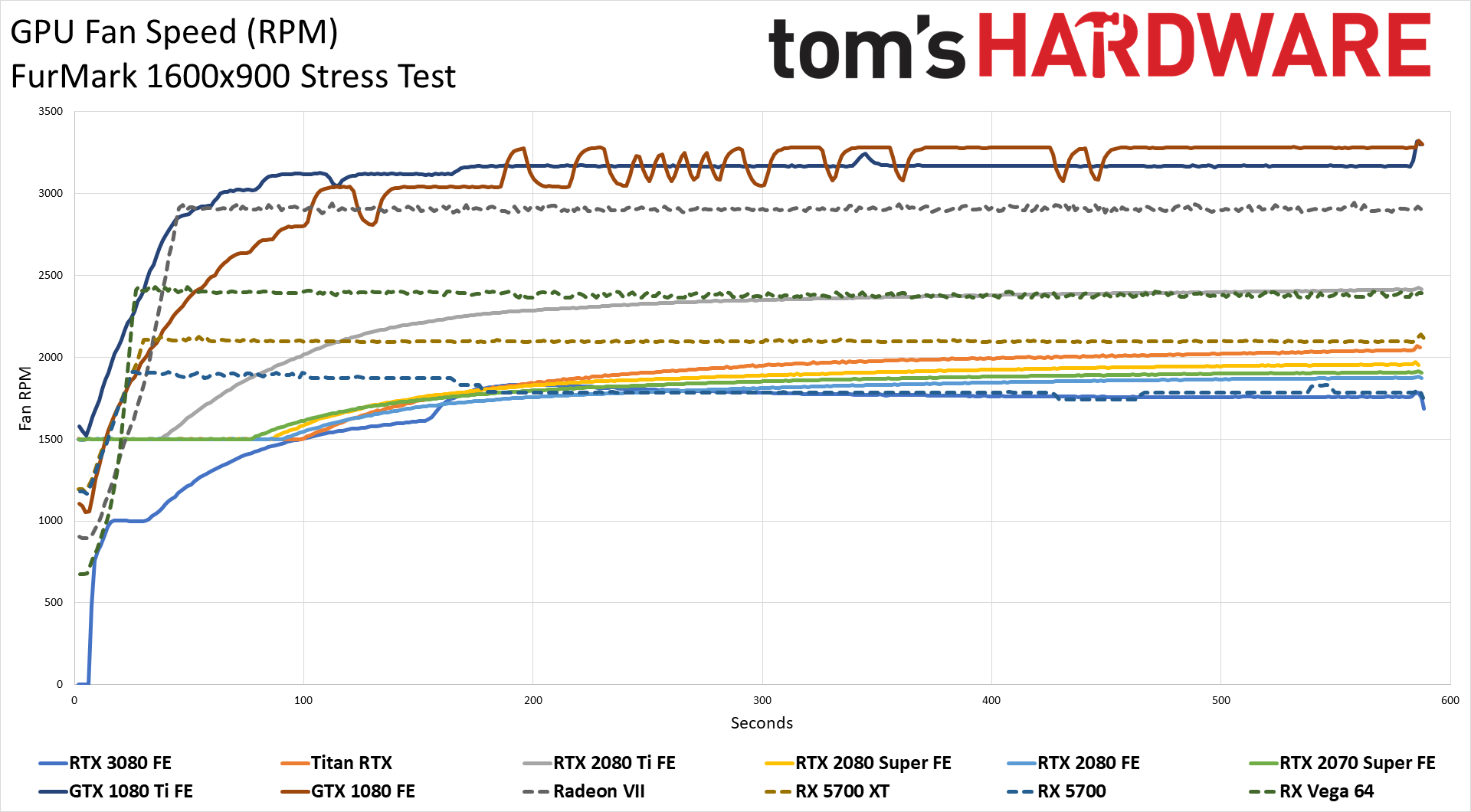

Temperatures and fan speeds are closely related to power consumption. More airflow from a higher-RPM fan can reduce temperatures, while at the same time increasing noise levels. We're still working on formal noise measurements, but the RTX 3080 is noticeably quieter than the various RTX 20-series and RX 5700 series GPUs. As for fan speeds and temperatures:

Wow. No, seriously, that's super impressive. Despite using quite a bit more power than any other Nvidia or AMD GPU, temperatures topped out at a maximum of 72C during testing and averaged 70.2C. What's more, the fan speed on the RTX 3080 was lower than any of the other high-end GPUs. Again, power, temperatures, and fan speed are all interrelated, so changing one affects the others. A fourth factor is GPU clock speed:

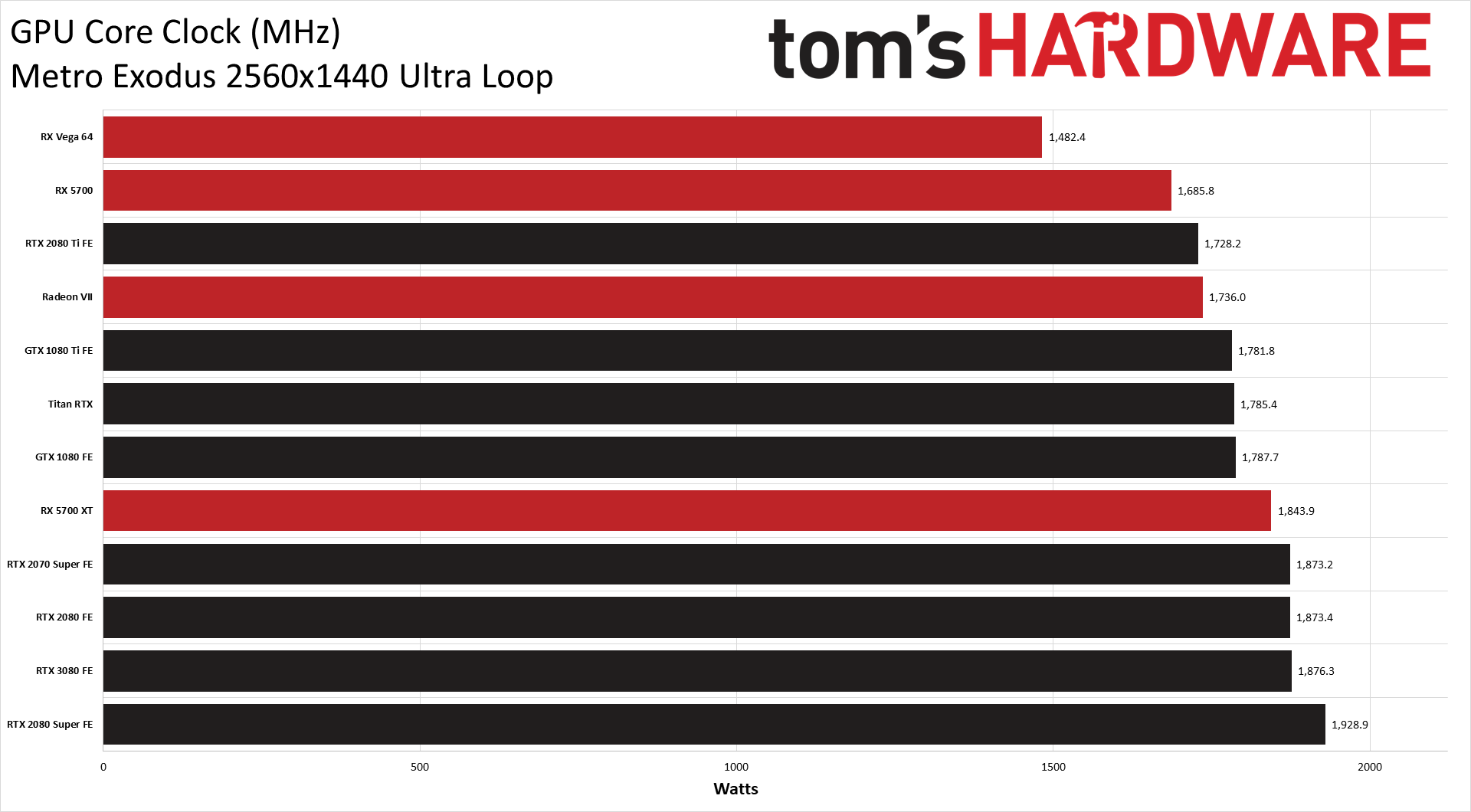

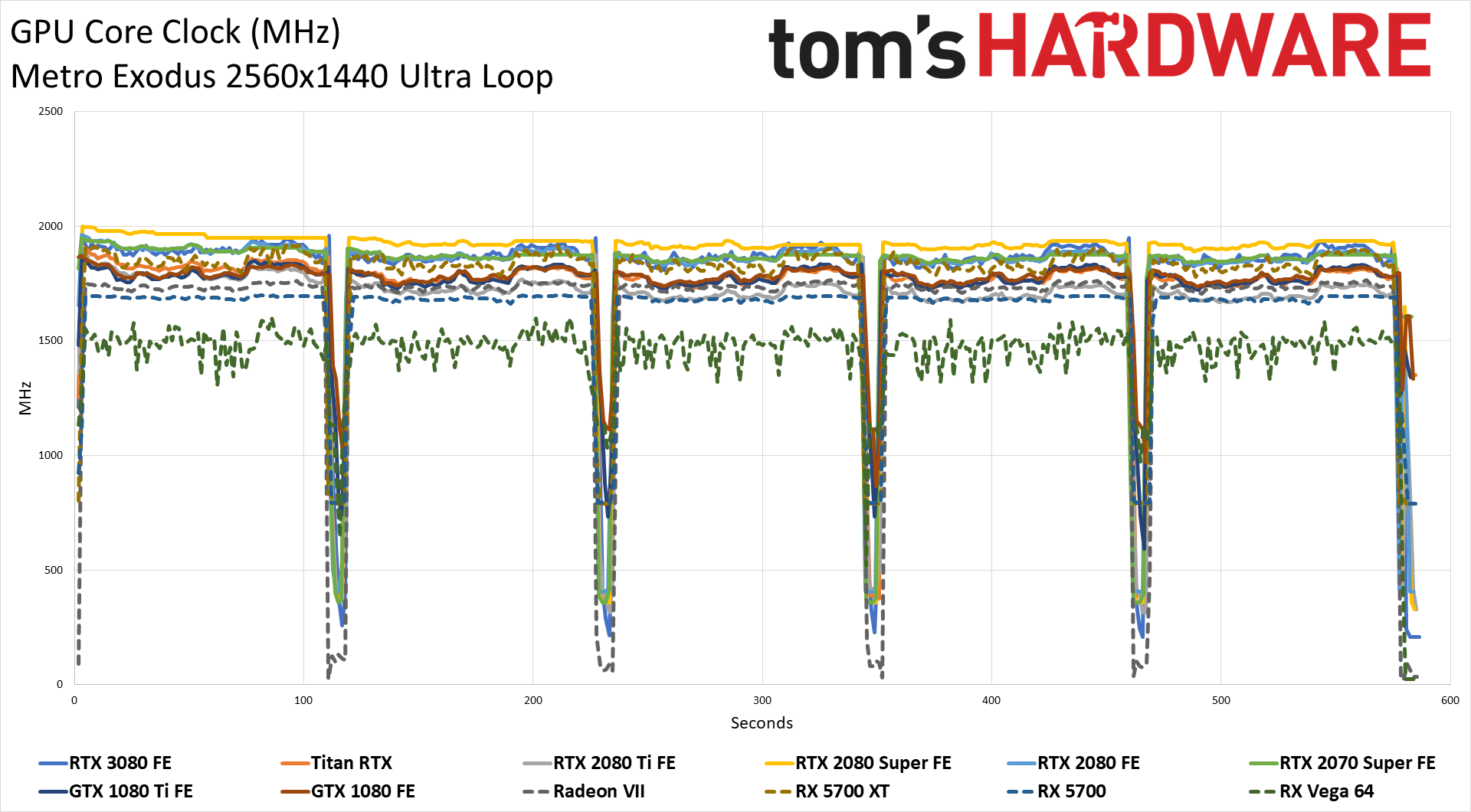

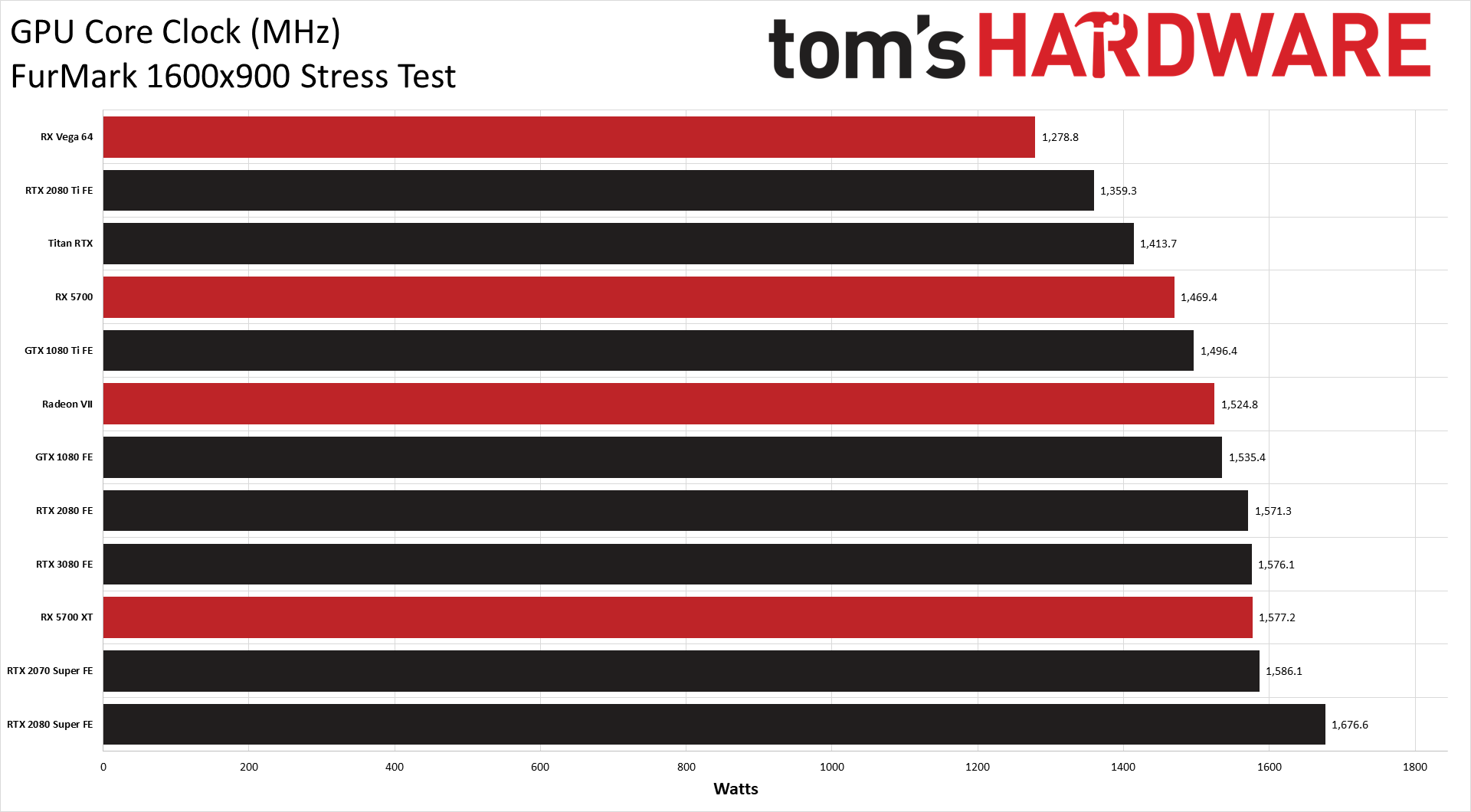

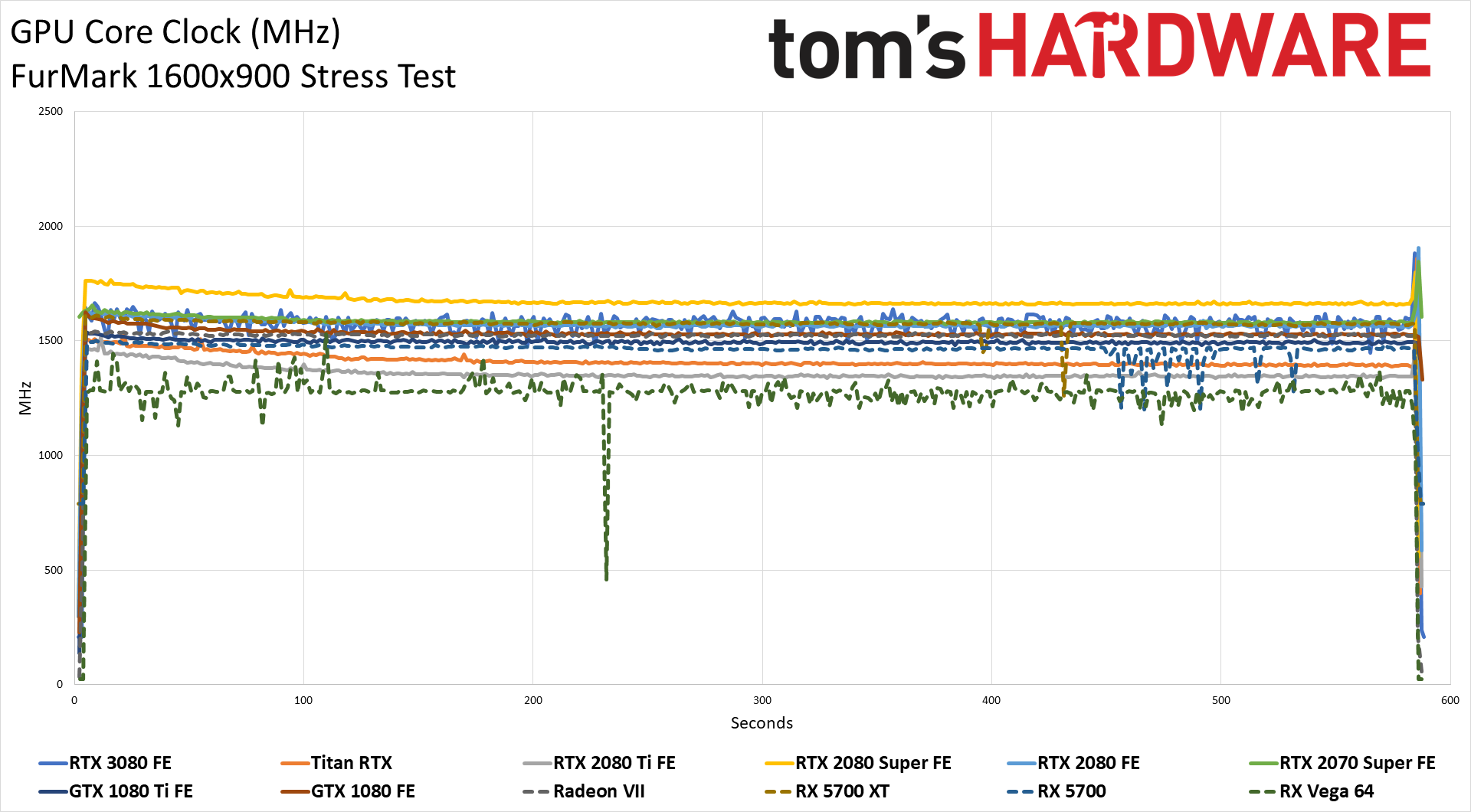

Here you can see that the RTX 3080 clocks to slightly lower levels than the 2080 Super, but that's the only GPU with a higher average speed in Metro Exodus. The 3080 drops a bit lower in FurMark, keeping power in check without compromising performance too much — unlike some other cards where we've seen them blow past official TDPs.

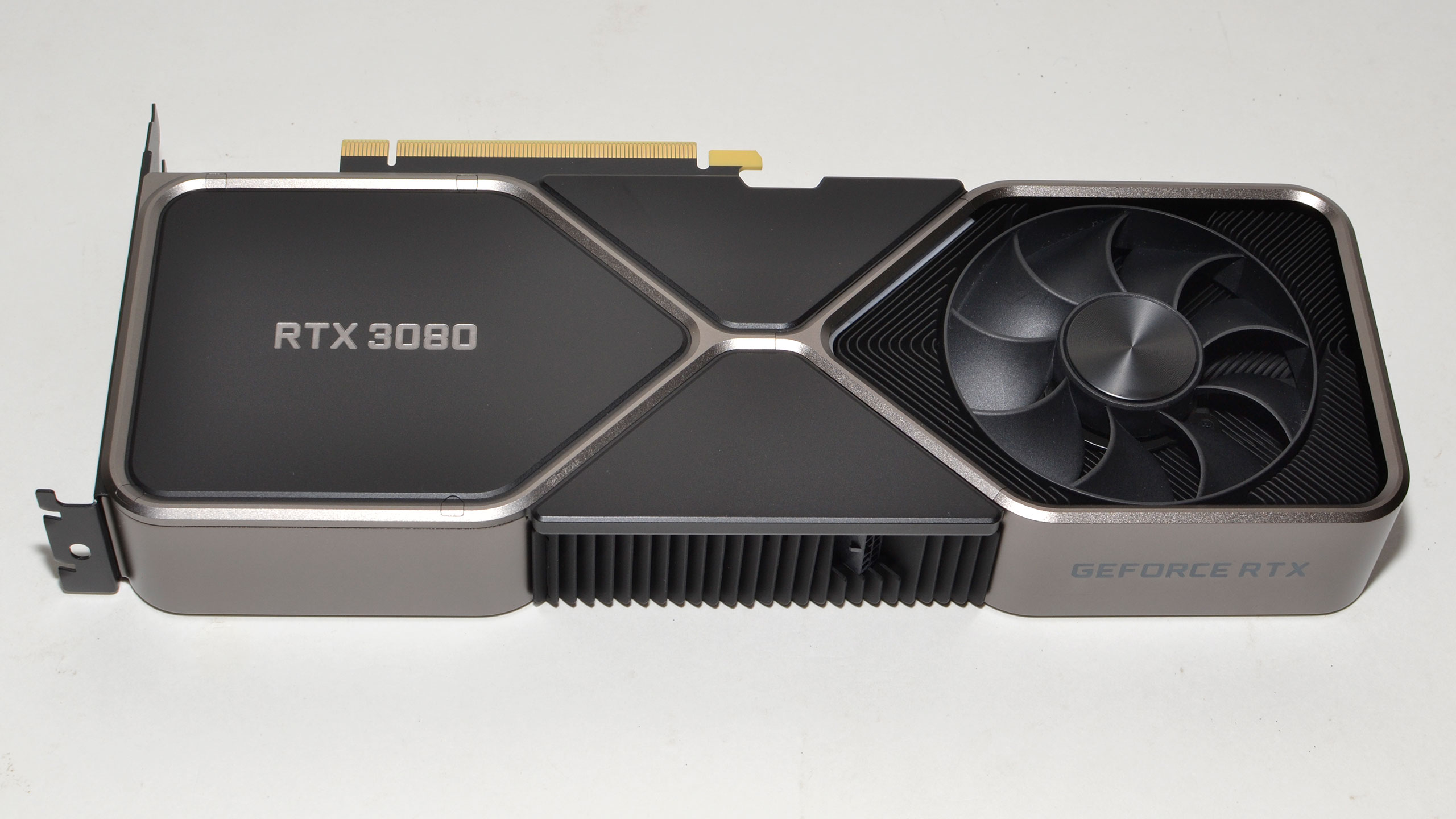

Overall, the combination of the new heatsink and fan arrangement appears more than able to keep up with the RTX 3080 FE's increased power use. It's one of the quietest high-end cards we've seen in a long time. There will undoubtedly be triple-slot coolers on some custom AIB cards that run just as quiet, but Nvidia's new 30-series Founders Edition cooler shows some great engineering talent.

GeForce RTX 3080: Is 10GB VRAM Enough?

In the past few weeks since the RTX 30-series announcement, there have been quite a few discussions about whether the 3080 has enough memory. Take a look at the previous generation with 11GB, or the RTX 3090 with 24GB, and 10GB seems like it's maybe too little. Let's clear up a few things.

There are ways to use more than 10GB of VRAM, but it's mostly via mods and questionably coded games, or by running a 5K or 8K display. The problem is that a lot of gamers use utilities that measure allocated memory rather than actively used memory (e.g., MSI Afterburner), see all of their VRAM being sucked up, and conclude they need more memory. Even some games (the Resident Evil 3 remake, for one) do this, informing gamers that it 'needs' 12GB or more to properly run the ultra settings. (Hint: It doesn't.)

Using all of your GPU's VRAM to basically cache textures and data that might be needed isn't a bad idea. Call of Duty Modern Warfare does this, for example, and Windows does this with system RAM to a certain extent. If the memory is just sitting around doing nothing, why not put it to potential use? Data can sit in memory until either it is needed or the memory is needed for something else, but it's not really going to hurt anything. So, even if you look at a utility that shows a game using all of your VRAM, that doesn't mean you're actually swapping data to system RAM and killing performance.

You'll notice when data actually starts getting swapped out to system memory because it causes a substantial drop in performance. Even PCIe Gen4 x16 only has 31.5 GBps of bandwidth available. That's less than 5% of the RTX 3080's 760 GBps of bandwidth. If a game really exceeds the GPU's internal VRAM capacity, performance will tank hard.
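The ratio is easy to check. The sketch below uses only the two bandwidth figures quoted above; the names are hypothetical, and it deliberately ignores latency and protocol overhead, which would only make the real-world gap larger.

```python
# Bandwidth figures from the paragraph above, in GB/s.
PCIE_GEN4_X16_GBPS = 31.5
RTX_3080_VRAM_GBPS = 760.0


def bandwidth_ratio(link_gbps: float, vram_gbps: float) -> float:
    """Return link bandwidth as a fraction of VRAM bandwidth."""
    return link_gbps / vram_gbps


ratio = bandwidth_ratio(PCIE_GEN4_X16_GBPS, RTX_3080_VRAM_GBPS)
slowdown = 1.0 / ratio
print(f"PCIe is {ratio:.1%} of VRAM bandwidth (about {slowdown:.0f}x slower)")
```

In other words, any data that has to come across the PCIe bus arrives at roughly one twenty-fourth the rate of data already sitting in VRAM.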

If you're worried about 10GB of memory not being enough, my advice is to just stop. Ultra settings often end up being a placebo effect compared to high settings — 4K textures are mostly useful on 4K displays, and 8K textures are either used for virtual texturing (meaning, parts of the texture are used rather than the whole thing at once) or not used at all. We might see games in the next few years where a 16GB card could perform better than a 10GB card, at which point dropping texture quality a notch will cut VRAM use in half and look nearly indistinguishable.

There's no indication that games are set to start using substantially more memory, and the Xbox Series X also sets aside 10GB of its 16GB as faster, GPU-optimized memory, so an RTX 3080 should be good for several years at least. And when it's not quite managing, maybe then it will be time to upgrade to a 16GB or even 32GB GPU.

If you're in the small group of users who actually need more than 10GB, by all means, wait for the RTX 3090 reviews and launch next week. It's over twice the cost for at best 20% more performance, which basically makes it yet another Titan card, just with a better price than the Titan RTX (but worse than the Titan Xp and 2080 Ti). And with 24GB, it should have more than enough RAM for just about anything, including scientific and content creation workloads.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

chickenballs I thought the 3080 was released in September.

Maybe it's considered a "paper-launch"

Nvidia won't like that :D

chalabam said: This article lacks a tensorflow benchmark

JarredWaltonGPU

FYI, it's the same review that we posted at the launch, just reformatted and paginated now. If I wasn't on break for the holidays, I'd maybe do a bunch of new charts, but I've got other things I'll be doing in that area soon enough.

Zeecoder said: This article is outdated.

MagicPants I still think the shortage is the big news for the 3080.

I might be paranoid, but I think there may be something to the fact that the top eBay 3080 scalper "occomputerparts" has sold 97 RTX 3080s from EVGA specifically and shipped them from Brea, California.

Anyone want to guess where EVGA is headquartered??? Brea, California.

Now it could be that some scalper wrote a bot and has been buying them off Amazon, BestBuy, and Newegg. But then why only sell EVGA cards? They'd have to program their bot to ignore cards from other vendors, which wouldn't make any sense.

TEAMSWITCHER

MagicPants said: I still think the shortage is the big news for the 3080.

I might be paranoid, but I think there may be something to the fact that the top eBay 3080 scalper "occomputerparts" has sold 97 RTX 3080s from EVGA specifically and shipped them from Brea, California.

Anyone want to guess where EVGA is headquartered??? Brea, California.

Now it could be that some scalper wrote a bot and has been buying them off Amazon, BestBuy, and Newegg. But then why only sell EVGA cards? They'd have to program their bot to ignore cards from other vendors, which wouldn't make any sense.

It's probably an inside job. Demand for RTX 3000 series is off the charts! I don't see any good reason to buy an AMD GPU other than for a pity-purchase. AMD is pricing their cards less, because they are less. In sheer performance it's just a bit less, but in next generation capabilities... it's a lot less. I will hold out for an RTX 3080. AMD won't catch up before Nvidia has filled the demand of the market. Patience will be rewarded.

LeszekSol What is the point of discussing an item (namely, the Nvidia RTX 3080 Founders Edition) that does not exist on the free market? Cards have been unavailable since the first day of "hitting the market," and even Nvidia has no idea (or perhaps no plans) when they will become available again. There are other cards based on a similar architecture, but actually a little different, so... WHAT FOR are you guys losing time discussing this? Let's forget about Nvidia, or let's put it on the shelf with the Moby Dick books...

PureMist Hello,

I have a question about the settings used in the testing for FFXIV. The chart says Medium or Ultra but the game itself only has Maximum and two PC and Laptop settings. So how do these correlate to the actual in game settings? Or are “Medium” and “Ultra” two custom presets you made for testing purposes?

Thank you for your time.

JarredWaltonGPU

Sorry, my chart generation script defaults to "medium" and "ultra" and I didn't think about this particular game. You're right, the actual settings are different in FFXIV Benchmark. I use the "high" and "maximum" settings instead of medium/ultra for that test. I'll see about updating the script to put in the correct names, though I intend to drop the FFXIV benchmark at some point regardless.

PureMist said: Hello,

I have a question about the settings used in the testing for FFXIV. The chart says Medium or Ultra but the game itself only has Maximum and two PC and Laptop settings. So how do these correlate to the actual in game settings? Or are “Medium” and “Ultra” two custom presets you made for testing purposes?

Thank you for your time.

acsmith1972 The cheapest I see this card for right now is $1800. I really wish these manufacturers wouldn't be pricing these cards based on bitcoin mining. It's once again going to price the rest of us out of getting any decent video cards till BTC drops again.