Qualcomm Snapdragon 805 Performance Preview

The Snapdragon brand is synonymous with performance. It creates anxiety that competing SoCs feel deep down in their silicon substrate. But can Qualcomm's new Snapdragon 805 maintain its dominance in the Android-based smartphone market?

Snapdragon 805: GPU And The 4K Revolution

Adreno 420 GPU

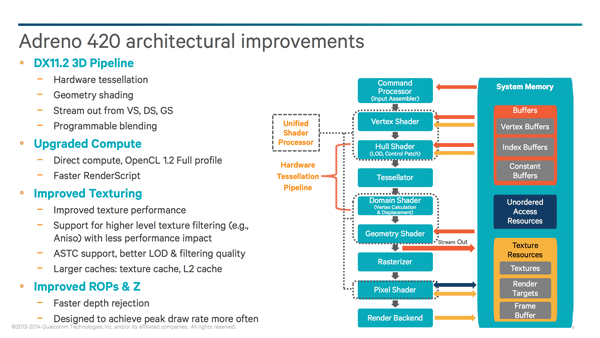

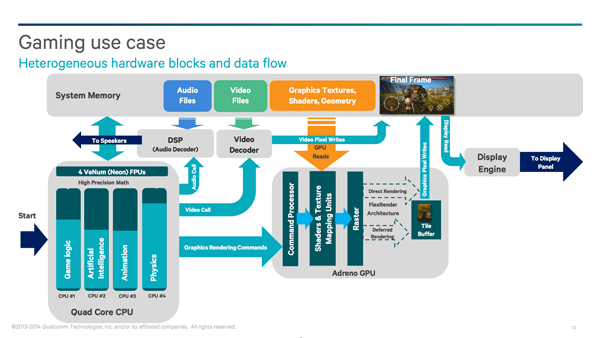

The new GPU now has direct access to main memory, while the Snapdragon 805's memory controller uses quality of service (QoS) to ensure each processing engine (GPU, CPU, ISP) receives the bandwidth and latency it requires for peak performance. Along with the bump in memory bandwidth, the texture and L2 caches are also larger. Adreno 420's rendering pipeline benefits from an enhanced early z-buffer test for faster depth rejection and from improvements to the ROPs (render output units) on the back-end.
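Qualcomm doesn't describe how Adreno 420's early-Z logic is built, but the general idea of early depth rejection can be sketched in a few lines of Python. This is a minimal, hypothetical model, not Adreno's pipeline. The `Fragment` type and `rasterize_with_early_z()` function are illustrative names. A smaller `z` is assumed to be closer to the camera, and incrementing a counter stands in for running an expensive fragment shader.

```python
from dataclasses import dataclass


@dataclass
class Fragment:
    x: int
    y: int
    z: float      # depth; smaller is assumed to be closer to the camera
    color: tuple  # (r, g, b)


def rasterize_with_early_z(fragments, width, height):
    """Depth-test each fragment BEFORE shading it; return (colors, shaded_count)."""
    depth = [[float("inf")] * width for _ in range(height)]
    colors = [[(0, 0, 0)] * width for _ in range(height)]
    shaded = 0
    for f in fragments:
        # Early-Z: a fragment behind what is already stored is rejected here,
        # so no shading work (and no color write) is spent on it.
        if f.z >= depth[f.y][f.x]:
            continue
        depth[f.y][f.x] = f.z
        shaded += 1                  # stand-in for running the fragment shader
        colors[f.y][f.x] = f.color
    return colors, shaded


near = Fragment(0, 0, 0.2, (255, 0, 0))
far = Fragment(0, 0, 0.8, (0, 0, 255))
print(rasterize_with_early_z([near, far], 1, 1)[1])  # front-to-back: 1 shaded
print(rasterize_with_early_z([far, near], 1, 1)[1])  # back-to-front: 2 shaded
```

The same two fragments cost one shader invocation when drawn front to back and two when drawn back to front, which is why early-Z pays off most when geometry arrives roughly in front-to-back order.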

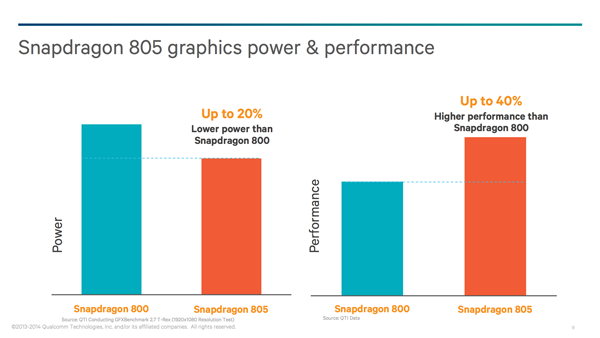

Qualcomm doesn’t provide any low-level details about its graphics architecture beyond those general enhancements. However, given the large increase in memory bandwidth and texture cache, I think it’s safe to assume that Adreno 420 wields more texture units. Qualcomm doesn’t mention whether any changes were made to the design or quantity of shader units, or even to GPU frequency, but based on our benchmark results, it’s likely that one or both of these increased as well. According to Qualcomm, all of its improvements add up to 40% higher performance and 20% lower power consumption than Snapdragon 800 running GFXBench 2.7's T-Rex test at 1920x1080. We'll see whether our benchmarks corroborate the company's claim, though we're forced to wait for an 805-based product to test the SoC's impact on battery life.
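Taken at face value, Qualcomm's two figures compound. The sketch below assumes that both the 40% performance gain and the 20% power reduction apply to the same T-Rex run at the same time. That is our reading of the claim, not something we have measured. Under that assumption, performance per watt and energy per frame work out as follows:

```python
def perf_per_watt_gain(perf_gain: float, power_cut: float) -> float:
    """Performance per watt relative to a baseline of 1.0 (perf) / 1.0 (power)."""
    return (1.0 + perf_gain) / (1.0 - power_cut)


ratio = perf_per_watt_gain(0.40, 0.20)   # Qualcomm's claimed T-Rex figures
energy_per_frame = 1.0 / ratio           # relative energy for the same rendered frame
print(f"{ratio:.2f}x perf/W, {energy_per_frame:.0%} of the energy per frame")
```

If both claims hold at once, that is 1.75x the performance per watt, or roughly 57% of Snapdragon 800's energy for each rendered frame.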

Adreno 420 does more than just raise the performance bar; it also improves rendering quality with support for OpenGL ES 3.1 and DirectX 11.2 (Adreno 3xx was limited to Direct3D feature level 9_3). It also adds support for geometry shaders and dynamic hardware tessellation; the latter can significantly reduce memory bandwidth requirements and power consumption while simultaneously increasing scene detail. Rather than storing additional geometry mesh data in main memory and pulling it into the GPU, hardware tessellation generates the additional geometry detail programmatically on-chip without ever touching main memory.

The image below shows the visual advantage of tessellation, and according to Qualcomm, for “this simple hornet graphics scene, hardware tessellation delivers a bandwidth savings of ~360 MB/s, and a memory footprint savings of ~20 MB. For larger games, the savings on memory footprint could be in GBs.”

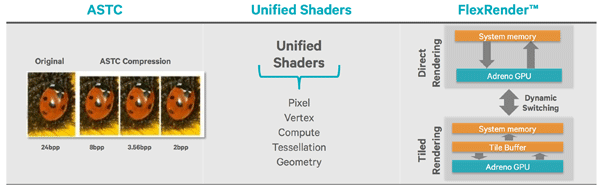
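To make on-chip geometry generation concrete, here is a simplified Python sketch of uniform triangle tessellation. Real tessellators in Direct3D 11 and OpenGL ES take per-edge and inside tessellation factors from a programmable stage. The single factor `n`, the helper names, and the 12-byte vertex (three 32-bit floats, position only) are therefore simplifying assumptions. The point is the ratio: three control points go in, and n² triangles come out without being stored in main memory.

```python
from typing import Iterator, Tuple

Vec3 = Tuple[float, float, float]
Tri = Tuple[Vec3, Vec3, Vec3]


def tessellate(p0: Vec3, p1: Vec3, p2: Vec3, n: int) -> Iterator[Tri]:
    """Uniformly split one triangle patch into n * n sub-triangles."""
    def vert(i: int, j: int) -> Vec3:
        # Barycentric grid: i steps toward p1, j steps toward p2.
        u, v = i / n, j / n
        w = 1.0 - u - v
        return tuple(w * a + u * b + v * c for a, b, c in zip(p0, p1, p2))

    for i in range(n):
        for j in range(n - i):
            yield vert(i, j), vert(i + 1, j), vert(i, j + 1)  # upright
            if i + j < n - 1:                                  # inverted
                yield vert(i + 1, j), vert(i + 1, j + 1), vert(i, j + 1)


def mesh_bytes(n: int, bytes_per_vertex: int = 12) -> Tuple[int, int]:
    """(pre-tessellated grid stored in memory, control patch only)."""
    grid_vertices = (n + 1) * (n + 2) // 2
    return grid_vertices * bytes_per_vertex, 3 * bytes_per_vertex


tris = list(tessellate((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), 16))
print(len(tris), mesh_bytes(16))
```

With n = 16, a pre-tessellated grid needs 1,836 bytes of positions per patch versus 36 bytes for the patch itself. Multiplied across every patch in a scene and every frame that fetches them, that gap is the kind of saving Qualcomm's hornet figures describe.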

Another addition to Adreno 420 that can both reduce memory usage/bandwidth and improve visual quality is support for Adaptive Scalable Texture Compression (ASTC), a next-generation, lossy, block-based texture compression format introduced alongside OpenGL ES 3.0 as an optional extension rather than a required feature. ASTC offers developers more flexibility in choosing the appropriate texture size and quality than the ETC2 format used in the previous Adreno generation.
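ASTC's flexibility comes from the fact that every block is 128 bits no matter how many texels it covers, so choosing a larger block footprint directly lowers the bit rate. The sketch below computes bits per texel and storage size for ASTC's 2D block footprints and compares them with ETC2's fixed rates (4 bpp for RGB8, 8 bpp for RGBA8). The function names are our own illustrations, not part of any texture-compression library.

```python
import math

ASTC_BLOCK_BITS = 128  # every ASTC block is 128 bits, whatever its footprint

# 2D block footprints (width x height in texels) defined by ASTC
ASTC_FOOTPRINTS = [(4, 4), (5, 4), (5, 5), (6, 5), (6, 6), (8, 5), (8, 6),
                   (8, 8), (10, 5), (10, 6), (10, 8), (10, 10), (12, 10), (12, 12)]

ETC2_BPP = {"ETC2 RGB8": 4.0, "ETC2 RGBA8": 8.0}  # fixed 4x4 blocks, 64 or 128 bits


def astc_bits_per_texel(bw: int, bh: int) -> float:
    return ASTC_BLOCK_BITS / (bw * bh)


def astc_size_bytes(width: int, height: int, bw: int, bh: int) -> int:
    # Partial blocks at the edges still occupy a full 128-bit block.
    blocks = math.ceil(width / bw) * math.ceil(height / bh)
    return blocks * ASTC_BLOCK_BITS // 8


for bw, bh in ASTC_FOOTPRINTS:
    size_kib = astc_size_bytes(1024, 1024, bw, bh) / 1024
    print(f"ASTC {bw}x{bh}: {astc_bits_per_texel(bw, bh):.2f} bpp, {size_kib:.0f} KiB")
for name, bpp in ETC2_BPP.items():
    print(f"{name}: {bpp:.2f} bpp, {1024 * 1024 * bpp / 8 / 1024:.0f} KiB")
```

For a 1024x1024 texture, ASTC spans from 1,024 KiB with 4x4 blocks down to about 116 KiB with 12x12 blocks. ETC2 RGB8, by comparison, is fixed at 512 KiB.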

The 420 continues the Adreno tradition of using Qualcomm’s FlexRender technology to dynamically choose between two different rendering methods: immediate-mode rendering and tile-based deferred rendering (Adreno uses a different technique than Imagination Technologies). The goal of FlexRender is to select the most efficient rendering technique for a given workload.
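Qualcomm doesn't say how FlexRender makes its choice. One plausible approach is to estimate external memory traffic for each method and pick the cheaper one, and the sketch below shows that idea purely as an illustration. Everything in it is hypothetical: the `FrameEstimate` fields, the traffic formulas, and `choose_mode()` are our own simplified model, not Qualcomm's driver logic.

```python
from dataclasses import dataclass


@dataclass
class FrameEstimate:
    """Hypothetical per-frame workload estimate (not real Adreno counters)."""
    pixels: int           # render-target size in pixels
    overdraw: float       # average fragments per pixel
    color_bytes: int      # bytes per pixel of color
    depth_bytes: int      # bytes per pixel of depth
    vertex_bytes: int     # geometry bytes fetched in one pass
    geometry_passes: int  # passes over geometry needed when binning (>= 2)


def immediate_mode_bytes(f: FrameEstimate) -> float:
    # Each fragment does a depth read + write and a color write in DRAM.
    per_fragment = 2 * f.depth_bytes + f.color_bytes
    return f.pixels * f.overdraw * per_fragment + f.vertex_bytes


def tiled_mode_bytes(f: FrameEstimate) -> float:
    # Color and depth stay in on-chip tile memory; final color is written once,
    # but geometry is read once per binning/rendering pass.
    return f.pixels * f.color_bytes + f.vertex_bytes * f.geometry_passes


def choose_mode(f: FrameEstimate) -> str:
    return "tiled" if tiled_mode_bytes(f) < immediate_mode_bytes(f) else "immediate"


heavy_overdraw = FrameEstimate(1920 * 1080, 3.0, 4, 4, 1_000_000, 2)
heavy_geometry = FrameEstimate(1920 * 1080, 1.0, 4, 4, 200_000_000, 4)
print(choose_mode(heavy_overdraw), choose_mode(heavy_geometry))
```

The toy model captures the usual trade-off. Tiling wins when overdraw is high and geometry is light, because color and depth traffic stay on-chip. Immediate mode wins when geometry is heavy enough that re-reading it for binning costs more than the framebuffer traffic it saves.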

Another efficiency feature is Dynamic Clock and Voltage Scaling (DCVS), which dynamically varies frequencies and voltages for each processing engine in the SoC. While this isn’t a new feature, the Adreno 420 GPU adds more power levels for finer-grained control, further reducing power usage.
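Why do more power levels help? Dynamic power in CMOS logic scales roughly with C·V²·f. A governor that can stop at an intermediate frequency, and run at that step's lower voltage, avoids overshooting to the next big step. The sketch below uses made-up operating-point tables and ignores leakage power. Qualcomm hasn't published Adreno 420's actual frequencies, voltages, or DCVS policy.

```python
# Hypothetical operating points: (frequency in MHz, voltage in volts).
COARSE_LEVELS = [(200, 0.80), (600, 1.05)]
FINE_LEVELS = [(200, 0.80), (300, 0.85), (400, 0.90), (500, 0.97), (600, 1.05)]


def dynamic_power(freq_mhz: float, volts: float, capacitance: float = 1.0) -> float:
    # Relative dynamic power, P ~ C * V^2 * f (arbitrary units).
    return capacitance * volts ** 2 * freq_mhz


def pick_level(levels, required_mhz):
    """Lowest operating point that still meets the required frequency."""
    for freq, volts in sorted(levels):
        if freq >= required_mhz:
            return freq, volts
    return max(levels)  # demand exceeds the table: run at the top level


demand = 320  # MHz the workload needs right now (hypothetical)
for name, levels in (("coarse", COARSE_LEVELS), ("fine", FINE_LEVELS)):
    freq, volts = pick_level(levels, demand)
    print(name, freq, round(dynamic_power(freq, volts), 1))
```

With only two levels, a 320 MHz demand forces the GPU up to 600 MHz at 1.05 V. The finer table settles at 400 MHz and 0.90 V, roughly halving dynamic power in this simplified model.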

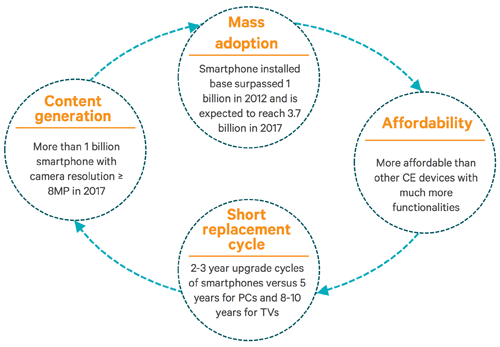

4K Video

Ultra-high-definition television (UHDTV), with a 4K resolution of 3840x2160 for the consumer version, is the latest video standard looking to replace high-definition television (HDTV), with its well-known 1920x1080 resolution. Living room adoption has been slow, however, due to the high cost of televisions and general lack of content. The situation is improving, though. Some 4K TVs sell for less than $1000, while Netflix and YouTube are currently streaming limited content in 4K. Amazon and Comcast are preparing to stream 4K video later this year, too.

For Qualcomm, big-screen TVs aren't driving 4K adoption. Rather, the company has its eye on the smaller, more mobile screens on our smartphones and tablets, as well as their 4K-capable cameras. With Snapdragon 805, Qualcomm hopes to push 4K harder. The new 805 is capable of concurrently driving its native panel at 4K (presumably at 60 Hz) and an external monitor at 4K/24 Hz.
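The dual-4K claim is easier to appreciate as raw numbers. This back-of-the-envelope sketch assumes the native panel runs at 60 Hz (as presumed above), 4 bytes per pixel, and no framebuffer compression, and it ignores blanking intervals. It only estimates the memory reads needed to refresh both screens, not the cost of rendering or decoding the content.

```python
UHD = (3840, 2160)
FHD = (1920, 1080)


def pixel_rate(width: int, height: int, refresh_hz: int) -> int:
    return width * height * refresh_hz


def scanout_gb_per_s(width: int, height: int, refresh_hz: int, bytes_per_pixel: int = 4) -> float:
    # Bytes fetched from memory per second just to refresh the display.
    return pixel_rate(width, height, refresh_hz) * bytes_per_pixel / 1e9


internal = pixel_rate(*UHD, 60)   # native panel, 60 Hz assumed
external = pixel_rate(*UHD, 24)   # external 4K monitor at 24 Hz
total_gb = scanout_gb_per_s(*UHD, 60) + scanout_gb_per_s(*UHD, 24)

print(UHD[0] * UHD[1] // (FHD[0] * FHD[1]))   # 4: UHD has four times 1080p's pixels
print(internal + external)                    # 696729600 pixels per second
print(f"{total_gb:.2f} GB/s of scanout traffic")
```

Under these assumptions, refreshing both 4K displays alone takes about 2.79 GB/s of memory reads before any rendering or video decoding happens.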

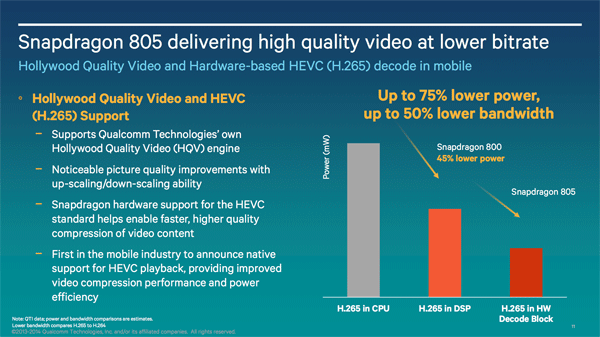

While Snapdragon 800/801 can encode/decode Ultra HD H.264 video in hardware, H.265 is handled in software. The 805 improves upon this by decoding 4K H.265 video in hardware. We'll have to wait for the Snapdragon 810 in 2015 for hardware-based H.265 encode, though. For now, the 805 can capture/encode Ultra HD video at 30 fps and 1080p content at up to 120 fps.
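The two capture modes ask for exactly the same pixel throughput, as a quick calculation confirms. We infer from this that the encoder is sized around a single budget of roughly 249 million pixels per second, but Qualcomm doesn't state that.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    return width * height * fps


uhd_30 = pixels_per_second(3840, 2160, 30)
fhd_120 = pixels_per_second(1920, 1080, 120)
print(uhd_30, fhd_120, uhd_30 == fhd_120)  # 248832000 248832000 True
```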

In the slide below, Qualcomm suggests up to a 75% power savings from the 805's hardware-based decode functionality.

Snapdragon 805 also includes Qualcomm’s Hollywood Quality Video (HQV) engine, a technology purchased from Integrated Device Technology in 2011. The HQV engine is supposed to improve image quality by reducing noise and optimizing image formatting and conversion from various formats. There are also image enhancement algorithms for low-resolution images.

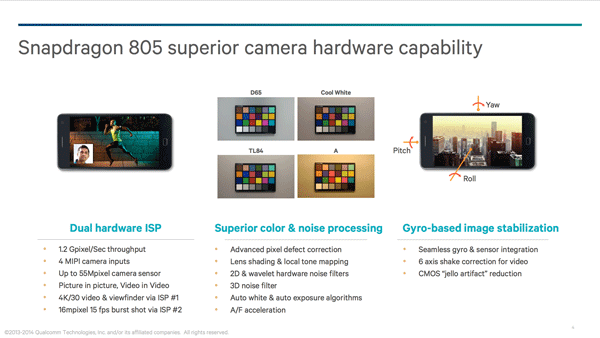

ISP

The Snapdragon 805 retains the dual ISP (image signal processor) design used previously, but gets a performance boost. It’s now capable of processing 1.2 gigapixels/s and image captures of up to 55 MP across a combination of four camera inputs (up from two inputs in Snapdragon 800). The additional ISP inputs enable stereo and depth camera support.
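Dividing the quoted ISP throughput by the pixels per frame gives a rough upper bound on capture rate. This assumes the 1.2 gigapixels/s figure is the aggregate for both ISPs. It also ignores sensor readout limits, memory bandwidth, and per-frame processing overhead, so real burst rates will be lower.

```python
ISP_PIXELS_PER_S = 1.2e9  # quoted aggregate ISP throughput


def max_frames_per_s(megapixels: float) -> float:
    """Theoretical frames per second if the ISP were the only bottleneck."""
    return ISP_PIXELS_PER_S / (megapixels * 1e6)


# 55 MP maximum capture, a 13 MP sensor, and an ~8.3 MP (3840x2160) 4K frame
for mp in (55, 13, 8.3):
    fps = max_frames_per_s(mp)
    print(f"{mp} MP: {fps:.1f} frames/s ({1000 / fps:.1f} ms per frame)")
```

At the 55 MP maximum, the ISP alone could handle about 21.8 frames per second, or roughly 46 ms per frame.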

Also included in the 805 are gyro-based image stabilization, enhanced noise reduction, and auto-focus acceleration.
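Gyro-based stabilization generally works by integrating the gyroscope's angular-rate samples over a frame interval and shifting (or warping) the image to cancel the resulting rotation. The one-axis sketch below shows that core idea. The sample rate, focal length in pixels, and function names are hypothetical, and the sketch ignores rolling shutter, multi-axis rotation, and translation. Qualcomm hasn't described its own implementation.

```python
import math


def integrate_gyro(rates_rad_s, dt_s: float) -> float:
    """Integrate angular-rate samples (rad/s) taken every dt_s seconds -> radians."""
    return sum(rate * dt_s for rate in rates_rad_s)


def compensating_shift_px(angle_rad: float, focal_length_px: float) -> float:
    # Rotating the camera by angle theta moves the image by about f * tan(theta)
    # pixels at the image center; shift the frame the opposite way to cancel it.
    return -focal_length_px * math.tan(angle_rad)


samples = [0.05] * 33          # hypothetical 1 kHz gyro, ~one 30 fps frame of shake
angle = integrate_gyro(samples, 0.001)
print(f"{compensating_shift_px(angle, 3000.0):.2f} px")
```

Even a small 0.05 rad/s wobble, sustained over one 30 fps frame, moves the image by roughly 5 pixels with a 3000-pixel focal length. Shifts of that size are what the stabilizer crops away.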

blackmagnum Trying not to be an Apple fanboy, but their A7 processor has supported 64-bit instructions since last year. They lead innovation due to their clientele having a more open-ended budget for the device than Android users (can't remember the link to the study).

JOSHSKORN Did I read that right? There won't be ANY 64-bit Android phones until 2015? It's going to take practically TWO YEARS to play catch-up to the iPhone? Mind you, most iPhone users don't know the difference between 32-bit and 64-bit anyway, but it's "better," and that's their logic for upgrading. Preying on stupid people basically has become (or always has been?) Apple's business model, and it's paid off. Sometimes, I wish I wasn't anti-Apple. C'mon, Android...get with the program!

rantoc I just find it tragically funny that more and more phone displays are almost at the same resolution as many general desktop PCs.

Also find it funny that their marketing team dares to call this "Ultra HD"; it would be fun to see a benchmark of this running at that 4K resolution in any 3D benchmark with decent detail =P

"It actually approaches what a fairly modern desktop CPU's integrated memory controller can do. All of this extra memory bandwidth isn’t for the CPU, though. It's reserved for Qualcomm’s new Adreno 420 GPU."

Yeah, it's mostly for the GPU, where a modern PC GPU alone pushes well over 300 GB/s. Close, no? =P

Memnarchon Damn! And I was hoping to see K1 in these benchmarks too, for a comparison. Oh well...

ta152h Is it too difficult for you guys to write a consistently good and accurate article? It's like you do the hard stuff, and then screw up details.

For example, why are some charts from 0 to somewhere above the max score, and others start at, for example, 2300 and go to 3000.

I realize you guys aren't really computer people from this terrible lack of attention to detail (which someone who does more than write about computers has to have as a personality flaw), but can't you hire someone who can look over this stuff and at least try to present it in a consistent way? Writers who aren't computer people make these types of mistakes, because their minds aren't ordered enough, but you guys really need someone like that, because all the articles suffer from imprecision and lack of clarity (and overuse of words like 'alacrity', which really implies emotion, and isn't a true synonym for speed. Again, precision ...)

For example, I'm looking at charts, and am shocked by some, then realize it's just because you guys screwed up the scaling, and can't stay consistent.

Don't worry, a chart that shows very little difference because you used the full scale isn't bad. Because, if you really think about it, neither is the performance, and if you can't see a big difference in the chart, you aren't going to see a big difference in the performance. But, when you see one bar over three times longer than another, and the real difference is less than 20%, don't you think that gives the wrong impression?

If you do all the hard work, and then screw up details, it's just not as good as it could be. And yes, I've learned these sites like to say things in a way it is correct, but then present it in a manner which gives the opposite impression. Try writing without bias, and maybe this will go away. Charts are one way, comparing Kabinis with Haswells are another. Commenting more on charts that read what you want, while just presenting charts you don't like the results of, are another way. Or commenting on the part of the chart you like, while ignoring the part you don't. It's not as subtle as you might think, or maybe it is, and you don't even realize your bias. But we do.

I used to love this site, especially when Thomas Pabst used to write in his crazy way. But it's slowly and inexorably getting worse. There are better sites now. Maybe skip the really bad car reviews (do you really think your opinions even approximate professional sites like Car and Driver? At all?) and focus more on producing better quality computer articles. It should be easy; you guys get a lot of good information and often do reviews that people want but other sites skip, but then screw it up with a lack of attention to detail and consistency.

esrever I find the inconsistency makes most of this completely pointless until the software actually gets optimized and the drivers start working.

hannibal Well, I really expect a new article in the near future where Tegra K1 and 805 are up against each other. And then 810. It will be interesting to see what 64-bit computing brings to mobile platforms... Mobile gaming is going to get quite serious in the next few years!

irish_adam I don't see why you are all so impressed with 64-bit. I mean, if you believe that the A7 is super amazing because it is 64-bit, then you're an idiot. The fact that it's 64-bit adds minimal performance; it's 100% gimmick, just so that they can claim to be the first.

It reminds me of when AMD released their first 64-bit chips and Microsoft released XP64: you soon realised that unless you had 4GB or more of RAM, there was no difference (well, except that none of your hardware drivers would work, grrrr).

Why would Qualcomm rush out a 64-bit chip when there isn't any real improvement to be had? Surely it's better that they focus on things that will actually improve performance and battery life? I mean, they haven't even finished a 64-bit version of Android yet, so what would it even run on? Until we see the need for more than 4GB of RAM on phones, I really don't see the point.