AMD Radeon R9 290 Review: Fast And $400, But Is It Consistent?

We have all the makings of a dramatic launch: new high-end hardware, a last-minute delay for more performance, a crazy twist based on retail hardware, and our own home-baked solution to AMD's noise problem. Does Radeon R9 290 impress us or fall short?

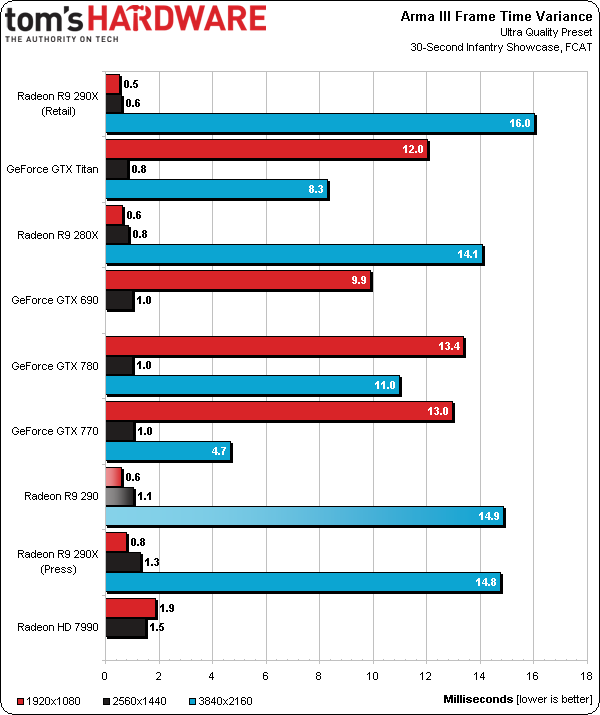

Results: Arma III

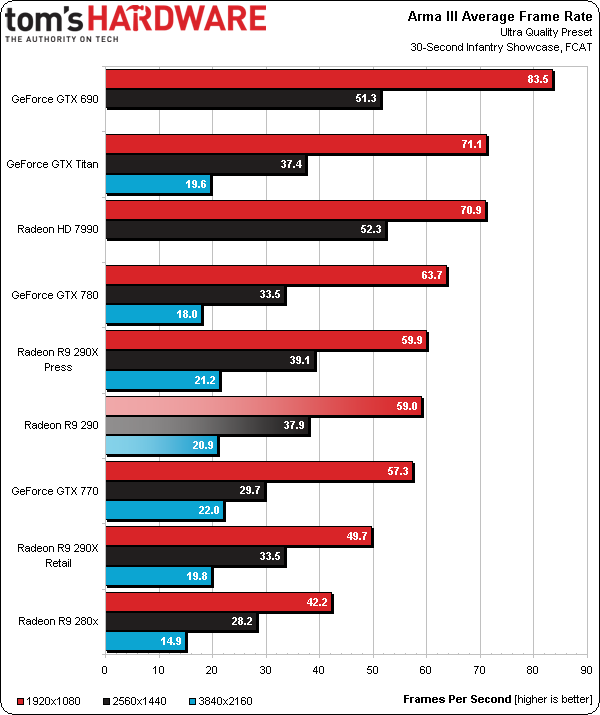

I wanted to cut down on the page count of this story, so all of the re-run benchmarks are piling into one chart with three resolutions. Again, everything you see in the next seven pages is the product of heating every graphics card up prior to testing.

Right out of the gate, Radeon R9 290 jumps up alongside our press-sampled R9 290X at 1920x1080, 2560x1440, and the unplayable 3840x2160. Of course, achieving this requires a more aggressive 47% fan speed ceiling, which isn’t as bad as the 290X’s Uber mode, but is still significantly louder than Quiet mode.

Meanwhile, the R9 290X we bought off the shelf starts under the $330 GeForce GTX 770 and $400 R9 290. Now you see why we’re making such a big deal about the variance between boards, right?

Fortunately for AMD, the shift to 2560x1440, where we’d expect these products to be used, shakes up the standings. The press-sampled R9 290 finishes in front of the GeForce GTX 780, and indeed the Titan as well. It continues to barely trail the 290X card we received from AMD, too. But then there’s the retail 290X, which manages to tie the $500 GeForce GTX 780, but loses to the 290 it should be beating.

By the time we hit 3840x2160, all of these cards are running too slowly for playable performance. You’d need to back Arma III off of its Ultra graphics quality setting—and after spending $3500 on a monitor, you aren’t going to want to do that.

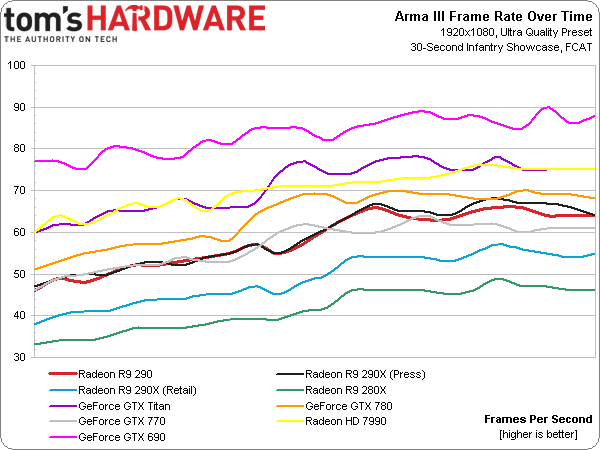

The frame rate over time charts demonstrate just how close Radeon R9 290 and 290X come to each other—at least the cards we were sent by AMD. Our retail board is consistently in a different (lower) class.

Nvidia’s cards have an issue with Arma at 1920x1080—we cannot FCAT their results without a ton of frames getting inserted into the video output. Charted out, these insertions are what mess with worst-case frame time variance at that resolution.

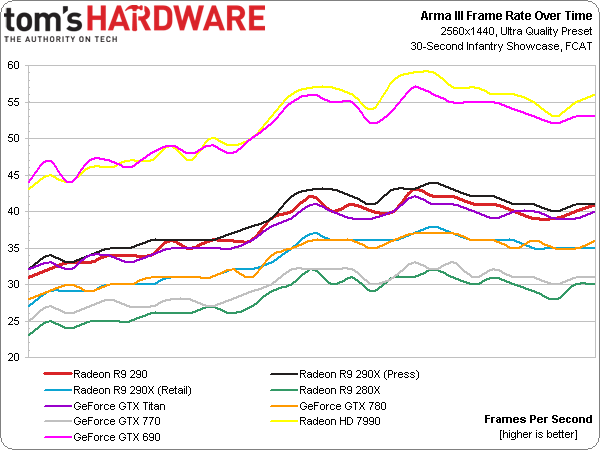

At 2560x1440, every card drops back to very low variance, which is what we want to see to confirm that there’s little in the way of stuttering going on.

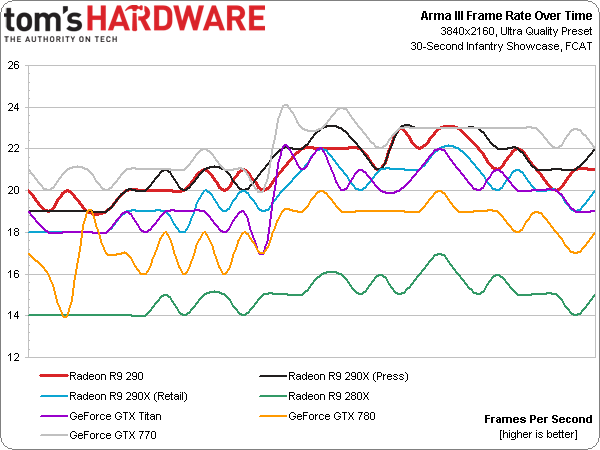

Stepping up to Ultra HD, however, frame rates drop so low, and the workload is so demanding, that variance between frames grows substantially.
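For readers who want to put a number on that kind of stutter, here is a minimal Python sketch. It is not the FCAT pipeline used for the charts above. It assumes "variance" means the absolute difference between one frame's render time and the next, and the function names and sample frame times are hypothetical, chosen purely for illustration.

```python
import math
from statistics import mean


def frame_time_deltas(frame_times_ms):
    """Absolute difference between each frame time and the one before it."""
    return [abs(b - a) for a, b in zip(frame_times_ms, frame_times_ms[1:])]


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list (pct between 0 and 100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(frame_times_ms):
    """Average frame rate plus average and 95th-percentile frame-to-frame delta."""
    deltas = frame_time_deltas(frame_times_ms)
    return {
        "avg_fps": 1000 / mean(frame_times_ms),
        "avg_delta_ms": mean(deltas),
        "p95_delta_ms": percentile(deltas, 95),
    }


# Hypothetical frame times in milliseconds, not measurements from this review.
smooth = [16.7, 16.9, 16.6, 16.8, 16.7, 16.8]
stutter = [16.7, 33.4, 16.6, 35.0, 16.7, 34.1]

print(summarize(smooth))
print(summarize(stutter))
```

The second made-up trace alternates between short and long frames, so its deltas come out far larger than the first trace's, and that is the pattern a viewer perceives as stutter.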

slomo4sho This is a win at $400! Good job AMD!

http://techreport.com/review/25602/amd-radeon-r9-290-graphics-card-reviewed/9

11865199 said: However, the two retail Radeon R9 290X boards in our lab are both slower than the 290 tested today. They average lower clock rates over time, pushing frame rates down. Clearly there’s something wrong when the derivative card straight from AMD ends up on top of the just-purchased flagships. So who’s to say that retail 290s won’t follow suit, and when we start buying those cards, they prove to underperform GeForce GTX 780? We can only speculate at this point, though anecdotal evidence gleaned from our experience with R9 290X is suggestive.

Chris, these results differ drastically from real world results from 290X owners at OCN... I understand that your observations are anecdotal and based on a very small sample size but do you mind looking into this matter further because putting such a statement in bold in the conclusion even though it contradicts real world experiences of owners just provides a false assumption to the uninformed reader...

The above claim has already escalated further than it should... A Swiss site actually has already rebutted by testing their own press sample with a retail model and concluded the following:

With the results in hand, the picture is clear. The performance is basically identical between the press copy and graphics card from the shelf, at least in Uber mode. Any single frame per second is different, which is what may be considered normal as bonds or uncertainty in the measurements.

In the quiet mode, where the dynamic frequencies to work overtime, the situation becomes slightly turbid. A minor performance difference can be seen in some titles, and even if it is not about considerable variations, the trend is clear. In the end, it does an average variation of only a few percent, ie no extreme levels. The reason may include slightly less contact with the cooler, or simply easy changing ambient temperature.

Heironious This is weird, something must be wrong with your system. I have an i5-2500, GTX 780, 16 GB G Skill 1333, 500 GB samsung SSD, Windows 8.1 64 bit, and on Ultra with 4x MSAA I get 80 - 100 FPS....

Heironious Multiplayer would add more stress to the CPUs / GPU's. Like I said, something is wrong with their machine. I would prob get higher on single player. Im going to check and find out.

slomo4sho 11865222 said: According to Tom's Benchmarks Nvidia's price drop just became meaningless

Now to wait for the non-reference cards at the end of the month!

jimmysmitty I agree that the stock cooling is pretty bad but in honesty, no matter how nice they make it after market is always better. The Titan may not have had after market but if it did it would have cooled better.

It looks like a good card for the price as it even keeps up with the $100 more GTX780. This is good as NVidia may drop prices even more which means we could also see a price drop on the 290X and I wouldn't mind a new 290X Toxic for sub $500.

guvnaguy In terms of potential performance it seems like a great card, but you get what you pay for with regards to chip quality and cooling.

Best to wait a month or two before buying to see how this all goes down