Talking Heads: Motherboard Manager Edition, Q4'10, Part 1

We've already talked to product managers representing the graphics industry. But what about the motherboard folks? We are back with ten more unidentified R&D insiders. The platform-oriented industry weighs in on Intel's, AMD's, and Nvidia's prospects.

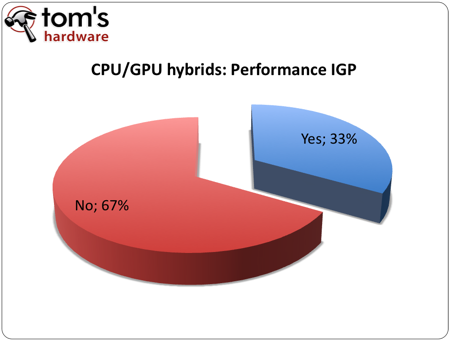

CPU/GPU Hybrids And Performance Integrated Graphics

Question: CPU/GPU hybrid designs like Sandy Bridge and Llano could reduce the need for a separate graphics card. Historically, integrated graphics have been inadequate for everything above entry-level desktops. Do you think the integrated graphics from the first generation of CPU/GPU hybrids are powerful enough to drive workstations and high-end desktops?

- High-end gamers will still require a more powerful 3D experience, which is difficult for a CPU/GPU hybrid architecture to deliver.

- A first-generation integrated graphics platform is not powerful enough. It might be sufficient for Web browsing, Flash-based games, and basic tasks in a simple user environment. However, its graphics performance is not suitable for gaming, 3D graphics work, or HD video playback. Some people still don't even know about this new technology, so AMD and Intel need us to educate their customers about its intended uses.

- This question is really hard to answer. It depends on the game developers. If I were Nvidia, I would work with game developers to create superior games that require a discrete graphics card. Besides, Intel and AMD will keep improving their onboard graphics, so this is a seesaw battle.

- Historically, fully integrated solutions have been powerful enough for general desktop use, but not for workstations and high-performance systems. I think those high-end users will still prefer discrete products that give better performance and are easier to upgrade.

- In the future, discrete graphics cards will be confined to the extreme segment only.

This question was designed to mirror a similar question that we asked in our graphics card survey. However, at the last minute, we changed it a bit. Instead of asking whether the hybrid processors were “powerful enough to replace low-end to mid-range discrete graphic solutions,” we asked whether they were “powerful enough to drive workstations and high-end desktops.” Admittedly, the workloads applied to workstations and high-end desktops can be quite large (and quite different from each other), so we probably should have asked two separate questions. Yet our respondents seemed to understand that we were trying to gauge the performance that first-generation hybrids could deliver.

We intentionally made the question less loaded for the VGA-oriented survey. The motherboard team has very little to fear from a struggling VGA division, so there was no need to pull any punches. Still, it was a bit surprising that we got back similar responses, especially when you consider that the graphics card and motherboard divisions largely work independently of one another. The only people with a more holistic picture are further up the corporate ladder. Besides, more than half of the respondents in our VGA survey work for graphics card-exclusive companies, so they don’t even have a motherboard team to converse with.

When it comes to workstations, hybrids could be assigned certain jobs, like transcoding. However, this is going to depend on both Intel and AMD locking down driver support. We're not so worried about this in AMD's case, but Intel has a spottier track record here.

As it pertains to discrete solutions in the $150+ range, there is no way the first generation of hybrids can provide the performance necessary to compete. Looking at the roadmaps beyond 2011, we’re still skeptical because the performance demands in the mid-range and high-end market segments increase each time the fastest hardware is refreshed.

In essence, the high end leads the way when it comes to new features and capabilities. We got DirectX 11 from flagship parts, then everything trickled down. Enthusiasts want that early access to the latest and greatest, and they're willing to pay for it. Processor-based graphics can't offer the same fix, nor will it ever be able to. A CPU with onboard graphics is going to evolve much more slowly because there are other subsystems in play.

Don't believe us? Look at what memory controller integration did to chipsets. Before AMD's Athlon 64, Intel, AMD, Nvidia, VIA, and SiS could all differentiate their core logic by improving memory performance. Controllers changed with every product generation, and you'd see performance improvements in rapid succession, with each new chipset adding support for the next-fastest memory standard. Once the memory controller migrated from the northbridge onto the CPU, the reason to even consider an nForce chipset nearly dried up (save for SLI support). But notice that AMD couldn't change that controller as often. Adoption of DDR2 and DDR3 was much slower as a result; specifically, Intel got to DDR2 ahead of AMD. Fortunately, AMD's onboard controller gave it enough performance that the technology migration wasn't necessary.


That won't be the case with onboard graphics, though. Now we'll have integrated GPUs set in stone for extended periods, delivering middling performance, so the delay between generations will be felt more painfully. And that's why the vendors selling discrete graphics will continue to excel at the high end. Hell, Intel isn't even supporting DirectX 11 with Sandy Bridge. How long will it be before we see Intel make the jump? Oh, we know what you're saying: "Who cares in the entry-level space?" Indeed, there will be a contingent of business-oriented folks who do just fine with DX10 support and fixed-function video decoding. There will even be gamers who might previously have bought $75 graphics cards who forgo the add-in board. But even with such an encroachment on the entry-level space, we simply don't see hybrid processor architectures evolving quickly enough to keep up with graphics development, and our respondents would seem to concur (albeit in their own ways).


dannyboy3210: I have this nagging feeling that discrete graphics options will probably be around for another 10-15 years, at least.

If you factor in that a fused CPU/GPU will cost a bit more than a plain CPU, and you plan on doing any gaming at all, why not invest an extra $30 or so (over the cost of the CPU/GPU fusion, not just the CPU) and get something that will game about twice as well and likely support more monitors to boot?

Edit: That said, after the slow release of Fermi, I bet everyone's wondering what exactly is in store for Nvidia in the near future; as this article says, there seems to be a lot of ambivalence on the subject.

sudeshc: I would rather see improvements in chipsets than in CPUs and GPUs. Those are already doing a great job, but we need chipsets with fewer and fewer limitations and bottlenecks.

ta152h: I'm kind of confused why you guys are jumping on 64-bit code not being common. There's no point for most applications, unless you like taking more memory and running slower. 32-bit code is denser, which improves its own cache hit rates and helps other apps have higher cache hit rates too.

Unless you need more memory, or are working with numbers over 2 billion, there's absolutely no point in it. 8-bit to 16-bit was huge, since adding over 128 is pretty common. 16-bit to 32-bit was huge, because segments were a pain in the neck, and 32-bit mode essentially removed them. Plus, adding over 32K isn't that uncommon. 64-bit mode adds more registers and the like, but even so, it is often slower than 32-bit code.

SSE and SSE2 would be better comparisons. Four years after they were introduced, they had pretty good support.

It's hard to imagine discrete graphics cards lasting indefinitely. They will more likely go the way of the math coprocessor, but not in the near future. Low latency should make a big difference, but I'd guess it won't happen unless Intel introduces a uniform instruction set for graphics (or basically builds one into the processor/GPU complex), which would allow greater compiler efficiency and tighter integration. I'm a little surprised they haven't attempted it, but that would leave NVIDIA out in the cold, and maybe there are non-technical reasons they haven't done it yet.

Draven35, quoting the article: "CUDA was a fairly robust interface from the get-go. If you wanted to do any sort of scientific computational work, Nvidia's CUDA was the library to use. It set the standard. Unfortunately, as with many technologies in the PC industry kept proprietary, this has also limited CUDA's appeal beyond specialized scientific applications, where the software is so niche that it can demand a certain piece of hardware."

A lot of the scientific software vendors I have communicated with about this sort of thing have actually been hesitant to code for CUDA because, until the release of the Fermi cards, floating-point support in CUDA was essentially limited to single precision. They were *very* excited about the hardware releases at SIGGRAPH...

enzo matrix: It's odd how everyone ignored workstation graphics, even when asked about them in the last question.

K2N hater: That will only replace discrete video cards once motherboards ship with dedicated video RAM and the CPU provides a dedicated bus for it.

Until then, processors with integrated GPUs will perform pretty much the same as platforms with motherboard-integrated graphics, since the bottleneck will still be RAM latency and bandwidth.

elbert: The death of discrete will never occur, because the hybrids are limited like consoles. Even if the CPU makers could dedicate large amounts of resources to the hybrid GPU, those resources would be stripped away by refreshes. And considering how many people once thought motherboard-integrated graphics would kill discrete cards, the margin of error on these estimates kind of kills the percentages.

From what I have read, AMD's Llano hybrid GPU is about equal to a Radeon HD 5570. By next year, Llano has no chance of killing sales of $50+ discrete solutions. I think the hybrids will have little effect on discrete solutions, and your $150+ figure is off. The only thing a hybrid really means is potentially more CPU performance when a discrete card is used. Another difference: unlike motherboard-integrated GPUs, which go to waste, the hybrids will use the integrated GPU for other tasks.

Onus, quoting sohaib_96: "Can't we get an integrated GPU as powerful as a discrete one??" No. There are two reasons that come to mind. The first is heat. It is hard to dissipate that much heat in such a small area. Look at how huge both graphics card and CPU coolers already are, even the stock ones.

The second is the manufacturing defect rate. As the die gets bigger, the chance of a defect grows, and the share of defect-free dies falls off roughly exponentially. The yields would be so low as to make the "good" dies prohibitively expensive.

If you scale either of those down enough to overcome these problems, you end up with something too weak to be useful.
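Onus's die-size argument can be made concrete with the textbook Poisson yield model, in which the fraction of defect-free dies is Y = exp(-A × D0) for die area A and defect density D0. The sketch below is only an illustration under stated assumptions: the defect density, the 300 mm wafer, and the decision to ignore edge loss and scribe lines are hypothetical simplifications, not figures from any foundry or from the commenter.

```python
import math

# Hypothetical 300 mm wafer; usable area approximated as the full disc (no edge exclusion).
WAFER_AREA_CM2 = math.pi * 15.0 ** 2


def poisson_yield(die_area_cm2: float, defect_density_per_cm2: float) -> float:
    """Fraction of dies expected to have zero defects under the Poisson model Y = exp(-A * D0)."""
    return math.exp(-die_area_cm2 * defect_density_per_cm2)


def relative_cost_per_good_die(die_area_cm2: float, defect_density_per_cm2: float) -> float:
    """Wafer cost (normalized to 1.0) divided by the expected number of defect-free dies."""
    # Gross dies per wafer, ignoring edge loss and scribe lines (a simplifying assumption).
    gross_dies = WAFER_AREA_CM2 / die_area_cm2
    good_dies = gross_dies * poisson_yield(die_area_cm2, defect_density_per_cm2)
    return 1.0 / good_dies


if __name__ == "__main__":
    d0 = 0.5  # hypothetical defect density, defects per cm^2
    for area in (1.0, 2.0, 3.0):
        print(f"{area:.1f} cm^2: yield {poisson_yield(area, d0):.1%}, "
              f"relative cost per good die x1000: {relative_cost_per_good_die(area, d0) * 1000:.2f}")
```

With the hypothetical D0 of 0.5 defects/cm², doubling die area from 1 cm² to 2 cm² multiplies the cost per good die by 2 × e^0.5, roughly 3.3 rather than 2: half as many dies fit on the wafer, and a smaller share of them work. Clustered-defect models such as the negative binomial model generally predict a gentler yield loss for large dies than plain Poisson, so treat this as a pessimistic illustration.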

Onus, quoting elbert: "...From what I have read AMD's Llano hybrid gpu is about the equal to a 5570. Llano by next year has no chance of killing sales of $50+ discrete solutions..." Although the reasoning behind this is mostly sound, I'd say your price point is off. Make that $100+ discrete solutions. A typical home user will be quite satisfied with HD 5570-level performance, and will even be able to play many games at lowered settings and/or resolution. As economic realities push people to do more with less, they will realize that this level of performance suits them quite nicely. A $50 discrete card doesn't add a whole lot, but a $100 card very definitely does, and might be the jump that becomes worth taking.