Nvidia's Tegra 4 GPU: Doubling Down On Efficiency

After fending off barbs from its competition about Tegra 3's power consumption under load, Nvidia wanted to show off the architectural efficiency of Tegra 4. We sat down with the company for a deep-dive on the SoC family's unique GPU implementations.

Stepping Through Tegra 4

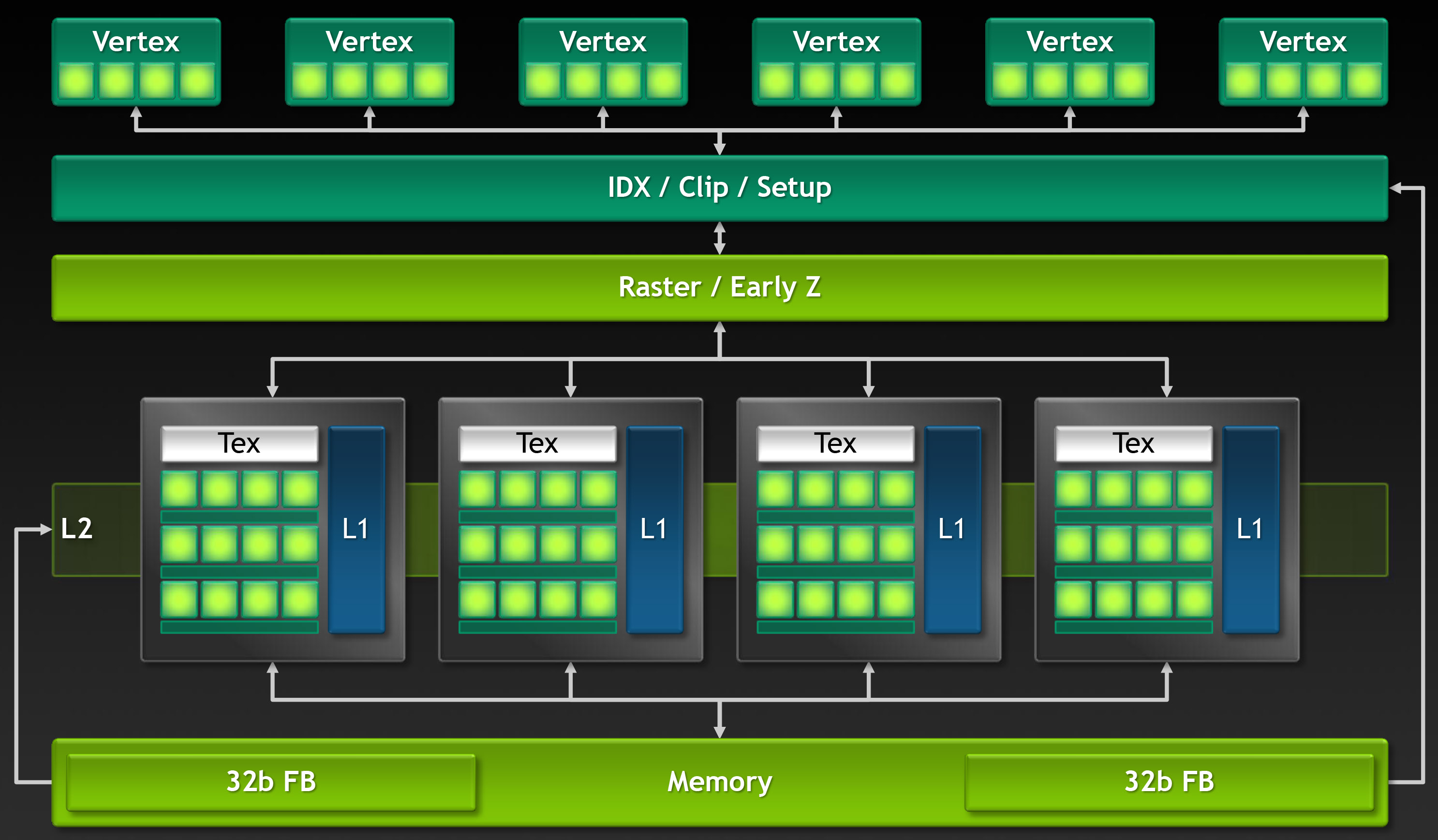

Tegra 4’s vertex processing architecture is essentially the same as NV40’s—the GPU that powered GeForce 6800 and its derivatives back in 2004.

Geometry data from the vertex processing stage is fed into the triangle setup engine, which can set up a visible triangle in five clock cycles.

From there, triangles are turned into pixels. Tegra 4 does a combination of raster and early-Z detection at a rate of eight pixels per clock, discarding data that isn’t going to be visible early on in the pipeline and saving the engine from working on occluded pixels unnecessarily. It should come as no surprise (given Nvidia’s background) that this approach borrows heavily from the desktop GPU space.
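To make the early-Z idea concrete, here is a minimal Python sketch. It is not Nvidia's hardware logic; the function names, the fragment tuples, and the depth convention (smaller means closer) are all assumptions for illustration. It simply counts how many fragments reach the expensive shading step when the depth test runs before shading versus only after it.

```python
def shade(fragment):
    """Stand-in for an expensive fragment shader; returns the fragment's color."""
    x, y, depth, color = fragment
    return color


def render(fragments, width, height, early_z=True):
    """Process fragments in submission order.

    Returns (framebuffer, shader_invocations). Each fragment is a
    hypothetical (x, y, depth, color) tuple; smaller depth is closer.
    """
    far = float("inf")
    depth_buffer = [[far] * width for _ in range(height)]
    framebuffer = [[None] * width for _ in range(height)]
    shaded = 0
    for frag in fragments:
        x, y, depth, _ = frag
        if early_z and depth >= depth_buffer[y][x]:
            continue  # occluded: rejected before any shading work is done
        color = shade(frag)
        shaded += 1
        if depth < depth_buffer[y][x]:  # the late test still decides visibility
            depth_buffer[y][x] = depth
            framebuffer[y][x] = color
    return framebuffer, shaded


# Two fragments on the same pixel, nearer one submitted first.
frags = [(0, 0, 0.2, "near"), (0, 0, 0.8, "far")]
print(render(frags, 1, 1, early_z=True)[1], render(frags, 1, 1, early_z=False)[1])
```

Both runs produce the same image, but the early-Z run shades one fragment instead of two. Note that in this sketch the saving depends on submission order: if the far fragment arrives first, it has already been shaded by the time the near one shows up.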

How does Nvidia’s immediate-mode renderer differ from the tile-based deferred method employed by Imagination Technologies’ PowerVR IP core, and consequently Apple's Ax and Intel's Atom SoCs? Prior to the rasterization stage in a TBDR architecture, frames are cut up into tiles, and the resulting geometry data is put into a memory buffer, where occluded pixels are resolved. Particularly as the geometric complexity of a scene increases, the hidden surface removal process doesn’t fare as well.
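The tiling step described above can be sketched in a few lines of Python. This is a simplified illustration rather than PowerVR's actual binning hardware: the 16-pixel tile size, the bounding-box test, and the names are assumptions, and screen coordinates are assumed to be non-negative. What it shows is the per-tile geometry list that has to sit in memory before rasterization can start.

```python
from collections import defaultdict

TILE_SIZE = 16  # assumed tile edge in pixels


def bin_triangles(triangles, tile_size=TILE_SIZE):
    """Map each tile (tx, ty) to the indices of triangles whose bounding box touches it.

    Each triangle is a list of three (x, y) screen-space vertices.
    """
    bins = defaultdict(list)
    for index, vertices in enumerate(triangles):
        xs = [x for x, _ in vertices]
        ys = [y for _, y in vertices]
        tx0, tx1 = int(min(xs)) // tile_size, int(max(xs)) // tile_size
        ty0, ty1 = int(min(ys)) // tile_size, int(max(ys)) // tile_size
        for ty in range(ty0, ty1 + 1):
            for tx in range(tx0, tx1 + 1):
                bins[(tx, ty)].append(index)
    return dict(bins)


def buffered_entries(bins):
    """Total triangle references held in the tile buffer."""
    return sum(len(indices) for indices in bins.values())
```

Every triangle adds at least one entry to the buffer, and a triangle that spans several tiles adds one per tile, so the buffer grows along with the scene's geometric complexity.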

Back to Tegra 4. Color and Z data are then compressed via a lossless algorithm. This is especially beneficial for enabling anti-aliasing without huge memory bandwidth costs (more on this shortly), since the values contained entirely within a primitive tend to be the same. Thus, they compress away nicely, yielding high compression ratios. Now, a lossless approach means that data is only compressed when it can be, so you still have to allocate space in memory—there are no savings there. But a lot of bandwidth can be conserved.
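Nvidia did not detail the algorithm, so the following Python sketch uses the simplest lossless scheme we could think of: a tile whose samples are all identical is sent as a single value, and anything else is sent raw. The 64-sample tile, 4-byte samples, and function names are assumptions, and the per-tile flag is ignored in the byte count. The point is to show why bandwidth drops while the memory footprint does not.

```python
TILE_PIXELS = 64      # assumed 8x8 tile of samples
BYTES_PER_PIXEL = 4   # assumed 32-bit color or Z value


def compress_tile(tile):
    """Return ('uniform', value) if every sample matches, else ('raw', samples)."""
    first = tile[0]
    if all(sample == first for sample in tile):
        return ("uniform", first)
    return ("raw", list(tile))


def decompress_tile(packed):
    """Exact inverse of compress_tile, which is what makes the scheme lossless."""
    kind, payload = packed
    if kind == "uniform":
        return [payload] * TILE_PIXELS
    return list(payload)


def bytes_transferred(packed):
    """Bytes moved over the memory bus for one tile."""
    kind, _ = packed
    return BYTES_PER_PIXEL if kind == "uniform" else TILE_PIXELS * BYTES_PER_PIXEL


def bytes_allocated(num_tiles):
    """Footprint is sized for the worst case, so compression saves nothing here."""
    return num_tiles * TILE_PIXELS * BYTES_PER_PIXEL


interior = [7] * TILE_PIXELS          # tile fully inside one primitive
edge = list(range(TILE_PIXELS))       # tile with no repeated values
for tile in (interior, edge):
    packed = compress_tile(tile)
    assert decompress_tile(packed) == tile
    print(packed[0], bytes_transferred(packed))
```

The interior tile moves 4 bytes instead of 256, the edge tile moves all 256, and both still reserve 256 bytes in memory because any tile might turn out to be incompressible.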

The raster stage feeds Tegra 4’s fragment pipe, which can do four pixels per clock. As mentioned, each pixel pipe has three ALUs with four multiply-add units, plus one multi-function unit, facilitating a number of VLIW instruction combinations to do different things (normalizes and combines, blends, traditional lighting calculations, and so on). Tegra 4 exposes 24 FP20 registers per pixel, compared to Tegra 3’s 16, allowing more threads in flight at any given time.

The four pipes have their own read- and write-capable L1 cache, and are serviced by a shared L2 texture cache—a new feature to Tegra 4. Naturally, you get better locality for texture filtering, again saving memory bandwidth. According to Nvidia, the cache is also really well-optimized for 2D imaging-style operations, which plays into the company’s work with computational photography.

Even as Nvidia’s engineers emphasize bandwidth-saving across the GPU, balancing a significant increase in texture rate requires a memory subsystem able to keep the SoC’s resources busy. Tegra 3 got by with a single-channel 32-bit interface. Tegra 4 uses two 32-bit channels, along with LPDDR3 memory at up to 1,866 MT/s, to push more than 3x the throughput available from LPDDR2-1066.
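The "more than 3x" figure is easy to check with back-of-the-envelope arithmetic. The short Python calculation below assumes the baseline is a single 32-bit channel of LPDDR2-1066, as the comparison implies, and computes theoretical peak numbers only; the helper name is ours, and sustained bandwidth in a real device will be lower.

```python
def peak_bandwidth_gb_s(transfers_mt_s, bus_width_bits, channels):
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return transfers_mt_s * 1e6 * bytes_per_transfer * channels / 1e9


tegra3 = peak_bandwidth_gb_s(1066, 32, channels=1)  # LPDDR2-1066, single channel
tegra4 = peak_bandwidth_gb_s(1866, 32, channels=2)  # LPDDR3-1866, dual channel
print(f"Tegra 3: {tegra3:.2f} GB/s, Tegra 4: {tegra4:.2f} GB/s, "
      f"ratio {tegra4 / tegra3:.2f}x")
```

That works out to roughly 4.3 GB/s versus 14.9 GB/s, or about 3.5x. Doubling the channel count accounts for 2x of the gain, and the faster transfer rate supplies the remaining 1.75x.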


s3anister I'm always amazed with the progress made in strides in this ultra-competitive sector so it's nice to see nvidia finally hit 28nm with Tegra 4. I'm sure some of their performance gains can be attributed to this.

levin70 Charlie at semiaccurate is correct. The Tegra 4 is DOA. Almost no one will be using it. Everyone else is already ahead of where the T4 is today, and it hasn't even launched. How many design wins were noted? 1?

Yeah, says it all.

blazorthon, quoting deedee2die4: "Nvidia staying on top, the best of the best!"

Uhh, no... T4 isn't supposed to be out for like six months, yet it's already not as fast as some of Qualcomm's latest. Nvidia is improving, but as usual, they're staying a little behind in technology.

aicom, replying to levin70:

Nobody is ahead of Tegra's four Cortex A15 cores. Krait is at less performance than A15 (until the refresh at least). Samsung's got Exynos 5 Octa, but that's not out yet either and T4 will probably still top it in the GPU performance department. Speaking of which, Tegra 4 has the most powerful GPU in floating-point of anyone (including the iPad 4) with 74.8 TFLOPS @ 672 MHz. It only takes an 825 MHz Cortex A15 to match a 1.6 GHz A9, and Tegra 4 is supposed to ship at 1.9 GHz. Unfortunately, TDP does go up in the process.

You also have to look at where these parts are targeted. Krait is really gunning for phone design wins and they have many. It's a very power efficient chip that found its way into some very nice phones. Tegra 4 is not aimed at that market; Tegra 4i is. Tegra 4 will have a much higher TDP than 4i (and Krait) and will get substantially higher performance as a result.

tjosborne Hey guys, I am considering getting an Asus Transformer Prime tablet with the Tegra 3. Would it be best to wait till this processor ends up in a tablet to get one?

So at 1.3Gpix/s, Nvidia has just admitted to 10x overdraw...per second? So we're looking at 9~10 frames per second on high res displays. Lag lives on.

PreferLinux, replying to aicom: You mean Gigaflops, not Teraflops.

blazorthon, replying to aicom:

S4 Pro is a faster CPU IIRC. IDK about how the graphics compares and won't comment about it.

Nvidia, like I said, is getting better, but they're still going to be a little behind. They're making up a lot of ground here, especially with how they're making Tegra 4 and Tegra 4i instead of a single SoC to take both places, but they seem like they'll still have a little room to make up, at least in CPU performance, to be the best. Like I said before (at least in other articles about it), they'll still be near the top either way.