Everything You Need To Know About Thunderbolt

Thunderbolt's Bandwidth: Sizing Up To USB 3.0, FireWire, And eSATA

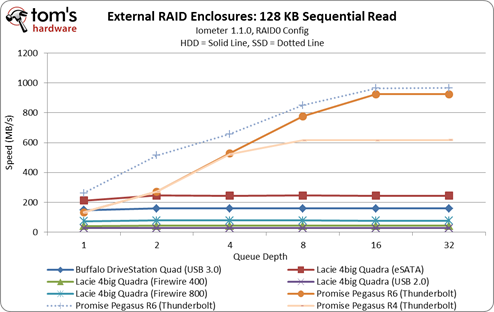

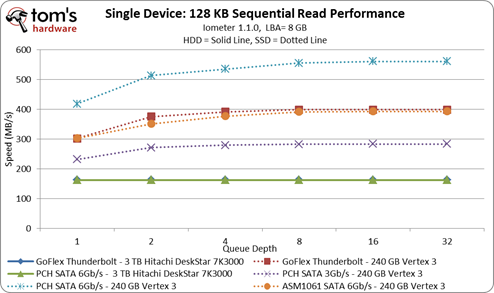

Thunderbolt was designed with a number of usage models in mind, one of which is high-bandwidth, low-latency data transfer for audio and video professionals. That has sequential transfers written all over it. And so, we're able to fire up Iometer and cram as many 128 KB blocks through the interface as possible in order to gauge Thunderbolt's potential performance.

In our quest to test the limits of external storage interfaces, we rounded up a handful of external RAID enclosures (subsequently disabling caching).

We got our hands on LaCie's 4big Quadra to use with FireWire 400/800, USB 2.0, and eSATA. It was a little harder to track down a USB 3.0-capable solution, but we managed to snag a DriveStation Quad USB 3.0 from Buffalo Technology. Promise sent us its Pegasus R6 with Thunderbolt compatibility. All enclosures were loaded up with Hitachi DeskStar 7K3000 drives.

Thunderbolt wins hands-down in a raw performance comparison, with the hard drive-based Pegasus R6 maxing out at ~925 MB/s at high queue depths. Because the cable's second Thunderbolt channel is used for display data, that ~925 MB/s figure is very close to the interface's 1 GB/s theoretical ceiling in one direction. Even though it runs into that ceiling, Thunderbolt simply destroys the other five interface options.

Notice in the chart above that there are lines for hard drives and lines for SSDs. Crucial lent us six m4 SSDs, just in case the hard drives failed to saturate our connections. What we saw, though, was that the DriveStation Quad and 4big Quadra didn't speed up after replacing disks with SSDs. Throughput from the Pegasus R6 did increase to 965 MB/s, though.

This small performance delta confirms that we're saturating the Thunderbolt interface with six hard drives in RAID 0. With four disks (Pegasus R4), performance tops out at 600 MB/s using Thunderbolt. We also see that the SSD-equipped Pegasus R6 achieves better performance at lower queue depths than the version with hard drives.
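To put the saturation argument in numbers, here is a minimal Python sketch using only the figures quoted above. It treats the 1 GB/s ceiling as 1000 MB/s, which is an assumption about rounding, and the function names are illustrative rather than part of any benchmark tool.

```python
# Figures quoted in the article, in MB/s.
# Assumption: the "1 GB/s" ceiling is taken as 1000 MB/s.
CEILING_MBPS = 1000.0
HDD_R6_MBPS = 925.0   # Pegasus R6 with six hard drives
SSD_R6_MBPS = 965.0   # Pegasus R6 with six SSDs


def utilization(throughput_mbps, ceiling_mbps=CEILING_MBPS):
    """Fraction of the interface ceiling consumed by a measured throughput."""
    return throughput_mbps / ceiling_mbps


def relative_gain(before_mbps, after_mbps):
    """Relative improvement when moving from one measurement to another."""
    return (after_mbps - before_mbps) / before_mbps


if __name__ == "__main__":
    print(f"HDD utilization: {utilization(HDD_R6_MBPS):.1%}")
    print(f"SSD utilization: {utilization(SSD_R6_MBPS):.1%}")
    print(f"Gain from SSDs:  {relative_gain(HDD_R6_MBPS, SSD_R6_MBPS):.1%}")
```

Swapping hard drives for much faster SSDs buys only about 4% more throughput, which is what you would expect if the interface, rather than the drives, is the limiting factor.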

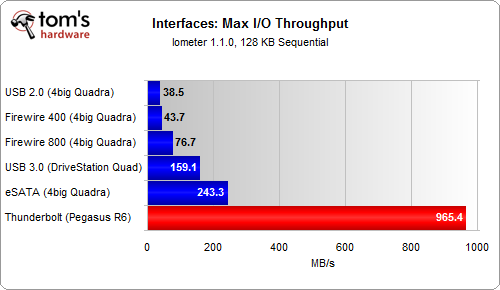

The above chart represents peak throughput from our sequential results, derived from testing a single device attached to a Thunderbolt port. According to Promise, as you add devices, aggregate performance slowly starts to slide due to the protocol overhead required to manage multiple devices. Consequently, you're better off with one high-speed device compared to several slower peripherals if you're trying to tax interface bandwidth. Naturally, when we add devices to a USB 2.0 hub or FireWire daisy chain, the aggregate performance of those devices drops as well.


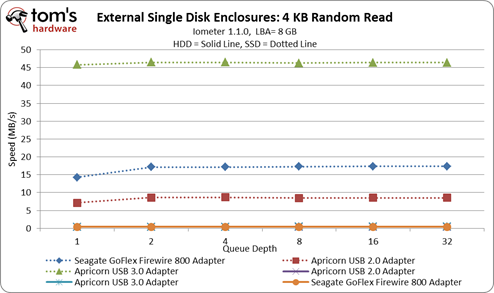

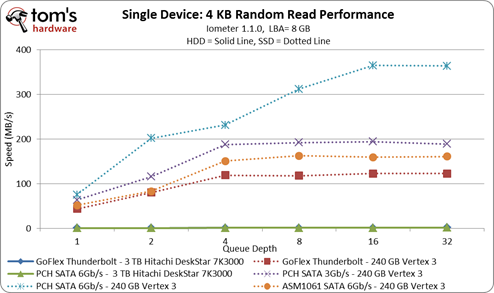

Despite impressive sequential results, Thunderbolt's random I/O performance is substantially weaker, which is often the case when you're working with external interfaces. Dropping an internal SATA drive into an enclosure with some sort of bridge chip negatively affects the disk's native potential. This can be attributed to the interface itself. For example, USB and FireWire completely discard command queuing, resulting in benchmarks that would seem to reflect a queue depth of one at all times. The graph below illustrates:

It is no surprise to see the hard drives deliver low throughput in a test involving random reads. But we'd expect to see SSDs doing better. Of course, our expectation there is based on the performance of a drive attached via native SATA (a 240 GB Vertex 3 should hit ~325 MB/s) at high queue depths. With one outstanding command, the Vertex 3 falls closer to ~70 MB/s. But the USB- and FireWire-based solutions even come up short of that number. What's going on?
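The queue-depth effect can be sketched with a deliberately crude model. The Python snippet below assumes throughput scales linearly with the number of outstanding commands until it hits the drive's peak, using the ~70 MB/s and ~325 MB/s Vertex 3 figures quoted above. The linear scaling, the function name, and the `supports_queuing` flag are all illustrative assumptions, not measured behavior.

```python
def modeled_throughput(queue_depth, qd1_mbps=70.0, peak_mbps=325.0,
                       supports_queuing=True):
    """Crude model: linear scaling with queue depth, capped at the peak.

    An interface that discards command queuing is modeled by forcing the
    effective queue depth to one, no matter what the benchmark requests.
    """
    if queue_depth < 1:
        raise ValueError("queue_depth must be at least 1")
    effective_qd = queue_depth if supports_queuing else 1
    return min(effective_qd * qd1_mbps, peak_mbps)


if __name__ == "__main__":
    for qd in (1, 2, 4, 8, 32):
        native = modeled_throughput(qd)
        bridged = modeled_throughput(qd, supports_queuing=False)
        print(f"QD {qd:>2}: native {native:6.1f} MB/s, no queuing {bridged:6.1f} MB/s")
```

In this model, an interface without command queuing stays pinned at the queue-depth-one figure however hard the benchmark pushes. That is only an upper bound: it does not account for the additional shortfall seen on the USB- and FireWire-based solutions.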

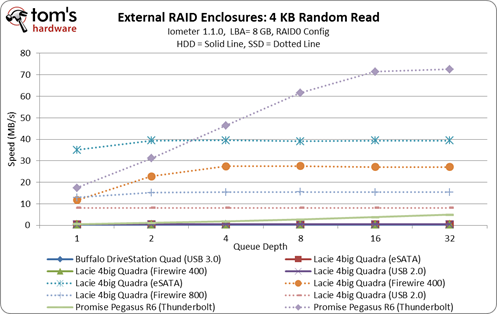

Let's examine the random I/O performance of our external RAID enclosures. If these connectivity technologies cannot queue up commands, can we compensate through the use of multiple drives? After all, these RAID devices have their own controllers to manage I/O requests, hence the support for hardware-based RAID.

Random I/O still looks pretty bad. Even with multiple SSDs in RAID, we cannot match the performance possible with a native SATA connection, and the Pegasus R6 equipped with six Crucial m4 SSDs cannot seem to get past 80 MB/s. Although we're seeing generally poor handling of random I/O by external interfaces, there are two exceptions where this wouldn't be the case.

First, a non-RAID eSATA drive should be able to achieve native SATA 3Gb/s performance, as long as it does not support any other interface. It must be non-RAID and exclusively eSATA because adding support for RAID and other interface technologies requires controller hardware. LaCie's 4big Quadra, for example, cannot achieve native SATA performance via eSATA because it uses Oxford Semiconductor's OXUFS936QSE, a universal interface-to-quad-SATA storage controller (supporting eSATA, FireWire 800, FireWire 400, and USB 2.0). The RAID controller within the Oxford Semiconductor chip is implemented after the eSATA switch, affecting random I/O performance. Unfortunately, only a handful of external enclosures support eSATA and only eSATA.

Non-RAID Thunderbolt devices are also an exception. Inside them, you'll likely find a PCIe-to-SATA controller. This is very similar to the topology motherboard vendors used to add SATA 6Gb/s support to their platforms before it was integrated into chipsets, employing third-party Marvell and ASMedia controllers attached to the core logic through one PCIe link.

In practice, though, non-RAID Thunderbolt drives employing third-party SATA controllers underperform native SATA connections. Seagate's GoFlex Thunderbolt adapter, for example, uses ASMedia's ASM1061 SATA controller, which coincidentally is also on-board our MSI Z77A-GD80. Theoretically, random performance should be nearly identical from both devices. But the GoFlex Thunderbolt adapter only delivers 120 MB/s, whereas we can achieve 160 MB/s with a direct connection to the motherboard's ASM1061.

According to ASMedia, the performance of its ASM1061 depends on vendor-specific BIOS optimization. Creating a product for a broader range of applications, like the GoFlex, means less of the tuning you'd find on a piece of hardware tweaked for a certain motherboard model.

Sequential performance isn't as sensitive to those optimizations, which is something we also see in our SSD reviews. While we keep our BIOS, drivers, and SSD firmware up-to-date, sequential numbers rarely change. Not surprisingly, then, we see identical performance from our Vertex 3 in Seagate's GoFlex Thunderbolt adapter and MSI's Z77A-GD80. Both deliver a maximum of 400 MB/s in sequential reads.


mayankleoboy1 for more insight of thunderbolt fail and Intel's lying:

http://semiaccurate.com/2012/06/06/intel-talks-about-thunderbolt/

shoelessinsight Active cables are more likely to have defects or break down over time. This, plus their high expense, is not going to go over well with most people.

mayankleoboy1 ^

because "thunderbolt" sounds much sexier than "HDBaseT"?

and with apple, its all about the sexiness, not functionality/practicality.

JOSHSKORN Prediction: We will see Thunderbolt available on SmartPhones. When we do, this port will be able to handle a monitor, external hard drives, speakers and many other USB devices through its Thunderbolt docking station. Obviously a SmartPhone won't need to be attached to a webcam. This will become the future desktop...that is, if it can run Crysis. LOL Had to add that in there. :)

pepsimtl I remenber scsi interface, so expensive, just the company (server) use it.

and sata interface replace it.

For me Thunderbolt is the same song

I predict a sata 4 (12gb) or usb 4, soon