Wolfenstein II: The New Colossus Performance Review

Bonus: Radeon RX Vega 64 vs. GeForce GTX 1080

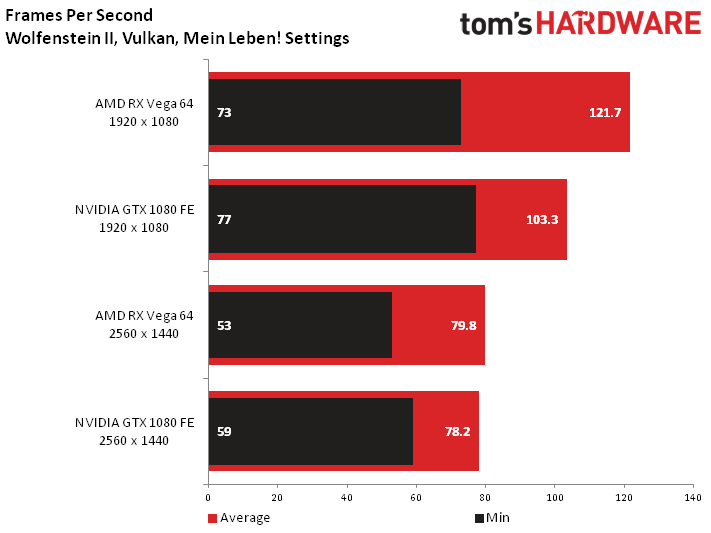

Let’s quickly take a look at the performance of our two most powerful cards: Nvidia's GeForce GTX 1080 Founders Edition and AMD's Radeon RX Vega 64.

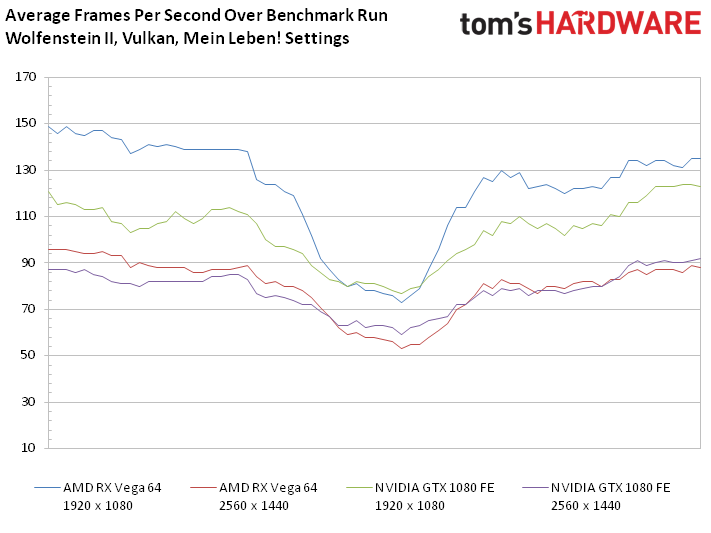

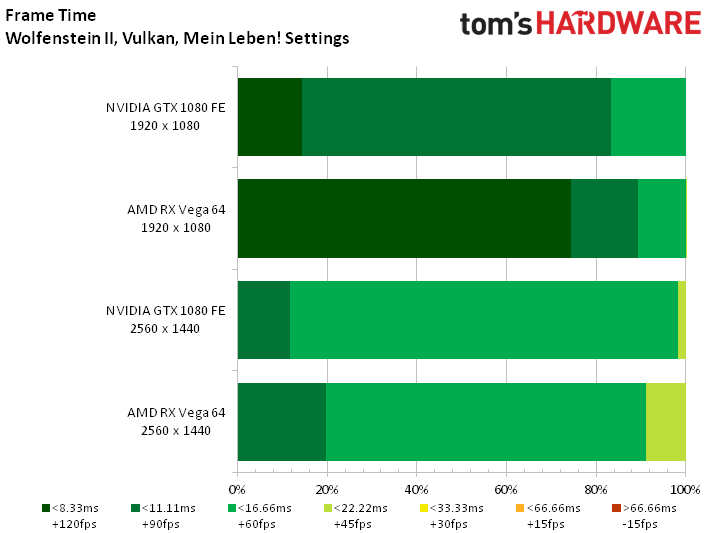

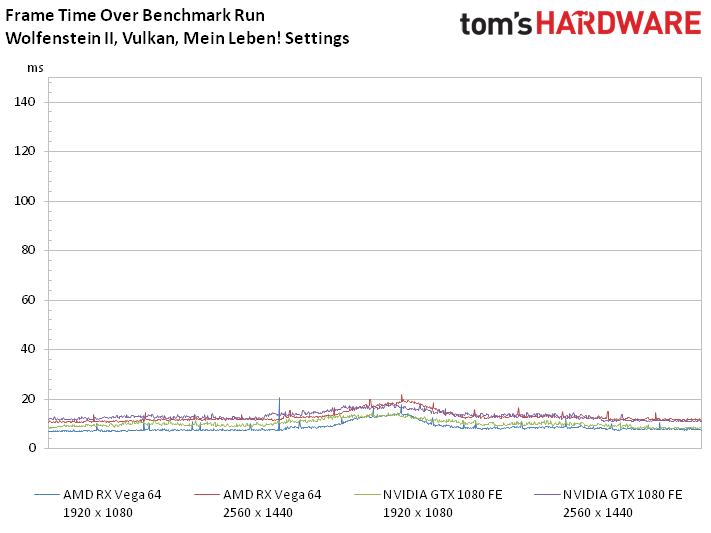

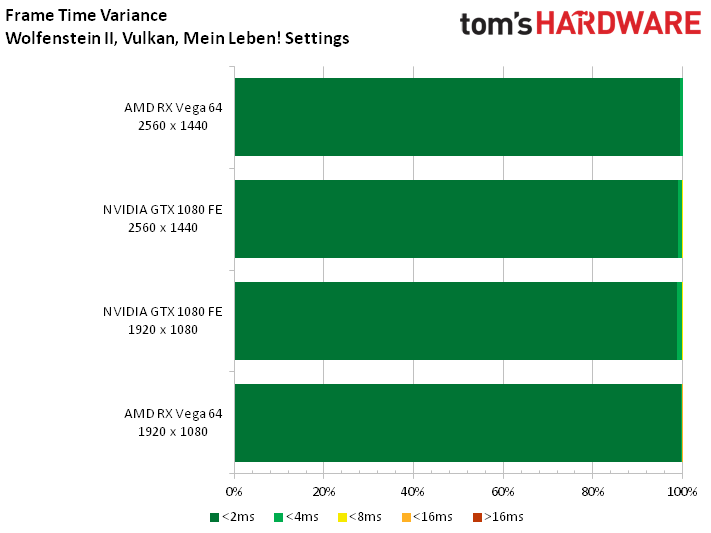

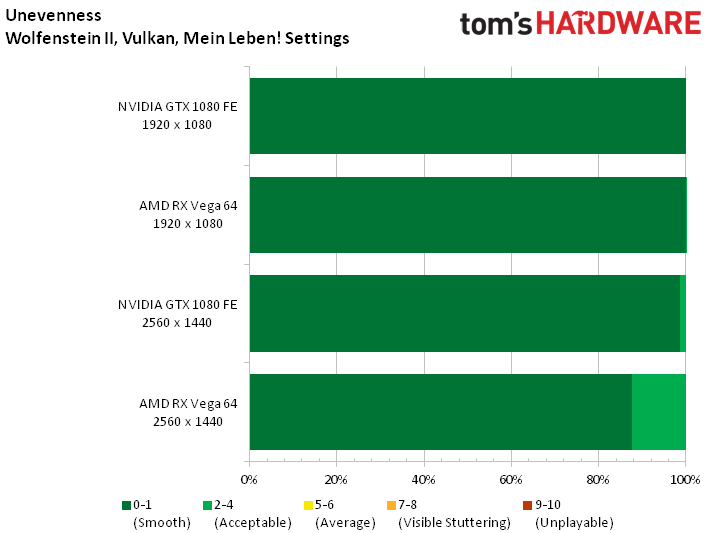

It is no surprise that these two beastly boards run Wolfenstein II perfectly smoothly at 1920x1080 and 2560x1440 with maxed-out quality settings. AMD's Radeon RX Vega 64 posts a better frame rate at 1080p, averaging more than 120 FPS, but the GeForce GTX 1080 FE catches up at 1440p.

Processor Utilization

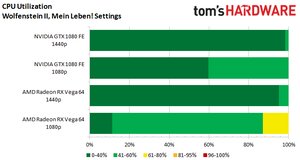

While there is almost no difference in CPU usage between these two cards at 1440p, the Radeon is noticeably hungrier for processor resources than the GTX 1080 at 1920x1080. On a positive note, this translates to a much higher frame rate, so we're hardly complaining.

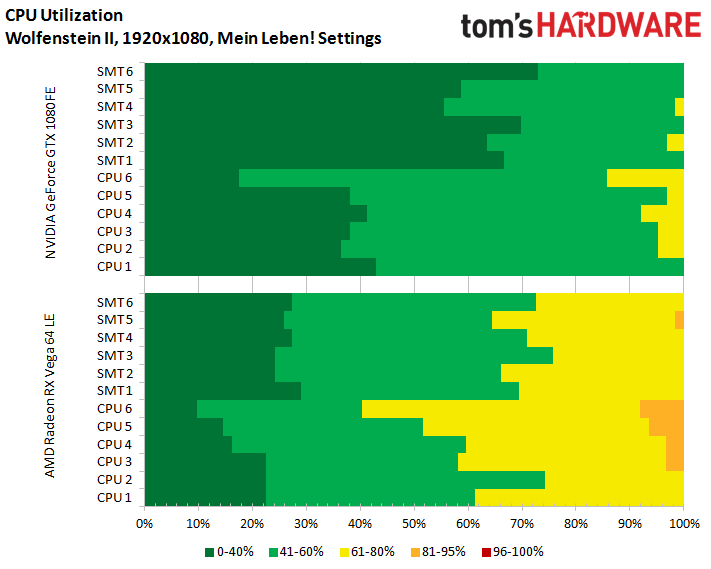

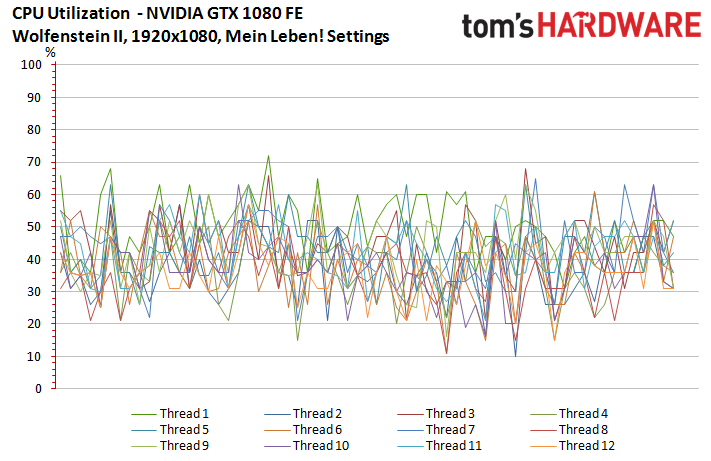

1080p

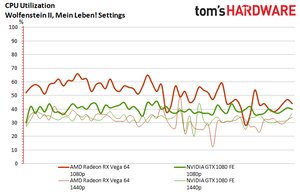

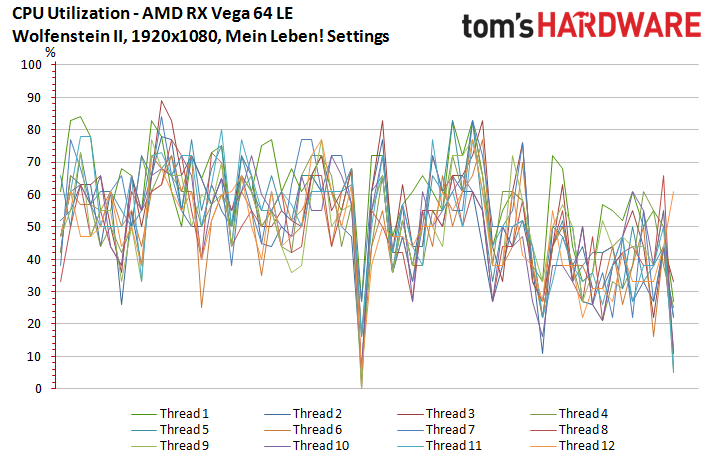

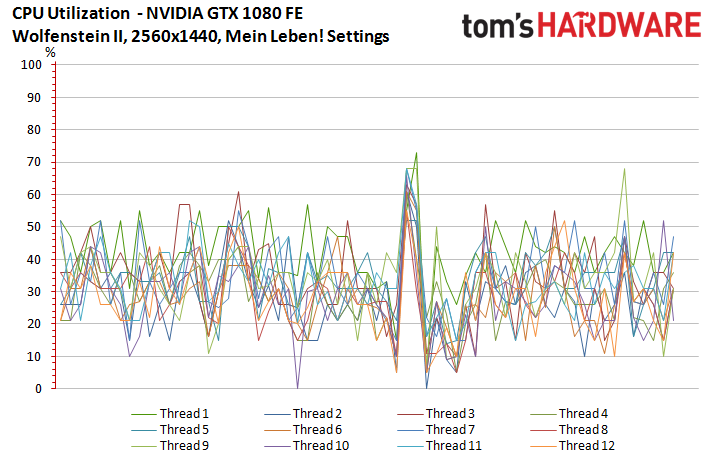

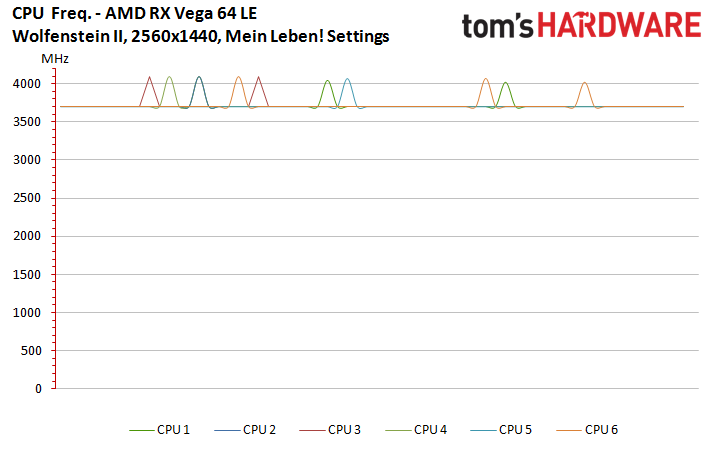

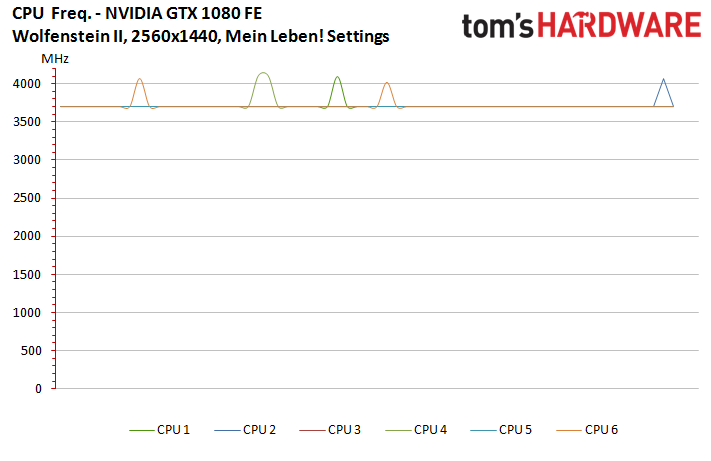

Our utilization analysis confirms what we just saw in condensed form: namely, Radeon RX Vega 64 is hungrier for CPU resources than the GTX 1080. Moreover, the id Tech 6 engine does a fantastic job with threading, as the load is spread evenly across the threads of our 6C/12T Ryzen 5 1600X.

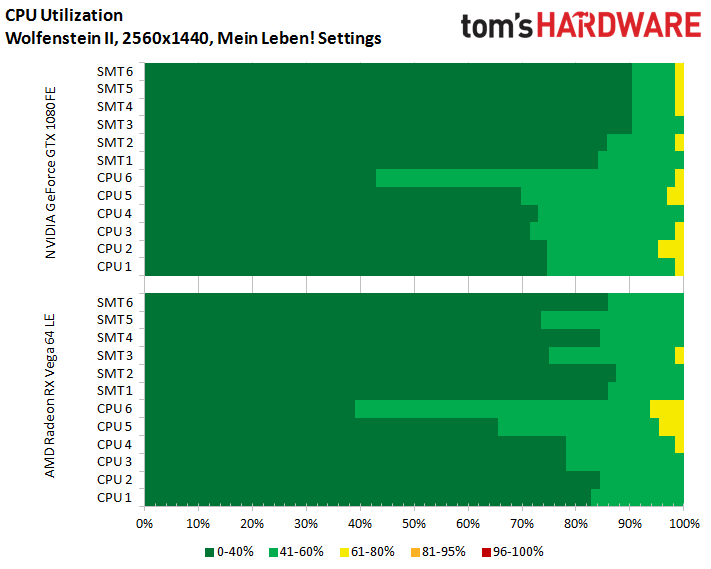

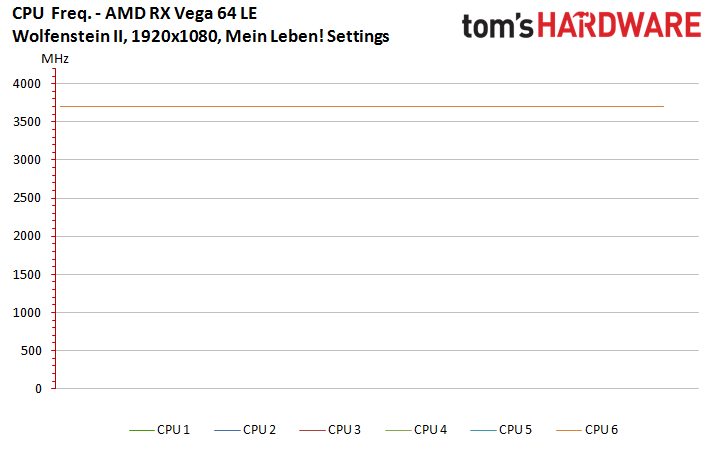

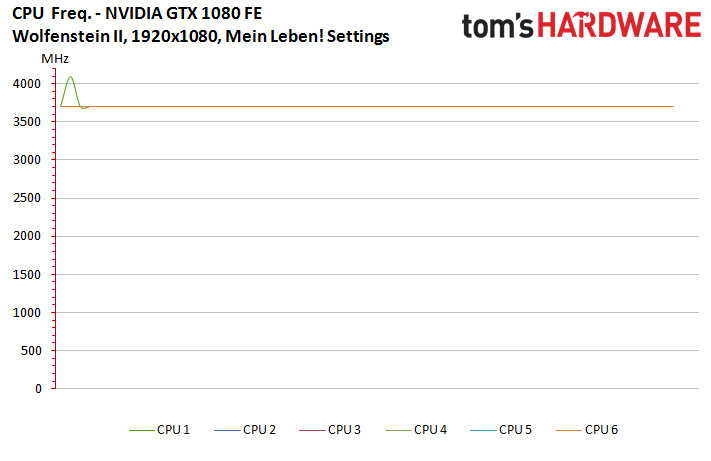

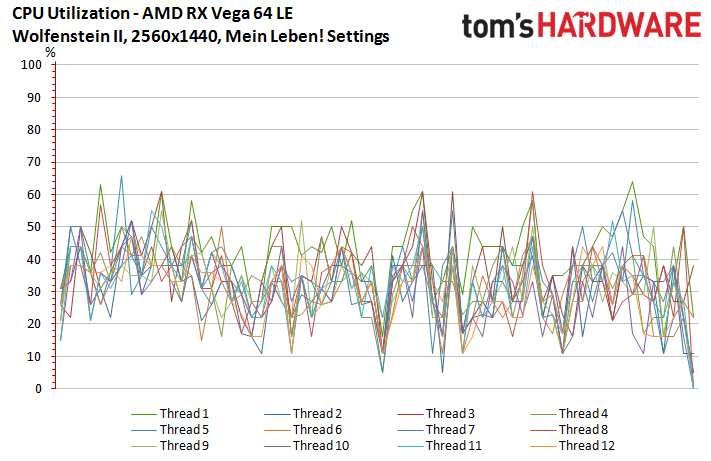

1440p

Differences between these two cards practically evaporate at 1440p. Once again, host processing resources are well-utilized by the game engine.


HEXiT something i noticed about this game. i have an ancient 920@3.5 and a gtx 970

i can run the game at near max settings (just turn the textures down from ultra) and when vsync is on it shows a solid 60fps... i turn vsync off and get between 40 and 55 fps. so im guessing the fps counter isnt that accurate in game.

toddybody Not sure why the RX 64 and GTX 1080 Benchmarks are separate as a "bonus"...and why other top tier (i.e. 1070, 1080ti) cards arent included.

Don't have one myself, but 4K/2160p benchmarks would be nice too. Kinda underwhelmed, considering how long the title has been out.

Sakkura Would have liked to see a 4GB card in the mix, and ideally even the 1060 3GB. So we could see how much VRAM is actually needed for solid performance. VRAM utilization is imprecise because games can allocate a lot more VRAM than they really need.

I would also like to have seen other CPUs tested. It's great that the game uses 6 cores and 12 threads, but will it still run well on 4c/4t? Lots of people are still on processors like that.

spdragoo Would have also been nice to see some actual CPU benchmarking, especially since the spread of minimum/recommended CPUs represents a very wide range (i.e. Ivy Bridge Core i5/i7 CPUs & 4C/8T FX CPUs all the way up to current Ryzen CPUs), as well as some idea as to whether newer Intel CPUs have much of a boost over the 3rd/4th-gen versions.

Something strange as well: the minimum/recommended Intel CPUs were 4C/4T or 4C/8T CPUs, implying that you need at least 4 physical cores to run this (i.e. just having 4 threads won't work, so no 2C/4T Core i3/Pentium CPUs). And that's kind of supported by the listed FX CPUs. But why would MachineGames say that you can't use a Ryzen 3 (4C/4T) CPU to run this game? The R3 1200 is almost identical to the minimum R5 1400 listed (same Boost/XFR speeds, only 100MHz slower on base, & is a 4C/4T CPU vs. the 4C/8T 1400), & the R3 1300X runs almost as fast as the 6C/12T R5 1600X. Also, would this perhaps be a game that a Coffee Lake Core i3 (4C/4T) could handle, or would you still need to use a Core i5 or i7?

spdragoo (quoting 20435970): "Would have liked to see a 4GB card in the mix, and ideally even the 1060 3GB. So we could see how much VRAM is actually needed for solid performance. VRAM utilization is imprecise because games can allocate a lot more VRAM than they really need.

I would also like to have seen other CPUs tested. It's great that the game uses 6 cores and 12 threads, but will it still run well on 4c/4t? Lots of people are still on processors like that."

Just what I was wondering, especially since they listed Ivy Bridge/Haswell Core i5 (4C/4T) CPUs in the minimum/recommended CPU sections.

Kahless01 did you read different articles than i did? there is a damn 1060 3g and several 4gb cards included in the test. the 3g 1060 takes a huge hit compared to the 6g.

quilciri Looks like the game requires a large amount of GPU memory, and doesn't necessarily need the highest end GPU. I don't know how they came to that conclusion that they did when the 8GB 390 did so well in the test.

quilciri (quoting 20435970): "Would have liked to see a 4GB card in the mix, and ideally even the 1060 3GB."

There were four 4GB cards tested, and so was the 1060 3GB.

What it looks like they should have tested, given their results, is something along the lines of an 8GB 560 - a mid or lower range GPU with a large amount of memory.

phobicsq I thought it runs really well all maxed out. I was a bit sad there wasn't a lot of openness though. It was rather linear.

spdragoo (quoting 20436405): "20435970 said: 'Would have liked to see a 4GB card in the mix, and ideally even the 1060 3GB.'

There were four 4GB cards tested, and so was the 1060 3GB.

What it looks like they should have tested, given their results, is something along the lines of an 8GB 560 - a mid or lower range GPU with a large amount of memory."

Don't know about that, since the minimum was supposed to be the GTX 770. But I do think they should have used a different GPU list:

■ They probably should have skipped the GTX 1050 or RX 460, as both are 2GB GPUs (well below the supposed minimum 4GB VRAM threshold) & well below the GTX 770/R9 290 minimums. Although it did confirm that low-end cards aren't going to cut it. Maybe they would have been better in a follow-up article, i.e. "Can low-end GPUs handle Wolfenstein II?".

■ They should have tested the GTX 770 & R9 290, since both are listed as the minimum GPU needed for the game. Yes, I know that the 6GB GTX 1060 is roughly comparable (1 tier up from the 770, same tier as the 290), but there have been a number of games where similarly-tiered GPUs don't always have similar performance.

■ Not only was it strange that the GTX 1080/RX Vega 64 testing was "bonus" testing, but they didn't even bother testing with the GTX 1070/1070 Ti (or even anything like the Fury X or Vega 56). Considering that those GPUs are the current recommendation for 1440p gameplay (which was a resolution they tested), it would have been nice to see that testing.