Cerebras video shows AI writing code 75x faster than world's fastest AI GPU cloud — world's largest chip beats AWS's fastest in head-to-head comparison

75 times faster than hyperscaler GPUs, Cerebras says.

Cerebras got Meta’s Llama 3.1 405B large language model to run at 969 tokens per second, 75 times faster than Amazon Web Services' fastest AI service with GPUs could muster.

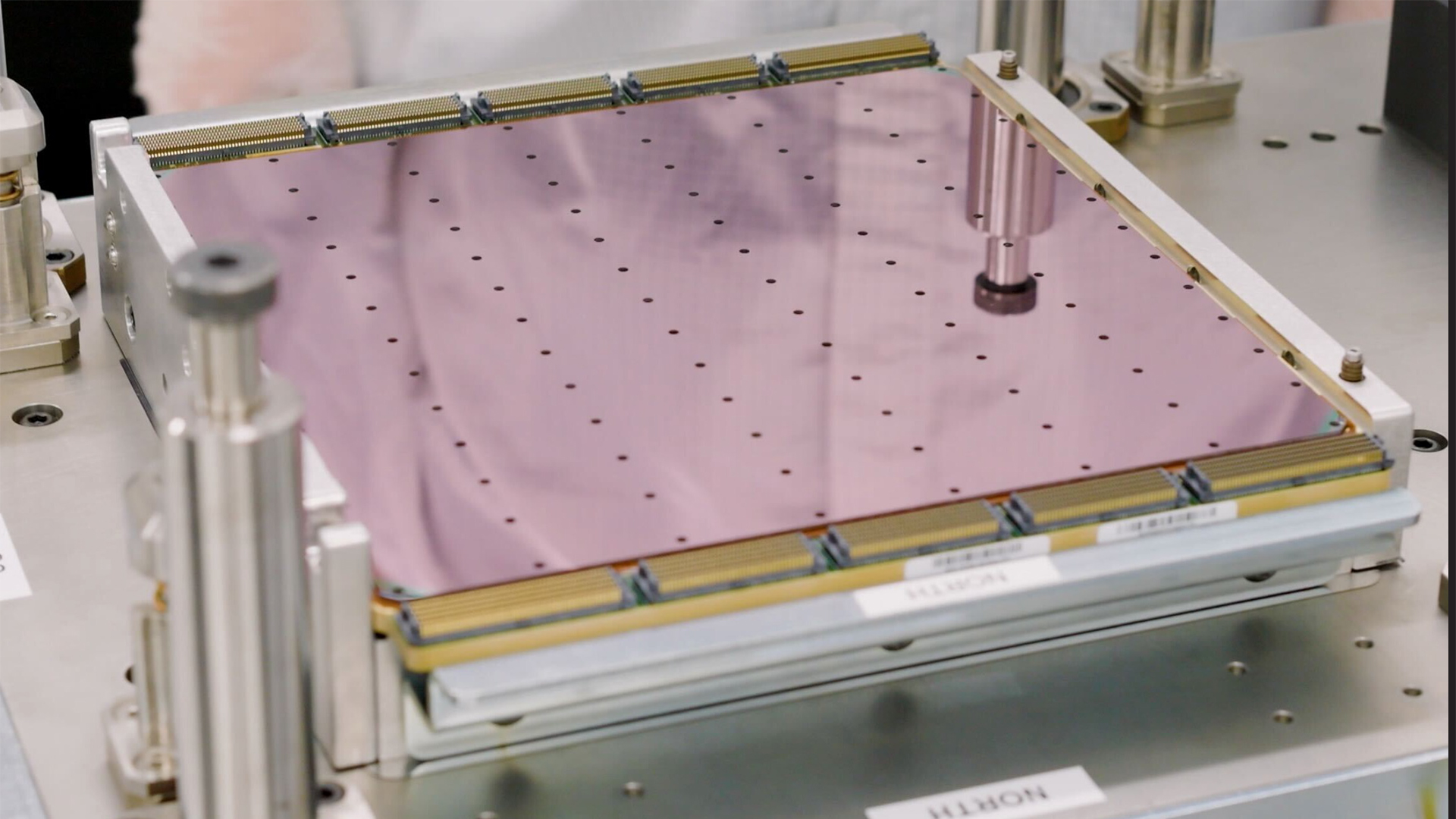

The LLM was run on Cerebras Inference, the company's cloud AI service, which uses its third-generation Wafer Scale Engines rather than GPUs from Nvidia or AMD. Cerebras has always claimed that Cerebras Inference is the fastest service for generating tokens, the individual units that make up a response from an LLM. When the service launched in August, Cerebras claimed it was about 20 times faster than Nvidia GPUs running through cloud providers like Amazon Web Services on Llama 3.1 8B and Llama 3.1 70B.

But since July, Meta has offered Llama 3.1 405B, which has 405 billion parameters, making it a far heavier model than Llama 3.1 70B with 70 billion parameters. Cerebras says its Wafer Scale Engine processors can run this massive LLM at “instant speed,” with a token rate of 969 per second and a time-to-first-token of just 0.24 seconds; that’s a world record according to the company, not just for its chips, but also for the Llama 3.1 405B model.
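As a rough sketch of what those two figures mean for a single response, end-to-end latency can be approximated as the time-to-first-token plus the output length divided by the token rate. The function name `estimated_response_seconds` and the 1,000-token output length are illustrative assumptions, not Cerebras figures, and the model ignores network overhead and any variation in the token rate.

```python
# Figures quoted by Cerebras for Llama 3.1 405B.
CEREBRAS_TOKENS_PER_SECOND = 969.0
CEREBRAS_TTFT_SECONDS = 0.24


def estimated_response_seconds(output_tokens: int,
                               tokens_per_second: float,
                               time_to_first_token: float) -> float:
    """Approximate latency: wait for the first token, then stream at a constant rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return time_to_first_token + output_tokens / tokens_per_second


# Hypothetical 1,000-token answer (an assumed length, chosen for illustration).
latency = estimated_response_seconds(
    output_tokens=1000,
    tokens_per_second=CEREBRAS_TOKENS_PER_SECOND,
    time_to_first_token=CEREBRAS_TTFT_SECONDS,
)
print(f"Estimated time for 1,000 tokens: {latency:.2f} s")
```

Under these assumptions, a 1,000-token answer would arrive in a little over a second.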

Compared to Nvidia GPUs rented from AWS, Cerebras Inference was apparently 75 times faster, and it was 12 times faster than even the fastest implementation of Nvidia GPUs, from Together AI. Cerebras Inference was also six times faster than its closest competitor, AI processor designer SambaNova.
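To put those multiples in absolute terms, the sketch below divides the 969 tokens per second figure by each claimed speedup. This assumes every ratio was measured against that same figure, which the article does not confirm, so the results are back-of-the-envelope estimates rather than reported benchmarks. The helper name `implied_tokens_per_second` is illustrative.

```python
# Token rate Cerebras quotes for Llama 3.1 405B.
CEREBRAS_TOKENS_PER_SECOND = 969.0

# Speedup multiples as claimed by Cerebras in the article.
claimed_speedups = {
    "AWS (Nvidia GPUs)": 75,
    "Together AI (Nvidia GPUs)": 12,
    "SambaNova": 6,
}


def implied_tokens_per_second(reference_rate: float, speedup: float) -> float:
    """Rate a competitor would need for the reference to be `speedup` times faster."""
    return reference_rate / speedup


# Assumption: each multiple is relative to the same 969 tokens/s figure.
implied_rates = {
    name: implied_tokens_per_second(CEREBRAS_TOKENS_PER_SECOND, speedup)
    for name, speedup in claimed_speedups.items()
}

for name, rate in implied_rates.items():
    print(f"{name}: roughly {rate:.1f} tokens/s")
```

On that reading, the AWS service would be generating roughly 13 tokens per second and SambaNova roughly 160.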

To illustrate how fast this is, Cerebras prompted both Fireworks (the fastest AI cloud service equipped with GPUs) and Cerebras Inference to create a chess program in Python. Cerebras Inference took about three seconds, while Fireworks took 20.

Here is what instant 405B looks like: Cerebras vs. fastest GPU cloud: pic.twitter.com/d49pJmh3yT (November 18, 2024)

“Llama 3.1 405B on Cerebras is by far the fastest frontier model in the world – 12x faster than GPT-4o and 18x faster than Claude 3.5 Sonnet,” Cerebras said. “Thanks to the combination of Meta’s open approach and Cerebras’s breakthrough inference technology, Llama 3.1-405B now runs more than 10 times faster than closed frontier models.”

Even when upping the query size from 1,000 tokens to 100,000 tokens (a prompt that runs to tens of thousands of words), Cerebras Inference apparently operated at 539 tokens per second. Of the five other services that could even run this workload, the best mustered just 49 tokens per second.


Cerebras also bragged that a single second-generation Wafer Scale Engine outperformed the Frontier supercomputer by 768 times in a molecular dynamics simulation. Frontier, which has 9,472 Epyc CPUs from AMD, had been the world’s fastest supercomputer until Monday, when the El Capitan supercomputer launched.

Additionally, the Cerebras chip outperformed the Anton 3 supercomputer by 20%, a significant feat considering Anton 3 was built for molecular dynamics. The Cerebras chip's performance of 1.1 million simulation steps per second also marked the first time any computer had broken the million-simulation-step barrier.

Matthew Connatser is a freelancing writer for Tom's Hardware US. He writes articles about CPUs, GPUs, SSDs, and computers in general.


bit_user: Pretty impressive numbers, but I find it interesting that cost wasn't mentioned once.

While we can't exactly know what even hardware with an actual list price would cost big customers, we can simply look at the hourly cost for running those models on each of these services. I'd like to see that comparison!

jcridge: Extraordinary performance.

Would agree with another commenter, a comparison on multiple measures would also be very useful. Some charts perhaps too.

Note AWS's could be contracted to AWS' like Jesus' etc.