Nvidia and partners could charge up to $3 million per Blackwell server cabinet — analysts project over $200 billion in revenue for Nvidia

The industry is going to need 60,000 – 70,000 B200-based servers.

According to a report from Morgan Stanley cited by United Daily News, Nvidia and its partners will charge roughly $2 million to $3 million per AI server cabinet equipped with Nvidia's upcoming Blackwell GPUs. The industry will need tens of thousands of AI servers in 2025, and their aggregate cost will exceed $200 billion.

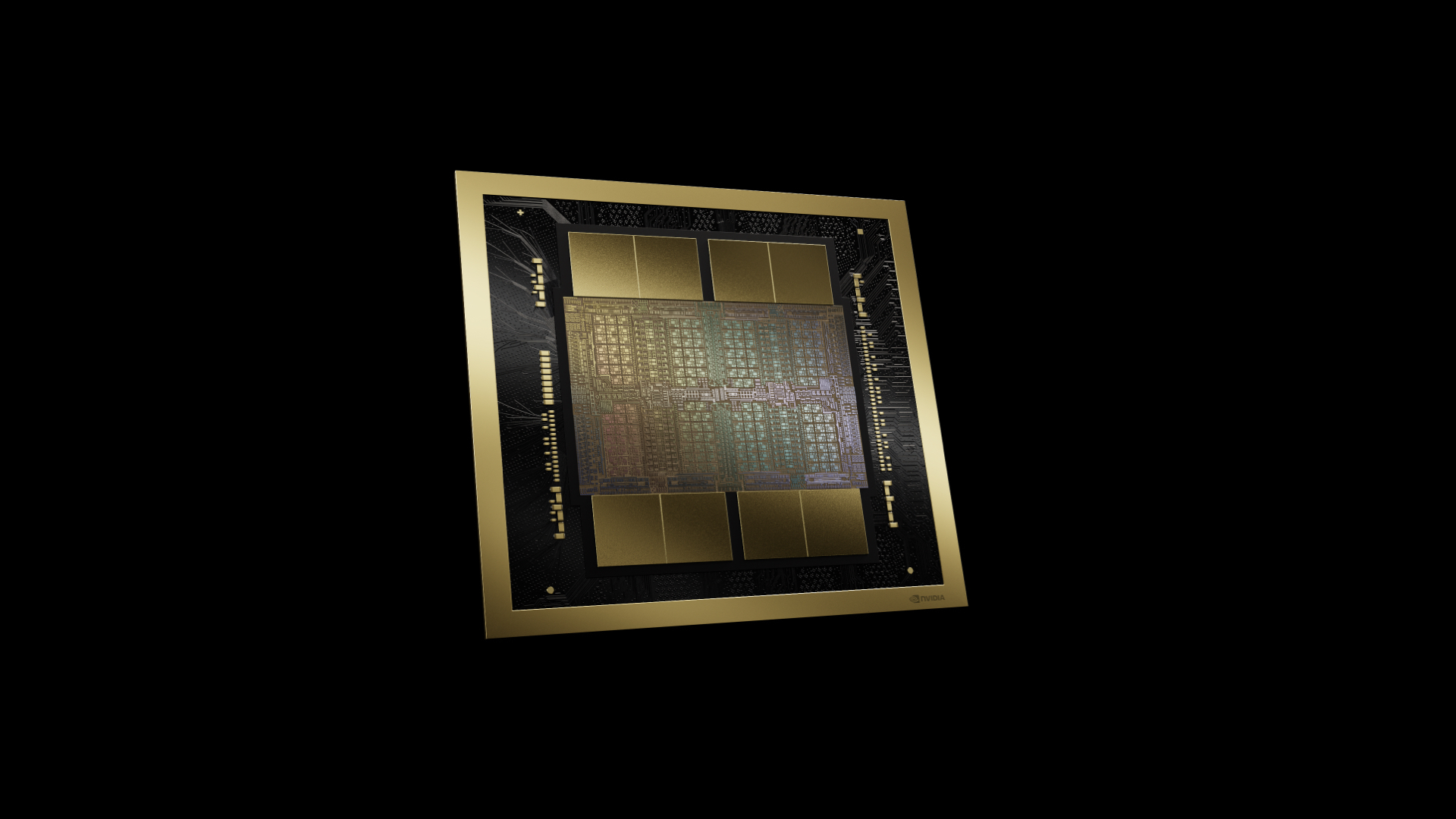

So far, Nvidia has introduced two 'reference' AI server cabinets based on its Blackwell architecture: the NVL36, equipped with 36 B200 GPUs, which is expected to cost from $2 million ($1.8 million, according to previous reports), and the NVL72, with 72 B200 GPUs, which is projected to start at $3 million.

NVL36 and NVL72 server cabinets (or PODs) will be available not only from Nvidia and its traditional partners, such as Foxconn (the world's largest supplier of AI servers), Quanta, and Wistron, but also from newcomers, such as Asus. Assuming that Nvidia's partner TSMC can produce enough B100 and B200 GPUs on its 4nm-class process technology and package them using its chip-on-wafer-on-substrate (CoWoS) technology, availability from newcomers should ease the tight supply of Blackwell-based machines.

Based on the UDN report citing Morgan Stanley, Nvidia anticipates shipping between 60,000 and 70,000 B200 server cabinets next year, each priced between $2 million and $3 million. At the top of both ranges, that translates to an estimated annual revenue of about $210 billion from these machines, which means that companies like AWS and Microsoft will spend even more. This brings us to the math by Sequoia Capital partner David Cahn, who believes that the AI industry has to earn around $600 billion to pay off machines and data centers.
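The arithmetic behind that estimate can be sketched in a few lines. This is a rough illustration only: the unit counts and prices are the ranges quoted from the report, while the function and constant names are hypothetical and chosen for this example. Multiplying the bounds shows that the $210 billion figure corresponds to the top of both ranges.

```python
# Ranges as quoted from the Morgan Stanley report via UDN.
# All names below are illustrative, not from the report.
CABINETS_LOW, CABINETS_HIGH = 60_000, 70_000
PRICE_LOW_USD, PRICE_HIGH_USD = 2_000_000, 3_000_000


def revenue_range_billions(cab_low, cab_high, price_low, price_high):
    """Return (low, high) aggregate cabinet revenue in billions of USD."""
    low = cab_low * price_low / 1e9      # fewest cabinets at the lowest price
    high = cab_high * price_high / 1e9   # most cabinets at the highest price
    return low, high


low, high = revenue_range_billions(
    CABINETS_LOW, CABINETS_HIGH, PRICE_LOW_USD, PRICE_HIGH_USD
)
print(f"${low:.0f}B to ${high:.0f}B")  # prints "$120B to $210B"
```

The low end assumes every cabinet sells at the NVL36 price and the high end assumes every cabinet sells at the NVL72 price, so the real mix would land somewhere in between.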

Demand for AI servers is setting records and will not slow down any time soon, which will benefit both makers of AI servers and developers of AI GPUs. Despite the influx of competitors, Nvidia's GPUs are set to remain the de facto standard for training and many inference workloads, which benefits the company.

Based on talks with industry sources, the same Morgan Stanley report revealed that international giants such as Amazon Web Services, Dell, Google, Meta, and Microsoft will all adopt Nvidia's Blackwell GPU for AI servers. As a result, demand is now projected to exceed expectations, prompting Nvidia to increase its orders with TSMC by approximately 25%, the report claims.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


JasHod1

Someone seriously needs to come along and offer some competition to Nvidia. When one company is basically in charge of all the AI data, it is ringing alarm bells. CUDA needs to be opened up to others, if not by Nvidia then by regulators.

Other companies have been stung by regulators for far less, and it will also have the effect of, hopefully, driving down prices. This is a monopoly by any other name.

edzieba

The NVL-72 rack is pretty stuffed full of hardware with little in the way of 'wasted' support Us (no cable stuffing holes/patch panels/etc). $2m spread over 48 RUs is ~$42k per U, which you could hit with regular server hardware without trying too hard.

Mindstab Thrull

"The industry will need tens of thousands of AI servers in 2025, and their aggregate cost will exceed $200 billion." (In the first paragraph)

Assuming this is true, is there any reason they need to be servers based on Blackwell? It feels to me very much like "it's the new shiny hotness" but I mean, there's previous gen solutions or options from other companies. And then there's the power usage, but that's a "sometime later" problem, right?

robocop007

> JasHod1 said: Someone seriously needs to come along and offer some competition to Nvidia. When one company is basically in charge of all the AI data, it is ringing alarm bells. CUDA needs to be opened up to others, if not by Nvidia then by regulators.
>
> Other companies have been stung by regulators for far less, and it will also have the effect of, hopefully, driving down prices. This is a monopoly by any other name.

The competition should be required to pay a percentage of all the investment Nvidia made to develop CUDA over decades before getting access.

What is your plan for the monopolies of ASML and TSMC? Ask regulators to force them to share their trade secrets as well?

robocop007

> Mindstab Thrull said: "The industry will need tens of thousands of AI servers in 2025, and their aggregate cost will exceed $200 billion." (In the first paragraph)
>
> Assuming this is true, is there any reason they need to be servers based on Blackwell? It feels to me very much like "it's the new shiny hotness" but I mean, there's previous gen solutions or options from other companies. And then there's the power usage, but that's a "sometime later" problem, right?

This $200 billion is a projection based on demand for Nvidia Blackwell servers alone. In total, the AI industry will spend more on servers.

Scourge00165

> Mindstab Thrull said: "The industry will need tens of thousands of AI servers in 2025, and their aggregate cost will exceed $200 billion." (In the first paragraph)
>
> Assuming this is true, is there any reason they need to be servers based on Blackwell? It feels to me very much like "it's the new shiny hotness" but I mean, there's previous gen solutions or options from other companies. And then there's the power usage, but that's a "sometime later" problem, right?

Yeah, it's not the "new shiny hotness," it's the massive computing power that companies need to grow their AI capabilities.

And is there any reason to believe this will go to the corporation that controls 80-90% of the business because of the massive moat it's created due to the overwhelming advantage it has in hardware?

No, it could go to Intel... that'd be like trying to get into NASCAR with a Ford Pinto, but a company is free to make that choice...

As for the energy, did you just hear that question today? It's using LESS energy relative to its queries than the inferior products.

So yeah, the reason would be the enormous 4-5 year advantage they have over every other company's chips.