Intel Ponte Vecchio and Xe HPC Architecture: Built for Big Data

Intel dropped a ton of new information at its Architecture Day 2021, and you can check out our other articles for deep dives into the Alder Lake CPUs, Sapphire Rapids, Arc Alchemist GPU, and more. That last one is particularly relevant to what we will discuss here: Intel's Ponte Vecchio and the Xe HPC architecture. It's big. Scratch that: It's massive, particularly in the maximum configuration that sports eight GPUs all working together. The upcoming Aurora supercomputer will use Sapphire Rapids and Ponte Vecchio in a bid to be the first exascale supercomputer in the U.S., and there's a good reason the Department of Energy opted to go with Intel's upcoming hardware.

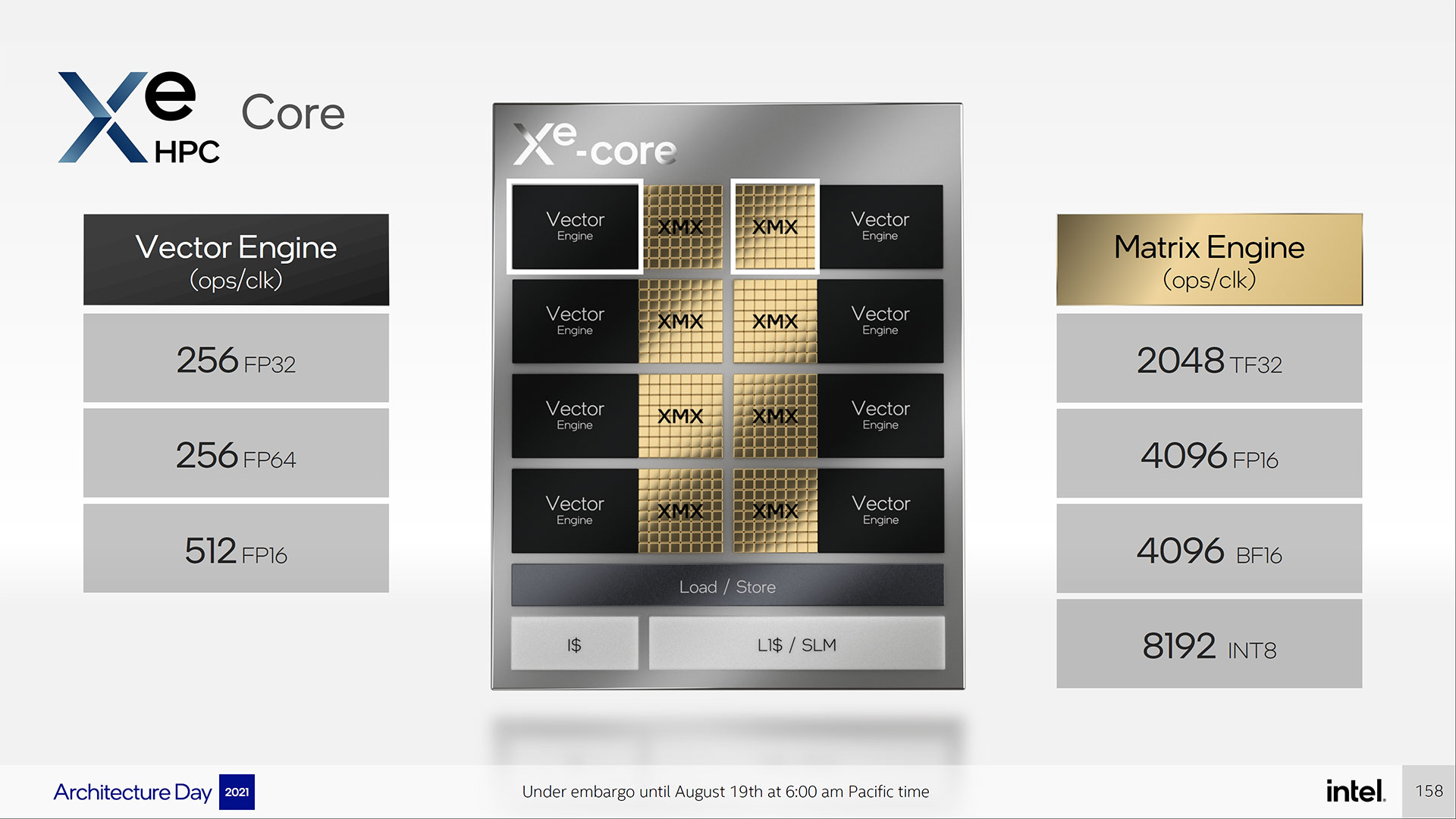

Like the built-for-gaming Xe HPG, the base building block for Xe HPC is the Xe-core. There are still eight vector engines and eight matrix engines in the Xe-core, but this Xe-core is fundamentally very different from the one in Xe HPG. The vector engine uses a 512-bit register (for 64-bit floating point), and the XMX matrix engine now works on 4096-bit chunks of data. That's double the potential performance for the vector engine and quadruple the FP16 throughput on the matrix engine. L1 cache sizes and load/store bandwidth have similarly increased to feed the engines.

Besides being bigger, Xe HPC also supports additional data types. The Xe HPG XMX only works on FP16 and BF16 data, but Xe HPC also supports TF32 (Tensor Float 32), which has gained popularity in the machine learning community. The vector engine also adds support for FP64 data, though only at the same rate as FP32 data.

With eight vector engines per Xe-core, the total potential throughput for a single Xe-core is 256 FP64 or FP32 operations per clock, or 512 FP16 operations per clock, on the vector engines. For the matrix engines, each Xe-core can do 4096 FP16 or BF16 operations per clock, 8192 INT8 ops per clock, or 2048 TF32 operations per clock. But of course, there's more than one Xe-core in Ponte Vecchio.
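To keep the per-clock figures straight, here is a minimal Python sketch that tabulates the per-Xe-core rates quoted above and divides them across the eight engines of each type. The even split per engine is our assumption, and the names `XE_CORE_OPS_PER_CLOCK` and `per_engine_ops` are purely illustrative.

```python
# Per-Xe-core peak rates (operations per clock) as quoted in the article.
XE_CORE_OPS_PER_CLOCK = {
    ("vector", "FP64"): 256,
    ("vector", "FP32"): 256,
    ("vector", "FP16"): 512,
    ("matrix", "FP16"): 4096,
    ("matrix", "BF16"): 4096,
    ("matrix", "INT8"): 8192,
    ("matrix", "TF32"): 2048,
}

# Each Xe-core has eight vector engines and eight matrix engines.
ENGINES_PER_XE_CORE = 8


def per_engine_ops(engine: str, dtype: str) -> int:
    """Assume the per-Xe-core rate is split evenly across the eight engines."""
    return XE_CORE_OPS_PER_CLOCK[(engine, dtype)] // ENGINES_PER_XE_CORE


if __name__ == "__main__":
    for (engine, dtype), ops in XE_CORE_OPS_PER_CLOCK.items():
        share = per_engine_ops(engine, dtype)
        print(f"{engine:6s} {dtype:4s} {ops:5d} per Xe-core, {share:4d} per engine")
```

Under that assumption, the matrix engines come out at 16 times the FP16 rate of the vector engines within the same Xe-core.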

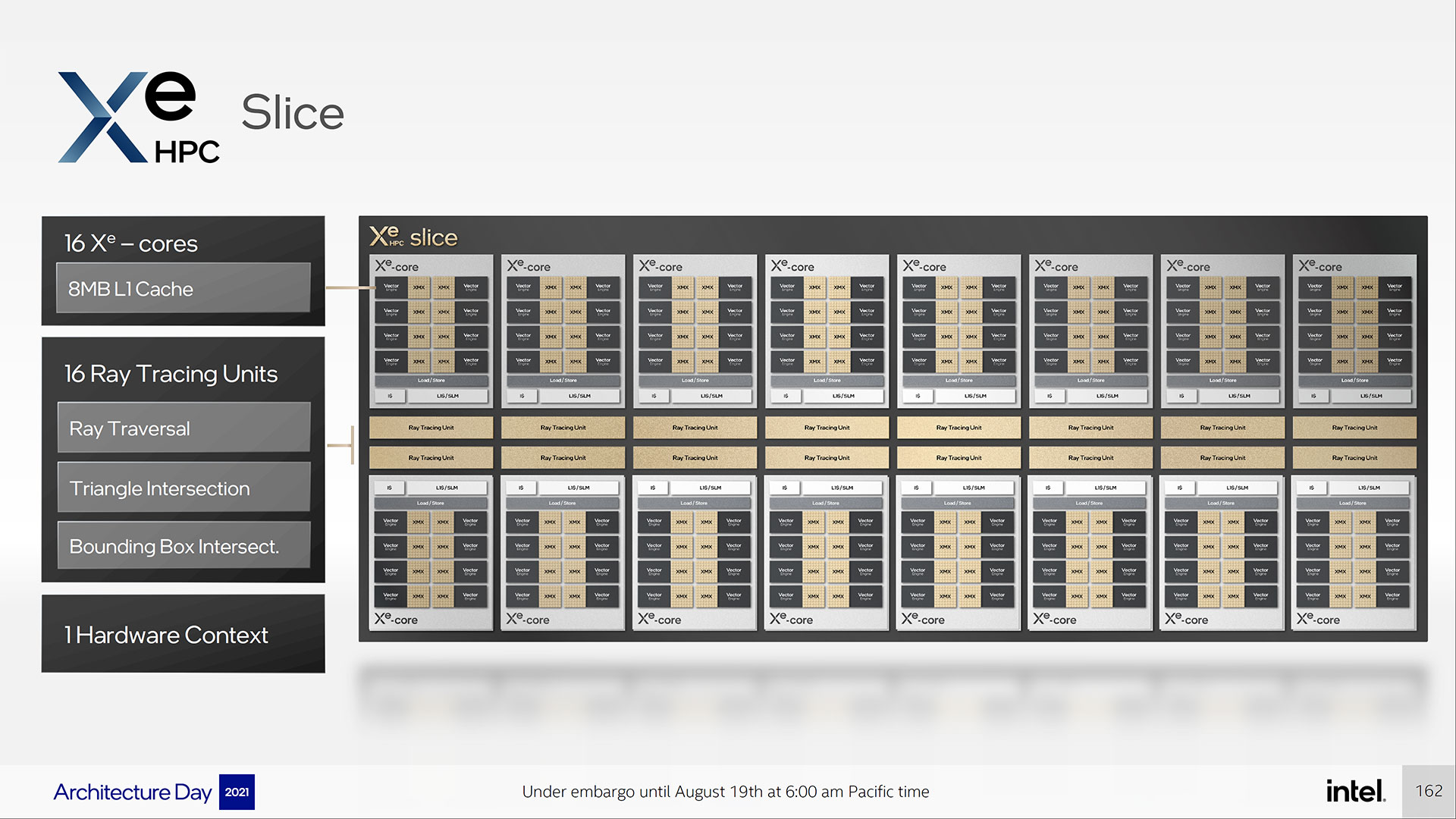

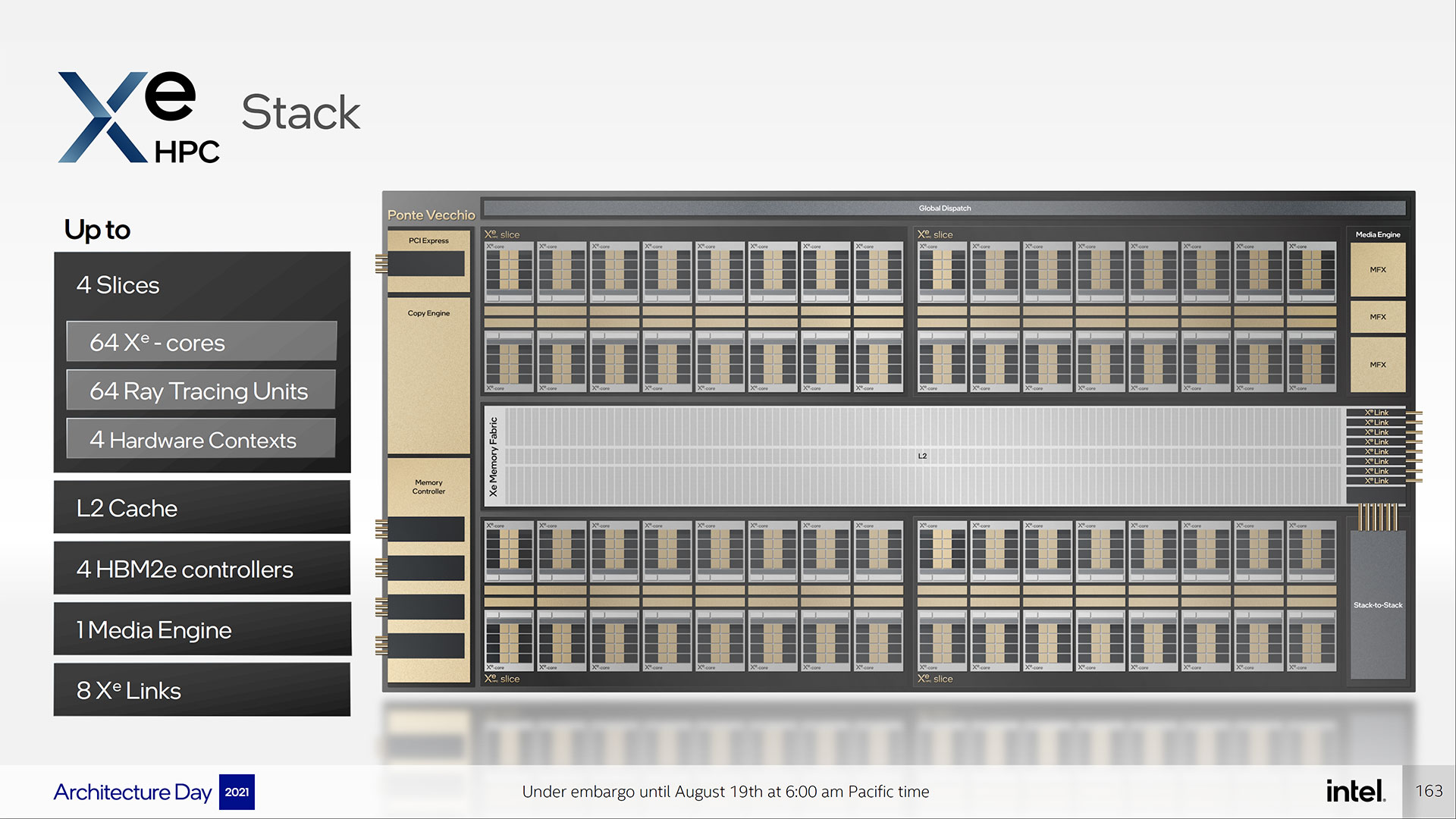

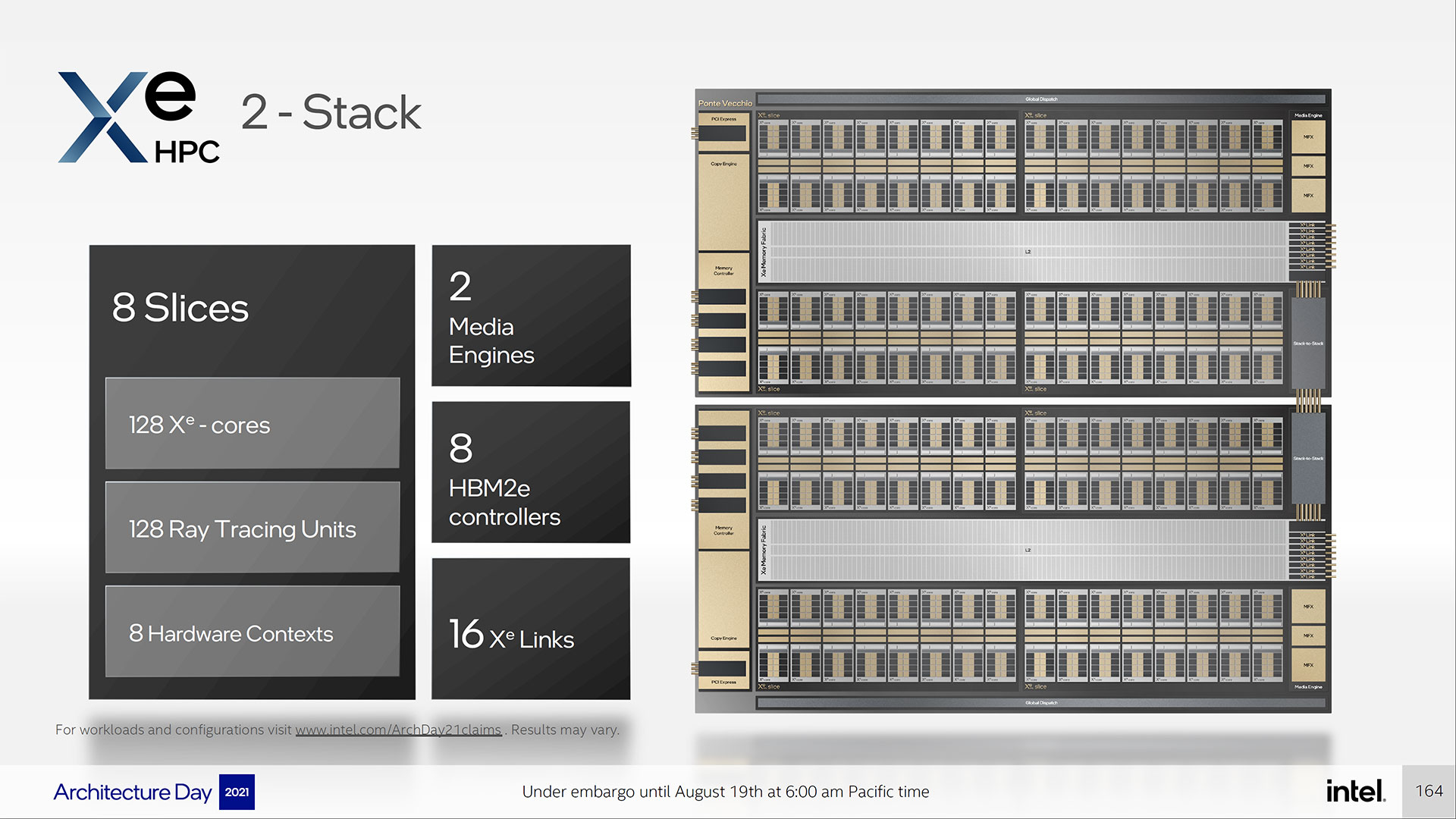

Xe HPC groups 16 Xe-core units into a single slice, whereas the consumer Xe HPG only has up to eight units. One interesting point here is that, unlike Nvidia's GA100, Xe HPC includes ray tracing units (RTUs). We don't know how fast the RTU is relative to Nvidia's RT cores, but that's a big potential boost to performance for professional ray tracing applications.

Each Xe-core on Ponte Vecchio also contains a 512KB L1 cache, which is relatively massive compared to consumer GPUs. All of the Xe-cores in a slice function under a single hardware context as well. But this is still only at the slice level.

The main compute core for Xe HPC packs in four slices, linked together by a massive 144MB L2 cache and memory fabric, with eight Xe Link connectors, four HBM2e stacks, and a media engine. But Intel still isn't finished, as Xe HPC is also available as a 2-stack configuration that doubles all of those values, linked together via EMIB.
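The hierarchy described above (Xe-cores per slice, slices per stack, one or two stacks) can be tallied with a short sketch. The per-stack figures come straight from the text; the doubled 2-stack values follow the article's statement that the 2-stack configuration doubles everything. The class name `XeHpcConfig` is hypothetical.

```python
from dataclasses import dataclass

# Per-stack figures as described in the article.
XE_CORES_PER_SLICE = 16
SLICES_PER_STACK = 4
L2_CACHE_MB_PER_STACK = 144
XE_LINKS_PER_STACK = 8
HBM2E_STACKS_PER_STACK = 4
MEDIA_ENGINES_PER_STACK = 1


@dataclass(frozen=True)
class XeHpcConfig:
    """Illustrative resource tally for a 1-stack or 2-stack Xe HPC GPU."""

    stacks: int

    @property
    def slices(self) -> int:
        return self.stacks * SLICES_PER_STACK

    @property
    def xe_cores(self) -> int:
        return self.slices * XE_CORES_PER_SLICE

    @property
    def l2_cache_mb(self) -> int:
        return self.stacks * L2_CACHE_MB_PER_STACK

    @property
    def xe_links(self) -> int:
        return self.stacks * XE_LINKS_PER_STACK

    @property
    def hbm2e_stacks(self) -> int:
        return self.stacks * HBM2E_STACKS_PER_STACK

    @property
    def media_engines(self) -> int:
        return self.stacks * MEDIA_ENGINES_PER_STACK


if __name__ == "__main__":
    for cfg in (XeHpcConfig(stacks=1), XeHpcConfig(stacks=2)):
        print(cfg.stacks, cfg.slices, cfg.xe_cores, cfg.l2_cache_mb,
              cfg.xe_links, cfg.hbm2e_stacks, cfg.media_engines)
```

A 2-stack part therefore works out to eight slices and 128 Xe-cores.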


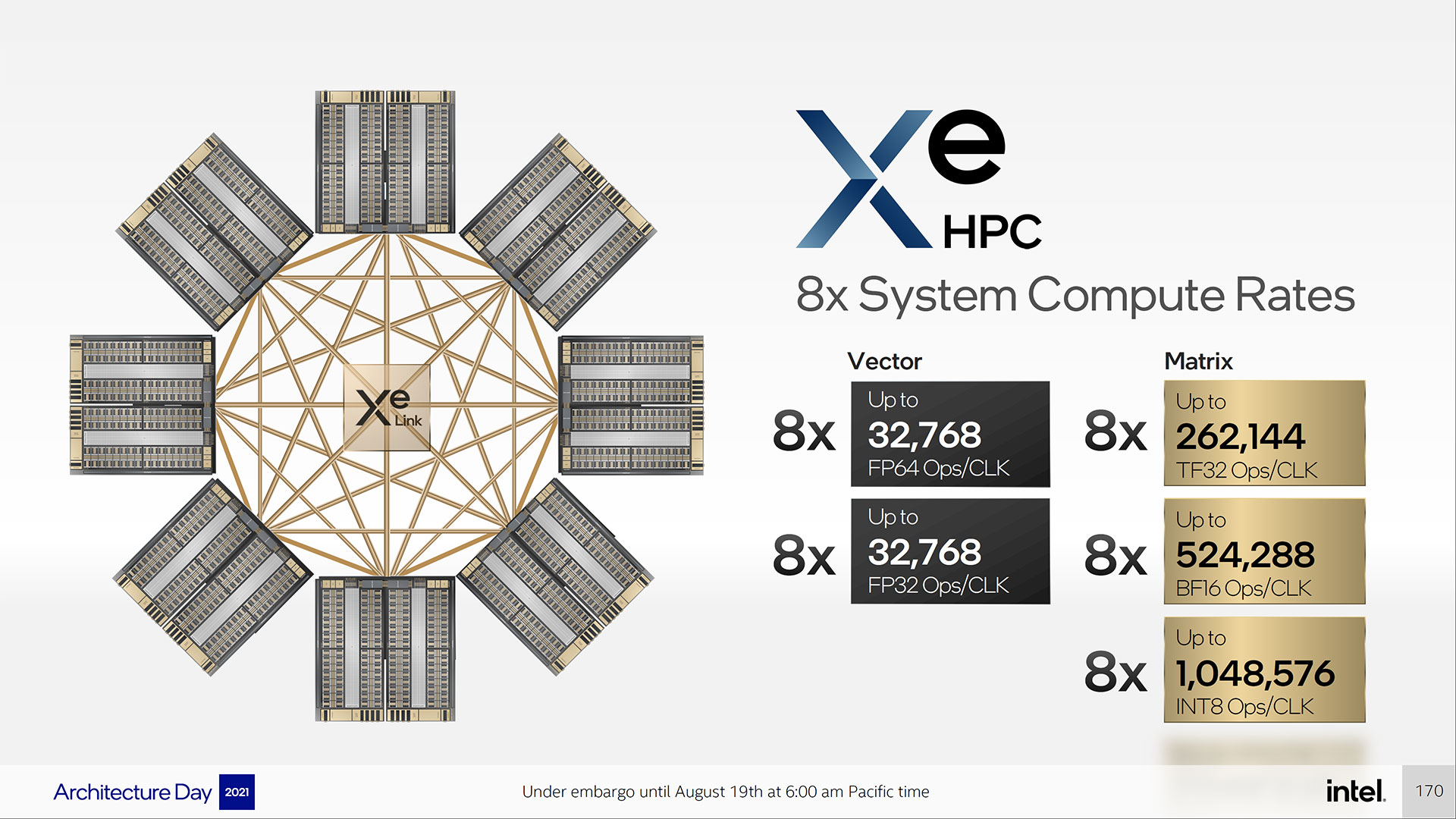

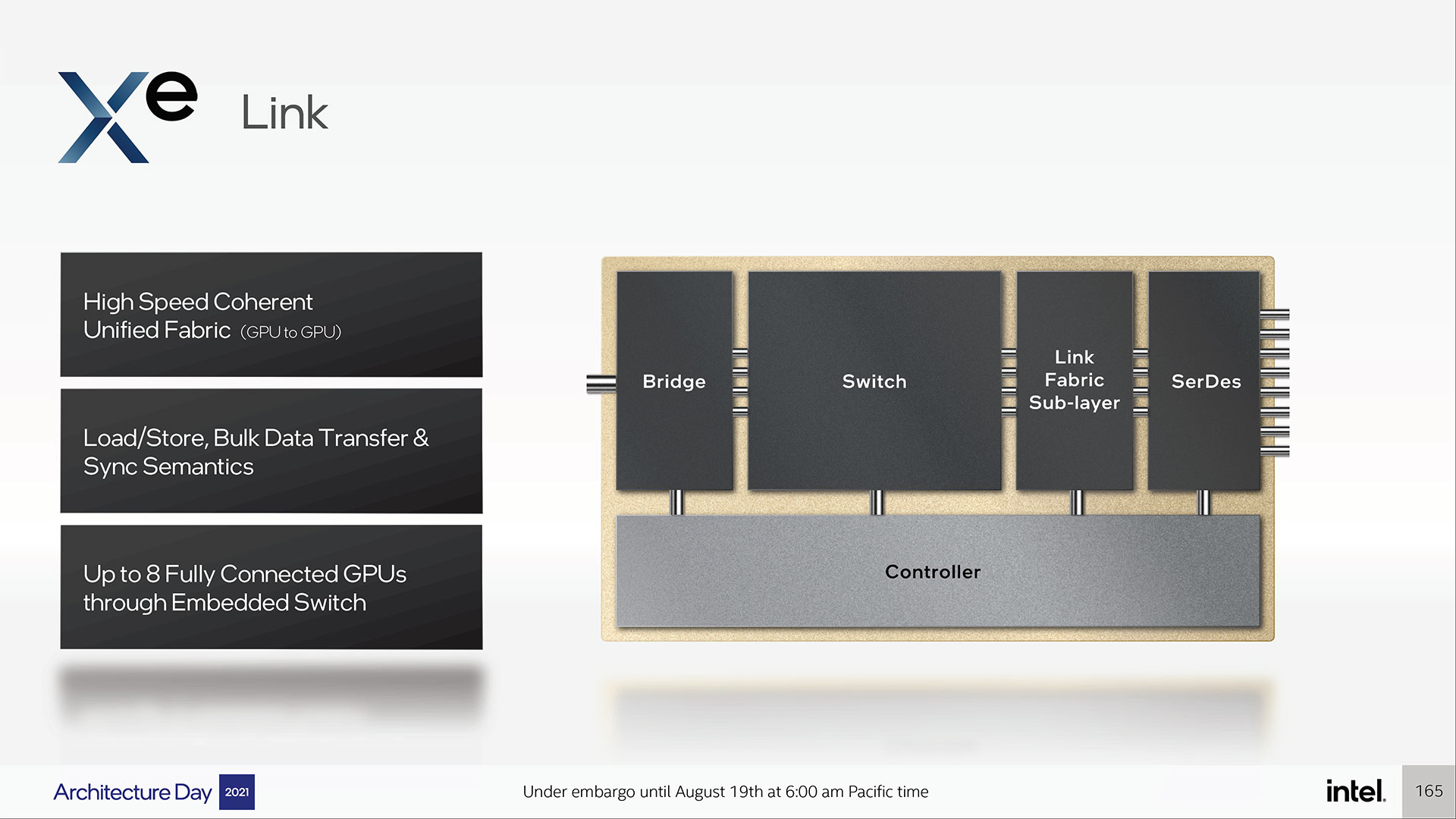

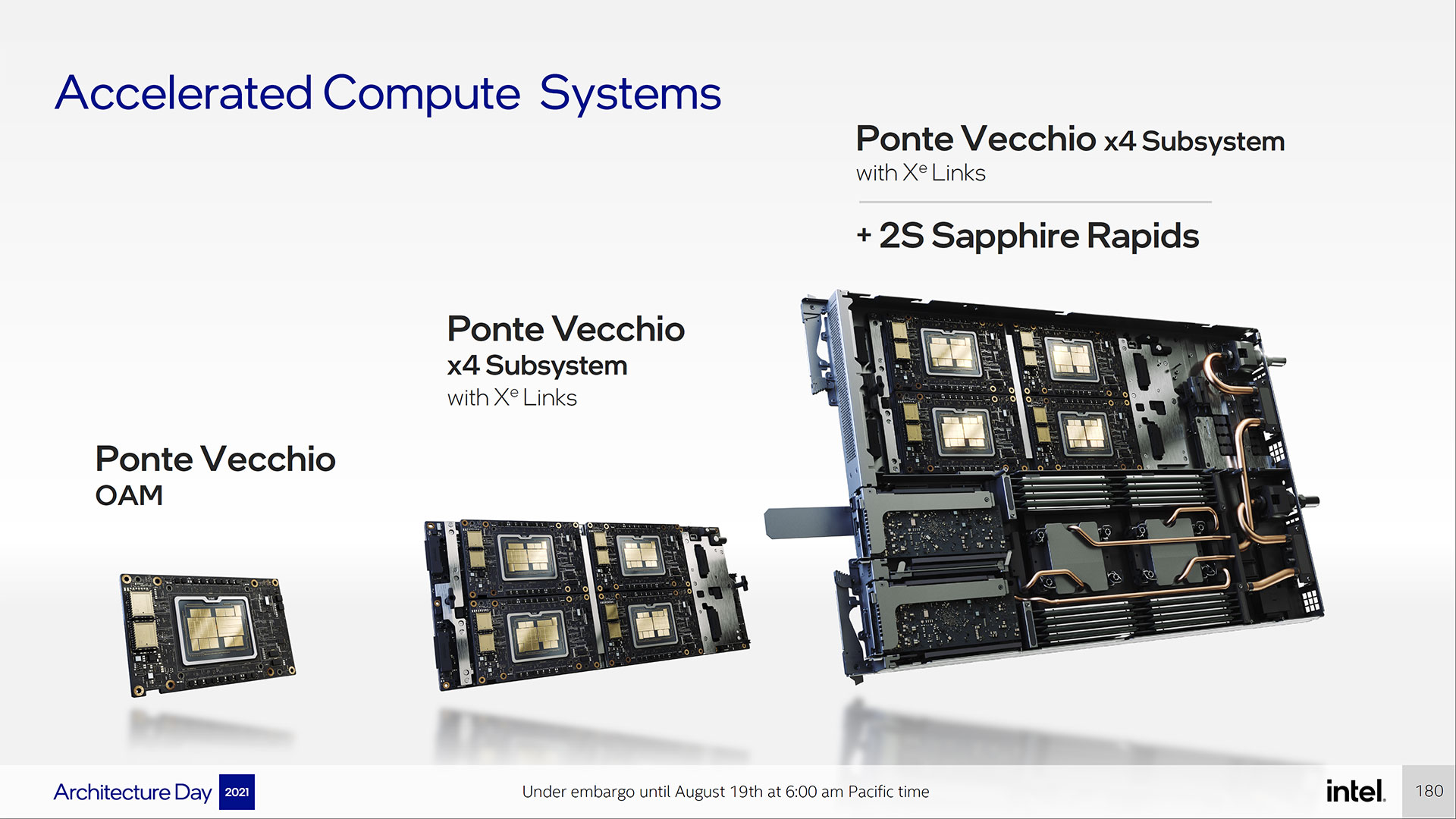

Xe Link is an important part of Xe HPC, providing a high-speed, coherent, unified fabric between the GPUs in a multi-GPU configuration. It can be used in 2-way, 4-way, 6-way, and 8-way topologies, with each GPU linked directly to every other GPU. Put it all together and you get a massive amount of compute!
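Since every GPU links directly to every other GPU, the topology is a full mesh. The sketch below counts the GPU pairs for each supported size. It treats each pair as one logical connection, which is our simplification: Intel hasn't said how many physical Xe Link ports serve each pair. The function name `all_to_all_links` is illustrative.

```python
from itertools import combinations


def all_to_all_links(num_gpus: int) -> list[tuple[int, int]]:
    """Return every unordered GPU pair in a fully connected topology."""
    return list(combinations(range(num_gpus), 2))


if __name__ == "__main__":
    for n in (2, 4, 6, 8):
        links = all_to_all_links(n)
        # Each GPU talks directly to the other n - 1 GPUs.
        print(f"{n}-way: {n - 1} peers per GPU, {len(links)} GPU pairs")
```

The pair count grows as n(n-1)/2, so the 8-way configuration needs 28 direct GPU-to-GPU connections, with each GPU reaching seven peers.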

Intel hasn't disclosed clock speeds yet, but we're looking at up to 32,768 FP64 operations per clock. Assuming clocks somewhere between 1.0 and 2.0 GHz, that works out to anywhere from 32.8 to 65.5 TFLOPS of FP64 compute for a single Xe HPC GPU, and up to 524 TFLOPS for a cluster of eight. That brings us to the second topic: Ponte Vecchio, the productized reality of Xe HPC.
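Peak throughput is simply operations per clock multiplied by clock frequency. The sketch below rebuilds the 32,768 figure from the per-Xe-core rate and the 2-stack hierarchy, then converts it to TFLOPS. It assumes the per-clock figure already counts individual floating-point operations and that eight GPUs scale linearly. The clock values are guesses, not Intel specifications, and `peak_tflops` is an illustrative name.

```python
# Figures from the article: 256 FP64 ops/clock per Xe-core,
# 16 Xe-cores per slice, 4 slices per stack, 2 stacks per GPU.
FP64_OPS_PER_CLOCK = 256 * 16 * 4 * 2  # 32,768 for a 2-stack GPU


def peak_tflops(ops_per_clock: int, clock_ghz: float, num_gpus: int = 1) -> float:
    """Peak TFLOPS = ops/clock * GHz * GPUs / 1000 (1 GHz = 1e9 clocks/s)."""
    return ops_per_clock * clock_ghz * num_gpus / 1000.0


if __name__ == "__main__":
    # Hypothetical clock speeds; Intel has not disclosed real ones.
    for ghz in (1.0, 2.0):
        single = peak_tflops(FP64_OPS_PER_CLOCK, ghz)
        cluster = peak_tflops(FP64_OPS_PER_CLOCK, ghz, num_gpus=8)
        print(f"{ghz:.1f} GHz: {single:.1f} TFLOPS per GPU, {cluster:.1f} TFLOPS for eight")
```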

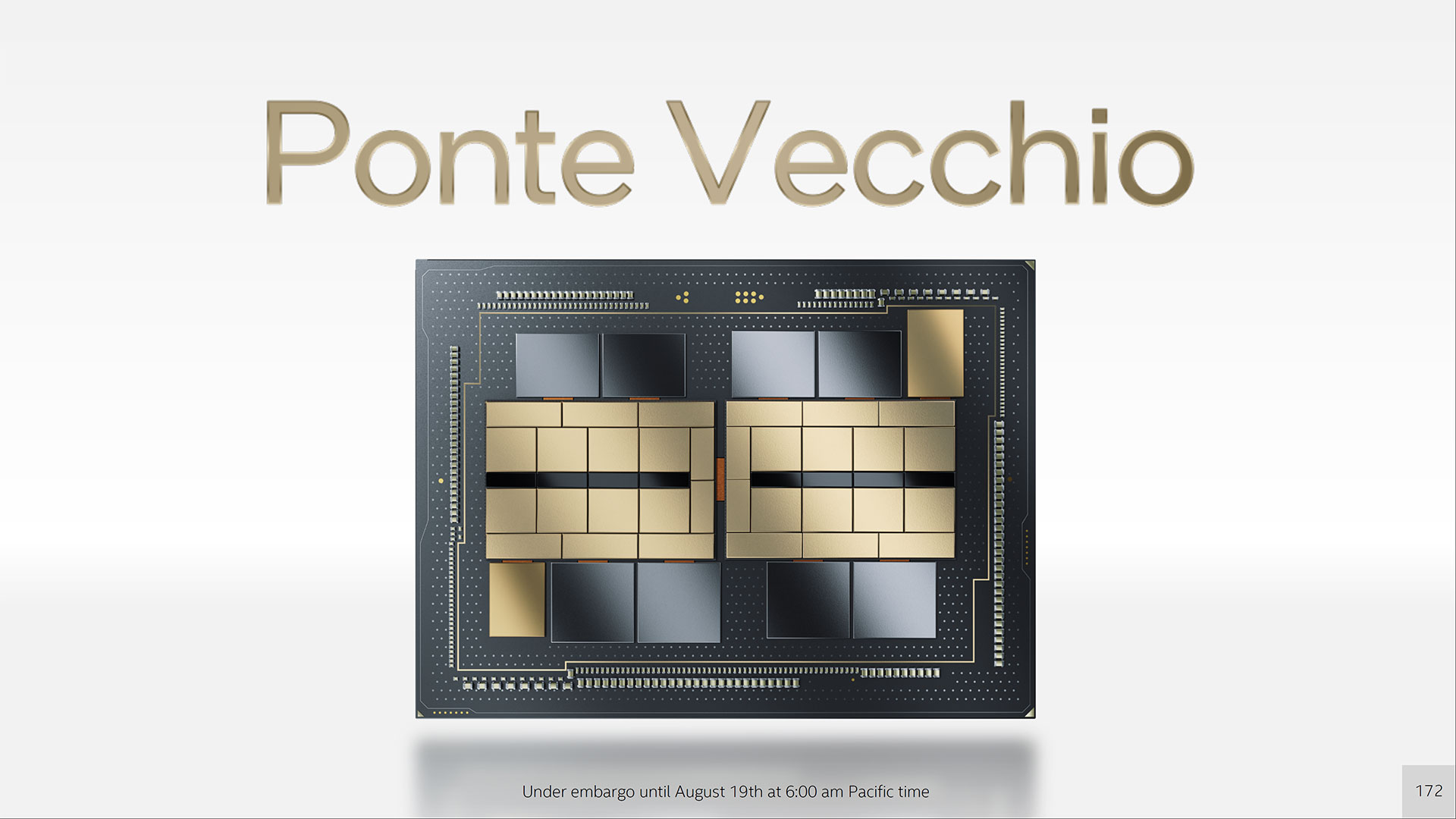

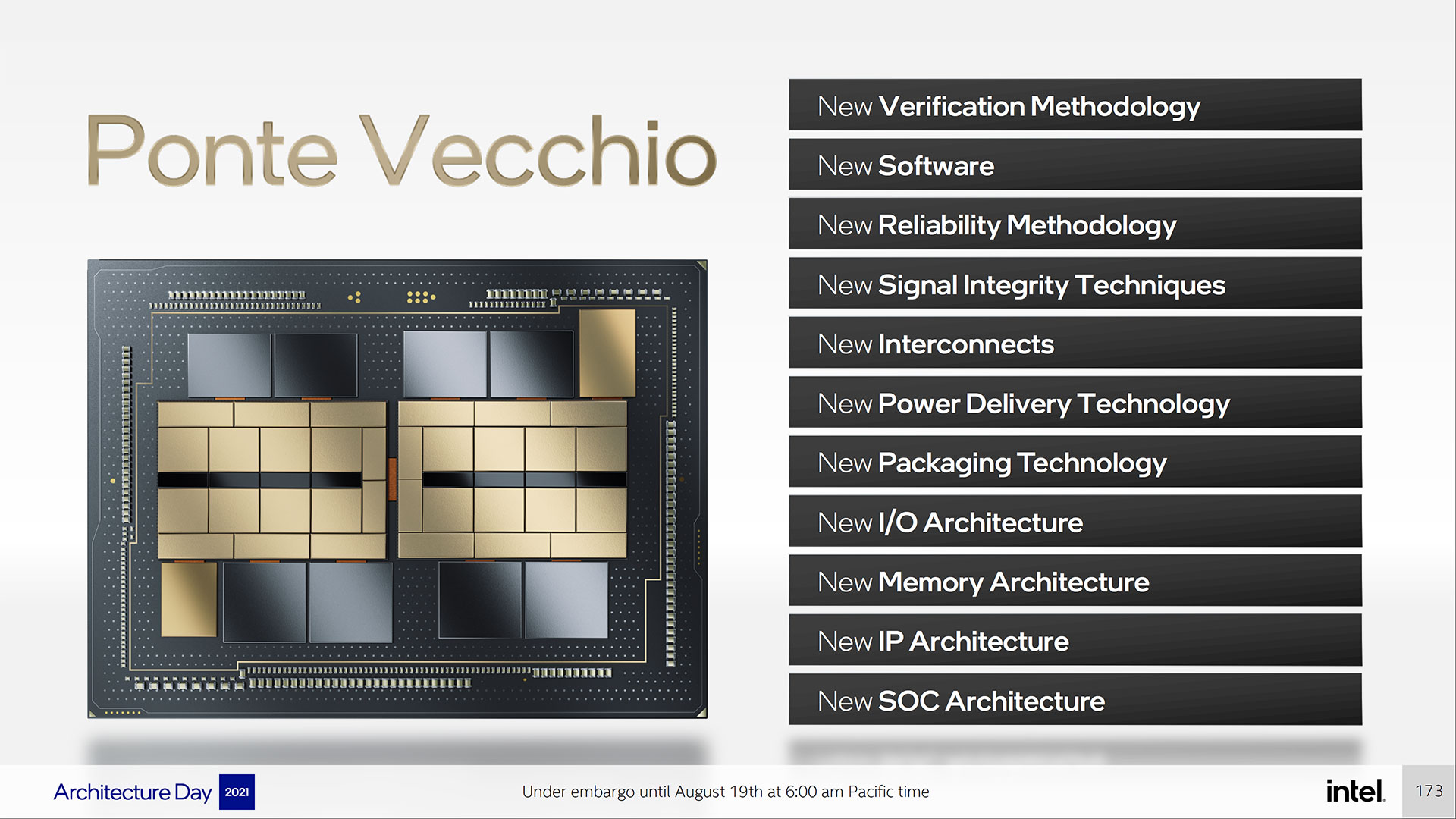

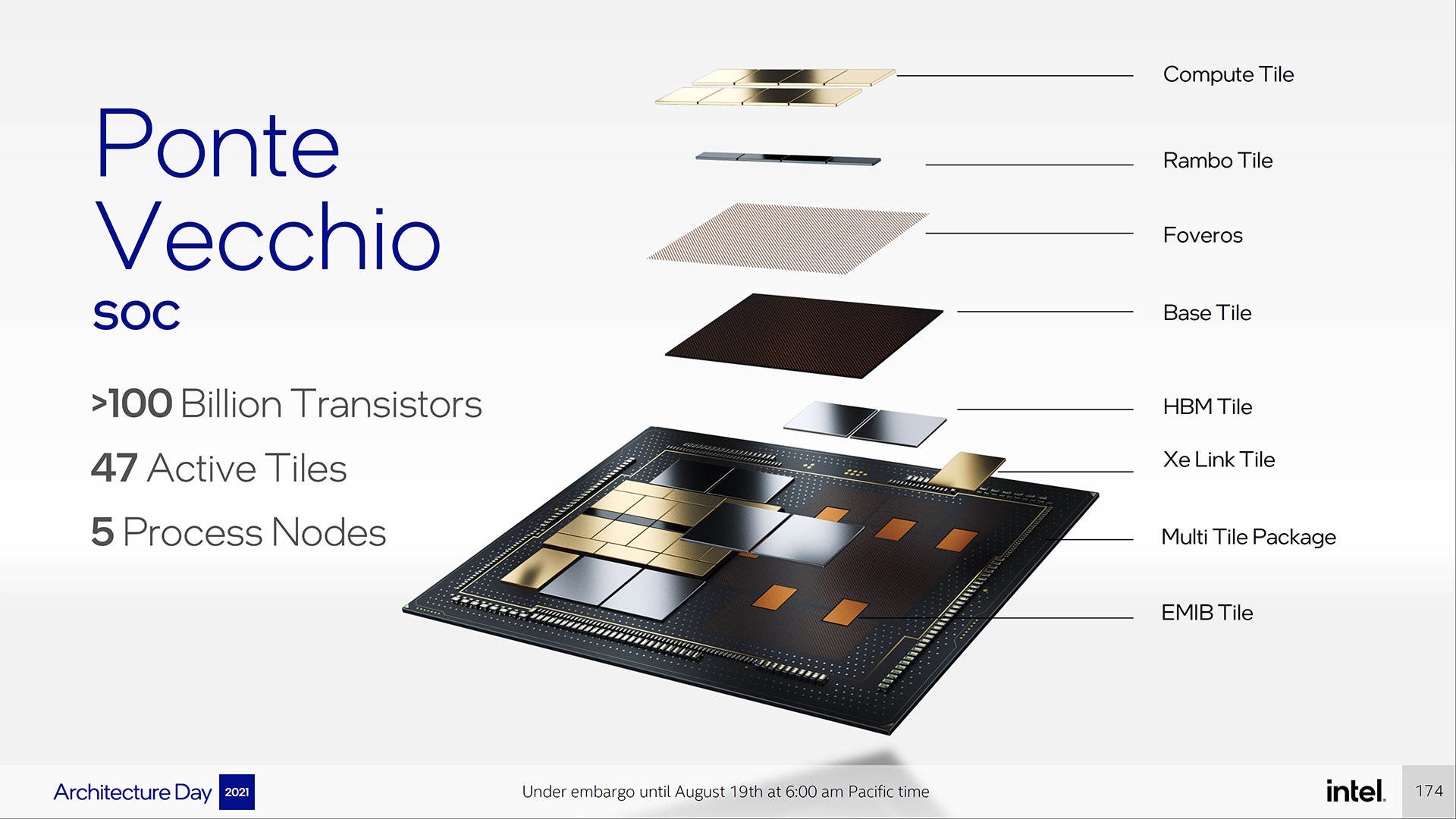

Ponte Vecchio will be a major step forward for packaging and integration. The entire SoC consists of over 100 billion transistors across 47 active tiles manufactured on five different process nodes, all tied together with Intel's 3D chip stacking technologies. We've already covered a lot of the details previously, but it's still an impressive tour de force for Intel.

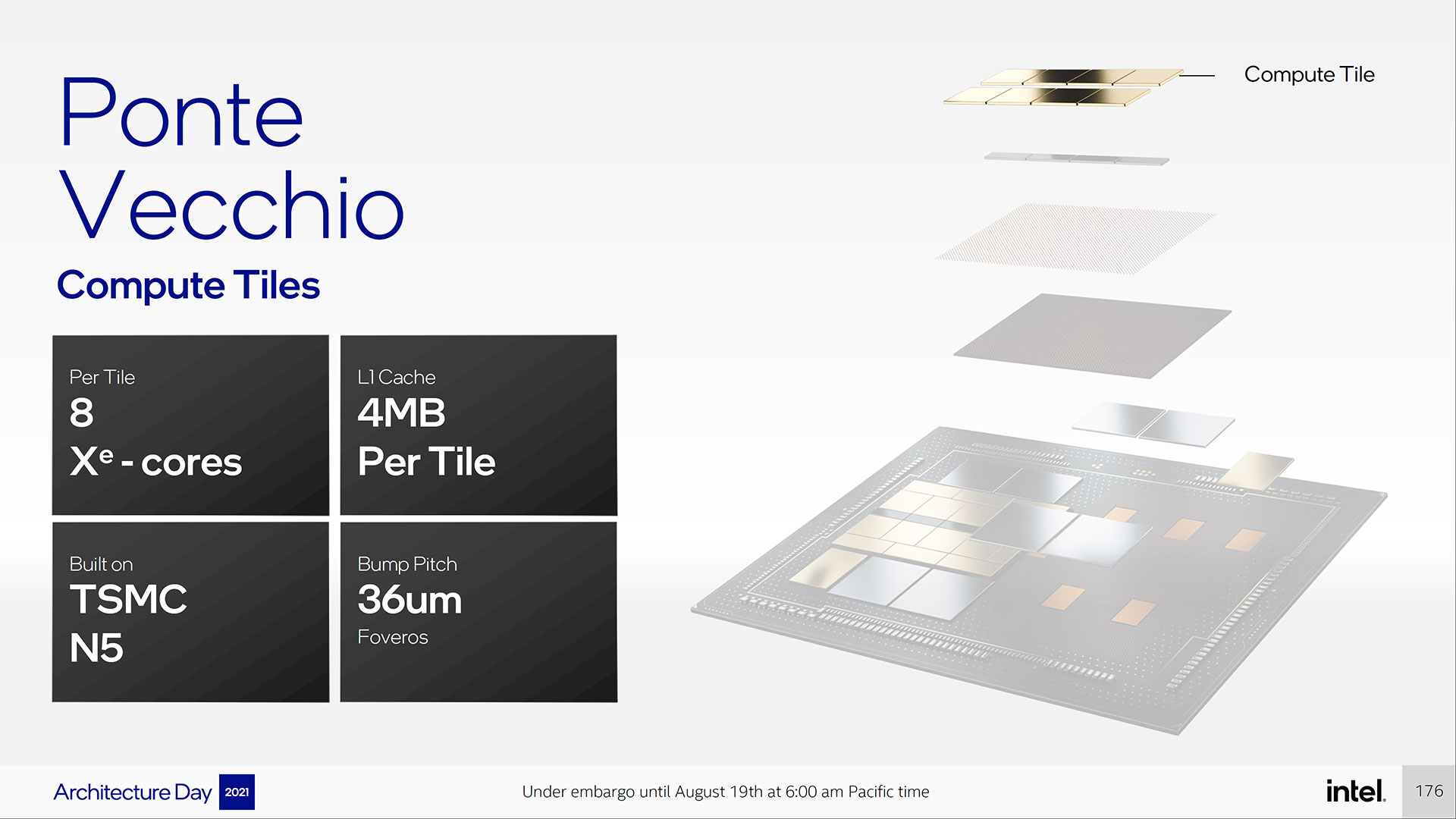

The compute tiles at the heart of Ponte Vecchio will be made using TSMC's N5 process, each with eight Xe-cores. These link to an Intel Foveros base tile (built on the newly renamed Intel 7 process), which also houses the Rambo cache, HBM2e, and a PCIe Gen5 interface. The Xe Link tile meanwhile uses TSMC N7.
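With eight Xe-cores per compute tile, the number of compute tiles follows from the Xe-core totals described earlier. A quick sketch, assuming the full 2-stack configuration with 16 Xe-cores per slice and four slices per stack (the function name `compute_tiles` is illustrative):

```python
XE_CORES_PER_COMPUTE_TILE = 8   # from the article
XE_CORES_PER_SLICE = 16         # from the article
SLICES_PER_STACK = 4            # from the article


def compute_tiles(stacks: int) -> int:
    """Number of compute tiles needed to supply every Xe-core in the GPU."""
    xe_cores = stacks * SLICES_PER_STACK * XE_CORES_PER_SLICE
    return xe_cores // XE_CORES_PER_COMPUTE_TILE


if __name__ == "__main__":
    print("compute tiles per stack:", compute_tiles(1))
    print("compute tiles in a 2-stack GPU:", compute_tiles(2))
```

That arithmetic gives eight compute tiles per stack and 16 for the full 2-stack part.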

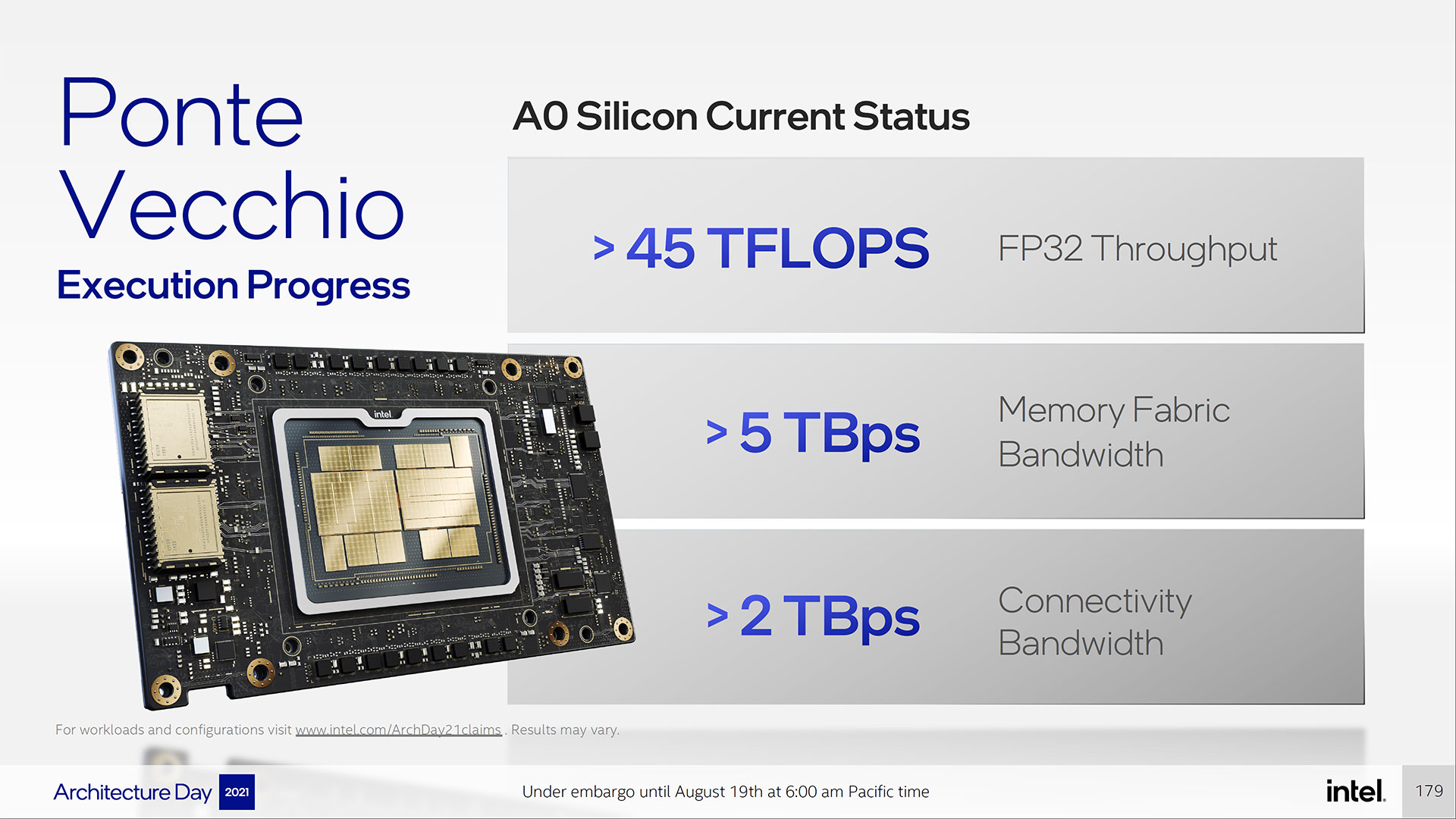

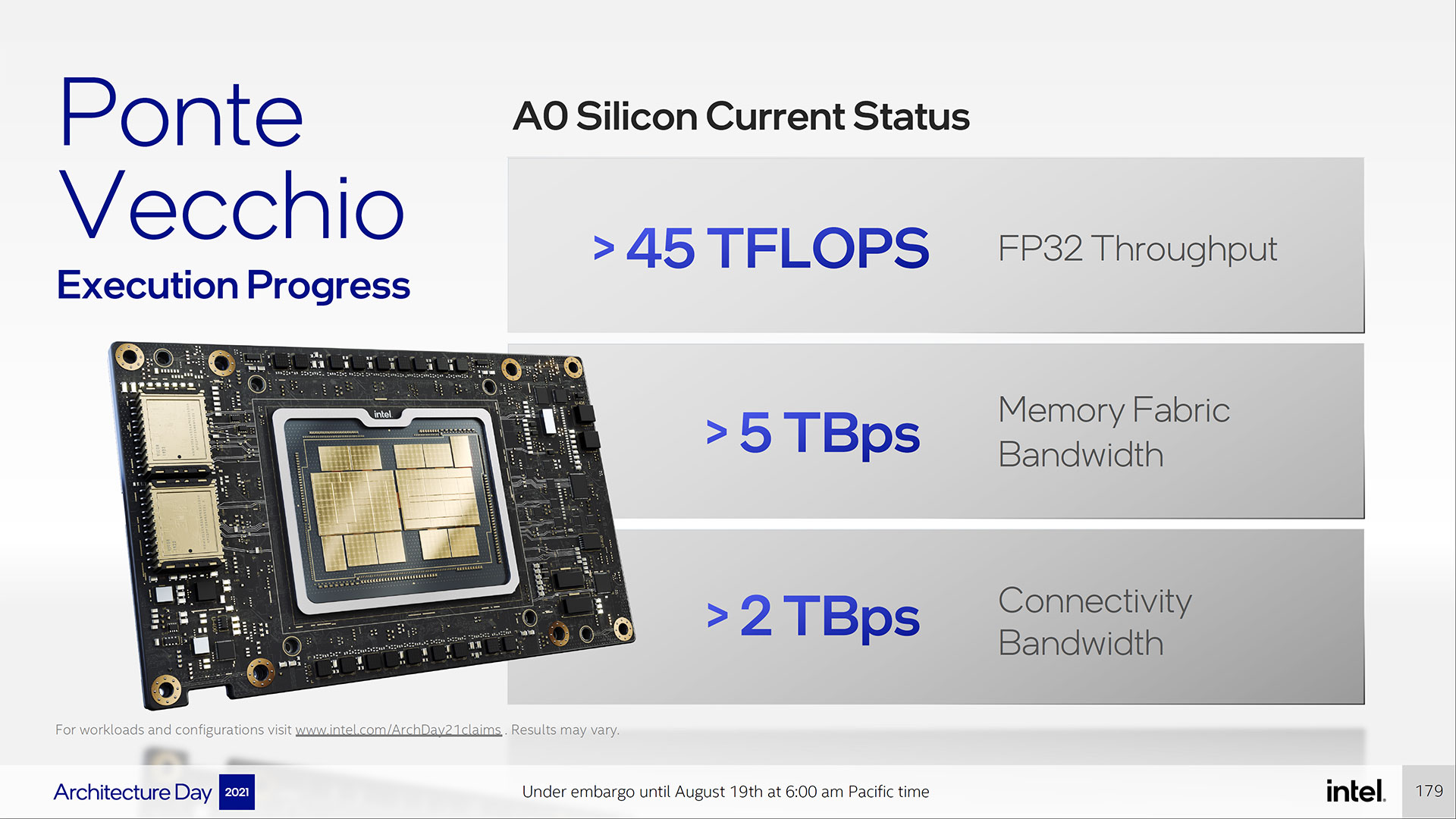

Intel already has working A0 silicon (basically early silicon, not yet in final production), running at over 45 TFLOPS for FP32 and with over 5TBps of HBM2e bandwidth. Connectivity is also running at more than 2TBps.
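The FP32 figure lets us back out a rough clock speed. This is only an estimate: it assumes the A0 part is the full 2-stack configuration with 128 Xe-cores, and that the FP32 vector rate is the 256 operations per clock per Xe-core quoted earlier. Since Intel said "over 45 TFLOPS," the result is a lower bound. The function name `implied_clock_ghz` is illustrative.

```python
# Assumed full 2-stack GPU: 256 FP32 ops/clock per Xe-core * 128 Xe-cores.
FP32_OPS_PER_CLOCK = 256 * 128  # 32,768


def implied_clock_ghz(tflops: float, ops_per_clock: int) -> float:
    """Clock (GHz) needed to reach a given TFLOPS at a given ops/clock rate."""
    return tflops * 1000.0 / ops_per_clock


if __name__ == "__main__":
    ghz = implied_clock_ghz(45.0, FP32_OPS_PER_CLOCK)
    print(f"Implied minimum clock: {ghz:.2f} GHz")
```

Under those assumptions, the A0 silicon would be running at roughly 1.37 GHz or higher.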

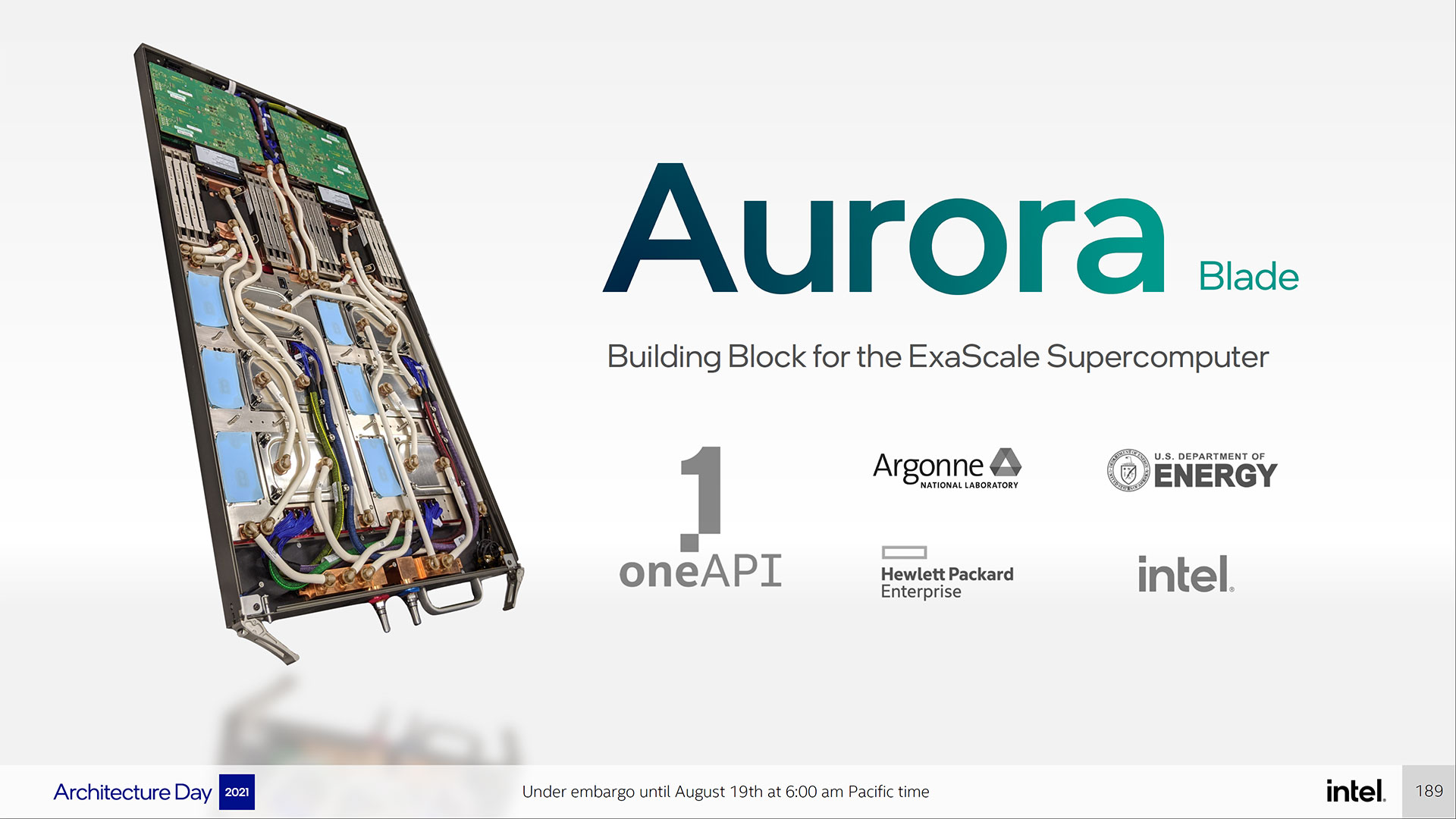

The Aurora supercomputer will run in a 6-way configuration using Xe Links to tie things together, which you can see in the Aurora Blade above. The blade also sports two Sapphire Rapids CPUs, and everything is liquid-cooled, naturally.

This won't be the last we see of Ponte Vecchio, obviously. With the extreme performance and features that it stuffs into the package, plus a design built for scaling out to hundreds or thousands of nodes, Ponte Vecchio will undoubtedly show up in many more installations over the coming years. This is also the first round of Xe HPC hardware, with many more iterations planned for the future, offering up even more performance and features.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


Intel999 Maybe if Ponte Vecchio had been ready in 2018, when it was supposed to be, it would be shipping in the first exascale computer.

However, since AMD confirmed that MI200 accelerators started shipping in Q2 for implementation in an exascale computer it seems Ponte Vecchio might be in the second exascale computer and still slower than the first one.

That "first exascale computer" comment might be coming from an old Intel propaganda sheet. This whole article sounds like regurgitated Intel double speak.

If you are going to brag about Intel's 8 way GPU to GPU connectivity why not compare the FP64, BF16 etc. performance to Nvidia's 8 way communicating GPUs that have been shipping for two years? By the time Ponte Vecchio ships, the Nvidia option will have been out for 3 years. Worse than that, an updated version from Nvidia will be out.

Typical Intel, look at what we have! in the future, maybe.

JayNor Strange that Xe-Link is responsible for coherency in today's presentations. I recall discussions of CXL maintaining coherency being a feature, but didn't see CXL discussed today.

waltc3 Let's hope that Intel isn't going to try and texture across the PCIe bus as its i7xx GPUs were hobbled with texturing across the AGP bus--if so, then these won't really be competitive at all (as the i7xx GPUs weren't competitive with 3dfx or nVidia GPUs at the time, who both textured from their far faster onboard memory.) This is tongue 'n cheek because I really do not expect Intel to make such a fundamental mistake again, but it does bear a comment, imo.