MRTouch Turns Any Physical Surface Into A Mixed Reality Touch Screen

Microsoft is a big believer in immersive technology, and the company is always working to improve the immersive computing experience. A team of Microsoft researchers recently revealed an interaction technique called MRTouch that would enable tactile touch for mixed reality content on the HoloLens (and, eventually, on other devices).

In 2016, Microsoft released the HoloLens headset, which was our first introduction to the company’s mixed reality aspirations. The HoloLens headset features environment mapping and hand tracking technology, and it enables you to interact with 3D virtual objects within a real-world space. HoloLens supports gesture, gaze, and voice-based input, but those methods aren’t as intuitive as we would like. Gestures don’t offer a tactile response to user interaction, and although gaze and voice interactions can be helpful, they aren’t suitable as universal interaction methods.

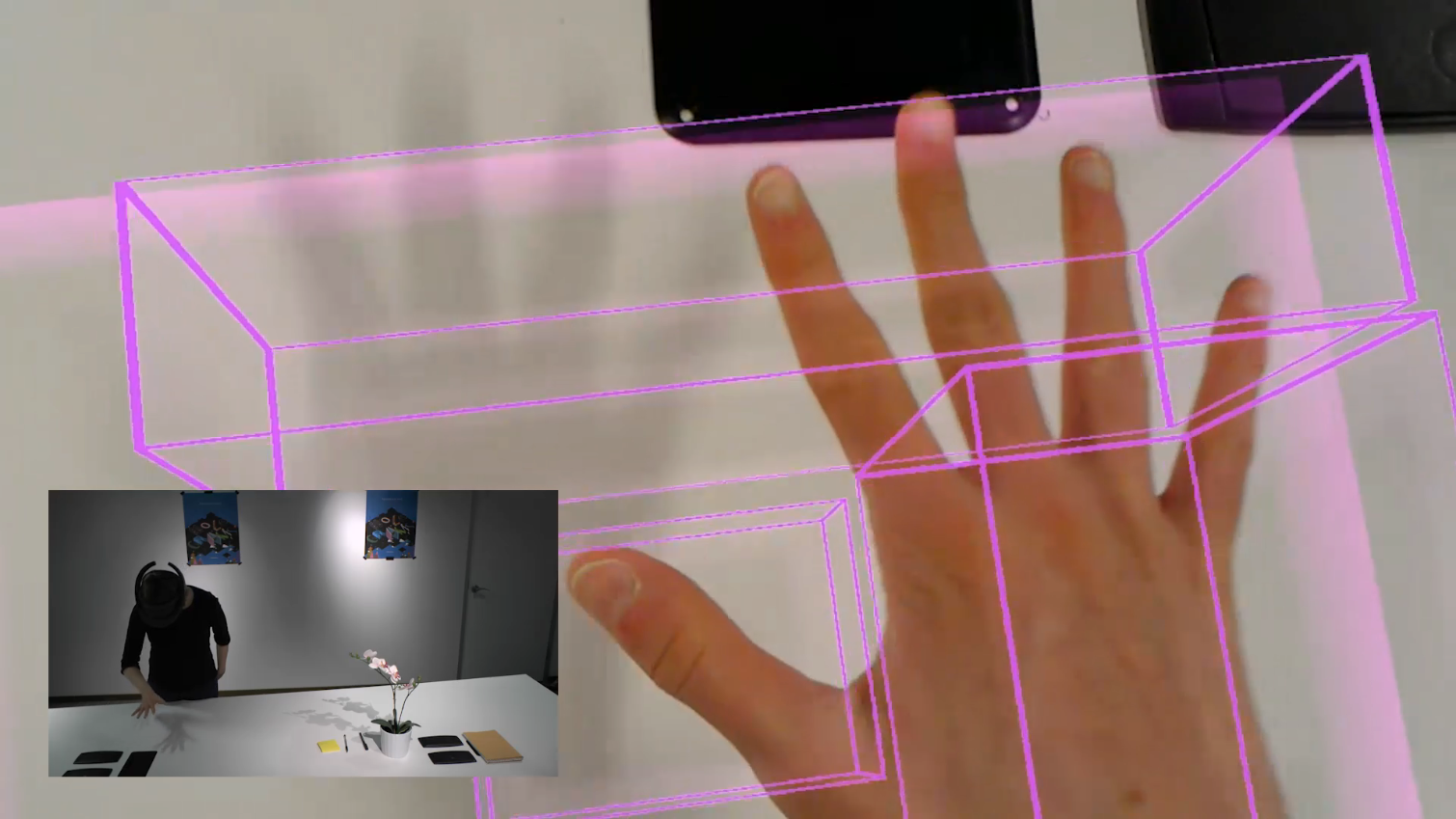

Most people are accustomed to touch-based interaction from using smartphones, tablets, and to a lesser extent, touchscreen computer monitors. Microsoft’s experimental MRTouch software brings touch interaction into mixed reality environments by taking advantage of the HoloLens’s advanced environment mapping and hand tracking technology to pinpoint flat surfaces that you can use as a virtual input device.

The Microsoft HoloLens headset features two environment tracking modes. The long-throw tracking mode uses the on-board camera to map the space around you, including the walls of the room and the physical objects in that space. The headset also operates in short-throw mode, which is used for gesture tracking and features a maximum tracking distance of one meter. The MRTouch application uses the HoloLens’s short-throw tracking mode, because the long-throw tracking isn’t accurate enough for finger tracking.

Microsoft’s researchers combined the short-throw depth camera information with the infrared camera feed to provide accurate mapping of flat surfaces without relying on information from the long-throw depth camera. The researchers said this enables the MRTouch software to operate without a full 3D map of the environment around you.

With MRTouch, you can use any flat surface, such as a countertop, your desk, or the wall, as a tactile touch surface. To open an app with MRTouch, touch the surface you wish to work with and drag your finger down and to the right, which creates a virtual window in which you can open the application. The physical surface lets you feel the virtual buttons when you press them, and it also enables more precise input. In-air gestures work for simple interactions, but it’s hard to work with a virtual menu when you can’t tell when you’ve pressed a button. Further, in-air interactions are limited to single input points, whereas MRTouch enables multi-touch interactions with up to all 10 of your fingers.

The tracking pipeline for the MRTouch software operates at 25fps and features three components: the Image Streamer, Tracker Engine, and Client Library. The Image Streamer exports the data from the infrared and depth cameras and sends it via a TCP socket to the Tracker Engine. The MRTouch Tracker Engine receives that data from the Image Streamer and uses it to maintain a list of “known tracking surfaces” and their coordinates. Like any application in Microsoft’s Windows Mixed Reality platform, MRTouch windows remain anchored in their position while you move around the space. The third component of the MRTouch system is the Client Library, which opens a TCP connection to the Tracker Engine to receive the position data and touch information. The Client Library then translates that information into a format that the application can interpret.
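To make the three-stage flow more concrete, here is a minimal Python sketch of how data might move from the Image Streamer through the Tracker Engine to the Client Library. It is not the researchers' implementation. The length-prefixed JSON wire format, the message fields, and every function and class name here are assumptions made for illustration, and the sketch uses `socket.socketpair()` as a local stand-in for the TCP connections the article describes. The "frame" also arrives with surfaces and touches already detected, whereas the real Tracker Engine derives them from the infrared and depth images.

```python
import json
import socket
import struct


def send_message(sock, payload):
    """Send one message: 4-byte big-endian length, then a JSON body (assumed format)."""
    body = json.dumps(payload).encode("utf-8")
    sock.sendall(struct.pack(">I", len(body)) + body)


def _recv_exact(sock, n):
    """Read exactly n bytes from a stream socket."""
    chunks = []
    while n > 0:
        chunk = sock.recv(n)
        if not chunk:
            raise ConnectionError("peer closed the connection mid-message")
        chunks.append(chunk)
        n -= len(chunk)
    return b"".join(chunks)


def recv_message(sock):
    """Receive one length-prefixed JSON message."""
    (length,) = struct.unpack(">I", _recv_exact(sock, 4))
    return json.loads(_recv_exact(sock, length).decode("utf-8"))


class TrackerEngine:
    """Hypothetical stand-in that keeps the list of known tracking surfaces."""

    def __init__(self):
        self.surfaces = {}  # surface id -> origin coordinates (x, y, z)

    def handle_frame(self, frame):
        # Record or refresh each surface reported in this frame.
        for surface in frame["surfaces"]:
            self.surfaces[surface["id"]] = tuple(surface["origin"])
        # Forward surface positions and touch points to the client.
        return {
            "surfaces": [
                {"id": sid, "origin": list(origin)}
                for sid, origin in self.surfaces.items()
            ],
            "touches": frame["touches"],
        }


def to_app_events(update):
    """Client Library step: translate tracker output into simple app events."""
    return [
        {"type": "touch", "surface": t["surface"], "x": t["x"], "y": t["y"]}
        for t in update["touches"]
    ]


def run_demo():
    """Push one made-up frame through streamer -> tracker -> client."""
    streamer, tracker_in = socket.socketpair()
    tracker_out, client = socket.socketpair()
    try:
        engine = TrackerEngine()
        frame = {
            "surfaces": [{"id": "desk", "origin": [0.0, 0.7, 0.4]}],
            "touches": [{"surface": "desk", "x": 0.25, "y": 0.5}],
        }
        send_message(streamer, frame)                      # Image Streamer
        update = engine.handle_frame(recv_message(tracker_in))  # Tracker Engine
        send_message(tracker_out, update)
        return engine, to_app_events(recv_message(client))  # Client Library
    finally:
        for sock in (streamer, tracker_in, tracker_out, client):
            sock.close()
```

Calling `run_demo()` returns the tracker state and the list of touch events an application would receive. Splitting the stages across sockets, as the article describes, means each one could in principle run as a separate process or on a separate machine.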


The MRTouch software operates on Microsoft’s HoloLens Mixed Reality headset, but the team said that it created a custom API to build the software, which means it would be easy to port the app to other mixed reality devices.

MRTouch is currently unavailable, and Microsoft didn’t say when, or if, it would be offered publicly. If you wish to learn more about the process, though, the team’s leader, Robert Xiao, published a research paper that details the methods used to make MRTouch work. We’re hopeful that this project will progress into marketable software, because tactile interaction in mixed reality is something we desperately want to see available.

Kevin Carbotte is a contributing writer for Tom's Hardware who primarily covers VR and AR hardware. He has been writing for us for more than four years.


237841209 This looks amazing. Yet, it'll probably be awkward the first time around like the HoloLens was. I'd like to be able to save the windows' positions on my walls so I can open them all back up when I need to.