Samsung Demos In-Memory Processing for HBM2, GDDR6, DDR4, and LPDDR5X

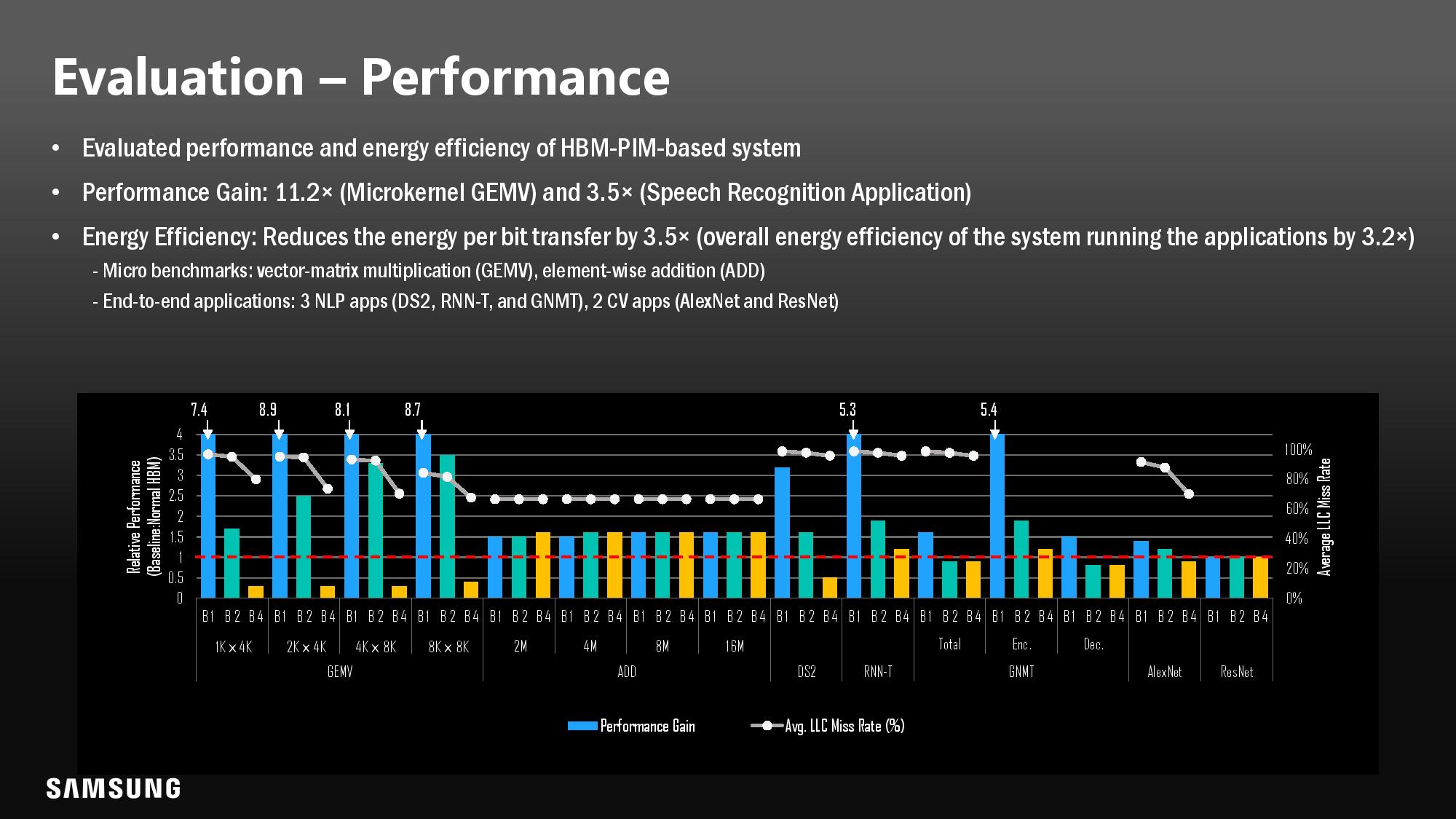

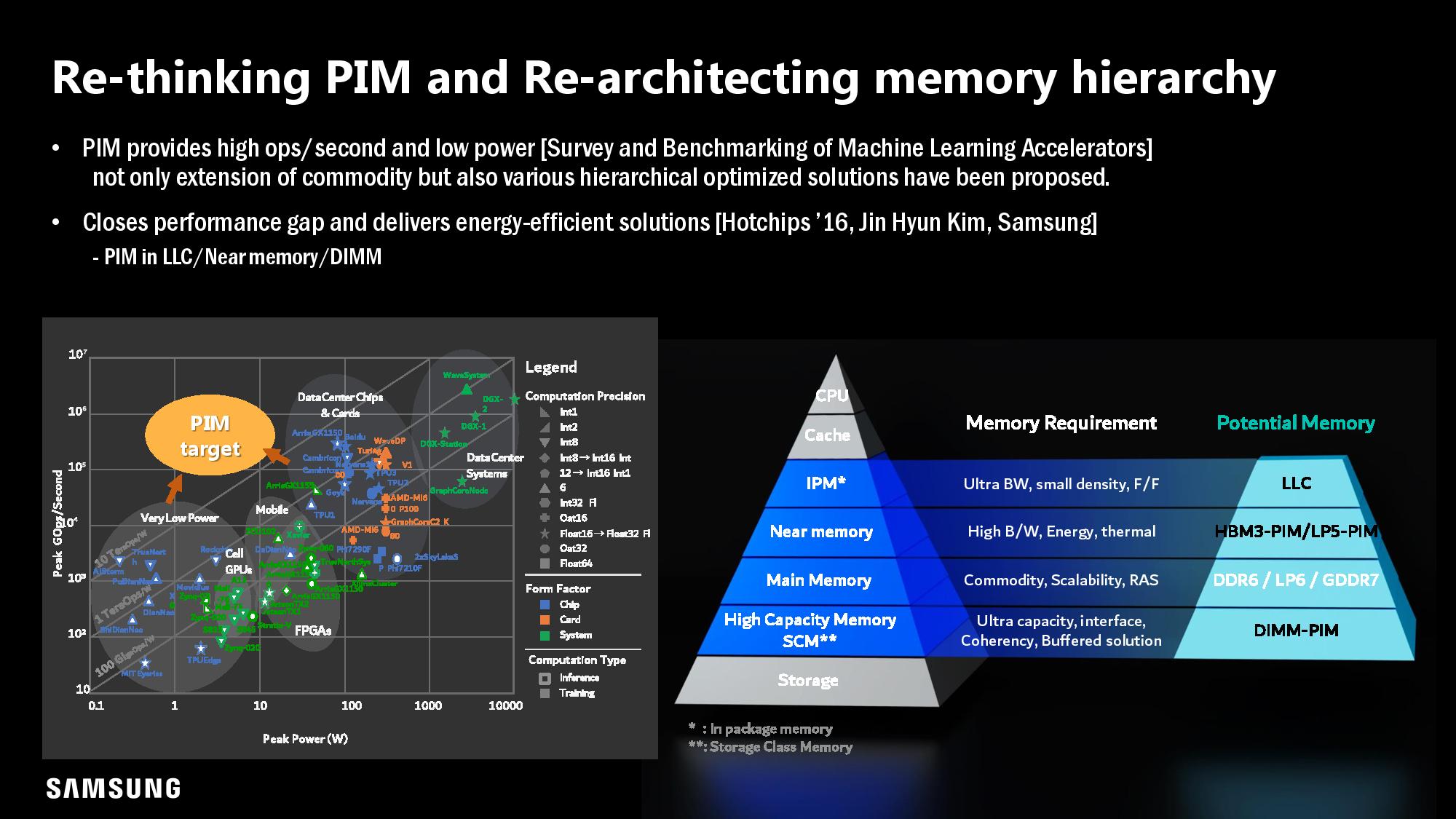

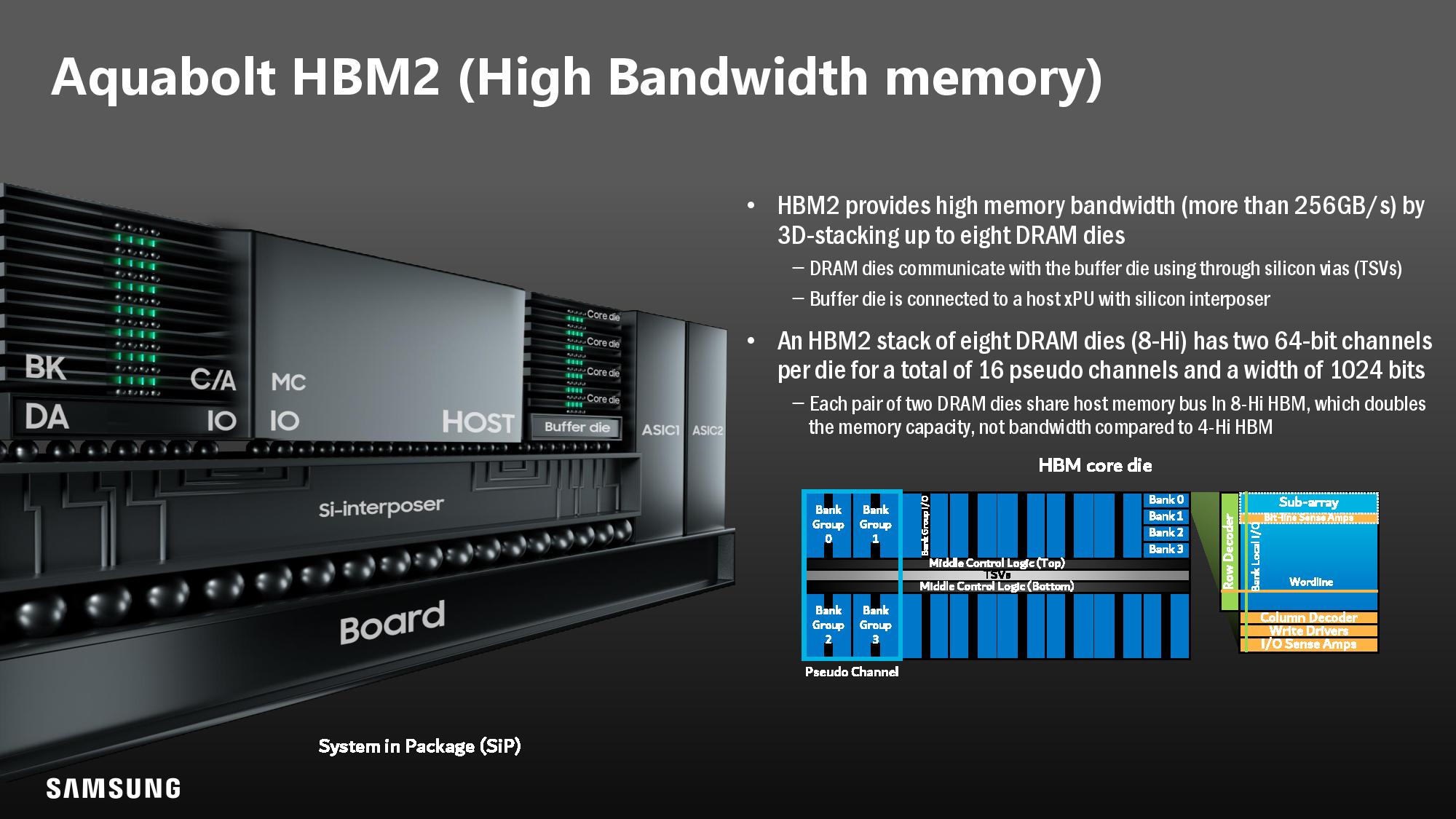

If Samsung has its way, the memory chips in future desktop PCs, laptops, or GPUs could think for themselves. At Hot Chips 33, Samsung announced that it would extend its processing-in-memory technology to DDR4 modules, GDDR6, and LPDDR5X in addition to its HBM2 chips. Earlier this year, Samsung announced its HBM2 memory with an integrated processor that can compute up to 1.2 TFLOPS for AI workloads, allowing the memory itself to perform operations usually reserved for CPUs, GPUs, ASICs, or FPGAs. Today's announcement marks further progress with that chip, and Samsung also has more powerful variants on its roadmap with next-gen HBM3. Given the rise of AI-based rendering techniques like upscaling, we could even see this tech work its way into gaming GPUs.

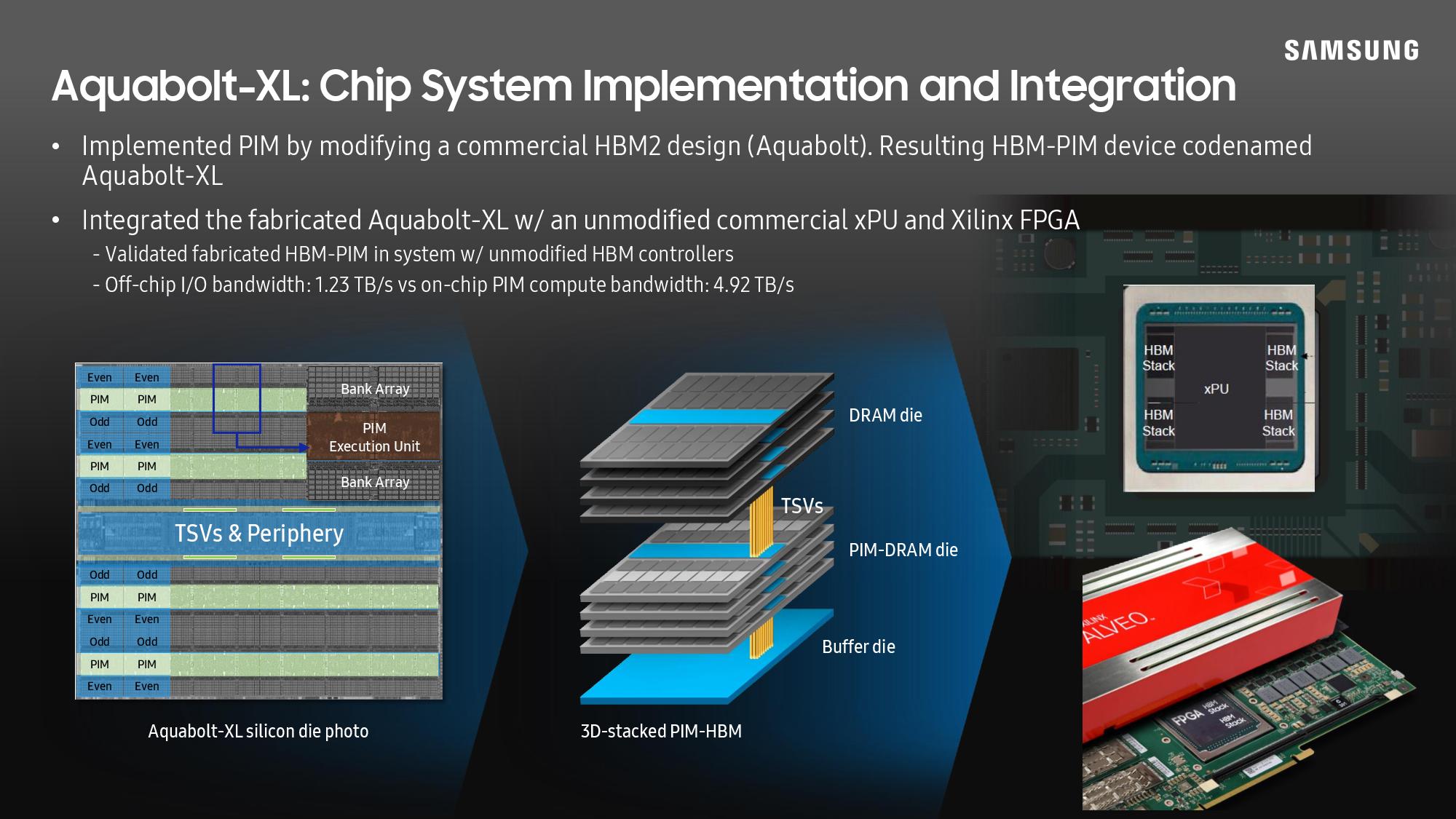

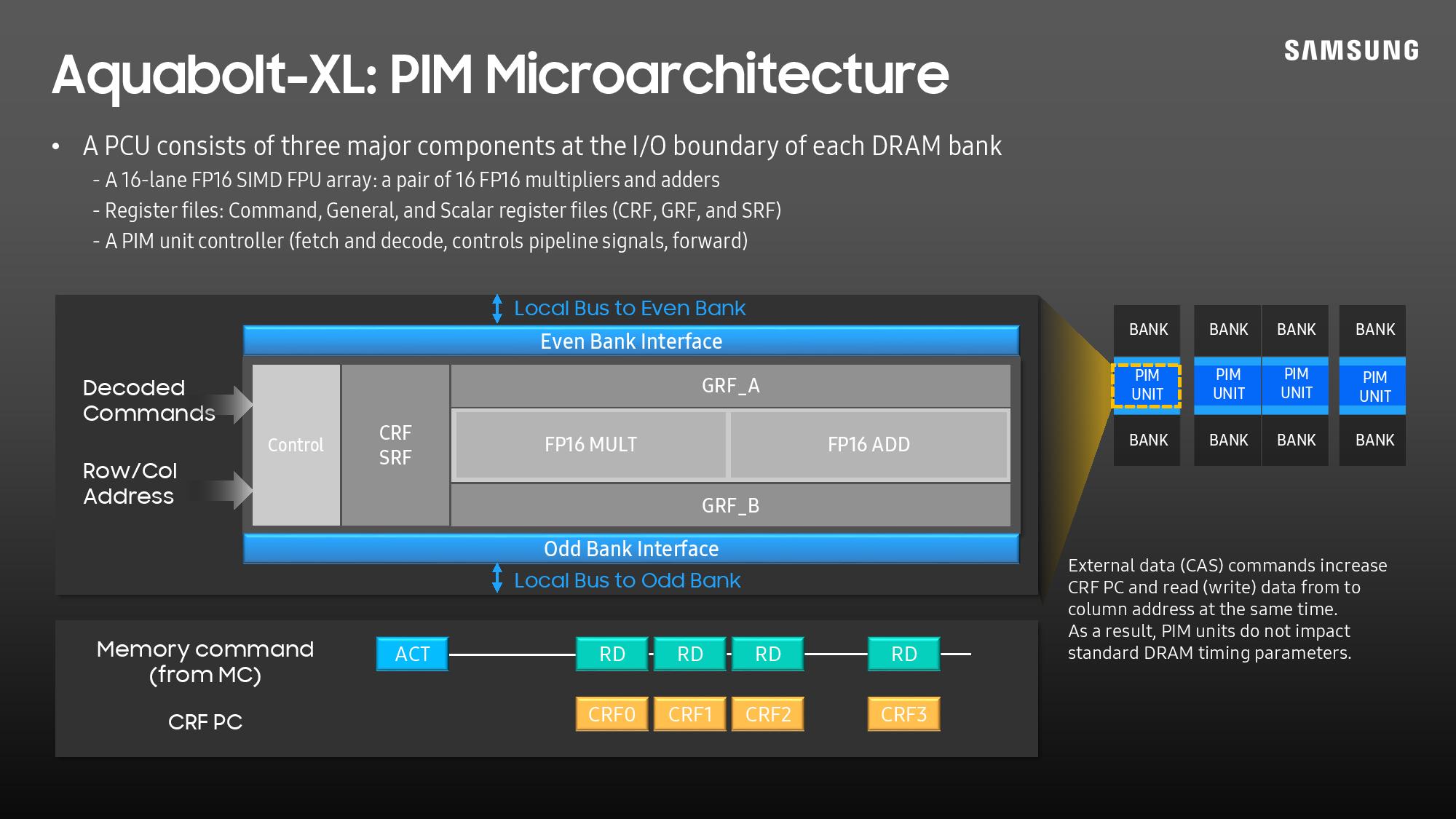

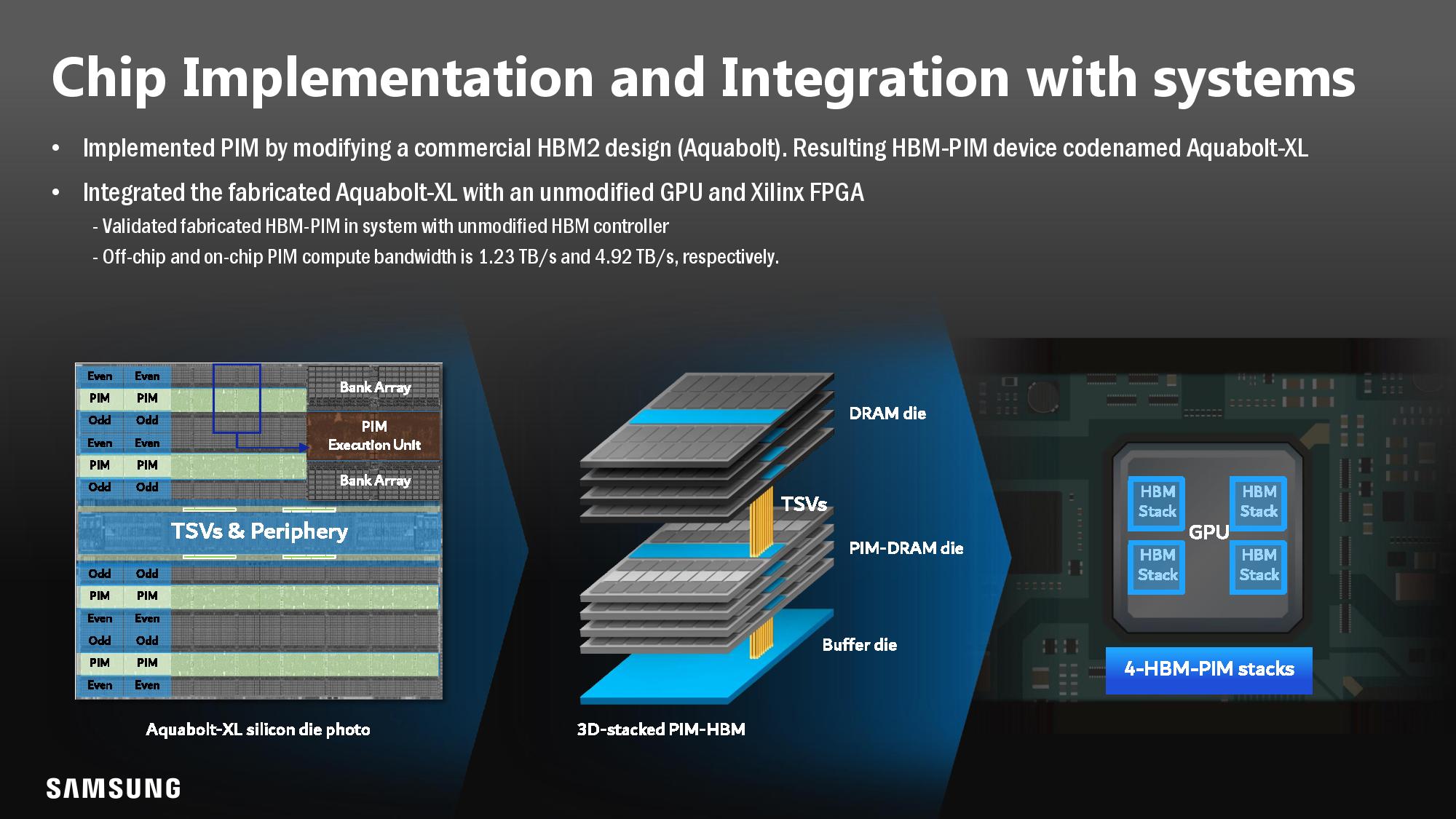

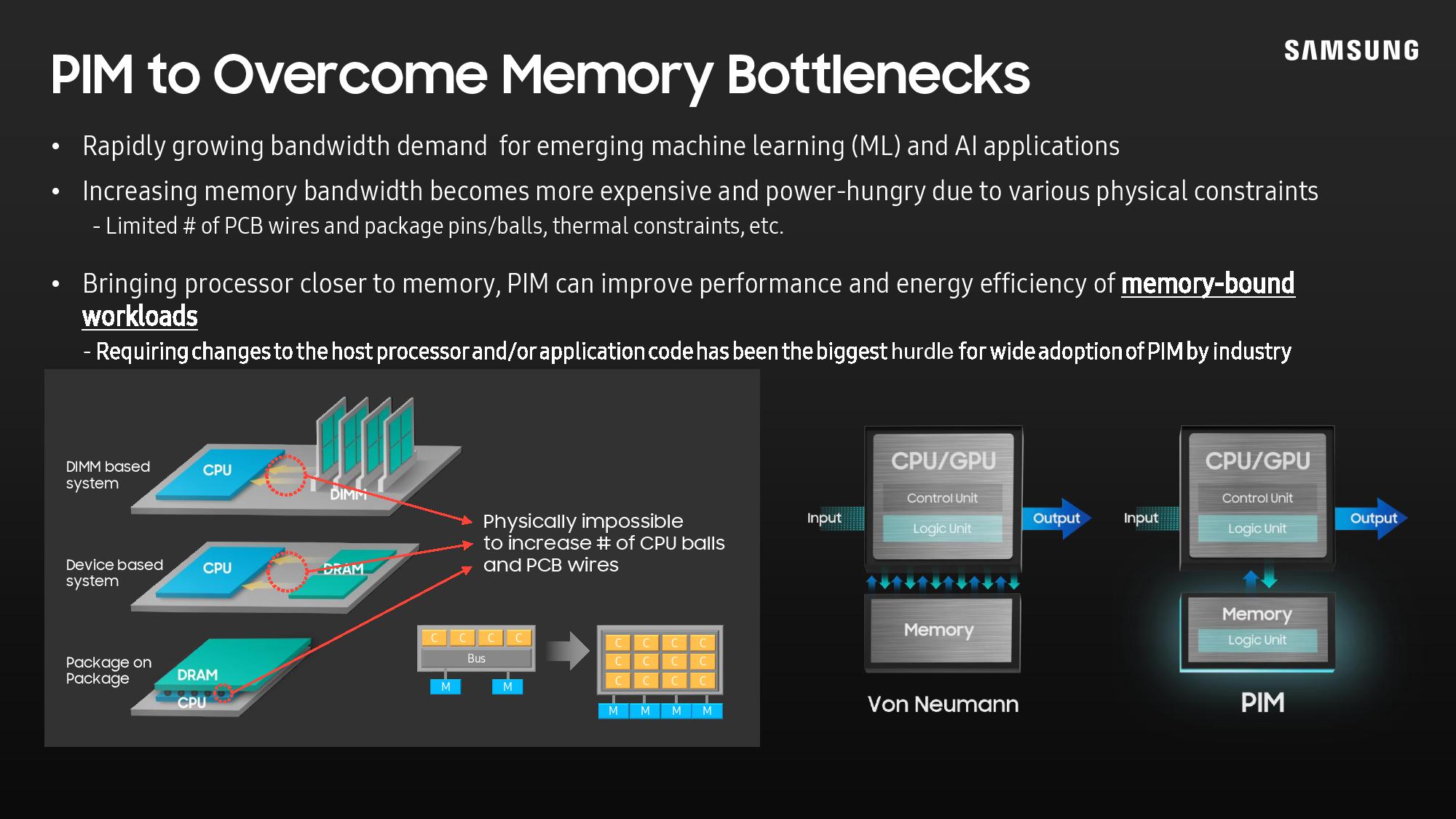

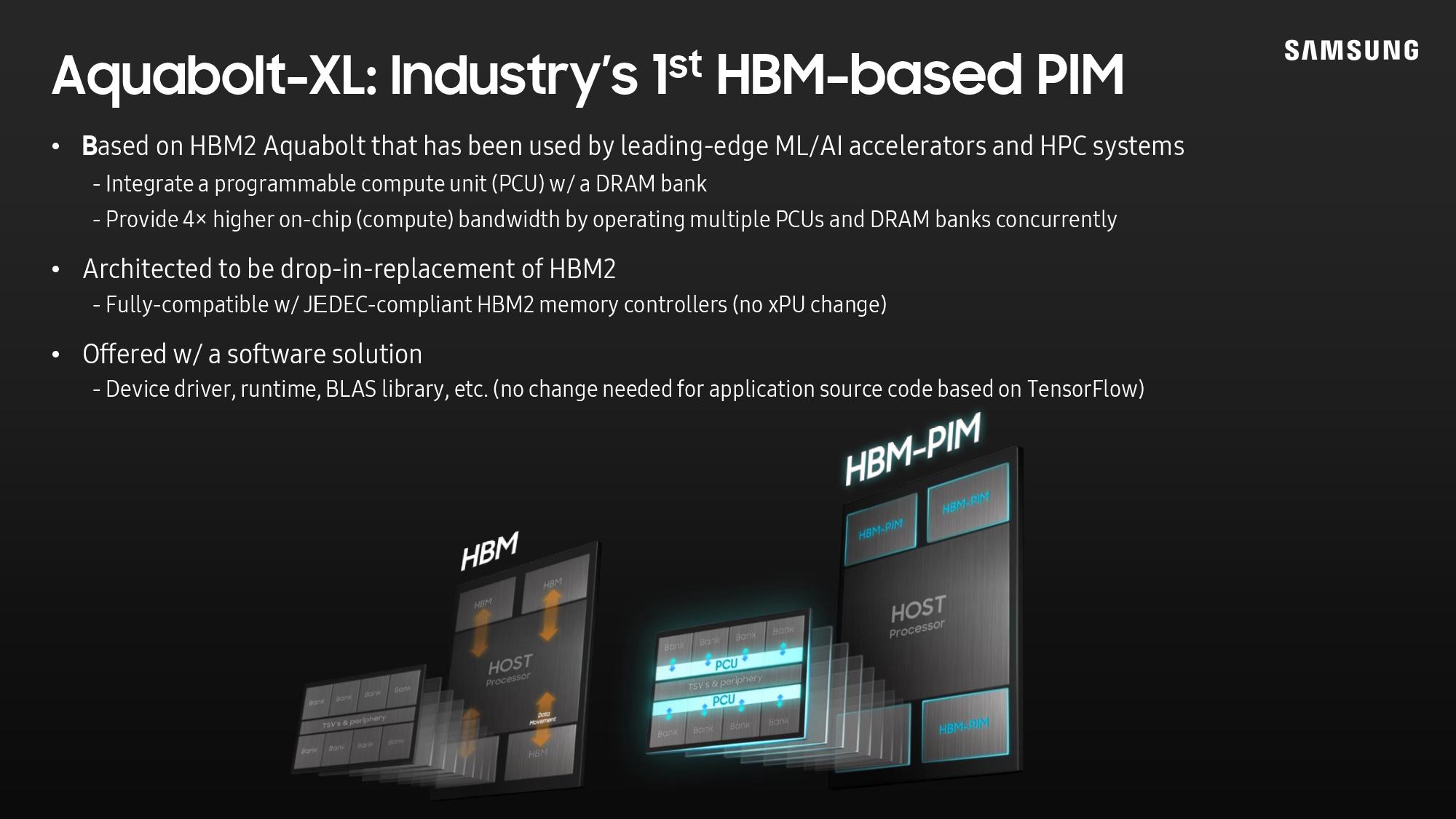

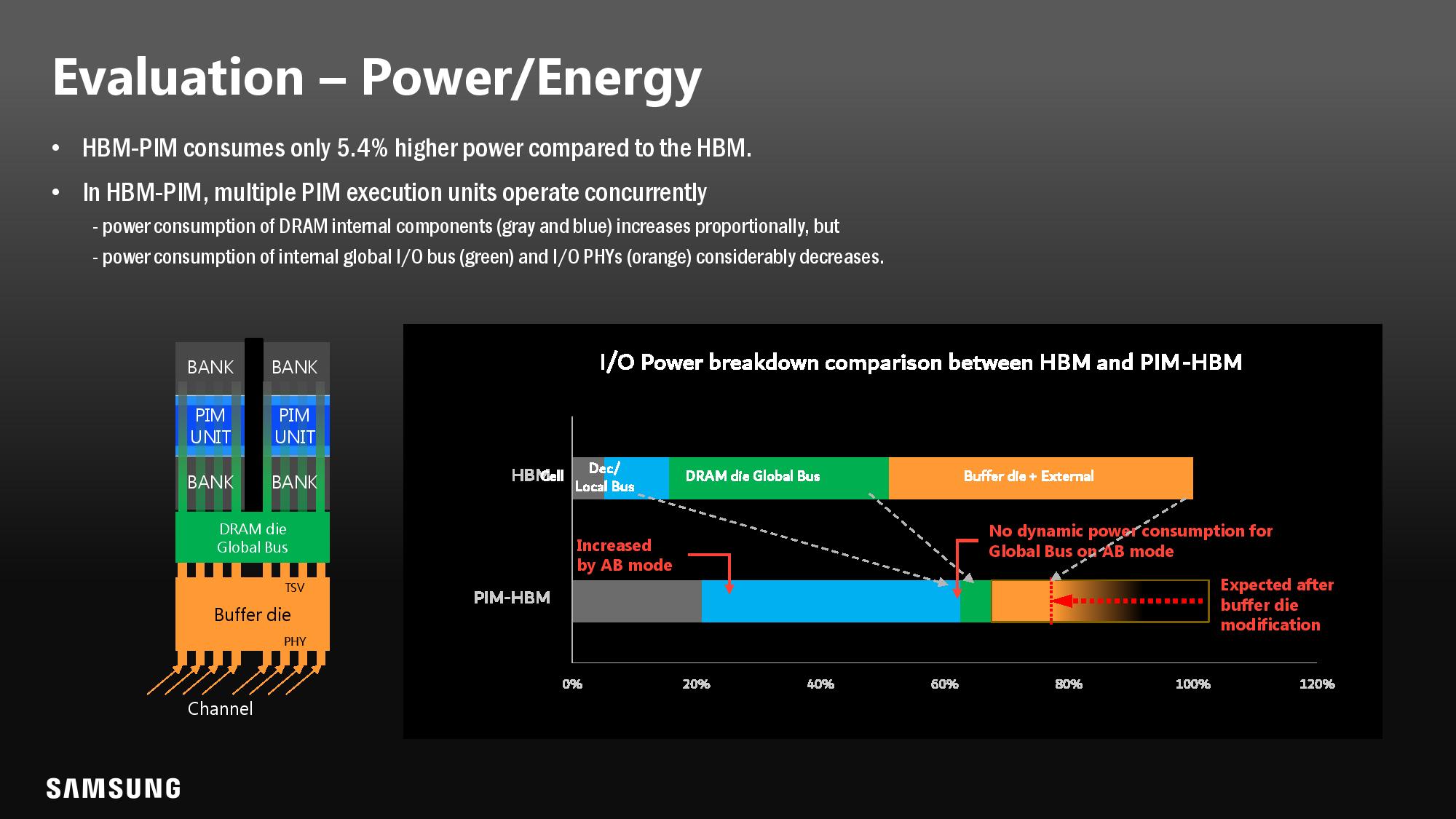

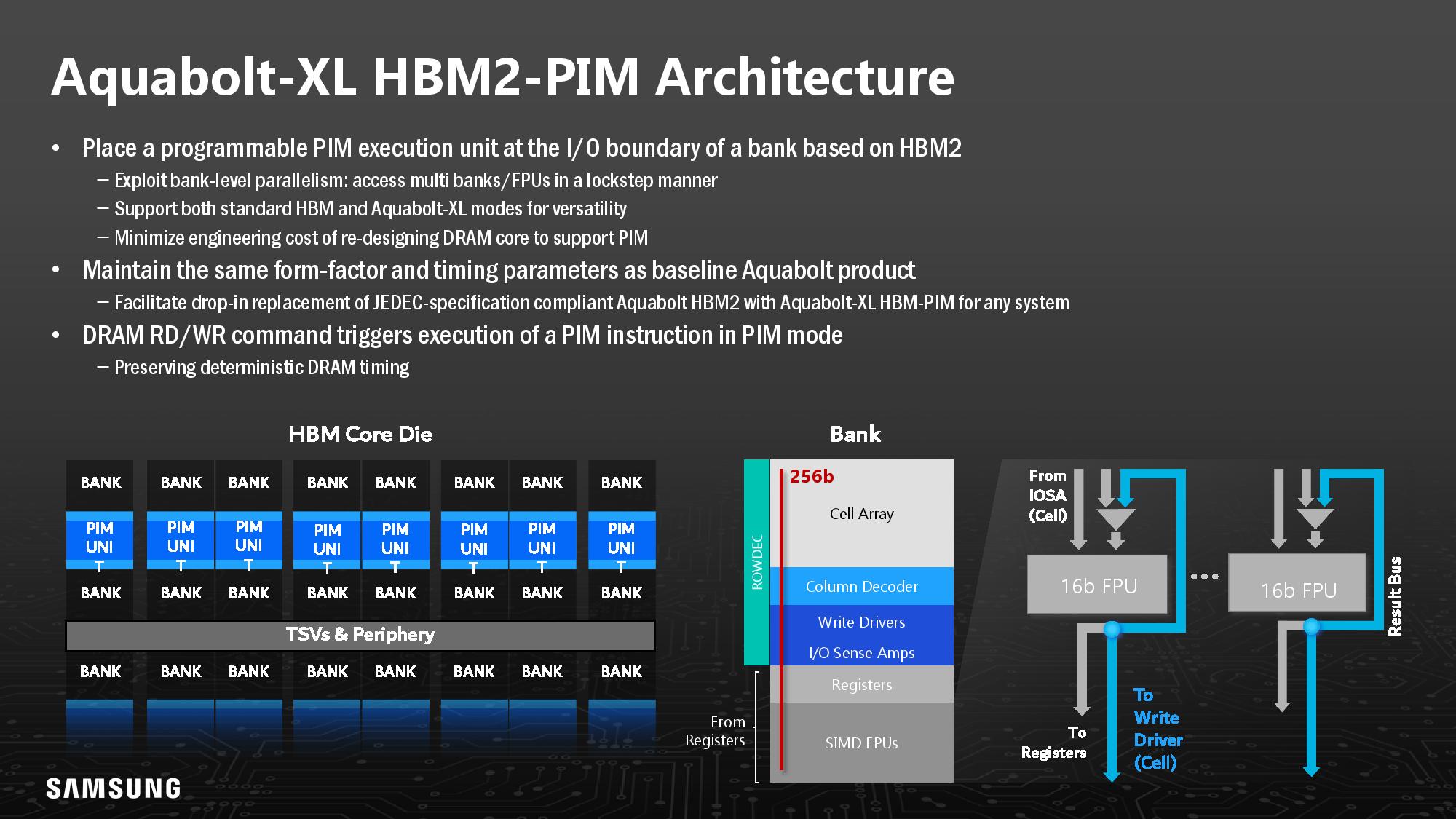

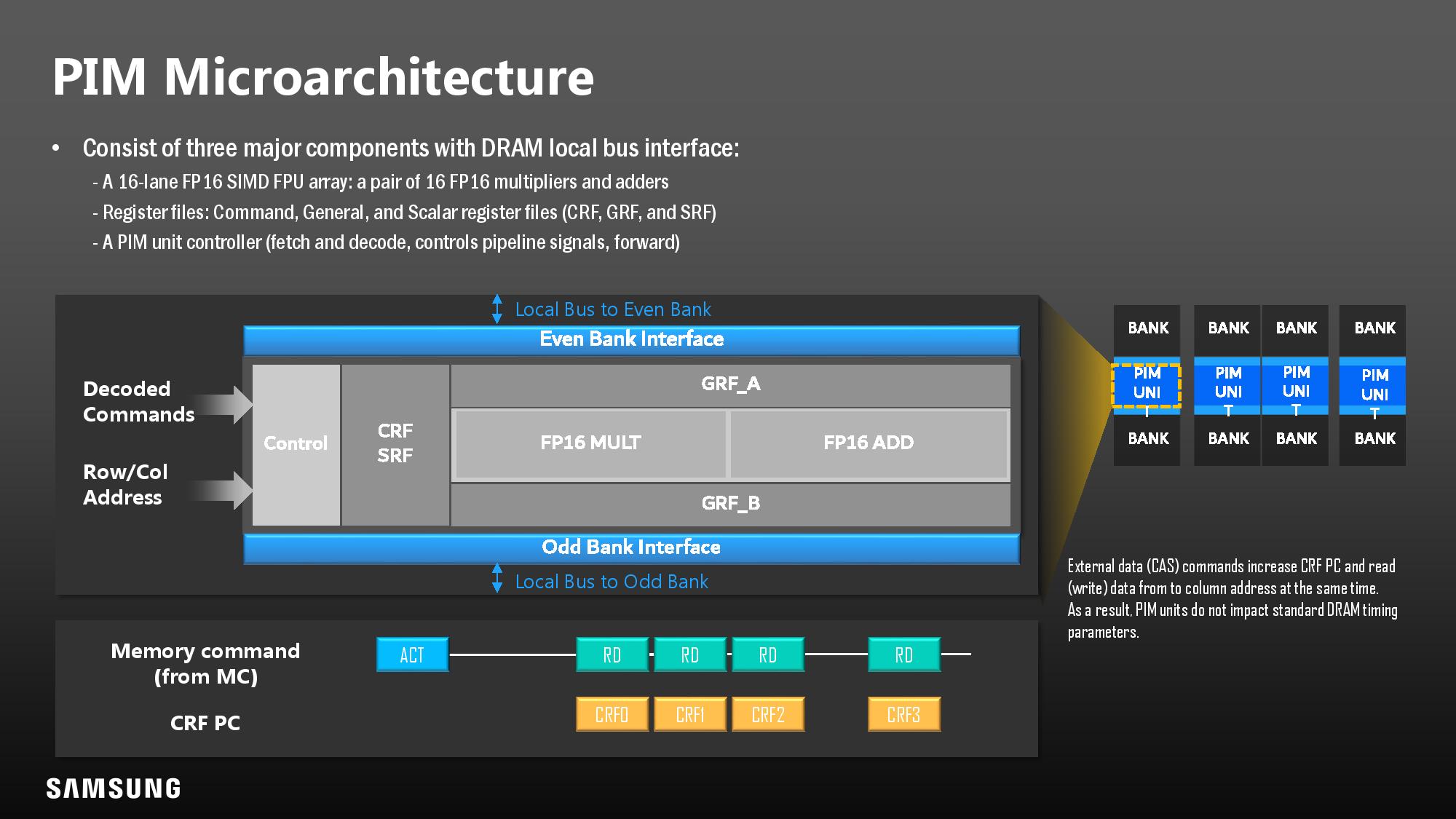

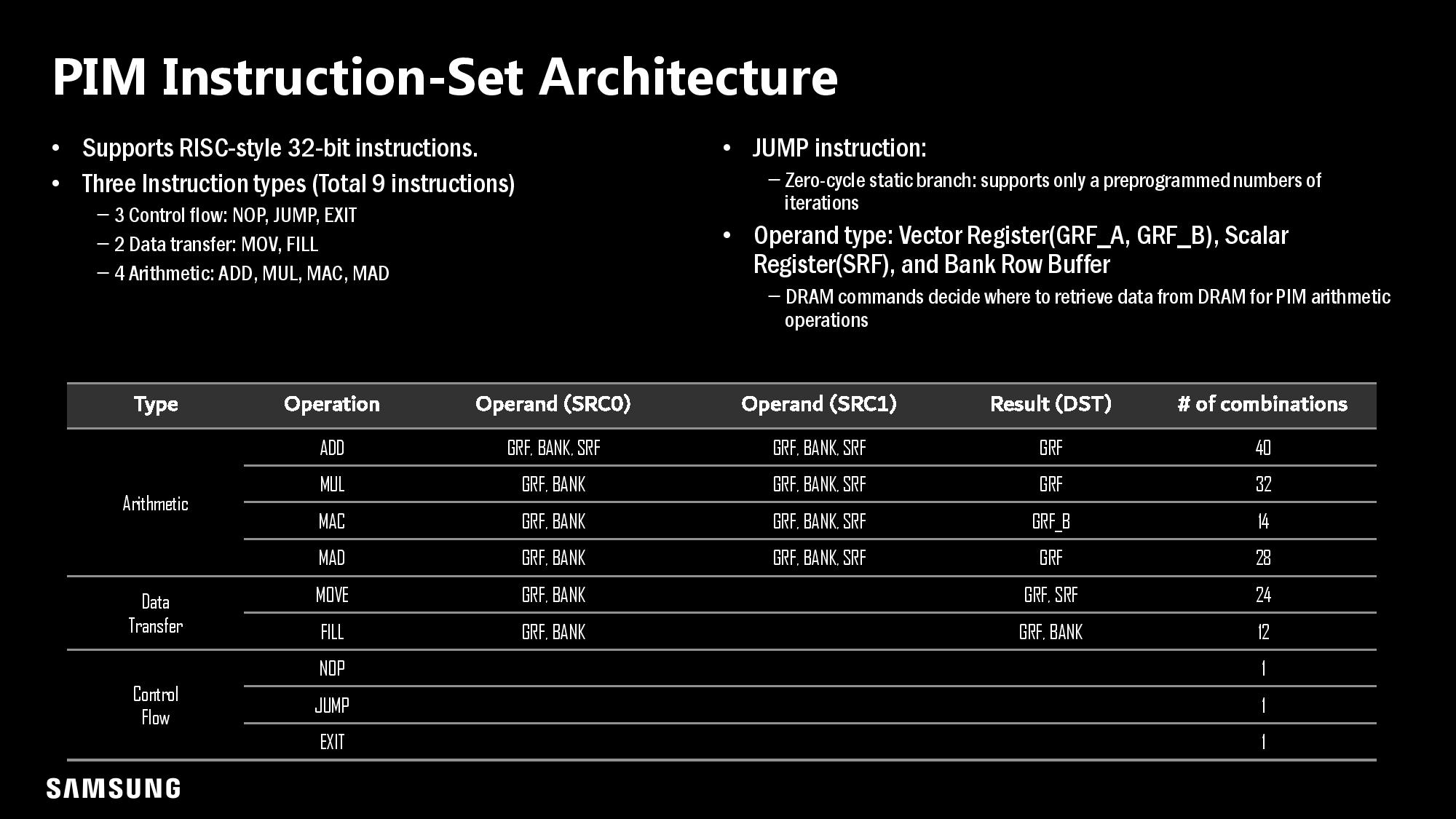

Today's announcement reveals the official branding of the Aquabolt-XL HBM2 memory, along with the AXDIMM DDR4 sticks and LPDDR5 memory that also come with embedded compute power. We covered the nitty-gritty details of the first HBM-PIM (Processing-In-Memory) chips in our earlier coverage. Put simply, the chips have an AI engine embedded inside each DRAM bank. That allows the memory itself to process data, so the system doesn't have to shuttle as much data between the memory and the processor, saving both time and power. Of course, there is a capacity tradeoff for the tech with current memory types, but Samsung says that HBM3 and future memories will have the same capacities as normal memory chips.
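
To make the data-movement argument concrete, here is a toy Python model. It is a sketch, not Samsung's design: memory is split into hypothetical `banks`, and we count only the bytes that cross the external memory bus when summing FP16 values. In the conventional path every value travels to the processor; in the PIM-style path each bank reduces its own slice and ships back a single result. The bank count, the choice of a sum, and the one-result-per-bank transfer are all illustrative assumptions.

```python
from typing import List, Tuple

FP16_BYTES = 2  # one IEEE 754 half-precision value occupies 2 bytes


def host_side_sum(banks: List[List[float]]) -> Tuple[float, int]:
    """Conventional path: every value is read out of DRAM and summed by the processor."""
    total = 0.0
    bytes_moved = 0
    for bank in banks:
        for value in bank:
            bytes_moved += FP16_BYTES  # each element crosses the memory bus
            total += value
    return total, bytes_moved


def in_bank_sum(banks: List[List[float]]) -> Tuple[float, int]:
    """Hypothetical PIM-style path: each bank reduces its own data locally,
    so only one partial result per bank crosses the memory bus."""
    total = 0.0
    bytes_moved = 0
    for bank in banks:
        partial = sum(bank)        # stands in for the compute unit next to the bank
        bytes_moved += FP16_BYTES  # only the partial result is transferred
        total += partial
    return total, bytes_moved


if __name__ == "__main__":
    banks = [[1.0] * 1024 for _ in range(16)]  # 16 hypothetical banks, 1,024 values each
    print(host_side_sum(banks))  # (16384.0, 32768)
    print(in_bank_sum(banks))    # (16384.0, 32)
```

Both paths produce the same answer, but in this model the PIM-style path moves about a thousandth of the bytes, which is where the time and power savings come from in principle. Real PIM hardware also pays for command traffic and for compute area on the DRAM die, which this toy model ignores.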

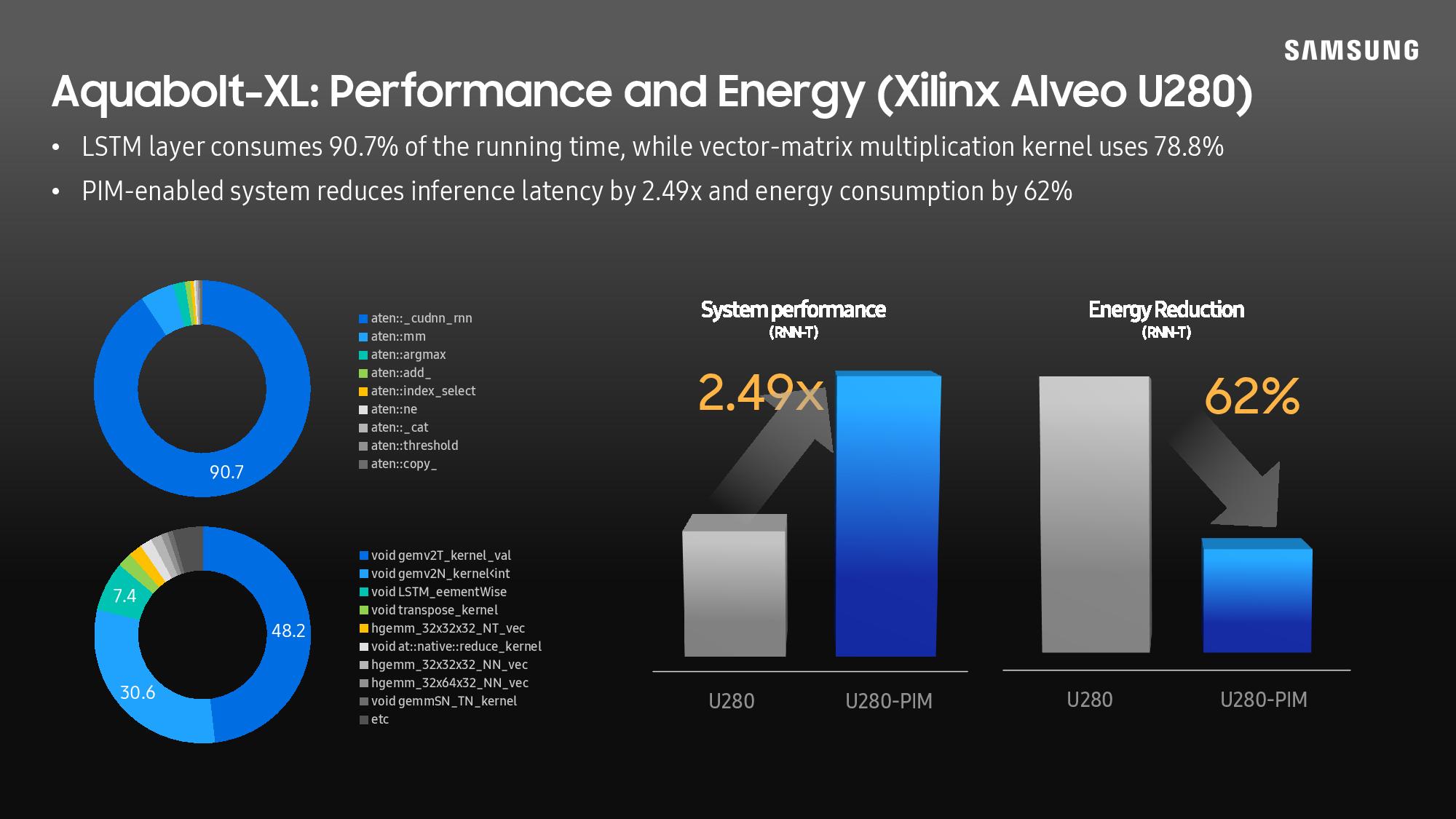

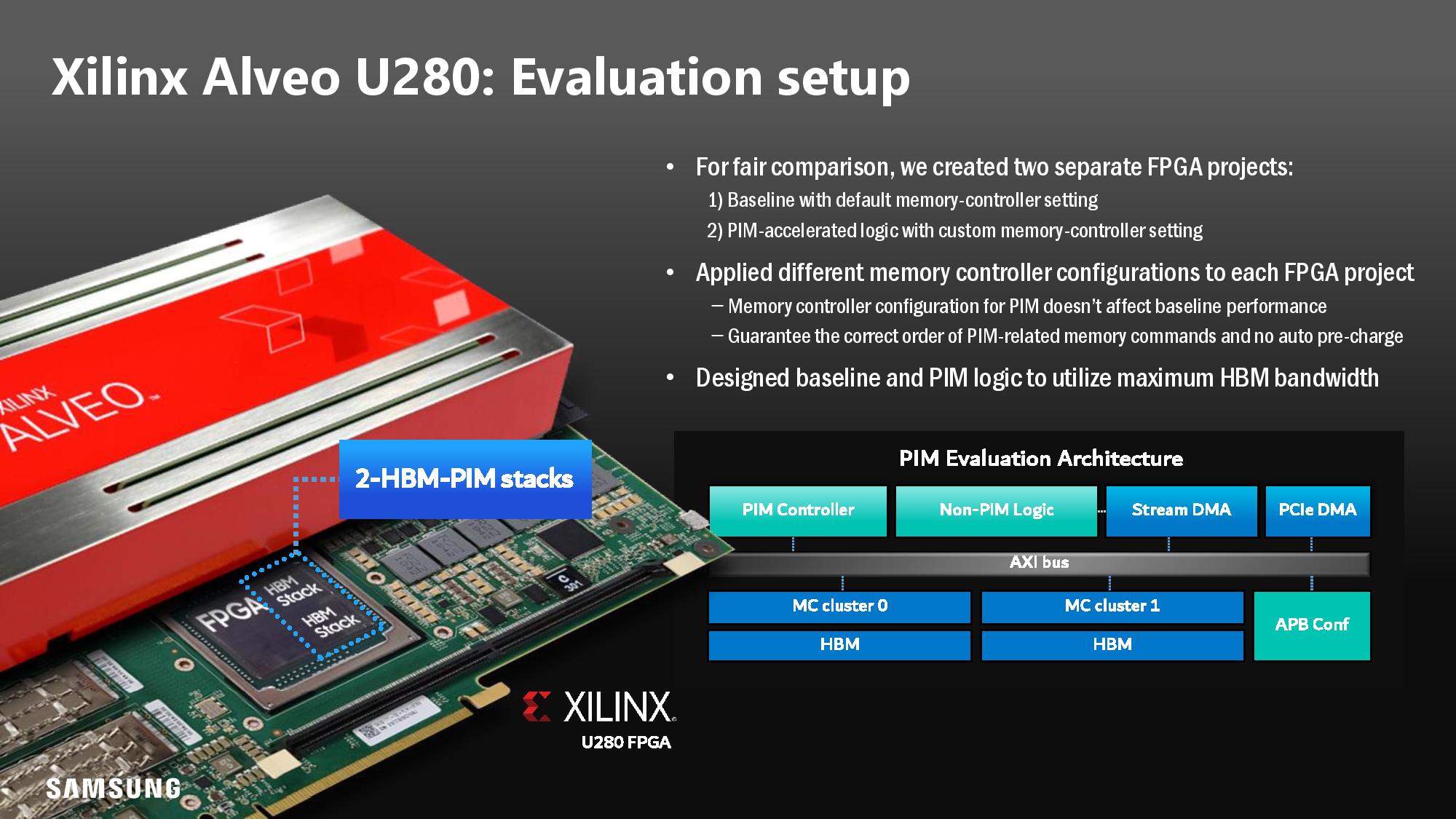

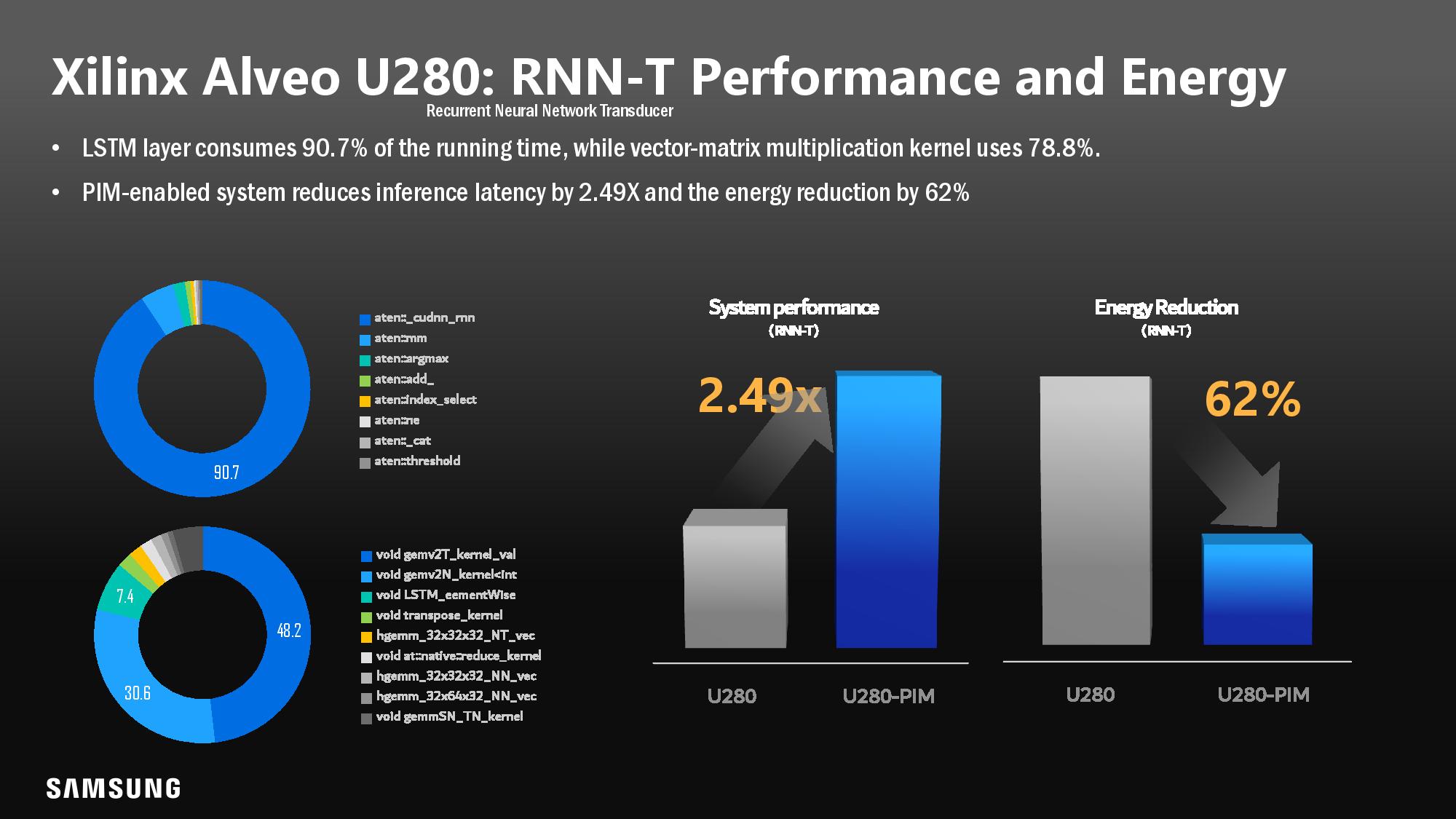

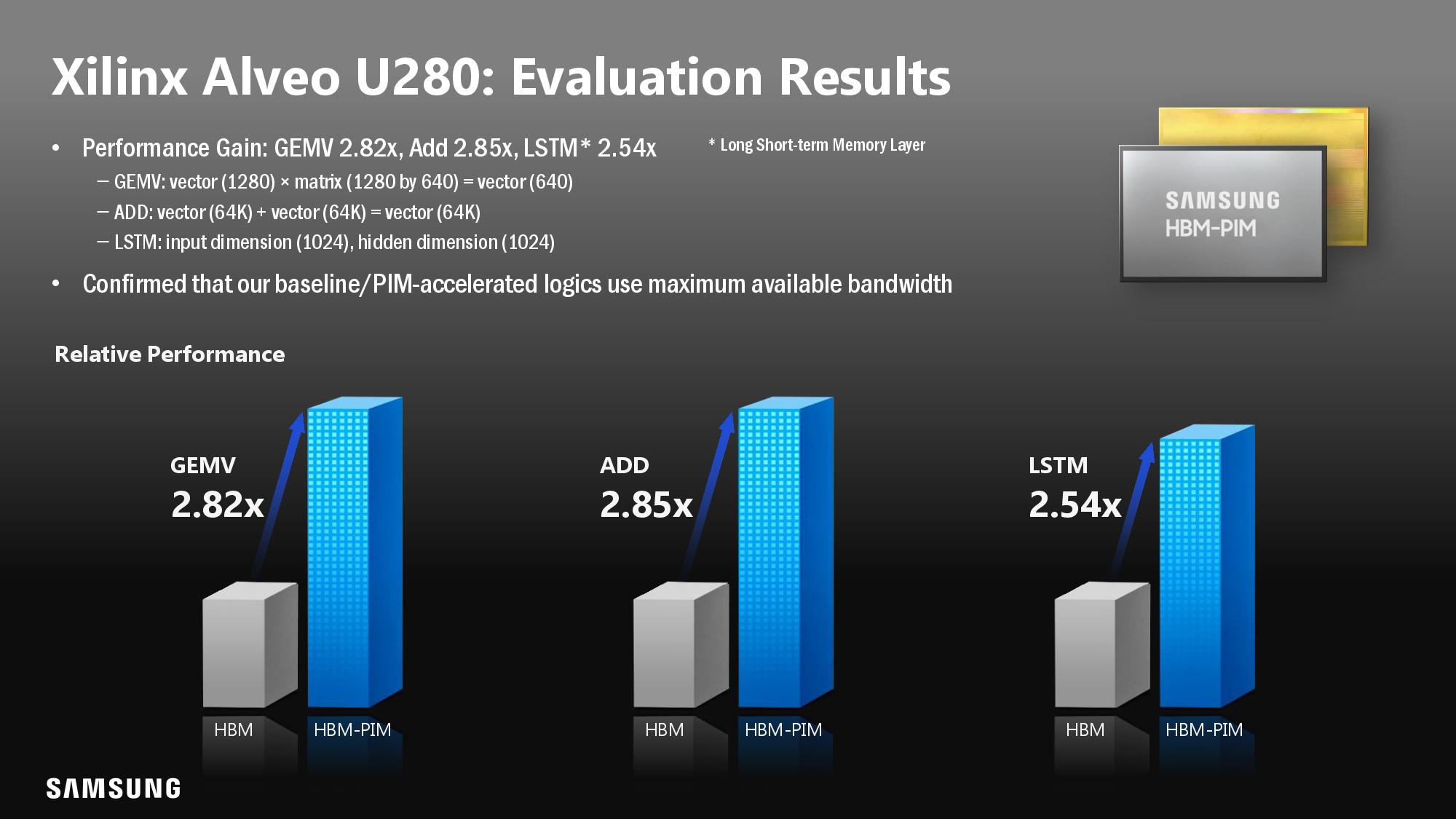

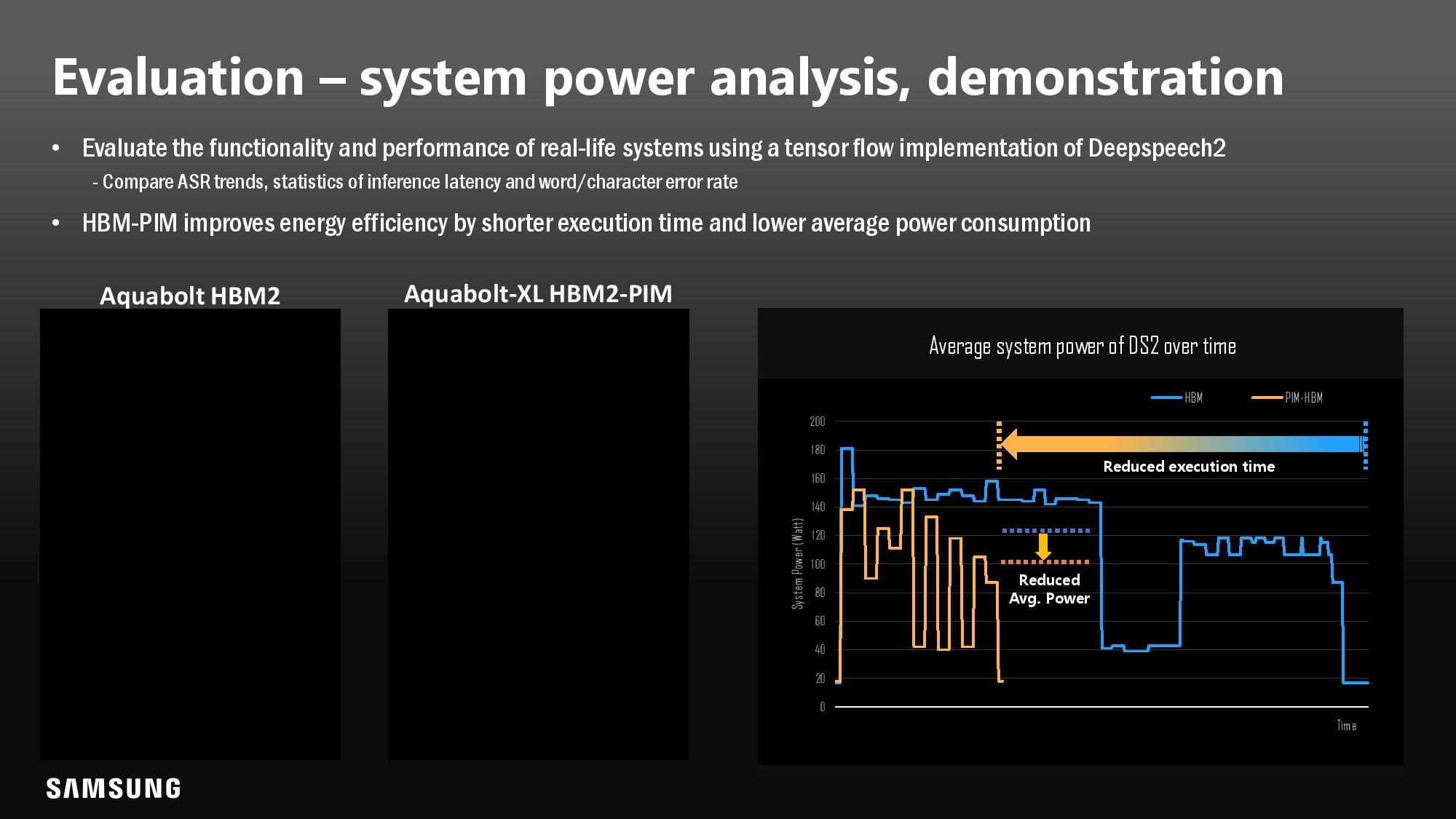

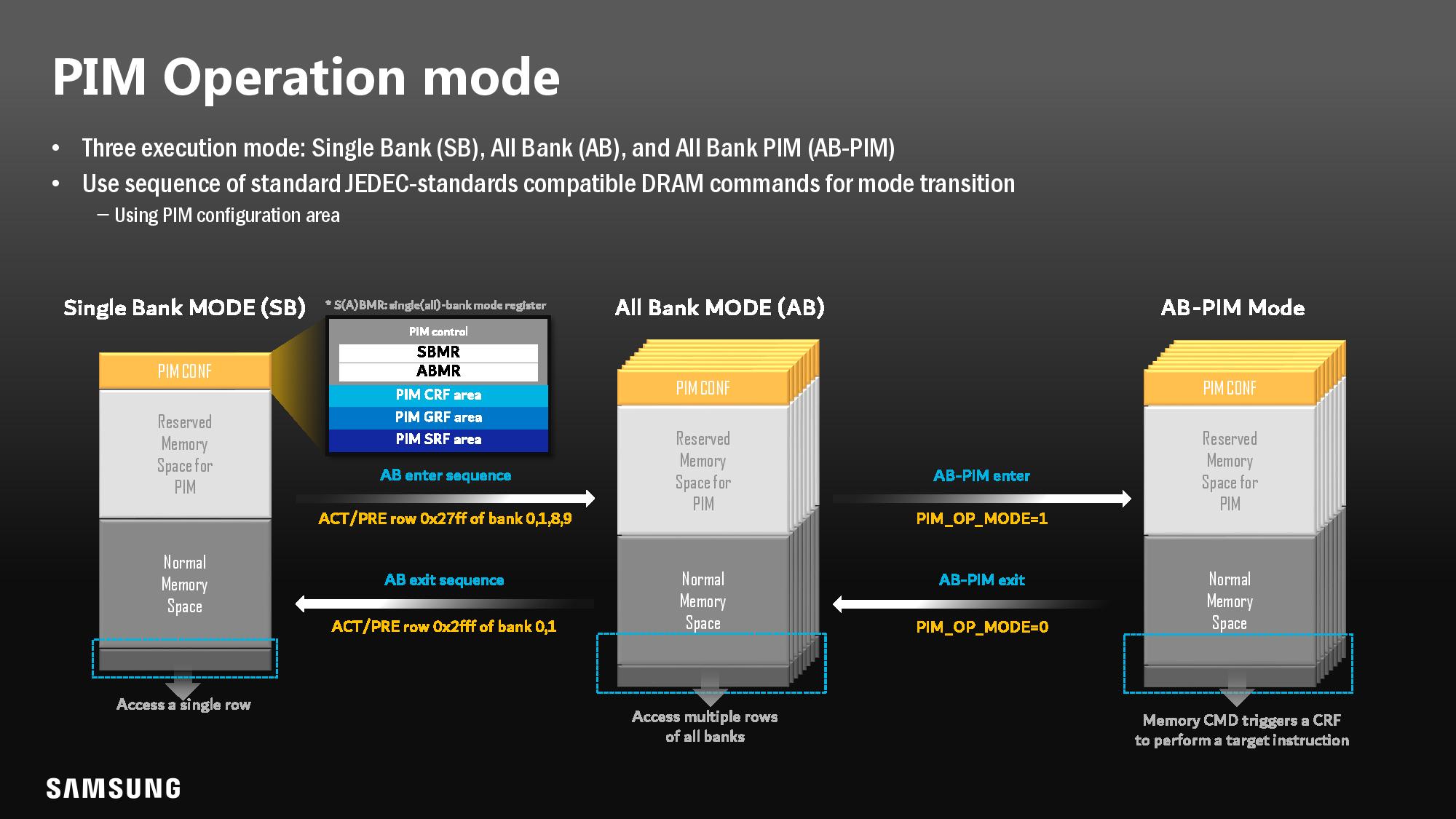

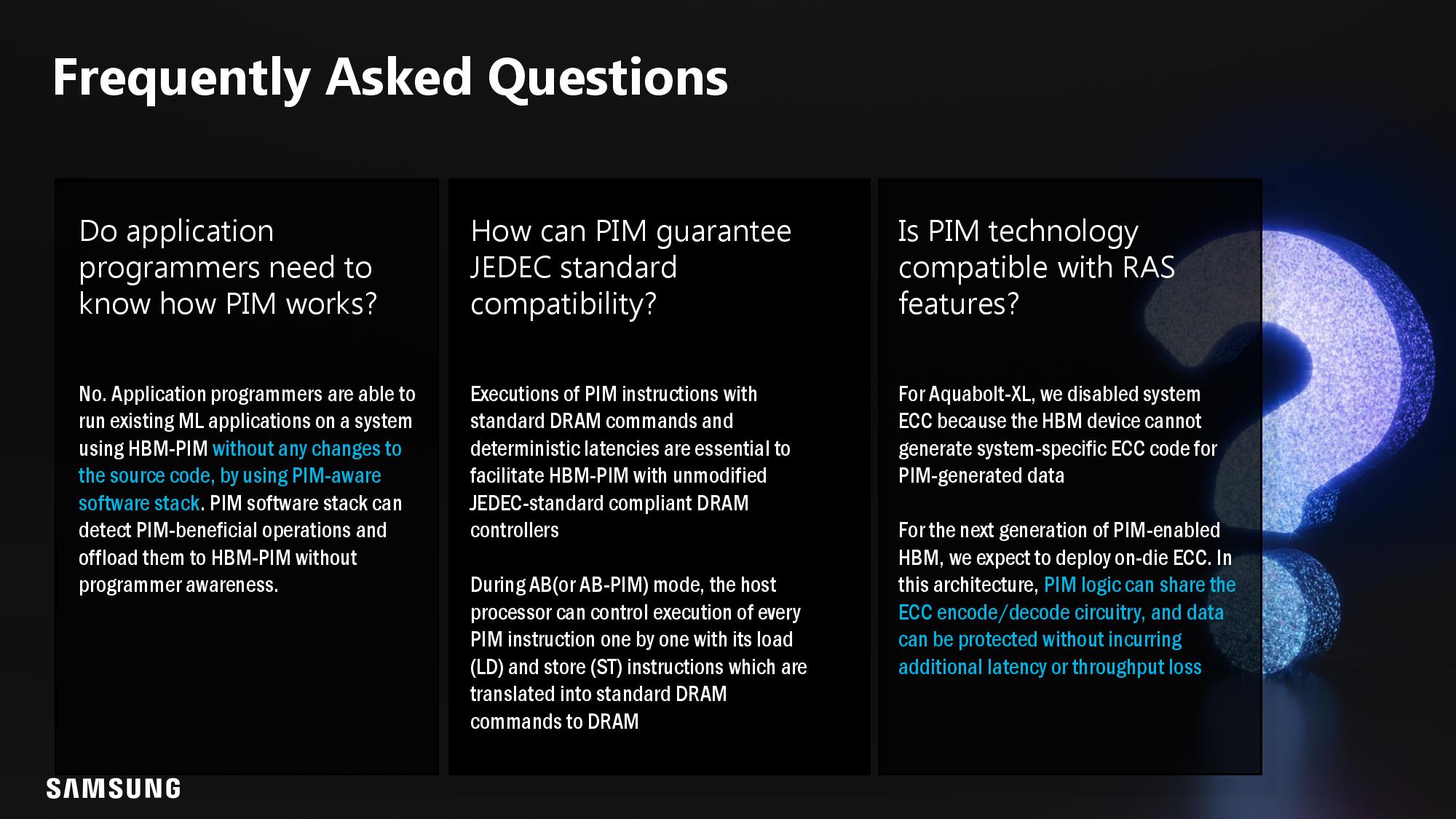

Samsung's Aquabolt-XL HBM-PIM slots right into the company's product stack and works with standard JEDEC-compliant HBM2 memory controllers, so it's a drop-in replacement for standard HBM2 memory. Samsung recently demoed this by swapping its HBM-PIM into a standard Xilinx Alveo FPGA card with no modifications to the card, netting a 2.5X system performance gain with a 62% reduction in energy consumption.
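
As a rough worked example of what those two figures imply together, assume the 62% figure is energy per unit of work and the 2.5X gain is a speedup on the same job (the demo details given here don't say how either was measured). Energy per task and runtime then scale as below, and the implied average power follows from $P = E/T$:

```latex
\begin{aligned}
\frac{E_{\text{PIM}}}{E_{\text{base}}} &= 1 - 0.62 = 0.38, \qquad
\frac{T_{\text{PIM}}}{T_{\text{base}}} = \frac{1}{2.5} = 0.40,\\[4pt]
\frac{P_{\text{PIM}}}{P_{\text{base}}} &= \frac{E_{\text{PIM}}/T_{\text{PIM}}}{E_{\text{base}}/T_{\text{base}}}
= \frac{0.38}{0.40} = 0.95.
\end{aligned}
```

Under those assumptions, the card would draw roughly the same average power as before, with the energy savings coming mostly from finishing the work sooner.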

While Samsung's PIM tech is already compatible with any standard memory controller, enhanced support from CPU vendors will yield more performance in some scenarios (for example, by not requiring as many threads to fully utilize the processing elements). Samsung tells us that it is testing HBM2-PIM with an unnamed CPU vendor for use in that vendor's future products. That could be any of several manufacturers on either the x86 or Arm side of the fence: Intel's Sapphire Rapids, AMD's Genoa, and Arm's Neoverse platforms all support HBM memory (among others).

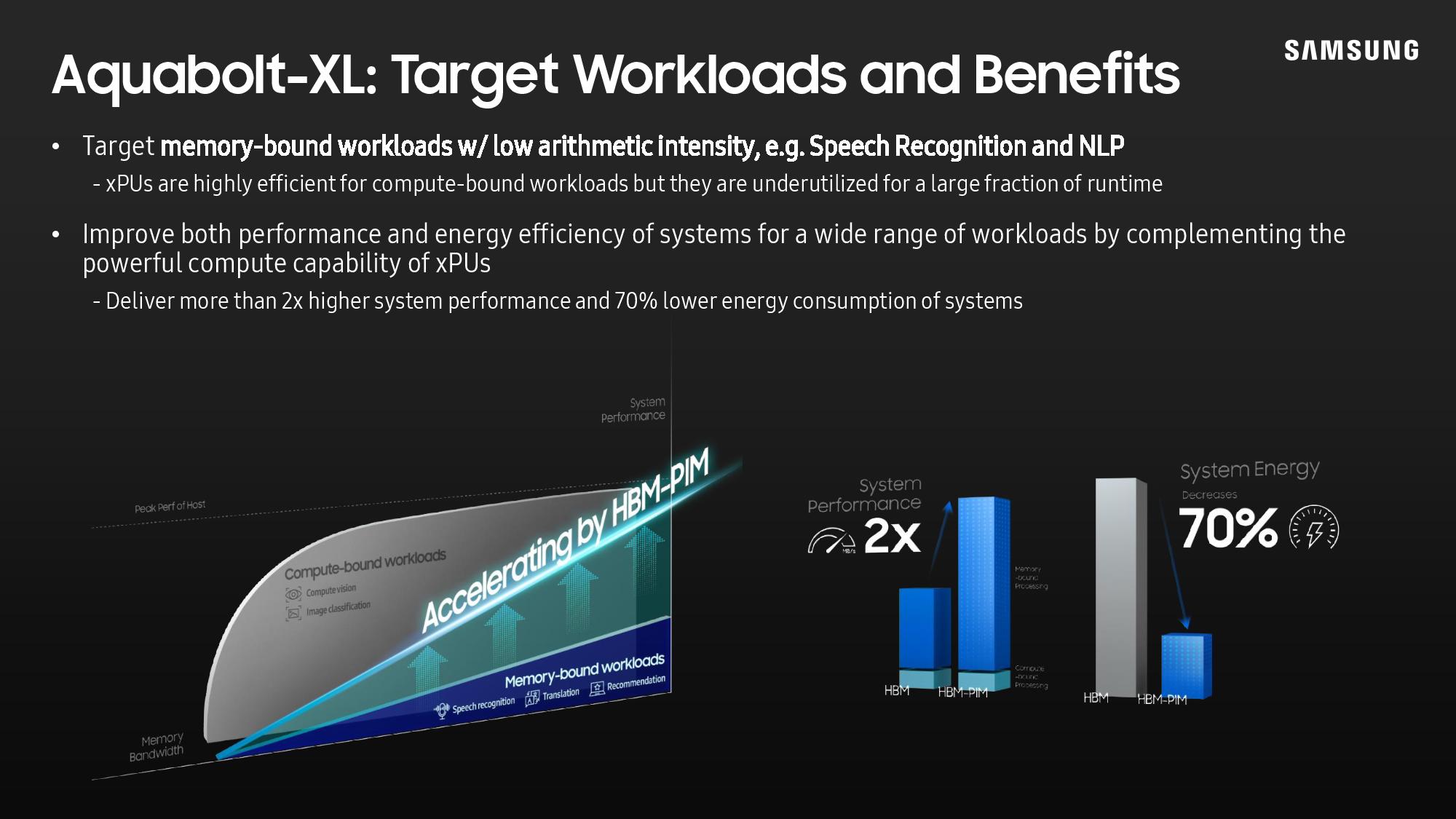

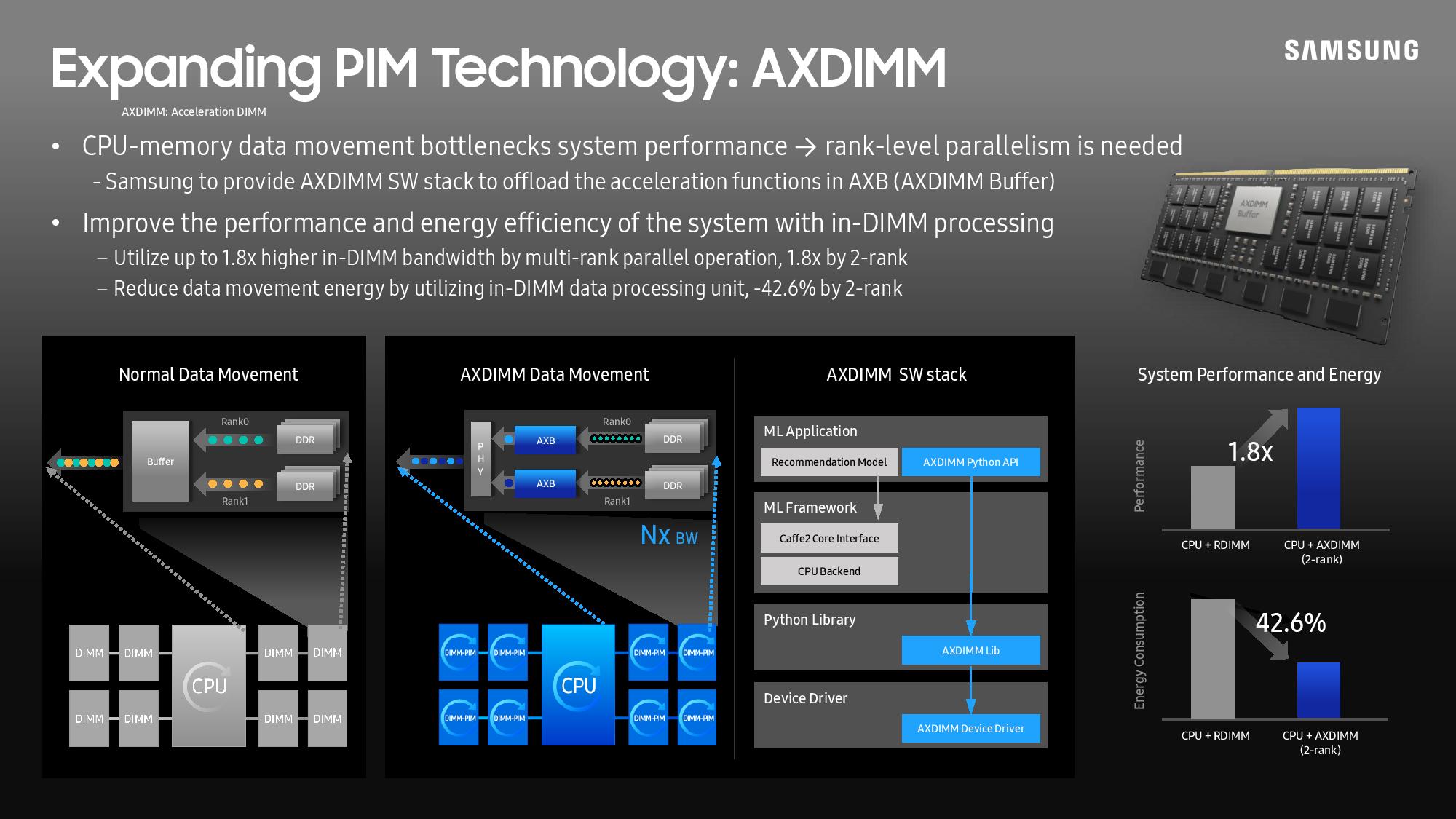

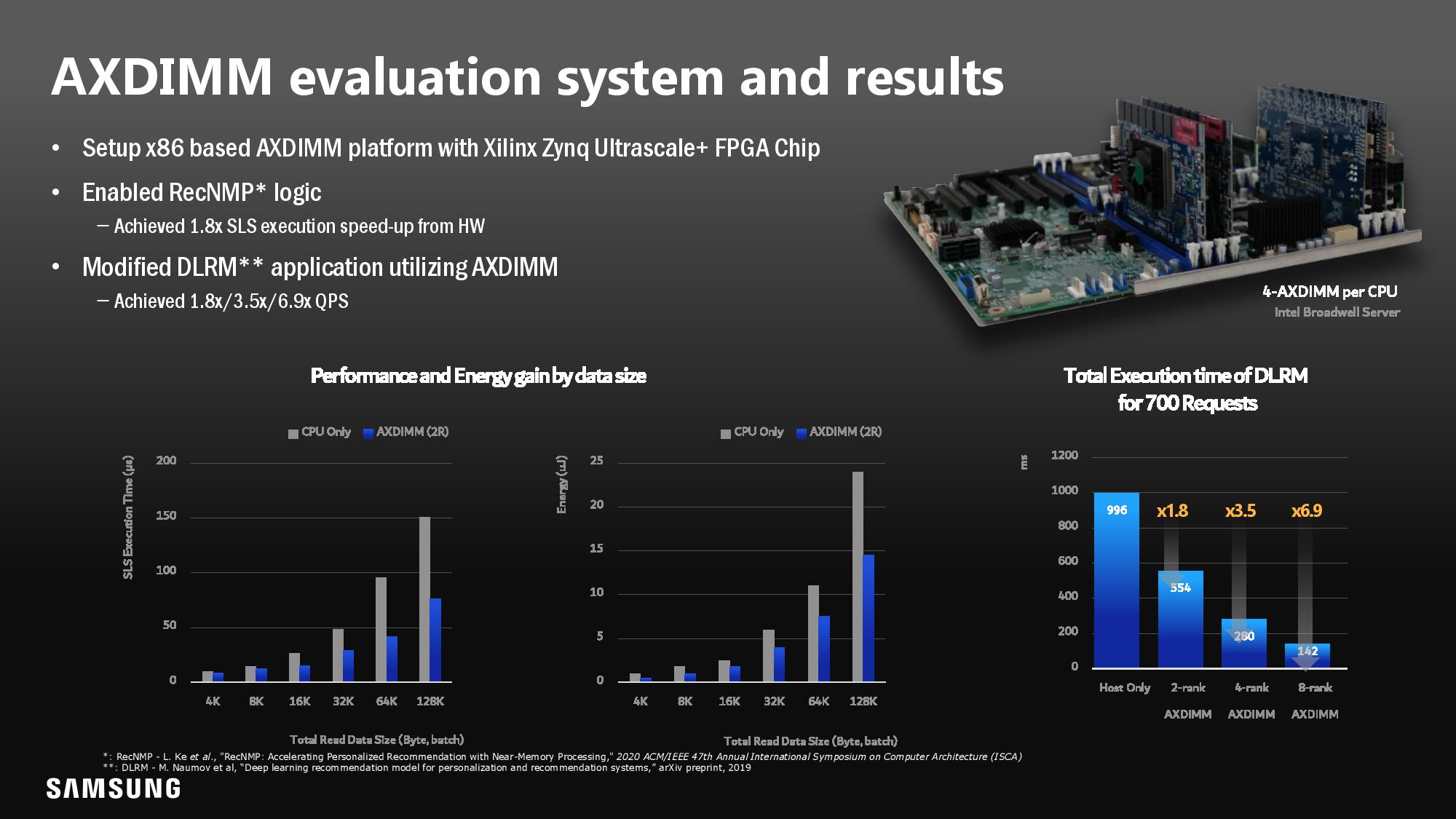

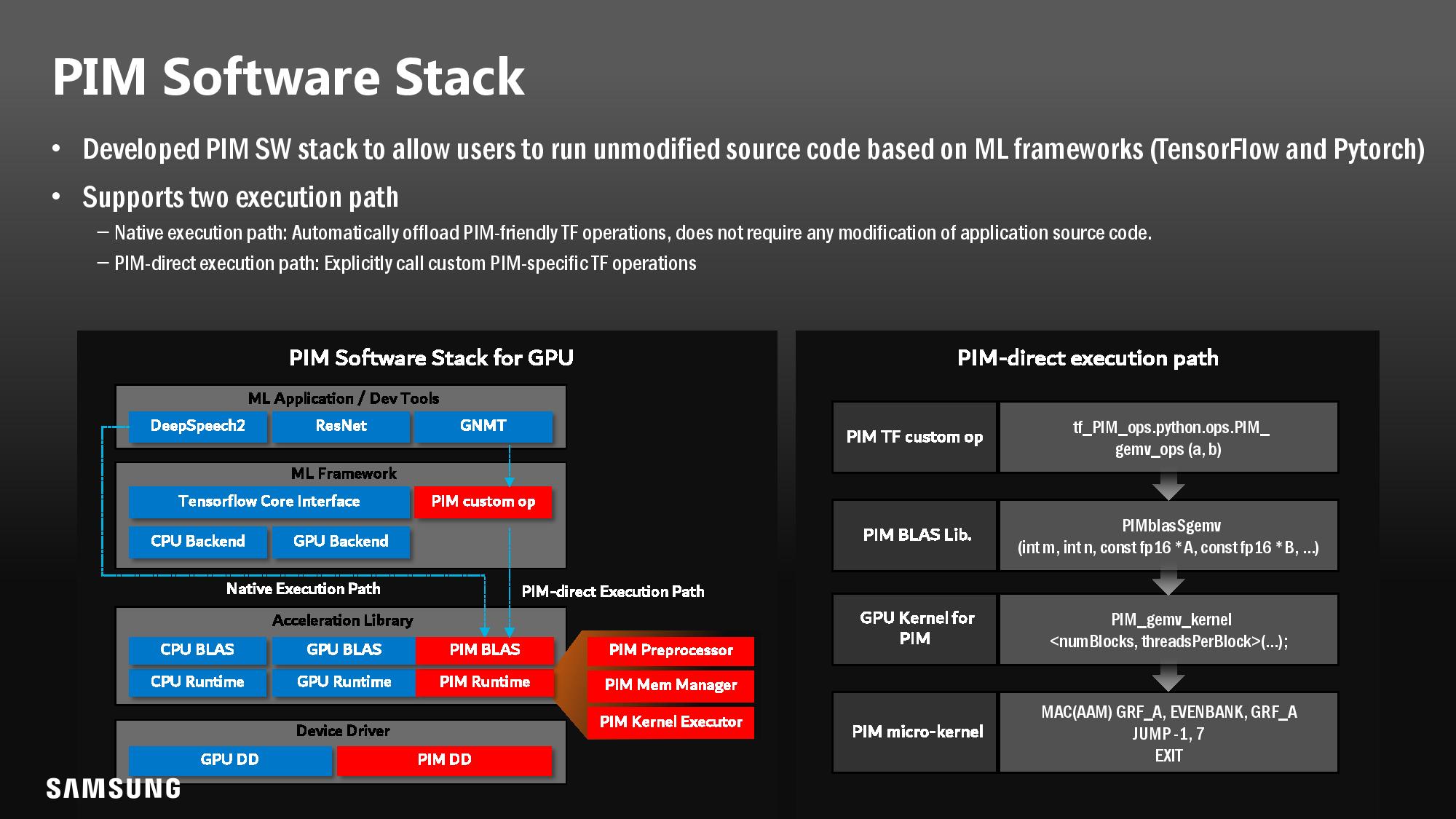

Naturally, Samsung's PIM tech is a good fit for data centers, largely because it suits memory-bound AI workloads that don't require heavy computation, like speech recognition. Still, the company envisions the tech moving into more standard climes, too. To that end, it also demoed its AXDIMM, a new acceleration DIMM prototype that performs processing in the buffer chip. Like the HBM2 chip, it can perform FP16 processing using standard TensorFlow and Python code, though Samsung is working feverishly to extend support to other types of software. Samsung says this DIMM type can drop into any DDR4-equipped server with either LRDIMMs or UDIMMs, and we imagine that DDR5 support will follow in due course.
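
Why do such workloads count as memory-bound? As an illustration (our own pick, not a workload Samsung named), consider pooling rows of an FP16 embedding table, a common step in recommendation models. The sketch below emulates FP16 storage and accumulation with the `struct` module's 'e' (half-precision) format and counts bytes read versus additions performed. The table, its size, and the `embedding_bag_sum` helper are hypothetical.

```python
import struct
from typing import List, Sequence, Tuple


def fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision value via struct's 'e' format."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def embedding_bag_sum(
    table: List[List[float]], indices: Sequence[int]
) -> Tuple[List[float], int, int]:
    """Gather rows of an FP16 embedding table and sum them, accumulating in FP16.

    Returns the pooled vector, the bytes read from the table, and the number of
    FP16 additions performed, so arithmetic intensity (FLOPs per byte) follows.
    """
    dim = len(table[0])
    pooled = [0.0] * dim
    bytes_read = 0
    flops = 0
    for i in indices:
        row = table[i]
        bytes_read += 2 * dim  # each FP16 element is 2 bytes
        for d in range(dim):
            pooled[d] = fp16(pooled[d] + row[d])  # round the running sum to FP16
            flops += 1
    return pooled, bytes_read, flops


if __name__ == "__main__":
    # Hypothetical 8-row, 4-wide table of FP16 values.
    table = [[fp16(0.5 * r + 0.25 * d) for d in range(4)] for r in range(8)]
    pooled, bytes_read, flops = embedding_bag_sum(table, [1, 3, 5])
    print(pooled)                           # [4.5, 5.25, 6.0, 6.75]
    print(flops / bytes_read, "FLOP/byte")  # 0.5 FLOP/byte
```

At half an addition per byte fetched, the processor spends most of its time waiting on memory rather than computing, which is the situation where moving simple arithmetic next to the DRAM can pay off.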

The company says its tests (conducted on a Facebook AI workload) found a 1.8X increase in performance, a 42.6% reduction in energy consumption, and a 70% reduction in tail latency with a 2-rank kit, all of which is very impressive—especially considering that Samsung plugged the DIMMs into a standard server without modifications. Samsung is already testing this in customer servers, so we can expect this tech to come to market in the near future.

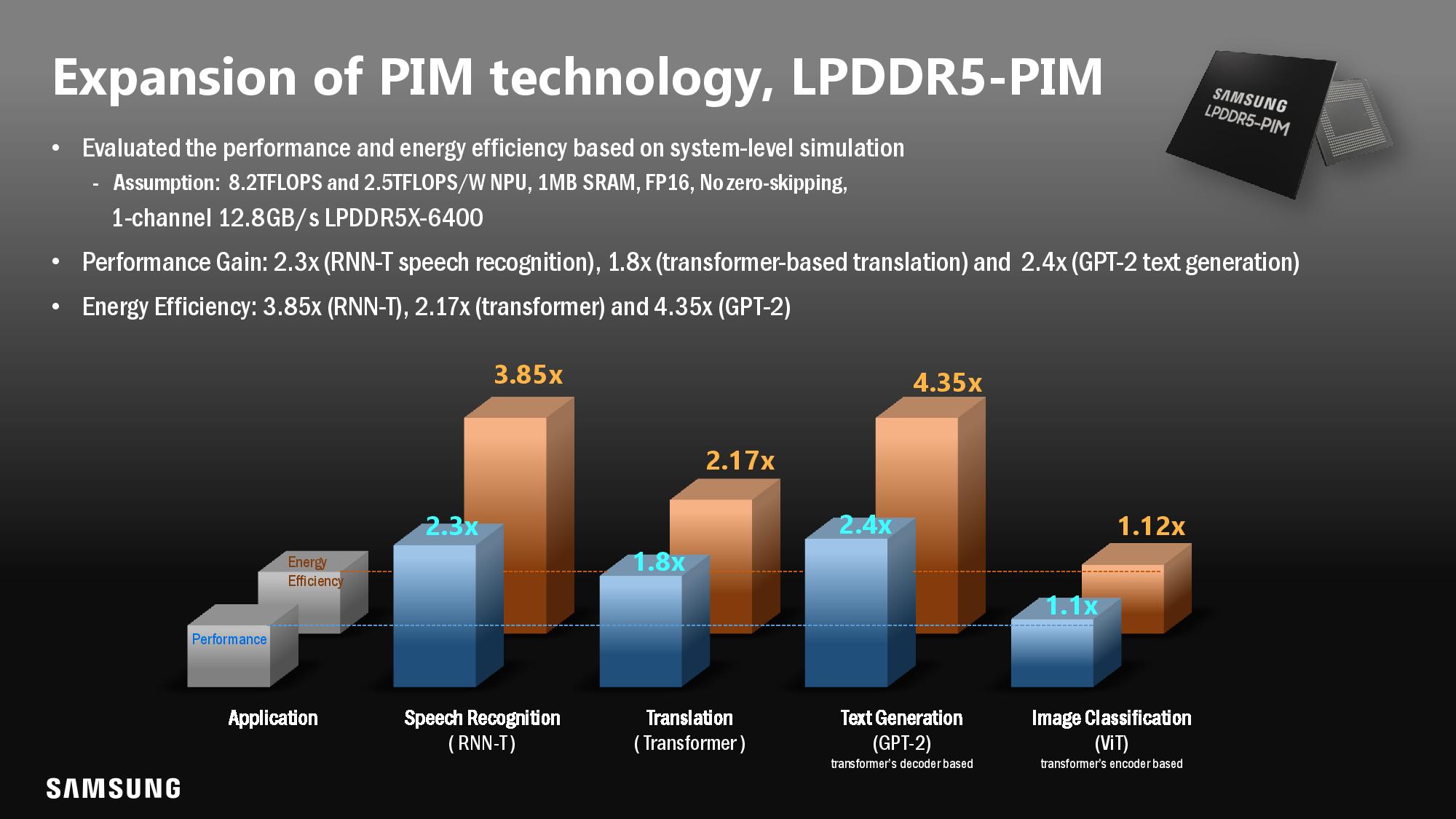

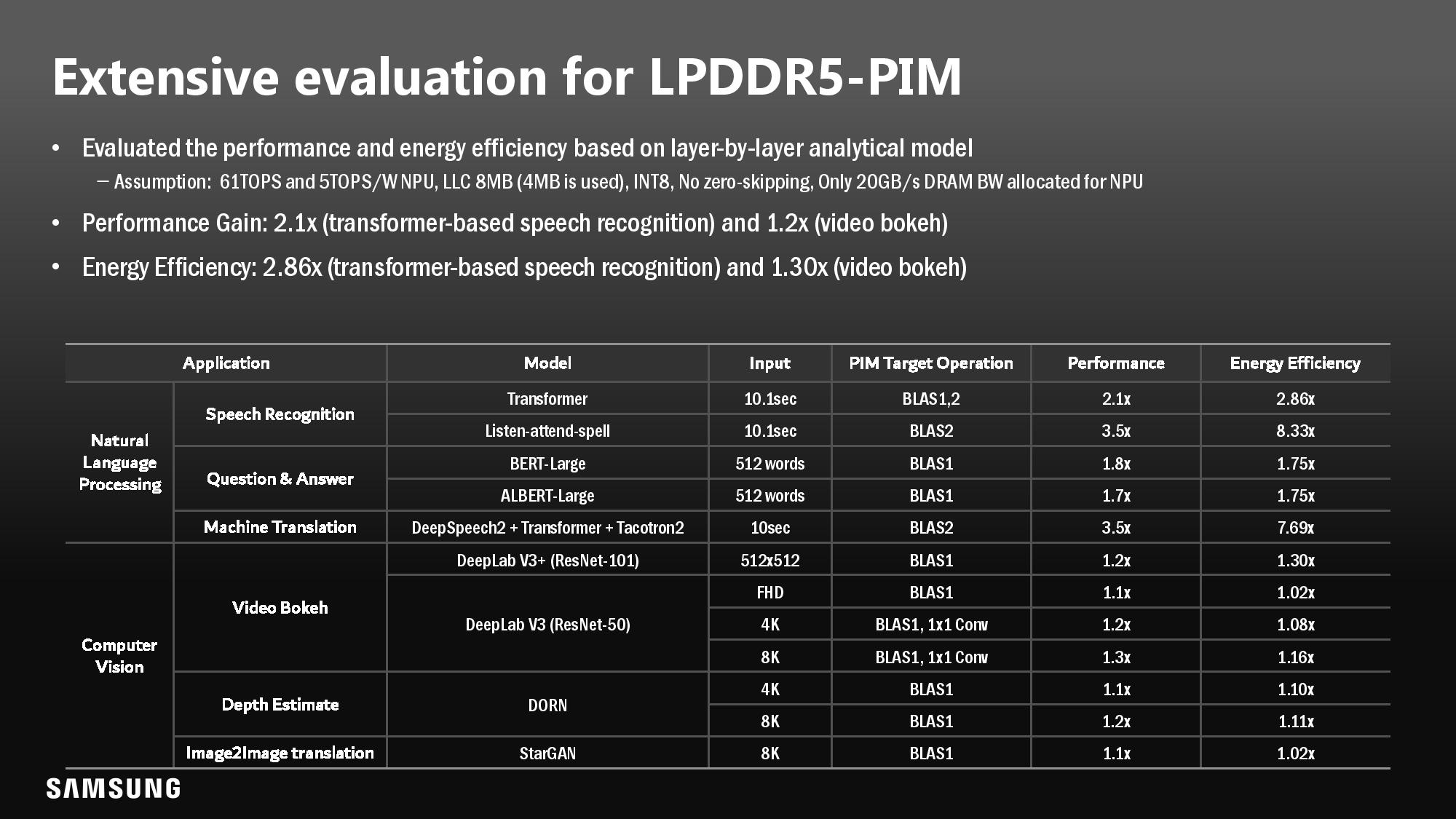

Samsung's PIM tech is transferable to any of its memory processes or products, so it has even begun experimenting with PIM in LPDDR5 chips, meaning the tech could come to laptops, tablets, and even mobile phones in the future. Samsung is still in the simulation phase with this tech, but it says its simulation of an LPDDR5X-6400 chip shows a 2.3X performance improvement in speech recognition workloads, a 1.8X improvement in transformer-based translation, and a 2.4X increase in GPT-2 text generation. These performance improvements come paired with 3.85X, 2.17X, and 4.35X reductions in power, respectively.

This tech is moving rapidly and works with standard memory controllers and existing infrastructure, but it hasn't yet been certified by the JEDEC standards committee, a key hurdle Samsung needs to clear before the tech sees widespread adoption. However, the company hopes the initial PIM spec will be accepted into the HBM3 standard later this year.

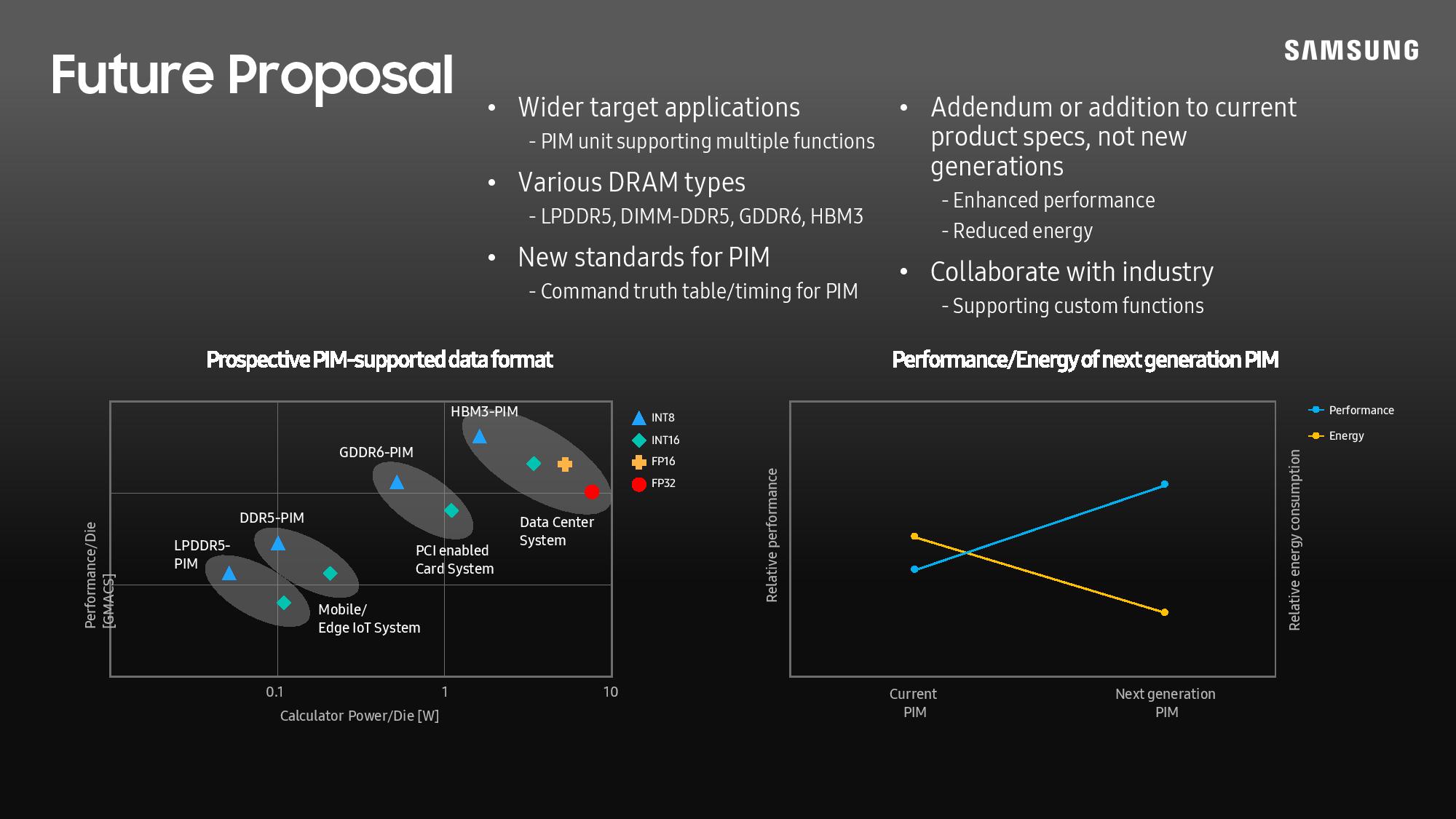

Speaking of HBM3, Samsung says that it will move forward from the FP16 SIMD processing in HBM2 to FP64 in HBM3, meaning the chips will have expanded capabilities. FP16 and FP32 will be reserved for data center usages, while INT8 and INT16 will serve the LPDDR5, DDR5, and GDDR6 segments.
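
For a feel of what the wider formats buy, the Python sketch below rounds the same value to FP16, FP32, and FP64 using the standard `struct` module's 'e', 'f', and 'd' formats, and adds a simple symmetric quantizer to show how INT8 and INT16 ranges differ. The `quantize_int` helper and its scale factor are hypothetical illustrations, not Samsung's scheme.

```python
import struct


def round_trip(x: float, fmt: str) -> float:
    """Round x to an IEEE 754 format: 'e' = FP16, 'f' = FP32, 'd' = FP64."""
    return struct.unpack("<" + fmt, struct.pack("<" + fmt, x))[0]


def quantize_int(x: float, scale: float, bits: int) -> int:
    """Hypothetical symmetric quantizer: divide by scale, round, clamp to a signed range."""
    qmin, qmax = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return max(qmin, min(qmax, round(x / scale)))


if __name__ == "__main__":
    for name, fmt in (("FP16", "e"), ("FP32", "f"), ("FP64", "d")):
        err = abs(round_trip(0.1, fmt) - 0.1)
        print(f"{name}: 0.1 is stored with absolute error {err:.3e}")
    # With a scale of 0.25, 100.0 maps to 400: it saturates in INT8 but fits in INT16.
    print(quantize_int(100.0, 0.25, 8), quantize_int(100.0, 0.25, 16))  # 127 400
```

The rounding error shrinks by orders of magnitude with each step up in width, while the integer types trade range and precision for smaller, cheaper operands.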

Additionally, today's HBM2-PIM costs you half the capacity of an 8GB chip in exchange for its computational power, but there will be no such capacity tradeoff in the future: the chips will have the full standard capacity regardless of their computational capabilities.

Samsung will also bring this capability to other types of memory, like GDDR6, and widen the possible applications. CXL support could also be on the horizon. Samsung says its Aquabolt-XL HBM2 chips are available for purchase and integration today, while its other products are already working their way through the development pipeline.

Who knows: with the rise of AI-based upscaling and rendering techniques, this tech could be more of a game-changer for enthusiasts than it appears on the surface. In the future, it's plausible that GPU memory could handle some computational workloads to boost GPU performance and reduce energy consumption.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.