The 23 Greatest Graphics Cards Of All Time

Do you remember the first card that introduced you to 3D gaming? How about the best accelerator you ever owned? Take a trip with us down memory lane as we recount the 23 most powerful graphics boards of their respective eras.

April 2004: Nvidia GeForce 6800 Ultra

Nvidia struck back with the GeForce 6800 Ultra, a product that recaptured the fastest-card title. It featured 16 pixel pipelines in a 400 MHz GPU and up to 512 MB of memory at 1100 MT/s. Not only was the card blazing fast, but two could be used in tandem with Nvidia's new Scalable Link Interface (SLI) technology, an evolution of the intellectual property gained from the purchase of 3dfx. ATI launched its Radeon X800 XT one month later, and while it traded blows with the GeForce 6800 Ultra, the 6800 was technically more advanced. While the GeForce supported 32-bit floating point precision and shader model 3.0, ATI's entry was limited to 24-bit precision and shader model 2.0. Though those features had little impact on the software of the day, they were good marketing bullet points that often made the 6800 more attractive.

June 2005: Nvidia GeForce 7800 GTX

Nvidia released the GeForce 7800 GTX with 24 rendering pipelines, a 430 MHz GPU, and 256 MB of memory running at 1.2 GT/s on a 256-bit interface. This card had little trouble taking the performance crown, as there was no serious competition from ATI until November of 2005 with the Radeon X1800 XT. Immediately after, Nvidia launched the GeForce 7800 GTX 512 MB with much faster 1.7 GT/s memory and a 550 MHz core to keep the crown. It's notable that ATI released its original incarnation of CrossFire right around the same time, which earned bad reviews for its external dongle and special CrossFire-edition master card requirement.

January 2006: ATI Radeon X1900 XTX

The Radeon X1900 XTX was equipped with 48 pixel shaders, a 650 MHz core clock, and 512 MB of GDDR3 memory at 1.55 GT/s. Nvidia fired back with the GeForce 7900 GTX a couple of months later, though, and kept the pressure on ATI with a card that won its share of battles. We'll call this round in favor of the Radeon for its impressive, forward-looking pixel-shading power.

June 2006: Nvidia GeForce 7950 GX2

Nvidia doubled its potential with the GeForce 7950 GX2, essentially two GeForce 7900 GTX PCBs in SLI on a single card. With 48 pipelines, a 500 MHz core clock, and two 512 MB banks of RAM at 1.2 GT/s, this beast ruled the roost with no real competition. At the time, ATI had no dual-GPU-equipped option to offer a proper challenge, and even the powerful GDDR4-equipped Radeon X1950 XTX was humbled.

November 2006: Nvidia GeForce 8800 GTX

The DirectX 10 era was ushered in with the GeForce 8800 GTX and its 128 streaming processors, a 575 MHz core, independent 1.35 GHz shader clock, and 768 MB of GDDR3 memory running at 1.8 GT/s on a 384-bit bus. Put simply, this monolithic GPU absolutely trounced the competition (including the dual-GPU GeForce 7950 GX2) in every possible way, setting a very high bar for both features and performance. The only complaint was high power use, a downside that was only just becoming the norm for high-end gaming, but it didn't take away from the 8800's glory.
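For a sense of scale, the memory figures quoted in this story translate into peak theoretical bandwidth with simple arithmetic: transfer rate times bus width, divided by eight bits per byte. The snippet below is only a rough sketch of that calculation. The function name is our own invention for illustration, and it uses just the two cards for which both a data rate and a bus width are given above.

```python
def memory_bandwidth_gbs(data_rate_gts: float, bus_width_bits: int) -> float:
    """Peak theoretical memory bandwidth in GB/s (1 GB = 10**9 bytes).

    data_rate_gts  -- effective transfer rate in GT/s
    bus_width_bits -- memory interface width in bits
    """
    return data_rate_gts * bus_width_bits / 8


# (data rate in GT/s, bus width in bits), as quoted in the article text
cards = {
    "GeForce 7800 GTX": (1.2, 256),
    "GeForce 8800 GTX": (1.8, 384),
}

for name, (rate, width) in cards.items():
    print(f"{name}: {memory_bandwidth_gbs(rate, width):.1f} GB/s")
```

By this estimate, the 8800 GTX had more than twice the peak memory bandwidth of the 7800 GTX launched 17 months earlier. These are theoretical ceilings, not measured throughput.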

AMD acquired ATI around this time, and it took months for the company to respond with a competing DirectX 10 GPU. In May of 2007 it finally released the Radeon HD 2900 XT, but it was immediately recognized as a failure due to low performance compared to the GeForce card and incredibly high power consumption. AMD came back to the fight in November 2007 with the Radeon HD 3870, a card that fixed the 2900 XT's power problem, but didn't bring any more performance to the table. The GeForce 8800 GTX continued to enjoy unchallenged supremacy.

January 2008: AMD Radeon HD 3870 X2

AMD finally nabbed the performance title again with the Radeon HD 3870 X2, its first dual-GPU card since the Rage Fury MAXX. With 640 combined stream processors, an 825 MHz GPU clock, and two 512 MB banks of GDDR3 memory at 1.8 GT/s, this card sailed past the GeForce 8800 Ultra at high resolutions. The Radeon HD 3870 X2's reign was short-lived, though, as Nvidia had its own dual-GPU card waiting in the wings.

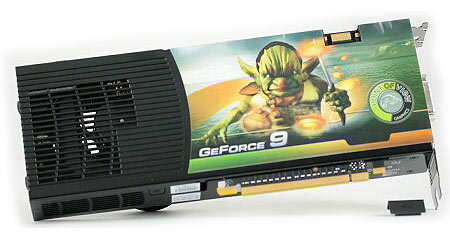

March 2008: Nvidia GeForce 9800 GX2

The GeForce 9800 GX2 was essentially two GeForce 9800 GTX GPUs on a single card. It sported 256 total stream processors, a 600 MHz core, an independent 1.5 GHz shader clock, and two 512 MB banks of RAM at 2 GT/s. The card's performance was indisputably high, and it even used less power than the Radeon HD 3870 X2 under load. But just like the competition, the GeForce 9800 GX2's reign was over quickly due to a new challenger.

June 2008: Nvidia GeForce GTX 280

The GeForce GTX 280 debuted with an impressive 240 stream processors, a 602 MHz core, an independent 1296 MHz shader clock, and 1024 MB of GDDR3 RAM at 2214 MT/s. While performance was on par with the GeForce 9800 GX2 on average, the GeForce GTX 280 was much more consistent, as it didn't rely on SLI driver optimizations. AMD released the Radeon HD 4870 one month later, but it was never intended to combat the GeForce GTX 280 and instead challenged the GeForce GTX 260. An interesting side note: this is the last time a graphics card with a single GPU held the performance crown.

August 2008: AMD Radeon HD 4870 X2

Employing a new strategy to focus on smaller, more efficient GPUs designed to work in tandem on high-end products, AMD pulled a significant coup with its Radeon HD 4000-series. Armed with 1600 stream processors, a 750 MHz core clock, and 2 GB of RAM at 3600 MT/s, the dual-GPU Radeon HD 4870 X2 was part of AMD's core plan and launched only one month after the Radeon HD 4870. Saddled with a large, power-hungry GPU, Nvidia was unable to respond quickly with its own dual-GPU super-card.

January 2009: Nvidia GeForce GTX 295

Not to be outdone, Nvidia released its own dual-GPU board to beat the Radeon HD 4870 X2. The GeForce GTX 295 featured 480 stream processors, a 576 MHz core, a 1242 MHz shader clock, and two banks of RAM at 1998 MT/s. Its GPUs weren't lifted straight from the GeForce GTX 280. Instead, each was slightly less potent, with a narrower memory bus and 28 ROPs. The only other card to use that configuration was the GeForce GTX 275. AMD wasn't able to compete against the GTX 295 until it launched its Radeon HD 5000-series.

Don Woligroski is a former senior hardware editor for Tom's Hardware. He has covered a wide range of PC hardware topics, including CPUs, GPUs, system building, and emerging technologies.

JackMomma Never thought I'd be proud of my ol' 295GTX as I am now... Anyway, it's time to let her cool off again.

jimmysmitty One thing, your part with the 3870 X2 states it had a combined 160 Pixel Shaders but a single 3870 had 320 Stream processors, same as the HD2900, and a 3870 X2 had 640 Stream processing units.

If anything, the 3870 X2 had 128 SP units that acted as 5 SP units giving it 640.

Other than that, nice article. I remember my 9700 Pro. Too bad the memory died out on it. But it was ok. I got a 9800XT to replace it.

buzznut It's amazing how many of these I've owned. Voodoo II, Voodoo III, GeForce 4ti, Riva TNT, Radeon 9800, TNT 2 Ultra.. Course now that I'm married I can't spend money on things like the latest video card. :(

utengineer Still running GTX 280's in SLI. I don't get DX11, but I still play every game out there with no problem. 3 years and still going strong. Great investment.

cangelini In reply to jimmysmitty: Absolutely right. Don must have owned a "special" 3870 X2. I've updated the story to reflect the X2s everyone else owned ;)

toxxel Still have my MSI GeForce 4 Ti 4200 128 MB DDR card, no real use for it nowadays so it just lays in a box with the rest of my outdated stuff.