ATI's Optimized Texture Filtering Called Into Question

Brilinear - Simply Filter Less

Brilinear filtering was the next optimization step. It is a hybrid of bilinear and trilinear filtering; put differently, it simply filters less. The area in which the trilinear filter blends neighboring mipmaps is reduced, and outside that area the texture is filtered bilinearly from a single mipmap.

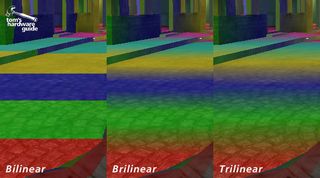

This image shows the differences between bilinear, brilinear and trilinear filtering. The brilinear example reflects the optimization NVIDIA uses on the GeForce 6800 Ultra; the degree of optimization can vary from card to card.
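To make the difference concrete, the sketch below models how much weight the coarser of two neighboring mipmaps receives as the fractional level of detail (LOD) moves from one mip level to the next. It is an illustrative model, not NVIDIA's or ATI's actual hardware logic: the function name `mip_blend_weight` and the `band` parameter (the width of the blend window) are hypothetical, and the window is assumed to be centered on the midpoint between the two levels.

```python
def mip_blend_weight(frac: float, band: float) -> float:
    """Weight of the coarser mip level for a fractional LOD `frac` in [0, 1].

    Hypothetical model for illustration only:
      band = 1.0   -> trilinear: linear blend across the whole interval
      band = 0.0   -> bilinear with nearest-mip selection: hard switch at 0.5
      0 < band < 1 -> brilinear: blend only inside a window of width `band`
                      centered on 0.5, single-mip bilinear elsewhere
    """
    if not (0.0 <= frac <= 1.0 and 0.0 <= band <= 1.0):
        raise ValueError("frac and band must lie in [0, 1]")
    lo = 0.5 - band / 2  # start of the blend window
    hi = 0.5 + band / 2  # end of the blend window
    if frac <= lo:
        return 0.0  # finer mip only (plain bilinear)
    if frac >= hi:
        return 1.0  # coarser mip only (plain bilinear)
    return (frac - lo) / band  # linear ramp inside the window (never reached when band == 0)


if __name__ == "__main__":
    for frac in (0.1, 0.3, 0.5, 0.7, 0.9):
        tri = mip_blend_weight(frac, 1.0)
        bri = mip_blend_weight(frac, 0.4)
        bi = mip_blend_weight(frac, 0.0)
        print(f"frac={frac:.1f}  trilinear={tri:.2f}  brilinear={bri:.2f}  bilinear={bi:.2f}")
```

Shrinking `band` turns the smooth trilinear ramp into a steeper one, which is why brilinear transitions between mip levels can show up as faint bands where plain bilinear filtering shows hard lines.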

Brilinear filtering saves some computing time. In real games the differences in image quality are usually very small and can only be detected by comparing against images rendered with proper trilinear filtering.
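A rough back-of-the-envelope estimate shows where the savings come from. The sketch assumes a simplified cost model that is not taken from any vendor: one bilinear lookup reads 4 texels, a pixel inside the blend window needs lookups in two mip levels (8 texels), and fractional LODs are spread evenly across each mip interval. Under those assumptions the average cost is 4 + 4 × `band` texels per pixel; `avg_texels_per_pixel` and `band` are hypothetical names.

```python
def avg_texels_per_pixel(band: float, samples: int = 100_000) -> float:
    """Average texel fetches per pixel for a blend window of width `band`.

    Simplified, hypothetical cost model: one bilinear tap reads 4 texels, a
    pixel inside the blend window needs two taps (8 texels), and fractional
    LODs are spread evenly over [0, 1).
    """
    lo, hi = 0.5 - band / 2, 0.5 + band / 2
    total = 0
    for i in range(samples):
        frac = (i + 0.5) / samples  # evenly spaced fractional LODs
        total += 8 if lo < frac < hi else 4  # two taps inside the window, one outside
    return total / samples


if __name__ == "__main__":
    trilinear = avg_texels_per_pixel(1.0)
    for band in (1.0, 0.5, 0.25, 0.0):
        cost = avg_texels_per_pixel(band)
        print(f"band={band:.2f}  texels/pixel={cost:.2f}  "
              f"saving vs trilinear={1 - cost / trilinear:.0%}")
```

Real savings depend on texture caches, memory bandwidth and how LOD values are actually distributed in a scene, so these figures only indicate the direction of the effect.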

NVIDIA introduced this type of texture filtering, and its entire GeForce FX 5xxx series filters this way. NVIDIA's new GeForce 6xxx series also filters like this by default, but in response to past criticism NVIDIA now offers users an option to switch the optimization off.

In the past, ATI had a reputation for better image quality thanks to proper trilinear filtering. That held true until the Radeon 9600, alias RV360, was introduced. The RV360 filters brilinearly too, although ATI stresses that its algorithm is adaptive: the driver determines the degree of optimization based on the variability of the mipmaps. ATI's new R420, alias Radeon X800, also uses this optimization.

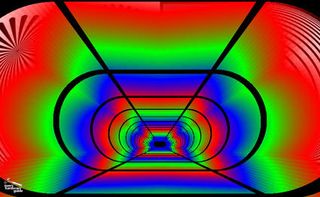

What is annoying is that ATI did not bother to explain this filtering method. Reviewers did not notice it because standard filter-quality tests, which use colored mipmaps, do not reveal this behavior: the driver switches to full trilinear filtering whenever colored mipmaps are used.

This is what the X800 shows in standard image-quality tests: full trilinear filtering. In reality (i.e., in games), the image looks different.
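ATI has not documented how its driver measures "mipmap variability," so the following is only a hypothetical heuristic showing how such a check could end up treating test patterns differently from game textures. It assumes that ordinary game mipmaps look like box-filtered (2×2 averaged) copies of the level above, while colored test mipmaps do not. All names (`box_downsample`, `choose_blend_band`, `threshold`, `reduced_band`) and the default values are invented for illustration, and the real driver may grade the optimization more finely than this on/off switch.

```python
from typing import List

Image = List[List[float]]  # grayscale texels in [0, 1]; dimensions assumed even


def box_downsample(img: Image) -> Image:
    """Average each 2x2 block of texels into one texel of the next mip level."""
    h, w = len(img), len(img[0])
    return [
        [(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4
         for x in range(0, w, 2)]
        for y in range(0, h, 2)
    ]


def mean_abs_diff(a: Image, b: Image) -> float:
    """Mean absolute per-texel difference between two equally sized images."""
    n = sum(len(row) for row in a)
    return sum(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb)) / n


def choose_blend_band(mips: List[Image], threshold: float = 0.05,
                      reduced_band: float = 0.4) -> float:
    """Hypothetical driver heuristic: full trilinear (band 1.0) if any mip level
    deviates from a box-filtered copy of its parent, else a narrower blend band."""
    for finer, coarser in zip(mips, mips[1:]):
        if mean_abs_diff(box_downsample(finer), coarser) > threshold:
            return 1.0  # mips don't look derived (e.g. colored test mipmaps)
    return reduced_band  # ordinary-looking mip chain: filter brilinearly


if __name__ == "__main__":
    base = [[(x + y) / 6 for x in range(4)] for y in range(4)]
    game_chain = [base, box_downsample(base), box_downsample(box_downsample(base))]
    test_chain = [base, [[1.0, 1.0], [1.0, 1.0]], [[0.0]]]  # "colored" mips
    print("game texture band:", choose_blend_band(game_chain))
    print("test pattern band:", choose_blend_band(test_chain))
```

A check along these lines would explain the symptom described above: a filter test with colored mipmaps always sees full trilinear filtering, while normal game textures get the reduced blend window.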
