AMD FirePro W8100 Review: The Professional Radeon R9 290

Following the flagship FirePro W9100, AMD has added the FirePro W8100 to its portfolio. Somewhat lower specs (8 GB of memory, a slower GPU, and fewer shader units) should give it the same position in the workstation world that the Radeon R9 290 holds in gaming.

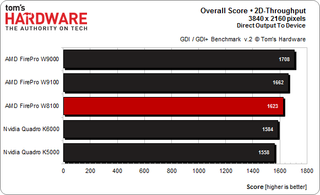

2D Performance: GDI and GDI+

Why Are We Still Looking At GDI and GDI+?

Even in 2014, many applications use GDI and GDI+ for drawing, even if only for their GUIs. Older productivity applications and specific business titles still leverage GDI/GDI+ predominantly. These applications range from simple 2D CAD programs and viewers to pre-print stage WYSIWYG layout programs and file import/export programs.

As modern graphics cards with unified shaders don’t feature dedicated 2D units anymore, and modern operating systems no longer access graphics cards directly, device drivers play a crucial role in facilitating fast 2D functions.

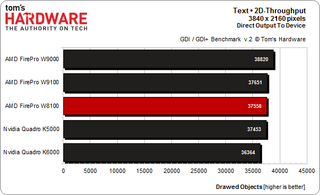

Text

Displaying text is a crucial task and, needless to say, both manufacturers make sure that their high-end graphics cards render large amounts of text almost instantly.

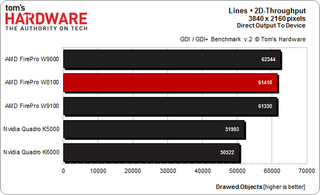

Lines

Lines are another basic 2D element (the lines in a menu, for example). Again, none of the cards has any problem with this task, though we notice that AMD's products are around 20 percent faster than Nvidia's.

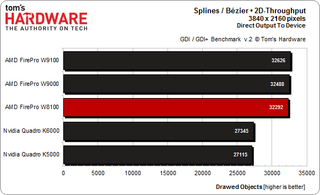

Splines / Bezier Curves

Curvy lines require some computational power, and it's only natural that they take longer to draw. Just as we saw in the line test, there's a noticeable gap between the AMD and Nvidia cards. The FirePro W9100 and W8100 come close to each other, even though the older W9000 does manage to slide in between them.

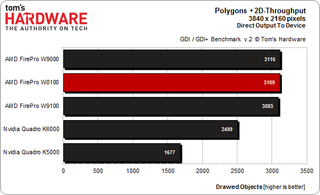

Polygons

This benchmark draws filled and unfilled polygons with three to eight vertices; AMD's hardware knows how to handle it. You can't say the same for Nvidia's Quadro K5000, which is slightly better than half as fast as the older FirePro W9000.

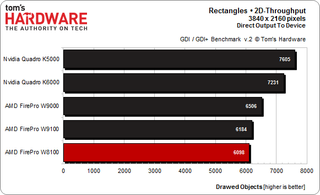

Rectangles

Yes, rectangles are polygons, too. But GDI exports a separate, simpler API for drawing them. Needless to say, we expected the cards to draw rectangles faster than polygons.

Apparently, AMD treats rectangles just like any other polygon, whereas Nvidia employs a more efficient implementation, yielding a benchmark win. Then again, pure rectangles aren’t used all that often, so this is arguably not a big shortcoming. Unfilled objects are usually just drawn as polygons anyway, and this function doesn’t allow the output of rotated rectangles.

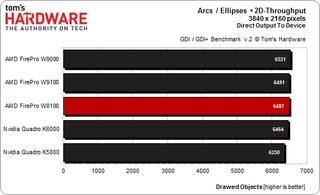

Circles, Circle Segments and Ellipses

All cards demonstrate comparable performance in the Arcs and Ellipses benchmark.

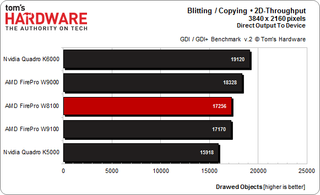

Bit Blitting

Bit blitting, the copying of a block of pixels from system memory to graphics memory, is becoming less important. After all, it's the graphics card itself that's supposed to fill its RAM with pixels, not the CPU. Not surprisingly, the performance of this operation hasn't increased much over the past few years. In fact, it actually went in the other direction. Today’s high-end graphics cards barely manage to beat the last integrated graphics chipsets from Nvidia that offered full 2D functionality (such as the nForce 630i with GeForce 7100 graphics). Nvidia seems to handle the operation a bit better, though in truth every card posts somewhat disappointing results.
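To make the operation concrete, here is a minimal Python sketch of a block copy between two linear pixel buffers. It is an illustration only and not how GDI or any driver implements the transfer; the function name `blit`, the 32-bit pixel format, and the flat buffer layout are all assumptions made for this example.

```python
BYTES_PER_PIXEL = 4  # assumption: 32-bit pixels (for example BGRA)


def blit(src, src_width, dst, dst_width,
         src_x, src_y, dst_x, dst_y, width, height):
    """Copy a width x height pixel block from src to dst, one row at a time.

    Both buffers are flat bytearrays holding rows of 32-bit pixels.
    The caller must ensure the block lies inside both buffers.
    """
    row_bytes = width * BYTES_PER_PIXEL
    for row in range(height):
        # Byte offset of the first pixel of this row in each buffer.
        s = ((src_y + row) * src_width + src_x) * BYTES_PER_PIXEL
        d = ((dst_y + row) * dst_width + dst_x) * BYTES_PER_PIXEL
        dst[d:d + row_bytes] = src[s:s + row_bytes]


# Hypothetical usage: copy a 2x2 block starting at (1, 1) of a 4x4
# "system memory" surface into the top-left corner of an 8x8
# "graphics memory" surface.
system_surface = bytearray(range(4 * 4 * BYTES_PER_PIXEL))
graphics_surface = bytearray(8 * 8 * BYTES_PER_PIXEL)
blit(system_surface, 4, graphics_surface, 8, 1, 1, 0, 0, 2, 2)
```

In this sketch both buffers live in ordinary process memory. In the benchmark, the destination is graphics memory, so every row has to cross the bus to the card, which is where the cost discussed above comes from.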

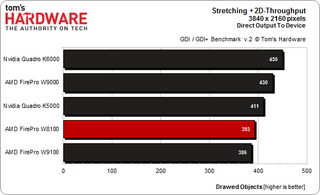

Stretching

Stretching is even worse, since the CPU has to help out. Overhead due to the driver getting lost in emulations and offloading computations to the CPU packs quite a whammy.
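A rough sketch shows why a stretch costs more than a plain copy: every destination pixel has to be mapped back to a source pixel before it can be written. The Python below uses nearest-neighbor sampling, which is the simplest possible choice and an assumption made for illustration; it does not claim to reflect what GDI or either vendor's driver actually does, and the name `stretch_nearest` is hypothetical.

```python
def stretch_nearest(src, src_w, src_h, dst_w, dst_h):
    """Scale a row-major list of pixels to dst_w x dst_h (nearest neighbor)."""
    dst = []
    for y in range(dst_h):
        sy = y * src_h // dst_h          # source row for this output row
        for x in range(dst_w):
            sx = x * src_w // dst_w      # source column for this output column
            dst.append(src[sy * src_w + sx])
    return dst


# Hypothetical usage: stretch a 2x2 image to 4x4.
small = [1, 2,
         3, 4]
big = stretch_nearest(small, 2, 2, 4, 4)
```

Each output pixel needs its own index calculation, so the work grows with the size of the destination rather than the source. When that loop runs on the CPU instead of the GPU, the overhead described above follows naturally.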

Summary

Neither Nvidia nor AMD earns a definitive win when it comes to GDI performance. Disappointingly, 2D alacrity seems to be at a standstill, and has been since 2010. At least we've seen AMD alleviate some bottlenecks since then.

In general, applications achieve better performance if they render everything into a temporary DIB (device-independent bitmap) and copy the final result to the graphics card. But at higher screen resolutions, the amount of data that needs to be moved across the PCIe interface can be quite substantial. It's appalling that, in the age of PCIe 3.0, copying data to the graphics card is still an order of magnitude slower than similar operations within the workstation’s RAM.
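To get a feel for how much data a full-screen DIB represents, the following back-of-the-envelope sketch computes the size of one frame and the time needed to move it at a given bandwidth. The 32-bit pixel format, the listed resolutions, and the 60 updates per second are assumptions chosen for illustration, and the bandwidth is left as a parameter because the article gives no measured figure.

```python
def frame_bytes(width, height, bytes_per_pixel=4):
    """Size of one uncompressed frame (assumption: 32-bit pixels)."""
    return width * height * bytes_per_pixel


def transfer_ms(num_bytes, bandwidth_gb_per_s):
    """Time in milliseconds to move num_bytes at the given bandwidth.

    bandwidth_gb_per_s is whatever effective rate you measure on your
    own system; no particular value is implied here.
    """
    return num_bytes / (bandwidth_gb_per_s * 1e9) * 1000.0


# Illustrative resolutions only; not taken from the benchmark setup.
resolutions = {
    "1920x1080": (1920, 1080),
    "2560x1600": (2560, 1600),
    "3840x2160": (3840, 2160),
}

for name, (w, h) in resolutions.items():
    size = frame_bytes(w, h)
    per_second = size * 60 / 1e9  # sustained rate at 60 full updates per second
    print(f"{name}: {size / 2**20:.1f} MiB per frame, "
          f"{per_second:.2f} GB/s at 60 updates per second")
```

Plugging a measured copy bandwidth into `transfer_ms` then shows how much of each frame interval a full-screen upload would consume.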

With that said, AMD's cards perform better than Nvidia's, mostly due to the faster line, spline, and polygon functions. We'd like to think our scathing criticism of AMD’s drivers in the past is partly responsible for this improvement.

The new FirePro W9100 renders complex 2D drawings via GDI almost twice as fast as Nvidia's Quadro K5000. Or only half as slowly. It depends on your point of view.

Memnarchon "The video shows that the AMD FirePro W8100 is bearable when it comes to maximum noise under load. This also demonstrates that a thermal solution originally designed for the Radeon HD 5800 (which hasn't changed much since) deals with the W8100’s nearly 190 W a lot better than the W9100's 250 W."

Wait a minute. If this kind of cooling is better than what was used on the R9 290, and they have had this technology since the HD 5800 series, then why the hell didn't they use it on the R9 290 series instead of the crap cooler they used?

FormatC It is the same cooler, but the power consumption of the W8100 is a lot lower. This cooler type can handle up to 190 watts more or less OK, but the R9 290(X) produces more heat due to its higher power consumption.

Cryio "Nvidia's Quadro K5000 is quite a bit cheaper, but comes with half the memory, less 4K connectivity, and is generally slower."

That's almost an understatement. The K5000 is almost constantly 50% slower than the W8100, with a few cases where the difference is 25%. For $700 more, the W8100 looks like a great buy.

Memnarchon

FormatC said: "It is the same cooler, but the power consumption of the W8100 is a lot lower. This cooler type can handle up to 190 watts more or less OK, but the R9 290(X) produces more heat due to its higher power consumption."

Well, this doesn't prove that the cooler they used is superior. It might have a higher TDP rating, but that doesn't mean it's better than one with a lower rating. We know how these ratings work; the number is not by any means absolute. And we have seen in the past (especially with CPU coolers) higher-TDP-rated coolers lose to lower-TDP-rated ones for a lot of reasons (better quality, better tech, better materials, heatpipe placement, etc.).

I think the real reason might come from your review.

I don't believe in coincidence, but they decided to use it on a more expensive professional GPU with great success.

How do we know that the cooler used in W8100 wasn't approved for R9 290(X) cause of its higher cost perhaps?

PS: Am I asking too much if I ask any reviewer at Tom's to test this cooler on an R9 290? (If it's compatible, ofc...)

bambiboom Gentlemen?,

The focus on the Firepro W8100 and Quadro K5000 being competitors as something to directly compare is a bit misleading and distracts attention from the impressive features of the Firepro W8100.

The W8100 does outperform the Quadro K5000 in some important ways, but to be in marketing competition, the performance should be in the same general league. The W8100 is 56% more expensive; the price difference of $900 is more than enough to buy a K4000 (about $750).

On a marketing-basis a $60,000 car that is 50% faster is not a direct competitor to a $38,000 one. The use and expectations of performance and quality are different. The logic is to say, "If you're thinking of buying a Quadro K5000, you should know that for 56% more you can have 25-50% higher performance in several important but not all categories." These purchases are most often budget driven- how many have unlimited funds- and the buyer of a $1,600 card will be a different person from someone with a $2,500 budget. The buyer's quest is more often based on how much performance is expected combined with how much is possible within the budget.

These cards may have the same applications, but for the W8100 to be better value than a K5000, it should have a consistent 56% performance advantage. A better comparison would be to consider, for example, the W7000 and Quadro K4000. Both are about $750, but the W7000 is 256-bit and has 4GB, 154GB/s of bandwidth, and 1280 stream processors against the K4000's 192-bit, 3GB, 134GB/s, and 768 CUDA cores. On Passmark Performance Test, a W7000 3D score near but not at the top is about 4300 and 2D about 1000, while the K4000 scores near the top at about 3000 in 3D and 1100 in 2D. The news for AMD is even better when considering that for a $1,600 Quadro K5000 (double the W7000's cost, but also 4GB and 256-bit), near-the-top 3D scores are about 4300 and 2D about 900. For me, a better marketing strategy would be to compare the K5000 to the W7000 and the W8100 to a mythological "K5500" that would cost $2,800 (midway between 4 and 12GB and $1600 and $5000).

This means that the person looking for the best performance for $750 -and uses the applications the W-series is good at- has an easy choice in the W7000.

Still, the features, especially the 512-bit interface and 8GB plus overall performance, make the W8100 one to consider in the upper end of workstation cards. This should be a very good animation/film editing card. The comments about AMD being more forward-looking than NVIDIA may be correct, though the comments about the quality of Quadro drivers also seem true. This furthers the trend of GPUs tending to concentrate on certain functions (the W8100 on OpenCL, for example), so GPUs have to be considered one by one according to the applications used. More and more, with complex 3D modeling and animation software, specific software drives graphics card choices and, except for the very top of the lines, the cards seem to be less all-rounders than before: not good at everything.

BambiBoom

nebun call me stupid but how is a $2600 gpu a fair competition to a $1600 gpu....am i missing something?....also the amd gpu has more cores....this is not a fair comparison....take an Nvidia card with the same amount of cores and we see who comes on top....AMD HAS THE WORST DRIVERS

falchard I would like to see these matched against their desktop counterparts in productivity tasks as well. Over the years we have seen a shift in architecture where the desktop part is pretty much the same as the workstation part using different drivers. Some of us need to compare the benefits of workstation cards over desktop cards. A few years ago in CAD based programs we would see a 400% or more increase in performance compared to desktop chips, and knowing this is still the case is very important.

mapesdhs Typo: "... our processor runs at a base close rate ... "

I assume that should be, 'clock rate'.

Btw, how come the test suite has changed so that there is no longer any app being used such as AE for which NVIDIA cards can be strong because of CUDA support?

Ian.

mapesdhs A down-vote eh? I guess the proverbial NVIDIA-haters still lurk, unwilling to present any rationale as usual. :D

And falchard is right, Viewperf tests showed enormous differences between pro & gamer cards in previous years, but it seems vendors are deliberately blurring the tech now, optimising for consumer APIs (ie. not OGL), which means pro tests often run well on gamer cards. In which case where is their rationale for the cost difference? Apart from support and supposedly better drivers, basic performance used to be a major factor of choosing a pro card and a sensible justification for the extra cost, but this appears to be not the case anymore; check Viewperf11 scores for any gamer vs. pro card, the only test where a gamer card isn't massively slower is ENSIGHT-04. For MAYA-03, a Quadro 4000 is 3X faster than a GTX 580; for PROE-05, a Q4K is 10X faster; for TCVIS-02, a Q4K is 30X faster.

Today though, with Viewperf12, a 580 is faster than a K5000 for MAYA-04, about the same for CREO-01, about the same for SHOWCASE-01 and not that much slower for SW-03. Only for CATIA-04 and SNX-02 does the expected difference persist.

Meanwhile we get OpenCL touted everywhere, even though there are plenty of apps which can exploit CUDA, but little attempt to properly compare the two when the option to use the latter is also available, eg. 3DS Max, Maya, Cinema4D, AE, LW, SI, etc.

Ian.

PS. nebun, the core structure on these cards is completely different. The number of cores is a totally useless measure, it tells one nothing. One can't even compare between different cards from the same vendor, eg. a GTX 770 has way more cores than a GTX 580, but a 580 hammers the 780 for CUDA. Indeed, a 580 beats all the 600 series cards for CUDA despite having far fewer cores (it's because the newer cards use a much lower core clock, less bandwidth per core, etc.)

ddpruitt Doesn't Tom's do copy editing? What's up with the chart with blanks at the bottom of page 13?

It's nice to see that AMD is starting to close the gap with its products. They seriously need to consider updating their cooling solutions and improving power. I would be interested to see if these workstation cards throttle down as often as their desktop counterparts. In my experience most of the current Hawaii chips are running higher voltages than needed and they could save both power and heat by running them down a bit. It should allow the boards to stay stable and compete better in many workloads.
