Nvidia's GeForce GTX 285: A Worthy Successor?

Benchmark Results: Crysis

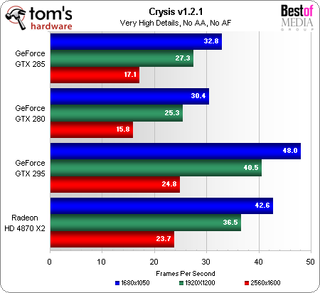

Even after all this time, Crysis continues to punish graphics cards with high details and minimal optimization. While most gamers are now more interested in the more efficient Crysis Warhead, we included this older title in our GTX 295 Quad-SLI evaluation, so it reappears in today’s review.

While the test appeared to run smoothly on the GTX 285 at our lowest-tested Crysis settings, occasional stutters would get the player fragged in a real game. The only good option for a single-GPU card would be to reduce detail levels, although the dual-GPU GeForce GTX 295 could make gameplay at 1920x1200 possible for buyers with more discretionary income.

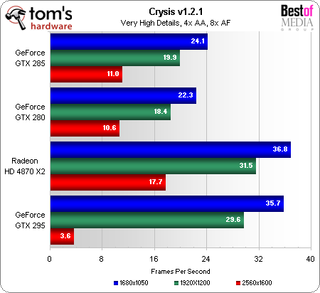

Players can forget about using very high details with AA and AF enabled in Crysis, as even the dual-GPU cards suffered enough stutter to cause an occasional surprise ending. The GTX 285 edged out the GTX 280, but with all cards producing unplayable frame rates, this win is purely academic.


Proximon Perfect. Thank you. I only wish you could have thrown a 4870 1GB and a GTX 260+ into the mix, since you had what I'm guessing are new beta drivers. Still, I guess you have to sleep sometime :p

fayskittles I would have liked to see how far each card could be overclocked and how they compare then.

I would like to see a benchmark comparing SLI 260 Core 216, SLI 280, SLI 285, GTX 295, and 4870X2.

ravenware Thanks for the article.

Overclocking would be nice to see what the hardware can really do, but I generally don't dabble in overclocking video cards. It never seems to work out: either the card is already running hot or the slightest increase in frequency produces artifacts.

Also, driver updates seem to wreak havoc with OC settings.

wlelandj Personally, I'm hoping for a non-crippled GTX 295 using the GTX 285's full specs (^Core Clock, ^Shader Clock, ^Memory Data Rate, ^Frame Buffer, ^Memory Bus Width, and ^ROPs). My & $$$ will be waiting.

A Stoner I went for the GTX 285. I figure it will run cooler, allow higher overclocks, and maybe save energy compared to a GTX 280. I was able to pick mine up for about $350, while most GTX 280 cards are still selling for above $325 without counting mail-in rebates. Over the last three years, exactly 0 of the 12 mail-in rebates I've sent in for computer components have been honored.

A Stoner, replying to ravenware: I just replaced an 8800 GTS 640MB card with the GTX 285. Base clocks for the GTS are 500 MHz GPU and 800 MHz memory. I forget the shader clock, but it is over 1000 MHz. I had mine running with zero glitches for the life of the card at 600 MHz GPU and 1000 MHz memory. Before the overclock, the highest temperature at load was about 88C; after the overclock, it was 94C. Both were well within the manufacturer's specification of 115C. I wouldn't be too afraid of overclocking your hardware unless doing so voids your warranty.

I have not overclocked the GTX 285 yet. I am waiting for NiBiToR v4.9 to be released so that, once I find the final stable clock, I can set it permanently. I am expecting to hit about 730 MHz on the GPU, but it could be less.

daeros "Because most single-GPU graphics cards buyers would not even consider a more expensive dual-GPU solution, we’ve taken the unprecedented step of arranging today’s charts by performance-per-GPU, rather than absolute performance."

In other words, no matter how well ATI's strategy of using two smaller, cheaper GPUs in tandem instead of one huge GPU works, you will still be able to say that Nvidia is the best.

Also, why would most people who are spending $400-$450 on video cards not want a dual-card setup? Most people I know see it as a kind of bragging right, just like water-cooling your rig.

One last thing: why is it so hard to find reviews of the 4850 X2?

roofus Because multi-GPU cards come with their own bag of headaches, daeros. You are better off going CF or SLI than participating in that pay-to-play experiment.
