PowerColor Devil R9 390X Review

Closed-loop liquid cooling isn't just for the Fury X. PowerColor uses a similar setup for its Devil R9 390X 8GB, but how does the fancier thermal solution affect performance, cooling and noise?

Gaming Benchmarks

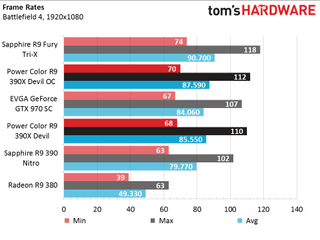

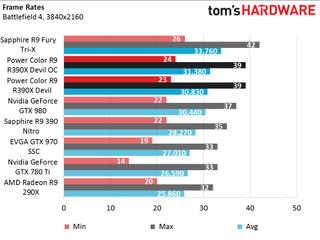

Battlefield 4

Battlefield 4 is several years old, but it's still a great workload for even the most powerful graphics cards. PowerColor's Devil R9 390X, with its aggressive overclock, fares well against the competition.

In stock form, PowerColor's card performs as expected. It's slightly slower than the Fury X, and considerably faster than the Radeon R9 390. This game is one where Nvidia's GeForce GTX 970 outperforms the R9 390, matching the Devil at its factory clock rates.

QHD is where the Radeon R9 390X really shines, though. The R9 390X Devil performs well here, keeping pace with Nvidia's GeForce GTX 980 and the Fury Tri-X. If your native resolution is 2560x1440, you have to love a graphics card averaging right around 60 FPS.

Although 4K gets a lot of attention for its ability to punish even high-end hardware, it's still fairly rare (under 0.1%, according to Steam's most recent hardware survey), likely owing to the resolution's requirements for fluid gameplay. Despite the R9 390X Devil's 8GB of memory and modest overclock, a Grenada GPU isn't fast enough to drive 4K on its own. You'd need a faster graphics processor or multiple cards in CrossFire.

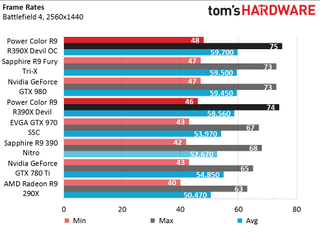

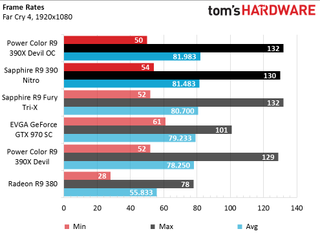

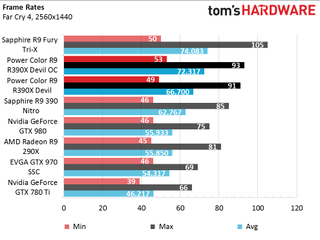

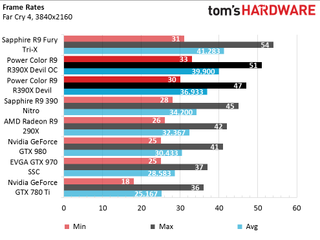

Far Cry 4

Far Cry 4 is much newer, and it is written using some of Nvidia's GameWorks technologies. You'd naturally expect it to favor that company's hardware. But Radeon owners still play the game, so we need to know what to expect.

The Devil R9 390X performs well at 1920x1080, averaging around 2 FPS less than Sapphire's Fury Tri-X. After overclocking, we even got the Devil card to lead.

Nvidia's GeForce GTX 970 averages roughly the same performance, but achieves a much higher minimum frame rate, staying above 60 FPS at all times.

As we increase the resolution, Nvidia's boards start to slip. Meanwhile, the Devil manages 66 FPS in its stock form and 72 FPS after overclocking. The Fury Tri-X is marginally faster, managing 12 more frames per second at the top end.

The Devil R9 390X fares well in Far Cry 4 at 4K. At no point does the frame rate dip below 30, and it's usually in the mid- to high-30s. Those numbers aren't great, but at 3840x2160, they're what we've come to expect. Still, you'd be happier with a faster card or multiple GPUs rendering cooperatively.

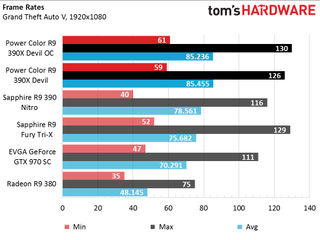

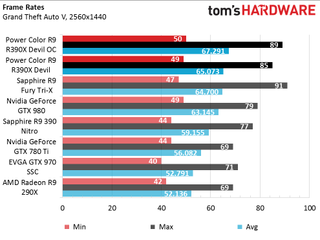

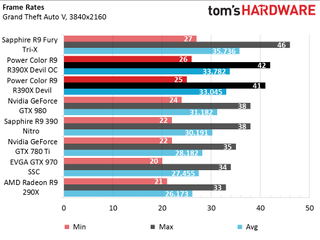

Grand Theft Auto V

GTA V is notorious for utilizing a lot of graphics memory. If any title is going to benefit from the 390X's 8GB, this should be it.

In stock form, PowerColor's R9 390X Devil manages a significant lead over the Fury, averaging approximately 10 FPS more. It goes without saying that the card we're reviewing handles these settings with ease at 1080p.

With the resolution cranked up to 1440p, the gap between PowerColor's Devil R9 390X and Sapphire's R9 Fury Tri-X disappears. While the Devil continues to lead, the difference is negligible. The Fiji GPU and its 4GB of HBM are not yet a bottleneck.

At 3840x2160, HBM's superior bandwidth allows the Fury to extend its advantage over the Devil R9 390X. More capacity doesn't seem to help the Grenada GPU. Regardless, the minimum frame rates from both cards are too low for this to be a viable setup. You'd want to scale back on the detail settings or add hardware.

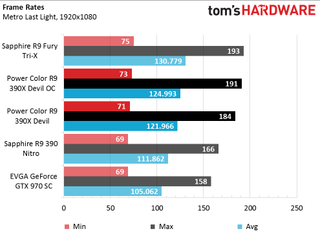

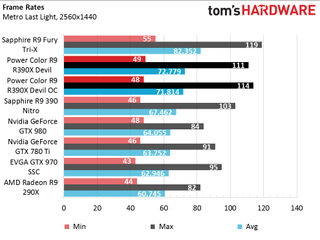

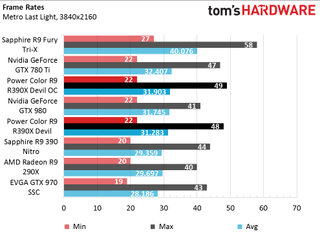

Metro: Last Light

Running Metro: Last Light at 1080p yields well over 100 FPS from the entire field.

For gamers playing on QHD displays, the Devil R9 390X is an excellent option. We measured frame rates in the low 70s, with minimums that remain north of 40. The Fury card enjoys a sizable lead over PowerColor's card, while Nvidia's GeForce GTX 980 trails by nearly 8 FPS.

The Devil R9 390X doesn't do as well at 4K compared to the Fury, which isn't constrained by its 4GB of HBM, and seems to really benefit from its extra shading resources. Interestingly, the GeForce GTX 980 and 780 Ti are in the same league as PowerColor's board.

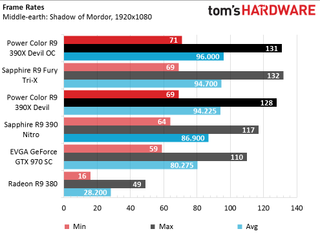

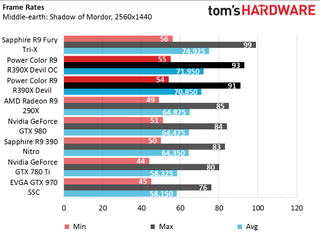

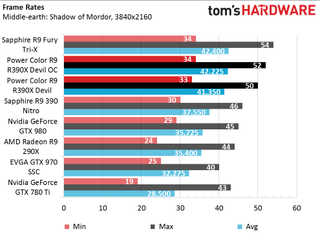

Middle-earth: Shadow of Mordor

In stock form, PowerColor's Devil R9 390X handles 1920x1080 as well as Sapphire's Fury Tri-X; overclocking pushes it ahead of the Fiji-based board.

Running at 2560x1440, the Devil loses its edge over the Fury, which assumes the lead. Still, the Devil R9 390X is best suited to this resolution. You pay less money for playable frame rates at QHD.

Another resolution bump takes us to 4K, where the Devil catches back up to Sapphire's Fury. Prior to overclocking, the Devil R9 390X lands within one frame per second of the HBM-equipped board on average. After tuning PowerColor's card, they're almost indistinguishable.

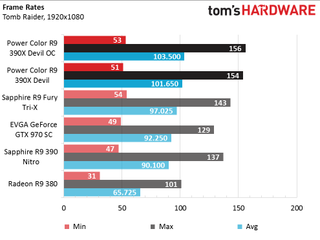

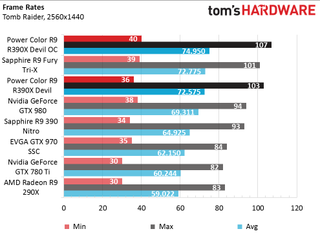

Tomb Raider

As you can see from the graphs, our short benchmark run in Tomb Raider features highly variable frame rates. PowerColor's Devil R9 390X outpaces the Fury by an average of 4 FPS, though the Fury doesn't dip as low as the Devil.

At 2560x1440, the difference between 390X and Fury closes (though overclocked settings confer an advantage to PowerColor's card). With an average frame rate in the 70s, Tomb Raider can easily be considered playable, despite minimums that drop into the 30s.

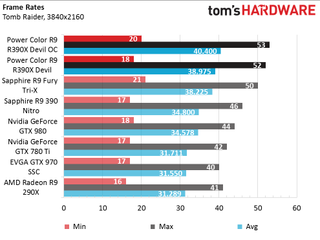

If you want a decent frame rate at 4K, you'll need to lower this game's detail settings. Though the Devil R9 390X manages to pass the Fury, neither card touches 60 FPS on the high side. Averages approaching 40 aren't bad, but those minimums are jarring, to be sure.

Kevin Carbotte is a contributing writer for Tom's Hardware who primarily covers VR and AR hardware. He has been writing for us for more than four years.

utroz: Hmm.. So a pre almost max OCed card with watercooling.. Only around 6 months late (or more if you count the 290X 8GB as basically the same as a 390X). At this point if you have a decent card wait for 16nm..

BrandonYoung: An impressive result by AMD and PowerColor! I'm looking forward to future (more modern) releases by these companies, hoping to bring more competition into the once stagnant GPU realm!

I'm aware this is a review of the Devil R9, yet I'm curious why the GTX 980 was mentioned in the noise graph, but omitted in the temperature graph. I get the feeling it's because it will show the card was throttling based on thermals, helping describe its performance in the earlier tests. This is strictly speculation on my behalf, however, and highly biased, as I currently own a 980.

ryguystye: Does the pump constantly run? I wish there was a hybrid liquid/air cooler that ran the fan only when idle, then turned on the water pump for more intensive tasks. I don't like the noise of water pumps when the rest of my system is idle.

fil1p, replying to ryguystye: "Does the pump constantly run? I wish there was a hybrid liquid/air cooler that ran the fan only when idle, then turned on the water pump for more intensive tasks. I don't like the noise of water pumps when the rest of my system is idle."

The pump has to run, even at low RPMs, otherwise the card would overheat. The waterblock itself is generally not enough to dissipate heat. The waterblock simply transfers the heat to the water and the radiator does almost all of the heat dissipation. If the pump is off there is no water flow through the radiator, no water flow will mean heat from the waterblock is not dissipated, causing the water in the waterblock to heat up and the GPU to overheat.

elho_cid: What's the point of testing on Windows 8.1? I mean, there was enough time to upgrade to Windows 10 already... It was shown several times that the new W10 often provides a measurable performance advantage.

Sakkura: To be fair, overclocking headroom varies from GPU to GPU. Maybe you just got a dud, and other cards will overclock better.

Cryio: Why test on 15.7? Seriously, that's like 6 drivers old. AMD stated they will release WHQL just occasionally, with more Beta throughout the year. You're doing them a disservice benching only "official" drivers.

Nvidia's latest ... dunno, 12 drivers in the last 3 months were all official and most of them broke games or destroyed performance in a lot of other games.

kcarbotte, replying to Cryio:

"Why test on 15.7? Seriously, that's like 6 drivers old. AMD stated they will release WHQL just occasionally, with more Beta throughout the year. You're doing them a disservice benching only "official" drivers.

"Nvidia's latest ... dunno, 12 drivers in the last 3 months were all official and most of them broke games or destroyed performance in a lot of other games."

At the time this review was written, it was not that old. As mentioned in the article, we first got this card over the summer. The tests were done a couple of months ago, and at the time they were done with the driver that PowerColor suggested after we had problems with the first sample.

Replying to BrandonYoung:

"An impressive result by AMD and PowerColor! I'm looking forward to future (more modern) releases by these companies, hoping to bring more competition into the once stagnant GPU realm!

"I'm aware this is a review of the Devil R9, yet I'm curious why the GTX 980 was mentioned in the noise graph, but omitted in the temperature graph. I get the feeling it's because it will show the card was throttling based on thermals, helping describe its performance in the earlier tests. This is strictly speculation on my behalf, however, and highly biased, as I currently own a 980."

The temperature of the 980 was omitted because the ambient temperature of the room was 3 degrees cooler when that card was tested, which affected the results. I didn't have the GTX 980 in the lab to redo the tests with the new sample. I had the card when the defective 390X arrived for the roundup, but by the time the replacement arrived, the 980 had been loaned to another lab.

Rather than delay the review even longer, I opted to omit the 980 from the test.

It had nothing to do with hiding any kind of throttling result. If that were found, we wouldn't sweep it under the rug.

Replying to elho_cid: "What's the point of testing on Windows 8.1? I mean, there was enough time to upgrade to Windows 10 already... It was shown several times that the new W10 often provides a measurable performance advantage."

We have not made the switch to Windows 10 on any of our test benches yet. I don't make the call about when that happens and I don't know the reasons behind the delay.

Cryio: Well then, sir @kcarbotte, I can't wait until you guys get to review some AMD GPUs on Windows 10 with the new Crimson drivers and some Skylake i7s thrown into the mix!

kcarbotte, replying to Cryio: "Well then, sir @kcarbotte, I can't wait until you guys get to review some AMD GPUs on Windows 10 with the new Crimson drivers and some Skylake i7s thrown into the mix!"

You and me both!

I have a feeling that the Crimson drivers have better gains in Win10 than they do in older OSes.