PowerColor Devil R9 390X Review

Closed-loop liquid cooling isn't just for the Fury X. PowerColor uses a similar setup for its Devil R9 390X 8GB, but how does the fancier thermal solution affect performance, cooling and noise?


Overclocking, Noise, Temperature & Power

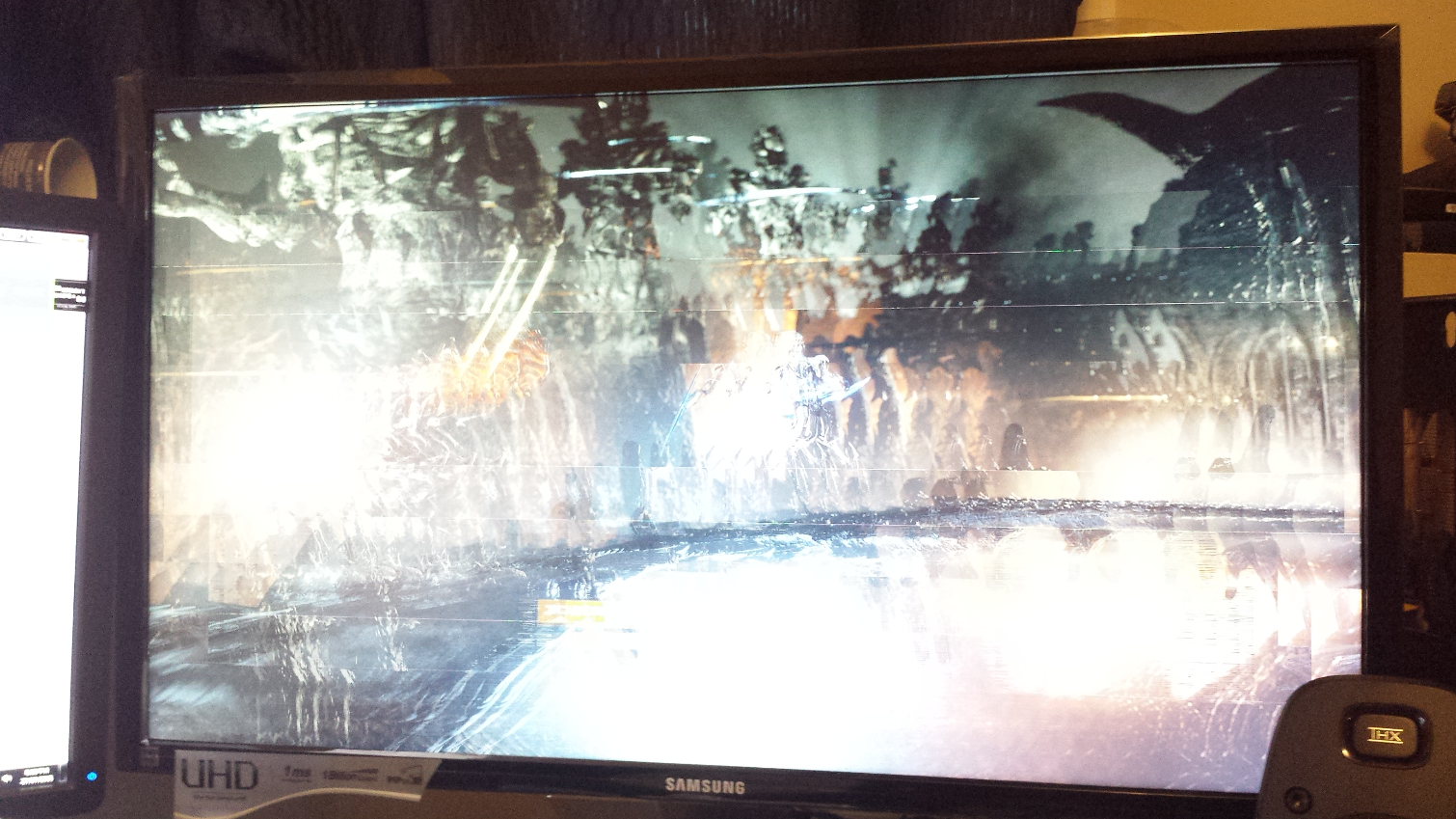

Before I get into our overclocking experience, I need to mention that this isn't our first sample of PowerColor's Devil R9 390X. A pre-production card landed in our lab several months ago. Unfortunately, the early sample arrived with a pre-production BIOS that affected its clock rate (it wouldn't run at the advertised frequencies, causing display artifacts so severe that the test platform was unusable).

PowerColor sent a second sample, which arrived with the same BIOS, but the company also provided updated firmware to flash to the card. After we wrote that file to the board, significant display issues persisted. PowerColor suggested a possible problem with the installed software and recommended running Display Driver Uninstaller. Once that utility removed all remnants of AMD's and Nvidia's driver packages, we reinstalled Catalyst 15.7.1. Sadly, the distorted display remained.

While troubleshooting, I determined that our DisplayPort cable was the source of the problem. I use a Samsung U28D590D 4K display for testing, and for whatever reason, its bundled cable doesn't play nice with PowerColor's graphics card.

Overclocking

Nothing beyond the factory-stated specifications is ever guaranteed, but manufacturers don't build extreme cooling solutions without expecting their cards to be pushed to the limit. So, once we had a stable card, overclocking it was a priority. For science.

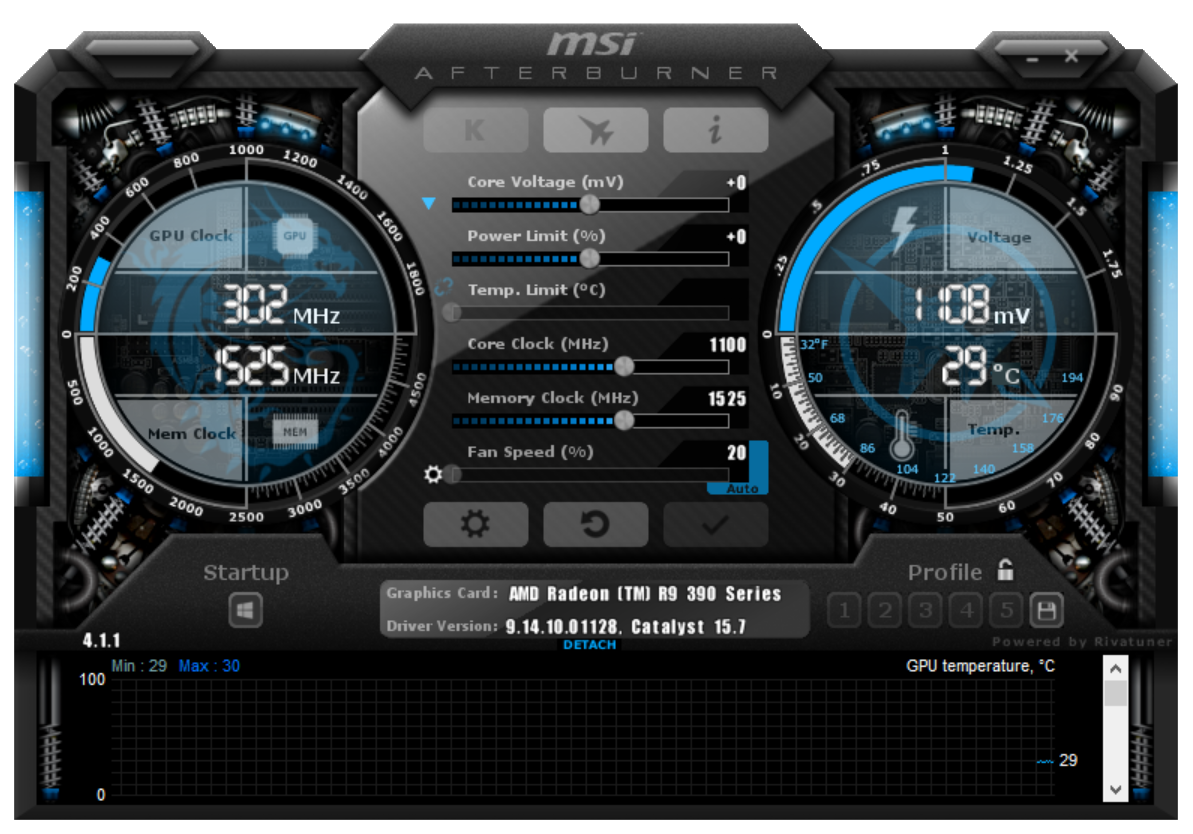

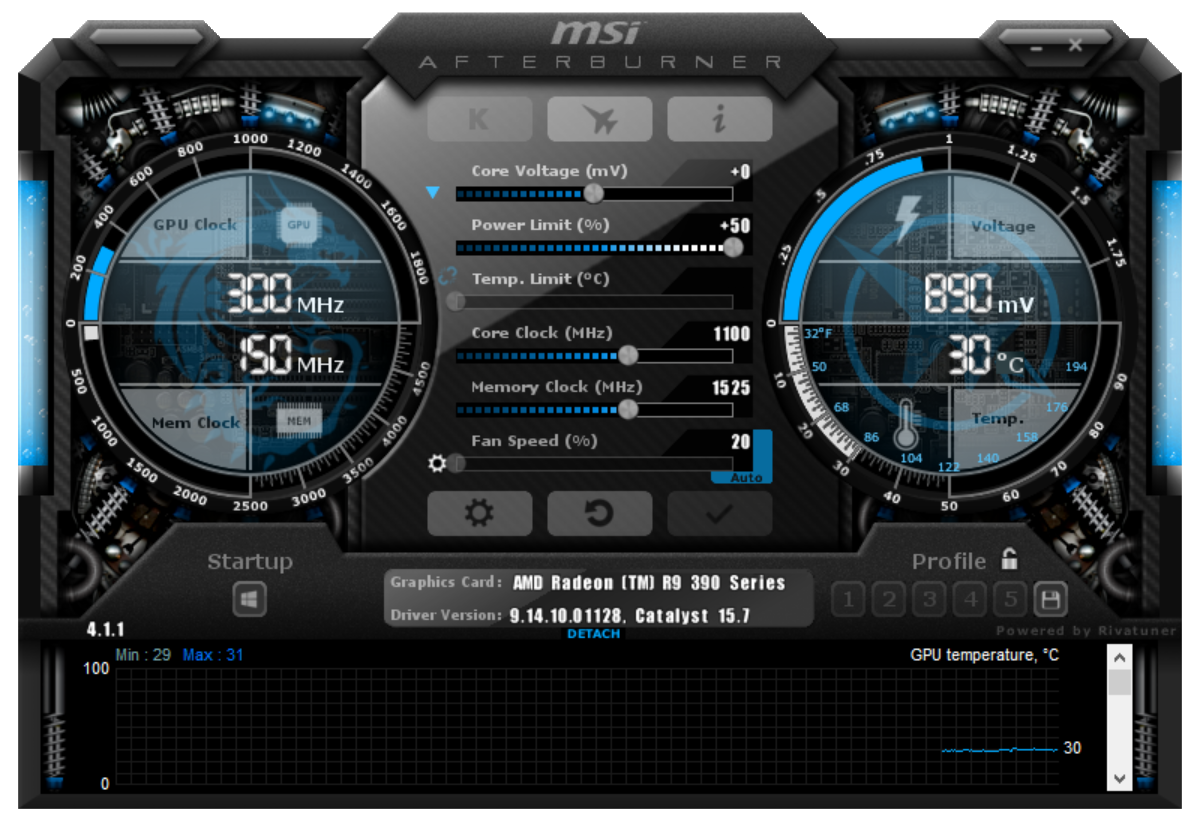

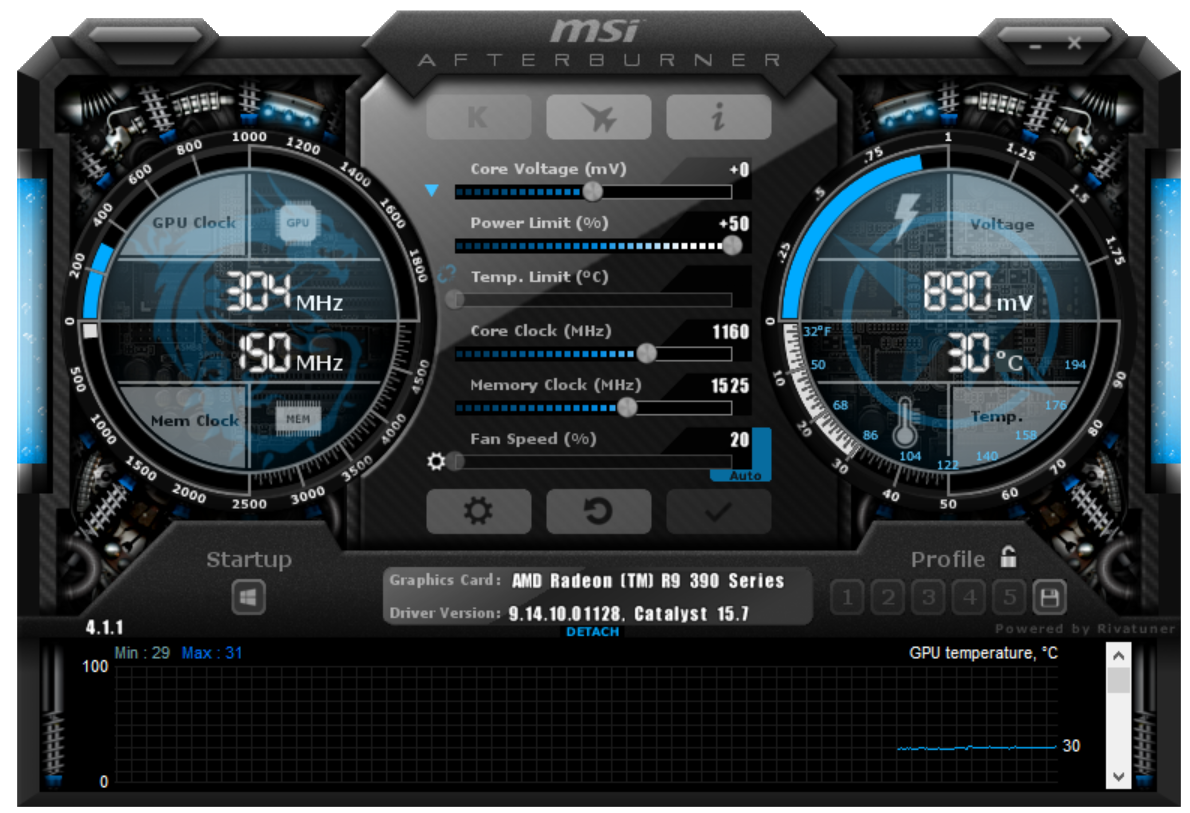

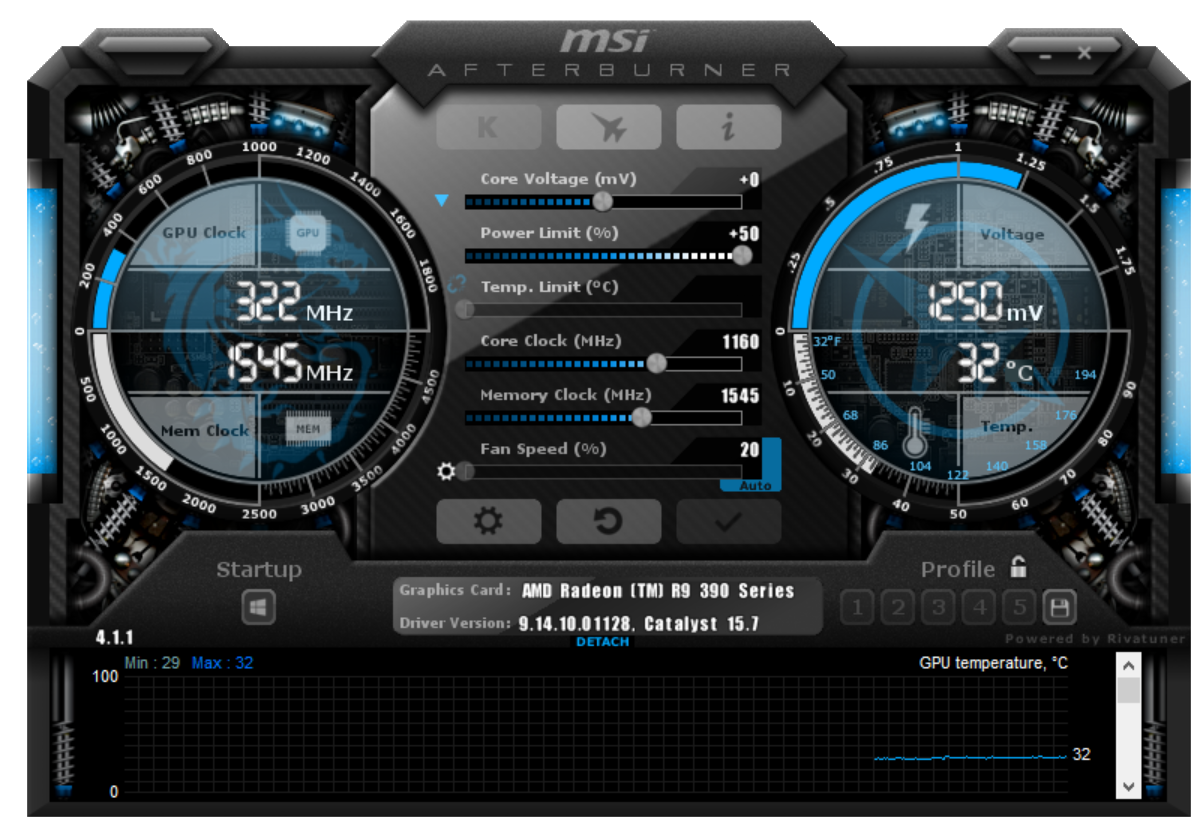

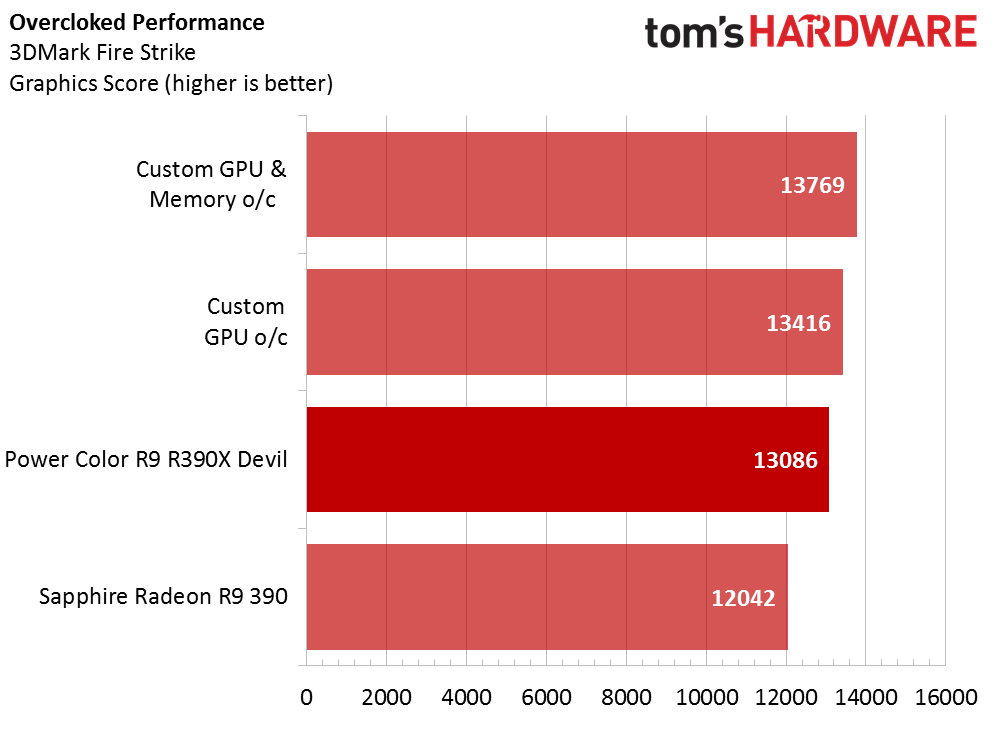

First, I ran 3DMark to get a baseline score for the Devil R9 390X. Strangely, it came in lower than the score I recorded for Sapphire's Nitro R9 390 earlier this summer. Next, I opened MSI Afterburner, maxed out the power limit, and ran another baseline test to make sure the higher limit didn't cause any instability. The score came back the same, and I observed no on-screen artifacts.

Raising the core clock in 10MHz increments, I found that the GPU topped out at 1160MHz. That wasn't a particularly large increase, but it was free performance nonetheless. Once artifacts started showing up, I reset the card to its default frequency to make sure no damage was done to the GPU. During the following test, however, I observed significant corruption, so I halted the benchmark immediately.

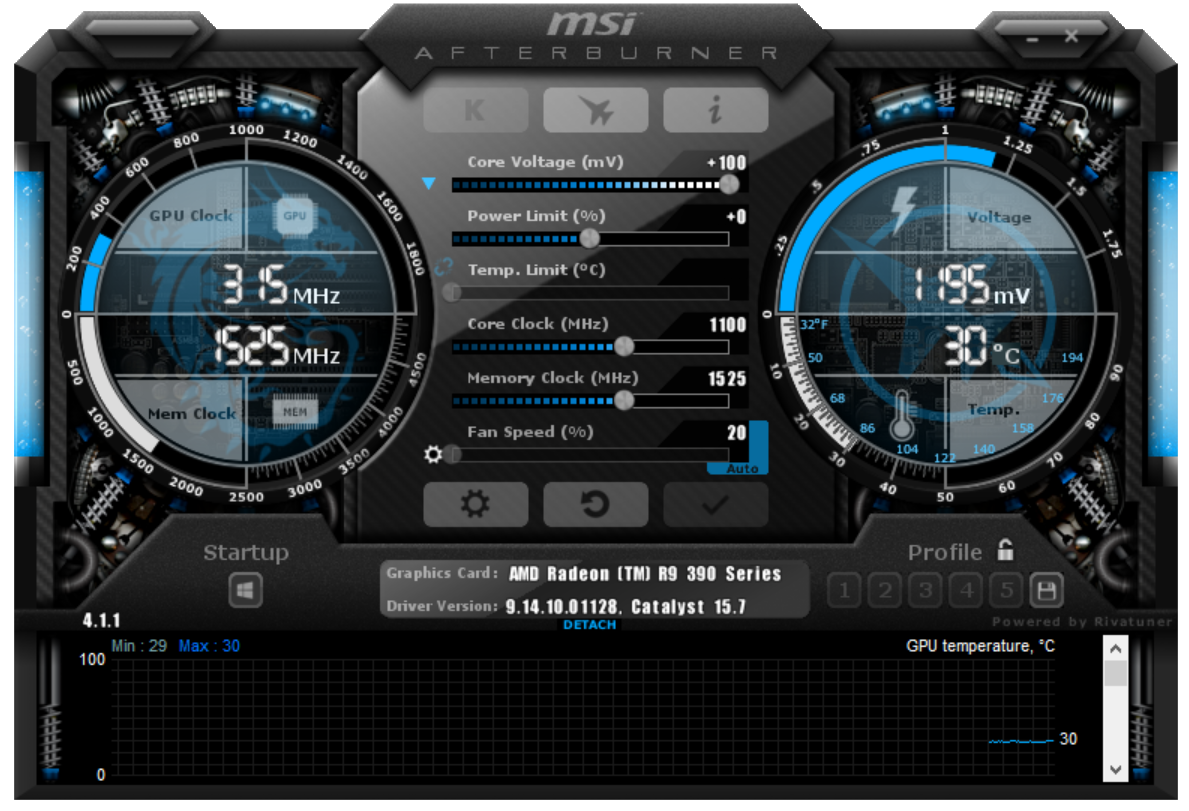

Checking the settings in Afterburner, I discovered that resetting to default had actually raised the core voltage by 100mV. The pre-release BIOS sent to us seems to have a bug. Fortunately, this did not cause any permanent damage, and lowering the voltage restored stability. I later found that re-seating the graphics card also fixes the voltage issue.


Further testing confirmed that the highest stable clock rate remained 1160MHz. A small voltage increase might help, but after what I had just gone through, I didn't want to push my luck with more juice.
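The core-clock search described above (step up in fixed 10MHz increments until artifacts appear, then keep the last clean setting) amounts to a simple linear search. The sketch below is a hypothetical illustration rather than a tool used for this review: `find_max_stable_clock` and its parameters are invented names, `is_stable` stands in for running a benchmark and watching for artifacts, and the 1000MHz starting point in the demo is arbitrary, not the card's factory clock.

```python
from typing import Callable


def find_max_stable_clock(
    start_mhz: int,
    step_mhz: int,
    ceiling_mhz: int,
    is_stable: Callable[[int], bool],
) -> int:
    """Linear search for the highest clock that passes a stability check.

    Hypothetical sketch: `is_stable` stands in for a benchmark run plus a
    visual check for artifacts; it is not part of any real tool's API.
    """
    best = start_mhz  # assume the starting (default) clock is known-good
    clock = start_mhz + step_mhz
    # Keep stepping up while the next setting passes and stays under the cap.
    while clock <= ceiling_mhz and is_stable(clock):
        best = clock
        clock += step_mhz
    # The first failing step ends the search; in practice the tester should
    # then restore default settings (and double-check voltage) before going on.
    return best


if __name__ == "__main__":
    # Simulated card that artifacts above 1160MHz; the 1000MHz start and
    # 1300MHz cap are arbitrary values chosen only for illustration.
    def simulated_card(mhz: int) -> bool:
        return mhz <= 1160

    print(find_max_stable_clock(1000, 10, 1300, simulated_card))
```

Run as a script, this prints 1160. Stepping up gently from a known-good setting is arguably safer than bisecting across a wide range, since each probe costs a full benchmark run and a failed one can corrupt the display, as happened here.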

The memory on our sample came clocked at 1525MHz, and this particular board didn't take kindly to additional frequency: beyond 1545MHz, the on-screen graphics started breaking up into green squares. Frankly, these weren't the results I was hoping for from such an aggressively cooled card.
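For scale, the memory headroom in percentage terms is simple arithmetic on the two clock rates quoted above, treating 1545MHz as the last clean setting:

$$
\frac{1545\,\text{MHz} - 1525\,\text{MHz}}{1525\,\text{MHz}} = \frac{20}{1525} \approx 1.3\%
$$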

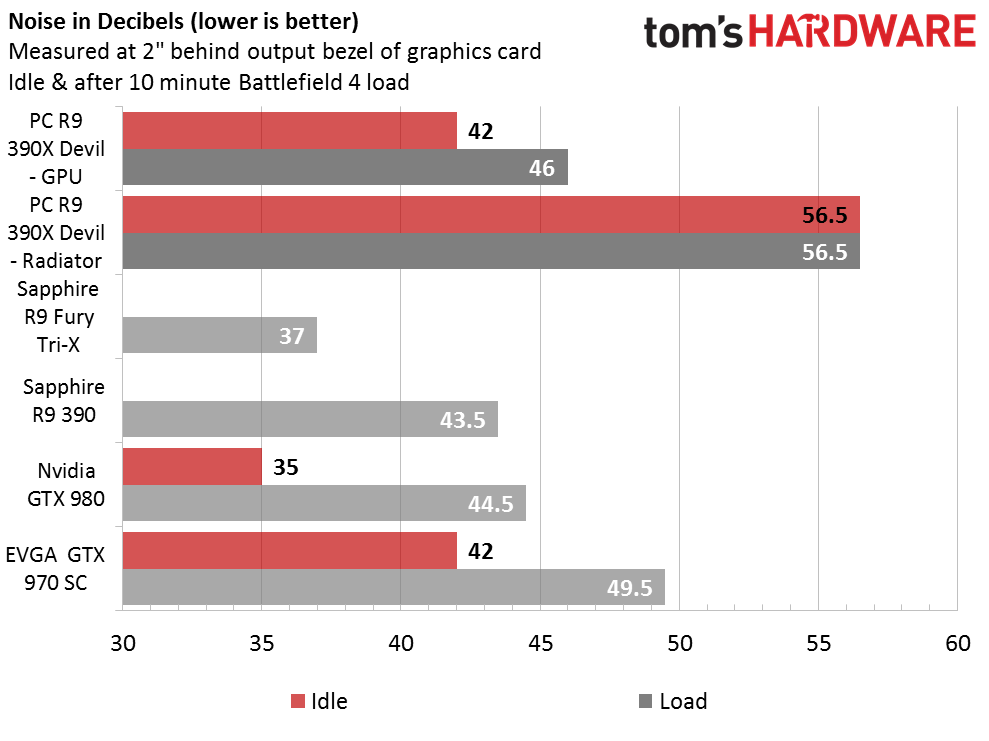

Noise

We typically report a noise measurement taken from the rear of the card at a distance of two inches. In this instance, however, I felt it necessary to show two readings, because the first one doesn't tell the whole story. It would be misleading to show only the noise level directly behind a water-cooled GPU when most of the sound comes from the radiator.

Readings from the back of the card fall within the same range as much of the competition, though, unlike many other cards, the Devil R9 390X doesn't stop its 12cm fan at idle.

Measurements at the radiator are much louder. Its fan moves a lot of air, but it generates significant noise in the process. A reading taken next to the graphics card inside the case was louder still: during gameplay, the decibel meter registered 62.5 dB in front of the fan on the card. From two feet away, I still measured 37 dB.

Temperature

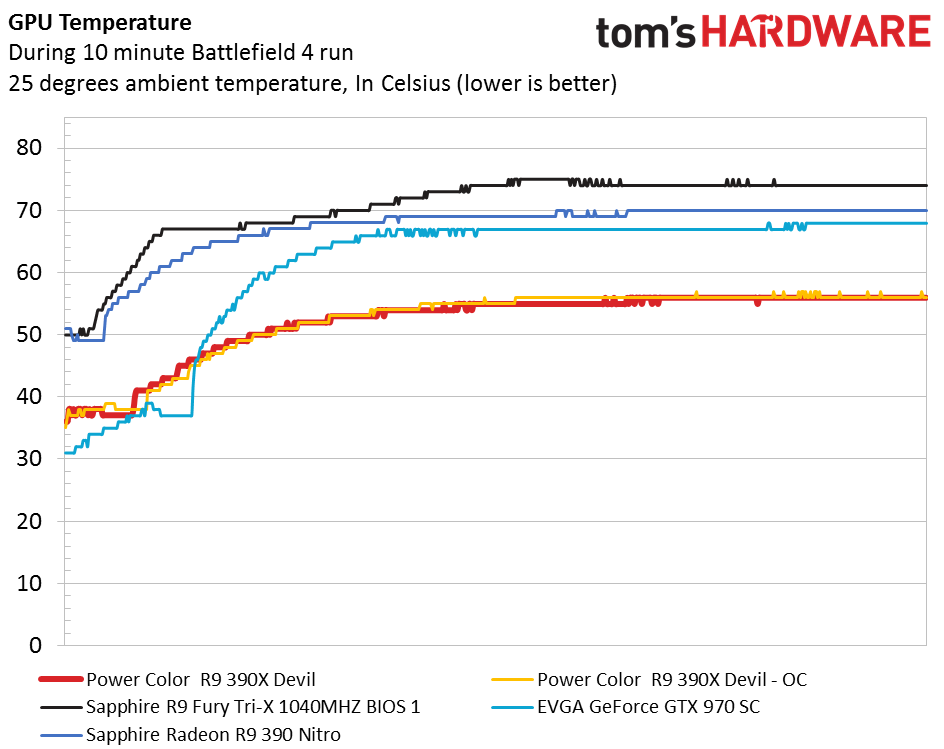

There was no doubt that the Devil R9 390X would be among the coolest cards we've tested. A closed-loop cooler dissipates the GPU's substantial heat more effectively than a conventional heat sink and fan.

The graph confirms our hypothesis: the closed-loop cooler does its job well. Even after 10 minutes of full load, the GPU is only a few degrees warmer than its starting point. The R9 390X settled at around 55°C, even after overclocking.

Power Consumption

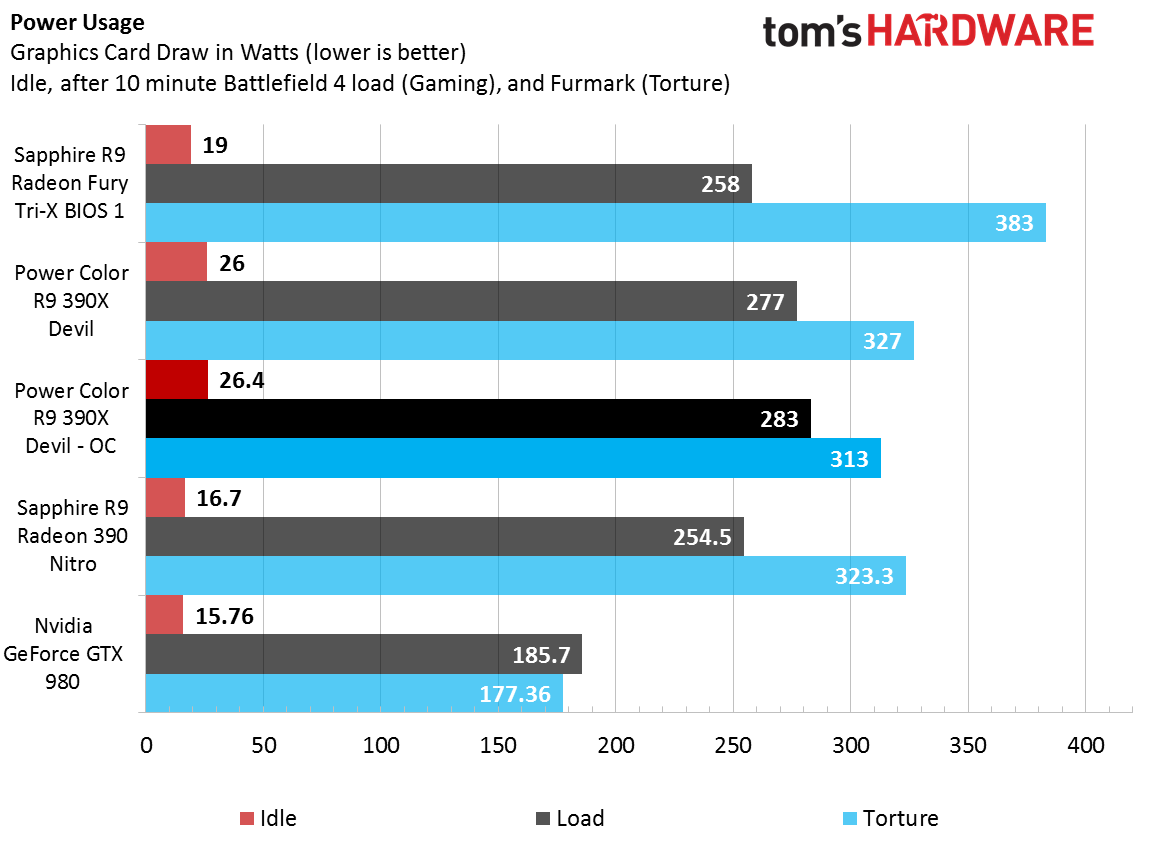

It's no surprise that AMD GPUs are power-hungry. PowerColor's Devil R9 390X takes one of AMD's highest-end processors and overclocks it. The loop also includes a pump, which draws power as well. Clearly, this was never meant to be an efficient graphics card.

And there it is: the R9 390X tops the chart. Its peak power draw in the torture test isn't as high as the Fury's, but under a more realistic gaming load, the R9 390X uses almost 20W more. Even its idle power draw is 10W higher than the R9 390's. With the overclock applied, the spread is even larger.



Kevin Carbotte is a contributing writer for Tom's Hardware who primarily covers VR and AR hardware. He has been writing for us for more than four years.


utroz: Hmm... So it's a pre-overclocked, nearly maxed-out card with water cooling, and only around six months late (or more if you count the 290X 8GB as basically the same as a 390X). At this point, if you have a decent card, wait for 16nm...

BrandonYoung: An impressive result by AMD and PowerColor! I'm looking forward to future (more modern) releases from these companies, hoping they bring more competition to the once-stagnant GPU realm!

I'm aware this is a review of the Devil R9, yet I'm curious why the GTX 980 was included in the noise graph but omitted from the temperature graph. I get the feeling it's because it would show the card was throttling based on thermals, which would help explain its performance in the earlier tests. This is strictly speculation on my part, however, and highly biased, as I currently own a 980.

ryguystye: Does the pump constantly run? I wish there was a hybrid liquid/air cooler that ran the fan only when idle, then turned on the water pump for more intensive tasks. I don't like the noise of water pumps when the rest of my system is idle.

fil1p:
> Does the pump constantly run? I wish there was a hybrid liquid/air cooler that ran the fan only when idle, then turned on the water pump for more intensive tasks. I don't like the noise of water pumps when the rest of my system is idle.

The pump has to run, even at low RPM; otherwise, the card would overheat. The waterblock by itself generally can't dissipate the heat: it simply transfers heat to the water, and the radiator does almost all of the dissipating. With the pump off, no water flows through the radiator, so heat from the waterblock isn't carried away; the water in the waterblock heats up, and the GPU overheats.


elho_cid: What's the point of testing on Windows 8.1? I mean, there's been enough time to upgrade to Windows 10 already... It has been shown several times that Windows 10 often provides a measurable performance advantage.

Sakkura: To be fair, overclocking headroom varies from GPU to GPU. Maybe you just got a dud, and other cards will overclock better.

Cryio: Why test on 15.7? Seriously, that's like 6 drivers old. AMD stated they will release WHQL drivers only occasionally, with more betas throughout the year. You're doing them a disservice by benching only "official" drivers.

Nvidia's latest... dunno, 12 drivers in the last 3 months were all official, and most of them broke games or destroyed performance in a lot of other games.

kcarbotte:
> Why test on 15.7? Seriously, that's like 6 drivers old. AMD stated they will release WHQL drivers only occasionally, with more betas throughout the year. You're doing them a disservice by benching only "official" drivers.
>
> Nvidia's latest... dunno, 12 drivers in the last 3 months were all official, and most of them broke games or destroyed performance in a lot of other games.

At the time this review was written, it was not that old. As mentioned in the article, we first got this card over the summer. The tests were done a couple of months ago, using the driver that PowerColor suggested after we had problems with the first sample.

> An impressive result by AMD and PowerColor! I'm looking forward to future (more modern) releases from these companies, hoping they bring more competition to the once-stagnant GPU realm!

> I'm aware this is a review of the Devil R9, yet I'm curious why the GTX 980 was included in the noise graph but omitted from the temperature graph. I get the feeling it's because it would show the card was throttling based on thermals, which would help explain its performance in the earlier tests. This is strictly speculation on my part, however, and highly biased, as I currently own a 980.

The temperature of the 980 was omitted because the ambient temperature of the room was 3 degrees cooler when that card was tested, which affected the results. I didn't have the GTX 980 in the lab to redo the tests alongside the new sample. I had the card when the defective 390X arrived for the roundup, but by the time the replacement came, it was on loan to another lab.

Rather than delay the review even longer, I opted to omit the 980 from the temperature test.

It had nothing to do with hiding any kind of throttling result. If we had found throttling, we wouldn't sweep it under the rug.

> What's the point of testing on Windows 8.1? I mean, there's been enough time to upgrade to Windows 10 already... It has been shown several times that Windows 10 often provides a measurable performance advantage.

We have not made the switch to Windows 10 on any of our test benches yet. I don't make the call about when that happens, and I don't know the reasons behind the delay.

Cryio: Well then, sir @kcarbotte, I can't wait until you guys get to review some AMD GPUs on Windows 10 with the new Crimson drivers and some Skylake i7s thrown into the mix!

kcarbotte:
> Well then, sir @kcarbotte, I can't wait until you guys get to review some AMD GPUs on Windows 10 with the new Crimson drivers and some Skylake i7s thrown into the mix!

You and me both!

I have a feeling that the Crimson drivers show bigger gains in Win10 than they do in older OSes.