Virtual Reality Basics

Join us as we take a quick look at the most prominent players, technologies and terminology in the modern world of virtual reality.

Just a few short years ago, practical virtual reality was limited to the confines of an expensive academic lab or secret military installation. But with the discovery that dirt-cheap mobile panels could be repurposed for high-quality VR came a flurry of head-mounted displays (HMDs), smartphone-holding clamshells and controllers.

Before a single VR product has hit store shelves, consumers and content makers have been battered with innovation, specs, claims and perhaps a touch of witchcraft. Thanks to the multitude of announcements at the Game Developers Conference and, most recently, E3 Expo, things got even more confusing.

We're about to sort it all out for you with a quick summary of what's out there and how it all ties together. We'll define some of the terminology, and separate a little fact from fiction. And, in the end, hopefully you'll have a better idea of how to compare all of these offerings when they become, to borrow a phrase, a literal reality.

Presence

This is what everyone is striving for: the point of being truly convinced you are in the environment presented to you. It's not about having a true-to-life representation (photo realism). Rather, it's about that moment when you are standing on a virtual ledge, and while your mind says, "It's OK to step forward," your body has a very different reaction.

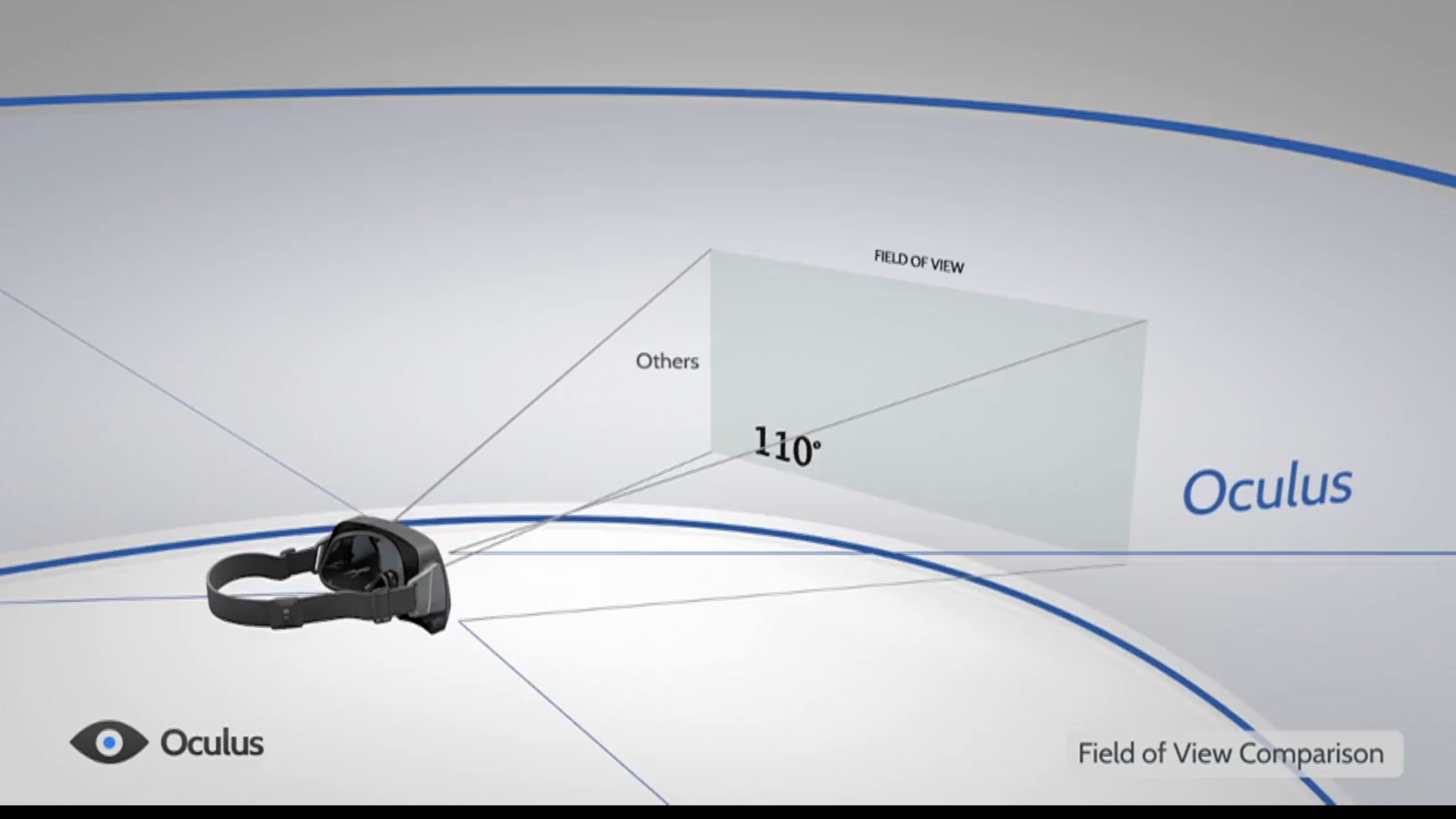

Field of View (FOV)

Arguably the biggest breakthrough with consumer-grade VR has been in field of view (FOV), or the actual viewable area. On average, we are able to see about 170 degrees of FOV naturally. Before the Oculus Rift Kickstarter, most consumer-grade HMDs—like the Vuzix VR920 and Sony HMZ-T1—had an FOV of 32 to 45 degrees. These slivers were regularly touted as making you think you were in a movie theater with a big screen in front of you, but it never really worked out that way.

In contrast, modern consumer-grade HMD prototypes display 90 to 120 degrees. While that's not the full 170 degrees we can see naturally, a 100+ degree FOV is plenty to make what we experience in VR convincing, as demonstrated by products like Sony's PlayStation VR (formerly Project Morpheus) and Oculus' anticipated consumer release (CV1).

Latency

Latency refers to the delay between when you move your head and when you see the physical updates on the screen. Richard Huddy, AMD's chief gaming scientist, says a latency of 11 milliseconds or less is necessary for interactive games, though occasionally, moving around in a 360-degree VR movie is acceptable at 20ms. Nvidia's documentation and interviews promote 20ms, though this isn't an indication of its hardware capability; rather it's just a benchmark.

Head Tracking

There are two categories of head tracking.

Head tracking based on orientation is the most basic form, and it detects only the direct rotations of your head: left to right, up to down and a roll similar to a clock rotation. For now, this is the only form of head tracking available for mobile products like Samsung's Gear VR, ImmersiON-VRelia's GO HMD, Google Cardboard and most, if not all, of the remaining mobile VR devices. This type of head tracking also was used in the Oculus Rift DK1.

Another form of head tracking, called positional tracking, includes the rotations of your head as well as the movements and related translations of your body. It does not track your limbs but rather the HMD as it is positioned in relation to your body—for example, if you were to sway side to side or kneel forward.
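One way to picture the difference between the two categories is by the data each tracker reports. The Python sketch below is purely illustrative: the class names, field names and units are our own assumptions and do not come from any vendor SDK.

```python
from dataclasses import dataclass


@dataclass
class OrientationPose:
    """Orientation-only tracking: three rotations, in degrees."""
    yaw: float    # turning left/right
    pitch: float  # looking up/down
    roll: float   # tilting, like a clock hand


@dataclass
class PositionalPose(OrientationPose):
    """Positional tracking: the same rotations plus translation, in meters."""
    x: float = 0.0  # sway left/right
    y: float = 0.0  # crouch or stand
    z: float = 0.0  # lean forward/back


def degrees_of_freedom(pose):
    """Count the independent values the tracker reports."""
    return len(vars(pose))


print(degrees_of_freedom(OrientationPose(10.0, 0.0, 0.0)))
print(degrees_of_freedom(PositionalPose(10.0, 0.0, 0.0, x=0.1)))
```

An orientation-only tracker reports three values, while a positional tracker reports six, which is why the two are often called 3DOF and 6DOF tracking.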

Positional tracking is achieved in multiple ways:

- Oculus Rift DK2 and Crescent Bay—and now, CV1—use an infrared camera combined with magnetometers and gyroscopes. The camera picks up reflectors on the HMD and extrapolates the positional data from how they are positioned. Oculus is calling its tracking system Constellation.

- Sixense developed an experimental add-on for Samsung Gear VR that uses magnetic fields to detect how an object is positioned, and therefore managed to develop ad-hoc positional tracking for a device that didn't otherwise have it.

- Vrvana's Totem prototype uses a pair of cameras and tracks markers or structures in a room as a basis for determining how the HMD is physically positioned. While the tracking still needs work, we saw a prototype at E3 that is based on dual cameras and also works in a markerless environment.

- HTC/Valve's Vive HMD uses a "Lighthouse" technology (more on that later).

There are other options, but those are just a few popular examples.

Resolution

Beware! There is more to advertised resolution than meets the eye (get it?). There are two types of HMDs being promoted in the market today. The most popular type has a single LCD panel whose resolution is divided in half, right down the middle.

Therefore, a Sony PlayStation VR (PSVR) resolution that's promoted as 1920x1080 is actually 960x1080 pixels per eye. The Oculus Rift DK1 (1280x800 or 640x800 per eye) and DK2 (1920x1080 or 960x1080 per eye) operate under the same principles.

Other systems, like ImmersiON-VRelia, are working toward higher resolutions by using two LCD panels instead of one. So, a 1080p panel is actually 1920x1080 per eye. The Oculus CV1's resolution is 2160x1200, or 1080x1200 per panel.
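The per-eye arithmetic is easy to check for yourself. The short Python sketch below is only an illustration; the function name `per_eye_resolution` and its `shared_panel` flag are our own inventions, not part of any HMD's software.

```python
def per_eye_resolution(width, height, shared_panel=True):
    """Return (width, height) in pixels available to each eye.

    shared_panel=True: the advertised resolution covers both eyes
    (one panel split down the middle, or a combined figure for two panels).
    shared_panel=False: the advertised resolution is already per eye.
    """
    if shared_panel:
        return width // 2, height
    return width, height


for name, w, h in [("Oculus Rift DK1", 1280, 800),
                   ("Oculus Rift DK2", 1920, 1080),
                   ("Oculus CV1", 2160, 1200)]:
    print(name, per_eye_resolution(w, h))
```

Passing `shared_panel=False` models a dual-panel design whose advertised figure already applies to each eye.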

Input

With all of the attention on the display side of VR, input options were surprisingly lacking until GDC and, later, E3. When we say "input," we really mean controllers designed specifically for VR and interaction.

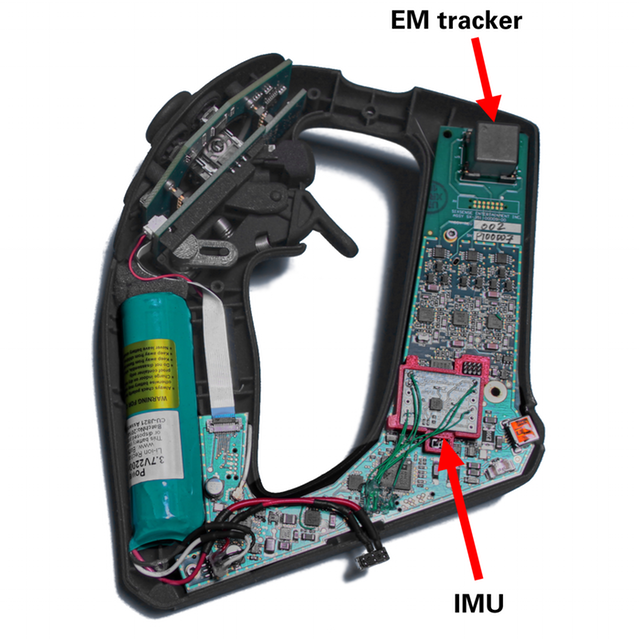

Just as Sixense added positional tracking to Samsung Gear VR using magnetic fields, the company's STEM wireless controllers are also based on magnetic fields. This is an old-school technology used in VR CAVEs in decades past. A CAVE (cave automatic virtual environment) is basically a virtual-reality room based on projection or, in rare cases, large display panels. A minimum CAVE is considered two walls and a floor, but can be as advanced as six surrounding walls at a large scale. They are used most frequently for simulations and research.

The earliest forms of interaction in virtual-reality CAVEs were magnetically detected gesture devices and controllers. The challenge is, CAVEs are usually based on a big, metallic frame, and similar to Superman's aversion to Kryptonite, magnetic fields don't react well to metal—which is why CAVEs ended up focusing on optical camera tracking in the end.

According to Amir Rubin, CEO of Sixense, the company's new STEM controllers have overcome the challenges associated with magnetic fields interacting with metal frames. With the assistance of an inertial measurement unit (IMU) inserted into each STEM component, Sixense correlates data between the magnetic field and the IMUs to maintain consistent accuracy. In all of the prepared demos we have seen, the technology worked well.

It's unknown if this is a finalized direction, but Oculus VR's offering is the Oculus Touch or half-moon controllers. They are a cross between Oculus' infrared tracking, which is already included in its CV1 and Crescent Bay, and controllers designed to determine how the wearer grasps and gestures. Unlike glove-based technologies that try to determine the exact positioning of the user's fingers and grasp, they emulate a similar experience by detecting the on-off status of different buttons and capacitive sensors as they relate to the way the devices are held. While I struggled with it initially, other users have found it easier to learn, and it's definitely a far better option for VR than a standard Xbox controller on its own.

HTC and Valve went the laser-beam route with the Vive HMD and controllers. With the technology, dubbed "Lighthouse," you mount two laser-emitting base stations on the ceiling, on opposite ends of the room. The HMD and controllers are covered with nodule sensors.

The nodule sensors are strategically placed along the shape of the HMD or device—or whatever needs to be tracked. As the lasers flash on these sensors, the lighthouse system calculates where and how the device is positioned relative to the base stations based on the timing of the laser flashes. This knowledge of how the nodules are placed, combined with the detection of how they are positioned when the lasers flash on them, provides the information needed for instantaneous positional head tracking and input control.
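To see how timing alone can yield position data, consider this simplified Python sketch. It is not Valve's implementation. It assumes, hypothetically, that each base station emits a sync flash and then sweeps a laser line at a constant rate, and the 60-rotations-per-second default is an illustrative value rather than a published spec.

```python
def sweep_angle_degrees(t_sync, t_hit, rotations_per_second=60.0):
    """Angle of a sensor from the sweep's start, given two timestamps in seconds.

    Assumes (hypothetically) a sync flash at t_sync, followed by a laser line
    rotating at a constant rate that reaches the sensor at t_hit.
    """
    if t_hit < t_sync:
        raise ValueError("the laser cannot hit before the sync flash")
    period = 1.0 / rotations_per_second
    fraction = ((t_hit - t_sync) % period) / period
    return 360.0 * fraction


# A sensor hit a quarter of a rotation after the sync flash.
print(sweep_angle_degrees(0.0, 1.0 / 240.0))
```

Each sensor's angle gives one constraint on where the device can be. With enough sensors at known positions on the device, a solver can recover the full position and orientation.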

To make "The Walking Dead" in VR work at E3, Starbreeze Studios' experimental StarVR HMD prototype is based on two 2.5K display panels, fresnel lenses and a whopping 210-degree field of view. The company's tracking is made possible by recognizable patterns called fiduciary markers that are pasted onto the HMD and any objects that need tracking. An external camera detects the placement of these markers and extrapolates the HMD and object positioning.

Emmanuel Marquez, chief technology officer at Starbreeze Studios, said the company would like its HMD's resolution to be as high as 8K, and he expects this will be possible within the next five years.

ANTVR's advanced prototype works in a completely different way. In this case, the floor is dotted with retro-reflective material, and the HMD's downward-facing on-board camera uses the mat as a positional tracking reference.

Sony's Move platform comprises the PlayStation Eye camera and Move handheld controller. The controller features an orb that dynamically changes color. This color change is necessary for it to stand out against the background colors of the room so the camera can easily track it. The Move controller also features an accelerometer and rate sensor that detect the controller's rotation. All of these elements combined make for a controller with super-duper accuracy.

However, the real victory is that, although this PlayStation Move technology was originally developed as a method for fun video game interaction, it was also easily repurposed as the core head-tracking technology for Sony's PSVR because it met all the criteria for low latency and positional head tracking.

It's not all about what you hold in your hands, though. There are hand-gesture controllers that pick up every nuance of your fingertips as well.

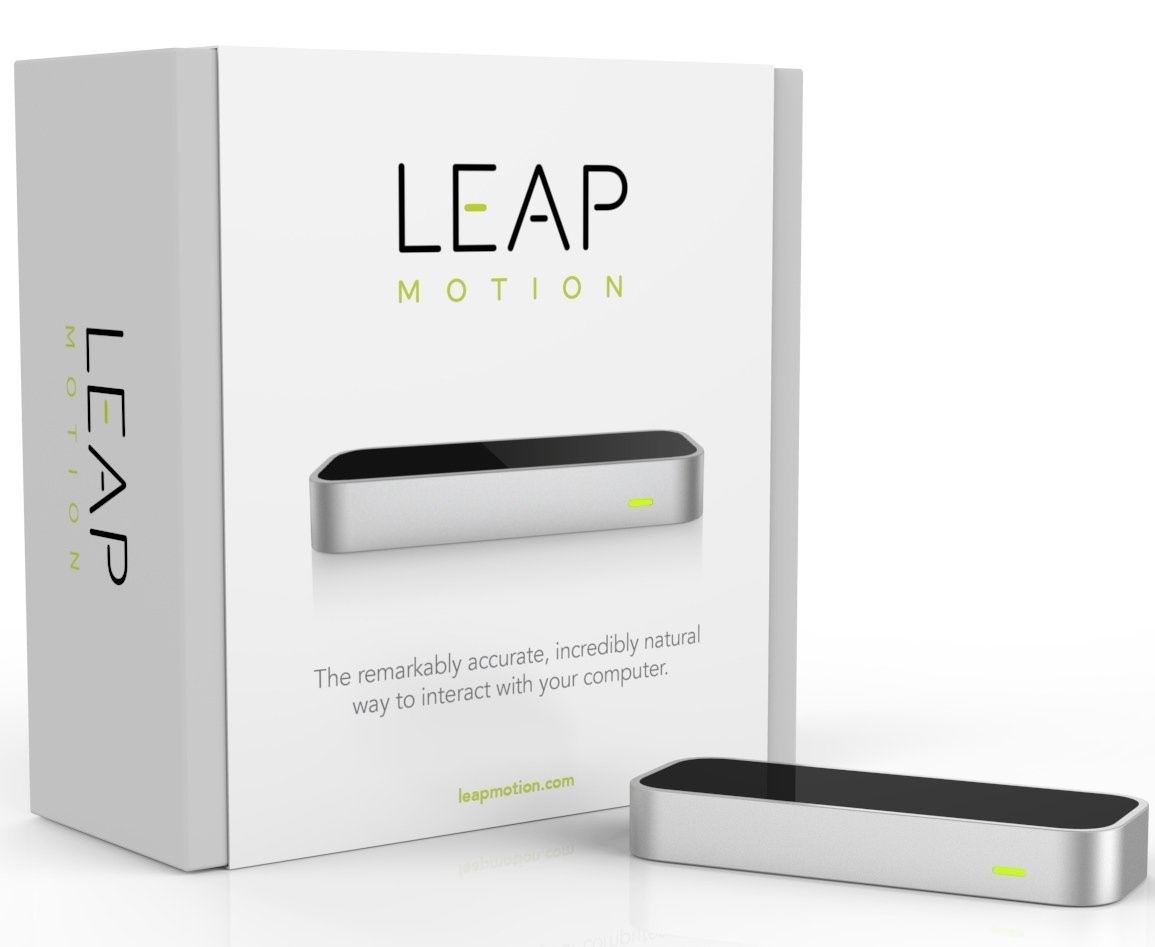

Leap Motion is the size of a small chocolate bar, and features two cameras with three infrared LEDs. The cameras see what is reflected from the infrared light, and using software, they create maps of what is in their field of view. Leap Motion's most recent software even includes techniques to compensate for hand skeletal tracking when one hand is covering the other.

Leap Motion tracks movement within about two feet in front of the device, and Razer has been using it as an official spec addition to its OSVR platform for hand tracking with the company's HMD.

FOVE, a company whose name is derived from the word "fovea" (the part of the eye that allows it to focus), is developing an HMD that features eye tracking, and has done demonstrations where your eye placement controls the interface. The technology can also adjust the appearance of depth of field according to what you are looking at.

As mentioned earlier, a huge challenge for virtual reality is having enough processing power to deliver the required frame rates and super-low latency. While a high field of view is necessary for presence, pristine resolution isn't required across the whole image, because people don't naturally see fine details in their peripheral vision. Eye tracking opens the door for "foveated rendering," whereby the bulk of the graphics power is spent on the fraction of the screen where you are focused, and far less is needed for the periphery, which you can't effectively see anyway. In summary, eye tracking opens the door to an efficient way of rendering just the graphics you need to see.
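A back-of-the-envelope Python sketch shows why foveated rendering is attractive. The function name, the 10 percent fovea share and the half-resolution periphery are illustrative assumptions of ours, not figures from FOVE.

```python
def foveated_pixel_cost(width, height, fovea_fraction, periphery_scale):
    """Pixels shaded per frame when only the fovea is rendered at full detail.

    fovea_fraction: share of the screen area around the gaze point (0..1).
    periphery_scale: linear resolution scale for the rest (0..1); a scale of
    0.5 means half the pixels in each direction, so a quarter of the work.
    """
    if not (0.0 <= fovea_fraction <= 1.0 and 0.0 < periphery_scale <= 1.0):
        raise ValueError("fractions must lie between 0 and 1")
    total = width * height
    fovea = total * fovea_fraction
    periphery = total * (1.0 - fovea_fraction) * periphery_scale ** 2
    return fovea + periphery


full = 1080 * 1200  # one eye at full resolution
foveated = foveated_pixel_cost(1080, 1200, fovea_fraction=0.1, periphery_scale=0.5)
print(f"{foveated / full:.1%} of the full-resolution pixel count")
```

Under these assumed numbers, each eye needs roughly a third of the pixels a full-resolution frame would require.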

As reported by Meant to be Seen at E3 2015, FOVE might make foveated rendering available by the time its HMD developer kits ship. The company also has built a relationship with Valve as a Lighthouse tracking candidate. (Valve is licensing its tracking technology to qualified third parties and devices.)

There is one more option worth mentioning: Sulon Technologies is developing a mixed/augmented-reality head-mounted display. It's actually in line with what Magic Leap and Microsoft's HoloLens are purported to be, and the company's marketing videos are just as impressive. However, it's the end result that everyone is most excited about.

The Sulon Cortex head-mounted display features a pair of cameras that capture the room. The unit's computer or smartphone adds a digital layer based on what you are seeing and how you are interacting with what you see. The result is an augmented-reality experience that mixes the real and digital worlds around you.

Each revision we've seen has definitely been early-stage technology compared to the marketing videos, but companies like Sulon Technologies are headed in the right direction. You can read more on our experiences with Sulon Cortex here.

Locomotion

A huge challenge in achieving presence is having the ability to experience a full range of convincing physical movement. If your body and mind are speaking different languages, the illusion is as good as broken. HTC/Valve claims Vive will work in a space as big as 15 feet by 15 feet (about 4.6 by 4.6 meters), and Oculus' CV1 demos have showcased about a third of that.

The Virtuix Omni and the Cyberith Virtualizer are two options that debuted on Kickstarter. The principles are the same. You stand on a slippery platform, and your feet are tracked by a camera (Cyberith Virtualizer) or sensors implanted in the specially worn overshoes (in the case of the Virtuix Omni). Neither example is natural, but both technologies work once you get the hang of them.

Another option is to have an open space and to make do with it. If you are wearing a head-mounted display in a large enough area, what you see could end up coaxing you to safely walk in circles, and you might not even realize it. For example, the HTC/Valve Vive is designed to support a 15x15-foot space. It's feasible for the device to know where the room's boundaries are, track where you are physically, and come up with narrative roadblocks before you hit those edges.
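The boundary check itself is simple geometry. The Python sketch below is a minimal illustration under our own assumptions: a rectangular room, a tracked position measured from one corner, and hypothetical function names. It does not reflect any shipping system.

```python
def distance_to_boundary(x, y, room_width, room_depth):
    """Shortest distance from the tracked position to any wall.

    Coordinates and room size share one unit; (0, 0) is a room corner.
    """
    if not (0 <= x <= room_width and 0 <= y <= room_depth):
        raise ValueError("position is outside the tracked room")
    return min(x, room_width - x, y, room_depth - y)


def needs_roadblock(x, y, room_width, room_depth, margin):
    """True when the user is close enough to a wall to warrant a warning."""
    return distance_to_boundary(x, y, room_width, room_depth) <= margin


# Standing in the middle of a 15-by-15 room versus half a unit from a wall.
print(needs_roadblock(7.5, 7.5, 15, 15, margin=1.0))
print(needs_roadblock(14.5, 7.5, 15, 15, margin=1.0))
```

A game could use a result like this to place an obstacle or story event in the player's path before the real wall gets in the way.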

In the case of the Sulon Cortex, the room was physically scanned in advance, so the digital wall was calibrated to exist in the same space as the physical wall. As long as there aren't any objects strewn about on the floor, we can indeed have safe VR environments, even though we don't see the physical world around us.

Here are a couple of fancy but important expressions that people say all the time with very little explanation.

Low Persistence

When the Oculus Rift DK1 was brought to market, it became immediately evident that existing mobile displays had a VR-related issue: motion blur. The DK1 is based on a "persistent" display, which means the image is always on. As a result, the imagery gets very muddy as you move your head around because you are seeing data from the previous frame, which no longer represents the orientation of your head. This discrepancy can result in image trails.

The latest OLED panels have higher refresh rates, but that isn't enough to solve the problem. Low-persistence displays work by showing a correctly placed frame, immediately turning off and then showing another frame as soon as it is ready. With the Oculus Rift DK2 and higher, Sony PSVR, HTC Vive and other solutions, motion blur is gone because the previous image is cleared completely.

The trade-off with this technology is that it requires a minimum of 75 frames per second to keep up with the panel's 75Hz refresh rate (or higher, depending on the refresh rate). If content can't achieve that, the technology won't work properly. Fortunately, there is a solution.
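To put numbers on that trade-off, the frame-time budget is simply one second divided by the refresh rate. The helper names in this Python sketch are our own.

```python
def frame_budget_ms(refresh_hz):
    """Time available to render one frame, in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


def keeps_up(render_ms, refresh_hz):
    """True if a frame rendered in render_ms arrives before the next refresh."""
    return render_ms <= frame_budget_ms(refresh_hz)


print(round(frame_budget_ms(75), 2))  # budget per frame at 75Hz
print(keeps_up(16.7, 75))             # a frame paced for 60 FPS
```

At 75Hz the budget is about 13.3ms per frame, so content that takes the 16.7ms typical of a 60 FPS game misses the refresh.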

Asynchronous Timewarp

We know that if a low-persistence display is working at 75Hz, it needs to hit 75 FPS. The problem is, 75 FPS at high resolution is difficult to sustain, given that modern graphics cards often struggle to hit a modest 60 FPS. If you factor in the requirement to have a left and right view for stereoscopic 3D support, it becomes clear how those graphics-intensive exhibition demos heat up showroom floors in the dead of winter.

Asynchronous timewarp (ATW) is a technique for achieving a balance between what your GPU can render and what your display needs to show by inserting intermediary frames in situations where the game or application can't sustain the frame rate. The "warp" is the actual adjustment process. Timewarp on its own warps or adjusts the image before sending it to the display by correcting for head motion that occurred just after the scene was created in the rendering thread. It's a post-processing effect in that it shifts the completed image to account for the user's latest head position.
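In its simplest form, the warp is an image shift proportional to how far the head has turned since the frame was rendered. The Python sketch below is a deliberately reduced model, not Oculus' implementation: it corrects only yaw, assumes pixels are spread evenly across the field of view (ignoring lens distortion), and treats yaw as positive when turning right with image x increasing to the right.

```python
def timewarp_shift_px(render_yaw_deg, latest_yaw_deg, fov_deg, width_px):
    """Horizontal shift, in pixels, that re-centers a finished frame.

    Simplification: pixels are assumed to be spread evenly across the field
    of view, and only yaw (left/right rotation) is corrected.
    """
    delta = latest_yaw_deg - render_yaw_deg
    # Turning the head right makes the scene appear to slide left.
    return -delta * width_px / fov_deg


# The head turned one degree to the right after the frame was rendered.
print(timewarp_shift_px(10.0, 11.0, fov_deg=100.0, width_px=1080))
```

With these example values, the finished frame slides about 11 pixels to the left before it is sent to the display.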

The limitation of timewarp is that it's dependent on consistent frames being fed from the graphics card because the adjustment happens after the render is finished. If the computer is distracted by another task and hesitates, the information shown on the HMD is too far out of date and will cause discomfort.

Asynchronous Timewarp works to solve that problem by continually tracking the latest head positioning in a separate processing thread that isn't dependent on the graphics card rendering cycle. Before every vertical sync, the asynchronous thread contributes its up-to-date information to the latest frame completed by the rendering thread. Doing it this way should have even lower latency and improved accuracy over Timewarp on its own.
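The scheduling idea can be illustrated with a small simulation. This Python sketch is a toy model under our own assumptions (the renderer starts each frame as soon as the previous one finishes, and the warp itself takes no time). It does not represent any vendor's driver.

```python
def simulate_display(render_times_ms, refresh_hz=75.0, vsyncs=6):
    """Report, for each vsync, which rendered frame is shown and whether
    it is a fresh frame or an older one re-warped to the latest head pose.

    render_times_ms: how long each successive frame takes to render.
    """
    interval = 1000.0 / refresh_hz
    # Completion time of each frame; the renderer starts the next frame
    # as soon as the previous one is done.
    done_at, clock = [], 0.0
    for cost in render_times_ms:
        clock += cost
        done_at.append(clock)

    shown, last = [], None
    for tick in range(1, vsyncs + 1):
        now = tick * interval
        ready = [i for i, t in enumerate(done_at) if t <= now]
        newest = ready[-1] if ready else None
        if newest is None:
            shown.append((tick, None, "nothing ready"))
        elif newest != last:
            shown.append((tick, newest, "fresh"))
        else:
            shown.append((tick, newest, "re-warped"))
        last = newest
    return shown


for entry in simulate_display([10, 20, 12], vsyncs=4):
    print(entry)
```

In this run the second frame takes 20ms, so it misses the second vsync. Rather than showing stale imagery, the display gets the first frame again, re-warped to the newest head pose.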

Unfortunately, nothing is perfect. Even though ATW is there to help compensate for an under-performing graphics card, it is prone to "judder," or slight image doubling.

There are additional techniques, and there will likely be more, but these are the main ones we hear about most often.

While we await formal standards via DirectX and OpenGL, AMD and Nvidia have come up with proprietary SDKs and techniques as a stopgap measure for improved interaction via their graphics cards. The goal is to cut down on latency and increase frame rates for VR purposes.

Liquid VR

Liquid VR is a separate piece of code and runs on the Mantle platform, according to Richard Huddy, chief gaming scientist for AMD. Mantle is a proprietary AMD API that provides deeper and faster access to the company's architecture, compared with the standard DirectX 11 route, he claims.

Mantle and Liquid VR provide full access to AMD's asynchronous compute engine (ACE) functionality. A GPU stream processing unit is a slot for processing a single command. In older GPUs, those commands were handled sequentially. While the Radeon HD 7970 has 2048 shaders, even when the GPU is busy, rarely are all of them fully utilized. The ACE architecture makes it possible to interleave different commands at the same time and fill in those gaps. When unused stream processing unit slots are available, the commands are passed through as needed. The GPU could actually be handling compute tasks even while it's crunching away on graphics work, without giving up valuable processing time.

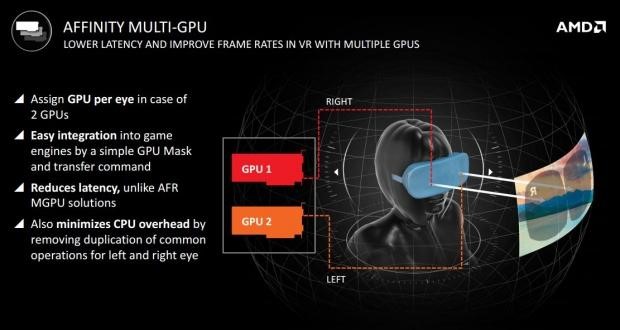

With CrossFire setups (multi-GPU), each graphics card would alternate frames. This doesn't work well when an image is split between displays in a VR HMD. Liquid VR can effectively divide the responsibilities of each card—one GPU for the left eye and one for the right.
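The difference between the two multi-GPU strategies comes down to how work is assigned. The following Python sketch only models that assignment; the function names are hypothetical and it makes no calls into Liquid VR or any real driver.

```python
def alternate_frame_gpu(frame_index, eye, gpu_count=2):
    """Alternate-frame rendering: one GPU draws both eyes of a whole frame."""
    return frame_index % gpu_count


def per_eye_gpu(frame_index, eye, gpu_count=2):
    """Per-eye split: the left eye goes to GPU 0, the right eye to GPU 1."""
    return {"left": 0, "right": 1}[eye] % gpu_count


for frame in range(2):
    for eye in ("left", "right"):
        print(frame, eye,
              "alternate-frame GPU:", alternate_frame_gpu(frame, eye),
              "per-eye GPU:", per_eye_gpu(frame, eye))
```

With alternating frames, a single card still has to draw both views of any given frame. With the per-eye split, both cards work on the same frame at once.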

AMD's API also makes it possible to communicate directly to the HMD without the need for a separate SDK like Oculus VR or Razer's OSVR platform. Even though AMD is showcasing Oculus VR support with Liquid VR, it's nonproprietary (for hardware attached to AMD GPUs), and the company intends to support all qualified solutions.

Even though some displays being demonstrated at GDC were quietly using AMD's 300-series GPUs, AMD believes its faster previous-gen cards are capable of running modern VR content as well, likely because the newer boards are largely re-branded versions of what came before.

Nvidia GameWorks VR

Nvidia's GameWorks VR is less a formal API and more a series of techniques and features that help developers get the most out of their GPUs for VR purposes. For example, earlier, we talked about Asynchronous Timewarp. In the Nvidia GameWorks world, Asynchronous Timewarp is made available at the driver level. Similar to AMD's Liquid VR platform, Nvidia is also introducing SLI VR, which means computers with multiple graphics cards will be better equipped to render the left and right views simultaneously, allowing for the most effective VR experience.

But it's the company's multi-resolution shading technology that's most interesting. Modern HMDs like the Oculus Rift and HTC Vive require an elliptical dual view sent to their display. While the images look strange to the naked eye on a computer monitor, they are properly shaped when experienced through the HMD lenses. The centers of the left and right images are dense, and the pixels grow sparser and more spread out as the image warps outward.

Until now, this pixel distortion was applied after the fact. Graphics cards churned out full-resolution imagery with all of their available processing power, and the warping was handled during a post-processing stage. This is wasteful because the display panels can do nearly as well with significantly fewer pixels to work with.

Nvidia's solution is to divide the image into a 3x3 grid of viewports. For our purposes, think of viewports as segments of screen space. While the center viewport remains at full or nearly full resolution, the surrounding viewports are rendered in a manner similar to the traditional warp that used to be handled in post-processing. When all is said and done, the user's perceived image quality is about the same with 25 percent to 50 percent fewer pixels being rendered, which increases the pixel draw rate by 1.3 to 2 times that of normal. Using Oculus CV1 as an example, multi-resolution shading for its 2160x1200 display would need 30 percent to 50 percent less horsepower to do effectively.
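The pixel savings from a 3x3 split can be estimated with simple arithmetic. The Python sketch below is our own simplified model, not Nvidia's algorithm: it applies one resolution scale to all eight outer viewports, and the 60 percent center size and 0.7 scale are illustrative assumptions.

```python
def multires_pixel_count(width, height, center_fraction, outer_scale):
    """Pixels shaded when a frame is split into a 3x3 grid of viewports.

    center_fraction: share of the width and of the height covered by the
    center viewport (0..1), which stays at full resolution.
    outer_scale: linear resolution scale applied to the eight outer
    viewports (0..1). Real implementations may scale edge and corner
    viewports differently; a single scale keeps the arithmetic simple.
    """
    center_area = center_fraction ** 2
    outer_area = 1.0 - center_area
    return width * height * (center_area + outer_area * outer_scale ** 2)


def pixel_savings(width, height, center_fraction, outer_scale):
    """Fraction of pixels saved compared with full-resolution rendering."""
    shaded = multires_pixel_count(width, height, center_fraction, outer_scale)
    return 1.0 - shaded / (width * height)


print(f"{pixel_savings(2160, 1200, 0.6, 0.7):.1%} fewer pixels")
```

Those assumed settings save roughly a third of the pixels, which falls inside the 25 percent to 50 percent range quoted above.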

Nvidia is also promoting a concept called high-priority context. When rendering through traditional DirectX, developers have a fixed process for getting from point A to point B. Nvidia is widening the door to the render path so that as unpredictable changes arise from things like head tracking, the developer will be able to correct them much more quickly.

While both AMD and Nvidia have strong offerings, what's key is that neither represents a formal standard. That means game and content makers will have to support two proprietary development paths to reap the maximum performance benefits from each architecture.

Conclusion

Having been involved with this industry practically since its modern inception, I can say that it's really amazing. Before a single consumer product has been released, we have already seen dedicated innovations in the core of how we get graphics to our VR displays, and we are seeing head-mounted displays that feature a wide range of specifications and core technologies. Even brand-new experimental ideas are surfacing—including one that was flown (sadly, unsuccessfully) into space.

Yet, we have to remind ourselves that this race doesn't have a finish line. Unlike the traditional display industry, which has a limited learning curve, every addition can dramatically change our understanding of virtual reality and other forms of immersive technology. According to Simon Benson, director of the Immersive Technology Group for Sony Computer Entertainment Worldwide Studios, a mere five-degree increase in FOV changes everything. In this case, he cited an example in which race car drivers were having more car crashes because their updated car's design allowed a wider view of the road and inadvertently broke their focus.

Having personally seen Oculus CV1, HTC/Valve's Vive, FOVE, Starbreeze's StarVR, ImmersiON-VRelia's GO platform and countless other products, I can say this promises to be a diverse industry, and the best is yet to come. As long as the platforms don't limit themselves to a single brand and the APIs remain agnostic, there will only be winners in this space for both consumers and vendors.

[Update: This article was updated on 10/12/2015 to fix an error in the resolution we provided for Oculus CV1, and other more minor changes, such as referencing the Sony PSVR (rather than Morpheus), a title modification for a quoted expert, and some small clarifications on how the HTC/Valve Vive Lighthouse technology works.]

-

hdmark Good read! Covered pretty much everything ive glanced through in the last few months. Looking forward to an update to this in a few years !! -

tylanner After the significant technological progress recently there has been a shift in focus towards improving ergonomics and wearer comfort.

Improving on the heavy and obtrusive 1.0's will go along way for overall immersion and natural mobility. -

utgardaloki What do you mean the resolution is 2160x1200 per eye on the Rift and HTC Vive? As far as I've heard both companies state it's supposed to be 2160x1200 combined for both screens so 1080x1200 per eye.

So 2160x1200 total. Not 4320x1200 total.

Can anyone confirm? -

FlukeRogi @utgardaloki - you're correct, the article is wrong.

Overall resolution is 2160x1200 as you said, giving 1080(horizontal) x 1200(vertical) per eye. -

Enterfrize Hi there!

I'm the actual author. The resolution as written for the Oculus CV1 is indeed incorrectly written in the article. It's 2160X1200 spread over two displays (not multiplied by two). The article was written months ago before the CV1 details were actually confirmed, which is why other details are a bit out of date as well (e.g. Project Morpheus instead of PSVR). We'll get this cleaned up - I just noticed that it was published today. I completely forgot this article was in the can for much later; I should have been more diligent and followed up on the finer details before this had the opportunity to see the light of day. Sorry for the inconvenience.

Regards,

Neil -

Enterfrize Just got word that the fixes will be implemented shortly. Don't worry; even at 2160X1200 pixels, VR is loads of fun! :-)

Regards,

Neil -

FritzEiv Sorry folks. I've updated the Oculus CV1 specs and am making a few other modifications, none of them of that level of significance, thankfully.

-- Fritz Nelson, Editor-in-chief -

beetlejuicegr Asynchronous Timewarp Don't you love it when new words are used for old stuff? This "tech" is used on online games to compensate for the high latency of some players.

Also awesome example was the "zero ping" mod in unreal tournament which was kinda doing something similar for your bullets and aiming :P

Seriously though, this article is super awesome and i will bookmark it for some time, because i intend to buy one VR in a year or so too.