Shocking: Did the W3C Sell Out to Microsoft?

It's the official HTML5 test, and it praises IE9's HTML5 features. The W3C has spoken: IE9 is the best HTML5 browser. But my question is: how credible can a test be if you discredit it yourself and quietly change its results?

I know, how dare I question the authority of the W3C, and how dare I bash IE9 again. I am such an angry guy. But wait: tame your fingers and don't scroll down to the comments section yet. In fact, I like IE9 and Microsoft's progress, which has arguably brought us the best IE since IE4. IE9 is a very competitive browser with many strengths and some weaknesses, just like all other browsers.

What I don't like is that there appears to be a need to push IE9 harder than it needs to be pushed, and its credibility may suffer as a result. Microsoft's IE marketing campaign has ranged from market share blog posts that go to extreme lengths to select convenient numbers while leaving out those that would paint a realistic picture, to product demonstrations that put rival browsers at a disadvantage by using outdated competing browser versions and benchmarks tailored to IE's strengths.

In aggregate, these overly positive statements have made me doubt IE9's capabilities, and I now question new IE9 benchmark results right off the bat. That doubt prompted me to create a thorough benchmark of my own, which provides an environment to confirm claims or call out false ones. The experience of previous months shows that the performance difference between browsers today is usually minimal, almost negligible. The 2x or 3x advantages are history. Sometimes we can observe 20 to 30% advantages in some benchmarks, but that is the exception. Especially in the popular Sunspider benchmark, the big browsers are within a close range that is, in many cases, small enough to be considered a margin of error.

HTML5 is a different category, however. IE, Firefox, Chrome, Safari and Opera are still evolving and adding HTML5 support. Common tests such as HTML5test.com and HTML5demos.com, or listings such as WhenCanIuse.com, suggest that Chrome and Safari offer the best HTML5 support these days, followed by Firefox and Opera. IE9 trails the pack in those evaluations, even though we know that the browser does very well in some HTML5 tests and is, hands down, the best browser as far as canvas composition is concerned.

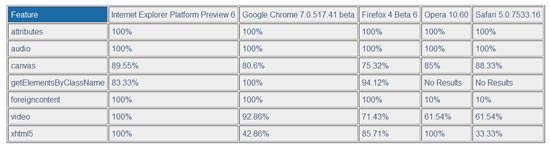

A few days ago, the W3C posted its own HTML5 support findings. As a standards authority, the W3C carries weight, and its results are somewhat official. Interestingly, the test shows IE9 as the leader with 96% HTML5 compliance, followed by Chrome with 88%, Firefox with 80%, Opera with 65% and Safari with 56%. Let those numbers sink in for a moment. If you are even slightly interested in browsers, you may have some questions. My first thought was: how can Chrome and Safari differ so much, especially since both are built on the same WebKit engine? There may be differences here and there, but is a gap of more than 30 points realistic?

I have been testing software for nearly 16 years and browsers for nearly 6 years. I tend to keep a close eye on detail, a habit shaped especially by the staff and readers of Tom's Hardware, both of whom put incredible effort into comparing products. Such comparisons start with the selection of products and the creation of fair test environments and benchmarks that reflect an entire application field, not just part of it. Occasionally, your choices can be unfortunate, but you can and will correct them, because eagle-eyed readers will surely find errors or point out unfair comparisons. Readers can have a very subtle way of pointing out flaws. I personally always loved (and still love) the fanboy accusation and, depending on how aggravated someone is, the claim that Intel/AMD/Nvidia/Sony/Nintendo/Apple sponsored and bought the test result, which is occasionally followed by some sort of verbal threat. The reality is that advertising is necessary for websites to survive, but no website I know of can afford to accept payments in exchange for a better or worse test result.

So this may be why I took a closer look at how exactly the W3C came up with those numbers. First, I noticed that it is a fairly comprehensive and time-consuming test: there are 212 tests that you need to run and record manually. Clearly, a substantial effort went into creating those results. So how far apart in time were the browser versions being compared?


The gap was significant, so significant that the W3C tested two stable browser versions against two beta versions and against one developer version that was not even available at the time of testing. Huh?

Yup.

So, my question would be: how can you compare the (old) stable versions of Opera (10.60) and Safari (5.0.7) against the beta versions of Chrome (7.0) and Firefox (4.0 Beta 6), and against the developer version of IE9 (PP6)? I am scratching my head over this one, as developer versions have been available for all five browsers. If one version in this field should have been omitted, it is IE9 PP6, a rough build that is unusable for everyday browsing: it does not even have a URL bar. If it lacks such basic features, how could the W3C assume that this is the best browser version Microsoft has? Other features may be missing as well.

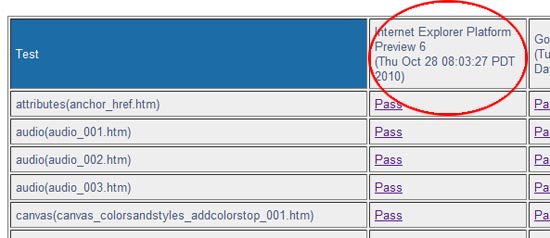

It gets better: according to our records, the IE9 version the W3C tested was not even available at the time of the test run, and Microsoft had not yet publicly stated that PP6 offers more HTML5 support than IE9 Beta. The W3C ran its test of IE9 PP6 exactly 79 minutes before Dean Hachamovitch announced the software, along with its additional HTML5 support, during the PDC 2010 keynote. Even stranger, just hours after the HTML5 test results were posted, Microsoft's IE team blogged about IE9's XHTML features, exactly the category in which IE9 trashes its rivals. You have to admit, something fishy is going on here. That can't all be coincidence.

Hey, it does not have to be a conspiracy, right? So I wondered: who gave the W3C early access to PP6? Who ran the test, and who chose which browsers would be tested and compared? I contacted the W3C's press contact, Ian Jacobs, as well as Microsoft's PR team with a request for clarification. Microsoft's PR agency replied with a very brief note saying that they decline to comment beyond a blog post, whose URL I have yet to receive. Jacobs simply ignored me.

Alright. Maybe he was busy?

He was not.

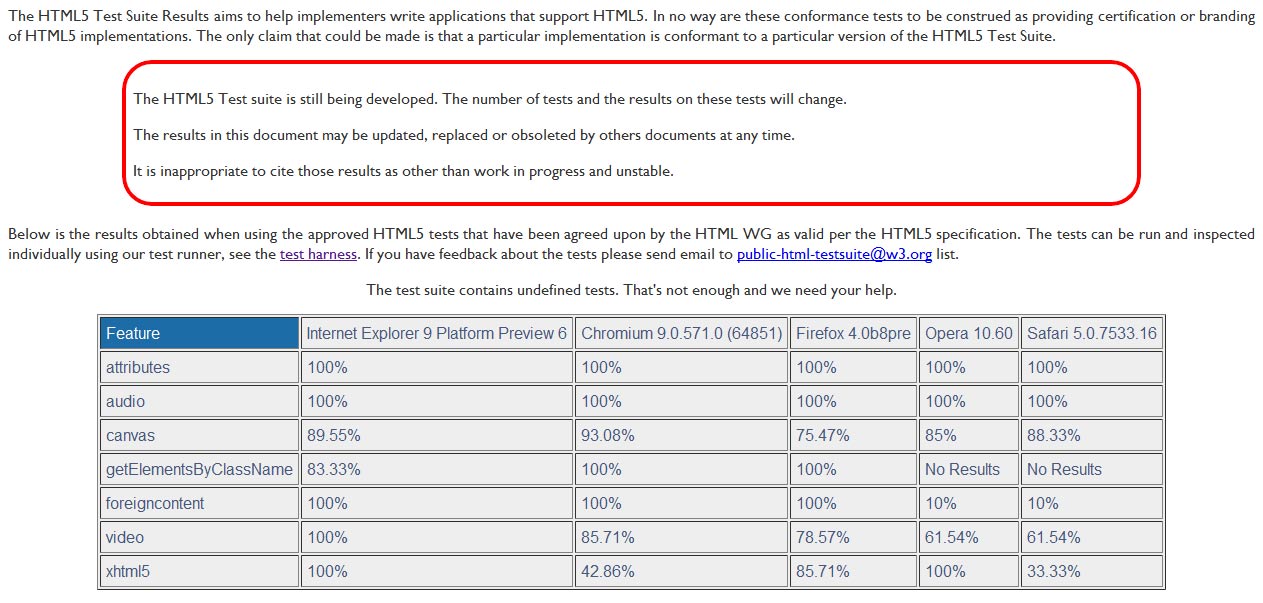

This morning, as I was finishing this article, I noticed that the W3C had changed the test results.

Firefox 4 Beta 6 was replaced by Firefox 4 b8-pre, and Chrome 7 Beta was replaced by a Chrome 9 nightly build. Chrome's score climbs from 88% to 89%, and Firefox's jumps from 80% to 91%, which shows how much the numbers change depending on which versions are tested. The test result, however, is still irrelevant, as there is no number for Opera 11.0 and no number for the latest WebKit build. Also, in my opinion IE9 PP6 is not a complete browser and can hardly be part of this comparison. If you can't use the browser for everyday browsing, what does it matter that some future engine has the best HTML5 support?

Hachamovitch mentioned during the PDC 2010 keynote that PP6 is a bit faster than IE9 Beta and that it has received extra HTML5 support, which amounts to an advantage of about 10% for IE9 PP6 over the Beta, according to HTML5test.com. I was also able to confirm the speed increase: in Sunspider, PP6 is about 8% faster than IE9 Beta, while there is no change in any other category, including offline web page loading performance, Flash and hardware acceleration.

It is reasonable to assume, then, that IE9 Beta may not have the best HTML5 support at this time. So why did the W3C test PP6, but not IE9 Beta? Common sense suggests that an unfortunate chain of events led to such a questionable scenario. Human error, if you will. A bad choice.

But I would like to know what exactly happened and how far Microsoft's influence in the W3C reaches, especially since Steve Ballmer and Hachamovitch have said that they want to contribute more resources to the W3C. Maybe I am being a bit negative here, but there are too many red flags to ignore.

I won't claim that the W3C changed its test page because of my inquiry, but the timing is rather strange nevertheless. As a journalist, you know you have poked into a hornet's nest when you get brief answers or no answers at all while you see changes happening.

I assume that it was also Jacobs who decided that the test results should be accompanied by a note stating, basically, that the W3C HTML5 test results cannot be quoted without mentioning that they are a work in progress. Seriously? What value do they have, other than pitching IE9 PP6 as the best HTML5 browser? If they are a work in progress, shouldn't they be removed? It is reasonable to assume that IE9 Beta, which has less HTML5 support than IE9 PP6, would not look as good in this comparison. Given the chain of events, it is hard to shake the feeling that there is a good reason why IE9 Beta is not listed.

This test was way out of line, went beyond my comfort level and may hurt the credibility of the W3C. If we see these inconsistencies in the test field and sudden, unexplained changes, how can we know that the test is fair? How can we trust the independence of the W3C? Should we simply ignore the results, or should we be concerned? Did the W3C sell out to Microsoft, at least in this instance?

Have I just turned into a Tom's Hardware reader, unfairly criticizing the effort behind the test? Perhaps. But criticism should keep the W3C on its toes, just as it keeps hardware and software review editors on theirs.

For now, those HTML5 test results have little credibility, if any, especially since the W3C itself says that the test suite may change, that the results may change and that you can't cite the results as fact.

Wolfgang Gruener is an experienced professional in digital strategy and content, specializing in web strategy, content architecture, user experience, and applying AI in content operations within the insurtech industry. His previous roles include Director, Digital Strategy and Content Experience at American Eagle, Managing Editor at TG Daily, and contributing to publications like Tom's Guide and Tom's Hardware.


awood28211: Shenanigans! To the test and your article! So much hoopla over what? Browsers that aren't production releases... Pointing out the obvious... No test is valid until it's tested with what everyone will get... not just "beta" or "developer" versions.

Isn't there an Apple product you could be covering?

Silmarunya: Falsified test results are one of the most vile things in the world of science and computing. Kill the W3C!

vant: Anyone with half a brain would realize testing unreleased products vs released products (when their beta release is available) is unfair.

IzzyCraft: Standards lol takes 10 years for something to even reach the level of close to becoming a standard.

It would take someone with a lot of time on their hands just to sort through their test suite, which could have been somewhat automated imo. I just think there are a bunch of lazy people at W3C.

de_com: Guys, you're missing the point. The W3C is one of the most respected benchmarks there is. If they can't be seen to be objective and above reproach, then what's the point? True, they made a dog's dinner of this one and even changed results when queried on their validity without giving any explanations at all. Bad form.

We need more whistle-blowing of this type to keep the testers and such on their toes; they need to know they can't and won't get away with passing off biased results, even if they're useless (Beta and Developer Versions) after all.

ikefu: Inconsistent data sources used to state a finding as fact, then shortly followed by a small-print retraction posted after the fact...

Yep, this test is about as unbiased as Fox News and CNN are completely fair and balanced.

rallende: It's about time a Tom's Hardware reporter actually did their own investigative journalism rather than cite another site's data. You sir, have my support. But can you really test stable browsers against beta browsers and still have accurate numbers? Shouldn't the test have been done amongst all beta or alpha browsers?