Intel's 100 Series Platforms Feature Less Connectivity Than You Might Expect

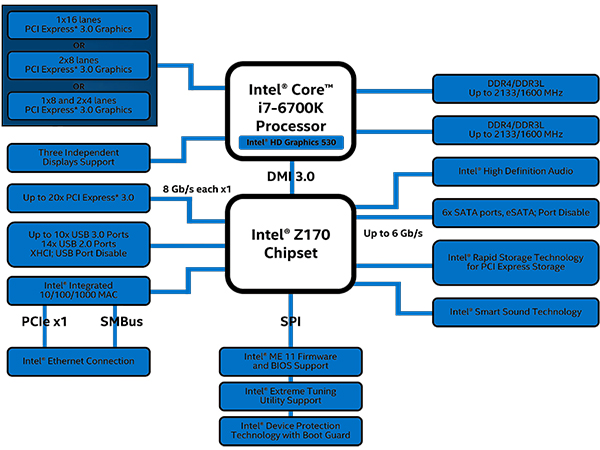

With its latest 100 Series chipsets, Intel promised an impressive increase in the connectivity of its mainstream platform. USB 3.0 support improved, and the aging PCI-E 2.0 lanes coming from the chipset were replaced by a large number of PCI-E 3.0 lanes. These improvements sounded great when Intel first showed us the series, but we have since found out that the chipset can't offer everything we expected.

Following The Trail Of Breadcrumbs

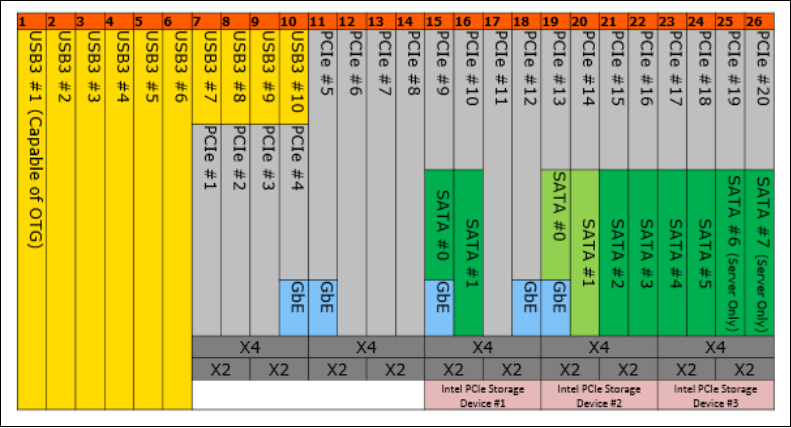

On the most feature-rich 100 Series chipset, the Z170, Intel specified that the platform would provide 10 USB 3.0 ports, six SATA 3.0 connections and as many as 20 PCI-E 3.0 lanes. Intel also listed a new feature: HSIO (High-Speed I/O) lanes. We already knew that the PCI-E 3.0 lanes and the HSIO lanes were related, with the PCI-E 3.0 lanes essentially being leftover HSIO lanes. What we didn't know was that the SATA, USB 3.0 and gigabit Ethernet connections also run through these HSIO lanes.

Every SATA port, USB 3.0 port, PCI-E lane and gigabit LAN connection consumes an entire HSIO lane. When all of the SATA and USB 3.0 ports and the LAN connection are in use, only nine HSIO lanes remain, which means that at most nine PCI-E lanes can be configured. But if the chipset can't support the full number of PCI-E lanes alongside everything else, why did Intel say it can?
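The lane accounting above is plain subtraction. Here is a minimal Python sketch, assuming, as the article does, that each USB 3.0 port, SATA port and gigabit LAN connection claims exactly one HSIO lane. `remaining_pcie_lanes` is a hypothetical helper for illustration, not an Intel tool:

```python
def remaining_pcie_lanes(hsio_lanes: int, usb3_ports: int, sata_ports: int, gbe_ports: int = 1) -> int:
    """HSIO lanes left over for PCI-E after USB 3.0, SATA and GbE each take one lane."""
    used = usb3_ports + sata_ports + gbe_ports
    if used > hsio_lanes:
        raise ValueError(f"configuration needs {used} HSIO lanes, only {hsio_lanes} exist")
    return hsio_lanes - used


# Z170 with every USB 3.0 port, every SATA port and the GbE link in use
z170_left = remaining_pcie_lanes(hsio_lanes=26, usb3_ports=10, sata_ports=6)
print(f"Z170: {z170_left} PCI-E lanes left, versus 20 advertised")
```

Dropping any USB 3.0 or SATA port from the call frees exactly one more lane for PCI-E. That one-for-one trade is the flexibility Intel gives OEMs.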

Explaining The Magic

It turns out that the connectivity specs Intel reported for its 100 Series chipsets are just the maximum supported connections. For example, Z170 supports as many as 10 USB 3.0 ports, but only six HSIO lanes are permanently configured as USB 3.0 connections. If an OEM wants more, it can configure up to four additional USB 3.0 ports, but these will consume the four HSIO lanes that would otherwise be allocated to PCI-E lanes one through four.
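The USB 3.0 trade-off can be modeled in a few lines. This sketch follows the article's description of Z170: six HSIO lanes are fixed as USB 3.0, and four flexible lanes otherwise serve as PCI-E lanes 1 through 4. It also assumes, purely for illustration, that extra USB 3.0 ports take those PCI-E lanes in ascending order. The constant and function names are hypothetical:

```python
FIXED_USB3_LANES = 6            # HSIO lanes permanently wired as USB 3.0 (per the article)
FLEX_PCIE_LANES = (1, 2, 3, 4)  # PCI-E lanes that can be traded for extra USB 3.0 ports


def pcie_lanes_lost_to_usb(usb3_ports: int) -> tuple:
    """Return the PCI-E lane numbers given up to offer `usb3_ports` USB 3.0 ports.

    Assumption (illustrative only): extra ports claim the flexible lanes in ascending order.
    """
    max_ports = FIXED_USB3_LANES + len(FLEX_PCIE_LANES)
    if not 0 <= usb3_ports <= max_ports:
        raise ValueError(f"Z170 supports at most {max_ports} USB 3.0 ports")
    extra_ports = max(0, usb3_ports - FIXED_USB3_LANES)  # ports beyond the fixed six
    return FLEX_PCIE_LANES[:extra_ports]


for ports in (6, 8, 10):
    print(ports, "USB 3.0 ports -> PCI-E lanes lost:", pcie_lanes_lost_to_usb(ports))
```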

Intel 100 Series Consumer Chipsets

| Chipset | Z170 | H170 | H110 |
|---|---|---|---|
| CPU PCI-E 3.0 Config Support | 1 x16, 2 x8, or 1 x8 + 2 x4 | 1 x16 | 1 x16 |
| I/O Port Flexibility | Yes | Yes | No |
| Maximum HSIO Lanes | 26 | 22 | 14 |
| Max Chipset PCI-E Support | 20 PCI-E 3.0 Lanes | 16 PCI-E 3.0 Lanes | 6 PCI-E 2.0 Lanes |
| Max PCI-E Lanes When All SATA/USB 3.0/GbE Used | 9 | 7 | 5 |
| USB Ports, Total (USB 3.0) | 14 (10) | 14 (8) | 10 (4) |
| SATA 3.0 Ports | 6 | 6 | 4 |

Similarly, none of the SATA ports is configured by default. Each can be mapped onto one of several HSIO lanes, but this too reduces the number of PCI-E lanes available. Things get worse with PCI-E storage devices, which consume four PCI-E lanes and can be configured in only one of three ways: a PCI-E storage device can be connected to HSIO lanes 15-18, 19-22 or 23-26, and to no other group of four HSIO lanes.
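The storage restriction amounts to a fixed list of allowed lane groups. Below is a minimal sketch that uses only the three groups named above. The helper names are hypothetical. The set of lanes already in use is left to the caller, because the article does not list which HSIO lanes the SATA ports occupy:

```python
# HSIO lane groups that may host a x4 PCI-E storage device (per the article)
STORAGE_GROUPS = ((15, 18), (19, 22), (23, 26))


def can_start_storage_at(first_lane: int) -> bool:
    """True only if a x4 storage device may begin at this HSIO lane."""
    return any(start == first_lane for start, _end in STORAGE_GROUPS)


def free_storage_groups(lanes_in_use: set) -> list:
    """Storage groups whose four HSIO lanes are all still unclaimed."""
    return [
        (start, end)
        for start, end in STORAGE_GROUPS
        if lanes_in_use.isdisjoint(range(start, end + 1))
    ]


print(can_start_storage_at(15))   # an allowed group
print(can_start_storage_at(16))   # four consecutive lanes, but not an allowed group
print(free_storage_groups({20}))  # hypothetical: lane 20 already claimed by another device
```

Checking the start lane against a fixed list, rather than searching for any four free consecutive lanes, mirrors the rigidity the article describes.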

The first PCI-E storage device can be configured without much trouble, but enabling a second one will also knock out at least two SATA ports.

On the PCI-E side of things, although PCI-E 3.0 provides significantly more bandwidth than the older PCI-E 2.0 standard, Intel limits these lanes to x1, x2 or x4 configurations. This is likely to compensate for the limited DMI 3.0 connection between the CPU and the PCH, which offers only roughly 4 GB/s of bandwidth in each direction. That is the same bandwidth as four PCI-E 3.0 lanes, so the PCH can't feed any device faster than a PCI-E 3.0 x4 connection.
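The "roughly 4 GB/s" figure follows directly from the PCI-E 3.0 signaling rate. Here is a back-of-the-envelope sketch that treats DMI 3.0 as equivalent to a PCI-E 3.0 x4 link, as the paragraph above does. It ignores packet and protocol overhead, so real throughput is somewhat lower:

```python
def pcie3_gb_per_s(lanes: int) -> float:
    """Approximate PCI-E 3.0 bandwidth in one direction, in GB/s.

    Counts only the 128b/130b line encoding; packet/protocol overhead is ignored.
    """
    gigatransfers = 8.0   # PCI-E 3.0 runs at 8 GT/s per lane
    encoding = 128 / 130  # 128b/130b: 128 payload bits per 130 bits on the wire
    return gigatransfers * encoding / 8 * lanes  # divide by 8 to convert bits to bytes


dmi3 = pcie3_gb_per_s(4)  # DMI 3.0 treated as a PCI-E 3.0 x4 link
print(f"DMI 3.0 (x4): {dmi3:.2f} GB/s per direction")
print(f"An x8 device would want {pcie3_gb_per_s(8):.2f} GB/s, more than DMI can carry")
```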


Intel 100 Series Business Chipsets

| Chipset | Q170 | Q150 | B150 |
|---|---|---|---|
| Maximum HSIO Lanes | 26 | 20 | 18 |
| USB Ports, Total (USB 3.0) | 14 (10) | 14 (8) | 12 (6) |
| SATA 3.0 Ports | 6 | 6 | 6 |
| Chipset PCI-E Lanes | 20 PCI-E 3.0 | 10 PCI-E 3.0 | 8 PCI-E 3.0 |
| Max PCI-E Lanes When All SATA/USB 3.0/GbE Used | 9 | 5 | 5 |
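The "max PCI-E when all used" rows in both tables can be reproduced from the other rows, which makes a useful sanity check on the figures. Here is a short sketch with the numbers copied from the two tables. It assumes one HSIO lane per USB 3.0 port, one per SATA port and one for a single GbE connection:

```python
# (HSIO lanes, USB 3.0 ports, SATA ports, listed max PCI-E when all used), from the tables
CHIPSETS = {
    "Z170": (26, 10, 6, 9),
    "H170": (22, 8, 6, 7),
    "H110": (14, 4, 4, 5),
    "Q170": (26, 10, 6, 9),
    "Q150": (20, 8, 6, 5),
    "B150": (18, 6, 6, 5),
}
GBE_LANES = 1  # assumption: the gigabit LAN connection takes one HSIO lane


def leftover(hsio: int, usb3: int, sata: int) -> int:
    """HSIO lanes left for PCI-E once every USB 3.0, SATA and GbE connection is used."""
    return hsio - usb3 - sata - GBE_LANES


for name, (hsio, usb3, sata, listed) in CHIPSETS.items():
    computed = leftover(hsio, usb3, sata)
    status = "matches" if computed == listed else "differs from"
    print(f"{name}: {computed} lanes left ({status} table value {listed})")
```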

We asked Intel whether this limited bandwidth would hurt performance on the platform, and an Intel representative told us that it is unlikely that enough devices connected to the PCH would be in use at the same time to saturate the connection.
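Intel's answer hinges on how many devices are busy at once. The rough sketch below compares theoretical peak link rates of PCH-attached devices against a DMI 3.0 link, which it treats as PCI-E 3.0 x4. The peak figures are interface maxima after line encoding (SATA 3.0 at 6 Gb/s and USB 3.0 at 5 Gb/s, both with 8b/10b encoding; GbE at 1 Gb/s), not measured throughput. The device mix is a hypothetical example:

```python
# DMI 3.0 treated as PCI-E 3.0 x4: 8 GT/s, 128b/130b encoding, 4 lanes -> ~3.94 GB/s per direction
DMI3_GB_S = 8.0 * (128 / 130) / 8 * 4

# Theoretical per-direction interface maxima in GB/s (not real-world throughput)
PEAK_GB_S = {
    "sata3": 6.0 * (8 / 10) / 8,  # ~0.6
    "usb3": 5.0 * (8 / 10) / 8,   # ~0.5
    "gbe": 1.0 / 8,               # 0.125
    "pcie3_x4_ssd": DMI3_GB_S,    # a x4 SSD alone can match the whole DMI link
}


def dmi_load(active: dict) -> float:
    """Fraction of DMI 3.0 bandwidth the active devices could demand if all ran flat out."""
    demand = sum(PEAK_GB_S[kind] * count for kind, count in active.items())
    return demand / DMI3_GB_S


# Hypothetical mix: one PCI-E x4 SSD, two SATA SSDs and the GbE port, all busy at once
load = dmi_load({"pcie3_x4_ssd": 1, "sata3": 2, "gbe": 1})
print(f"peak demand = {load:.0%} of DMI 3.0")
```

Anything above 100% means the link would be the bottleneck if those devices really did peak together. Intel is betting that on desktop workloads they rarely do.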

Are We In The Oven Or Eating The House Made Of Candy?

To be fair, Intel created the HSIO system in an attempt to give OEMs broader control when configuring their motherboards, and the company has succeeded in that goal. However, the problem with this setup is that it gives users the impression that the chipset is capable of much more than it actually is, and that may disappoint some customers.

OEMs could partially work around these limits by adding third-party controllers for more SATA or USB 3.0 ports, but that raises the overall cost of the motherboard, and the extra ports would still be held back by the limited bandwidth. In the end, users are getting less from these chipsets than we originally expected, but with plenty of USB and SATA ports available, it is hard to say how big a problem this will be. Are we getting less than we paid for, or are we still in for a sweet experience?

Update, 9/30/15, 4:30pm PT: Clarified the way in which PCI-E storage devices are configured.

Follow Michael Justin Allen Sexton @EmperorSunLao.


electric_mouse: No big surprise here... SemiAccurate covered this back before the Broadwell launch.

Part of the point of Skylake and Broadwell is to smash AMD and NVIDIA by reducing the number of PCIe lanes available to make it much less compelling to use a discrete graphics card rather than just settling for integrated graphics. Intel wants you to just play Candy Crush.

InvalidError: Most people use only a fraction of the total connectivity available on any given CPU and chipset configuration, so most people won't notice the difference.

Since the SERDES are practically the same between PCIe, USB3 and SATA, expect Intel (and AMD) to use even more HSIO/multi-purpose serial ports in the future instead of dedicated standard-specific lanes to let board manufacturers decide what mix of I/O to offer. Ultimately, we may end up with chipsets that have 30 universal lanes shared any which way between the chipset's PCIe, SATA, USB3(.1), Ethernet and other media access controllers.

dgingeri: I noticed this when they first came out.

The worst thing is the arrangement of the slots. If all the SATA ports are used, there isn't a way to get more than one M.2 PCIe x4 slot, using PCIe ports 9-12. To get a second, the motherboard manufacturer would have to disable two SATA ports to use PCIe lanes 17-20. In addition, the chipset lanes cannot be used to make a x8 slot at all. This setup severely limits the possibilities on how the I/O can be used. The desktop chips, and the Xeon E3 series with them, are severely limited as to what hardware can be used with them.

jimmysmitty (replying to dgingeri):

You're not considering that M.2 is a relatively new interface, and before this only one M.2 slot was available. Two is pretty overkill, since one is capable of up to 32Gbps connections, so what would be the downside to having two fewer SATA ports?

This is the consumer end as well. Most consumers are not going to max out the SATA ports and M.2.

The extra lanes were never able to be used to make an x8 slot, and the only things that benefit from that are a GPU or a server-grade PCIe SSD, but who is going to pay through the nose for that and then buy a lower-end setup?

If you want more than two GPUs, you can get the EVGA Z170 board with the PLX chip, but again, that starts to move into the realm of X99, which is made more for high-end enthusiasts who want more connections anyway.

CyranD (replying to electric_mouse):

That makes no sense at all. Discrete video cards use the PCI-E lanes from the CPU, not the chipset. What this article is about does not affect AMD/NVIDIA video cards at all.

Skylake CPUs have 20 PCI-E 3.0 lanes, exactly the same as Haswell/Broadwell. It's the chipset PCI-E lanes that have changed.

SteelCity1981: If Intel would just use QPI for their mainstream CPUs, this 4GB memory controller bandwidth limit would be a non-issue, but they won't; they keep QPI reserved for Intel's Extreme Editions and make you pay a premium for that luxury...

CyranD (replying to SteelCity1981):

I am pretty sure the Extreme Edition still uses DMI to connect to the chipset. QPI is used on multi-CPU motherboards to connect CPU to CPU.

It used to be used on the Extreme Edition (X58) to connect the memory controller, but that's no longer needed since it's integrated into the CPU.

IInuyasha74: Yeah, the bandwidth between the CPU and chipset does concern me. They got by with just 2 GB/s of bi-directional bandwidth on DMI 2.0, so the new DMI 3.0 may adequately keep the chipset fed. That is one area in which AMD greatly surpasses Intel, as they use HyperTransport on all of their motherboards.

SteelCity1981 (replying to CyranD):

All E/EP editions starting with Nehalem use QPI, and the X58 didn't have a memory controller on its chipset. If you recall, the memory controller was integrated into the Nehalem architecture.

CyranD (replying to SteelCity1981):

You're right about the memory controller and that it has QPI. I am right that QPI is not used to communicate with the X99 chipset. Look at any block diagram for X99, similar to the first picture in this article. It shows the CPU connected to X99 through DMI 2.0. All the other lines coming from the CPU are QPI, but not that one.