Three Factory-Overclocked, High-End Graphics Cards

Why buy a standard model when you can get the top-of-the-line? Treat yourself to the good stuff! We take three premium graphics cards for a spin to see just what kind of optional extras you can get when purchasing a factory-tweaked non-reference board.

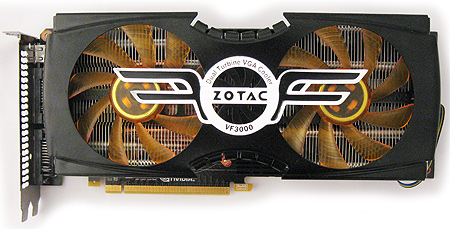

Zotac GeForce GTX 480 AMP! Edition

We've been impressed by Zotac's factory-overclocked AMP! edition cards in the past. This time around, the company is working with Zalman to provide a card with a more effective aftermarket cooler. Certainly, two of the main drawbacks of Nvidia's GeForce GTX 480 are how hot the card gets and how noisy the stock cooler can be. So, if a new cooler can fix these issues, this product has the potential to be a particularly attractive option. At $510 on Newegg, this board is about $60 more than the lowest-priced reference GeForce GTX 480s.

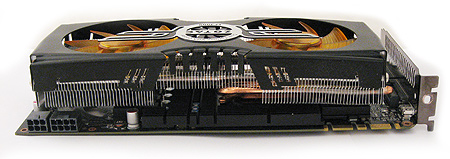

The $60 price premium gets you a Zalman VF3000 graphics card cooler, which is a large and effective unit with dual axial fans and five heat pipes designed to pull heat away from the hot GF100 GPU. At the time of writing, the delta between this card and the reference pack was only $20. Since the Zalman VF3000F cooler will cost in the neighborhood of $50 when it's released (it hasn't made it to retail at the time of writing), that seemed like a very reasonable deal. Now, it's a little less impressive in the face of cratering prices on the GTX 480s.

As with the rest of our factory-overclocked models, good case airflow is a must, as the hot air is not forced out of the back of the case. Instead, most of it will find its way back into the enclosure. Note that the Zotac GeForce GTX 480 AMP! edition card is the only model in our roundup that monopolizes three expansion card slots, due to the massive Zalman cooler.

The card's bundle includes some standard items, such as a driver CD, a manual, a DVI-to-VGA dongle, and a Molex-to-PCIe power adapter. But there are a couple of adapters that I'm not used to seeing: a mini HDMI-to-HDMI adapter and a dual-six-pin-to-eight-pin-PCIe power adapter. The card doesn't have any value-added software, but there are some 30-day trials of CUDA-accelerated software like the Badaboom video encoder. Zotac offers a five-year warranty with this AMP! edition card (and limited lifetime within the US), which is fantastic compared to the competition.

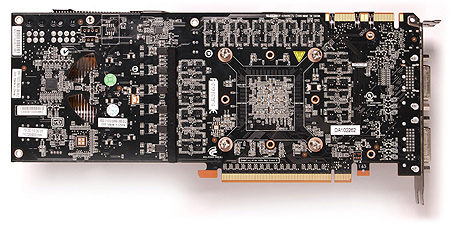

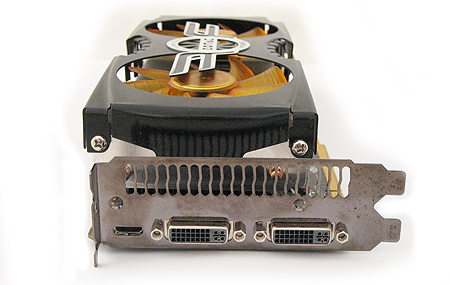

The PCB is 100% reference, complete with unused holes for the cross-flow fan with which the standard model comes equipped. Of course, this doesn't detract from the product. Just like the reference card, the Zotac board comes with 1536 MB of GDDR5 memory. The outputs mirror the reference card, with two dual-link DVI options and a single mini-HDMI port. Because the GF100 includes only two independent display pipelines, you can use just two of this card's three outputs at any given time.

This factory-overclocked AMP! edition card has a core speed of 756 MHz (56 MHz above reference), a shader speed of 1512 MHz (111 MHz over reference), and a memory speed of 950 MHz (26 MHz/104 MT/s effective over reference). As far as we know, the fastest factory-overclocked GeForce GTX 480 is the EVGA GeForce GTX 480 SuperClocked+ model, with a mere 4 MHz more on the core (760 MHz). Yet, the EVGA card has a 61 MHz lower shader speed, so we think it's reasonable to say that the Zotac AMP! card has the highest factory overclock you can get on a GeForce GTX 480. At idle, the card's clocks drop to a miserly 50.5 MHz core/101 MHz shader/67.5 MHz memory to keep things as efficient as possible.
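To put those factory clocks in perspective, here is a minimal Python sketch that works backward from the figures quoted above to the implied reference clocks and the percentage overclock in each domain. The dictionary and function names are our own illustrative choices, and the four-transfers-per-clock factor for GDDR5 is inferred from the 26 MHz/104 MT/s pairing in the text rather than taken from a spec sheet.

```python
# Factory clocks and the stated deltas over reference, in MHz (from the text above).
FACTORY_MHZ = {"core": 756, "shader": 1512, "memory": 950}
DELTA_MHZ = {"core": 56, "shader": 111, "memory": 26}

# Assumption: effective GDDR5 data rate is 4x the quoted memory clock,
# inferred from the 26 MHz -> 104 MT/s figures in the paragraph above.
GDDR5_TRANSFERS_PER_CLOCK = 4


def reference_clock(domain):
    """Implied reference clock: factory clock minus the stated delta."""
    return FACTORY_MHZ[domain] - DELTA_MHZ[domain]


def overclock_percent(domain):
    """Factory overclock expressed as a percentage of the reference clock."""
    return 100.0 * DELTA_MHZ[domain] / reference_clock(domain)


effective_memory_delta = DELTA_MHZ["memory"] * GDDR5_TRANSFERS_PER_CLOCK

if __name__ == "__main__":
    for domain in FACTORY_MHZ:
        print(f"{domain}: reference {reference_clock(domain)} MHz, "
              f"factory overclock {overclock_percent(domain):.1f}%")
    print(f"memory: {effective_memory_delta} MT/s effective over reference")
```

Run as a script, this shows that the core and shader bumps are each roughly 8% over reference, while the memory overclock is much more modest at under 3%.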

Overclocking


As a testament to the effectiveness of the cooling system, we were able to overclock the Zotac card's core to 825 MHz, its shaders to 1650 MHz, and its memory to 1050 MHz. We achieved this with MSI's Afterburner overclocking utility, which, fortunately, allowed us to adjust clock rates and voltages. We increased voltage from 1.05 V to 1.138 V, and we increased the fan speed to 100% to keep temperatures down.
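For a rough sense of how much headroom that leaves over the factory settings, the short Python sketch below computes the percentage gains from the numbers quoted in this section. The names are illustrative only, and the calculation says nothing about real-world performance scaling, which depends on the workload.

```python
# Factory AMP! clocks and our manual overclock, in MHz (figures from this page).
FACTORY_MHZ = {"core": 756, "shader": 1512, "memory": 950}
MANUAL_MHZ = {"core": 825, "shader": 1650, "memory": 1050}

# Core voltage before and after our adjustment, in volts.
STOCK_VOLTS = 1.05
RAISED_VOLTS = 1.138


def gain_percent(before, after):
    """Relative increase from `before` to `after`, as a percentage."""
    return 100.0 * (after - before) / before


if __name__ == "__main__":
    for domain, factory in FACTORY_MHZ.items():
        manual = MANUAL_MHZ[domain]
        print(f"{domain}: {factory} -> {manual} MHz "
              f"(+{gain_percent(factory, manual):.1f}%)")
    print(f"voltage: {STOCK_VOLTS} -> {RAISED_VOLTS} V "
          f"(+{gain_percent(STOCK_VOLTS, RAISED_VOLTS):.1f}%)")
```

The core and shader gains come out identical because the shader clock is exactly twice the core clock in both the factory and manual settings.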


Don Woligroski was a former senior hardware editor for Tom's Hardware. He has covered a wide range of PC hardware topics, including CPUs, GPUs, system building, and emerging technologies.

knutjb Good to see sensible conclusions, bang for the buck.

Amazing how well the ATI cards are doing given their time on the market.

Jax69 i am amazed by ati cards after one year on the market is still strong as hell. very good amd

jonsy2k I'm not liking the trend of these cards consuming more and more pci slots to be honest.

h83 So, the conclusion is that the only good point about those factory overclocked cards are their coolers...

Tamz_msc "Aliens vs. Predator favors the Radeons, just like Crysis favors the GeForce cards. However, the playing field remains very close"

The graphs tell otherwise.

The Lady Slayer It's a shame the Big Green has paid off so many game developers that we'll never see a 'true' comparison between ATI & nVidia