Early Verdict

You’ll need a very specific workload to get the most out of the HighPoint SSD7101 series. These are professional products designed for serious work, but we like the bare model for the DIY crowd because you can fill the adapter with low-cost SSDs.

Pros

- DIY option
- Open platform (not locked to other hardware)
- Excellent cooling
- Heavy workload performance
- Working TRIM
- Hassle-free option with drives
- Wide capacity range

Cons

- Low random performance
- Average NVMe performance in light workloads
- Expensive for consumer use


Specifications & Pricing

Where can you get an 8TB SSD that will work on your workstation without being locked into a proprietary system? The new HighPoint SSD7101 series is such a product, but you don't have to spend $6,500 to achieve a blistering 13,500 MB/s of performance. The company offers several solutions including a DIY model so you can add your own M.2 SSDs. Do you need it? Most likely not. Do you want it? Yes, you do!

HighPoint will soon release seven new products designed to satisfy performance enthusiasts. The upcoming SSD7101 series comes to market in a user-friendly bare DIY package or loaded with Samsung 960 EVO or Samsung 960 Pro NVMe drives. The possibilities are virtually endless, and unlike comparable products, you aren’t locked into a specific motherboard or PC brand.

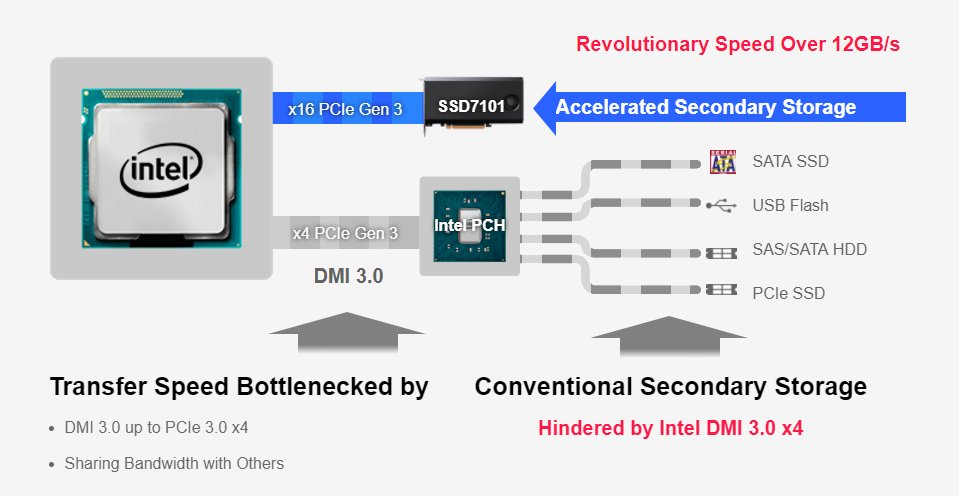

There are several benefits to using an add-in card over your system's existing M.2 ports. Most consumer-focused platforms, like Z270, only feature two M.2 ports, though some motherboards host three. Unfortunately, the ports usually route through the restrictive DMI bus that only provides a PCIe 3.0 x4 connection for all the devices connected to the PCH. Even normal use, like using a USB device or playing audio, will slow your storage system by chewing into the available bandwidth.
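The DMI bottleneck is easier to see with numbers. The sketch below computes theoretical PCIe 3.0 link bandwidth. It assumes 8 GT/s per lane and 128b/130b encoding, treats DMI 3.0 as equivalent to a PCIe 3.0 x4 link (as described above), and ignores packet overhead, so real transfers land somewhat lower. The function name `pcie3_mb_per_s` is illustrative only.

```python
# Theoretical PCIe 3.0 link bandwidth, one direction.
# Assumptions: 8 GT/s per lane, 128b/130b line encoding, no protocol overhead.

GT_PER_S = 8.0            # PCIe 3.0 transfer rate per lane (gigatransfers/s)
ENCODING = 128 / 130      # 128b/130b encoding efficiency

def pcie3_mb_per_s(lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe 3.0 link in MB/s."""
    bits_per_s = GT_PER_S * 1e9 * ENCODING * lanes
    return bits_per_s / 8 / 1e6   # bits -> bytes -> megabytes

if __name__ == "__main__":
    dmi = pcie3_mb_per_s(4)    # DMI 3.0, treated as a PCIe 3.0 x4 link
    x16 = pcie3_mb_per_s(16)   # a full CPU-attached x16 slot
    print(f"x4 (DMI-class): {dmi:,.0f} MB/s")
    print(f"x16 slot:       {x16:,.0f} MB/s")
```

That works out to roughly 3,940 MB/s for the x4-class link, which is shared by everything hanging off the PCH, versus roughly 15,750 MB/s for a CPU-attached x16 slot. The x16 figure leaves headroom above HighPoint's 13,500 MB/s rating.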

Products like the HighPoint SSD7101 really shine when you move away from the mainstream platforms and into workstation-class hardware. Intel's new X299 platform supports vROC (Virtual RAID On CPU), a feature carried over from the enterprise market. The feature allows you to build RAID 0 arrays with up to 20 PCIe-linked storage devices. The array is bootable in RAID 0 without any add-ons, but you must purchase a dongle key to use SSDs other than Intel's. The standard key enables RAID 1 functionality, but Intel will also offer a premium key that unlocks RAID 5 for data redundancy.

We would love to tell you more about the feature, but we are limited to the information that is publicly available. Intel's vROC FAQ page refers us to a white paper for more details, but as of October 2017, the document doesn't exist for public viewing. We've spoken with several motherboard vendors, but they also do not have the document that outlines how to use non-Intel SSDs. We will circle back and test Intel's vROC features once the keys are available.

The other new workstation platform comes from AMD. The Threadripper CPU paired with the X399 chipset supports bootable NVMe RAID through a platform upgrade that recently arrived as a BIOS update and driver package.

The best part about the two new workstation-class platforms is that the PCIe lanes route directly to the CPU and not through the restrictive DMI bus.


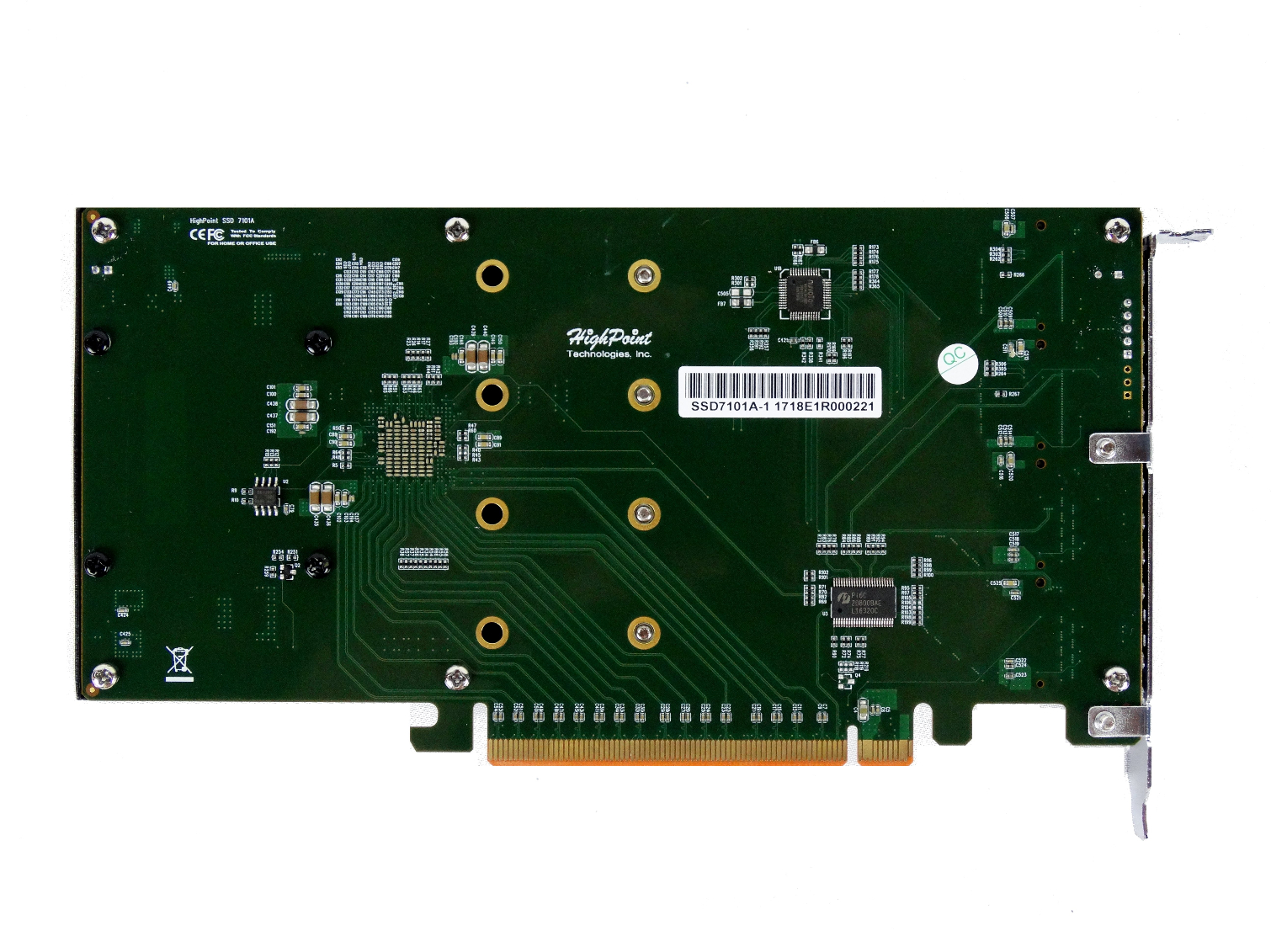

Most current 4x M.2 adapters are simple passive products. They contain very little logic, so the drives appear to your system as four independent SSDs. That means you have to build the array with software RAID, and on Windows systems, a software RAID array is not bootable.
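For readers new to striping, here is a minimal sketch of how a RAID 0 array maps addresses onto its member drives. It assumes four equal-size drives and a hypothetical 128 KiB stripe size. The names `locate` and `members_touched` are ours, and this is not a description of HighPoint's or Windows' actual on-disk layout.

```python
# Minimal RAID 0 (striping) address map, for illustration only.
# Assumptions: equal-size member drives and a fixed, hypothetical stripe size.
from __future__ import annotations

STRIPE_BYTES = 128 * 1024   # hypothetical stripe (chunk) size

def locate(logical_offset: int, drives: int, stripe: int = STRIPE_BYTES) -> tuple[int, int]:
    """Map a byte offset on the array to (member drive index, byte offset on that drive)."""
    chunk, within = divmod(logical_offset, stripe)
    drive = chunk % drives      # consecutive chunks rotate across the members
    row = chunk // drives       # which row of chunks on each member
    return drive, row * stripe + within

def members_touched(offset: int, length: int, drives: int, stripe: int = STRIPE_BYTES) -> set[int]:
    """Return the set of member drives that one request of `length` bytes touches."""
    first = offset // stripe
    last = (offset + length - 1) // stripe
    return {c % drives for c in range(first, last + 1)}

if __name__ == "__main__":
    print(members_touched(0, 4096, 4))        # small request: one member
    print(members_touched(0, 512 * 1024, 4))  # large request: all four members
```

A 4 KiB request at offset 0 touches only drive 0, while a 512 KiB request spans all four members. That asymmetry is why striping scales large sequential transfers far better than small random ones.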

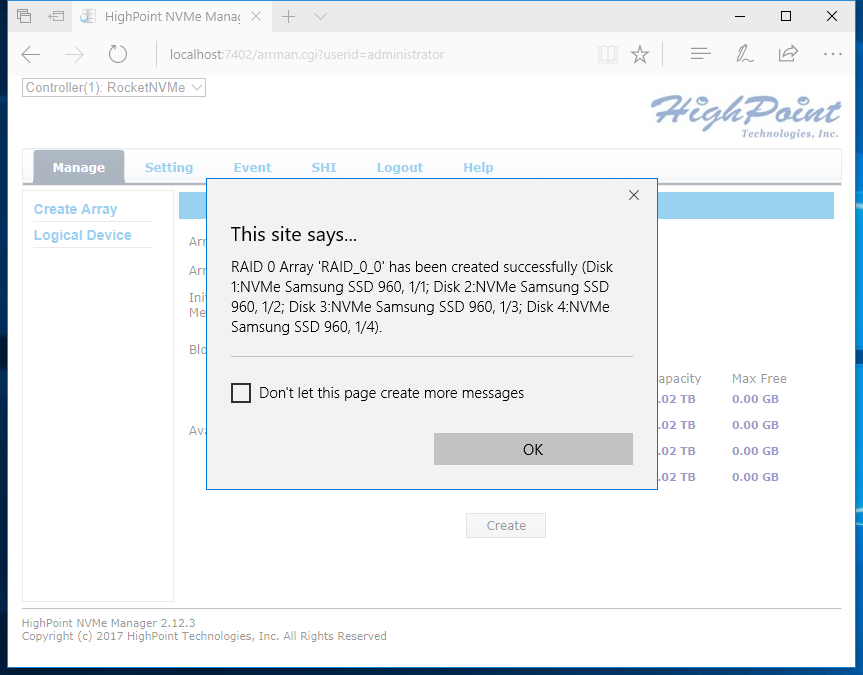

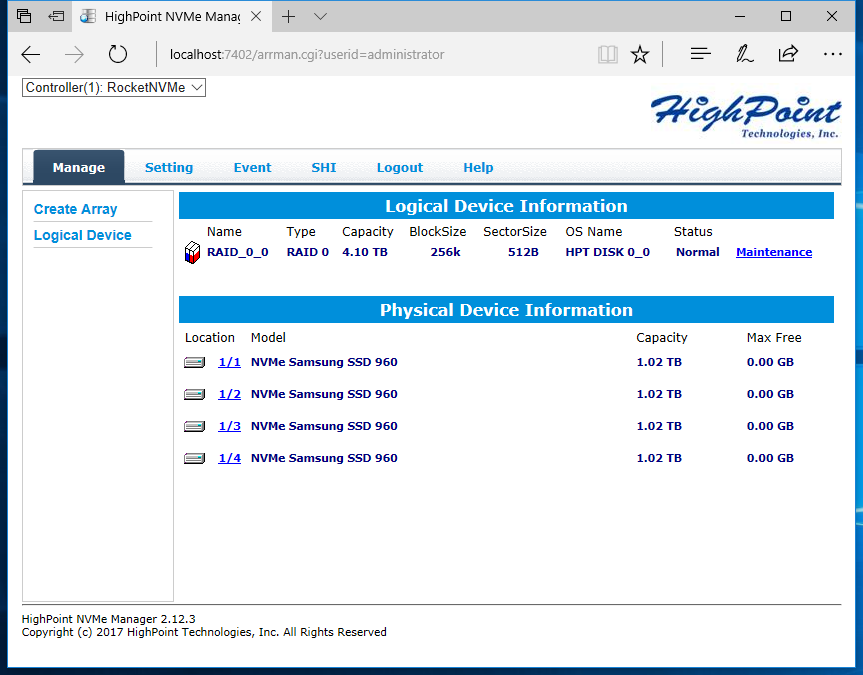

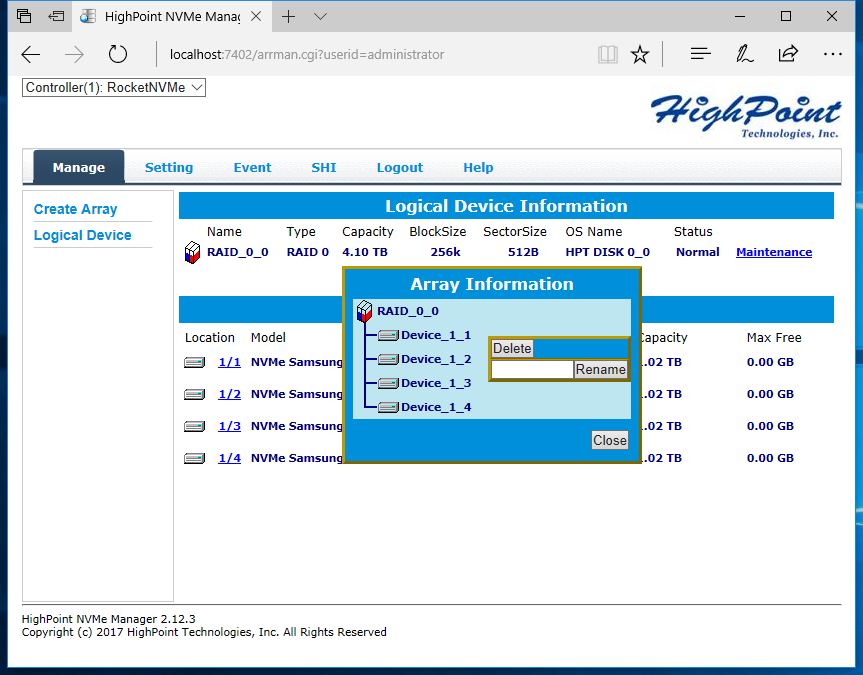

Windows' software RAID presents several challenges for storage testing. Some of our advanced test software can address storage as a physical drive, but it cannot address a software RAID volume as a logical drive. We ran into this issue in our Aplicata Quad M.2 PCIe x8 Adapter review. HighPoint is the first company we've seen that includes a dedicated NVMe RAID driver and software package for this type of product. Not only do you get an easy, intuitive way to build an array, but the array also appears as one logical drive in Disk Management.

Specifications

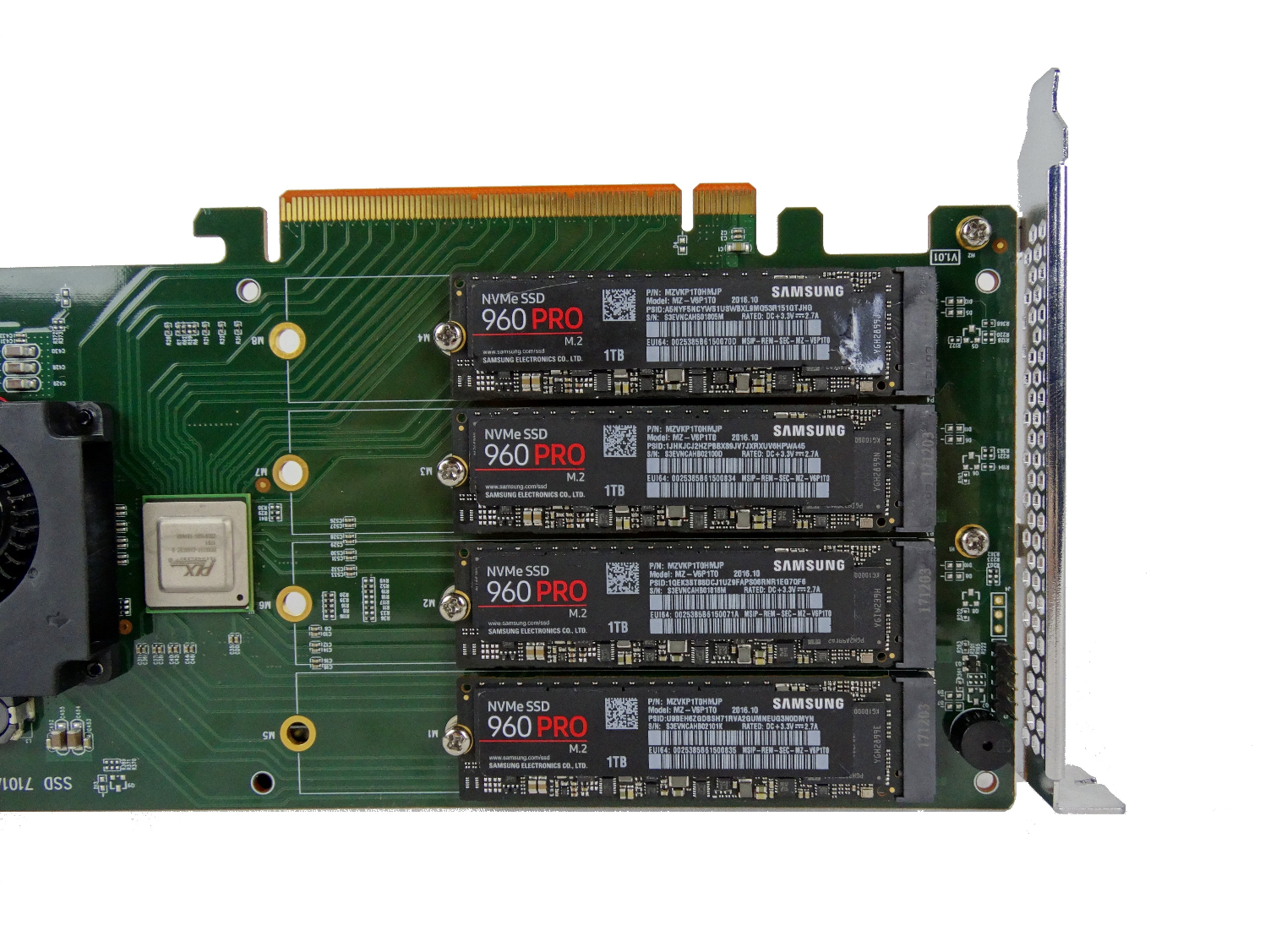

NVMe SSDs are already fast, but HighPoint chose the fastest drives on the market and partnered with Samsung to use the 960 Pro and 960 EVO. Every loaded SKU delivers at least 13,000 MB/s of sequential read performance. Sequential write performance ranges from 6,000 MB/s on the low end to 8,000 MB/s on the high end. These numbers are fairly conservative, but what's a thousand or so megabytes per second when you're dealing with this much throughput?

HighPoint breaks the SSD7101 series into three groups: the bare SSD7101A-1 adapter, the SSD7101A models with Samsung 960 EVO NVMe SSDs, and the SSD7101B models with Samsung 960 Pro NVMe SSDs. Each loaded group comes in three capacities. HighPoint planned to offer two models with just two drives but later canceled them. The website still lists those products, but they will never come to market, so every loaded model features four SSDs.

The HighPoint SSD7101A-1 is a bare adapter that you can load with your own drives, so it will be popular with many of our readers. It's important to understand that you don't have to run the adapter in RAID. You can use it as a pure HBA (Host Bus Adapter) to take advantage of the raw capacity with individual SSDs that you already own. You can also run SSDs other than Samsung's. We tested the adapter with four low-cost MyDigitalSSD BPX 240GB SSDs and the performance was as spectacular as you can imagine, but it wasn't as fast as the 960 Pro drives that Samsung sent us.

HighPoint's driver and software package also enable an advanced configuration that will give you both performance and sticker shock. You can use more than one adapter in your system to reach up to 25,000 MB/s of sequential performance. It's a lot of bandwidth, but we doubt most users would even be able to take advantage of the baseline performance offered by a single adapter loaded with four 960 EVO SSDs.

Speaking of performance, you will notice a glaring hole in the adapter specifications: HighPoint doesn't list random performance metrics. Software RAID struggles to keep pace with high-performance NVMe SSDs during random data transfers. In many of our tests, you'll find that the array is actually slower than a single drive during random workloads. You won't notice it in normal applications like Word, Excel, or loading games, but don't expect to break any random performance records with a software RAID array. That's one reason we're so excited to see what Intel's vROC feature can bring to the table.
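A simplified model helps explain why striping does little for small random I/O. It assumes each random request fits inside one stripe and lands on a uniformly random member drive, and it ignores RAID software overhead entirely. Under those assumptions, the expected number of busy drives at queue depth QD across n drives is n × (1 - (1 - 1/n)^QD). The function name is hypothetical.

```python
# Simplified model: how many striped members are busy at a given queue depth.
# Assumptions (hypothetical): each random request fits in one stripe and lands
# on a uniformly random member; RAID software overhead is ignored.

def expected_busy_drives(drives: int, queue_depth: int) -> float:
    """Expected number of distinct member drives with at least one outstanding request."""
    # A given drive is idle with probability (1 - 1/drives) ** queue_depth.
    return drives * (1 - (1 - 1 / drives) ** queue_depth)

if __name__ == "__main__":
    for qd in (1, 2, 4, 32):
        print(f"QD{qd:>2}: {expected_busy_drives(4, qd):.2f} of 4 drives busy")
```

The loop reports about 1.00, 1.75, 2.73, and 4.00 busy drives for QD1, QD2, QD4, and QD32. At QD1 only one of four drives works at a time, so the array behaves like a single SSD plus RAID overhead, which is consistent with the low-queue-depth behavior described above.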

The HighPoint SSD7101 comes up short in one area. All the competing products we've tested feature host power failure protection via capacitors. The SSD7101 doesn't have that extra layer of data protection. That's an important feature because Samsung's NVMe driver caches user data in volatile cache for a brief time to increase performance, which exposes you to potential data loss during an unexpected power down.

Pricing, Warranty, And Endurance

There are numerous products, so we'll refer you back to the specification chart for individual pricing. The bare SSD7101A-1 adapter starts the series at just $399. The SSD7101A starts with four 250GB Samsung 960 EVO SSDs for $1,099 and jumps to $2,699 for the 4TB model. The SSD7101B with Samsung 960 Pro SSDs starts at $1,999 for the 2TB model and leaps to $6,499 for a massive 8TB of capacity.
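Putting the quoted prices on a per-gigabyte basis makes the tiers easier to compare. This is simple arithmetic on the figures above. Capacities are the nominal totals quoted here in decimal gigabytes, and the bare $399 adapter is left out because it ships without storage. The dictionary labels and function name are ours.

```python
# Price per gigabyte for the loaded configurations quoted in this section.
# Capacities are nominal totals in decimal gigabytes (e.g. 4 x 250GB = 1,000GB).

CONFIGS = {
    "960 EVO, 1TB": (1_099, 1_000),
    "960 EVO, 4TB": (2_699, 4_000),
    "960 Pro, 2TB": (1_999, 2_000),
    "960 Pro, 8TB": (6_499, 8_000),
}

def price_per_gb(price_usd: float, capacity_gb: float) -> float:
    """Dollars per nominal gigabyte."""
    return price_usd / capacity_gb

if __name__ == "__main__":
    for name, (price, gb) in CONFIGS.items():
        print(f"{name}: ${price_per_gb(price, gb):.2f}/GB")
```

The spread runs from about $1.10/GB for the entry 960 EVO model down to about $0.67/GB for the 4TB 960 EVO model, with the 960 Pro models near $1.00/GB and $0.81/GB.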

We're testing the SSD7101B-040T armed with four Samsung 960 Pro 1TB SSDs for a total of 4TB of high-speed performance.

HighPoint covers the SSD7101 series with a one-year warranty. That's significantly less than the 960 Pro’s five-year warranty and the 960 EVO’s three-year warranty. We're not sure whether users could go back to Samsung for warranty service on the individual drives.

The endurance ratings are in line with the drives inside the enclosure. Endurance scales with capacity and varies between the 960 EVO and Pro. The series starts on the low end with a 400 TBW rating, and that jumps to 4,800 TBW for the large 8TB model.
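The array ratings line up with simple multiplication. The sketch assumes RAID 0 spreads writes roughly evenly, so the array can absorb about the sum of its members' ratings. The per-drive figures (100 and 1,200 TBW) are inferred by dividing the totals above by four, not taken from a spec sheet. It also converts the 8TB rating into full-capacity writes per day over one year (HighPoint's warranty) and five years (the 960 Pro's warranty). The function names are hypothetical.

```python
# Endurance arithmetic for a four-drive RAID 0 array.
# Assumption: striping spreads writes roughly evenly, so the array rating is
# about the sum of the member ratings. Per-drive values = array totals / 4.

def array_tbw(per_drive_tbw: float, drives: int = 4) -> float:
    """Approximate array endurance (terabytes written) from per-drive ratings."""
    return per_drive_tbw * drives

def drive_writes_per_day(tbw: float, capacity_tb: float, years: float) -> float:
    """Full-capacity writes per day that would consume `tbw` over `years`."""
    return tbw / (capacity_tb * 365 * years)

if __name__ == "__main__":
    print(array_tbw(100))    # low end: 400 TBW
    print(array_tbw(1_200))  # 8TB model: 4,800 TBW
    print(f"{drive_writes_per_day(4_800, 8, 1):.2f} drive writes/day over 1 year")
    print(f"{drive_writes_per_day(4_800, 8, 5):.2f} drive writes/day over 5 years")
```

Spread over one year, the 8TB model's 4,800 TBW allows about 1.64 full-capacity writes per day; spread over five years, it allows about 0.33.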

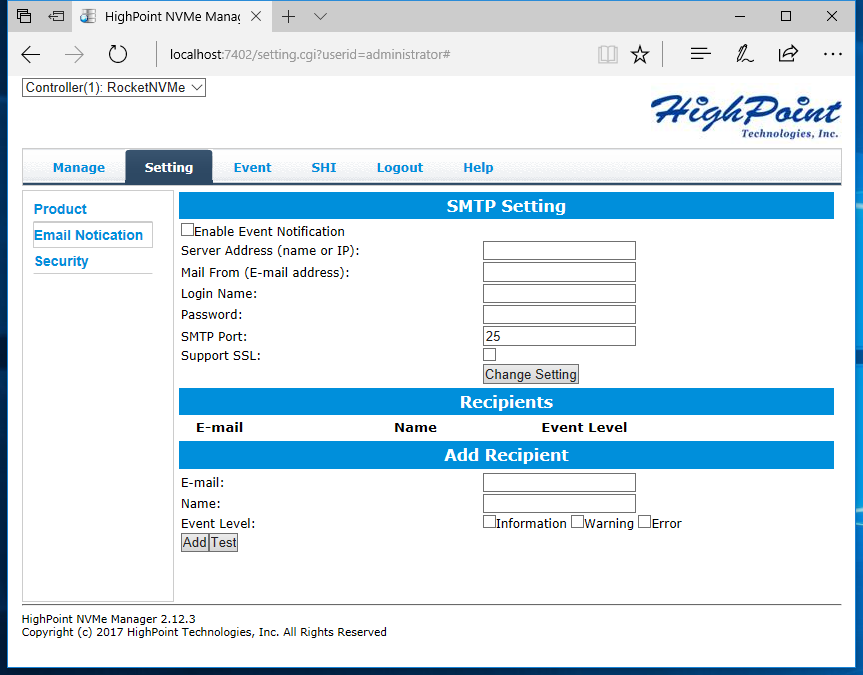

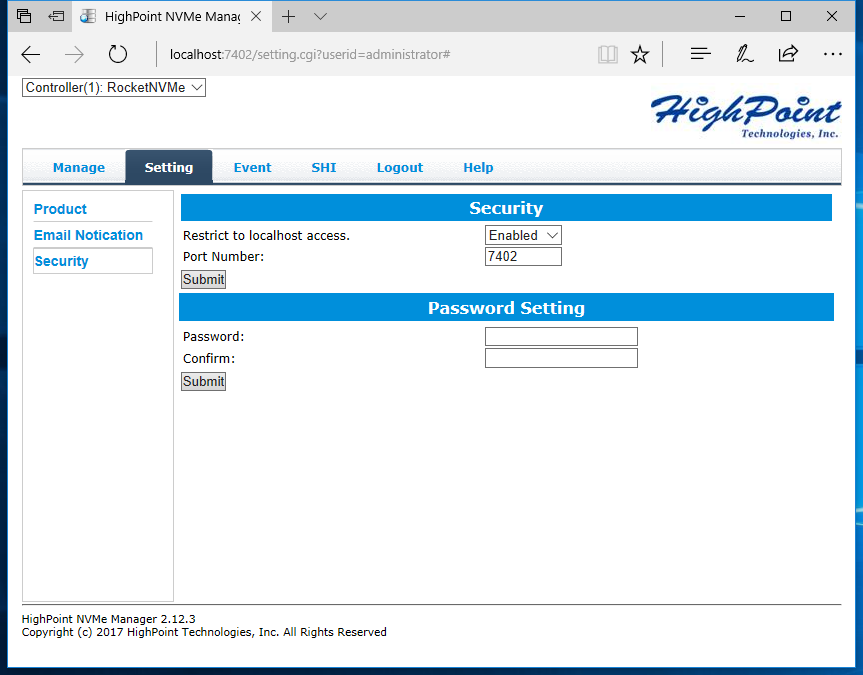

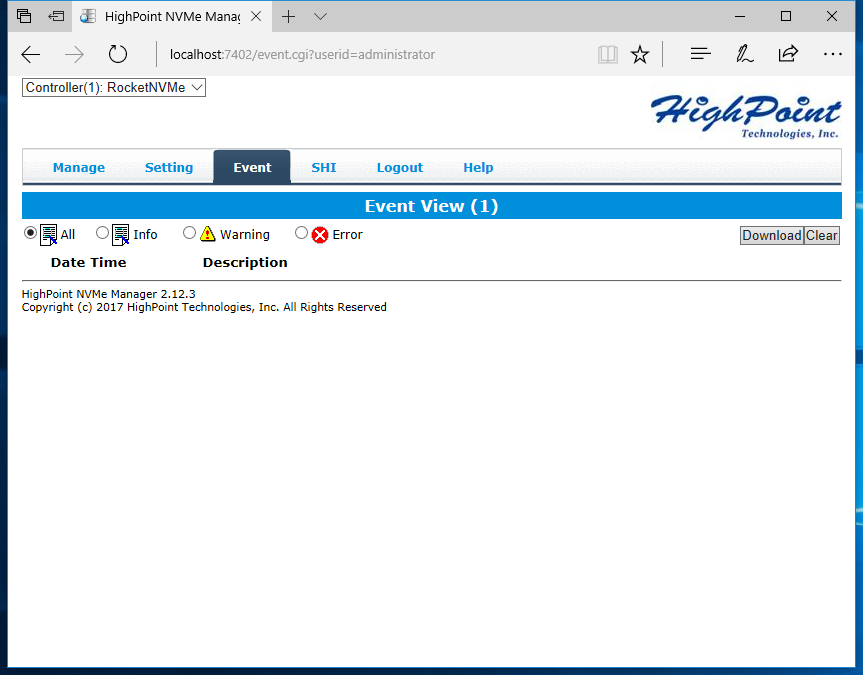

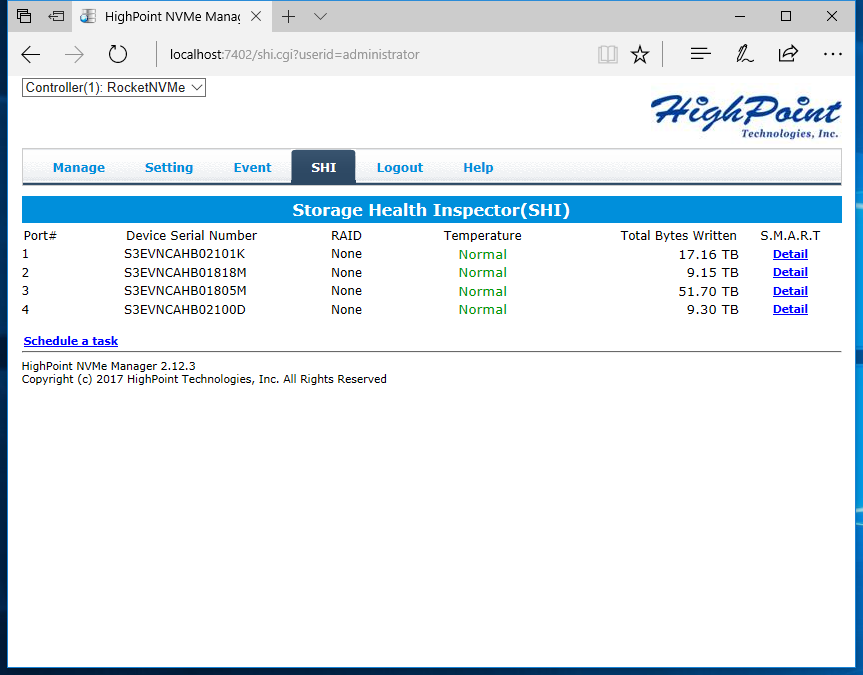

Software

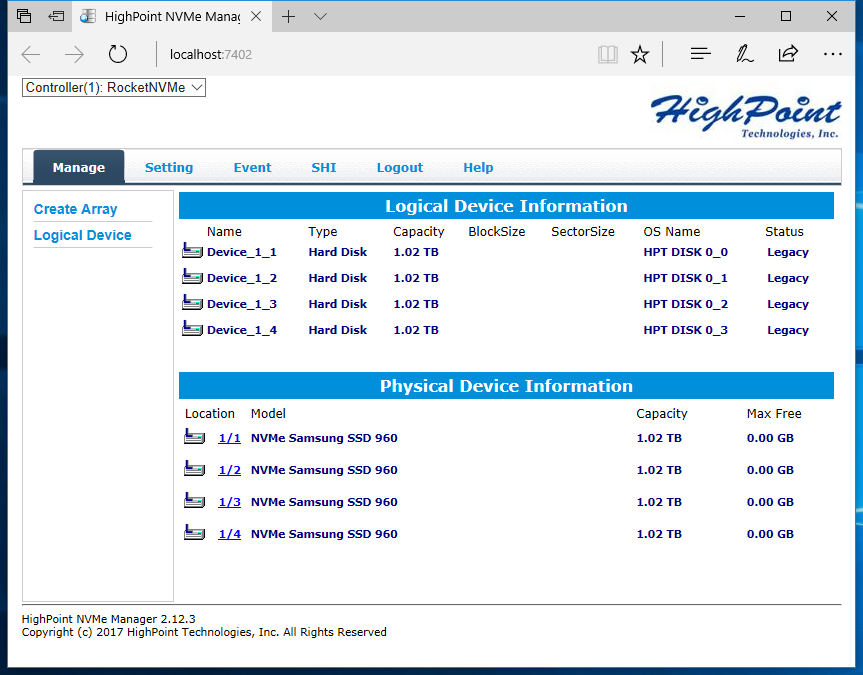

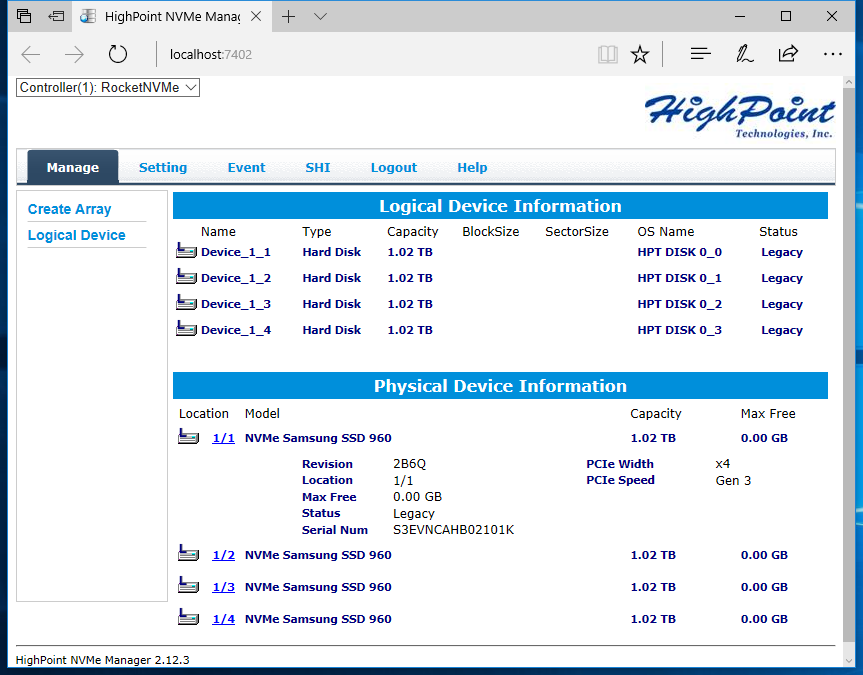

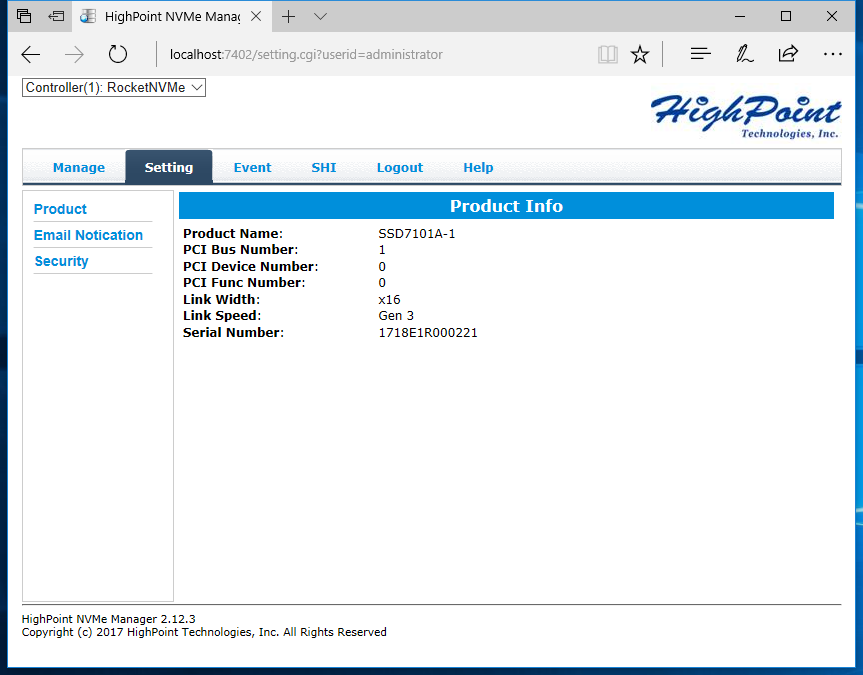

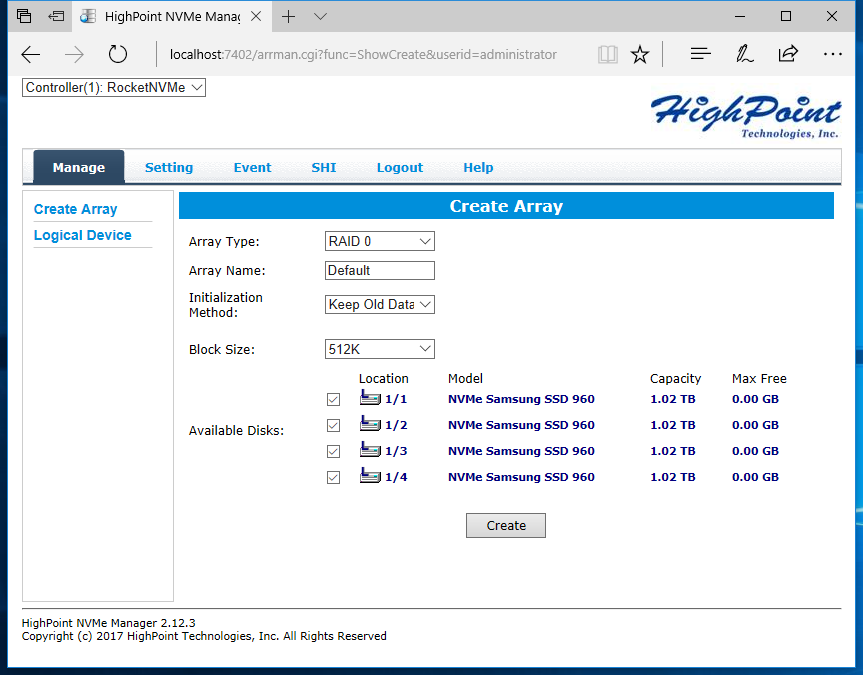

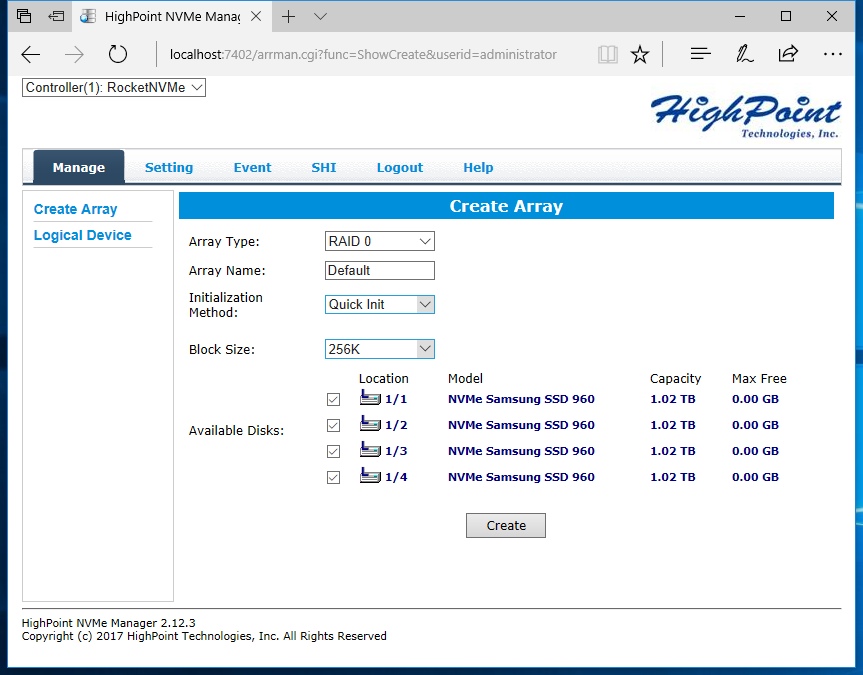

The software is intuitive, but it also provides details that you might not expect. It's really like having an old-school hardware RAID controller, just simplified.

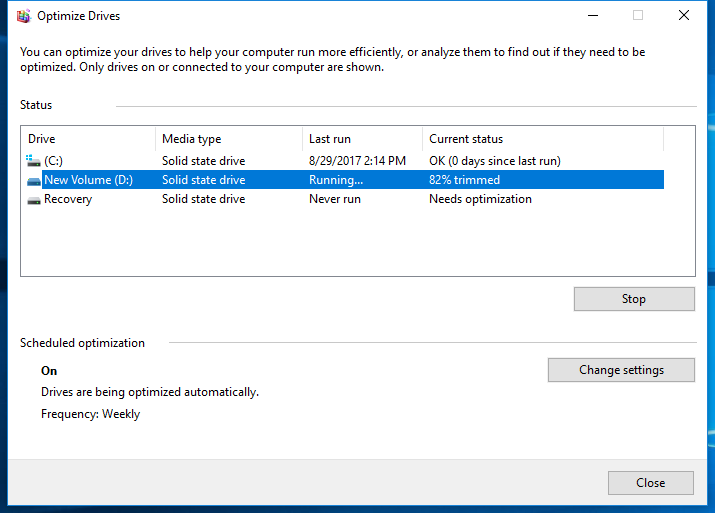

The SSD7101 comes with a custom NVMe driver, which brings a unique function that we haven't seen with other competing products: it enables TRIM in Windows. That's important because TRIM lets the operating system tell the SSDs which blocks no longer hold valid data, so the drives can clean them up in advance. This helps keep your array running at high speed, even after heavy use. The other platforms we've tested so far do not pass the TRIM command because Windows' software RAID arrays don't support it. We expect Intel's vROC to pass TRIM, but we haven't confirmed that yet.

We tested the adapter on Z170, Z270, X99, and X299 systems, and none of them had any issue recognizing the adapter or the SSDs. On Z170 and Z270, the only way to use the full 16 lanes of bandwidth is to run on the integrated graphics. With a discrete video card installed, the CPU's 16 lanes split between the two full-length PCIe slots, which limits the adapter to just eight lanes. You don't have that problem on the workstation-class X99 and X299 systems, so HighPoint recommends using the SSD7101 series in workstations.
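A quick calculation shows what the x8 fallback costs. This uses theoretical PCIe 3.0 figures (8 GT/s per lane, 128b/130b encoding, no protocol overhead), so real-world ceilings are somewhat lower. The constant and function names are ours.

```python
# Theoretical PCIe 3.0 ceilings for the adapter in a x16 vs. a x8 slot.
# Assumptions: 8 GT/s per lane, 128b/130b encoding, no protocol overhead.

PER_LANE_MB_S = 8e9 * (128 / 130) / 8 / 1e6   # ~984.6 MB/s per lane, one direction
RATED_READ_MB_S = 13_500                      # HighPoint's quoted sequential read

def link_ceiling(lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe 3.0 link with `lanes` lanes."""
    return PER_LANE_MB_S * lanes

if __name__ == "__main__":
    for lanes in (16, 8):
        ceiling = link_ceiling(lanes)
        status = "above" if ceiling >= RATED_READ_MB_S else "below"
        print(f"x{lanes}: {ceiling:,.0f} MB/s, {status} the 13,500 MB/s rating")
```

A x16 link (about 15,750 MB/s) clears the rated sequential read, but a x8 link tops out near 7,880 MB/s, roughly half of what four fast NVMe SSDs can deliver together.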

Packaging

Our HighPoint sample arrived before the retail packages were printed. The box is straightforward; you get the adapter, four screws for mounting drives, a paper manual, and a sticker with the serial number.

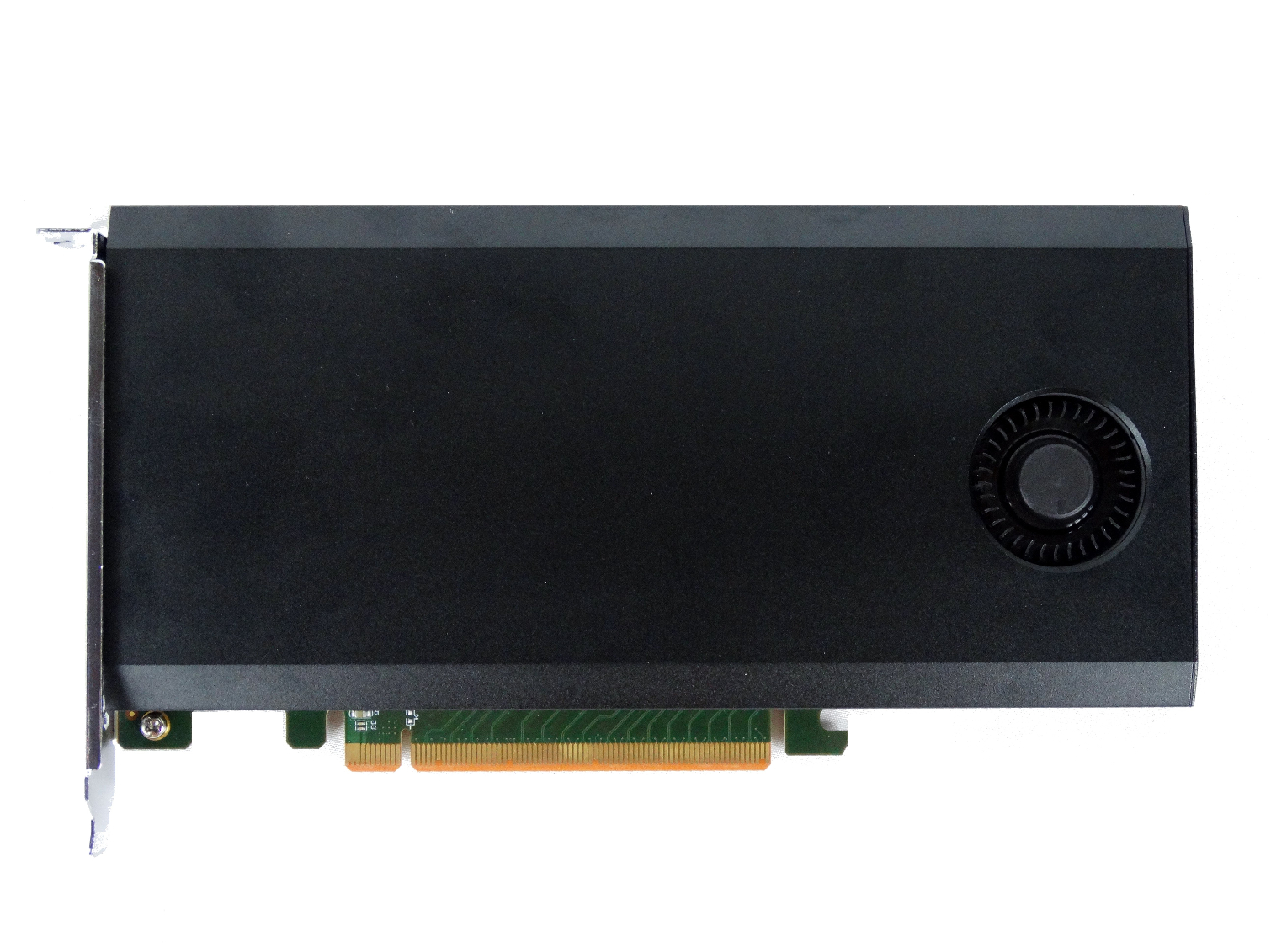

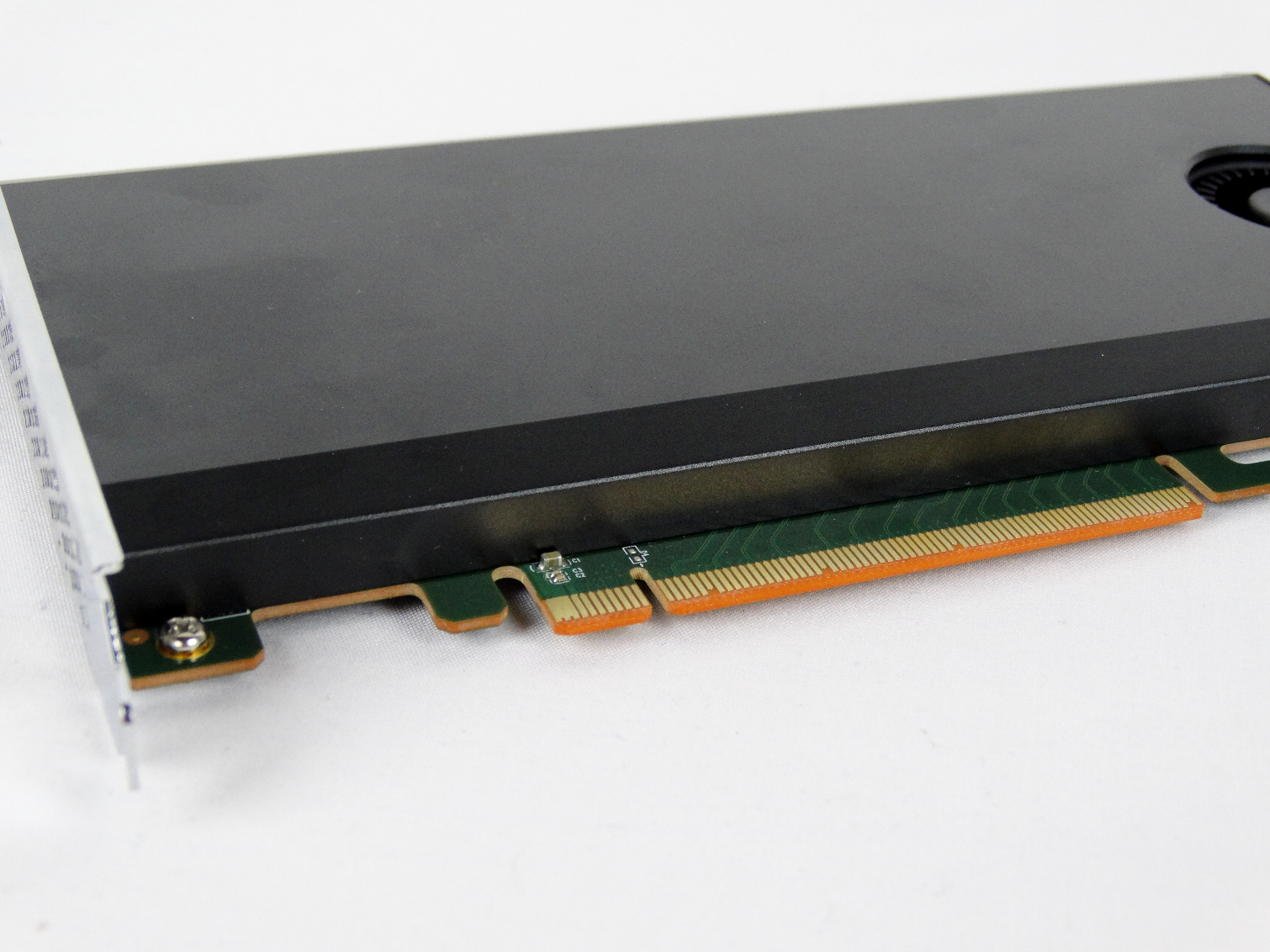

A Closer Look

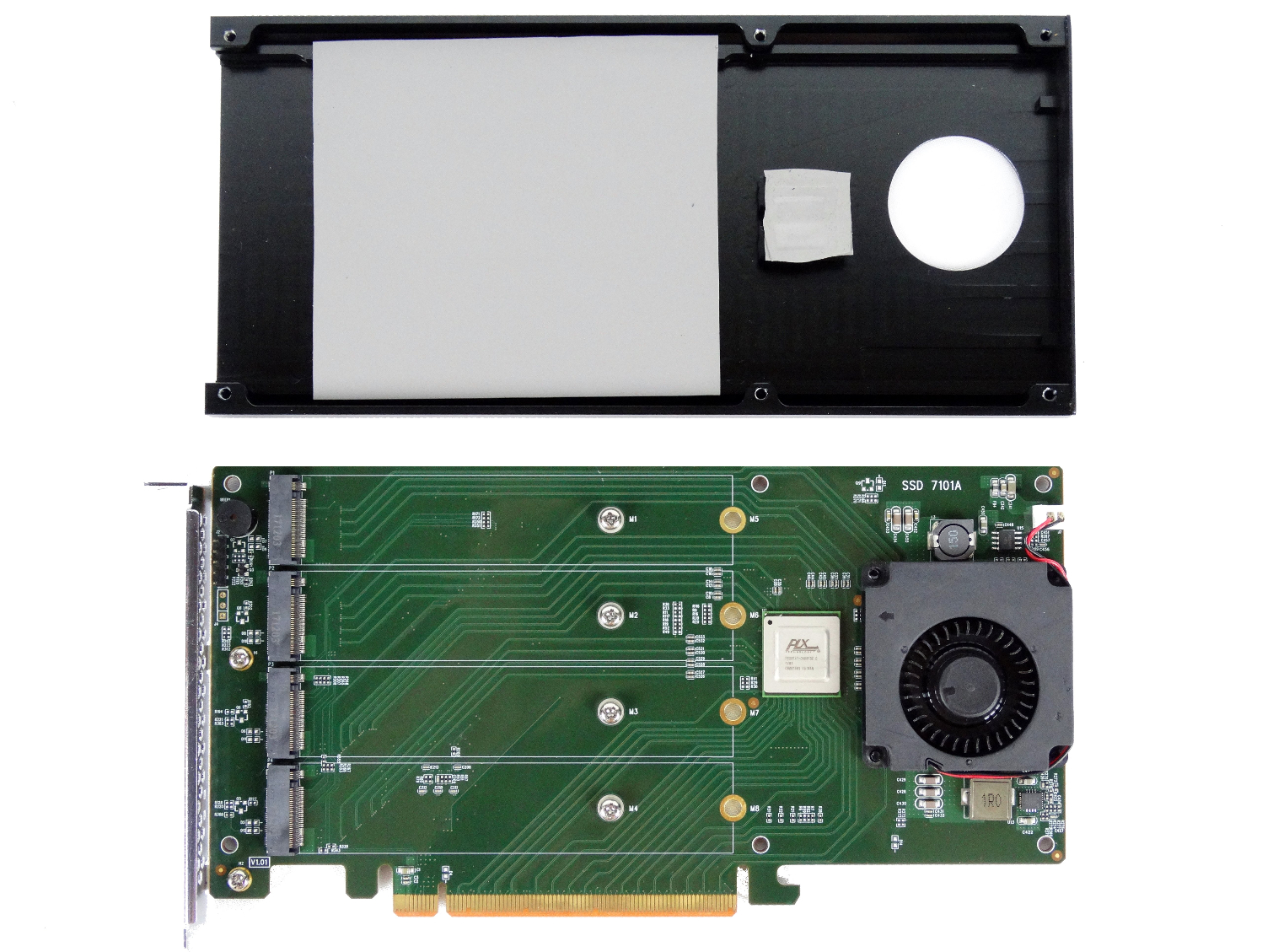

In the pictures, the top cover looks like a large plastic piece, but it's actually made of aluminum. A squirrel-cage fan pushes air through the inside of the heatsink, around the drives, and over the PLX bridge chip.

The Tear Down

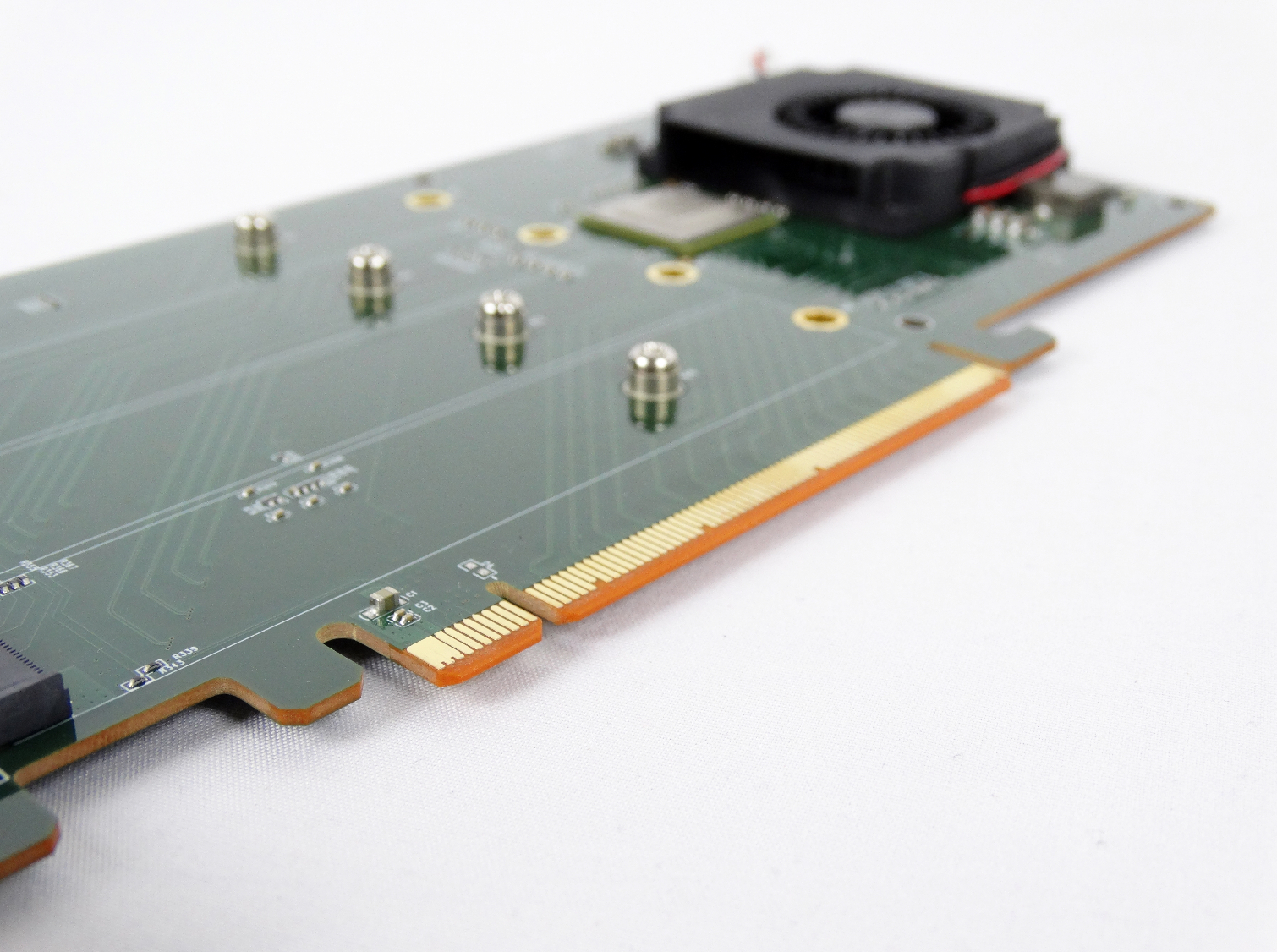

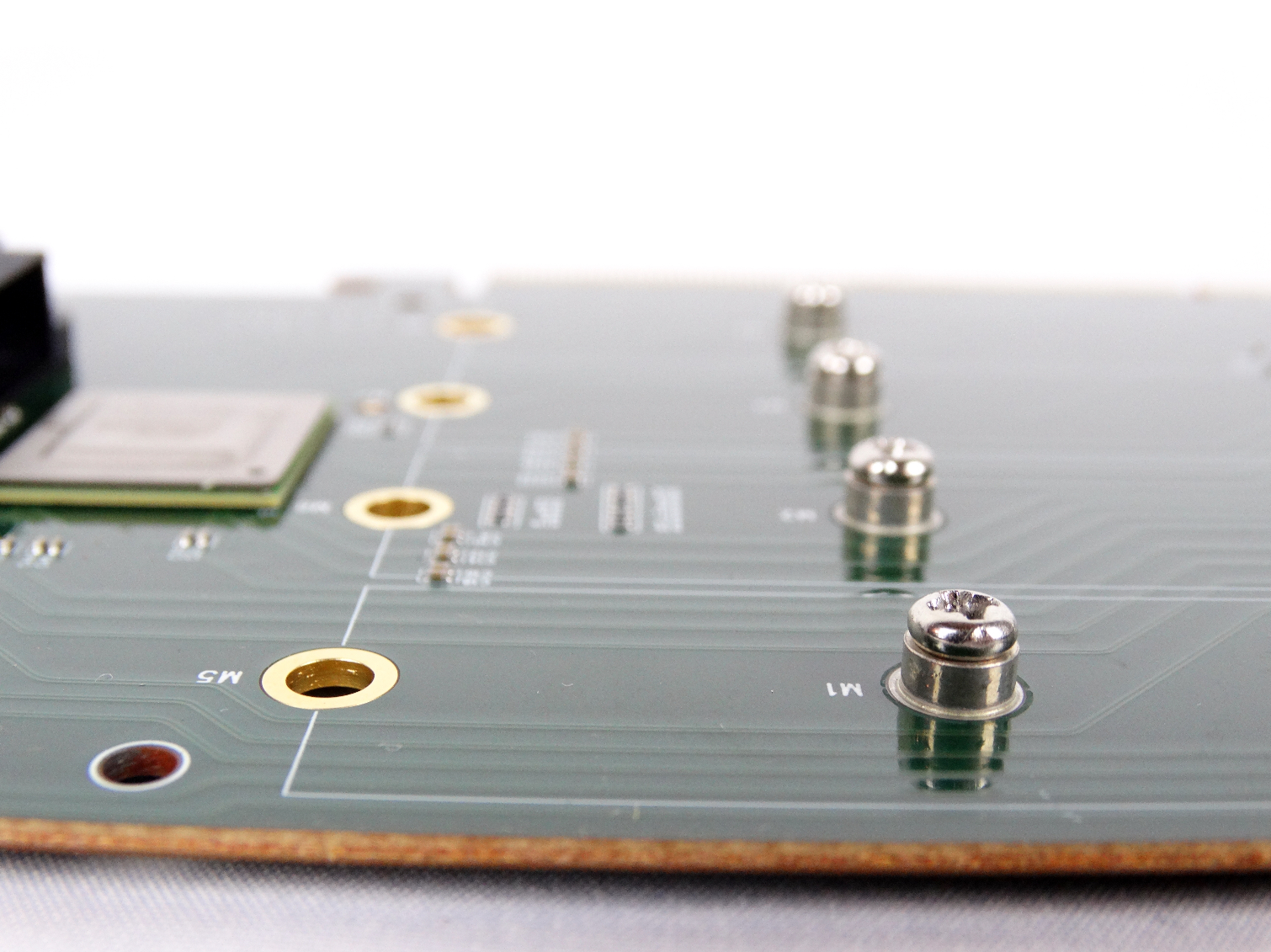

The adapter supports two M.2 form factors -- the widely-used 2280 and the 22110 that's used primarily in high-capacity enterprise NVMe SSDs.
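The size codes follow the standard M.2 naming scheme: the first two digits give the module width in millimeters, and the remaining digits give the length. So a 2280 module is 22 mm by 80 mm and a 22110 module is 22 mm by 110 mm. The helper below is a hypothetical illustration of that scheme.

```python
# M.2 size codes: first two digits = width in mm, remaining digits = length in mm.
from __future__ import annotations

def m2_dimensions(code: str) -> tuple[int, int]:
    """Return (width_mm, length_mm) for an M.2 size code such as '2280'."""
    if not code.isdigit() or len(code) not in (4, 5):
        raise ValueError(f"not an M.2 size code: {code!r}")
    return int(code[:2]), int(code[2:])

if __name__ == "__main__":
    for code in ("2280", "22110"):
        width, length = m2_dimensions(code)
        print(f"M.2 {code}: {width} mm wide, {length} mm long")
```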

We also get a better look at the fan that cools the components. Under full load, the adapter is very quiet. We couldn't distinguish the fan noise from the typical system fans.

Thermal transfer material sits between the drives and the heatsink. It improves contact and maximizes heat transfer from the SSD controllers to the large heatsink.


Chris Ramseyer was a senior contributing editor for Tom's Hardware. He tested and reviewed consumer storage.

Comments

gasaraki: So this is a "RAID controller" but you can only use software RAID on this? Wut? I want to be able to boot off of this and use it as the C drive. Does this have legacy BIOS on it so I can use it on systems without UEFI?

mapesdhs: I'll tell you what to do with it: contact the DoD and ask them if you can run the same defense imaging test they used with an Onyx2 over a decade ago, and see if you can beat what that system was able to do. ;)

http://www.sgidepot.co.uk/onyx2/groupstation.pdf

More realistically, this product is ideal for GIS, medical, automotive and other application areas that involve huge datasets (including defense imaging), though the storage capacity still isn't high enough in some cases, but it's getting there.

I do wonder about the lack of power loss protection though, seems like a bit of an oversight for a product that otherwise ought to appeal to pro users.

Ian.


samer.forums, replying to gasaraki: This is not a RAID controller. It uses software RAID. The PLX chip on it allows the system to "think" that the x16 slot is four independent x4 slots, all active and bootable. The rest is software.

samer.forums: I see this product as very useful for people who want huge SSD storage that the motherboard will not give them, and for RAID 1. The real-life performance gain from NVMe SSD RAID can't be noticed...

samer.forums: Hey HighPoint, if you are reading this, please make this card low profile and place two M.2 SSDs on each side of the card (four total).

samer.forums, replying to mapesdhs: There is a U.2 version of this card. Using it with U.2 NVMe SSDs that have built-in power-loss protection will solve this problem.

link

http://www.highpoint-tech.com/USA_new/series-ssd7120-overview.htm


JonDol: I hope that the lack of power loss protection was the only reason this product didn't get an award. Could you please confirm it, or list other missing details/features for an award?

The fact alone that such a pro product landed in the consumer arena, even if it is for a very selective category of consumers, is nothing short of an achievement.

The explanation of how the M.2 traffic is routed through the DMI bus was very clear. I wonder if there is even a single motherboard that will route that traffic directly to the CPU. Does anyone know of such a motherboard?

Cheers

samer.forums, replying to JonDol: All motherboards have slots wired to the CPU lanes. For example, most Z-series boards come with two SLI slots that run at eight lanes each. You can use one of them for the GPU and the other for any other card, and so on.

If the CPU has more than 16 lanes, you will have more slots connected to the CPU lanes, as on X299 motherboards.