HighPoint SSD7101 Series SSD Review


Performance Testing

Comparison Products

We put the HighPoint 4TB SSD7101B-040T up against our group of 1TB NVMe SSDs. This group spans a wide range of price points. The Intel 600p is the only true low-cost drive in the group, and most of the other products fall in the middle of the price spectrum. The Corsair Neutron NX500 and Samsung 960 Pro are the most expensive drives in this class, but the 960 Pro also delivers the highest performance. Our charts use the Samsung 960 Pro 2TB rather than the 1TB model. Now that we have 960 Pro 1TB drives in the lab, we will add them to the charts in future reviews.

Test System

To fully utilize the HighPoint SSD7101, we brought back the Intel Core i9-7900X system with the X299 chipset. We introduced the system in our Aplicata Quad M.2 PCIe x8 Adapter review. It allows us to run the SSD7101 in full PCIe 3.0 x16 mode.
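
Why the full x16 link matters comes down to simple arithmetic. The sketch below computes the theoretical PCIe 3.0 ceiling from the standard 8 GT/s per-lane rate and 128b/130b encoding. The function name is ours, and the calculation ignores protocol overhead, so treat the results as upper bounds rather than achievable throughput.

```python
# Theoretical PCIe 3.0 link ceiling per direction. This ignores protocol
# overhead (packet headers, flow control), so real throughput will be lower.
GT_PER_SECOND = 8e9              # PCIe 3.0: 8 GT/s per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def pcie3_bandwidth_mb_s(lanes: int) -> float:
    """Encoded-payload ceiling of a PCIe 3.0 link in MB/s (10^6 bytes/s)."""
    bits_per_second = GT_PER_SECOND * ENCODING_EFFICIENCY * lanes
    return bits_per_second / 8 / 1e6


# x4 = one M.2 NVMe SSD, x8 = a half-width slot, x16 = the SSD7101's host slot
for lanes in (4, 8, 16):
    print(f"x{lanes}: {pcie3_bandwidth_mb_s(lanes):,.0f} MB/s")
```

Four x4 drives add up to the x16 ceiling of roughly 15.75 GB/s, while an x8 link tops out near 7.9 GB/s, below the 9,000 MB/s of sequential reads the array delivers in our testing.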

HighPoint and Samsung sent us four 960 Pro 1TB NVMe SSDs to load the adapter. That creates the SSD7101B-040T, a $4,000 SSD that, on paper, should outperform every SSD we've ever tested.

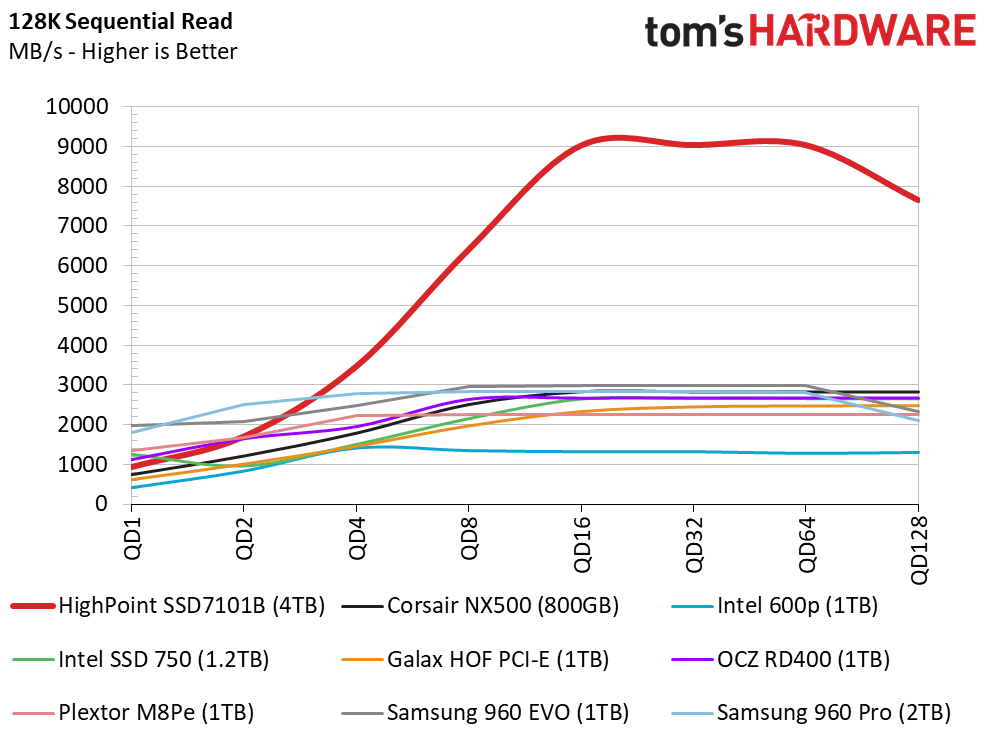

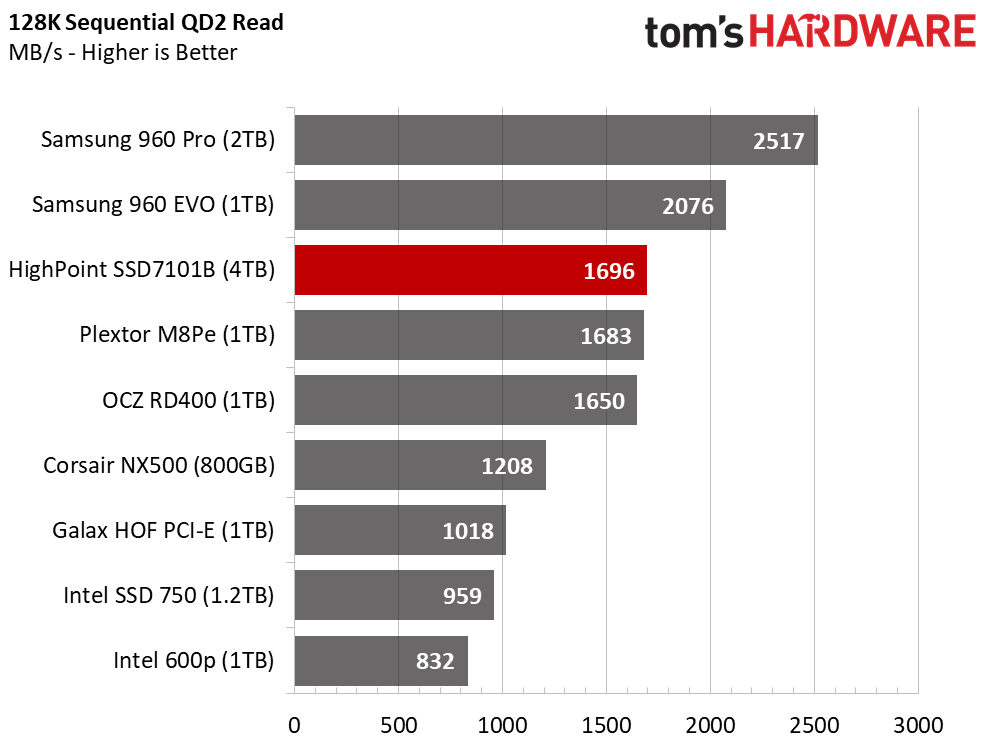

Sequential Read Performance

To read about our storage tests in depth, please check out How We Test HDDs And SSDs. We cover four-corner testing on page six of our How We Test guide.

The HighPoint SSD7101B-040T 4TB SSD sets a strong tone for this review. With a single worker, it delivers over 9,000 MB/s of sequential read performance. The exceptionally high result comes at a queue depth (QD) of 16, which is well beyond what you would see with a typical desktop workload. The drive delivered lower performance than a single 960 Pro at low QDs, but the throughput shot up significantly when we reached QD4.
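
Queue depth matters because of Little's Law: the number of requests in flight equals IOPS multiplied by mean latency. The sketch below, with hypothetical helper names, backs out the average request latency implied by a throughput result. The 128KB block size is an assumption here, borrowed from the 128KB transfers used in the sequential steady-state test.

```python
# Little's Law for storage: requests in flight = IOPS x mean latency.
# Helper names are hypothetical; 1 KiB = 1,024 bytes, 1 MB = 10^6 bytes.


def implied_latency_us(queue_depth: int, throughput_mb_s: float, block_kib: int) -> float:
    """Mean per-request latency (microseconds) implied by a steady throughput."""
    block_bytes = block_kib * 1024
    iops = throughput_mb_s * 1e6 / block_bytes
    return queue_depth / iops * 1e6


def throughput_at(queue_depth: int, latency_us: float, block_kib: int) -> float:
    """Throughput (MB/s) if each of queue_depth requests takes latency_us on average."""
    iops = queue_depth / (latency_us / 1e6)
    return iops * block_kib * 1024 / 1e6


lat = implied_latency_us(16, 9_000, 128)  # ~9,000 MB/s at QD16, assumed 128KB blocks
print(f"~{lat:.0f} us per request at QD16")
print(f"QD1 at the same latency: {throughput_at(1, lat, 128):.1f} MB/s")
```

At QD16, 9,000 MB/s of 128KB reads implies an average of roughly 233 µs per request. If latency stayed the same, QD1 would yield only a sixteenth of that throughput, which is why a striped array needs deep queues to keep all four drives busy.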

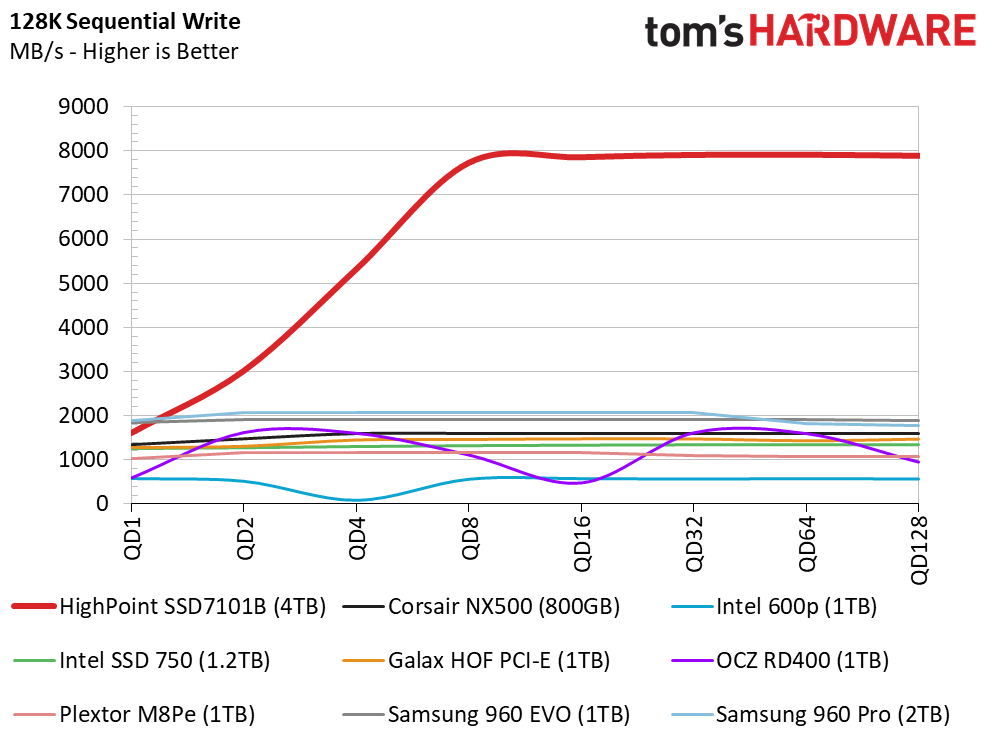

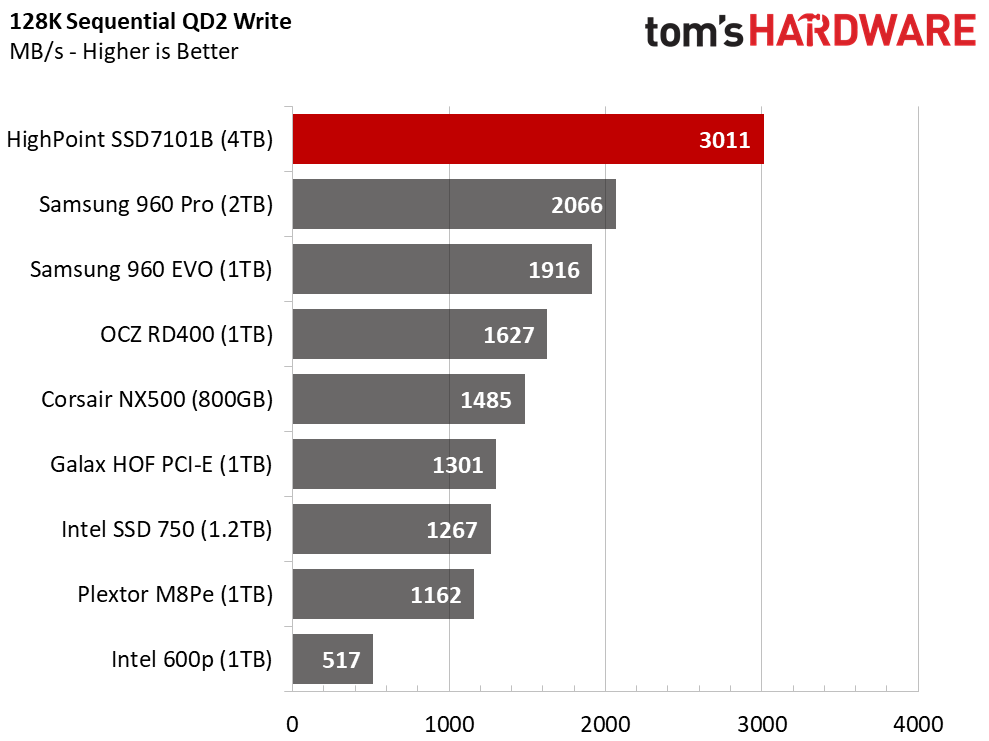

Sequential Write Performance

The sequential write test tells a slightly different story. At QD1, performance is in line with the single 960 Pro, but by QD2 the SSD7101 again pulls away from the rest of the products. The HighPoint levels off around 8,000 MB/s at QD8 and holds steady through the remainder of the workload.

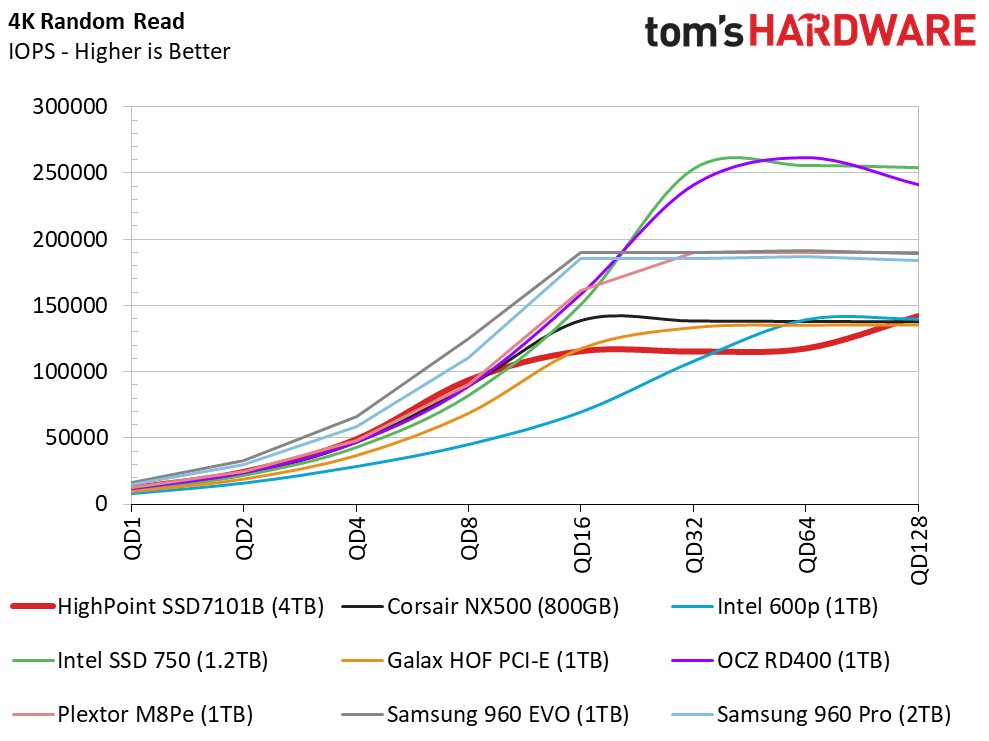

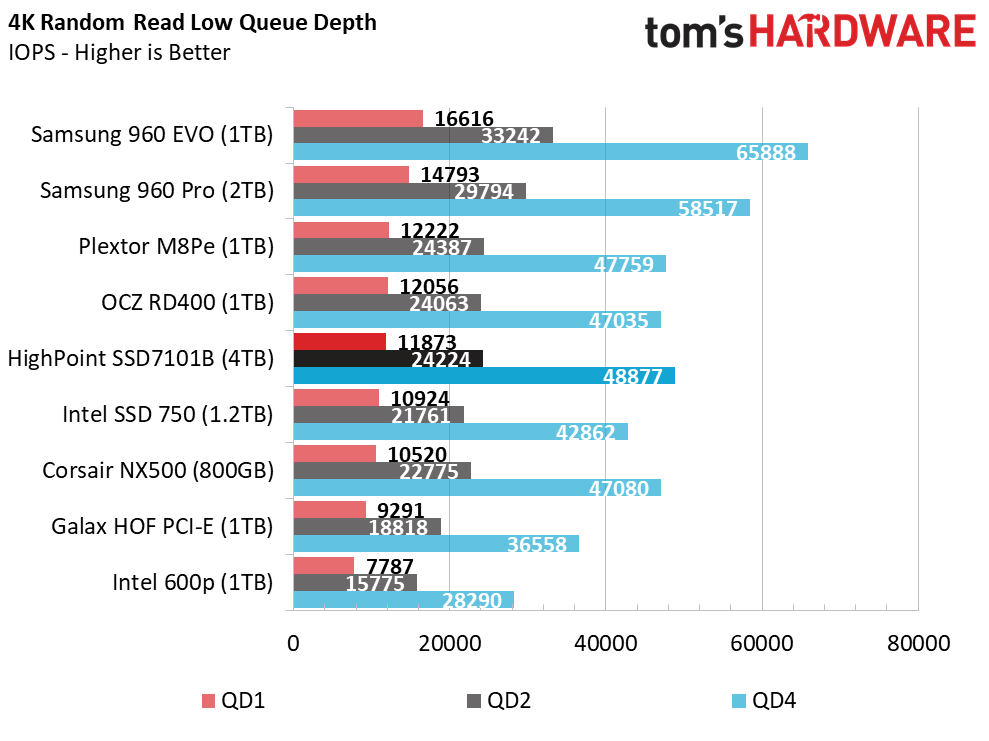

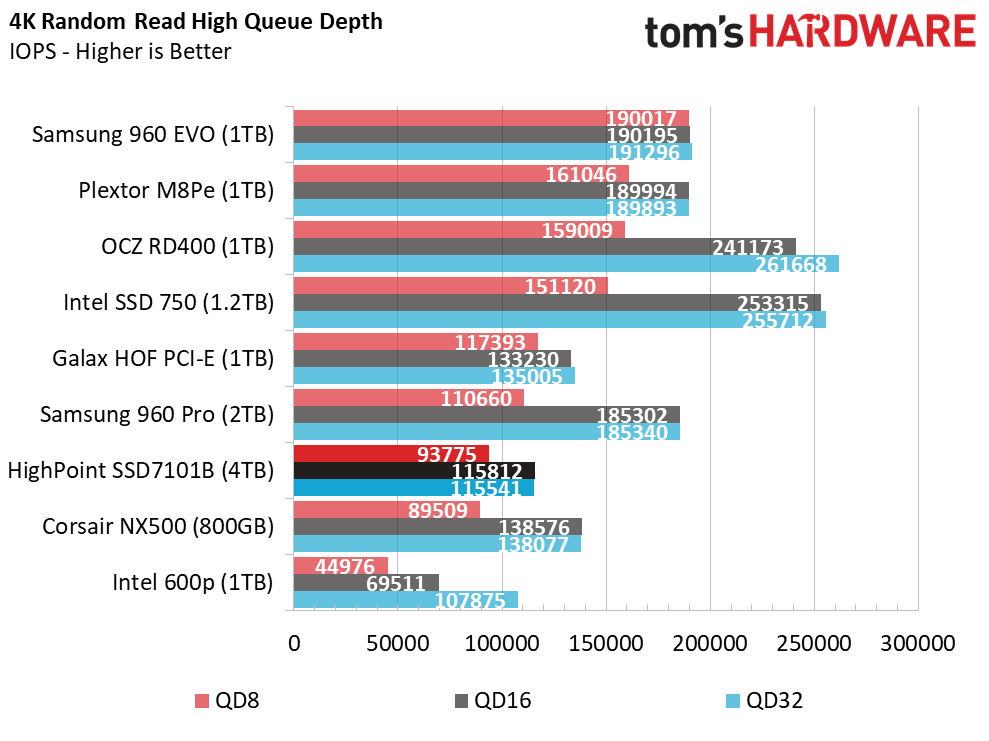

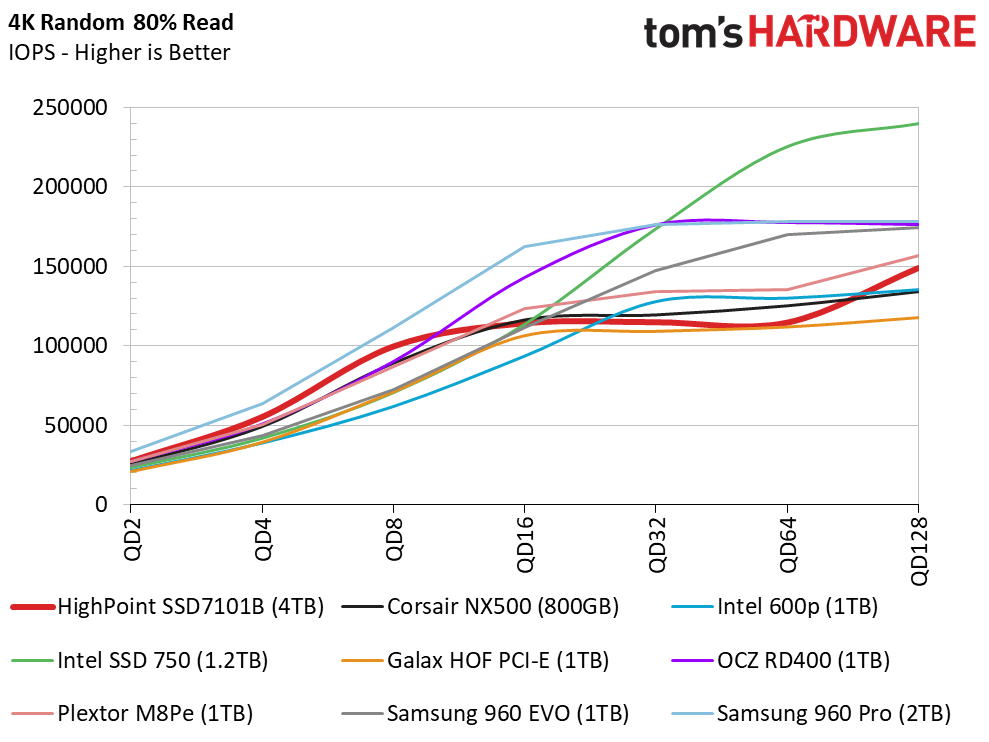

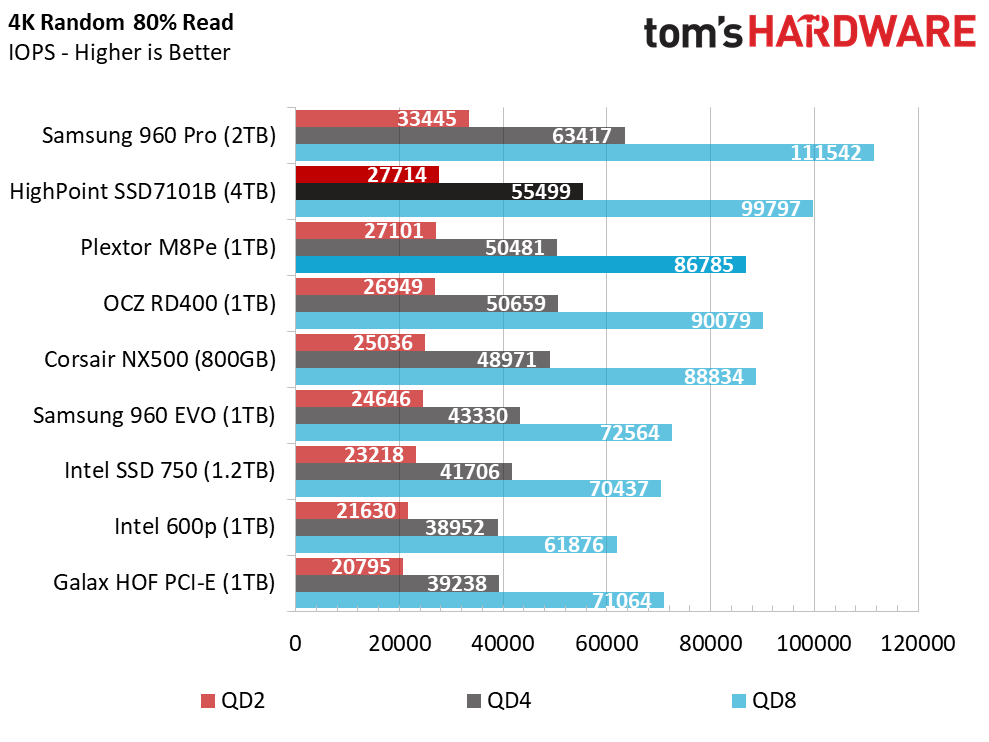

Random Read Performance

The random read performance test shows how poorly software-based RAID scales as we ramp up the workload. The HighPoint array still delivers very good low-queue-depth performance, but it's slightly slower than a single 960 Pro. It scales well up to QD8 but loses momentum at that point, eventually holding steady at just over 115,000 IOPS.
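
A simple address map shows why small random I/O benefits less from striping than large sequential transfers. The sketch below models generic RAID 0; the 64 KiB stripe size and the function name are illustrative assumptions, not HighPoint's documented configuration.

```python
# Generic RAID 0 address map (illustrative; not HighPoint's documented layout).
STRIPE_KIB = 64   # hypothetical stripe (chunk) size
DRIVES = 4        # four M.2 SSDs, as on the SSD7101B-040T


def drives_touched(offset_kib: int, length_kib: int) -> set[int]:
    """Member drives that a single request starting at offset_kib must access."""
    first_stripe = offset_kib // STRIPE_KIB
    last_stripe = (offset_kib + length_kib - 1) // STRIPE_KIB
    # Consecutive stripes rotate across drives: stripe n lives on drive n % DRIVES.
    return {stripe % DRIVES for stripe in range(first_stripe, last_stripe + 1)}


print(drives_touched(1_000, 4))   # small random read
print(drives_touched(0, 256))     # large sequential transfer
```

A 4 KiB read at offset 1,000 KiB lands on a single drive, while a 256 KiB transfer starting at offset 0 spans all four. With only one small random request outstanding, three of the four SSDs sit idle, so the array can only pull ahead once enough requests are queued to reach every member.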


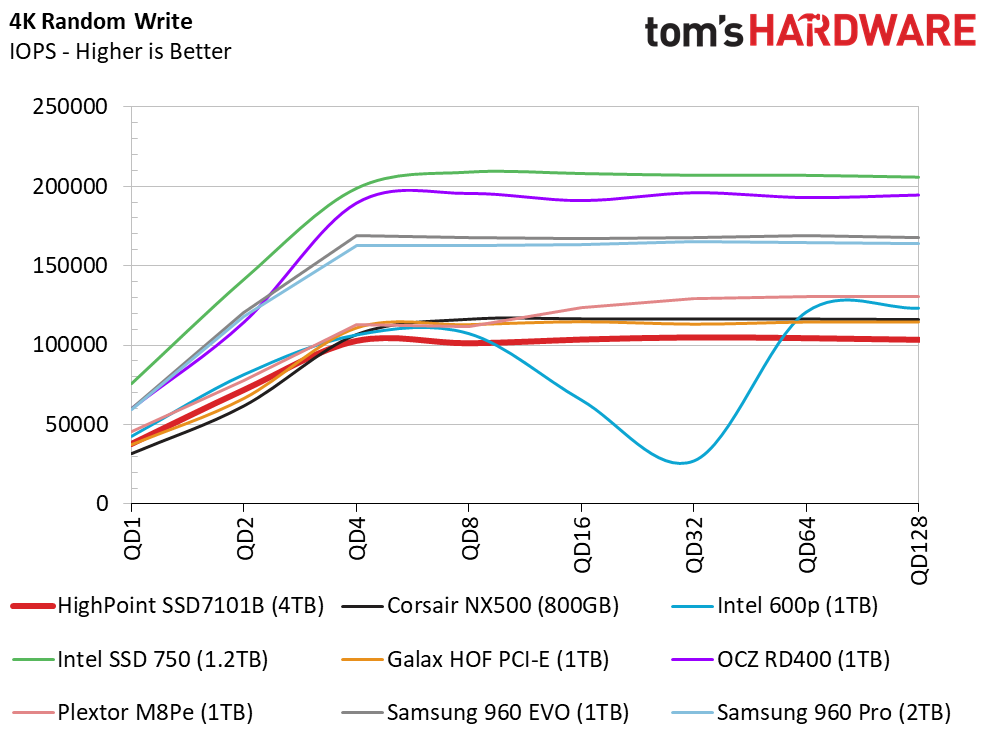

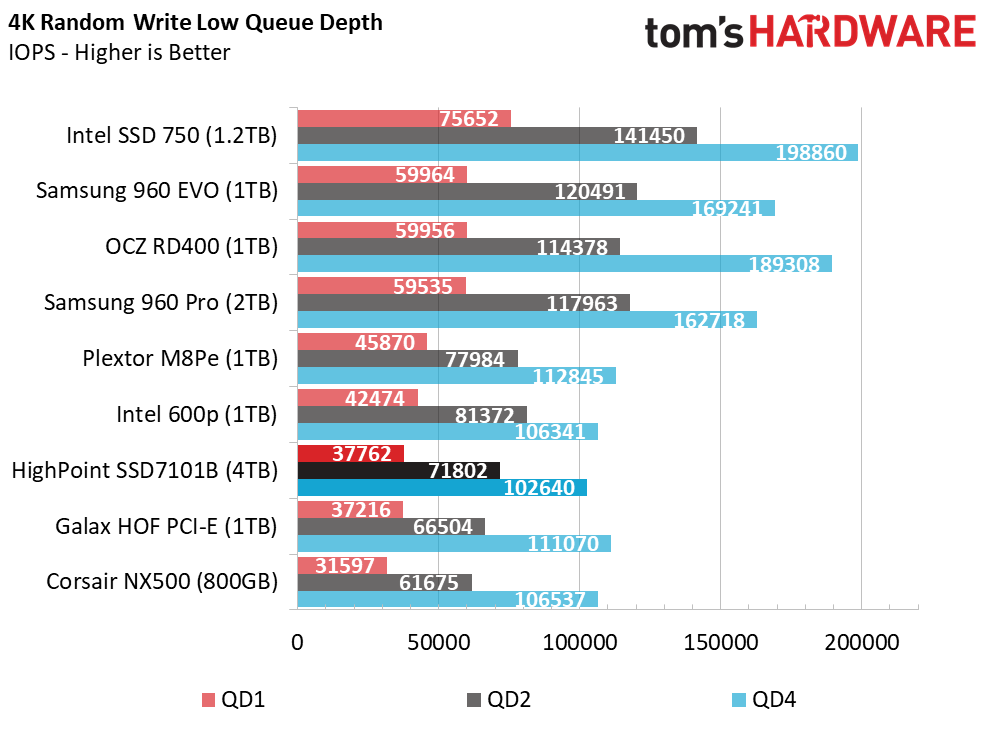

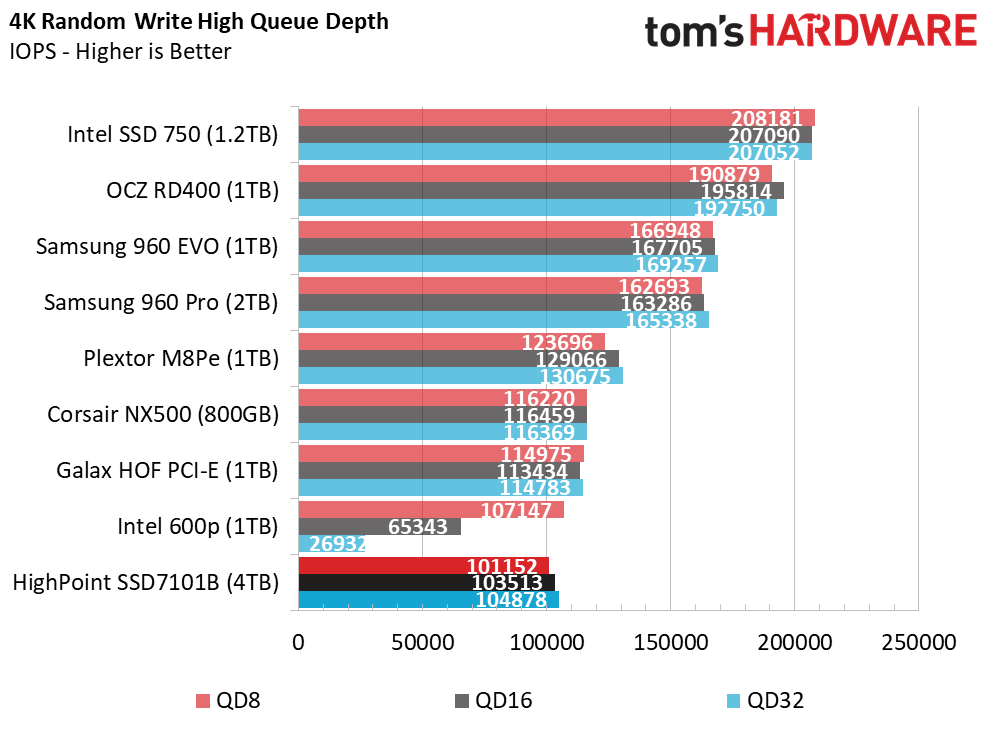

Random Write Performance

Random write performance takes the brunt of the software RAID inefficiency; the array only mustered around 100,000 IOPS. The saving grace is that the array can reach that level of performance at just QD4. The SSD7101 array will not feel slow by any means; you just have to match it to the correct workload. This isn't a product for databases or transactional workloads.
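
Converting IOPS to bandwidth puts the random results in perspective against the sequential numbers. This sketch assumes 4KB random transfers, the block size commonly used for four-corner random tests; the helper name is hypothetical.

```python
def iops_to_mb_s(iops: float, block_kib: int = 4) -> float:
    """Convert IOPS to MB/s (10^6 bytes/s); assumes 4 KiB transfers by default."""
    return iops * block_kib * 1024 / 1e6


print(f"random write: {iops_to_mb_s(100_000):.0f} MB/s")
print(f"random read:  {iops_to_mb_s(115_000):.0f} MB/s")
```

Roughly 410 MB/s of random writes and 471 MB/s of random reads are a small fraction of the 8,000 to 9,000 MB/s the array sustains sequentially.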

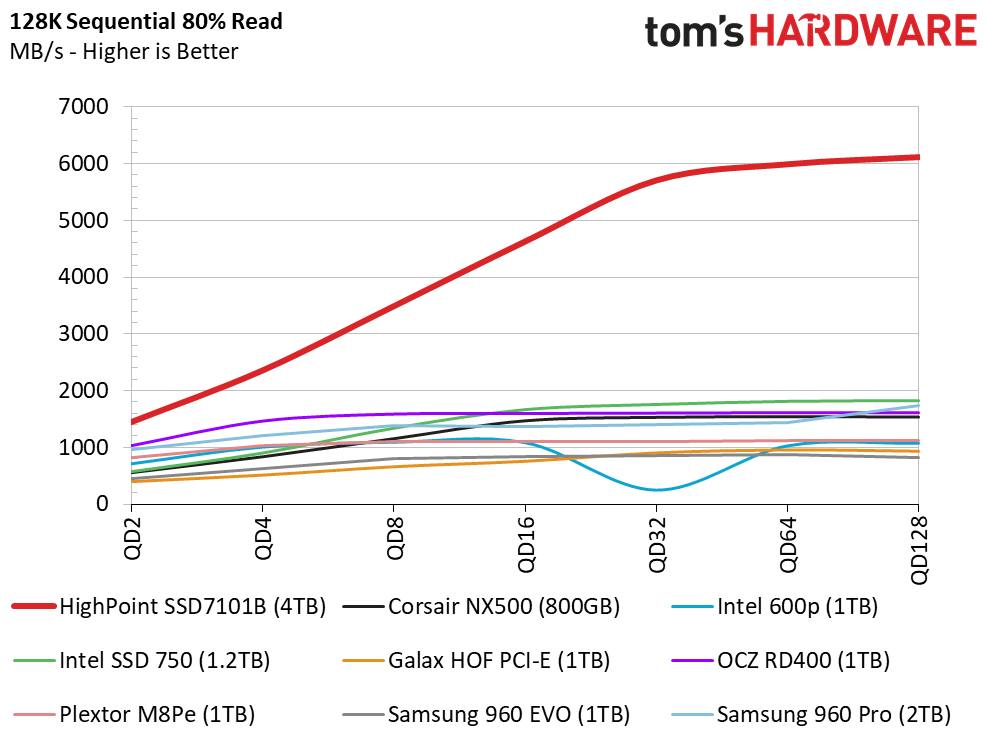

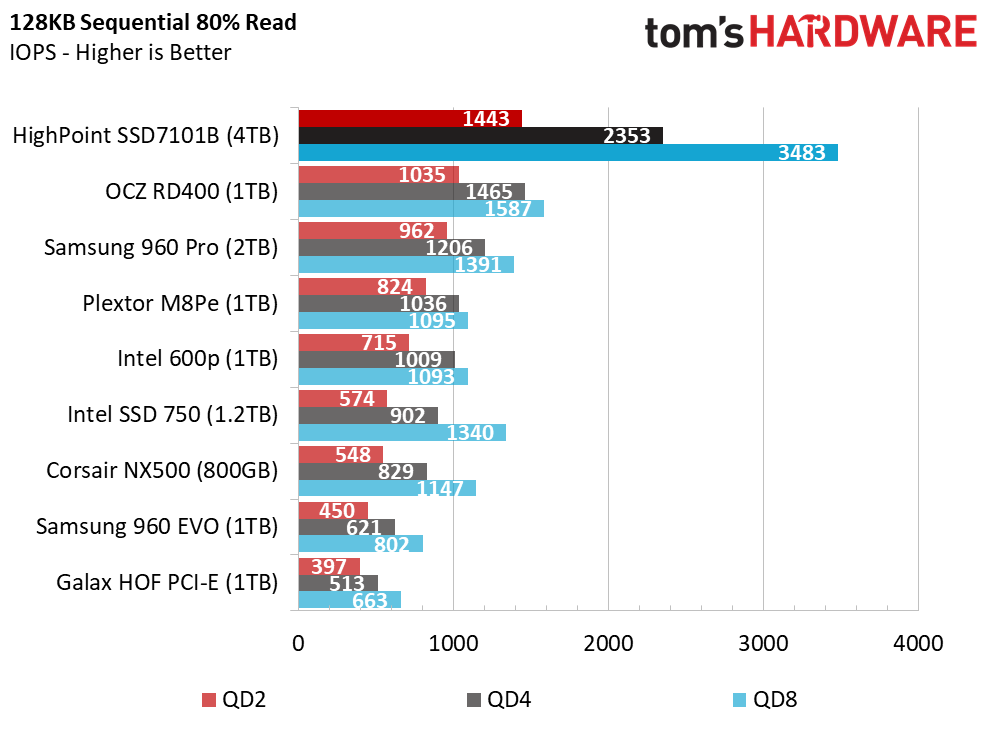

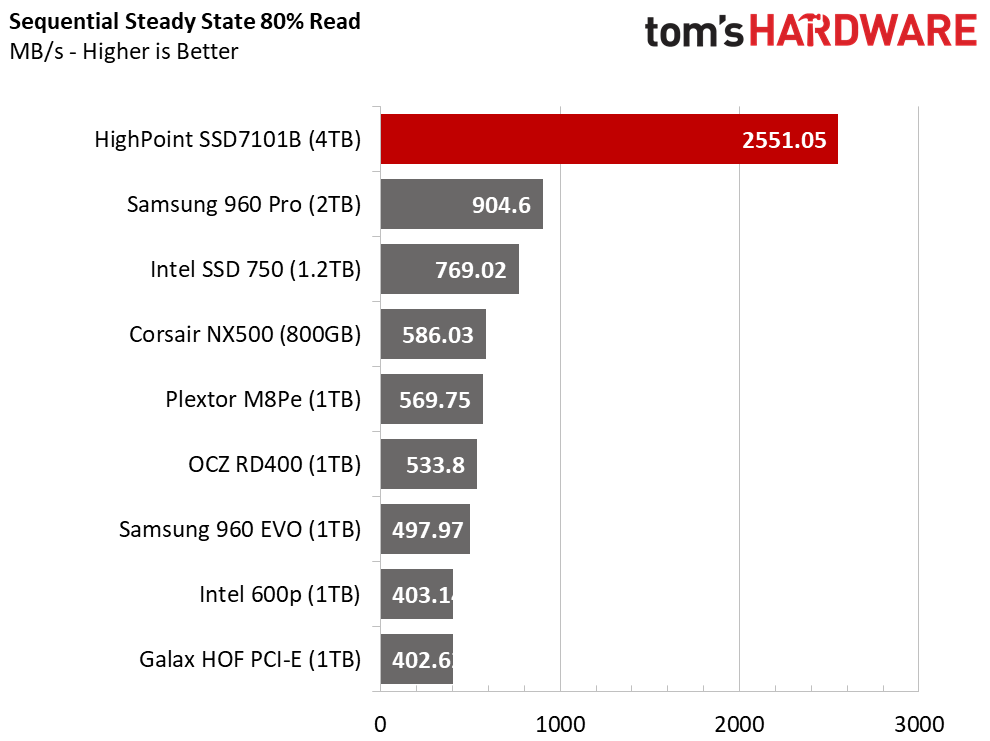

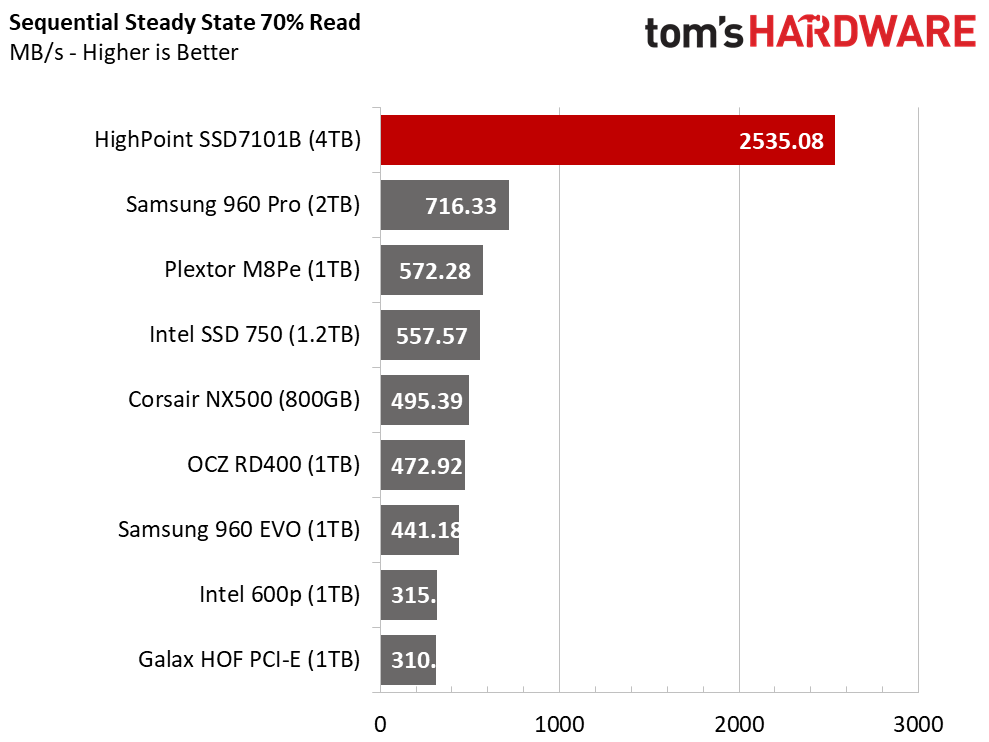

80% Mixed Sequential Workload

We describe our mixed workload testing in detail here and describe our steady state tests here.

Now that we've established that the HighPoint SSD7101 accelerates sequential workloads, we can shift our focus to what you should use the drive for. Most sequential workloads feature large-block transfers. Audio and video creation/editing are the obvious target market for this product, but to take full advantage of the system, you have to go above and beyond what a home studio is capable of. This is the perfect storage device for real-time editing on local storage.
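
For sizing, a rough stream count helps. The sketch below assumes uncompressed UHD (3840 x 2160) video at 10-bit 4:2:2, which averages 20 bits per pixel, and 24 fps; those format choices and the helper names are ours, chosen only for illustration.

```python
# Back-of-the-envelope stream count for uncompressed video.
# The format (UHD, 10-bit 4:2:2, 24 fps) is an illustrative assumption.


def stream_mb_s(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Data rate of one uncompressed video stream in MB/s (10^6 bytes/s)."""
    return width * height * bits_per_pixel / 8 * fps / 1e6


def max_streams(array_mb_s: float, per_stream_mb_s: float) -> int:
    """Whole streams that fit in a sustained throughput budget, with no headroom."""
    return int(array_mb_s // per_stream_mb_s)


# 10-bit 4:2:2: 10 bits of luma plus 10 bits of shared chroma per pixel on average
uhd = stream_mb_s(3840, 2160, 20, 24)
print(f"{uhd:.1f} MB/s per stream")
print(max_streams(8_000, uhd), "streams at ~8,000 MB/s")
```

At about 498 MB/s per stream, the roughly 8,000 MB/s sequential write plateau would cover 16 such streams in theory. Real editing mixes reads and writes and needs headroom, so plan on fewer.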

80% Mixed Random Workload

Again, random performance isn't bad with this product in this configuration. You don't lose much performance compared to a single drive, but you don't gain anything in these workloads either.

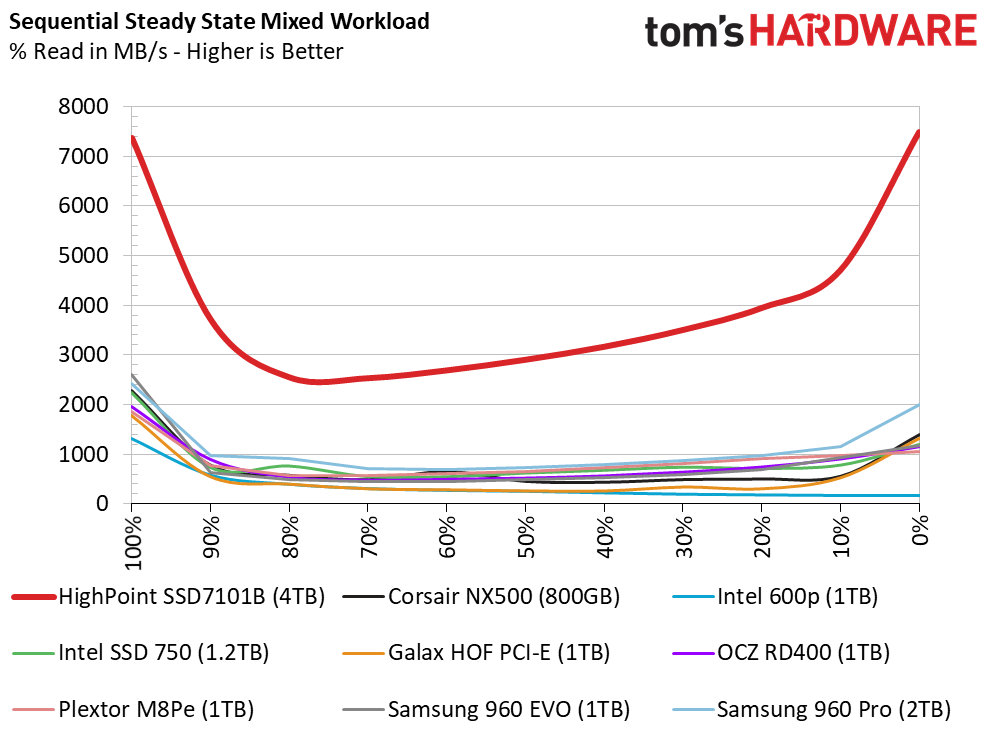

Sequential Steady-State

The sequential steady-state test writes 128KB sequential data for ten hours to fill the drive several times before we measure performance. This is the worst-case scenario for SSDs handling sequential data. It's also a common workload for studios recording at high bit rates.
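
The number of full-drive passes in a ten-hour run follows directly from the average write rate. The sketch uses a hypothetical helper and a hypothetical 2,000 MB/s average; that rate is not a measured result from this test.

```python
def drive_fills(hours: float, avg_write_mb_s: float, capacity_gb: float) -> float:
    """Full-capacity overwrites completed by a sustained write run (decimal MB and GB)."""
    written_mb = avg_write_mb_s * hours * 3600
    return written_mb / (capacity_gb * 1000)


# Hypothetical 2,000 MB/s average on the 4TB array; not a measured figure.
print(f"{drive_fills(10, 2_000, 4_000):.0f} full-drive passes")
```

At that assumed rate, ten hours of writes would overwrite a 4TB array 18 times, so even a much slower steady-state rate still fills the drive several times.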

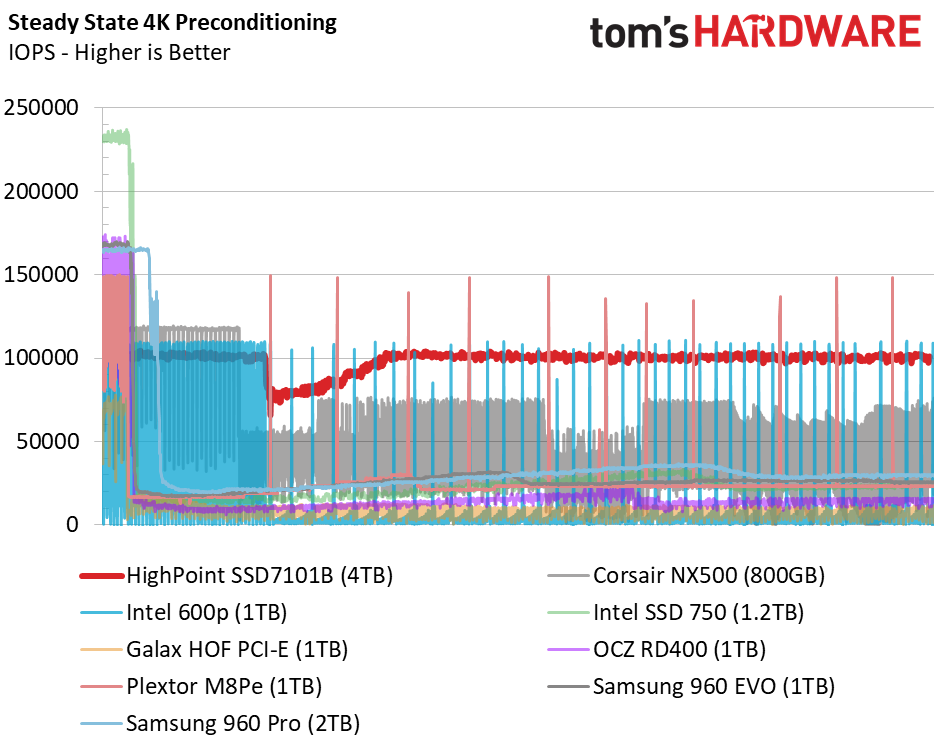

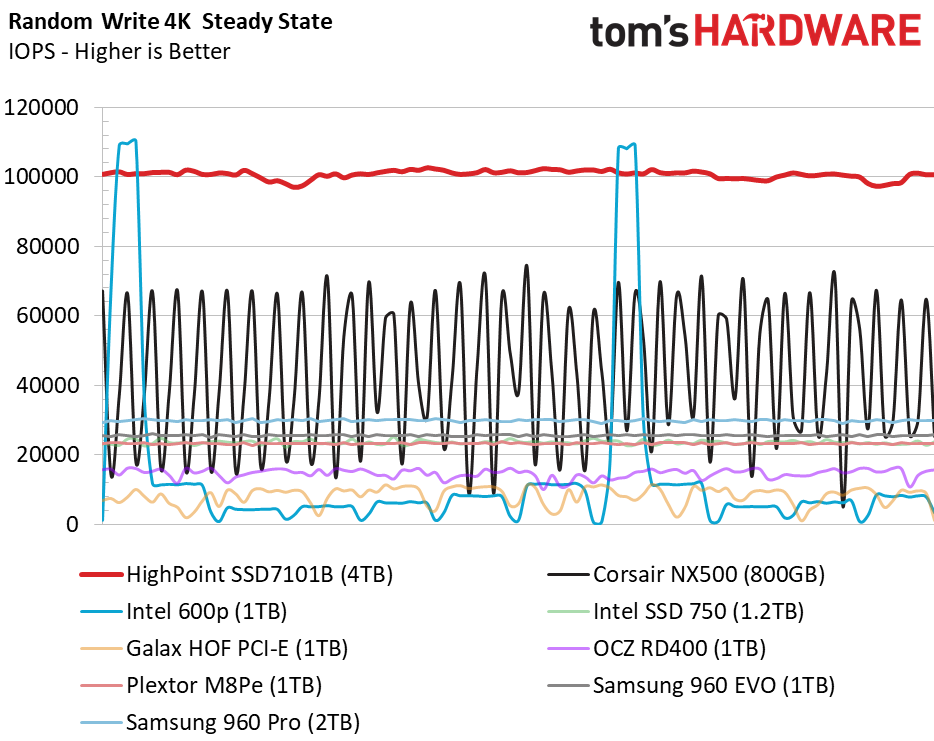

Random Steady-State

You may start to doubt what we said about random performance. The results look very good, but for the same money you could buy an enterprise SSD designed for random workloads and extract more performance.

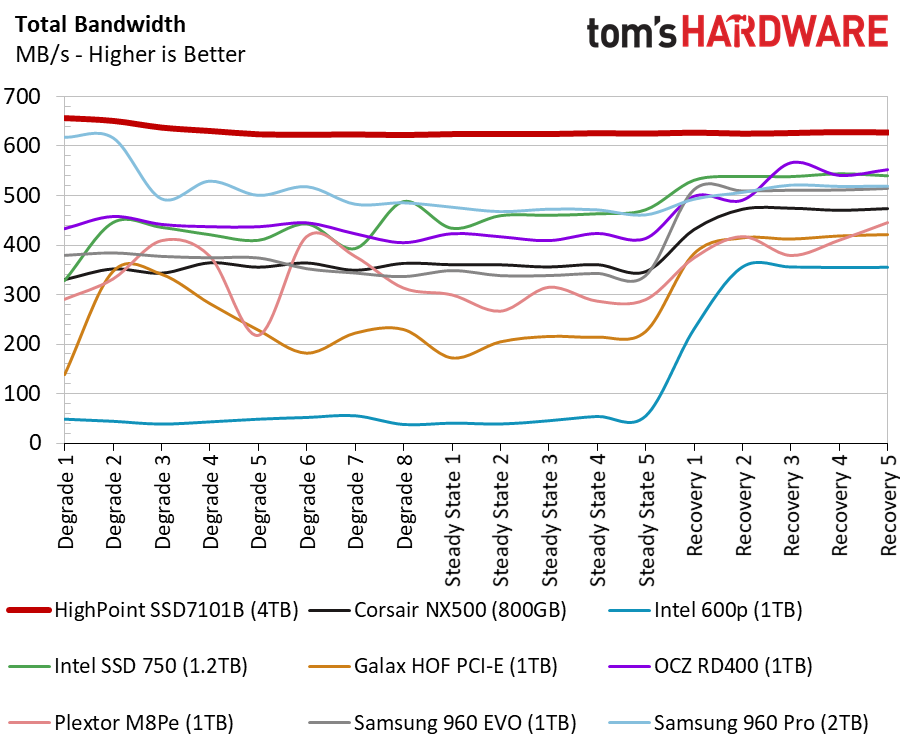

We don't publish RAID articles often, but we examine performance consistency here because it bears directly on RAID. The single 960 Pro 2TB delivers a flat performance line with slight variation between its highest and lowest points. As you add drives, you magnify the variation by the number of drives in the array: the single drive shows only minor fluctuations, while the array shows roughly four times the variation.

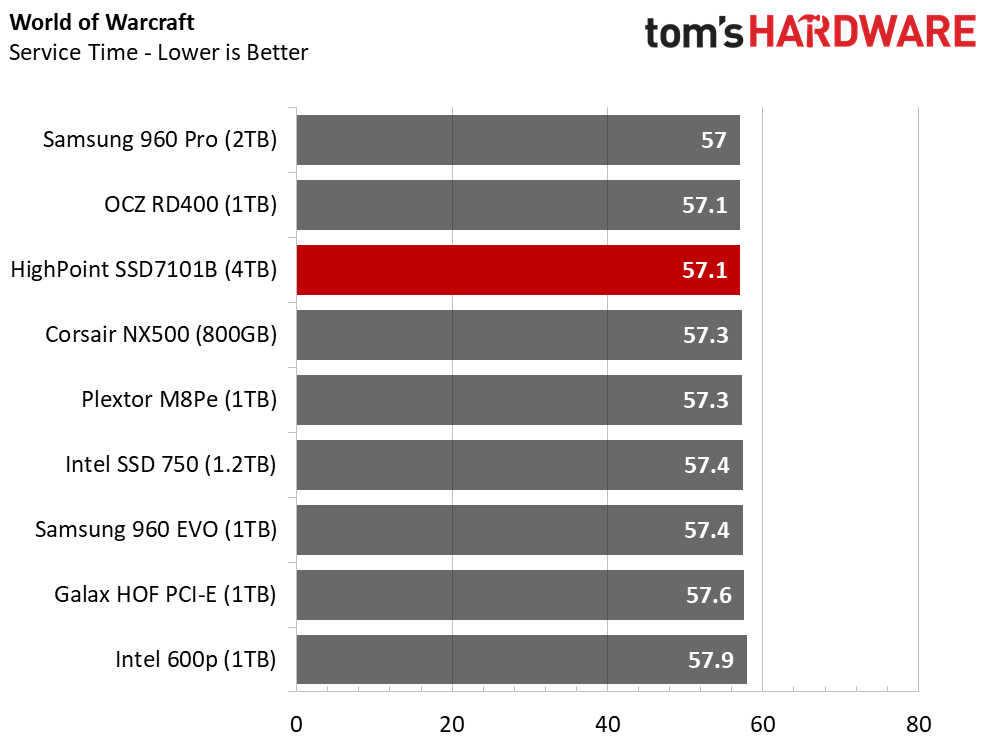

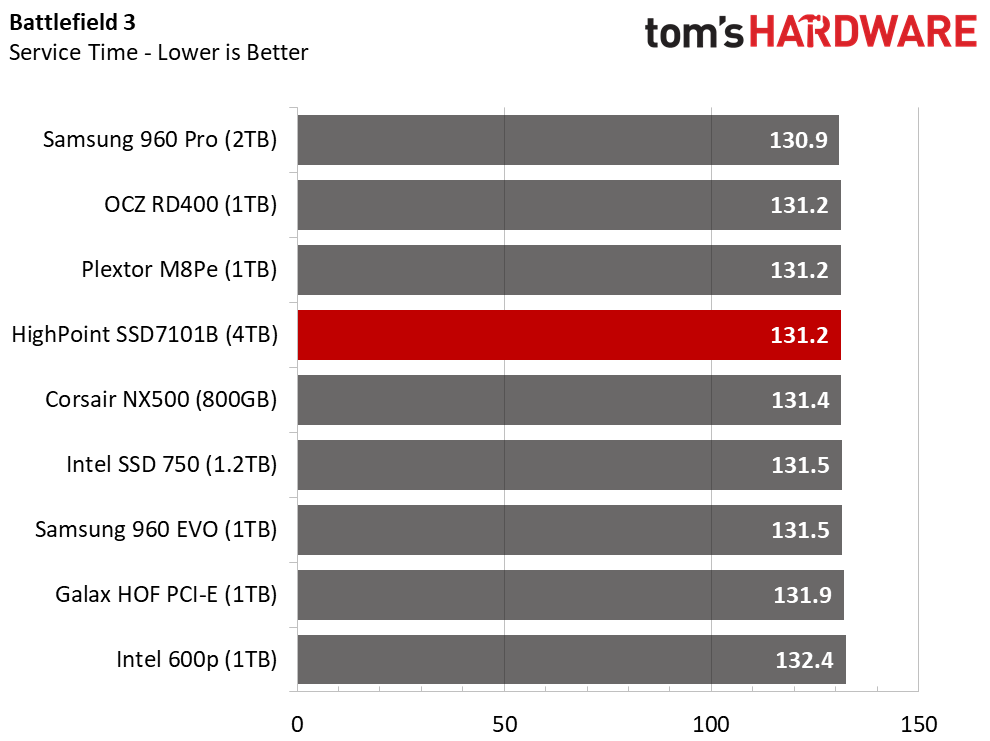

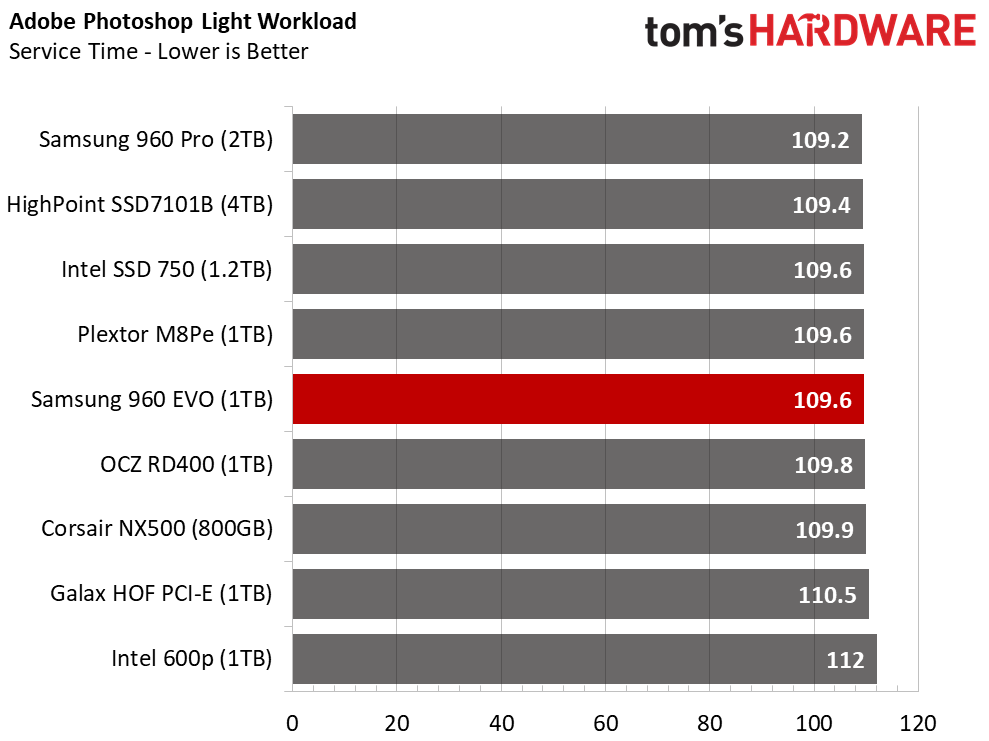

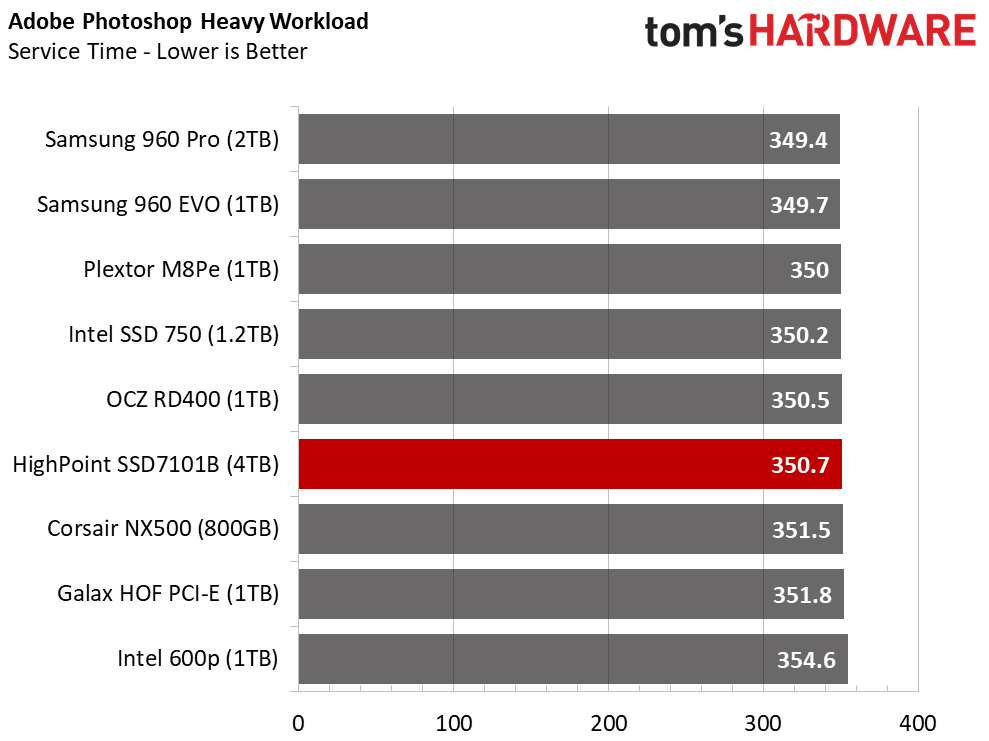

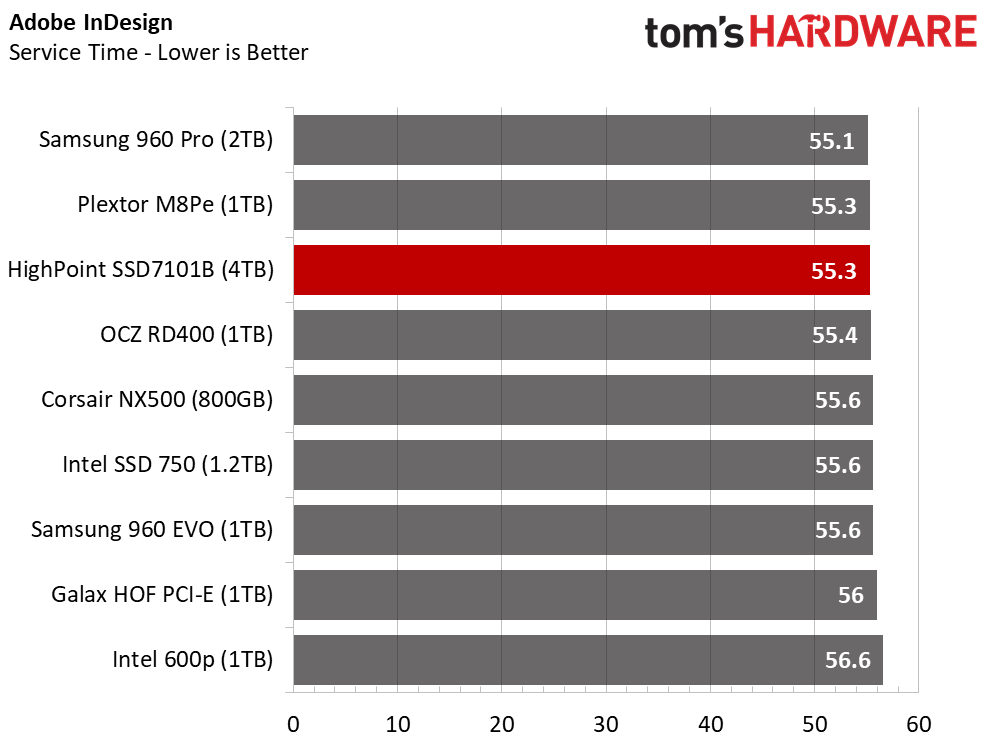

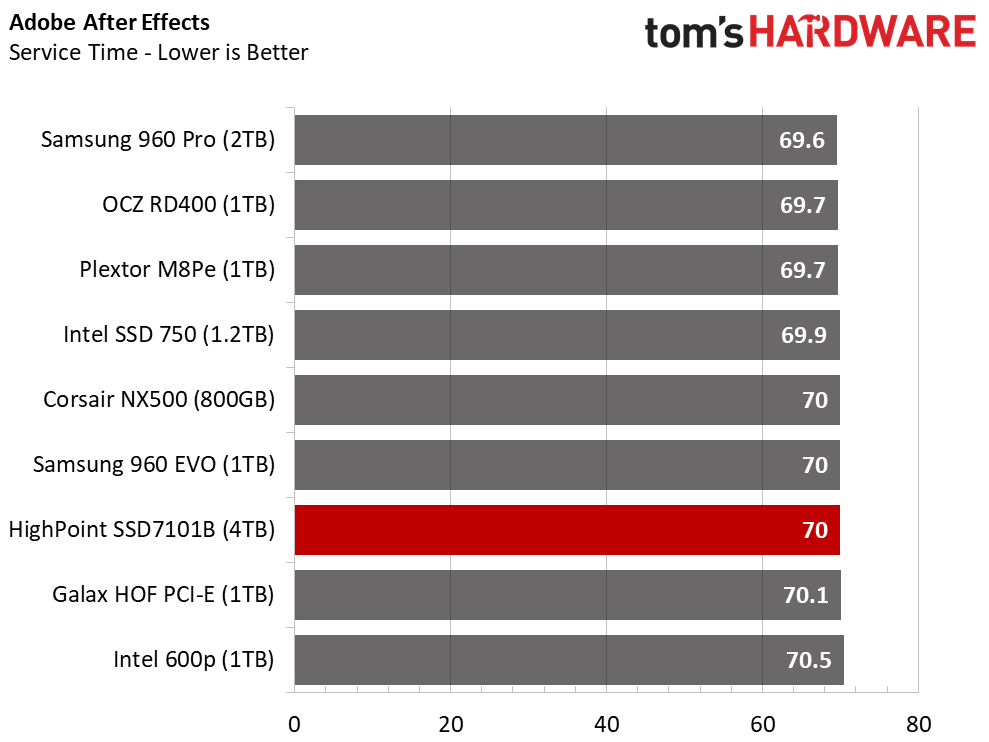

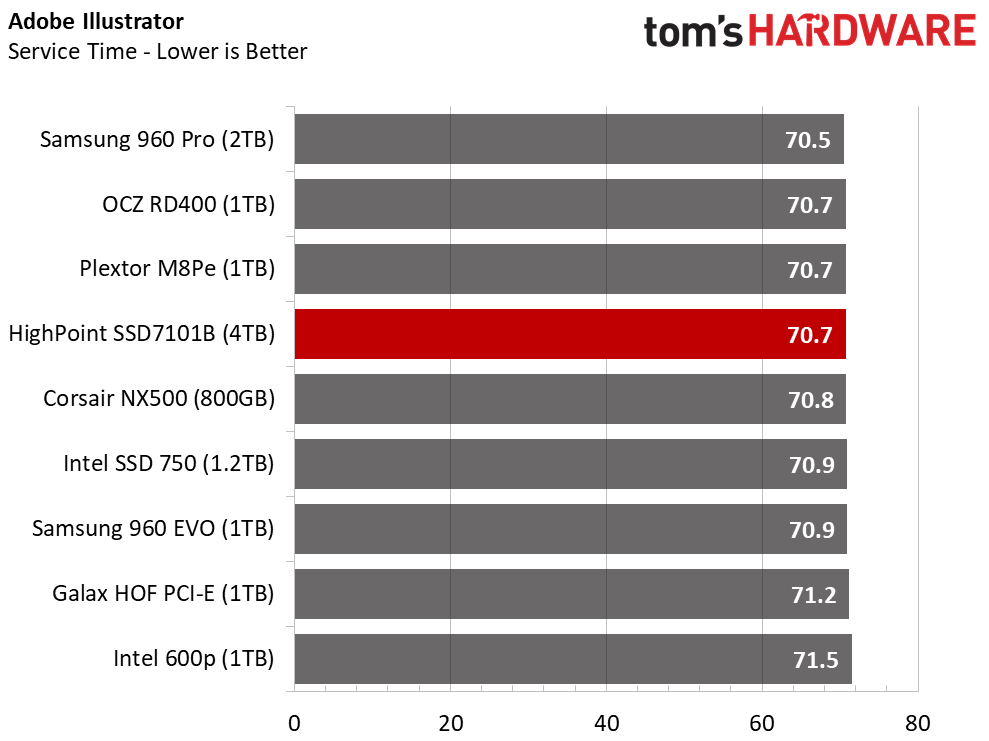

PCMark 8 Real-World Software Performance

For details on our real-world software performance testing, please click here.

The lower random performance carries over to traditional desktop performance. We expected to see the HighPoint SSD7101 lead the heavy Photoshop test. We went back to Futuremark's technical guide to see if we could spot the reason the HighPoint didn't break away. The test consists primarily of heavy sequential writes, but it also sprinkles in a healthy dose of random reads. The random access during the test hurts the total overall score.
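
The effect is easy to model: total trace time is the sum of the time spent in each access pattern, so the portion that doesn't speed up limits the overall gain (Amdahl's law). All byte counts and throughputs below are hypothetical, not figures from Futuremark's trace or from our results, and the model is only a simplification of how the benchmark is scored.

```python
# Total time = sum over phases of (data / rate); the slow phase caps the speedup.
# All numbers are hypothetical and chosen only to illustrate the effect.


def trace_seconds(phases: list[tuple[float, float]]) -> float:
    """phases: (megabytes transferred, MB/s achieved) for each access pattern."""
    return sum(mb / rate for mb, rate in phases)


# (sequential writes, random reads) for a single drive vs. a four-drive stripe
single = trace_seconds([(20_000, 2_000), (2_000, 400)])
raid0 = trace_seconds([(20_000, 8_000), (2_000, 380)])
print(f"speedup: {single / raid0:.2f}x")
```

Even with a 4x faster sequential phase, the hypothetical array finishes the trace only about 1.93x faster, because the random reads take slightly longer than they do on the single drive.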

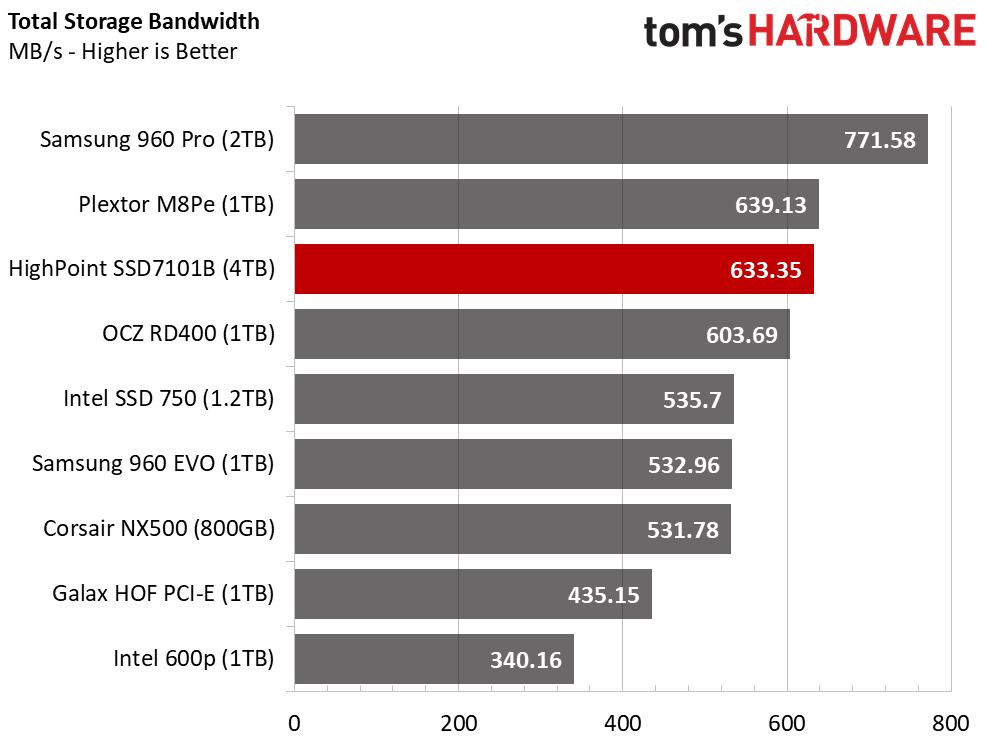

Application Storage Bandwidth

The HighPoint SSD7101B-040T 4TB array still managed to outperform most of the other NVMe SSDs that we tested, but you can see the performance is slightly lower than a single 960 Pro when the drives aren't full.

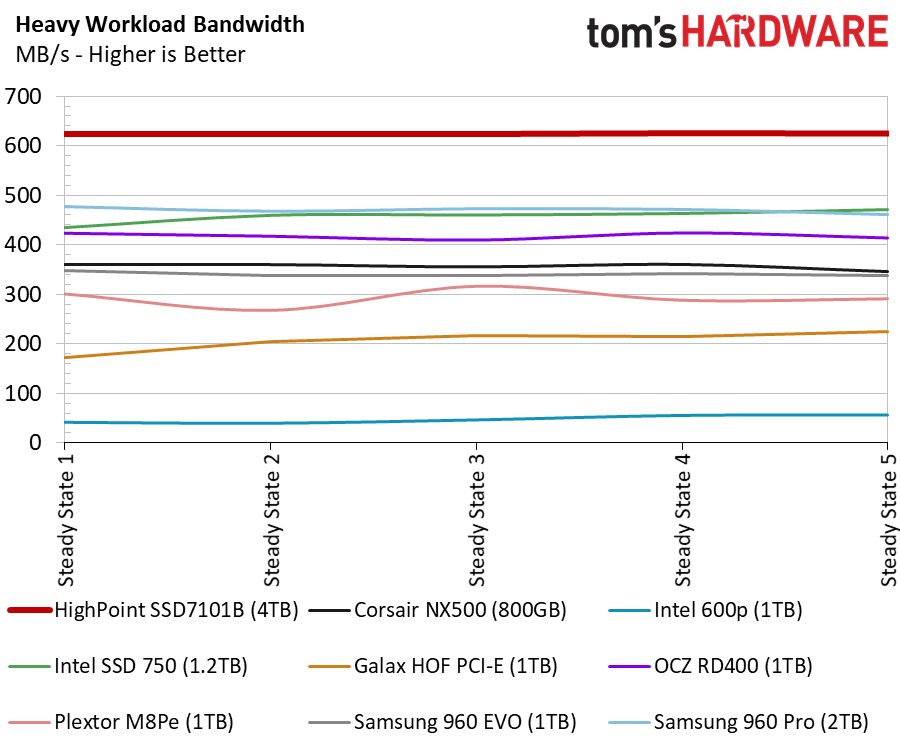

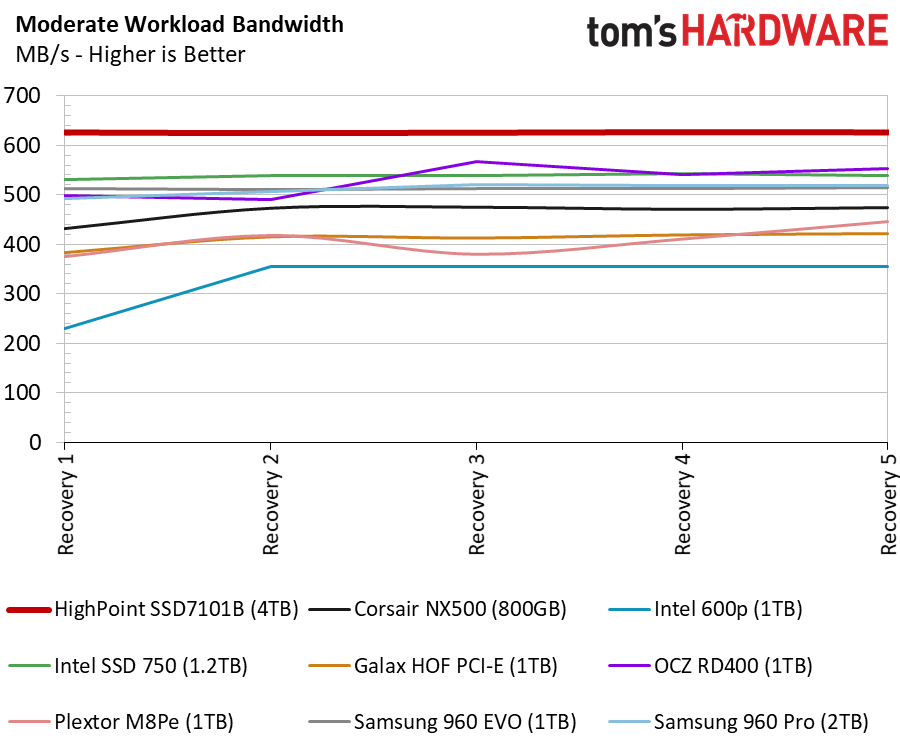

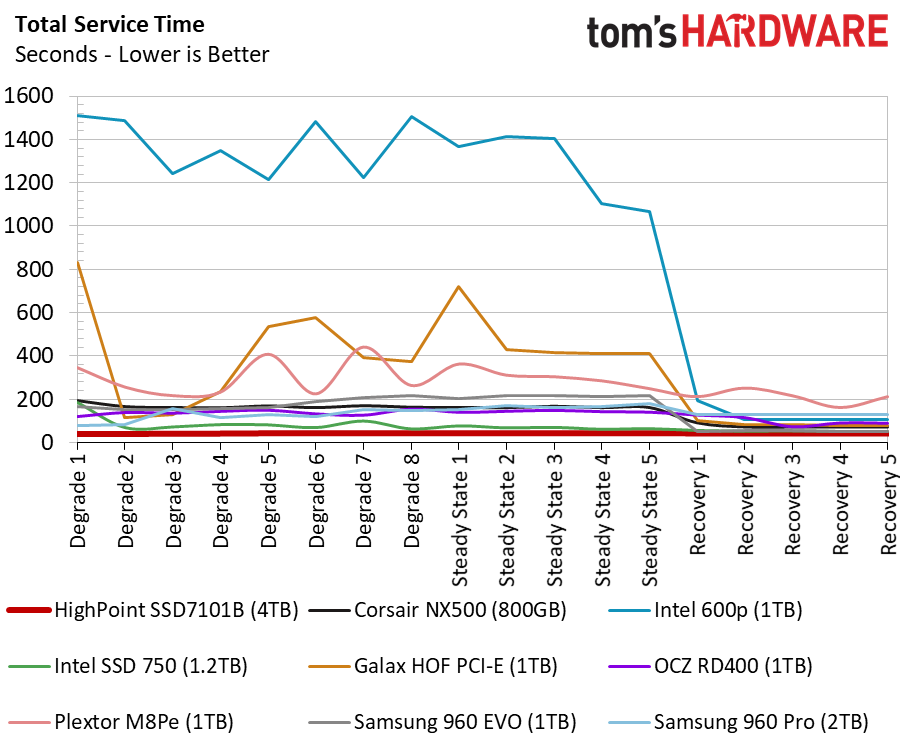

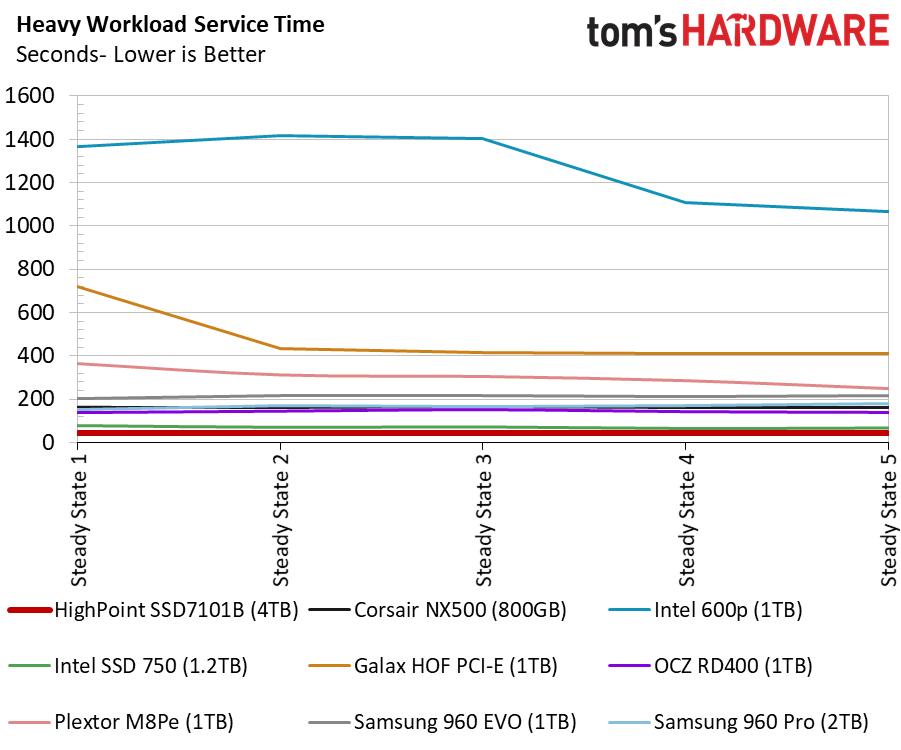

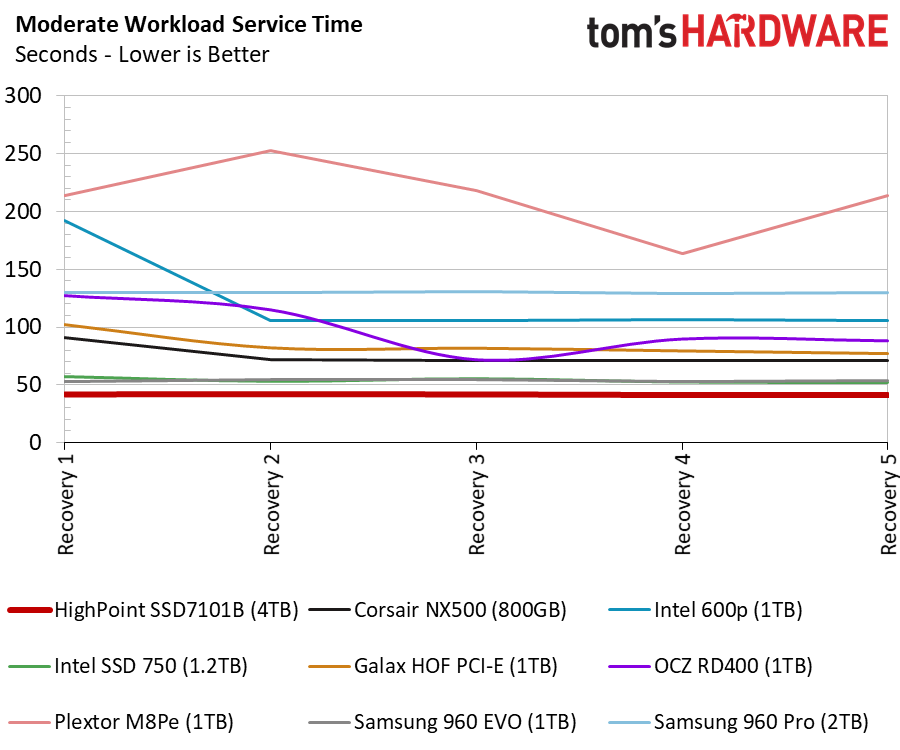

PCMark 8 Advanced Workload Performance

To learn how we test advanced workload performance, please click here.

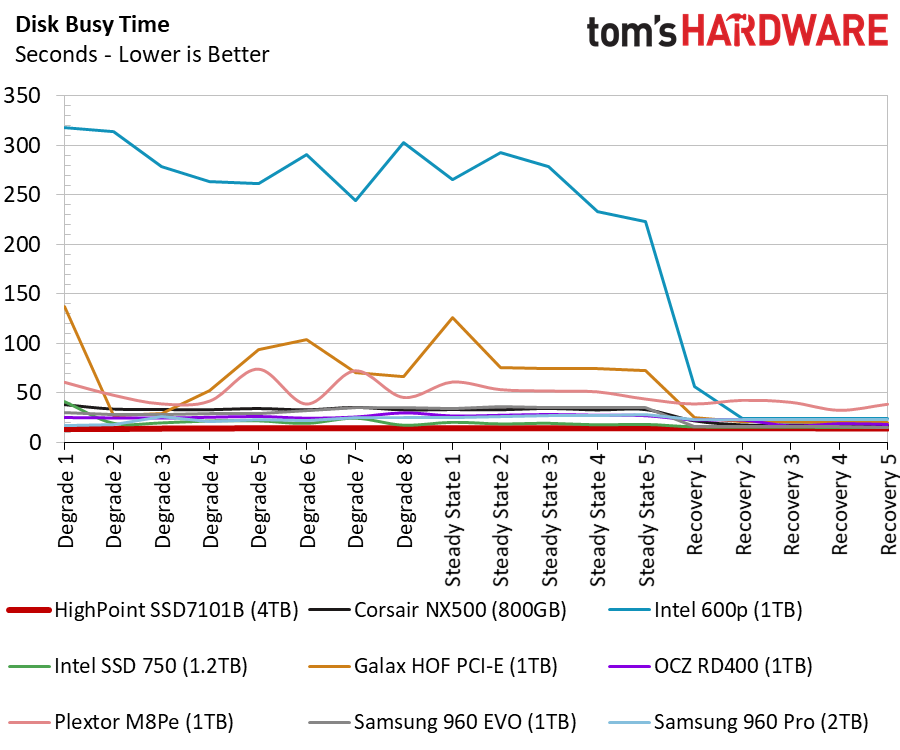

We fill the drives again, reduce the idle time for the heavy section of the test, and then give the drives five minutes of rest for the recovery stages. The steady performance during the tests shows the array is virtually immune to workload fatigue.

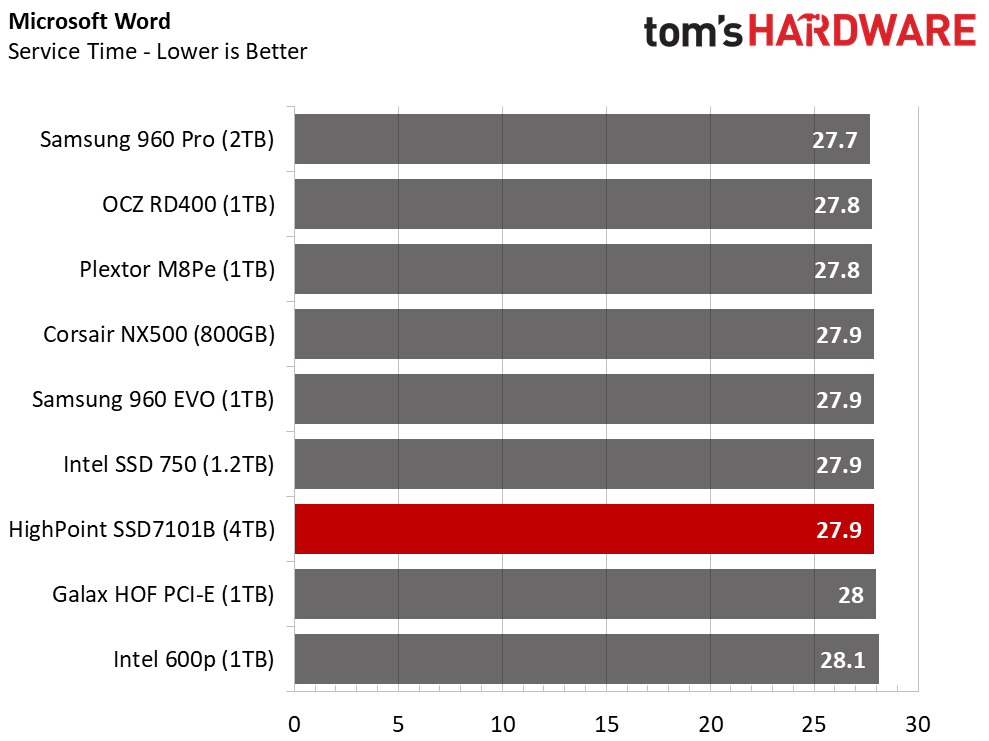

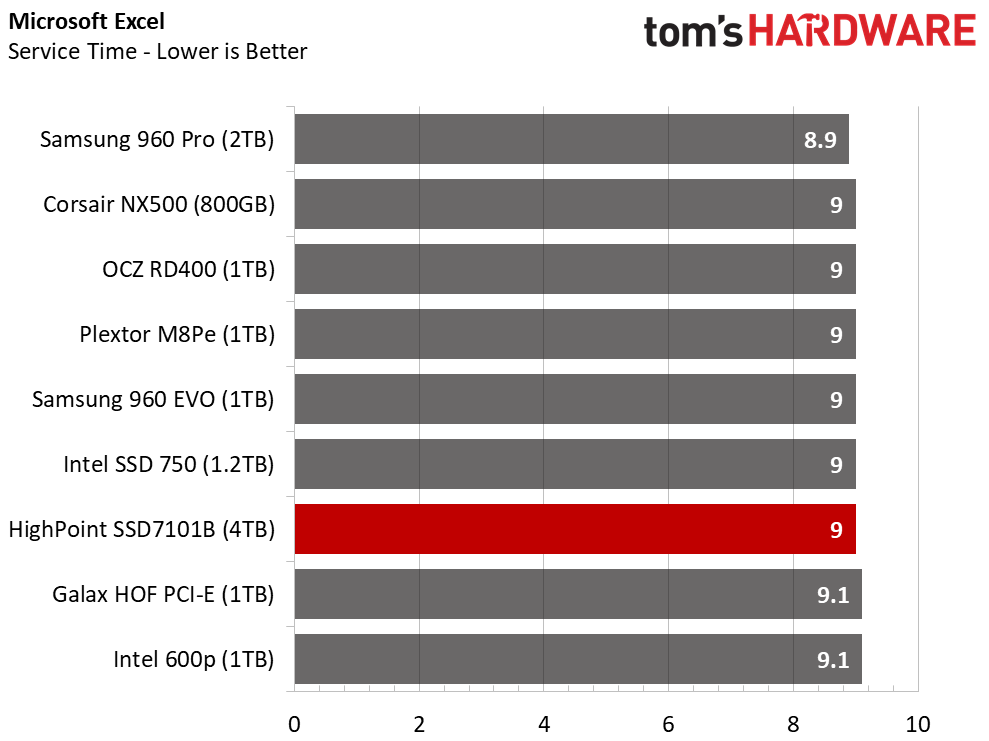

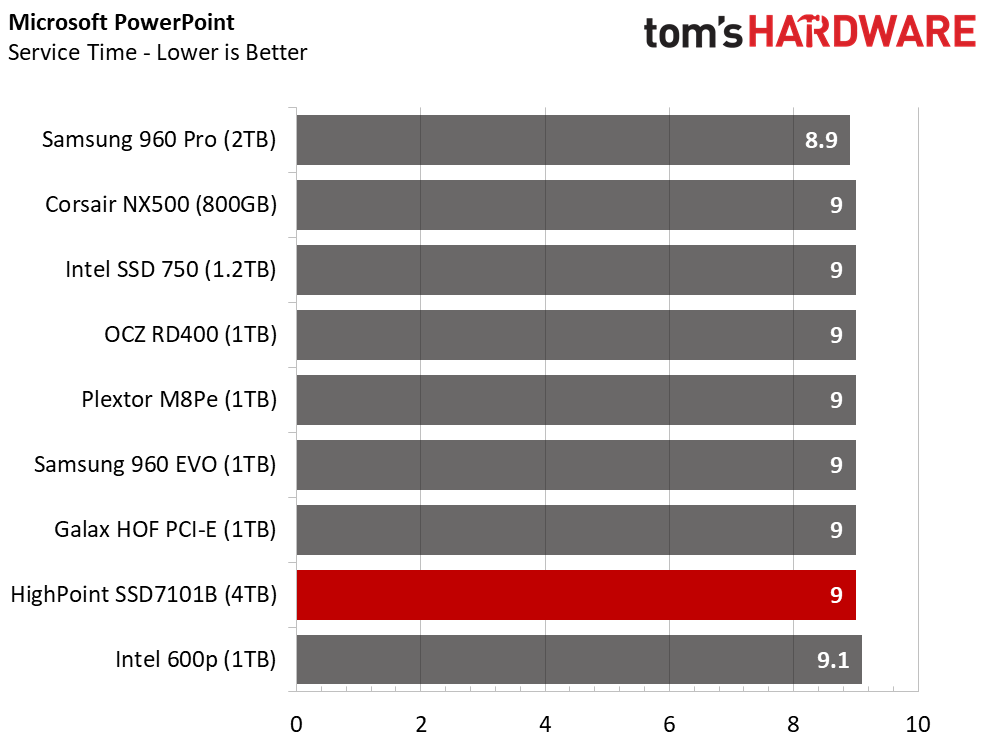

Total Service Time

Many of us buy a larger SSD than we need just to maintain high performance as the drive fills. With the HighPoint SSD7101, you can have your capacity and actually use it, too.

Disk Busy Time

The results really shouldn't come as a surprise. HighPoint took the best consumer SSD available and combined several to supersize performance.


Chris Ramseyer was a senior contributing editor for Tom's Hardware. He tested and reviewed consumer storage.

-

gasaraki So this is a "RAID controller" but you can only use software RAID on this? Wut? I want to be able to boot off of this and use it as the C drive. Does this have legacy BIOS on it so I can use it on systems without UEFI?

mapesdhs I'll tell you what to do with it: contact the DoD and ask them if you can run the same defense imaging test they used with an Onyx2 over a decade ago, and see if you can beat what that system was able to do. ;)

http://www.sgidepot.co.uk/onyx2/groupstation.pdf

More realistically, this product is ideal for GIS, medical, automotive and other application areas that involve huge datasets (including defense imaging). The storage capacity still isn't high enough in some cases, but it's getting there.

I do wonder about the lack of power loss protection, though; it seems like a bit of an oversight for a product that otherwise ought to appeal to pro users.

Ian.

-

samer.forums (replying to gasaraki):

This is not a RAID controller; it uses software RAID. The PLX chip on it lets the system "think" that the x16 slot is four independent x4 slots, all active and bootable. That's all. The rest is software.

samer.forums I see this product as very useful for people who want huge SSD storage that the motherboard will not give you... and for RAID 1. The real-life performance gain from NVMe SSD RAID can't be noticed...

samer.forums Hey HighPoint, if you are reading this, please make this card low profile and place two M.2 SSDs on each side of the card (four total).

samer.forums (replying to mapesdhs):

There is a U.2 version of this card; using it with U.2 NVMe SSDs that have built-in power loss protection will solve this problem.

Link: http://www.highpoint-tech.com/USA_new/series-ssd7120-overview.htm

-

JonDol I hope that the lack of power loss protection was the single reason this product didn't get an award. Could you please confirm it, or list other missing details/features for an award?

The fact alone that such a pro product landed in the consumer arena, even if it is for a very selective category of consumers, is no small achievement.

The explanation of how the M.2 traffic is routed through the DMI bus was very clear. I wonder if there is even a single motherboard that will route that traffic directly to the CPU. Does anyone know of such a motherboard?

Cheers

samer.forums (replying to JonDol):

All motherboards have slots wired to the CPU lanes. For example, most Z-series boards come with two SLI slots at eight lanes each. You can use one of them for the GPU and the other for any other card, and so on.

If the CPU has more than 16 lanes, you will have more slots connected to CPU lanes, as on X299 motherboards.