Nvidia's CUDA: The End of the CPU?

A Few Definitions

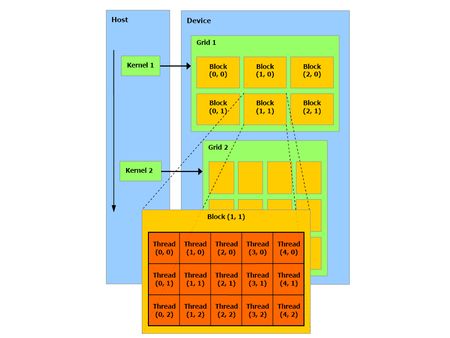

Before we dive into CUDA, let’s define a few terms that are sprinkled throughout Nvidia’s documentation. The company has chosen to use a rather special terminology that can be hard to grasp. First we need to define what a thread is in CUDA, because the term doesn’t have quite the same meaning as a “CPU thread,” nor is it the equivalent of what we call “threads” in our GPU articles. A thread on the GPU is a basic element of the data to be processed. Unlike CPU threads, CUDA threads are extremely “lightweight,” meaning that a context switch between two threads is not a costly operation.

The second term frequently encountered in the CUDA documentation is warp. No confusion possible this time (unless you think the term might have something to do with Star Trek or Warhammer). No, the term is taken from the terminology of weaving, where it designates “threads arranged lengthwise on a loom and crossed by the woof.” A warp in CUDA, then, is a group of 32 threads, which is the minimum size of the data processed in SIMD fashion by a CUDA multiprocessor.

But that granularity is not always sufficient to be easily usable by a programmer, and so in CUDA, instead of manipulating warps directly, you work with blocks that can contain 64 to 512 threads.
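To make the thread/warp/block relationship concrete, here is a minimal sketch in which every thread of a single block records which warp it belongs to. The kernel name `record_warp_index` and the helper `warp_indices` are hypothetical, and the use of managed memory (`cudaMallocManaged`, available in CUDA 6 and later) is an assumption made only to keep the example short.

```cuda
#include <cuda_runtime.h>
#include <vector>

// Every thread stores the index of the warp it belongs to inside its block.
__global__ void record_warp_index(int *warp_of_thread) {
    int t = threadIdx.x;               // position of this thread in the block
    warp_of_thread[t] = t / warpSize;  // built-in warpSize (32 on the GPUs discussed here)
}

// Hypothetical helper: runs one block of `threads_per_block` threads and
// returns the warp index seen by each thread (empty vector on failure).
std::vector<int> warp_indices(int threads_per_block) {
    if (threads_per_block <= 0) return {};
    int *buf = nullptr;
    size_t bytes = threads_per_block * sizeof(int);
    // Assumption: managed memory, visible to both CPU and GPU code.
    if (cudaMallocManaged(&buf, bytes) != cudaSuccess) return {};

    record_warp_index<<<1, threads_per_block>>>(buf);  // a grid of one block

    std::vector<int> result;
    // Check that the launch was accepted, then wait for the kernel to finish.
    if (cudaGetLastError() == cudaSuccess && cudaDeviceSynchronize() == cudaSuccess)
        result.assign(buf, buf + threads_per_block);
    cudaFree(buf);
    return result;
}
```

Calling `warp_indices(64)` on a GPU with 32-thread warps yields 0 for threads 0 to 31 and 1 for threads 32 to 63: the programmer asked for one block of 64 threads, and the hardware runs it as two warps.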

Finally, these blocks are put together in grids. The advantage of this grouping is that the number of blocks the GPU can process simultaneously is closely linked to hardware resources, as we’ll see later. Grouping blocks into a grid makes it possible to abstract that constraint away completely and apply a kernel to a large number of threads in a single call, without worrying about fixed resources. The CUDA runtime takes care of breaking it all down for you. This means that the model scales extremely well. If the hardware has few resources, it executes the blocks sequentially; if it has a very large number of processing units, it can process them in parallel. This in turn means that the same code can target entry-level GPUs, high-end ones, and even future GPUs.
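The single-call model described above might look like the following sketch, in which one kernel launch covers an array of arbitrary length. The kernel `scale`, the helper `scale_on_gpu`, and the block size of 256 threads are illustrative choices rather than anything prescribed by Nvidia, and managed memory (`cudaMallocManaged`, CUDA 6 and later) is assumed only to keep the host code brief.

```cuda
#include <cuda_runtime.h>
#include <algorithm>
#include <vector>

// One thread per array element; the grid may hold more threads than elements.
__global__ void scale(float *data, int n, float factor) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global thread index
    if (i < n) data[i] *= factor;                   // surplus threads do nothing
}

// Hypothetical helper: multiplies every element of `values` by `factor` on the
// GPU. Returns false if a CUDA call fails, leaving `values` untouched.
bool scale_on_gpu(std::vector<float> &values, float factor) {
    int n = static_cast<int>(values.size());
    if (n == 0) return true;
    float *buf = nullptr;
    // Assumption: managed memory, visible to both CPU and GPU code.
    if (cudaMallocManaged(&buf, n * sizeof(float)) != cudaSuccess) return false;
    std::copy(values.begin(), values.end(), buf);

    const int threads_per_block = 256;  // illustrative block size
    int blocks = (n + threads_per_block - 1) / threads_per_block;  // round up
    scale<<<blocks, threads_per_block>>>(buf, n, factor);

    // Check that the launch was accepted, then wait for the kernel to finish.
    bool ok = cudaGetLastError() == cudaSuccess &&
              cudaDeviceSynchronize() == cudaSuccess;
    if (ok) std::copy(buf, buf + n, values.begin());
    cudaFree(buf);
    return ok;
}
```

For 1,000 elements this launches a grid of 4 blocks, or 1,024 threads, of which the last 24 fail the bounds check and do nothing. Nothing in the code says how many of those blocks run at once; that is left to the hardware.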

The other terms you’ll run into frequently in the CUDA API are used to designate the CPU, which is called the host, and the GPU, which is referred to as the device. After that little introduction, which we hope hasn’t scared you away, it’s time to plunge in!
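The host/device split shows up most visibly in memory management: the host allocates device memory, copies data across, launches a kernel, and copies the result back. Here is a minimal sketch using the runtime API calls `cudaMalloc`, `cudaMemcpy`, and `cudaFree`; the kernel `add_one`, the helper `increment_on_device`, and the `h_`/`d_` naming prefixes are hypothetical choices for illustration.

```cuda
#include <cuda_runtime.h>
#include <vector>

// Runs on the device: each thread increments one element.
__global__ void add_one(int *d_data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) d_data[i] += 1;
}

// Runs on the host. Hypothetical helper: returns true on success.
bool increment_on_device(std::vector<int> &h_data) {
    int n = static_cast<int>(h_data.size());
    if (n == 0) return true;
    size_t bytes = n * sizeof(int);
    int *d_data = nullptr;  // will point into device memory
    if (cudaMalloc(&d_data, bytes) != cudaSuccess) return false;

    // Host -> device copy.
    bool ok = cudaMemcpy(d_data, h_data.data(), bytes,
                         cudaMemcpyHostToDevice) == cudaSuccess;
    if (ok) {
        add_one<<<(n + 127) / 128, 128>>>(d_data, n);  // 128 threads per block
        ok = cudaGetLastError() == cudaSuccess;        // was the launch accepted?
    }
    // Device -> host copy; on the default stream it runs after the kernel ends.
    if (ok) ok = cudaMemcpy(h_data.data(), d_data, bytes,
                            cudaMemcpyDeviceToHost) == cudaSuccess;
    cudaFree(d_data);
    return ok;
}
```

The pointer `d_data` is only meaningful on the device; the host never dereferences it and only passes it to CUDA calls and kernels.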
