Gen11 Iris Plus Tested: Could Tiger Lake and Xe Double Performance?

Intel still has plenty of ground to make up, but from what we know of Ice Lake and the early Tiger Lake demonstrations, Xe Graphics looks promising.

Intel plans to join the dedicated graphics card fray in the coming months with its Xe Graphics architecture, which will cover everything from integrated graphics in the upcoming Tiger Lake and Rocket Lake CPUs to dedicated graphics cards for consumers, with number-crunching data center beasts at the top of the spectrum. Could Intel end up making our list of the best graphics cards? It's far too early to say, but after covering AMD vs Intel integrated graphics performance, we wanted to dive into more extensive integrated GPU testing with the latest mobile integrated GPUs. That means Ice Lake and Renoir, preparing us for the pending launches ... except we're still trying to procure the Renoir system, so this article will focus on Gen11 Ice Lake.

Intel posted a video showing a Tiger Lake-U laptop running Battlefield V at 1080p and high settings (in DirectX 11 mode), so we at least have some indication of how Xe Graphics will perform. We know the laptop hit about 30-33 fps during the test, but we don't know the TDP of the chip in question—it could be 15W or it could be 25W. But it's most likely a 28W chip, as that represents a typical Intel mobile CPU that isn't relying on configurable TDP to boost performance.

The good news is that we can work around the unknown TDP. We reached out to Razer, and it supplied us with a Razer Blade Stealth 13 equipped with a Core i7-1065G7, which has the fastest Iris Plus graphics solution currently offered by Intel. The important thing about the Razer laptop is that it supports Intel's configurable TDP and will run the chip at 15W, 20W, or 25W depending on which performance setting you select in Razer Synapse. We tested with the 15W and 25W options to define both the low and high range of performance from Ice Lake's Gen11 Graphics, and 25W is close enough to 28W that we expect the Ice Lake and Tiger Lake laptops to be on roughly equal footing where power is concerned.

That allows us to at least get a reasonable idea of where Tiger Lake will land, and by extension Xe Graphics. The integrated version of Xe Graphics is rumored to support 96 EUs (Execution Units), where the Xe HP Graphics discrete cards (the "High Performance" chips) are rumored to have up to 512 EUs, along with dedicated VRAM. For example, if the Tiger Lake-U laptop is 50% faster than an Ice Lake-U laptop running at 25W, that at least suggests Tiger Lake won't be fully limited by its TDP.

Test Setup

As with our main integrated graphics article, we have multiple different PCs for testing, now with a couple of laptops for good measure. Besides the Razer Blade Stealth 13 mentioned above, we also have an HP Envy 17t with the same i7-1065G7 CPU but different RAM: DDR4-3200 instead of the LPDDR4X-3733 used in the Razer laptop. It wasn't clear whether the HP laptop supports running the CPU at a higher TDP, but after testing some games, it appears to stick with a 15W TDP limit. What's more, the HP's integrated graphics performance is quite a bit lower than even the Razer in 15W mode, so we stuck with the Razer for most of our benchmarks. (The HP also has a GeForce MX330, which we used in quite a few tests as yet another point of reference.)

We also used desktop PCs for testing: an AM4 platform and an Intel platform. While Intel now offers LGA1200 motherboards and Comet Lake CPUs, the integrated GPU remains the same UHD 630 as in Kaby Lake and Coffee Lake (7th, 8th, and 9th Gen Intel desktop CPUs). We're using a Core i7-9700K for our UHD 630 testing, but the GPU is the biggest bottleneck, so even a lowly Pentium Gold G5500 or Core i3-9100 should be nearly as fast. Just stay away from parts with UHD 610 if you're actually concerned with integrated graphics performance, as it's basically half of the UHD 630.

Besides checking performance in Battlefield V on the Tirailleur map, we also ran benchmarks on our standard GPU test suite of nine games. As before, we've conducted these tests at 720p and minimum quality settings, but we also ran tests at 1080p medium—the lowest setting we normally test at for dedicated GPUs. We've omitted lesser integrated graphics solutions from the 1080p testing, as they were already 'too slow' at 720p, but Ice Lake and the AMD Picasso APUs (Zen+) are more capable. They can almost (sometimes) handle 1080p medium, depending on the game.


Ice Lake Iris Plus Gen11 Graphics Performance on Core i7-1065G7

Let's start with a quick discussion of Battlefield V performance, and you can see the video of testing above. Running Battlefield V at 1080p high in the same region that Intel used for the Tiger Lake test, the 15W setting yielded just 12-13 fps, while the 25W setting boosted that to 16-18 fps. Either way, Tiger Lake looks like a big step up from the current Ice Lake-U chips. Can Intel legitimately double performance within the same power restrictions? That would be huge.

Let's put it a different way. The HP Envy 17t includes GeForce MX330 graphics with 4GB of dedicated GDDR5 memory. At 1080p high, it still only managed 18-20 fps. Clearly HP and Razer have tuned their respective laptops differently. Considering the HP is a 17.3-inch chassis, there ought to be plenty of thermal headroom for higher TDPs, but the laptop plays it conservative. Maybe it's just the slightly slower memory, but it’s more likely that the firmware is tuned to prioritize CPU over GPU performance. At least, that's how it felt during testing. The fact that the dedicated MX330 only barely beat the Razer's Iris Plus 25W configuration isn't a good look for HP, though.

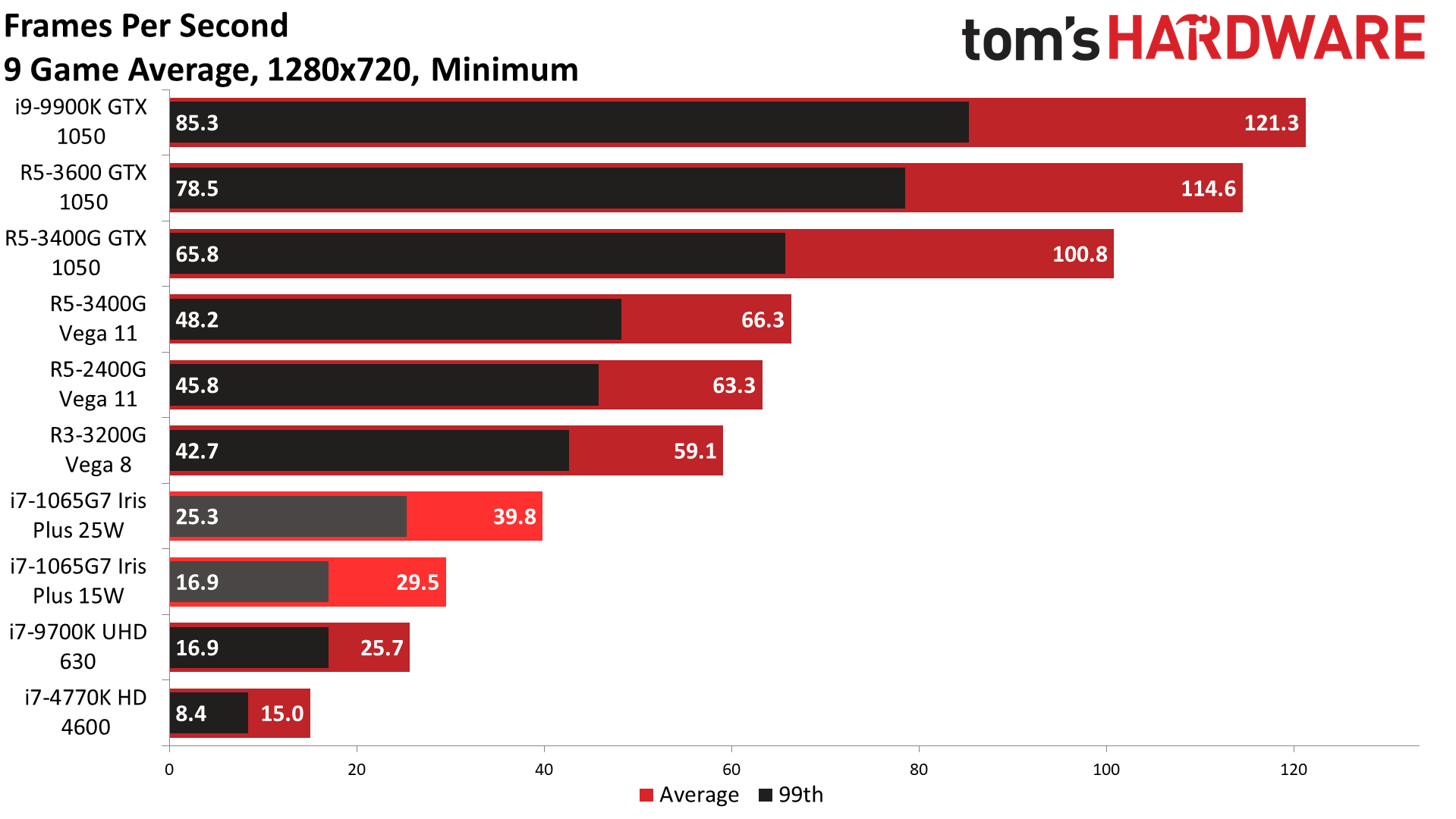

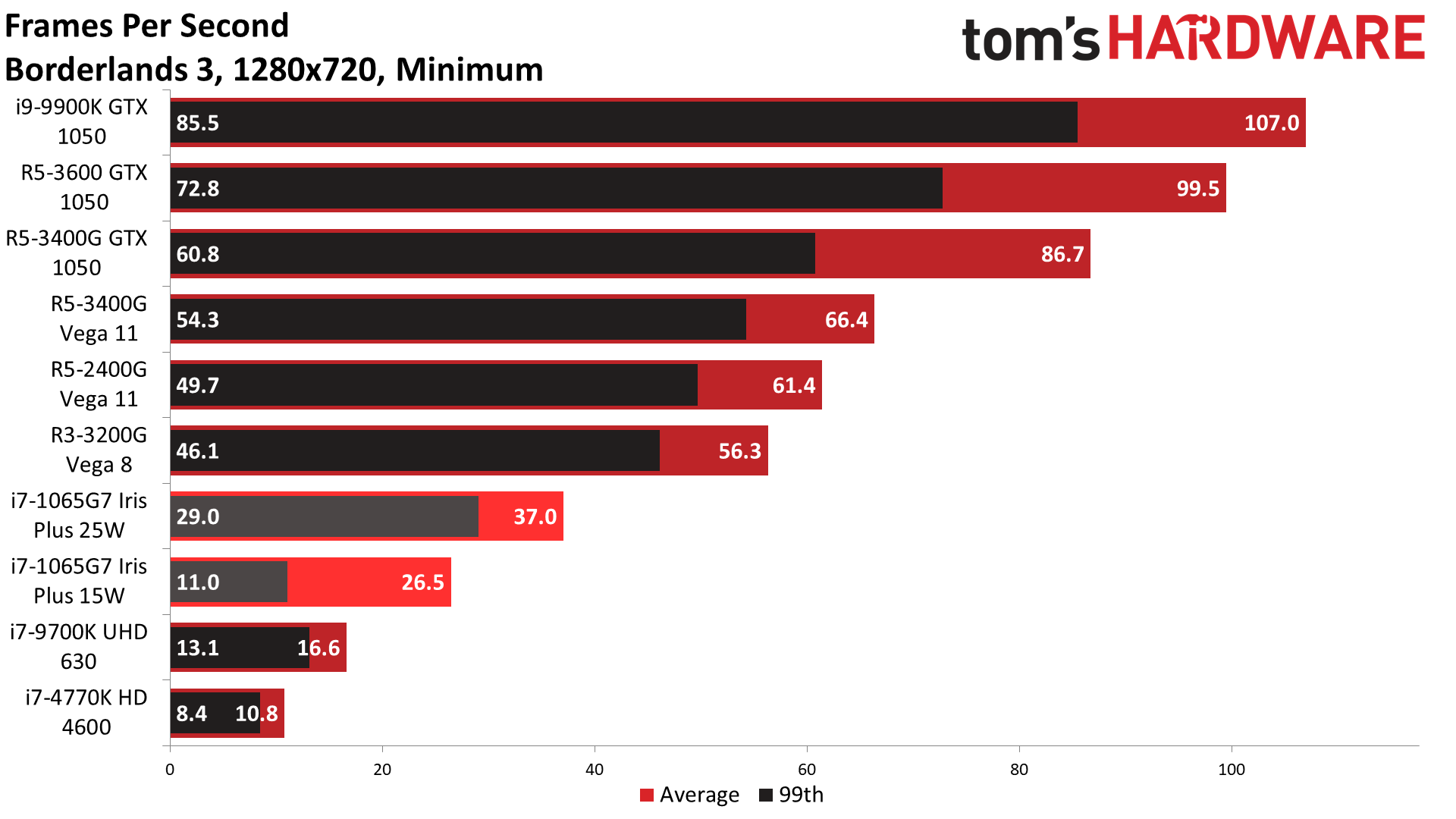

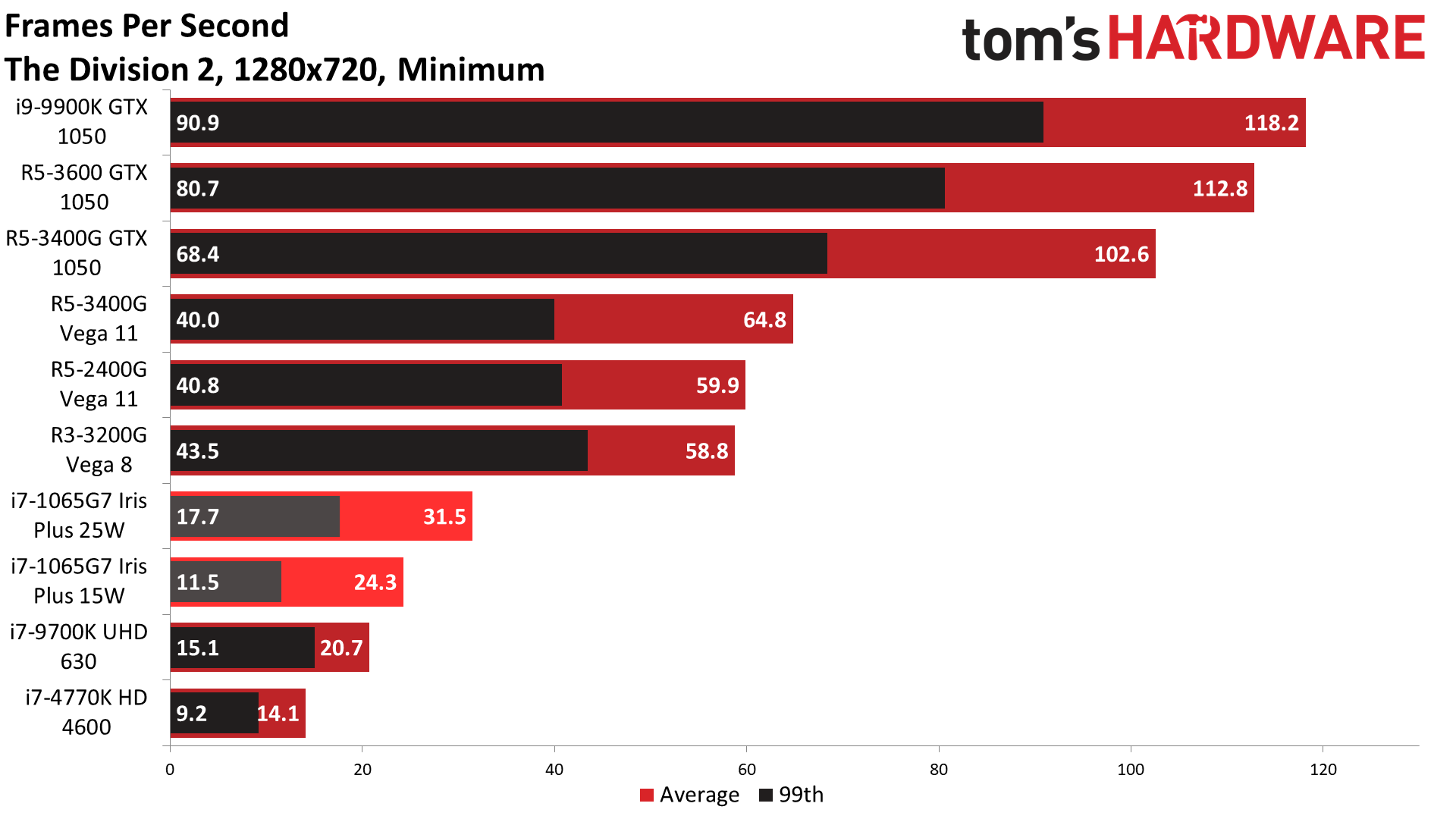

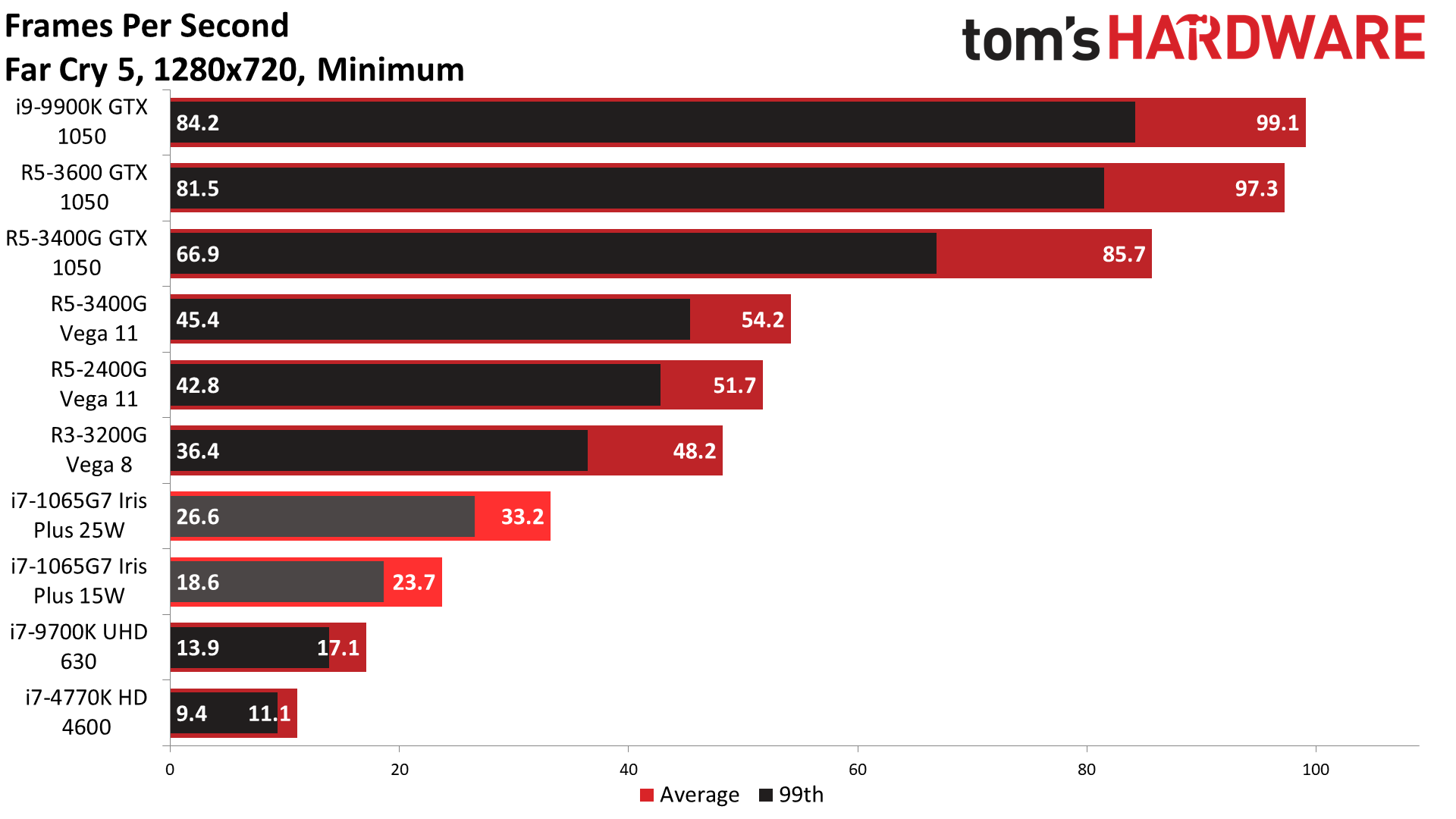

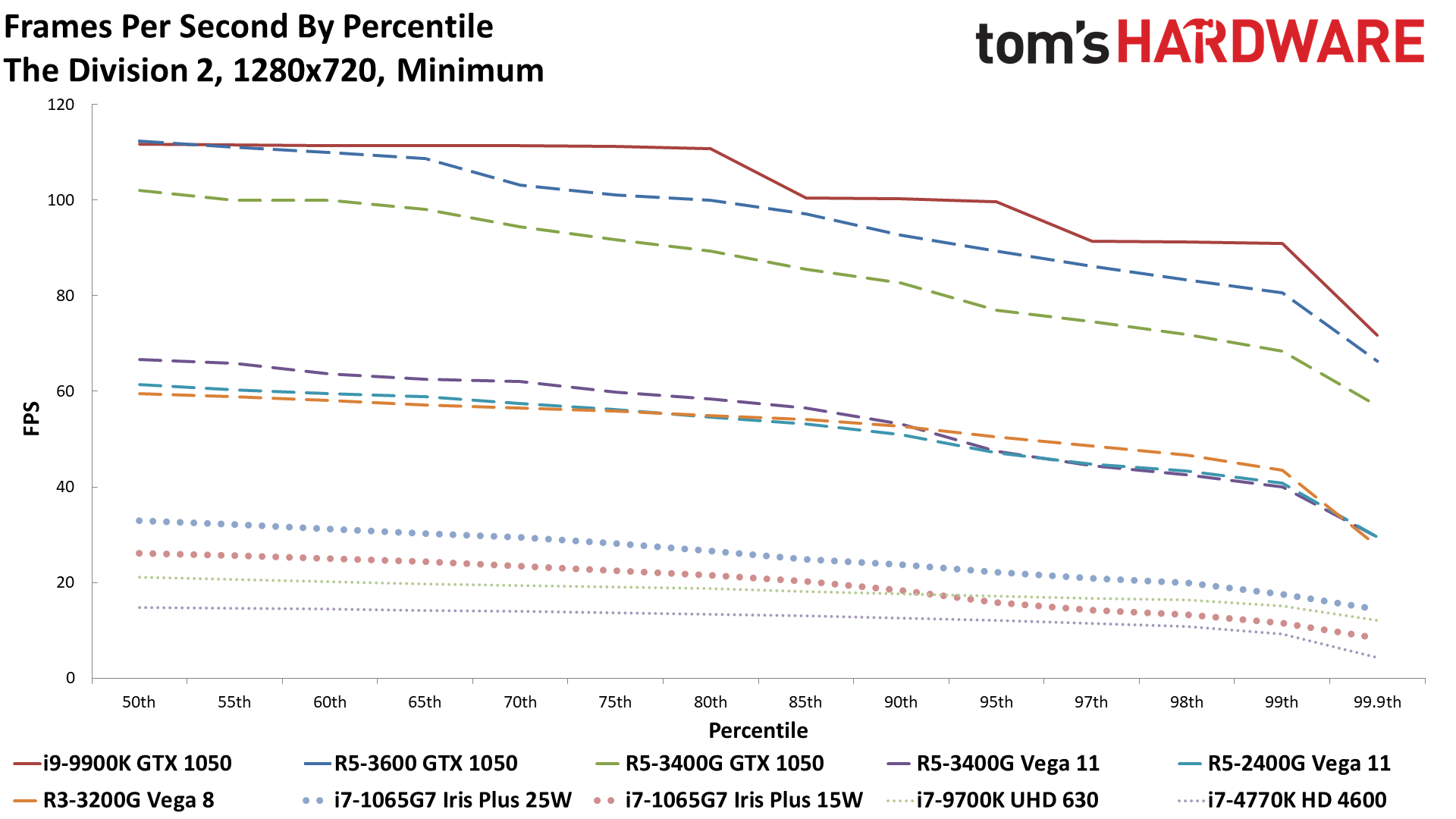

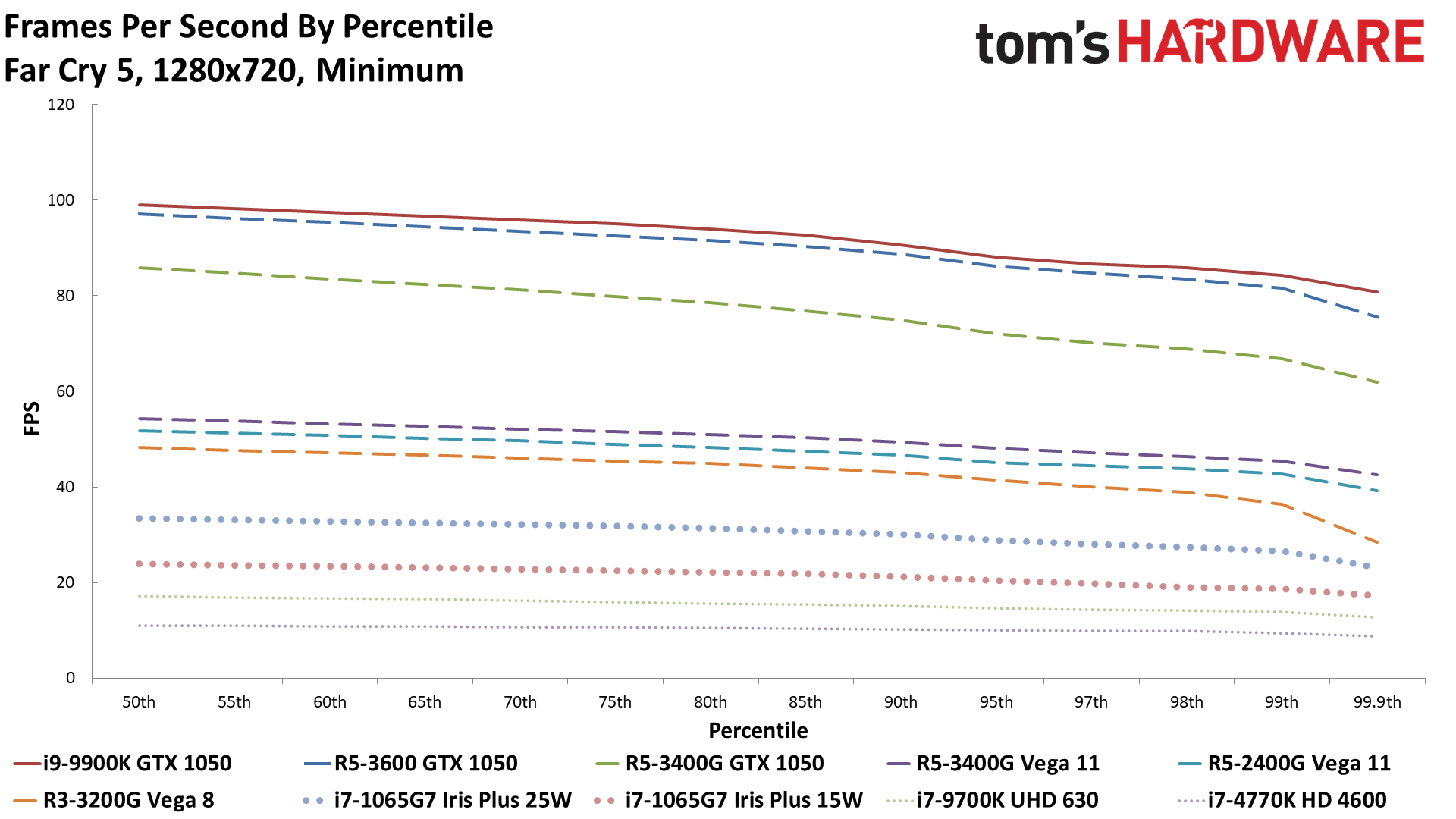

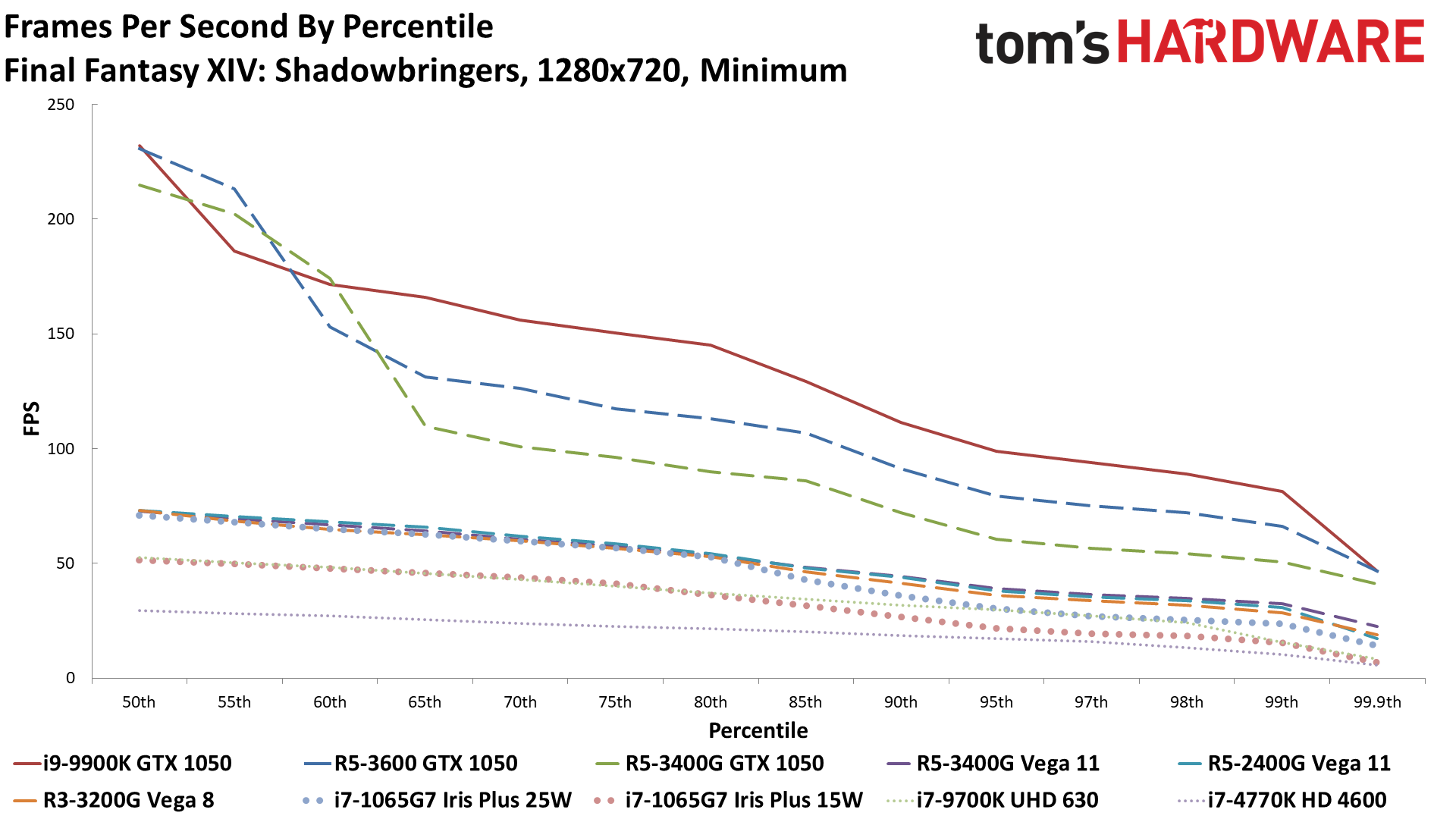

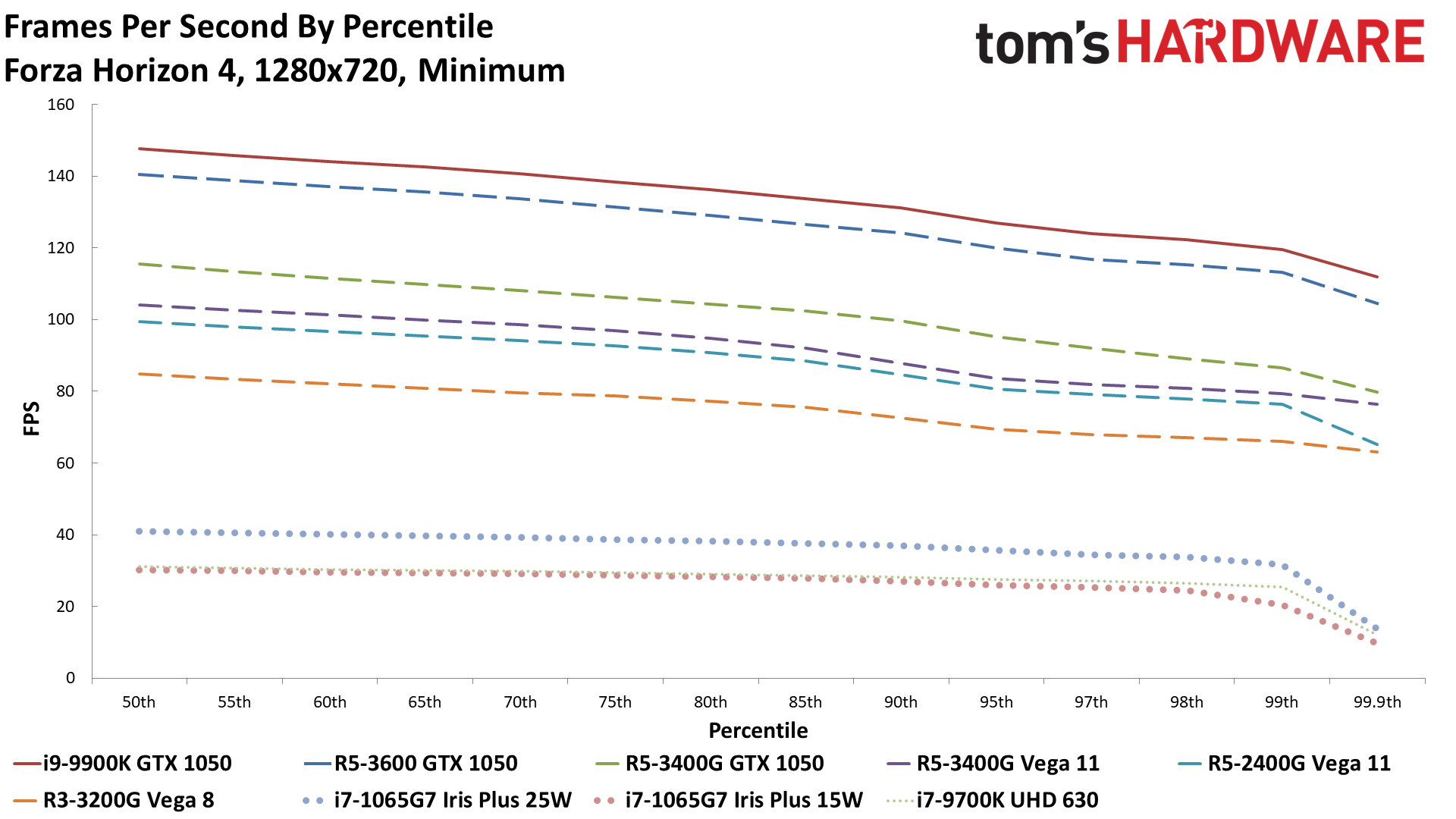

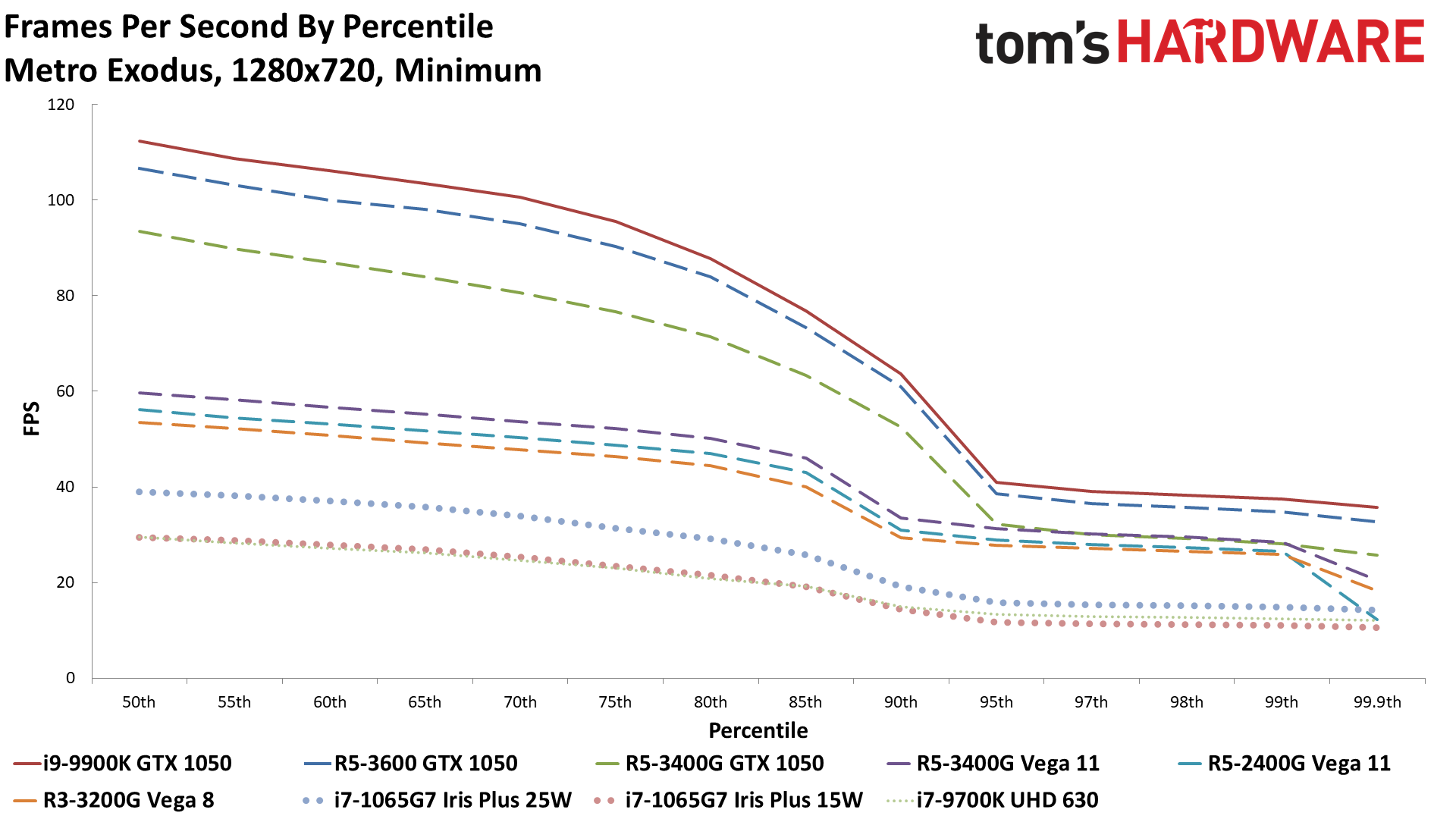

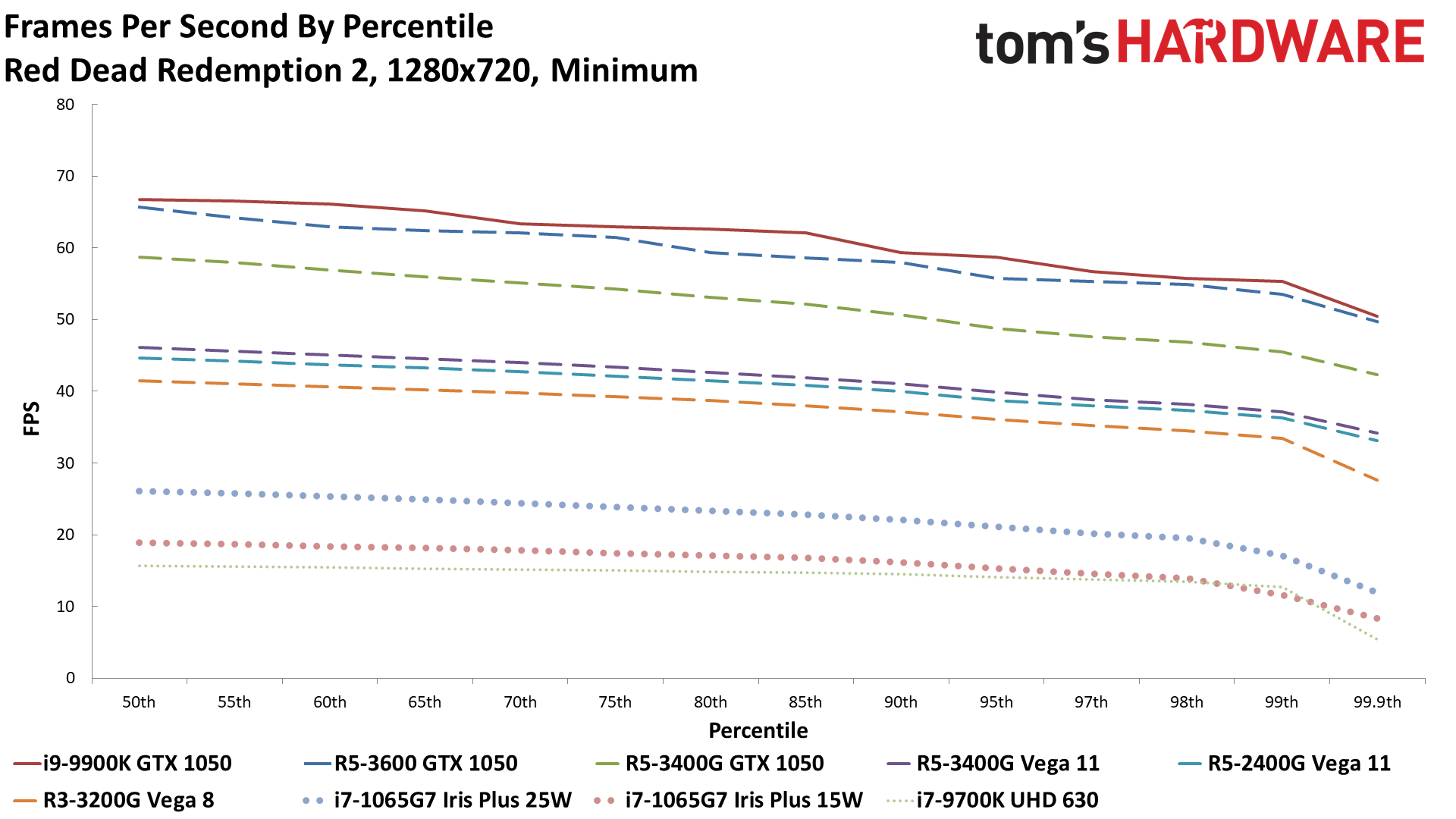

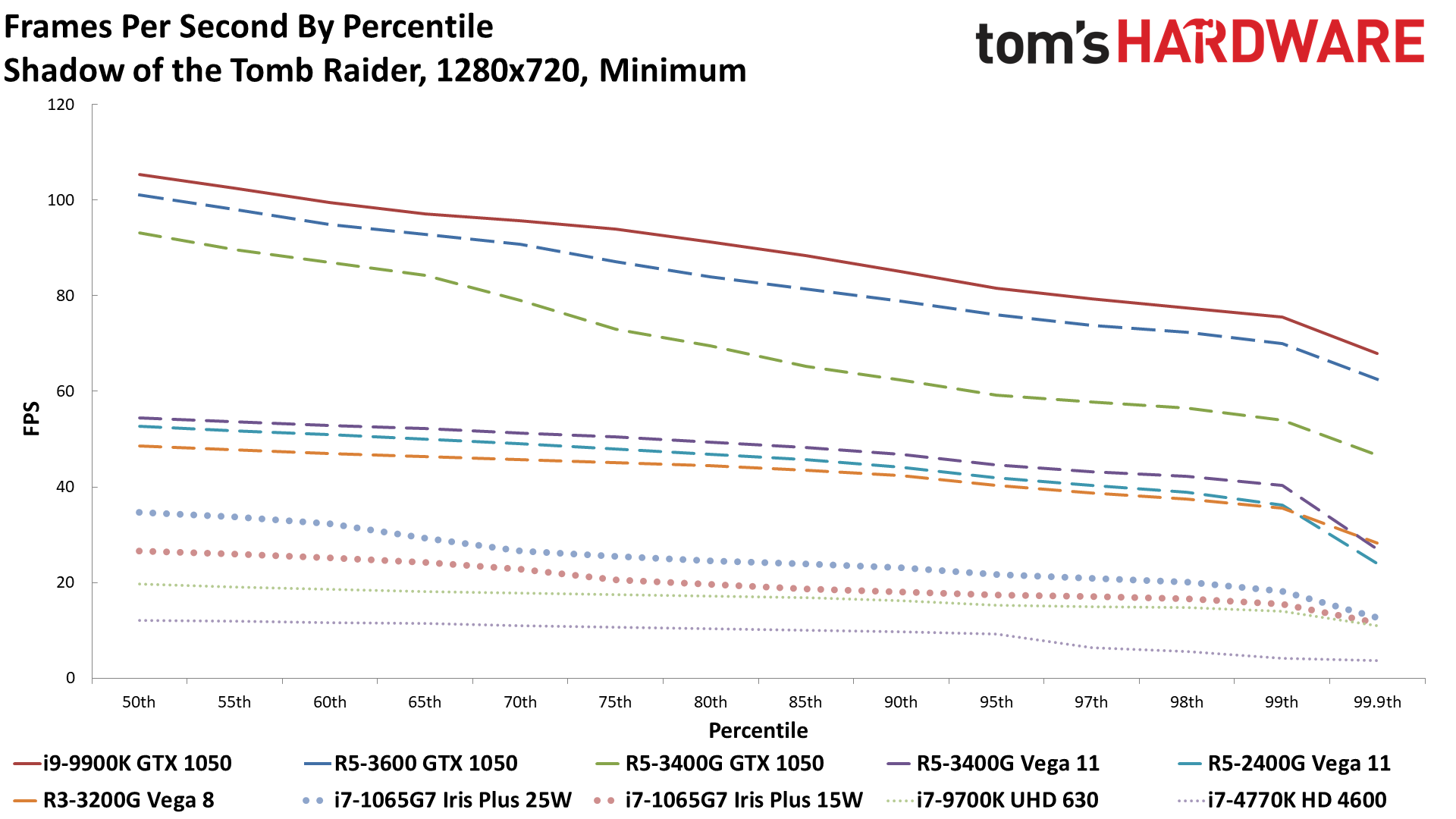

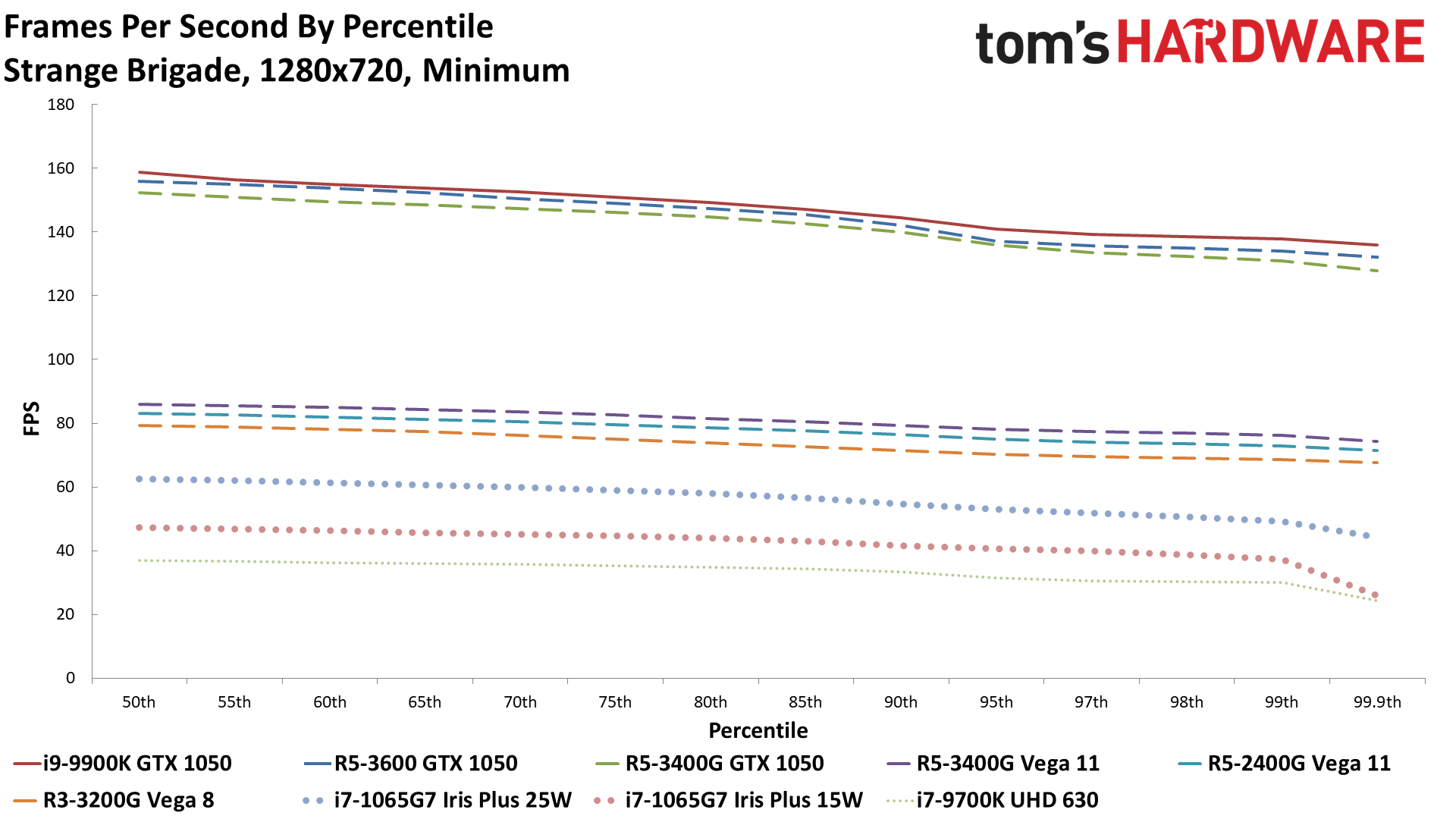

Now let's move on to comparative benchmarks, using our own benchmarks and looking at Ice Lake-U at two different settings. We'll start with the 720p results, which we're going to lump together in one large gallery of 20 slides (first the bar charts, then the percentile line charts). We've highlighted the Gen11 Iris Plus Graphics in these charts:

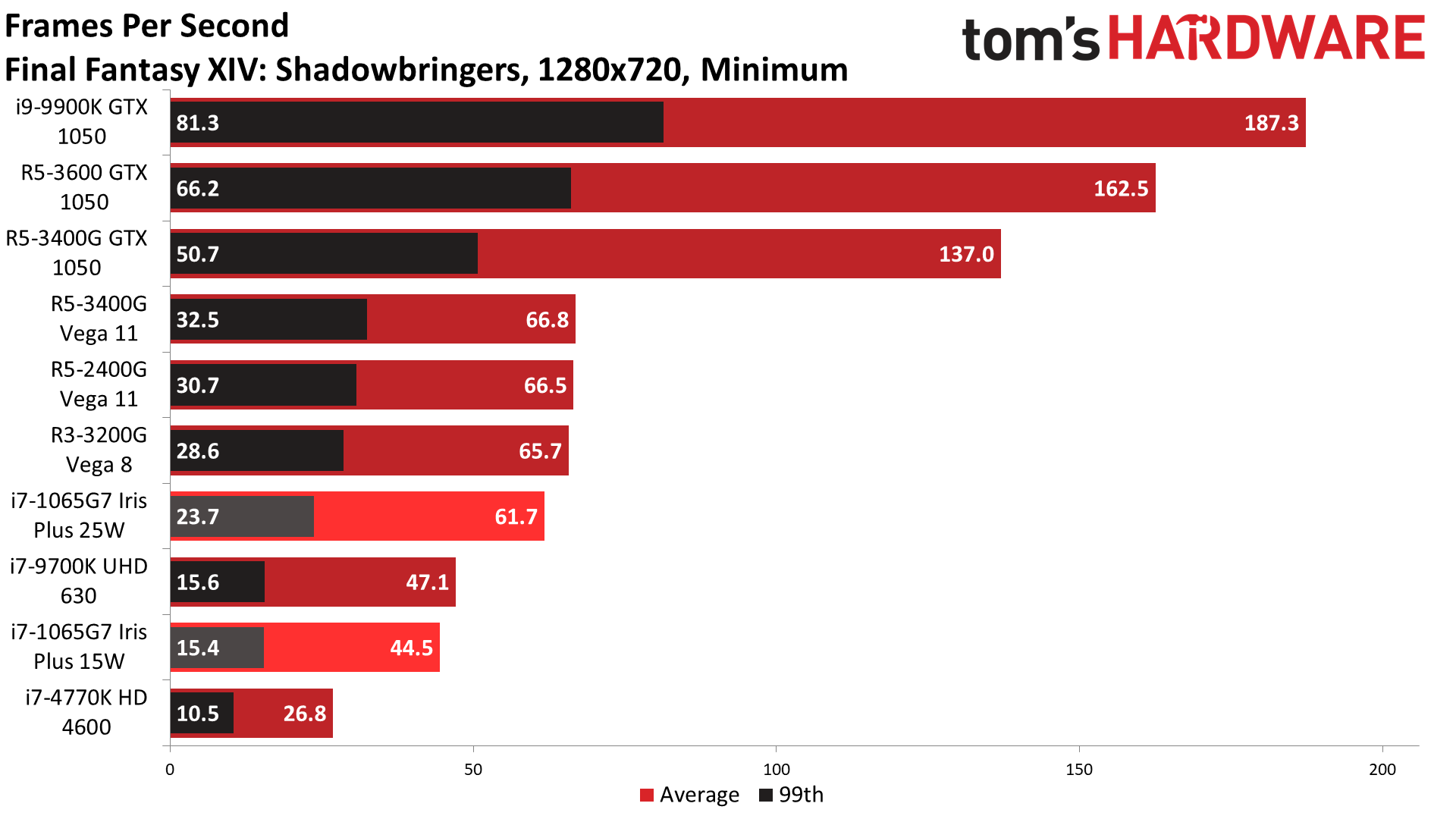

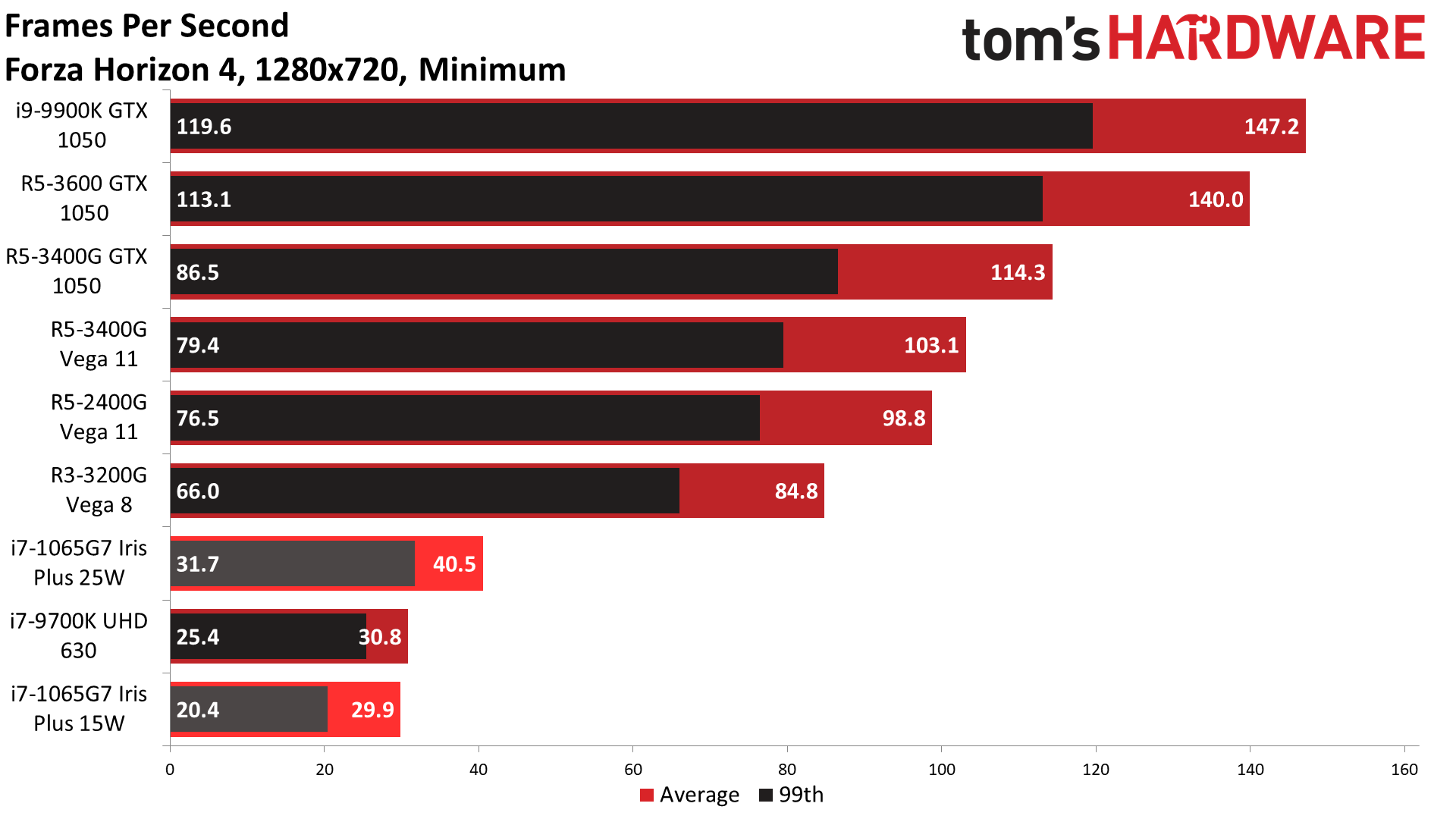

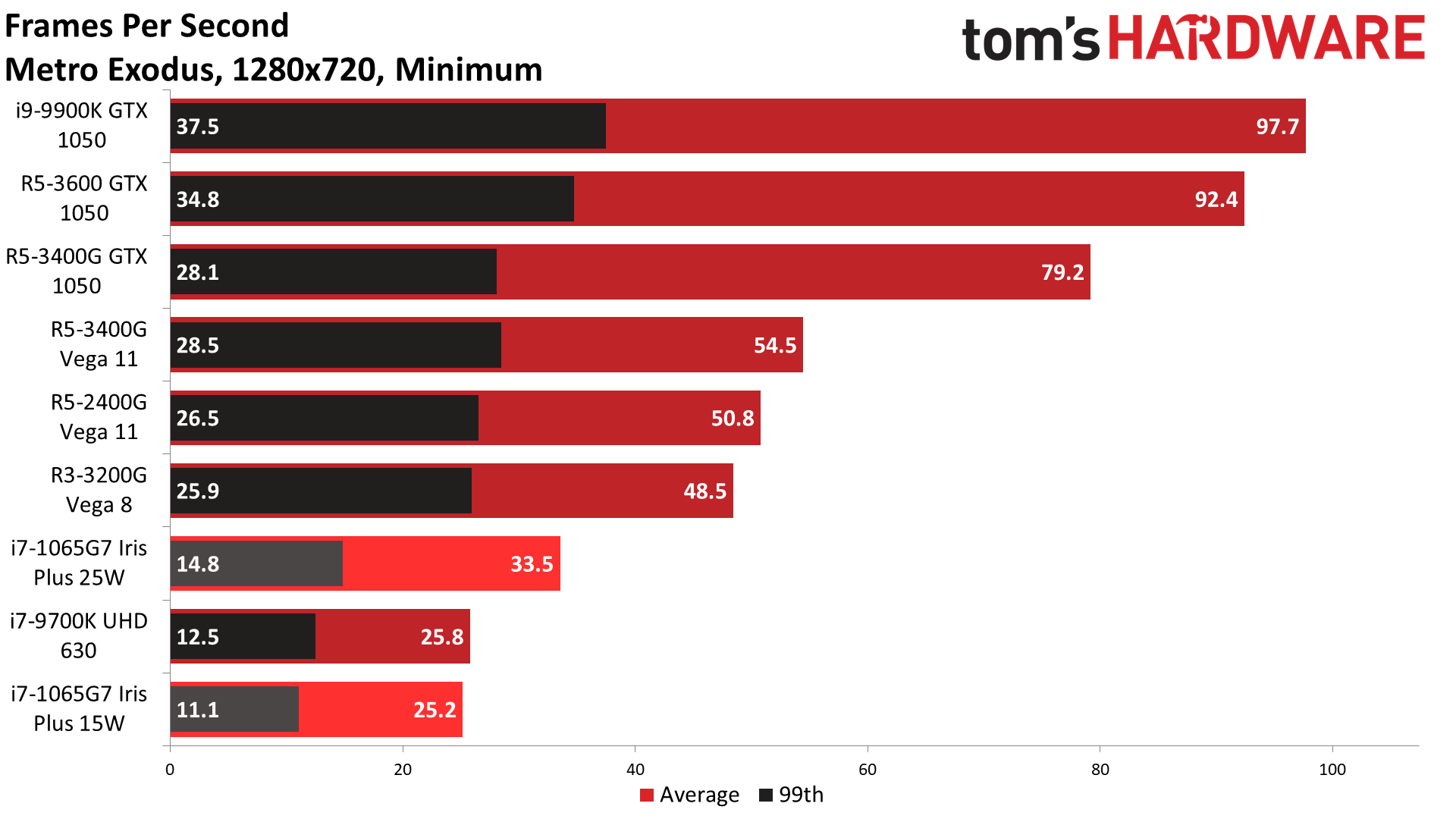

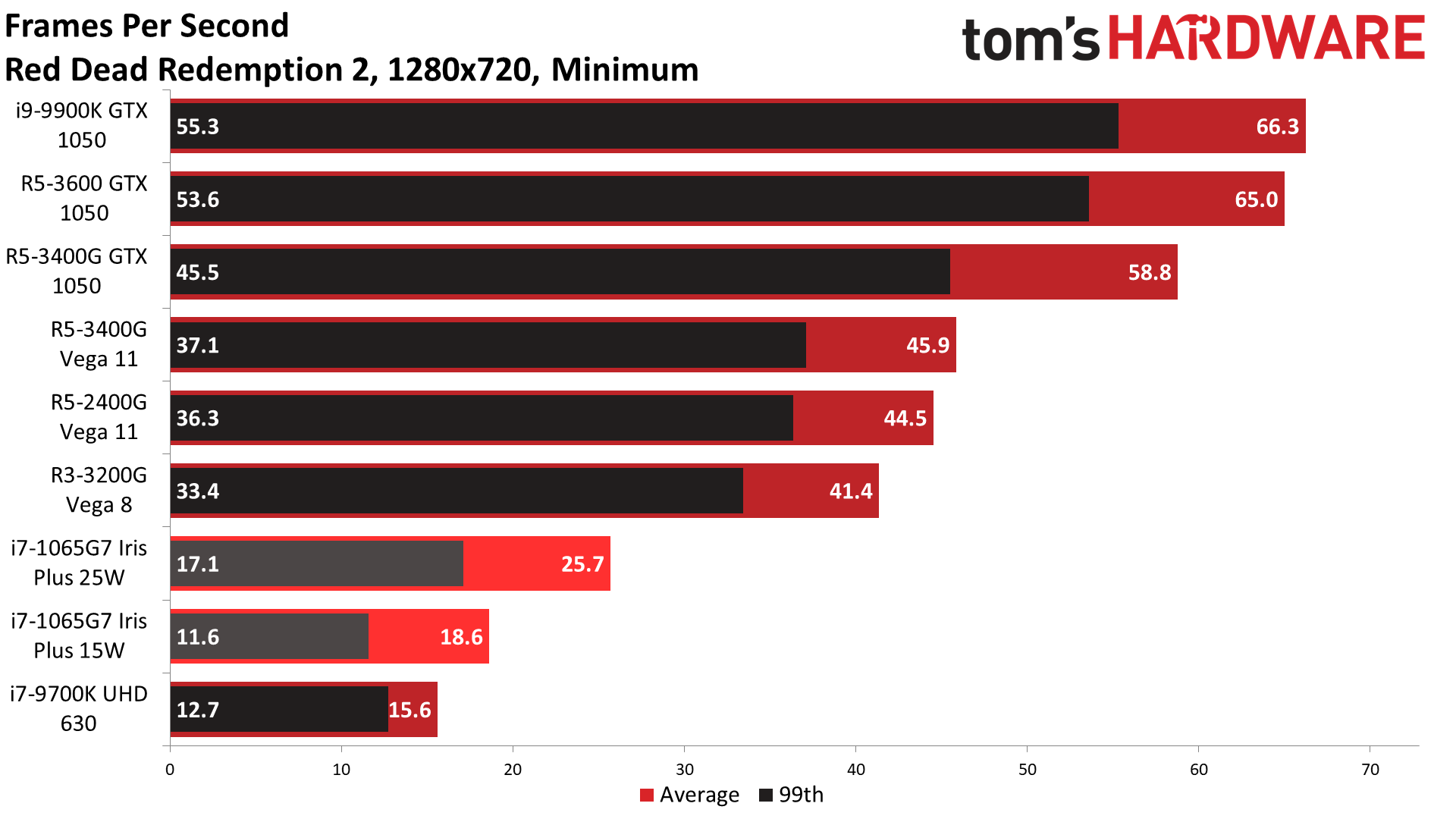

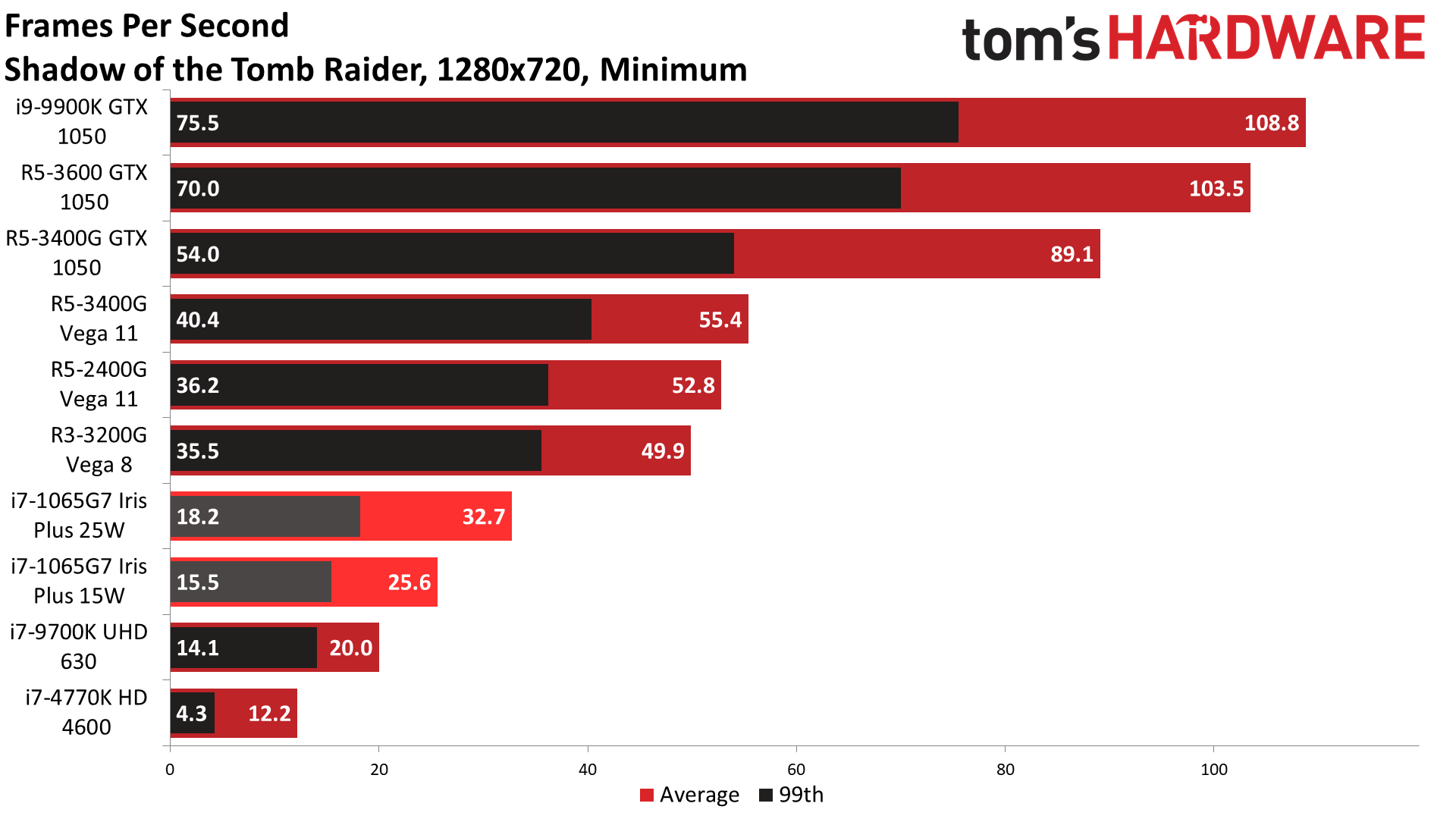

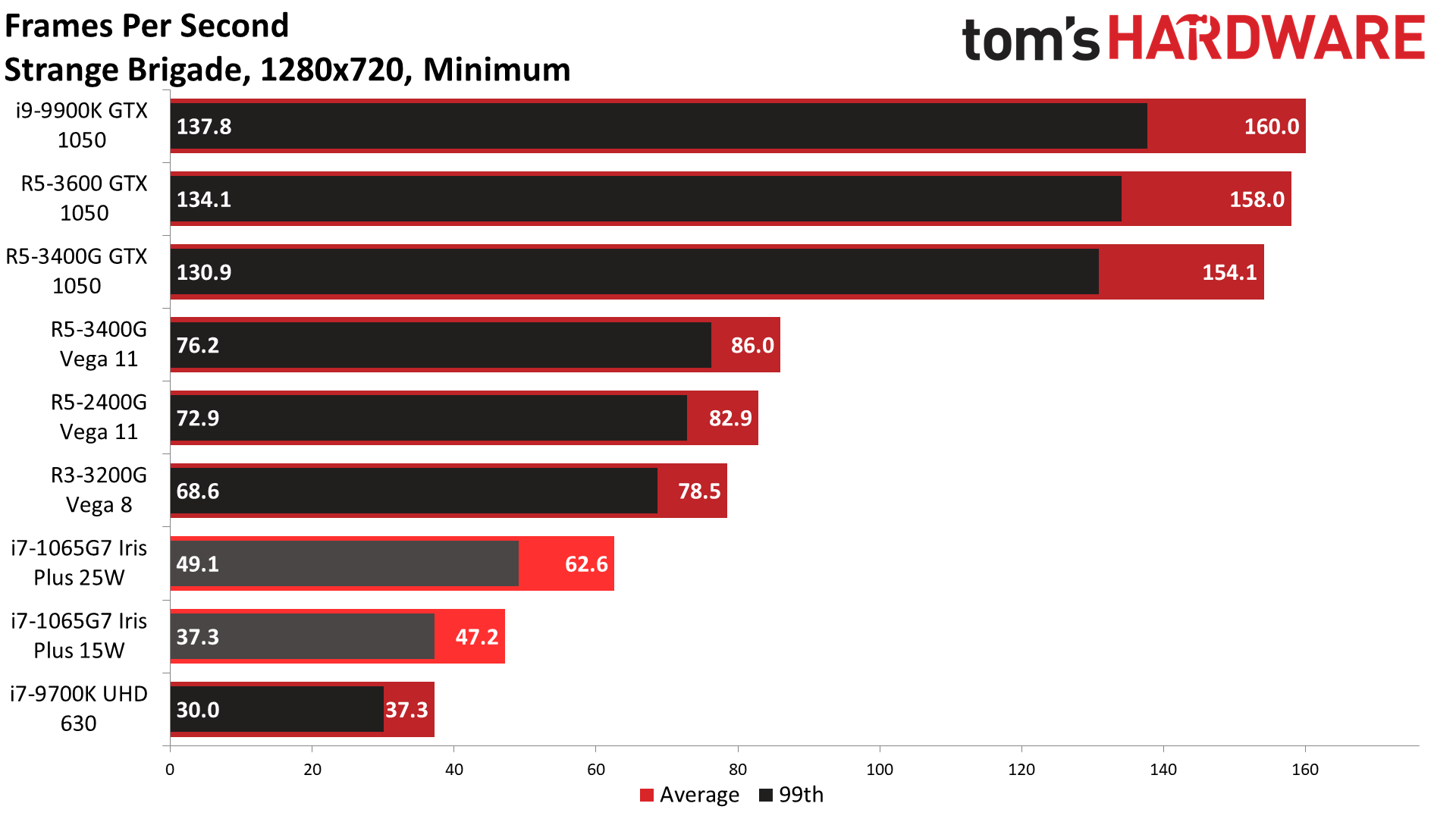

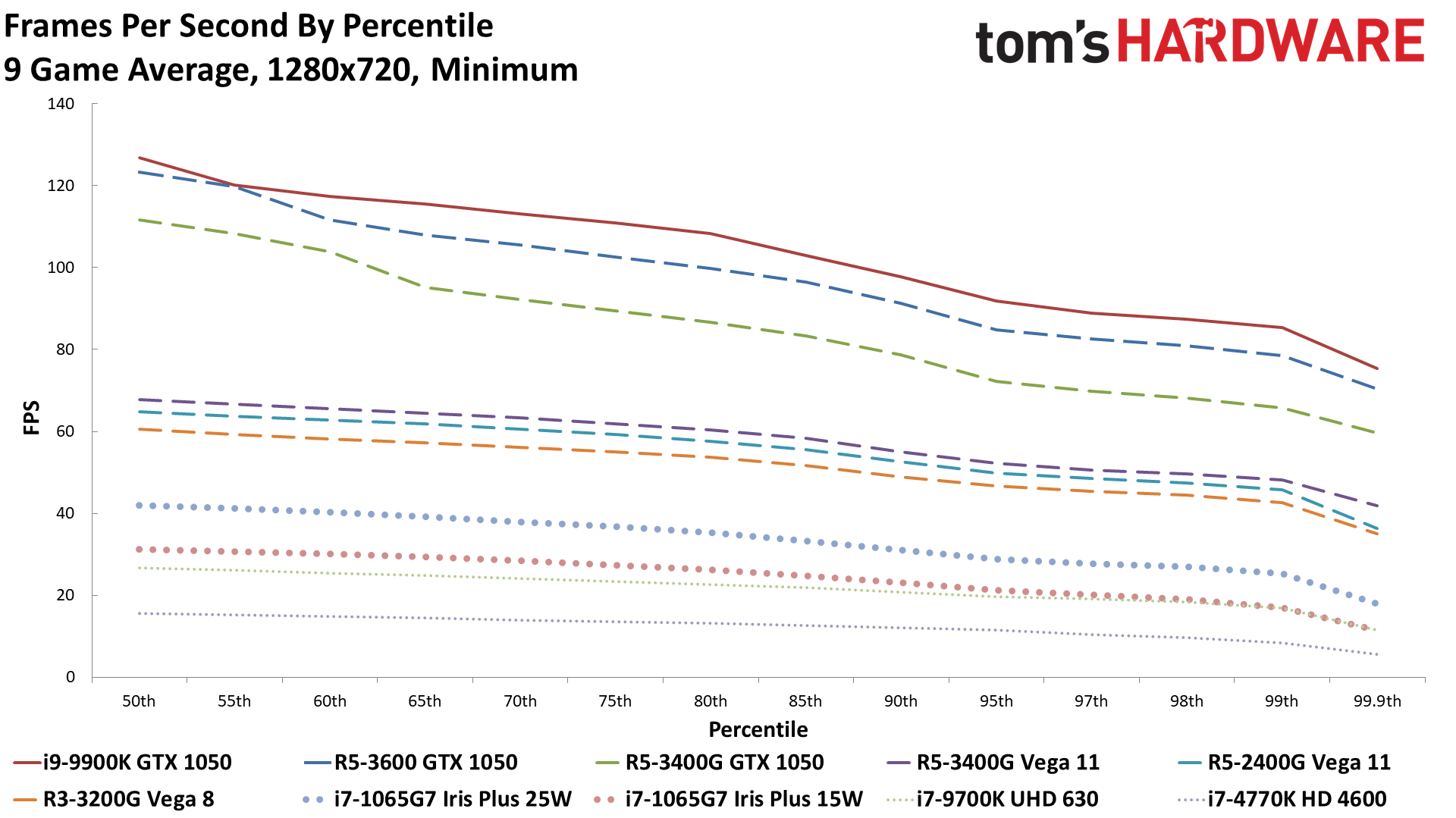

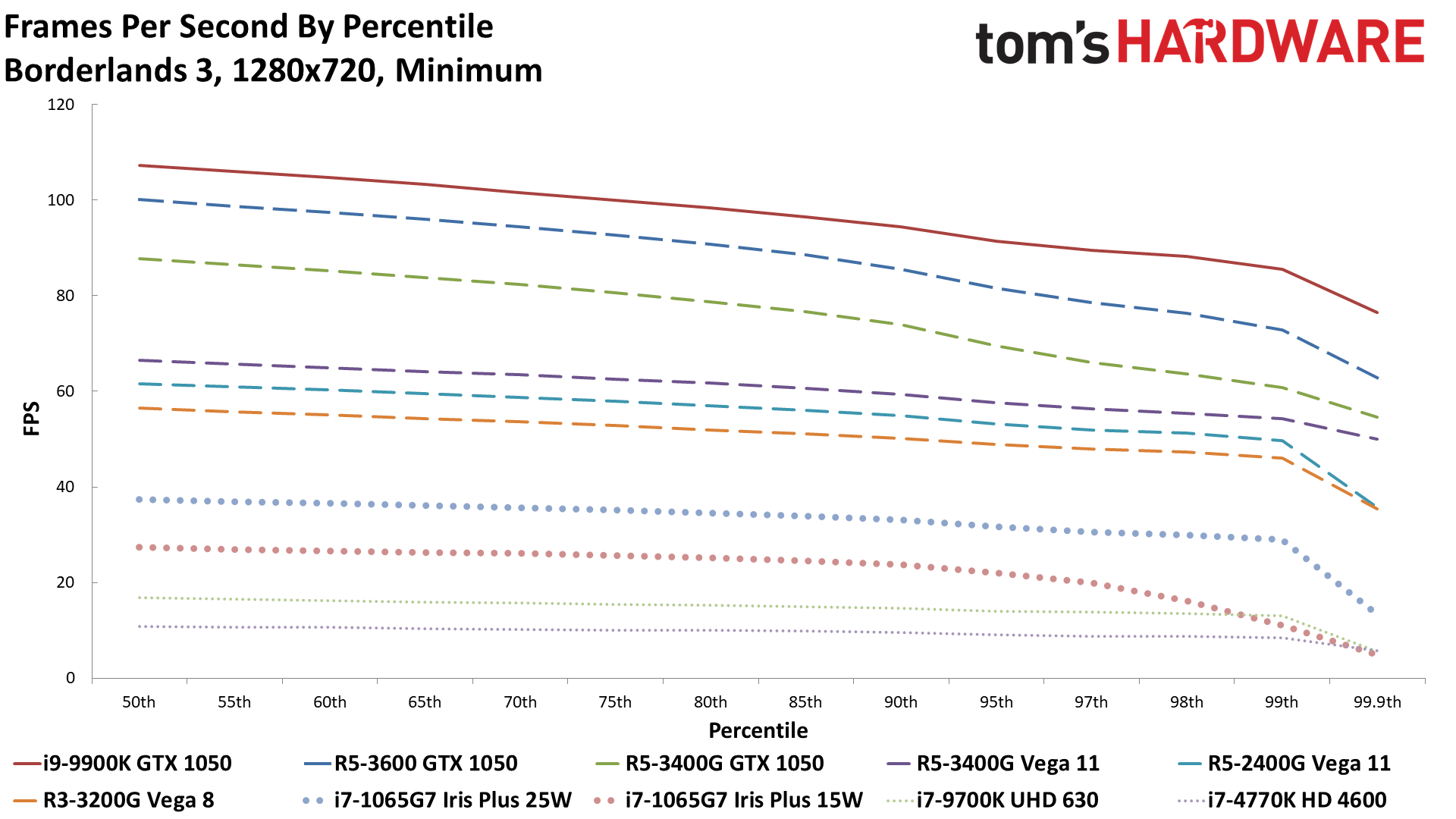

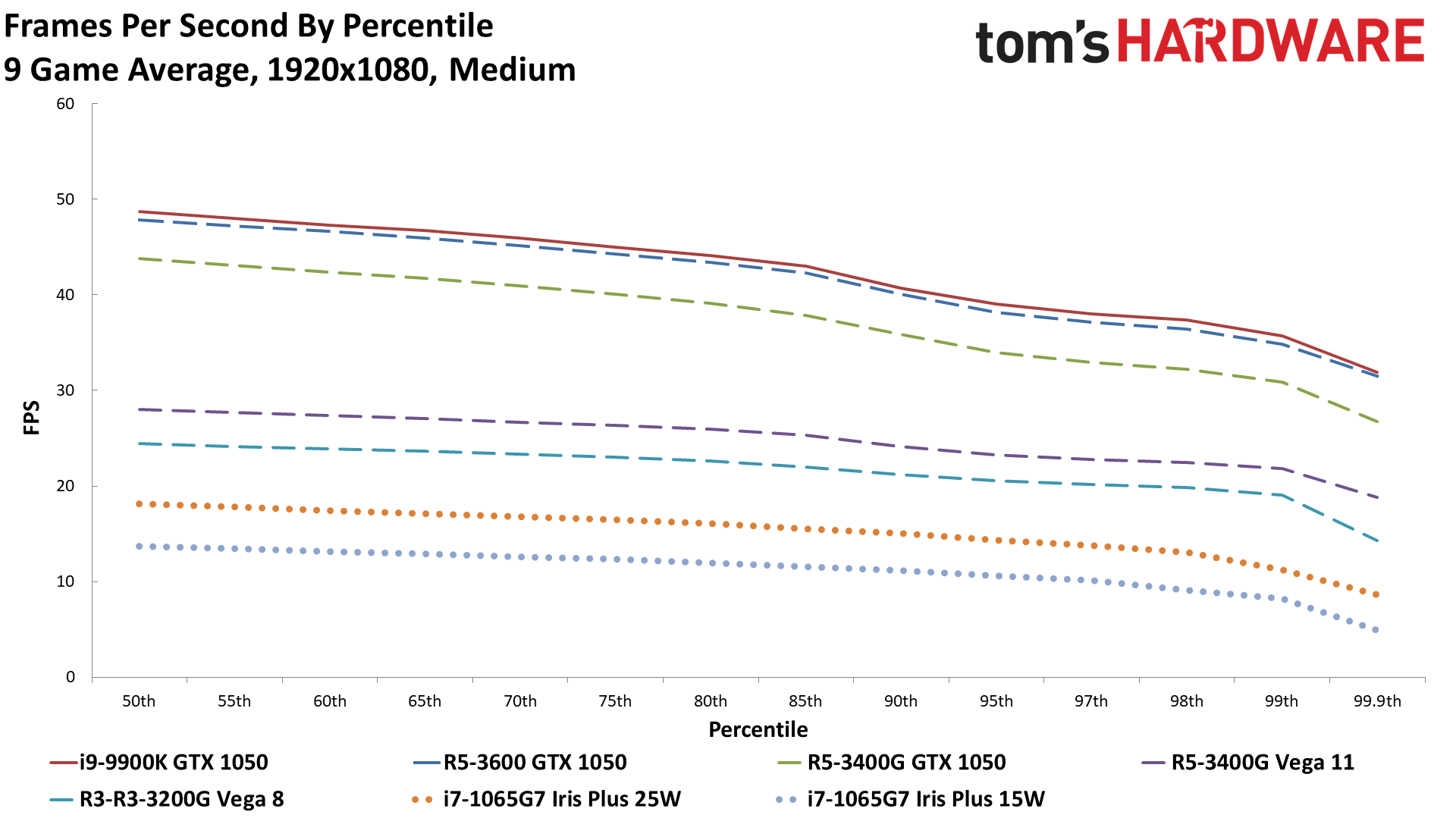

The first thing you'll notice is just how big a jump in gaming performance the Razer gets by raising the TDP from 15W to 25W. On average, the 25W configuration is 35% faster than the 15W configuration, and it's also 55% faster than the desktop UHD 630 with a 95W TDP. What's more, it breaks 30 fps in eight of the nine games tested. Red Dead Redemption 2 is the only exception, though its average of 26 fps is still somewhat playable in a pinch.

Once you're finished being impressed by the performance improvements over Intel's previous Gen9.5 graphics (maybe), things are a bit less exciting. Even Vega 8, AMD's lowest integrated solution on a Ryzen processor (Ryzen 3 3200G in this case), ends up beating the 25W Iris Plus configuration by 49%. Sure, some of that is TDP, but Vega 11 (3400G) stretches the lead to 67%. Clearly it's not just a memory bandwidth bottleneck holding Iris Plus back, especially since the Razer laptop has more bandwidth than the Ryzen APUs.
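To put those percentages in absolute terms, here's a rough back-calculation. It's only a sketch: it assumes the 25W Iris Plus configuration's 720p average is the roughly 40 fps figure quoted in the 1080p discussion, and because the percentages are already rounded, the implied averages are approximations rather than measured results. The helper names are ours, purely for illustration.

```python
# Implied 720p averages, back-calculated from the relative numbers in the text.
# Assumption: the 25W Iris Plus average is the ~40 fps figure quoted for 720p minimum.
IRIS_25W_FPS = 40.0


def slower_baseline(fps, percent_faster):
    """Return the baseline that `fps` beats by `percent_faster` percent."""
    return fps / (1 + percent_faster / 100)


def faster_result(fps, percent_faster):
    """Return the result that beats `fps` by `percent_faster` percent."""
    return fps * (1 + percent_faster / 100)


implied = {
    "Iris Plus 15W": slower_baseline(IRIS_25W_FPS, 35),   # 25W is 35% faster
    "UHD 630": slower_baseline(IRIS_25W_FPS, 55),         # 25W is 55% faster
    "Vega 8 (3200G)": faster_result(IRIS_25W_FPS, 49),    # Vega 8 leads by 49%
    "Vega 11 (3400G)": faster_result(IRIS_25W_FPS, 67),   # Vega 11 leads by 67%
}

for name, fps in implied.items():
    print(f"{name}: ~{fps:.0f} fps")
```

Under those assumptions, the 15W configuration lands at roughly 30 fps, UHD 630 at roughly 26 fps, and Vega 8 and Vega 11 at roughly 60 and 67 fps.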

Here's the thing: Going back to the Tiger Lake demonstration, Intel might be able to double (or more) what we see from Ice Lake. That would put even ultra-mobile chips well ahead of AMD's current desktop chips. Of course, AMD also has desktop Renoir parts slated to arrive in the near future, but Intel could at least be competitive. It took Intel about a decade to go from lackluster HD Graphics to where it is today, but there's definitely been progress.

The Gen5 Graphics found in 1st Gen Core processors like the Core i5-670 (back then, Intel was still omitting integrated graphics on its fastest chips like the i7-875K) managed an anemic, and not even fully capable, 35.2 GFLOPS. The current Gen11 graphics found in 10th Gen Ice Lake push that to 1126 GFLOPS. That's a 32X increase (and in practice, it's more than that because new features are available with the most recent GPUs) … but is it enough?

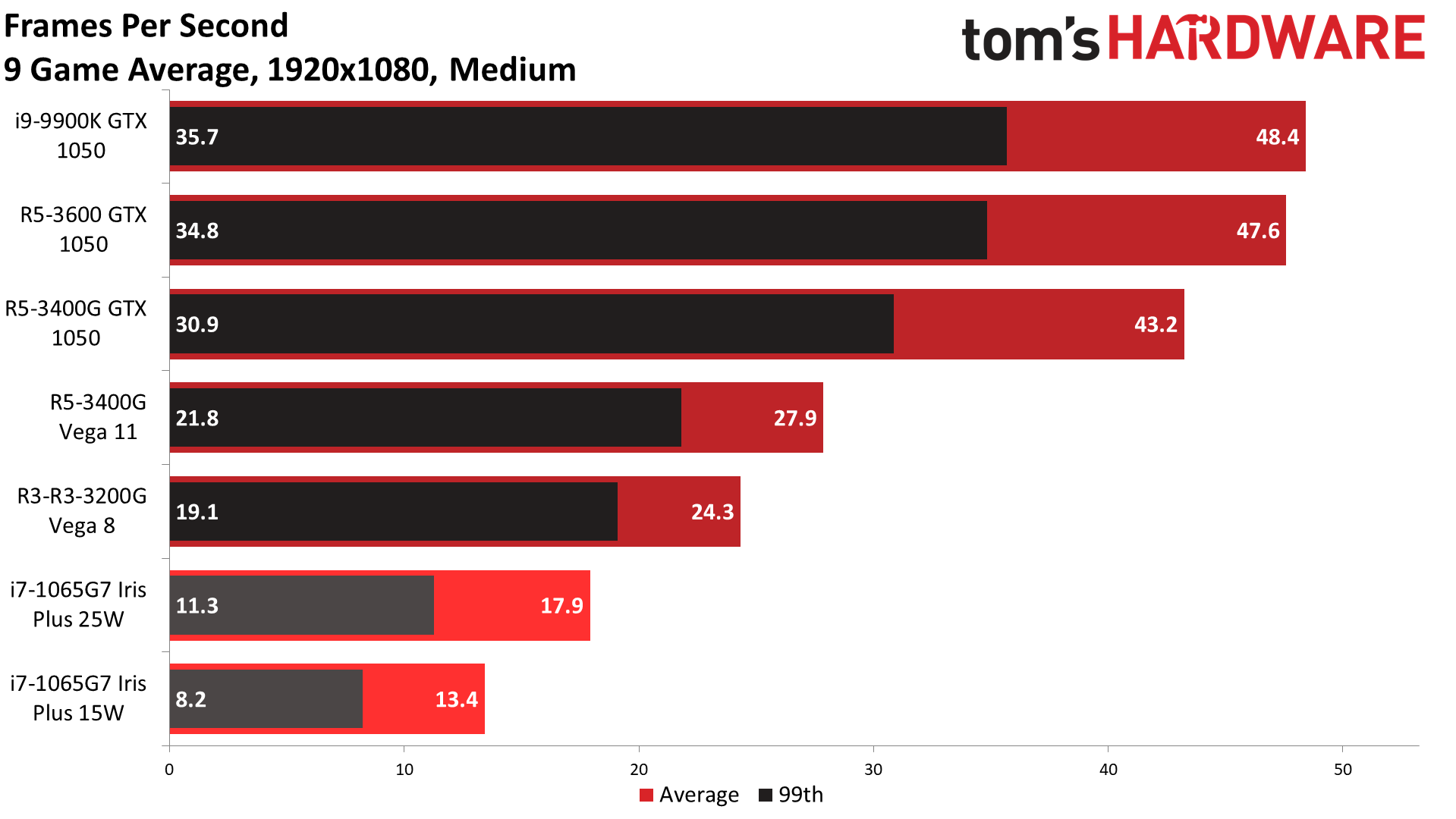

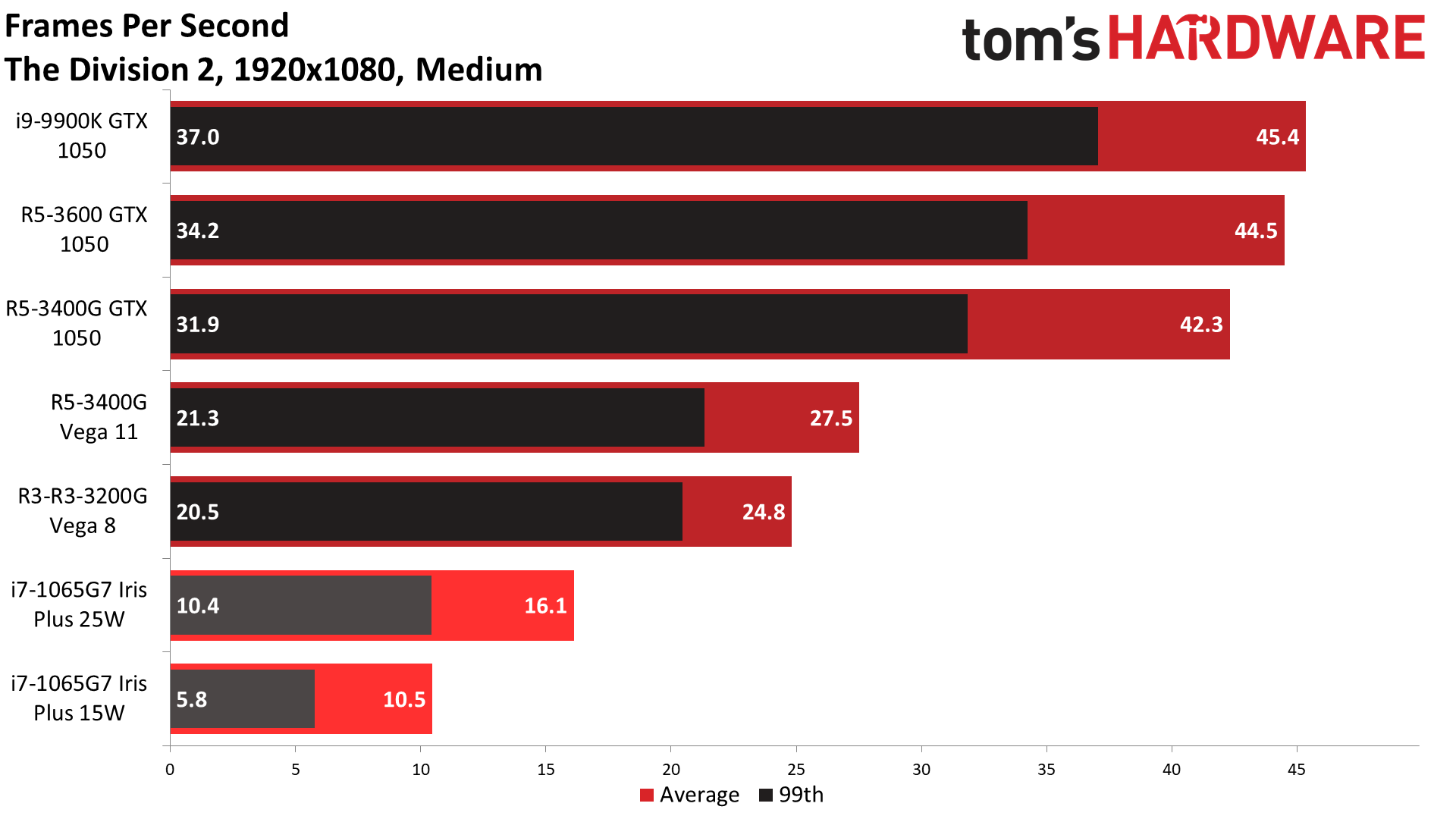

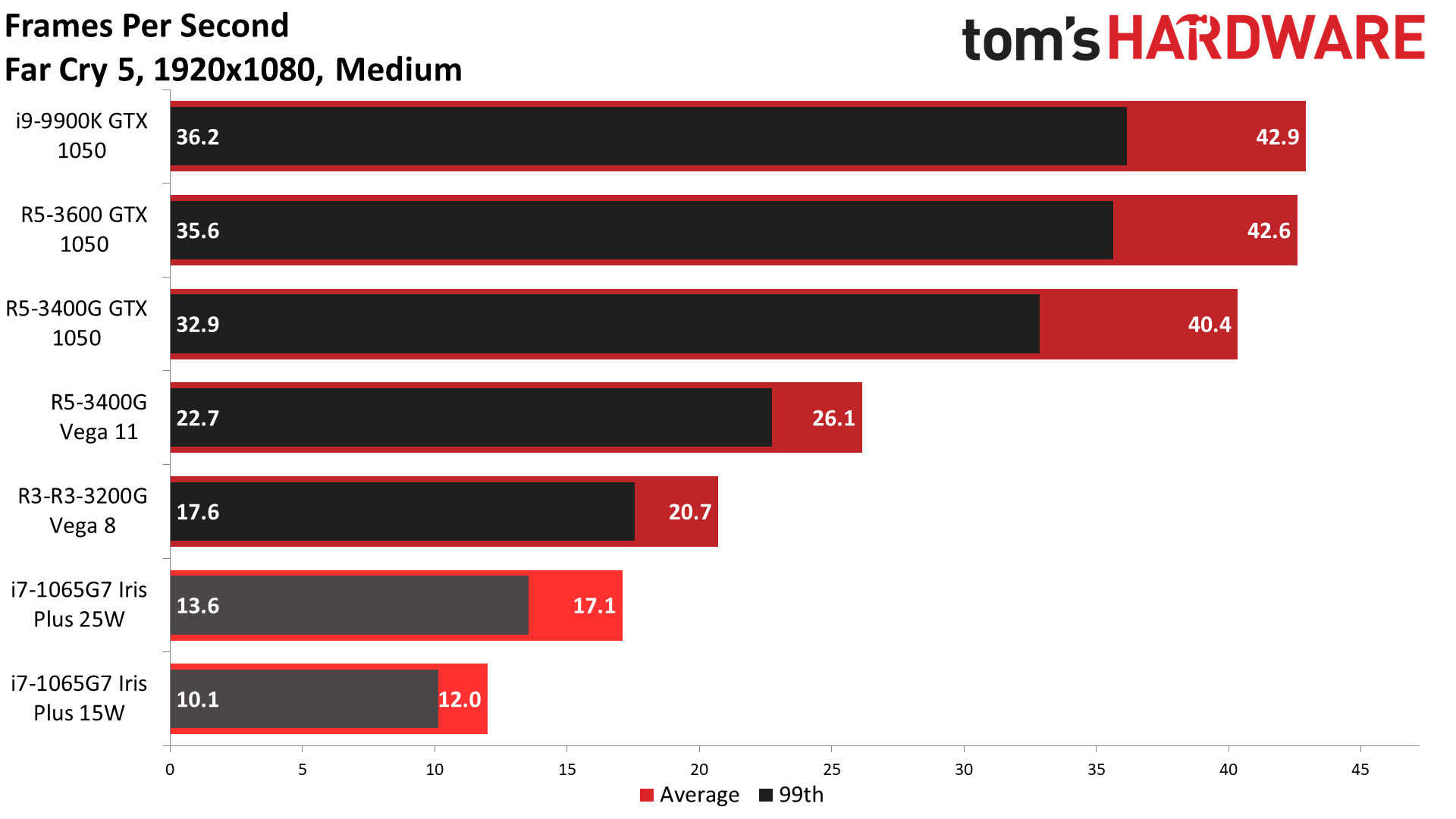

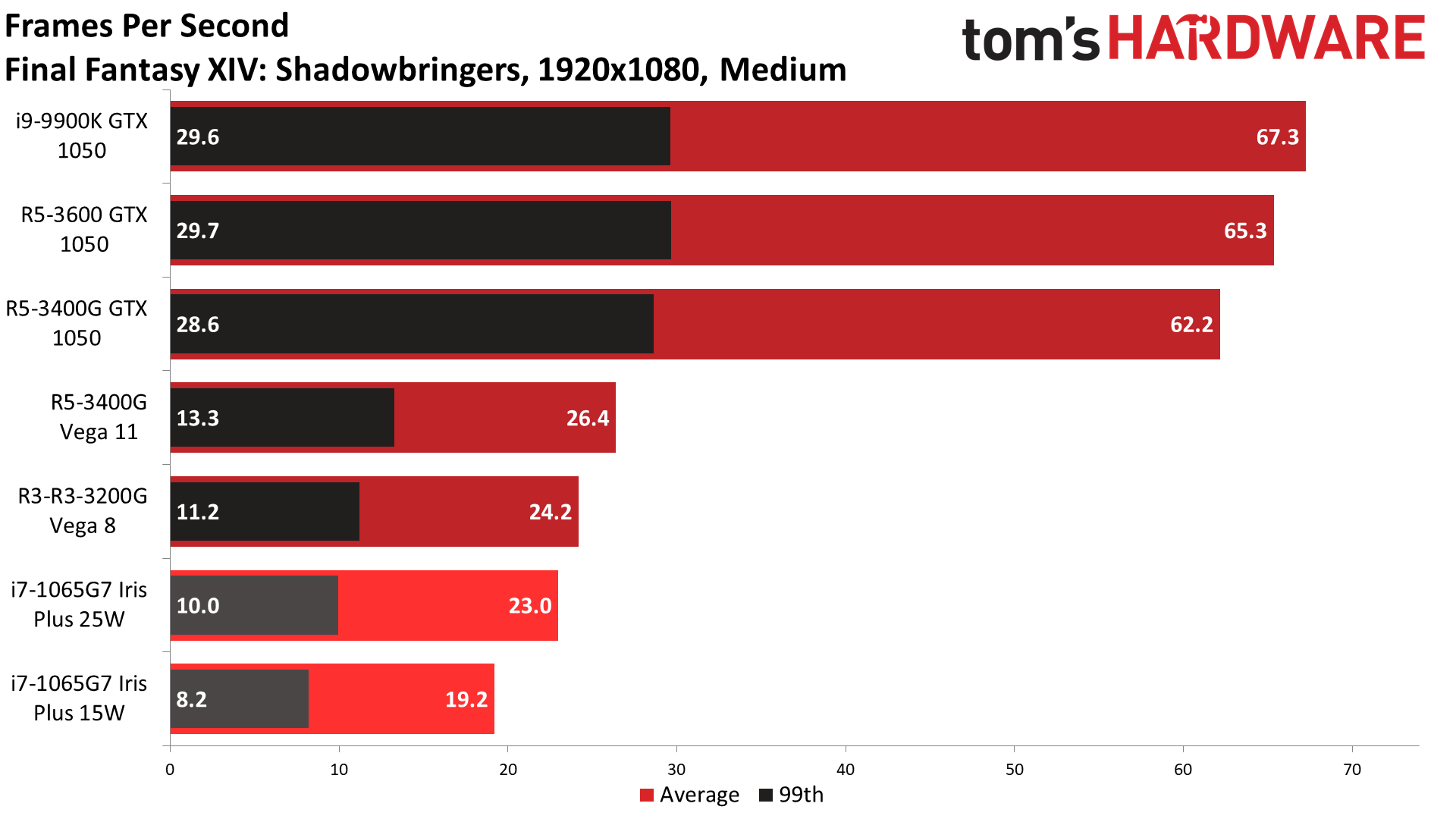

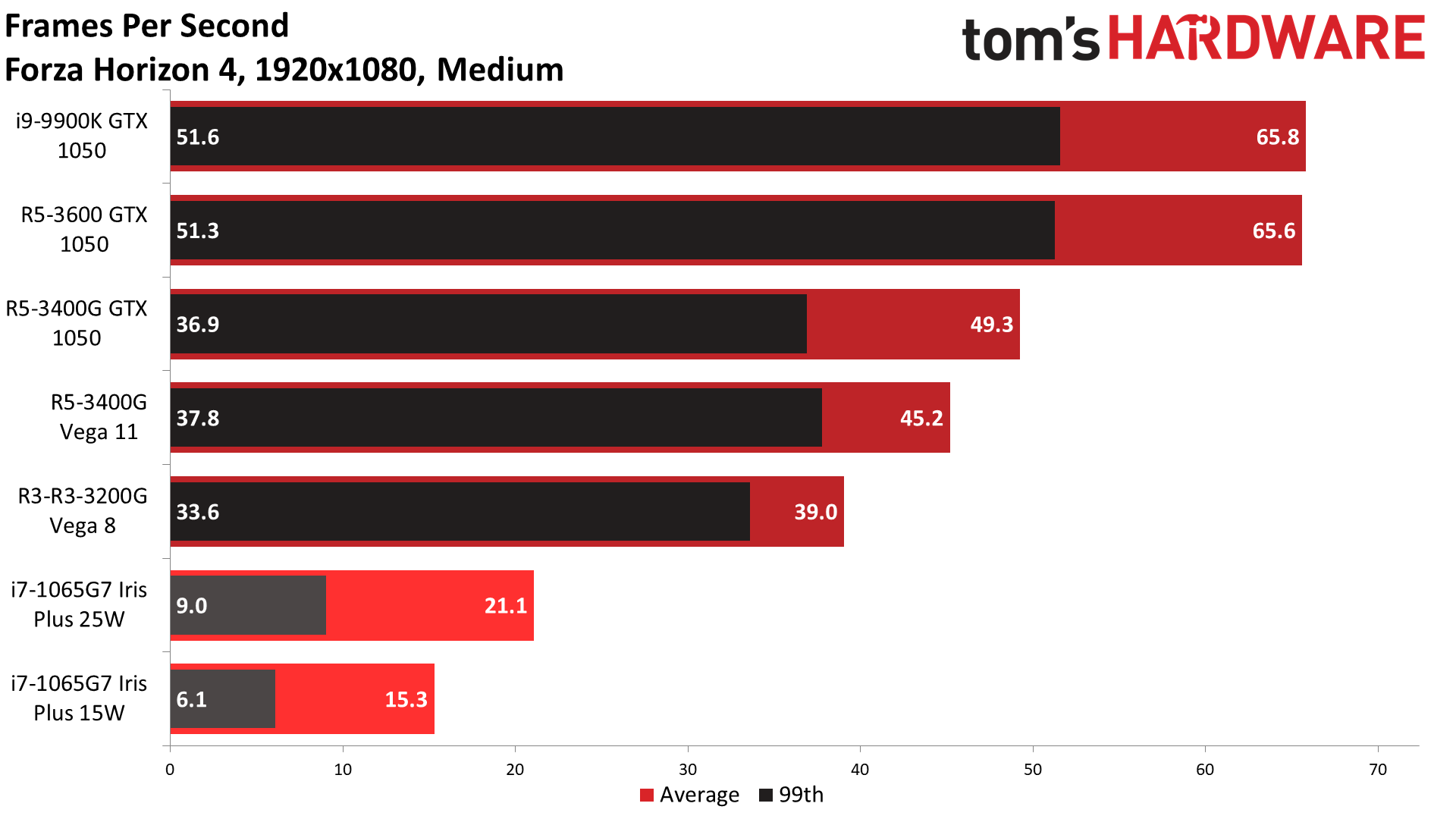

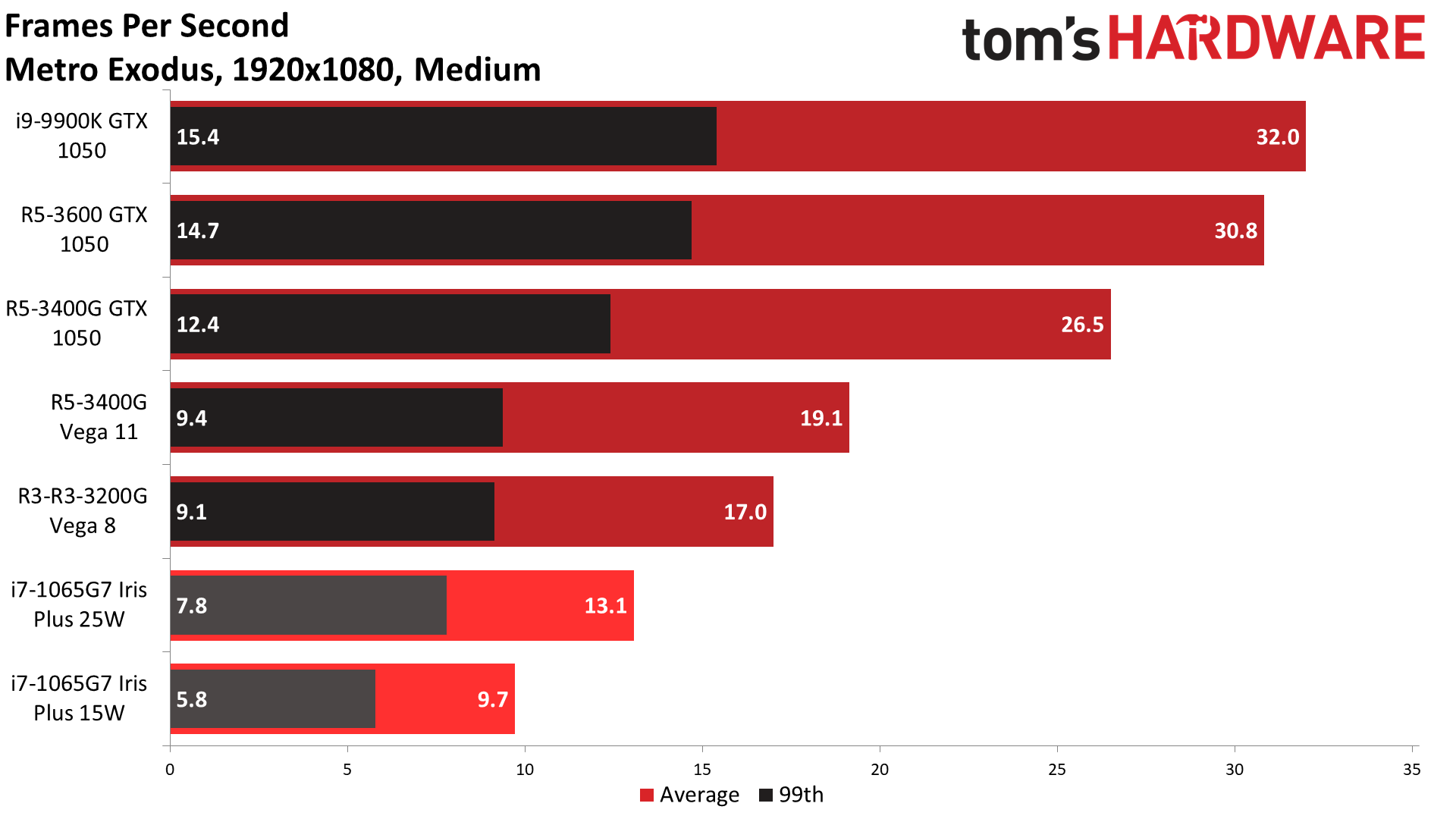

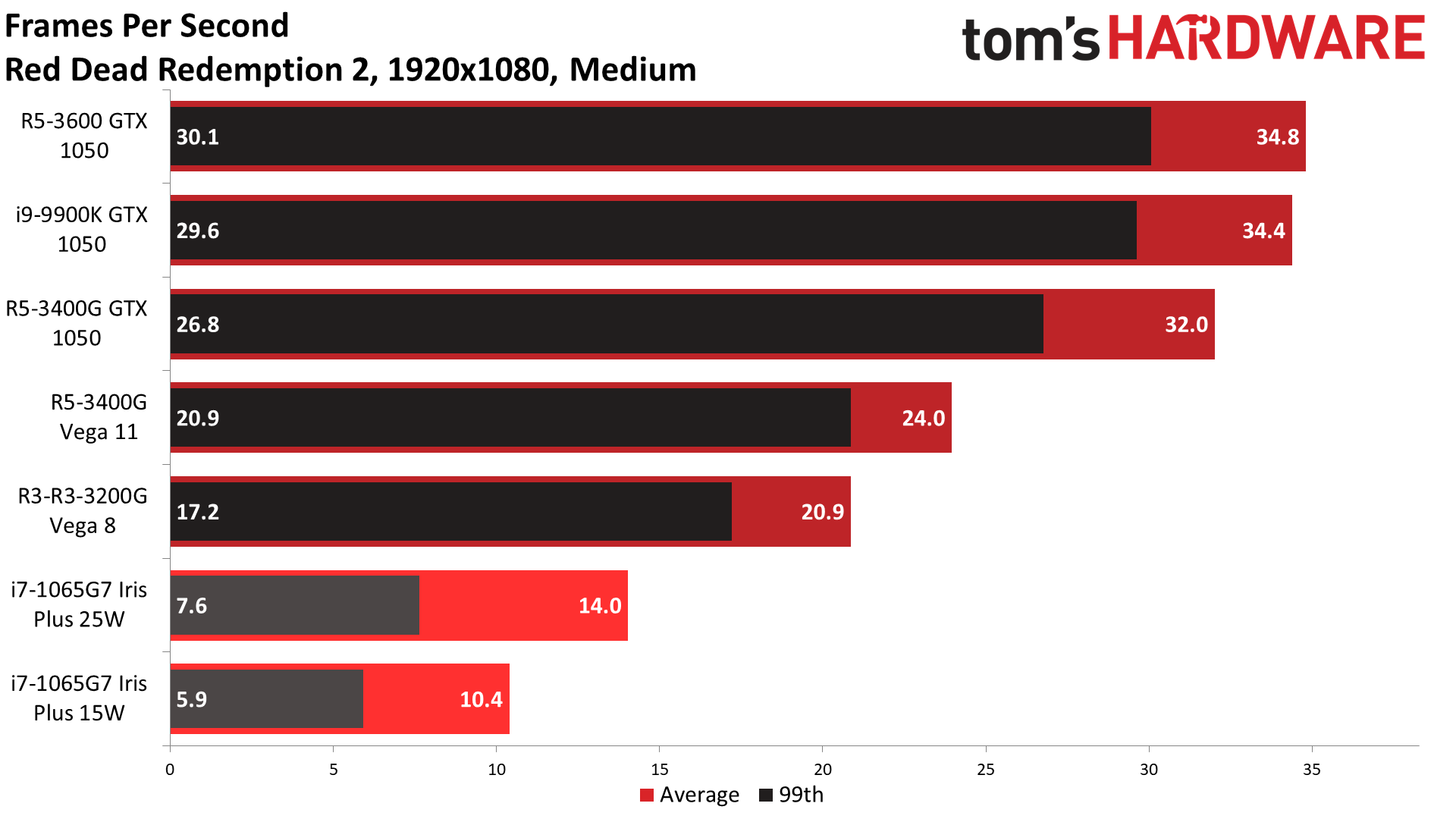

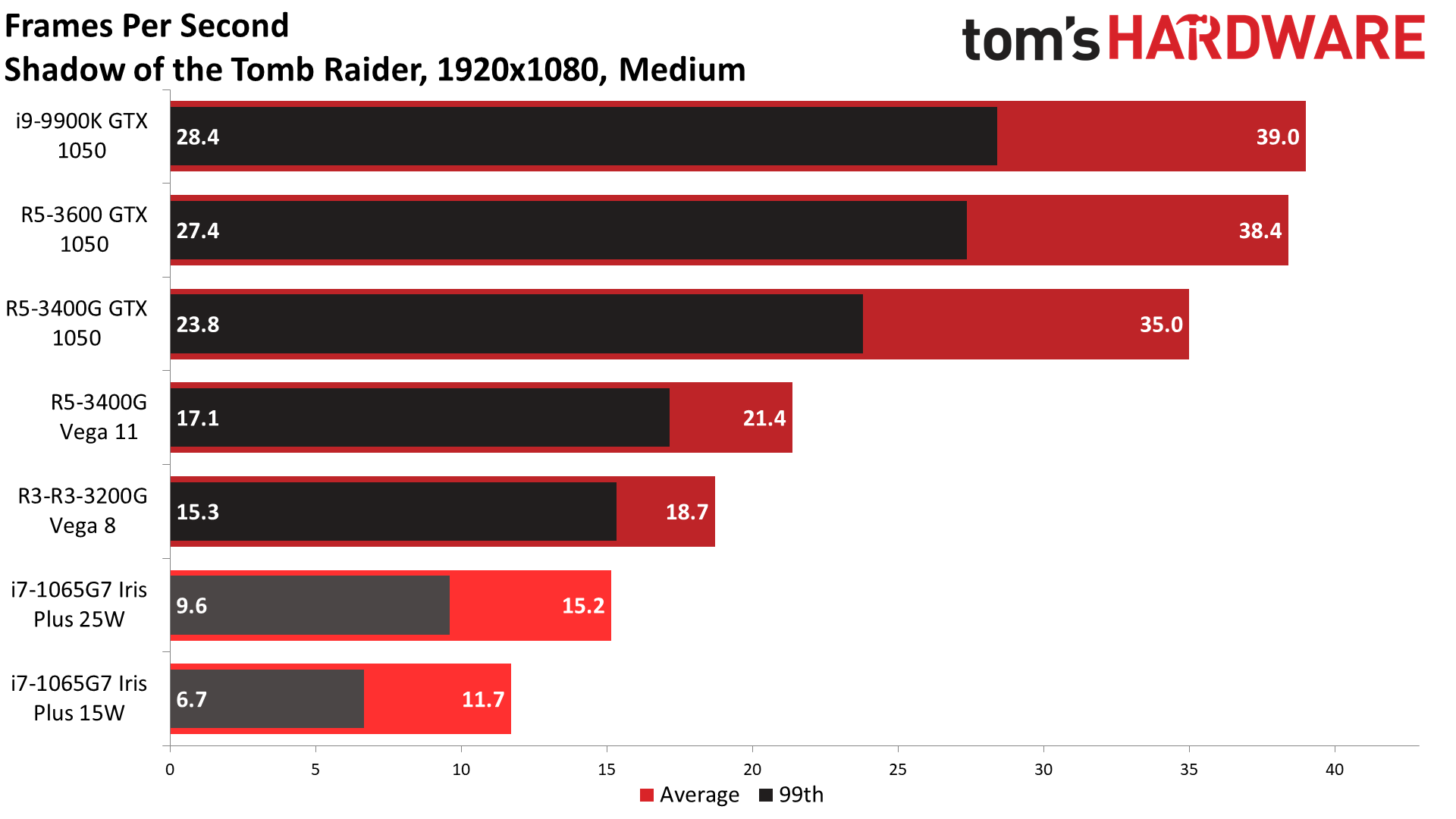

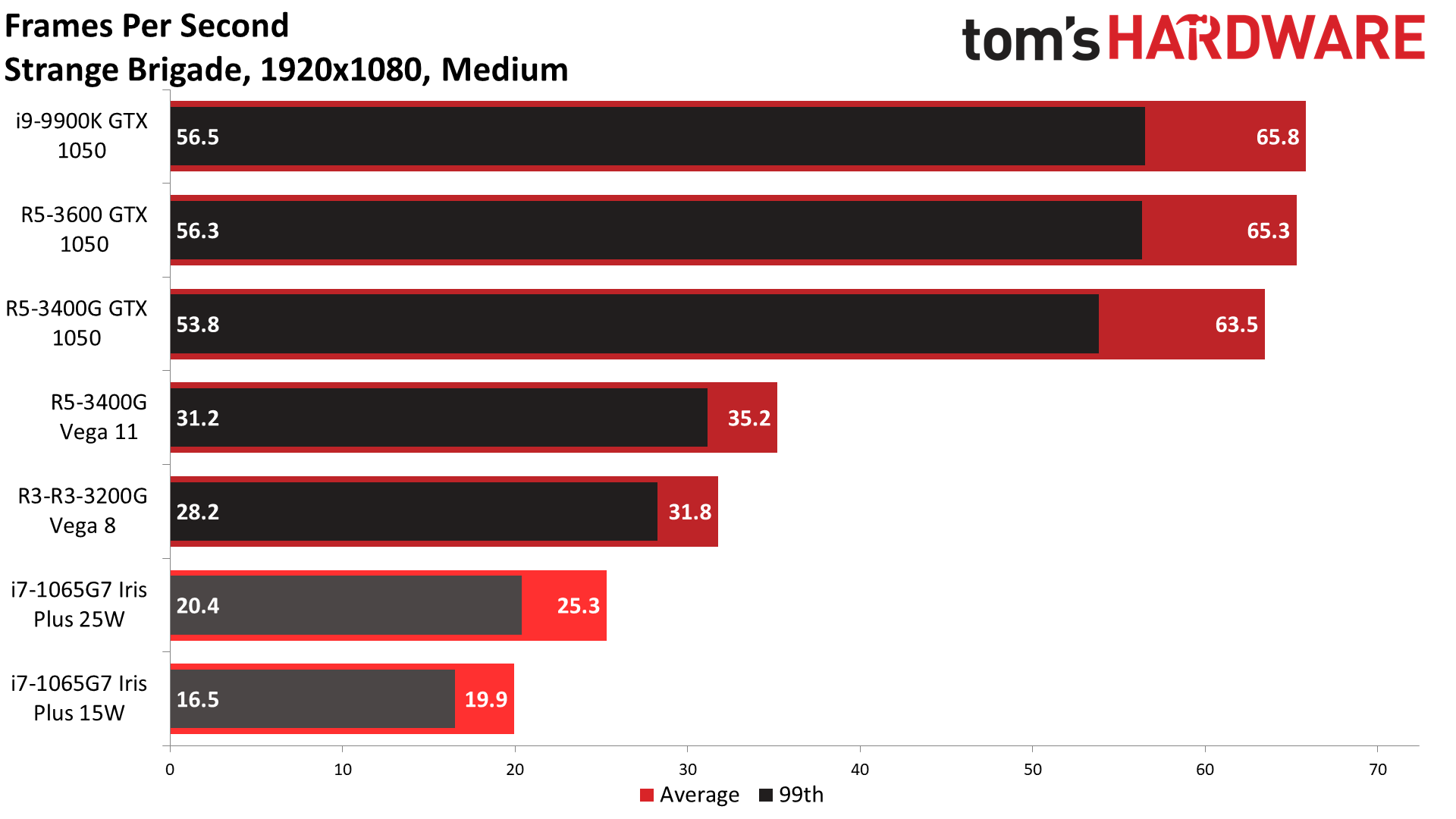

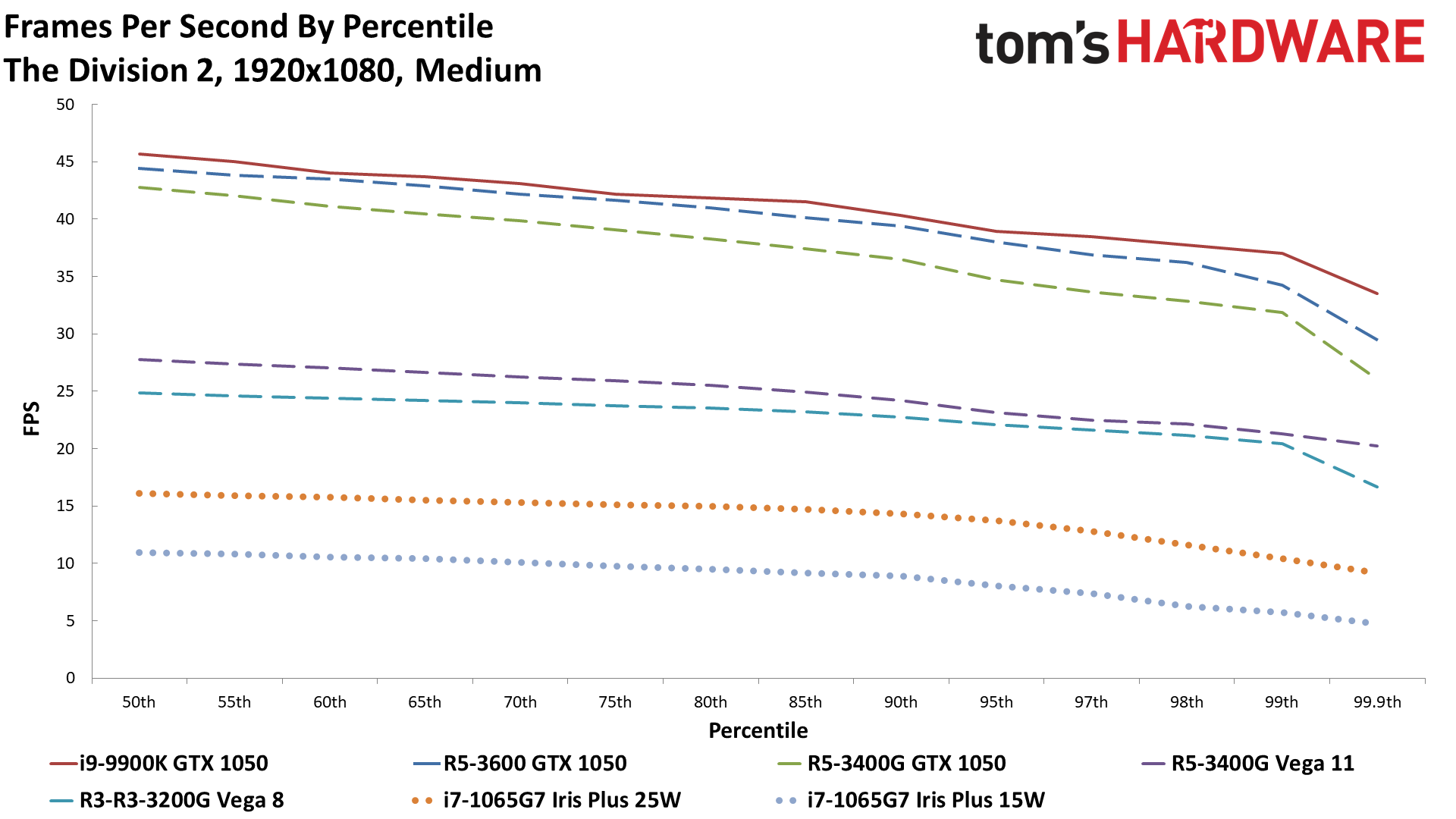

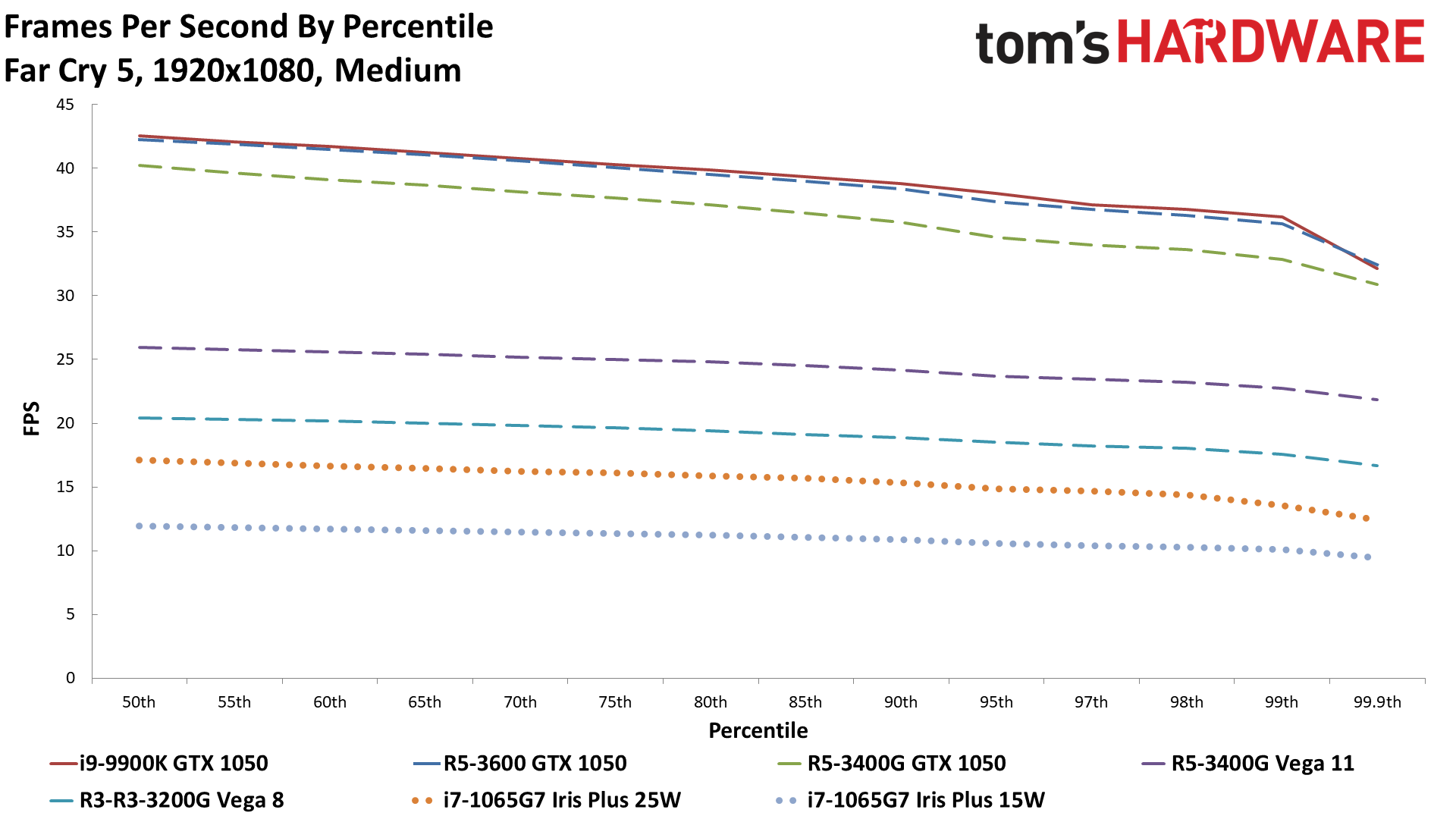

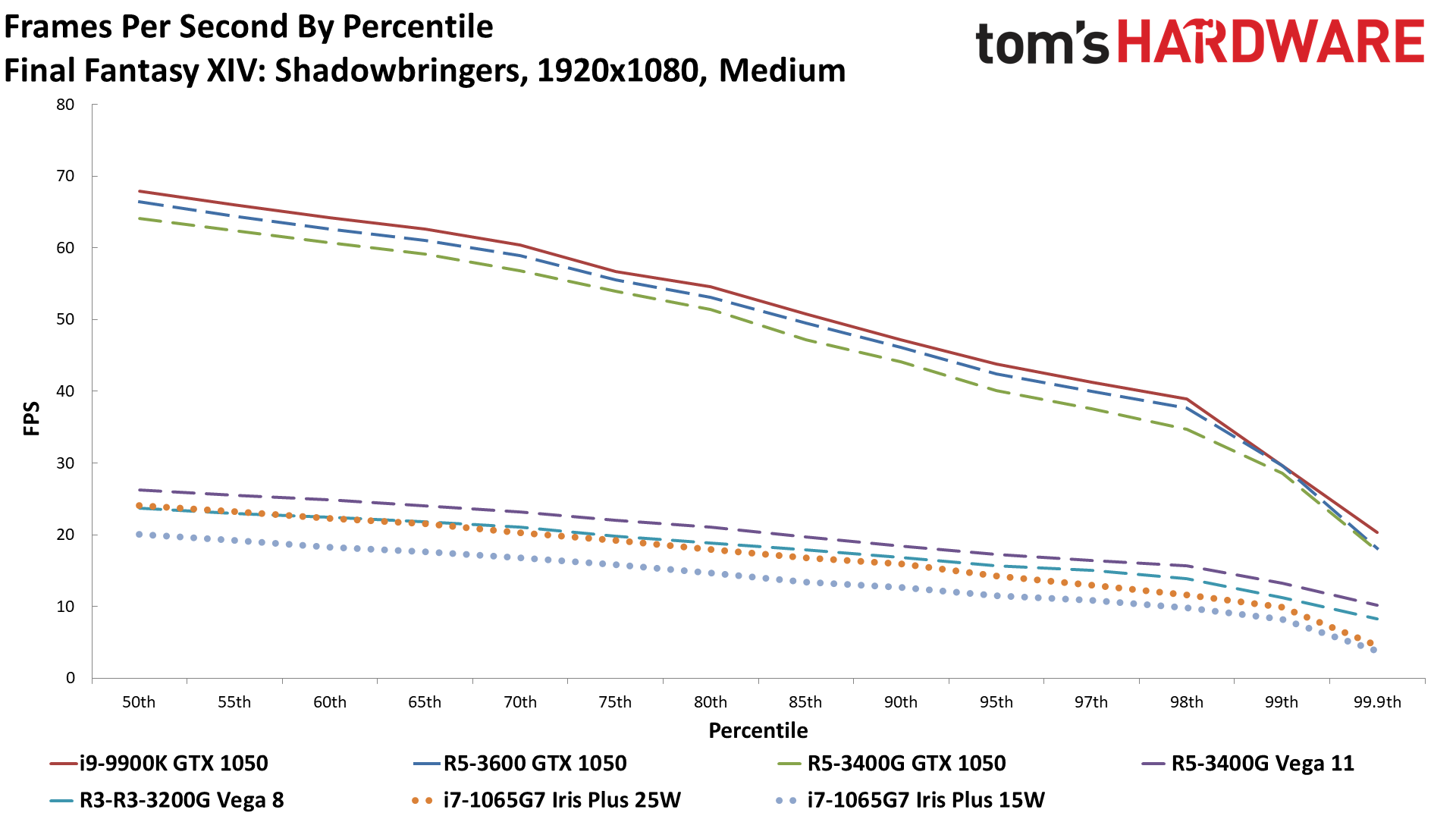

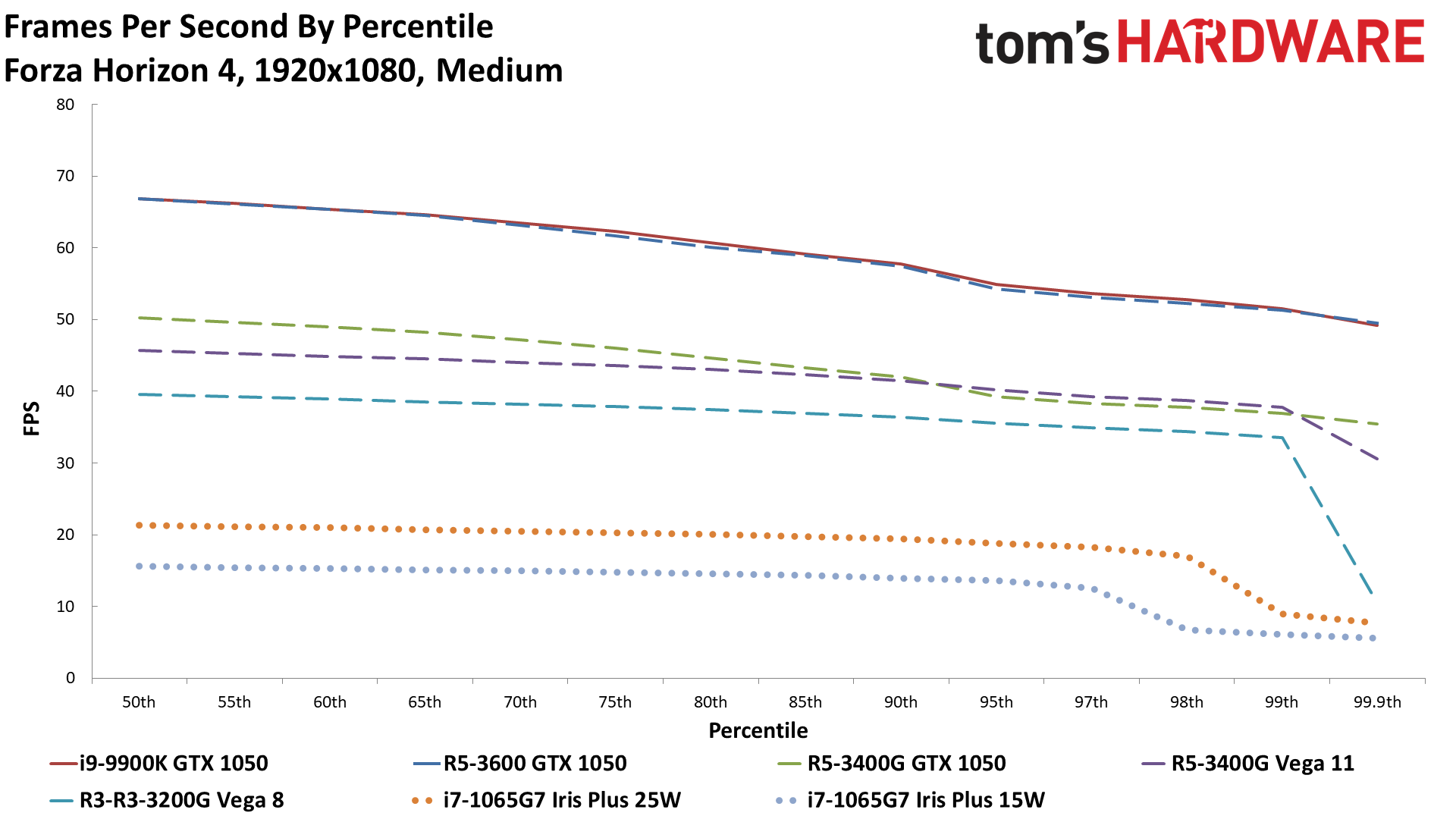

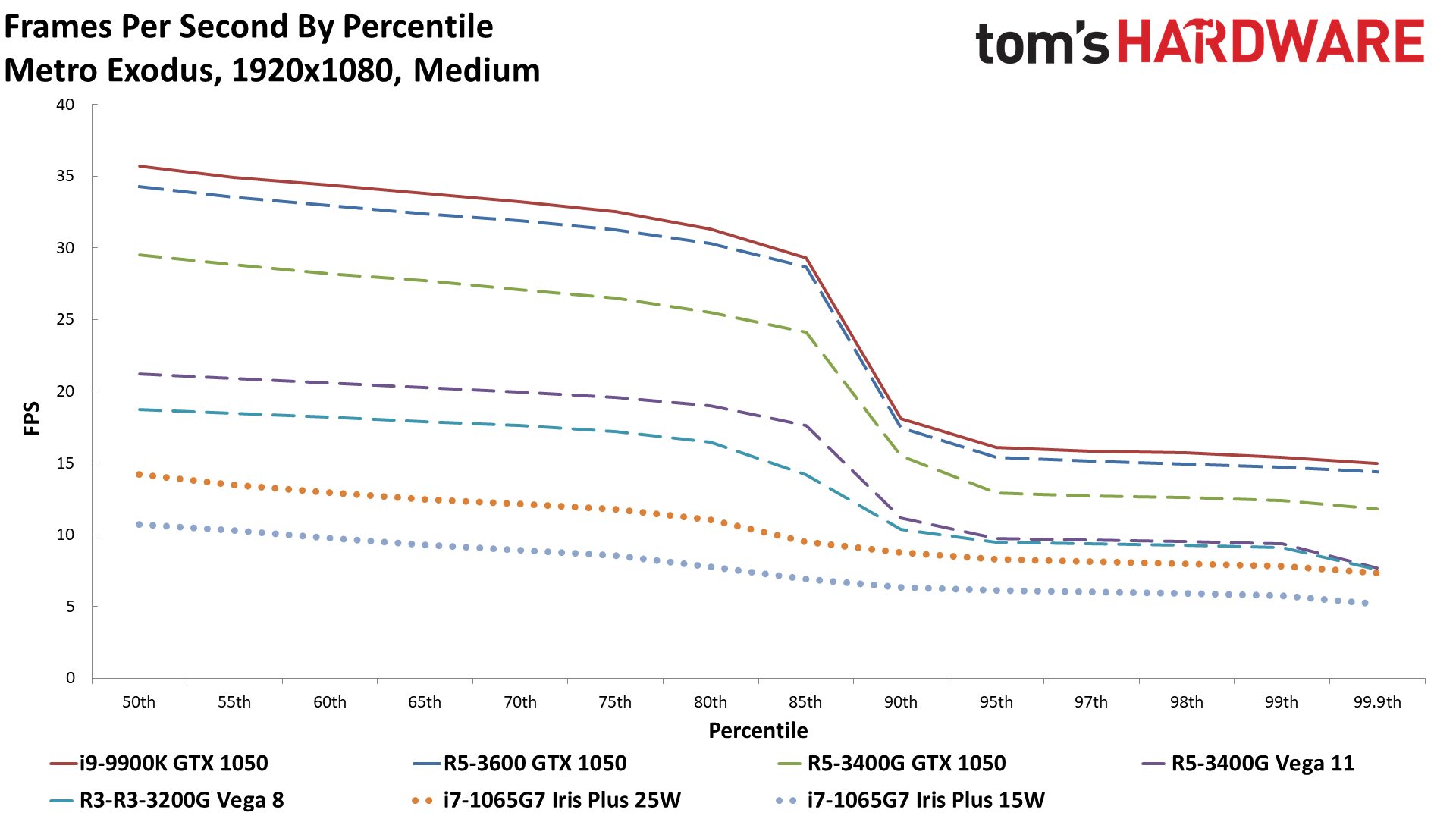

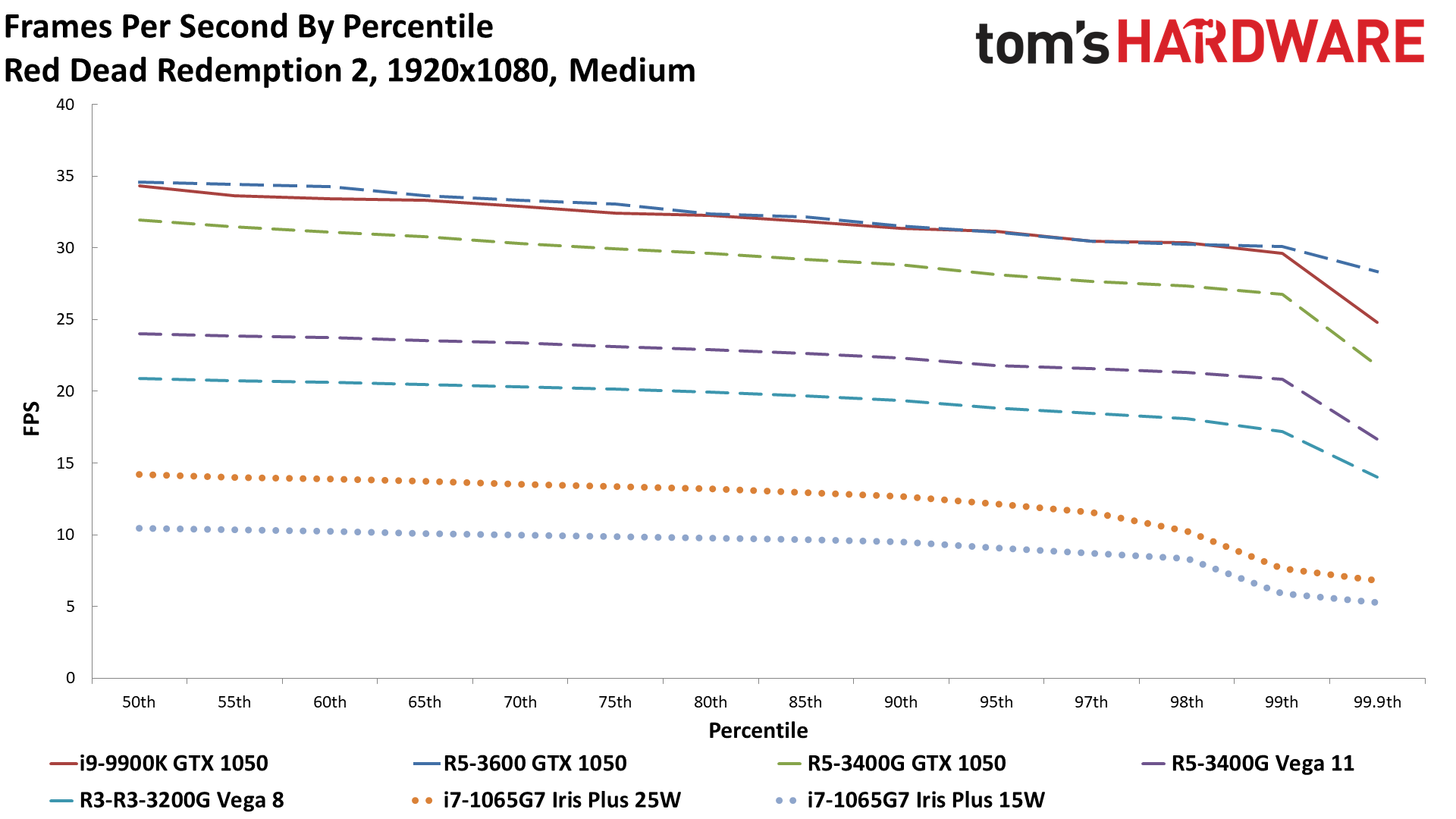

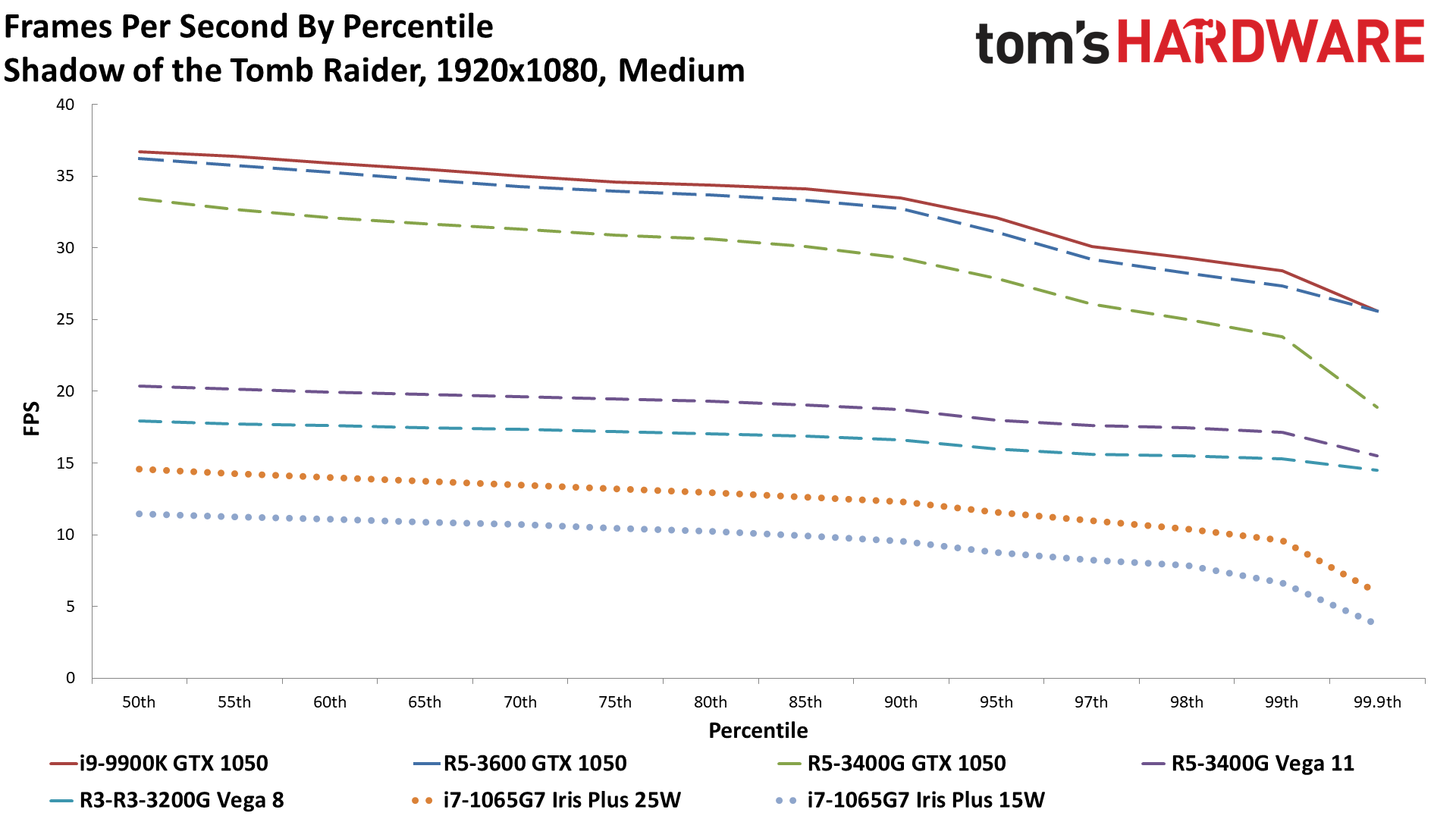

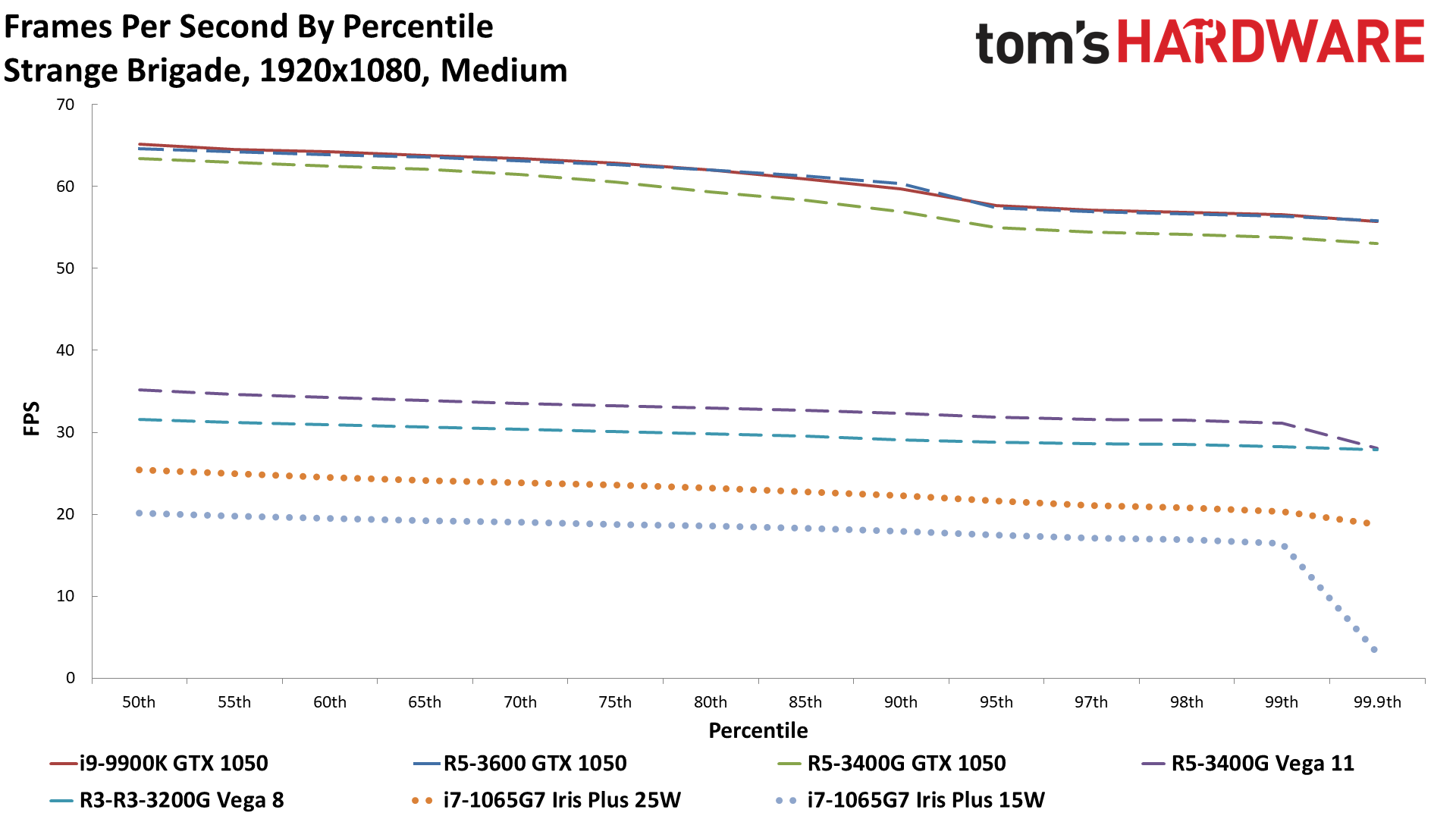

Performance takes a dive when we move up to 1080p medium. Where the Razer with i7-1065G7 averaged 40 fps at 720p and minimum settings, it dropped to just 18 fps at 1080p medium. Enforce a 15W TDP limit, and it putters along at a mere 13 fps. Not even the lightest games in our test suite can hit 30 fps at 15W, or even 25W. Again, that makes the Tiger Lake demonstration of 30+ fps in Battlefield V all the more impressive.
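A quick way to see how poorly performance scales with power is to compare fps per watt at the two TDP settings. The sketch below uses the 1080p medium averages from the text (13 fps at 15W, 18 fps at 25W). It treats the configured TDP as the power figure, which is an assumption: actual package power and wall power will differ. The function name is hypothetical.

```python
def tdp_scaling(fps_low, watts_low, fps_high, watts_high):
    """Compare two TDP settings: relative gains and fps per configured watt."""
    return {
        "perf_gain": fps_high / fps_low - 1,        # fractional fps increase
        "power_gain": watts_high / watts_low - 1,   # fractional TDP increase
        "fps_per_watt_low": fps_low / watts_low,
        "fps_per_watt_high": fps_high / watts_high,
    }


# 1080p medium averages for the Razer Blade Stealth 13 (i7-1065G7).
result = tdp_scaling(fps_low=13, watts_low=15, fps_high=18, watts_high=25)

print(f"Performance gain: {result['perf_gain']:.0%}")
print(f"Power gain:       {result['power_gain']:.0%}")
print(f"fps/W at 15W:     {result['fps_per_watt_low']:.2f}")
print(f"fps/W at 25W:     {result['fps_per_watt_high']:.2f}")
```

With these inputs, a 67% higher TDP limit buys about 38% more performance, so efficiency per configured watt drops at the higher setting.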

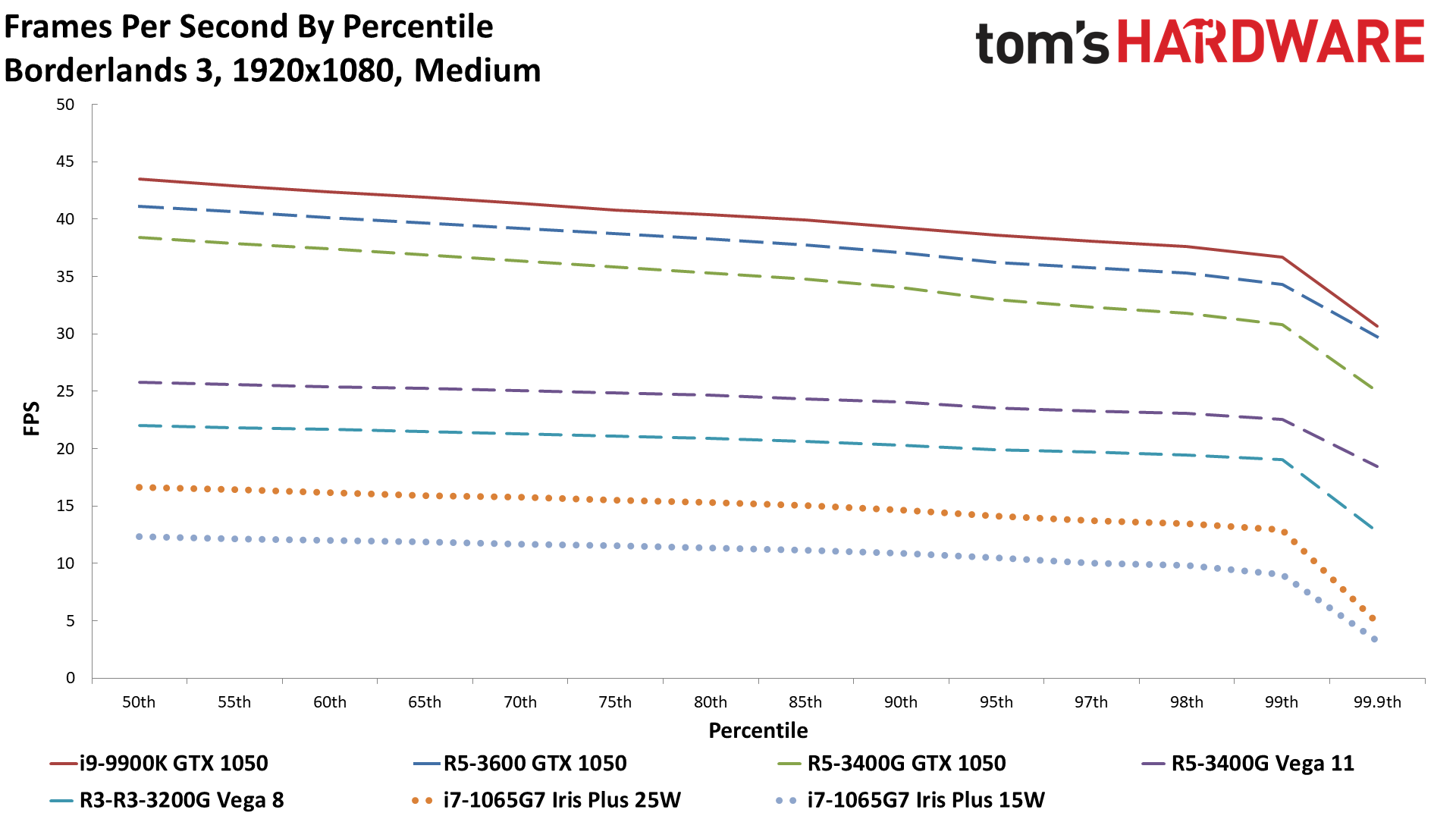

AMD's Vega 8 and Vega 11 also come up short at 1080p medium, though at least a couple of the games break 30 fps—Forza Horizon 4 and Strange Brigade. Half of the remaining games are in the mid-20s on Vega 11, which is sort of playable if you're forgiving of lower fps. AMD basically would need another 20% performance boost to Vega 11 to get these games above 30 fps, which desktop Renoir chips like the upcoming Ryzen 7 4700G might manage. Rumors have the 4700G running 8 CUs at up to 2.1 GHz, so it will be close at least.

Also of interest is the difference in performance between the GTX 1050 running with a top-tier Core i9-9900K CPU, compared to the same GPU with the Ryzen 5 3400G. Even though it's not realistic to use such a high-end CPU with a budget dedicated GPU, the i9 is our GPU testbed standard. There are potentially a few factors in play, like the x8 PCIe link, but I honestly wasn't expecting much of a difference at 1080p medium. It turns out that even at 1080p medium, there's still a 12% difference in overall performance, skewed somewhat by the far higher than expected results in Forza Horizon 4 (34%) and Metro Exodus (21%).

It was a big enough gap that I also tested the GTX 1050 with a Ryzen 5 3600, as that's the next lowest Ryzen CPU I had available. That's a healthy step up in CPU performance, and it narrows the gap to just 2% overall, which is more in line with what we'd expect. (At 720p min, the 9900K with a GTX 1050 still leads by 5% overall, if you're wondering.)

Getting back to the GFLOPS and theoretical performance stuff mentioned earlier, it's important to note that Intel's upcoming Tiger Lake CPUs are rumored to have 96 EUs running at up to 1.45GHz (on the highest-performance i7-1185G7 processor). That gives 768 ALU/shader 'cores' and 2227 GFLOPS of compute. Put another way, that's very nearly double the theoretical compute of the i7-1065G7 (1126 GFLOPS), and even the slightly lower i7-1165G7 can still push 1920 GFLOPS. That potentially puts Intel's integrated Xe Graphics on equal footing (not accounting for drivers) with Nvidia's MX350 and AMD's Renoir Vega 8 Graphics.
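For anyone who wants to check the math, the GFLOPS figures can be reproduced with a simple formula. This is a sketch under stated assumptions: 8 ALUs per EU (implied by 96 EUs giving 768 ALUs), 2 FLOPs per ALU per clock (the usual convention of counting a fused multiply-add as two operations), a 1.1GHz clock for the i7-1065G7's 64 EUs, and a 1.25GHz clock for the i7-1165G7 that we inferred from the 1920 GFLOPS figure rather than from a spec sheet.

```python
ALUS_PER_EU = 8           # 96 EUs -> 768 ALUs, per the rumored Tiger Lake specs
FLOPS_PER_ALU_CLOCK = 2   # assumption: one fused multiply-add counts as 2 FLOPs


def peak_gflops(eus, clock_ghz):
    """Theoretical peak FP32 throughput in GFLOPS."""
    return eus * ALUS_PER_EU * FLOPS_PER_ALU_CLOCK * clock_ghz


chips = {
    "i7-1065G7 (64 EUs, 1.10 GHz)": peak_gflops(64, 1.10),
    "i7-1165G7 (96 EUs, 1.25 GHz, clock inferred)": peak_gflops(96, 1.25),
    "i7-1185G7 (96 EUs, 1.45 GHz)": peak_gflops(96, 1.45),
}

for name, gflops in chips.items():
    print(f"{name}: {gflops:.0f} GFLOPS")

# Ratio of the top Tiger Lake part to the Ice Lake part.
ratio = peak_gflops(96, 1.45) / peak_gflops(64, 1.10)
print(f"Tiger Lake vs. Ice Lake: {ratio:.2f}x")
```

Keep in mind this is peak theoretical throughput only. Real game performance also depends on memory bandwidth, drivers, and how long the GPU can hold its maximum clock within the TDP.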

Closing Thoughts: Intel Ice Lake Graphics and Looking Forward to Tiger Lake

While we can't say that we're amazed with Gen11 Graphics, it's certainly the best GPU Intel has created so far. Out of the games tested, everything ran properly under every API. DX11 still tends to be the best option if you have a choice, but DX12- and Vulkan-only games still worked fine. That alone gives us a lot of hope for Xe Graphics. Still, not everything was on an equal footing to AMD and Nvidia GPUs.

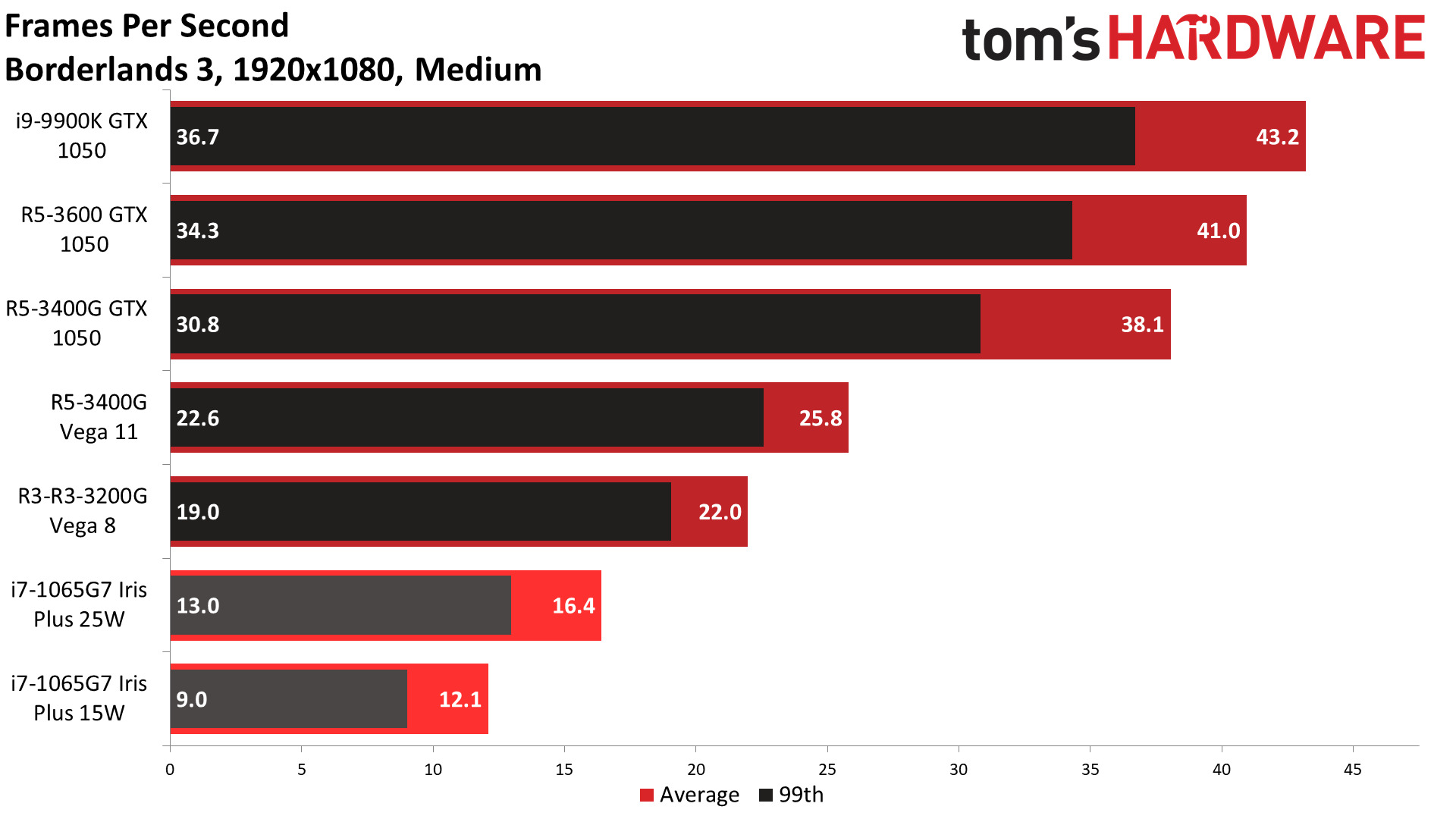

For example, one thing the benchmarks don't show is just how loooooong it takes to launch certain games the first time, specifically DirectX 12 or Vulkan games. Forza Horizon 4 is the worst example. It has to precompile shaders for your GPU architecture, which normally takes 30-60 seconds depending on your CPU and GPU with an AMD or Nvidia card. With Intel's Iris Plus, though, it took about ten minutes—yup, I timed it. Again, that's only the first time you run the game, but it's still about an order of magnitude slower than any other GPU I've tested. Borderlands 3 in DX12 mode also takes a while to compile shaders the first launch, though it wasn't quite as bad as FH4, and DX11 mode ran better for Intel regardless.

What's interesting is how the ratio of CPU to GPU in Intel's chips has been changing over the years. With Ice Lake, the GPU takes up slightly more space than the CPU (not counting the System Agent portion of the die for either one). Tiger Lake's Xe Graphics is supposedly quite similar to Gen11 Graphics, only with 50% more GPU cores. The Willow Cove CPU architecture is different as well, but for mobile solutions, it looks like Tiger Lake could use more transistors and die area on the GPU than the CPU. However, looking at some Tiger Lake die shots, Xe Graphics with 96 EUs appears to be of similar size to Gen11 Graphics with 64 EUs, only with substantially higher performance.

Dramatically improved GPU performance is a big change for Intel, a traditionally CPU-focused company. Based on what we've seen of Battlefield V, Tiger Lake's GPU looks to be about 80% faster than the fastest Ice Lake GPU—provided both aren't limited by a 15W TDP, naturally. If that relative increase holds across other games, the upcoming integrated solutions could be pretty decent. And by that, we mean 'original Xbox One GPU performance,' but that's still a big improvement over UHD 630.
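Since both Battlefield V results are fps ranges rather than single numbers, the uplift is really a range too. The sketch below bounds it using the figures from our testing (16-18 fps at 25W, 12-13 fps at 15W) and Intel's demo (30-33 fps). Comparing against the 25W result assumes the Tiger Lake demo chip ran at a similar power level, which we don't actually know, and using the midpoint of each range as the typical value is our own simplification.

```python
def uplift_range(new_range, old_range):
    """Return (worst, midpoint, best) fractional uplift between two fps ranges."""
    new_lo, new_hi = new_range
    old_lo, old_hi = old_range
    worst = new_lo / old_hi - 1                       # slowest new vs. fastest old
    mid = (new_lo + new_hi) / (old_lo + old_hi) - 1   # midpoint vs. midpoint
    best = new_hi / old_lo - 1                        # fastest new vs. slowest old
    return worst, mid, best


TIGER_LAKE = (30, 33)     # Intel's demo, TDP unknown
ICE_LAKE_25W = (16, 18)   # Razer Blade Stealth 13, 25W setting
ICE_LAKE_15W = (12, 13)   # Razer Blade Stealth 13, 15W setting

for label, old in (("25W", ICE_LAKE_25W), ("15W", ICE_LAKE_15W)):
    worst, mid, best = uplift_range(TIGER_LAKE, old)
    print(f"vs. Ice Lake {label}: {worst:.0%} to {best:.0%} (midpoint {mid:.0%})")
```

Against the 25W result, the uplift works out to somewhere between 67% and 106%, with a midpoint of about 85%, which is consistent with the "about 80% faster" estimate.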

Dedicated GPUs will still reign as the fastest gaming solution, of course, but moving forward, 'budget' dedicated GPUs are going to have to step up their game. Generally speaking, we want to see at least double the integrated GPU performance from a discrete graphics card, whether it's in a laptop or a desktop. With Xe Graphics and Renoir APUs set to pass the 2 TFLOPS mark, that means GTX 1660 and RX 5500 are basically the minimum we want from a dedicated graphics card. We'll probably still see lesser solutions, at least for a little while. Still, it's hard to imagine a laptop going to the trouble of adding a second graphics card unless it has serious benefits, considering the higher power use and increased complexity of the overall design.

Intel isn't stopping with integrated Xe Graphics either. It will have dedicated Xe HP graphics cards, delivering potentially 5-8 times the performance of the integrated solution based on rumored specs. It's also planning Xe HPC solutions for the data center that could potentially deliver another 8X improvement over Xe HP (at a massive price, but let's not worry about that).

Right now, Ice Lake laptops feel constrained by the 4-core CPU design and very limited TDP. We're not sure how far Tiger Lake will push things, but the CPU side is probably okay if it gets more thermal headroom. Combine a 28W Tiger Lake processor with Xe Graphics, and even modest laptops could become serviceable gaming PCs—no dedicated GPU required. The next-gen Razer Blade Stealth will certainly be interesting.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

nofanneeded

It really puzzles me that intel never made the iris Graphics a standard in the desktop market CPU's ....

DavidC1

"Sure, some of that is TDP, but Vega 11 (3400G) stretches the lead to 67%. Clearly it's not just a memory bandwidth bottleneck holding Iris Plus back, especially since the Razer laptop has more bandwidth than the Ryzen APUs."

Pretty much ALL is due to TDP. The 1065G7's Iris Plus graphics is just as fast as mobile Vega in Picasso.

"The Willow Cove CPU architecture is different as well, but for mobile solutions, it looks like Tiger Lake could use about 70% more transistors and die area on the GPU than the CPU."

Incorrect. The Xe iGPU in Tiger Lake is barely larger than one in Icelake. The CPU gets quite a bit larger though with the caches. All in all in Tigerlake the 4 CPU cores and its caches are roughly equal to the size of the 96EU Xe GPU.

TCA_ChinChin

DavidC1 said: "Pretty much ALL is due to TDP. The 1065G7's Iris Plus graphics is just as fast as mobile Vega in Picasso."

I'm not really sure its completely due to TDP. Iris Plus is nice for decent graphics at a lower power usage, but I'm pretty sure its efficiency optimized, not built for higher TDP. Even if you pushed the same power to the 1065G7's graphics as what is available to the Vega 11 graphics, it probably wouldn't scale that much higher. I'm sure Intel could have released a higher TDP optimized Ice Lake with this gen, but decided against it. I'm also not sure where you are getting that Iris Plus graphics is just as fast a mobile Vega when Tom's tests here clearly show the contrary.

JarredWaltonGPU

TCA_ChinChin said: "I'm not really sure its completely due to TDP. Iris Plus is nice for decent graphics at a lower power usage, but I'm pretty sure its efficiency optimized, not built for higher TDP. Even if you pushed the same power to the 1065G7's graphics as what is available to the Vega 11 graphics, it probably wouldn't scale that much higher. I'm sure Intel could have released a higher TDP optimized Ice Lake with this gen, but decided against it. I'm also not sure where you are getting that Iris Plus graphics is just as fast a mobile Vega when Tom's tests here clearly show the contrary."

I didn't test mobile Vega chips, though. A 35W Ryzen 7 3750H with Vega 10 Graphics isn't going to be as fast as a 65W Ryzen 5 3400G with Vega 11 Graphics. I don't think it would be that much slower, however -- probably 15-20%.

But you're definitely correct that Ice Lake and Gen11 wouldn't continue to increase in performance with higher TDPs. Going from 15W to 25W is a 67% increase in power -- and at the outlet I saw power use go from ~30W to ~45W -- and performance improved by 35% on average. If the TDP were raised to 65W, unless the GPU clocks could scale much higher than 1100 MHz, performance and power would max out at some point well below the 65W limit.

As for the relative sizes of GPU and CPU ... I need to correct that. I've looked at some die shots now, and it does appear that Xe Graphics is far more compact than Gen11. Interesting. It's about the same size for the CPU cores and GPU cores in TGL.

TCA_ChinChin

JarredWaltonGPU said: "I didn't test mobile Vega chips, though. A 35W Ryzen 7 3750H with Vega 10 Graphics isn't going to be as fast as a 65W Ryzen 5 3400G with Vega 11 Graphics. I don't think it would be that much slower, however -- probably 15-20%."

Oops. I don't know why I jumped to associated Vega 11 with the mobile Vega chips. Just thinking that mobile vega especially now in the 4000 series mobile chips are just as fast if not faster than the 3000 series mobile chips.

DavidC1

TCA_ChinChin said: "I'm not really sure its completely due to TDP. Iris Plus is nice for decent graphics at a lower power usage, but I'm pretty sure its efficiency optimized, not built for higher TDP."

Not talking about the Iris scaling, but it'll likely perform better. Even the UHD 630 is faster than the mobile UHD 620.

I was talking about Vega.

https://www.techspot.com/review/2003-amd-ryzen-4000/

You can see that the 3750H is quite a bit faster than the 3700U. When you reach playable frame rates, not only the GPU matters, but the faster CPU starts to come into play. Also with the higher TDP headroom it avoids scenarios where some games perform poorly due to either the CPU or the GPU hogging too much of the power.

Thanks @JarredWaltonGPU

bit_user

nofanneeded said: "It really puzzles me that intel never made the iris Graphics a standard in the desktop market CPU's ...."

The Iris chips are pretty expensive, and anyone wanting good-performance graphics in a PC can easily do better by using a non-Iris CPU and popping in a $100 dGPU. Same or less $$$.

Where Iris makes a lot of sense is in thin & light laptops.

BTW, Intel did sell some NUCs with Iris graphics. I've seen a Broadwell i7 NUC with 48 EUs.

bit_user

JarredWaltonGPU said: "I've looked at some die shots now, and it does appear that Xe Graphics is far more compact than Gen11. Interesting."

It is interesting. I know Gen12 dropped register scoreboarding, but that shouldn't save much die area. I know they also cut back on fp64, but I thought Gen11 already had that.

JarredWaltonGPU said: "It's about the same size for the CPU cores and GPU cores in TGL."

The CPU cores in TGL are a new uArch, and therefore probably bigger than ICL. I think they also have some new AVX-512 instructions, for deep learning.

Maybe it's more a case of TGL cores getting bigger - not Gen12 EUs getting smaller.

JarredWaltonGPU

bit_user said: "It is interesting. I know Gen12 dropped register scoreboarding, but that shouldn't save much die area. I know they also cut back on fp64, but I thought Gen11 already had that. The CPU cores in TGL are a new uArch, and therefore probably bigger than ICL. I think they also have some new AVX-512 instructions, for deep learning. Maybe it's more a case of TGL cores getting bigger - not Gen12 EUs getting smaller."

Yeah, I expected the Willow Cove cores to be larger than the Sunny Cove cores. But with 50% more EUs, I really expected Xe in TGL to be a large chunk of the die area. On ICL, the GPU is clearly larger than the CPU section. It certainly makes me wonder just how much wasted 'junk' was present in the Gen11 (and earlier) graphics that ended up getting cut / optimized for Xe.

nofanneeded

bit_user said: "The Iris chips are pretty expensive, and anyone wanting good-performance graphics in a PC can easily do better by using a non-Iris CPU and popping in a $100 dGPU. Same or less $$$. Where Iris makes a lot of sense is in thin & light laptops. BTW, Intel did sell some NUCs with Iris graphics. I've seen a Broadwell i7 NUC with 48 EUs."

First, Iris is not as expensive as you make it look. It is nowhere near the $100 dGPU you mentioned; it is roughly a $30 increase in the CPU price.

Actually, onboard ARM GPUs are becoming faster than stupid Intel non-Iris graphics by DOUBLE, and they are much cheaper than any Intel offerings.