AMD vs Intel Integrated Graphics: Can't We Go Any Faster?

Integrated graphics tested: ubiquitous and oh-so-slow!

Who makes the best integrated graphics solution, AMD or Intel? The answer is simple right now if you check our GPU benchmarks hierarchy: AMD wins, easily, at least on the desktop. Current Ryzen APUs with Vega 11 Graphics are about 2.5 times faster than Intel's UHD Graphics 630. Of course, even the fastest integrated solutions pale in comparison to a dedicated GPU, and they're not on our list of the best graphics cards for a good reason. However, a lot of changes are coming this year, sooner rather than later.

Update: We've added Intel's Gen11 Graphics using an Ice Lake Core i7-1065G7 processor. Thanks to Razer loaning us a Razer Blade Stealth 13, and HP chiming in with an Envy 17t, we were able to test Intel Gen11 Graphics with both a 15W (default) and 25W (Razer) TDP. We've also added GTX 1050 results running on a Ryzen 5 3400G, which limits performance a bit at 720p and minimum quality. We have not fully updated the text, as we'll have a separate article looking specifically at Gen11 Graphics performance.

Judging by all the leaks, AMD’s Renoir desktop APUs should show up very soon. Meanwhile, AMD's RDNA 2 architecture is coming (and should eventually end up in an APU), and Intel's Tiger Lake with Xe Graphics should also arrive this summer. Unfortunately, as the saying goes, the more things change…

To give us a clear picture of where we are and where we've come from, specifically with regard to integrated graphics solutions, we've run updated benchmarks using our standard GPU test suite, with a few modifications. We're using the same nine games (Borderlands 3, The Division 2, Far Cry 5, Final Fantasy XIV, Forza Horizon 4, Metro Exodus, Red Dead Redemption 2, Shadow of the Tomb Raider, and Strange Brigade), but we're running at 720p (no resolution scaling) and minimum fidelity settings.

Some of these games are still relatively demanding, even at 720p, but all have been available for at least six months, which is plenty of time to fix any driver issues—assuming they could be fixed. We intend to see if the games work at all, and what sort of performance you can expect. The good news: Every game was able to run! Or, at least, they ran on current GPUs. Spoiler alert: Intel's HD 4600 and older integrated graphics don't have DX12 or Vulkan drivers, which eliminated several games from our list.

We benchmarked Intel's current desktop GPU (UHD Graphics 630) along with an older i7-4770K (HD Graphics 4600) and compared them with AMD's current competing desktop APUs (Vega 8 and Vega 11). For this update, we also have results from Ice Lake's Gen11 Graphics, but that's only for mobile solutions, so it's in a different category. We're still working to get a Renoir processor (AMD Ryzen 7 4800U) in for comparison, along with desktop Renoir when that launches.

We've also included performance from a budget dedicated GPU, the GTX 1050. The GTX 1050 is by no means one of the fastest GPUs right now, though you can try picking up a used model off eBay for around $100. (Note: don't get the 'fake' China models, as they likely aren't using an actual GTX 1050 chip!) And before you ask, no, we didn't have a previous-gen AMD A10 (or earlier) APU for comparison.

Test Hardware

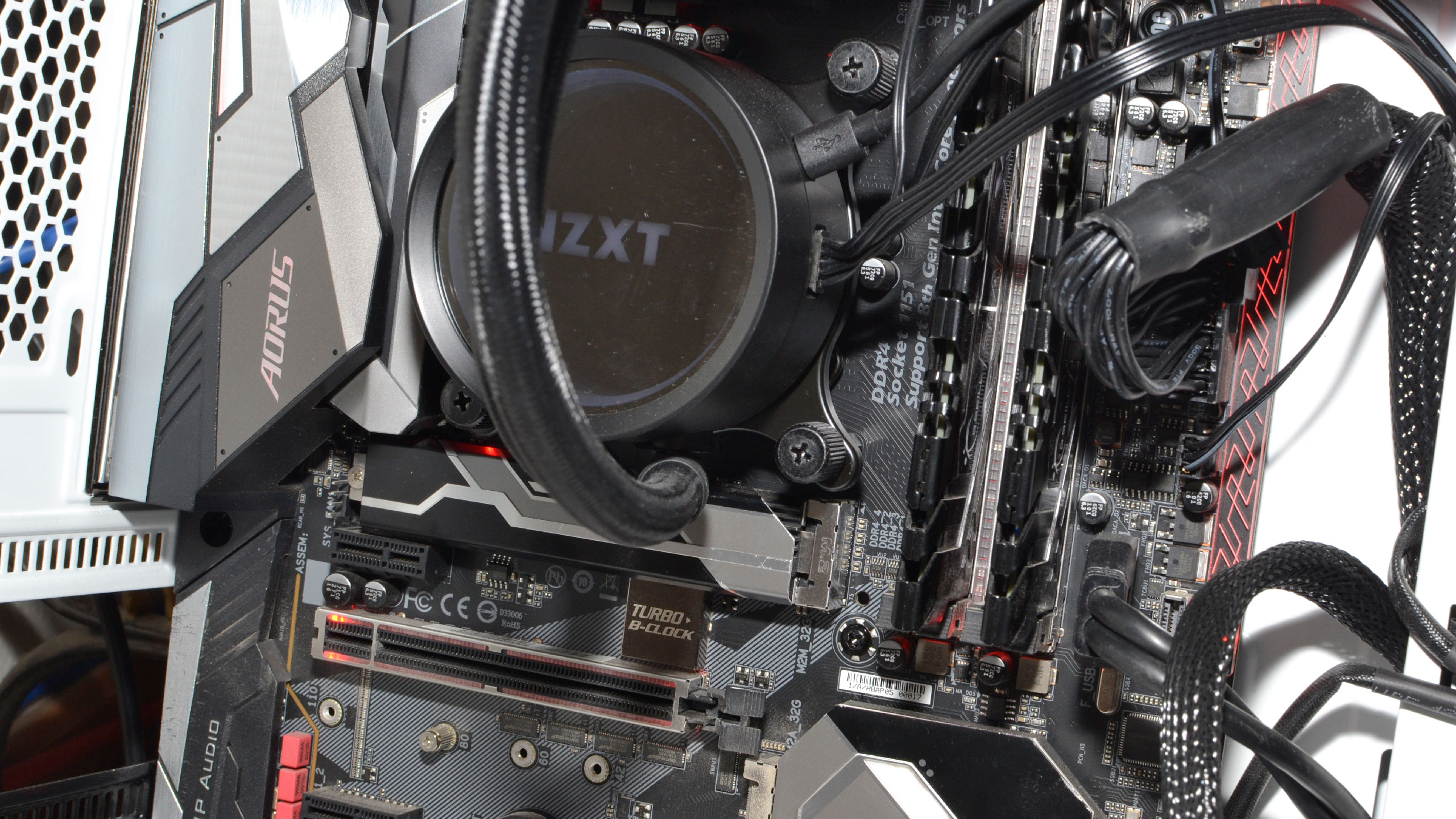

Because we're looking at multiple different integrated graphics solutions, our test hardware needed three different platforms. We've used generally high-end parts, including better-than-average memory and storage, but the systems are not (and couldn't be) identical in all respects. One particular issue is that we needed motherboards with video output support on the rear IO panel, which restricted options. Here are the testbeds and specs.

| Component | Intel UHD Graphics 630 | AMD Vega 11/8 Graphics | Intel HD Graphics 4600 | Intel Iris Plus Graphics (25W) | Intel Iris Plus Graphics (15W) |
|---|---|---|---|---|---|
| CPU | Core i7-9700K | Ryzen 5 3400G, Ryzen 5 2400G, Ryzen 3 3200G | Core i7-4770K | Core i7-1065G7 | Core i7-1065G7 |
| Motherboard / System | Gigabyte Z370 Aorus Gaming 7 | MSI MPG X570 Gaming Edge WiFi | Gigabyte Z97X-SOC Force | Razer Blade Stealth 13 | HP Envy 17t |
| Memory | 2x16GB Corsair DDR4-3200 CL16 | 2x8GB G.Skill DDR4-3200 CL14 | 2x8GB G.Skill DDR3-1600 CL9 | 2x8GB LPDDR4X-3733 | DDR4-3200 |
| Storage | XPG 8200 Pro 2TB M.2 | Corsair MP600 2TB M.2 | Samsung 860 Pro 1TB | 256GB NVMe SSD | 512GB NVMe SSD |

We equipped the AMD Ryzen platform with 2x8GB DDR4-3200 CL14 memory because our normal 2x16GB CL16 kit proved troublesome for some reason. It shouldn't matter much, as none of the tests benefit from large amounts of RAM (preferring throughput instead), and the tighter timings may even give a very slight boost to performance. The older HD 4600 setup used the only compatible motherboard I still have around, and the only DDR3 kit as well—but both were previously high-end options.

The Razer and HP laptops both use Intel's 10th Gen Core i7-1065G7, whose Iris Plus (Gen11) Graphics is a step up from Intel's previous GPUs, but their memory differs: the Razer runs LPDDR4X-3733, while the HP uses DDR4-3200. That gives the Razer (and Gen11 Graphics) a slight advantage that may account for some of the difference in performance, though based on our testing, the higher 25W TDP when using the performance profile appears to be the biggest factor.

The storage also varied based on what was available (and I didn't want to reuse the same drive, as that would entail wiping it between system tests). It shouldn’t be a factor for these gaming and graphics tests, though testing large games off the Razer's 256GB (minus the OS) storage was a pain in the rear. How can 256GB be the baseline on a $1,700 gaming laptop in 2020?

Performance of AMD vs Intel Integrated Graphics

Let's cut straight to the heart of the matter. If you want to run modern games at modest settings like 1080p medium, none of these integrated graphics solutions will suffice, at least not across all games. At 1080p and medium settings, AMD's Vega 11 in the 3400G averaged 27 fps across the nine games, with only two games (Forza Horizon 4 and Strange Brigade) breaking the 30 fps mark, and Metro Exodus failed to hit 20 fps.

1080p medium actually looks quite decent, not far off what you'd get from an Xbox One or PlayStation 4 (though not the newer One X or PS4 Pro). Dropping the resolution or tuning the quality should make most other games playable, but we opted to do both, running at 1280x720 and minimum quality settings. We've also tested 3DMark Fire Strike and Time Spy for synthetic graphics performance.

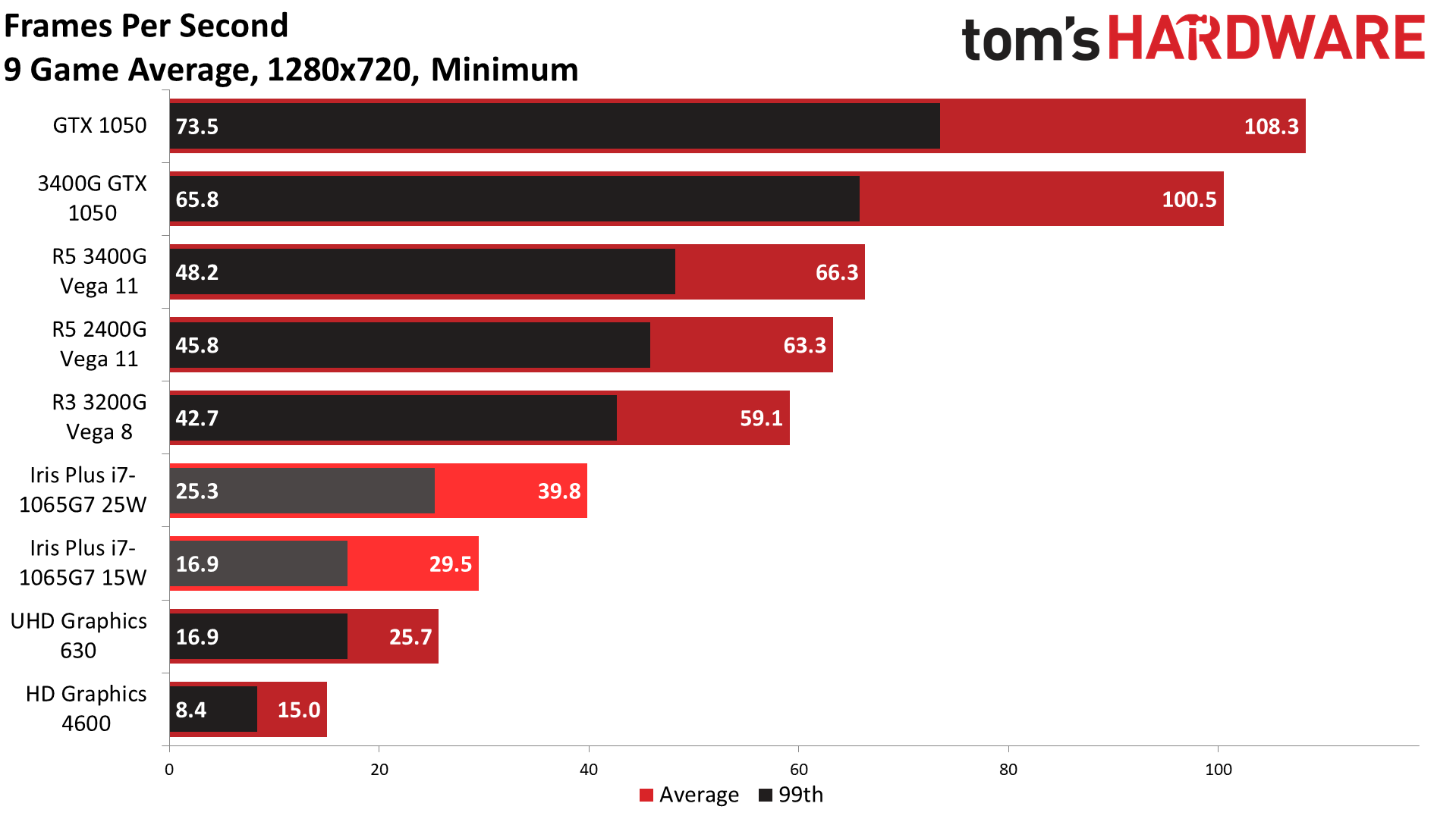

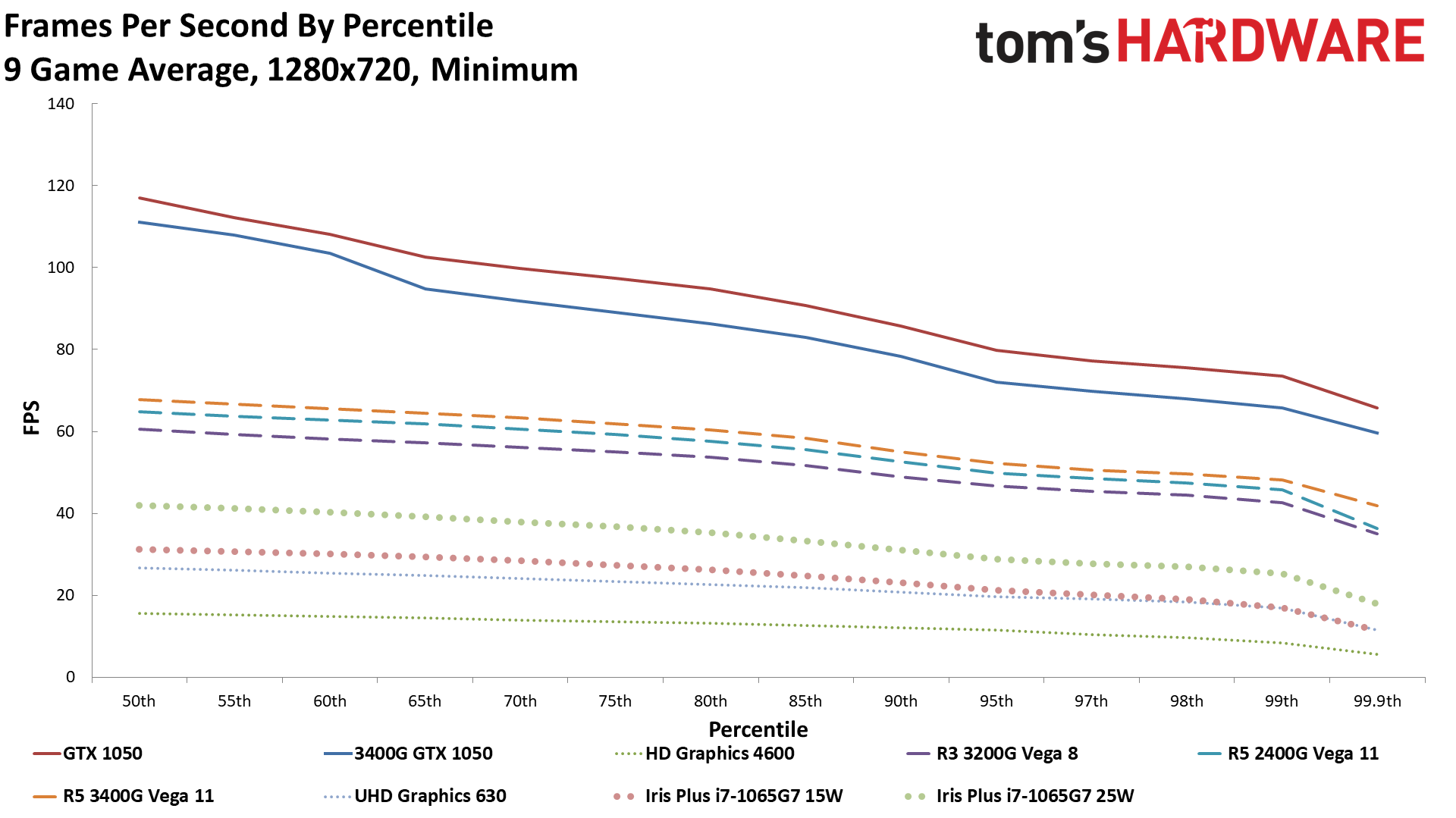

Starting with the big-picture overall view of gaming performance, you might be surprised to see the GTX 1050 basically doubling the performance of the fastest integrated graphics solution. Yup, it's a pretty big gap, helped by the faster desktop CPU as well (you can see that performance drops a bit with a Ryzen 5 3400G). Interestingly, a few games like Red Dead Redemption 2 close the gap as the shared system RAM helps. GTX 1050 has over double the bandwidth (112 GBps dedicated vs. 51.2 GBps shared), but only for 2GB of memory. Still, even the slowest dedicated GPU we might consider recommending ends up being far better than integrated graphics.
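The bandwidth gap comes from a simple calculation: peak bandwidth is the transfer rate multiplied by the bus width in bytes. Here is a minimal Python sketch of that arithmetic, assuming the GTX 1050's GDDR5 runs at 7 Gbps (7,000 MT/s) on a 128-bit bus and that dual-channel DDR4 means two 64-bit channels. The helper name `peak_bandwidth_gbps` is our own, and the results are theoretical peaks that ignore real-world efficiency.

```python
def peak_bandwidth_gbps(transfer_rate_mtps: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GBps: megatransfers/s x bytes per transfer / 1000."""
    return transfer_rate_mtps * (bus_width_bits / 8) / 1000


# Assumption: GTX 1050 uses 7 Gbps GDDR5 on a 128-bit bus, all of it dedicated to the GPU.
gtx_1050 = peak_bandwidth_gbps(7000, 128)

# Dual-channel DDR4-3200: two 64-bit channels (128 bits total), shared by the CPU and iGPU.
ddr4_3200_dual = peak_bandwidth_gbps(3200, 128)

print(f"GTX 1050 (dedicated):            {gtx_1050:.1f} GBps")
print(f"DDR4-3200 dual-channel (shared): {ddr4_3200_dual:.1f} GBps")
print(f"Advantage: {gtx_1050 / ddr4_3200_dual:.2f}x")
```

In practice the integrated GPU never sees the full 51.2 GBps, because the CPU draws on the same memory.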

And that's only when we're looking at AMD's current offerings. The chasm between Vega 8 and Intel's UHD Graphics 630 is even larger than the gap between Vega 11 and the GTX 1050. AMD's Ryzen 3 3200G with Vega 8 Graphics is 2.3 times faster than Intel's Core i7-9700K and UHD Graphics 630. Other current-generation Intel chips can further reduce performance by limiting clocks or disabling some of the GPU processing clusters—we didn't test anything with UHD 610, for example.
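"X times faster" and "trails by Y%" are two views of the same ratio, and they are easy to mix up. This small Python sketch converts between them (the function names are illustrative, not from any library). A 2.3x lead for Vega 8 means UHD 630 delivers about 43% of its frame rate, so it trails by roughly 57%, which matches the overall deficit cited in the Borderlands 3 discussion. The same math turns the HD 4600's 42% deficit into a 1.72x lead for UHD 630.

```python
def times_faster_to_percent_slower(ratio: float) -> float:
    """If A delivers `ratio` times B's frame rate, return how far B trails A, in percent."""
    return (1 - 1 / ratio) * 100


def percent_slower_to_times_faster(percent_slower: float) -> float:
    """If B trails A by `percent_slower` percent, return A's frame rate as a multiple of B's."""
    return 1 / (1 - percent_slower / 100)


# Vega 8 (Ryzen 3 3200G) at 2.3x the UHD 630's overall performance
print(round(times_faster_to_percent_slower(2.3)))  # -> 57

# HD 4600 trailing UHD 630 by 42%
print(round(percent_slower_to_times_faster(42), 2))  # -> 1.72
```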

Intel's latest Iris Plus solution in the 10th Gen Ice Lake processor does better, sort of. When constrained to 15W TDP for the entire chip, it's only 15% faster than UHD 630. Boosting the TDP to 25W improves performance another 35%, however—55% faster than the desktop UHD 630, even though the desktop still has an additional 70W of TDP available.

Even the fastest Iris Plus Graphics still can't catch up to a desktop Ryzen 3 3200G and Vega 8 Graphics. Part of that is the higher TDP, but a bigger factor is that AMD's GPU architecture is simply superior. Intel is hoping to fix that with Xe Graphics, and Tiger Lake looks reasonably promising. Intel just showed off TGL (TGL-U, maybe?) running Battlefield V at 1080p high settings and around 30-33 fps. I ran that same test on 15W ICL-U and got just 10-13 fps, and 17-21 fps at 25W, with the caveat that we don't know the TDP of the Tiger Lake chip.

Compared to its current desktop graphics solutions, Intel has a lot of ground to make up. Tripling the performance of UHD 630 would get Intel to competitive levels, which isn't actually out of the question. Ice Lake and Gen11 Graphics are already 55% faster than UHD Graphics 630 when equipped with a 25W TDP, and Tiger Lake with Xe Graphics could potentially deliver 75% (or more) higher performance than Gen11. Combined, that would be roughly 2.7X the performance of UHD 630, which would reach acceptable levels of performance in many games. We'll have to wait and conduct our own testing of Tiger Lake to see how Xe Graphics performs, but early indications are that it might not be too shabby.
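Percentage gains multiply rather than add, which is why 15% followed by another 35% lands at about 55% rather than 50%. Here is a Python sketch of the chained estimate, using the measured Ice Lake gains and treating the 75% Tiger Lake uplift as the hypothetical it is. The `compound` helper is our own naming.

```python
from math import prod


def compound(*gains_percent: float) -> float:
    """Chain successive relative gains (in percent) into one overall multiplier."""
    return prod(1 + gain / 100 for gain in gains_percent)


# Measured: UHD 630 -> Gen11 at 15W (+15%) -> Gen11 at 25W (another +35%)
ice_lake_25w = compound(15, 35)

# Hypothetical: a further +75% from Tiger Lake's Xe Graphics over Gen11
tiger_lake_estimate = compound(15, 35, 75)

target = 3.0  # "tripling" UHD 630 to reach competitive levels

print(f"Ice Lake at 25W vs UHD 630: {ice_lake_25w:.2f}x")
print(f"Tiger Lake estimate vs UHD 630: {tiger_lake_estimate:.2f}x")
print(f"Reaches the 3x target: {tiger_lake_estimate >= target}")
```

Even with the optimistic Xe number, the chained estimate comes out slightly short of the 3x mark. That is why "75% (or more)" matters.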

If you're wondering about even older Intel GPUs, the HD 4600 is roughly half as fast as UHD 630. Okay, technically it's only 42% slower, but it did fail to run four of the nine games. Three of those require either DX12 or Vulkan, while Metro Exodus supports DX11 and tried to run … but it kept locking up a few seconds into the benchmark. Below is the full suite of benchmarks.

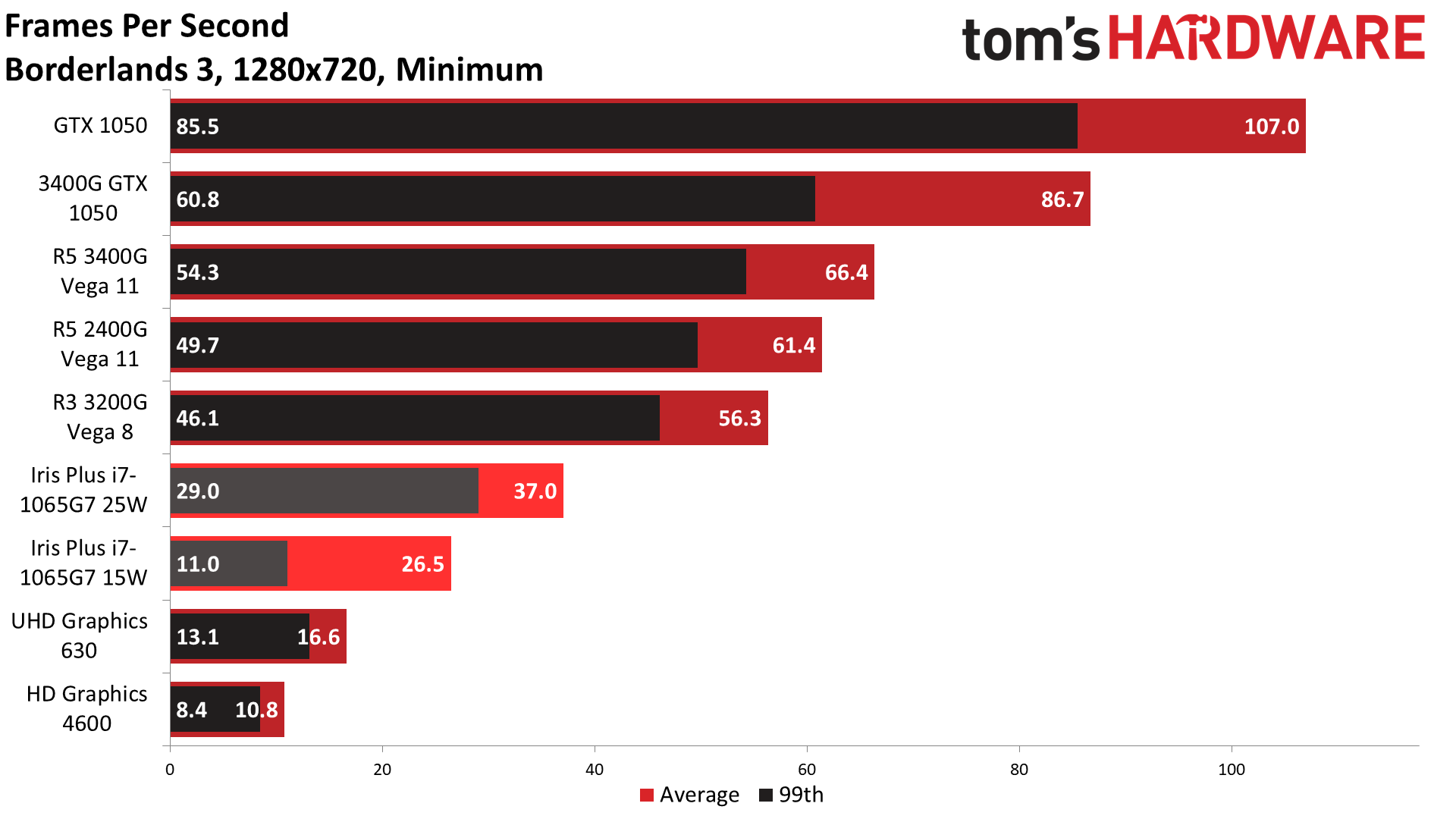

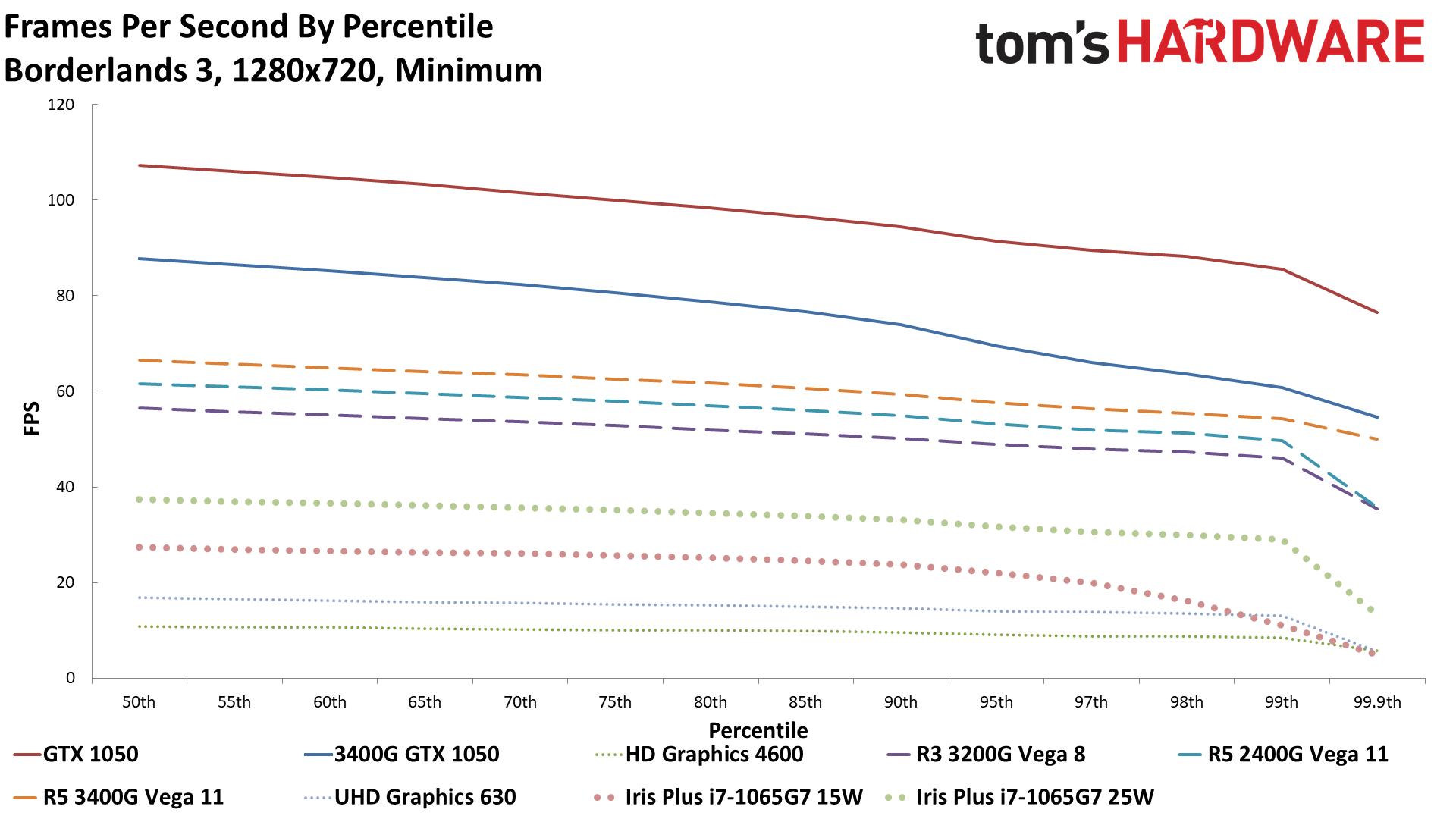

Borderlands 3 supports DX12 and DX11 rendering modes, with the latter performing better on the Intel and Nvidia GPUs. AMD meanwhile gets a modest benefit from DX12, even on its integrated graphics, so Vega 11 is 'only' 38% slower than the GTX 1050, compared to the overall 43% deficit. Meanwhile, Intel falls further behind Vega 8 than in the overall metric, trailing by 70% here compared to 57% overall. Even at minimum settings and 720p, UHD 630 can't muster a playable experience.

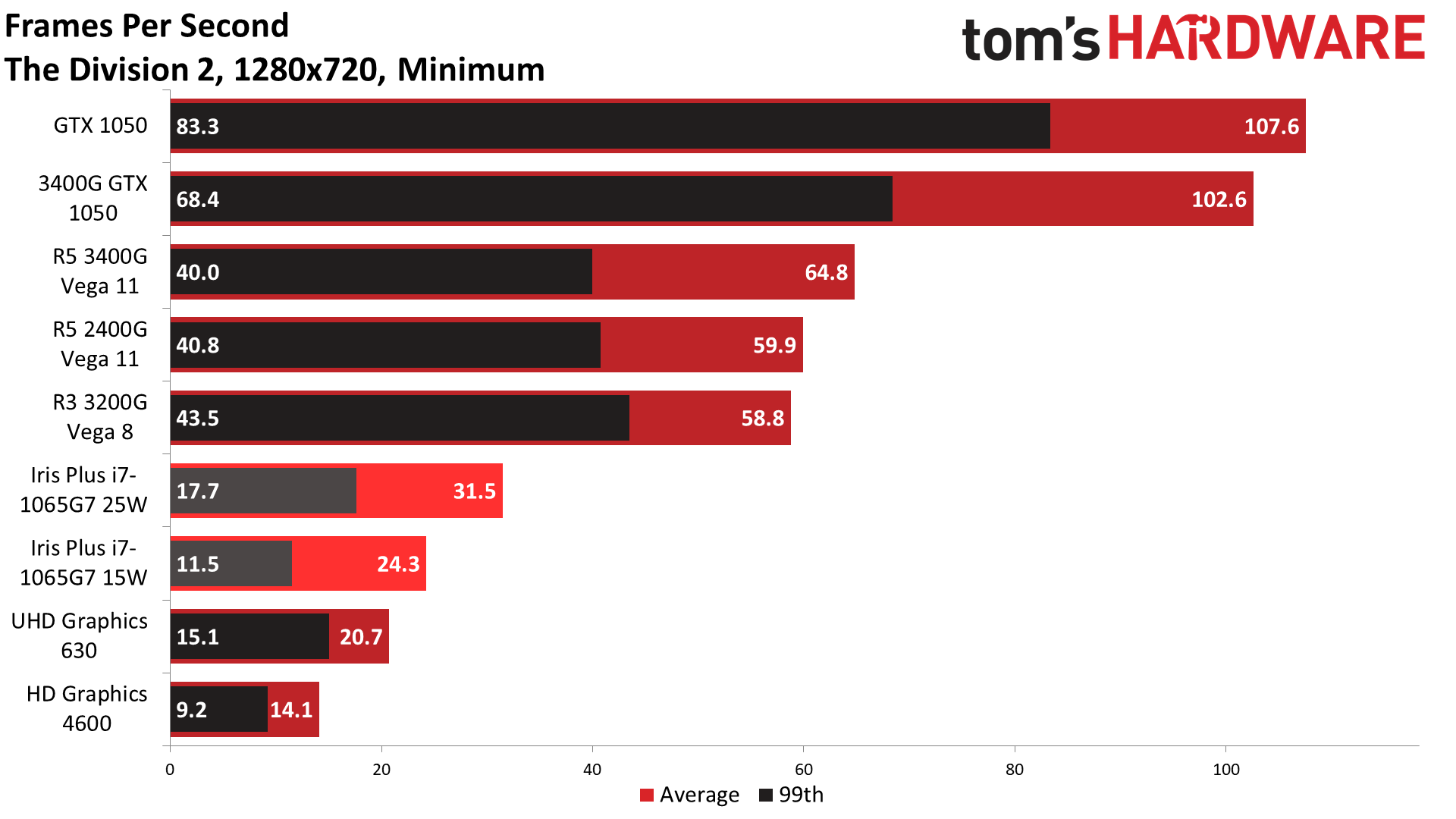

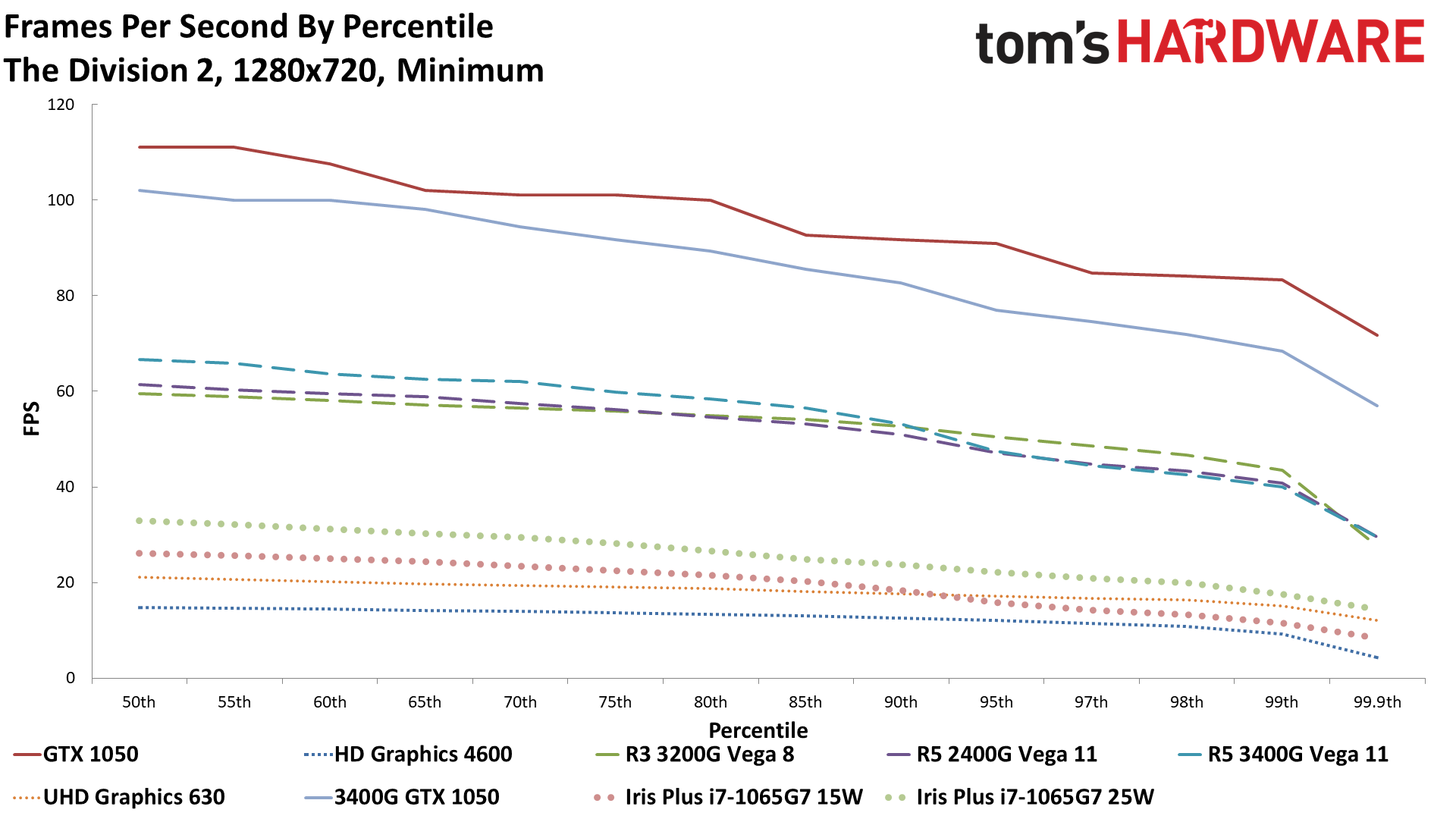

The Division 2 performance shows the trend we'll see in a lot of the more demanding games. Even at minimum quality (other than resolution scaling), Intel's UHD 630 isn't really playable. You could struggle through the game at 20 fps, but it wouldn't be very fun and dips into the low-to-mid teens occur frequently. AMD meanwhile averages more than 60 fps on the 3400G and comes close with the 3200G. Framerates aren't consistent, however, with odd behavior on the different AMD APUs. Specifically, the 99th percentile fps decreased as average fps increased.

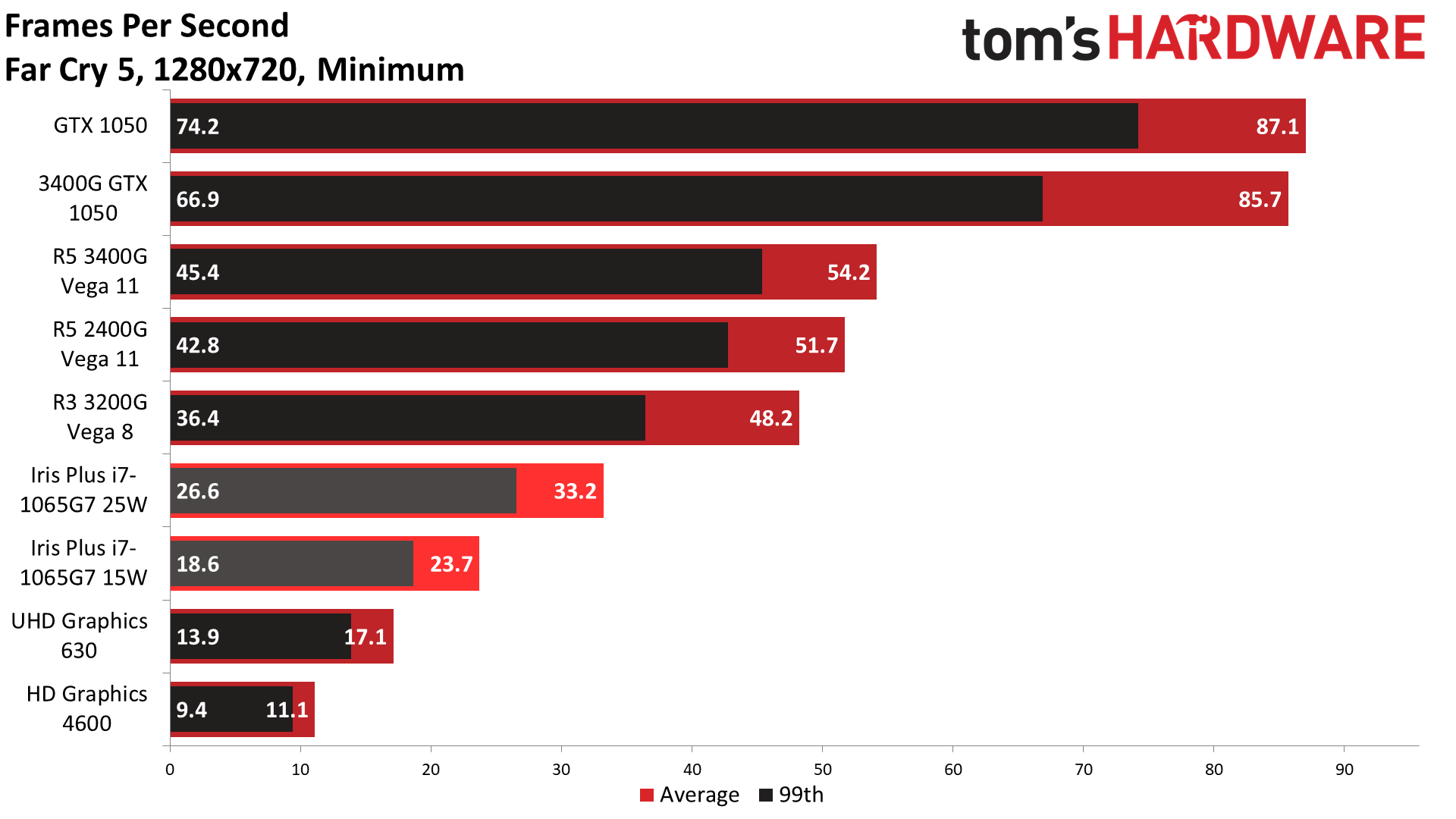

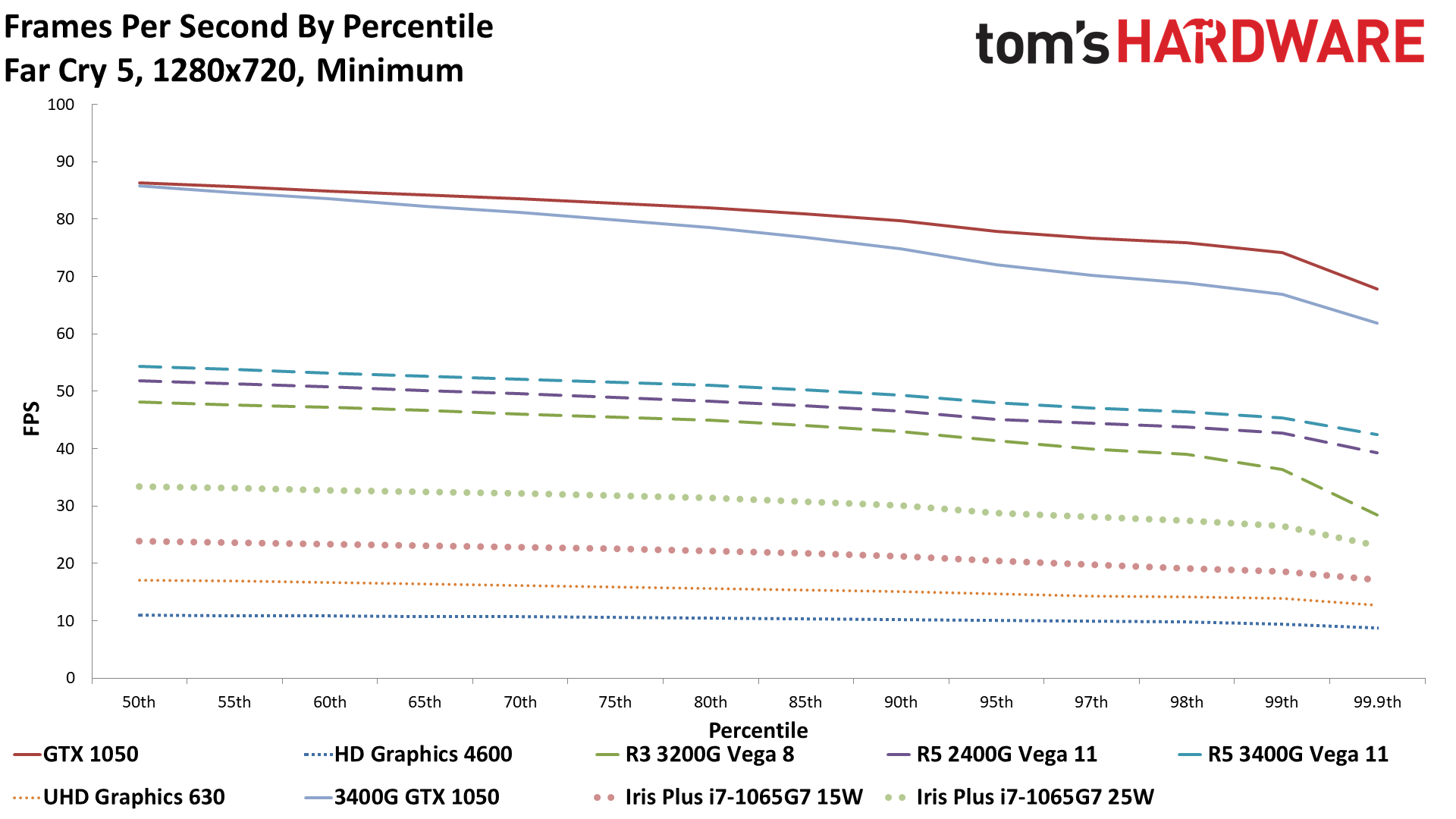

Far Cry 5 relative performance is almost an exact repeat of The Division 2: Vega 8 is 1.8 times faster than UHD 630, and the 1050 is about 60% faster than the 3400G. Average framerates are lower, however, so even at minimum quality, you won't get 60 fps from any of the integrated graphics solutions we tested. Hopefully Renoir—and maybe Xe Graphics—will change that later this year.

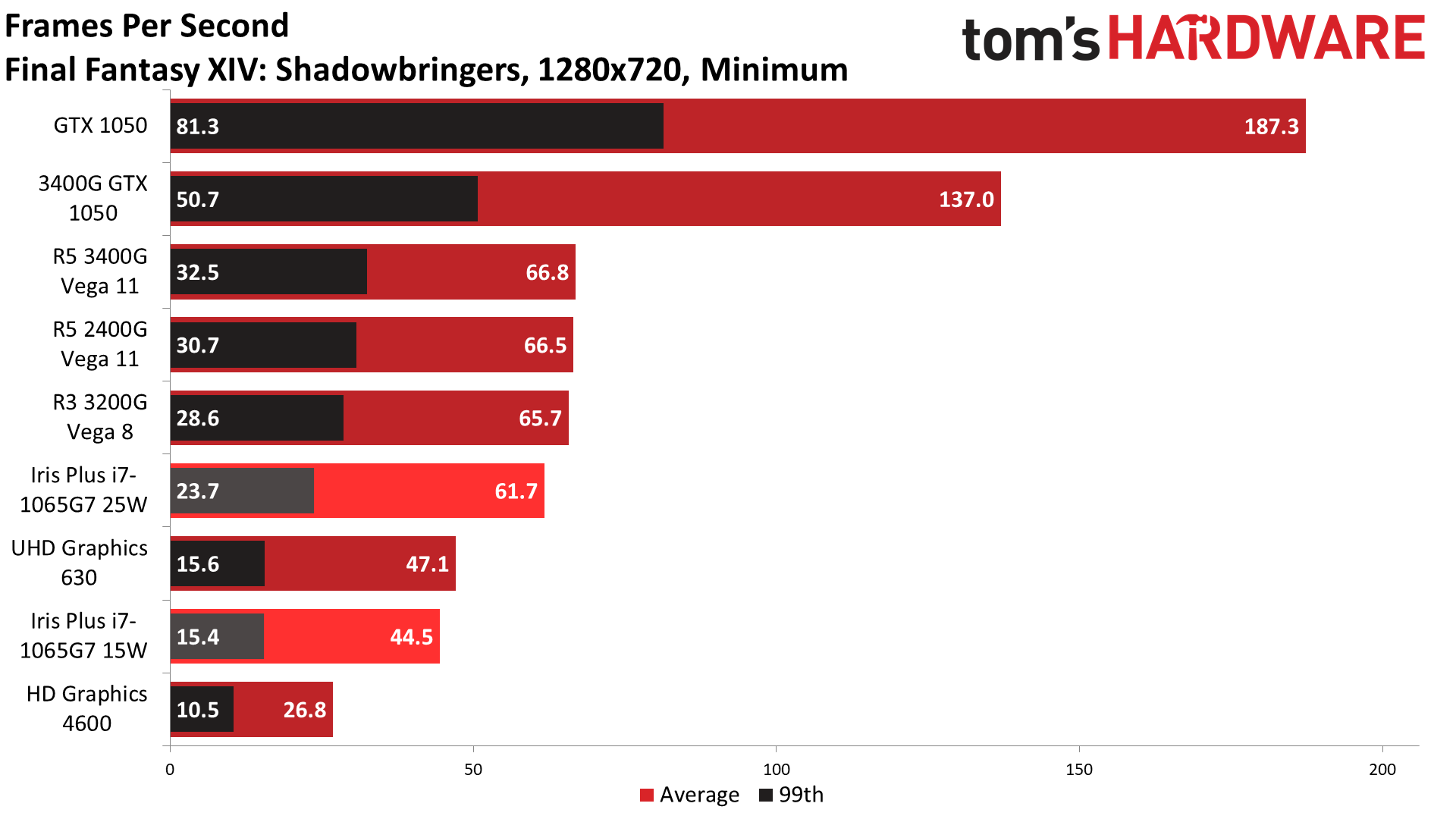

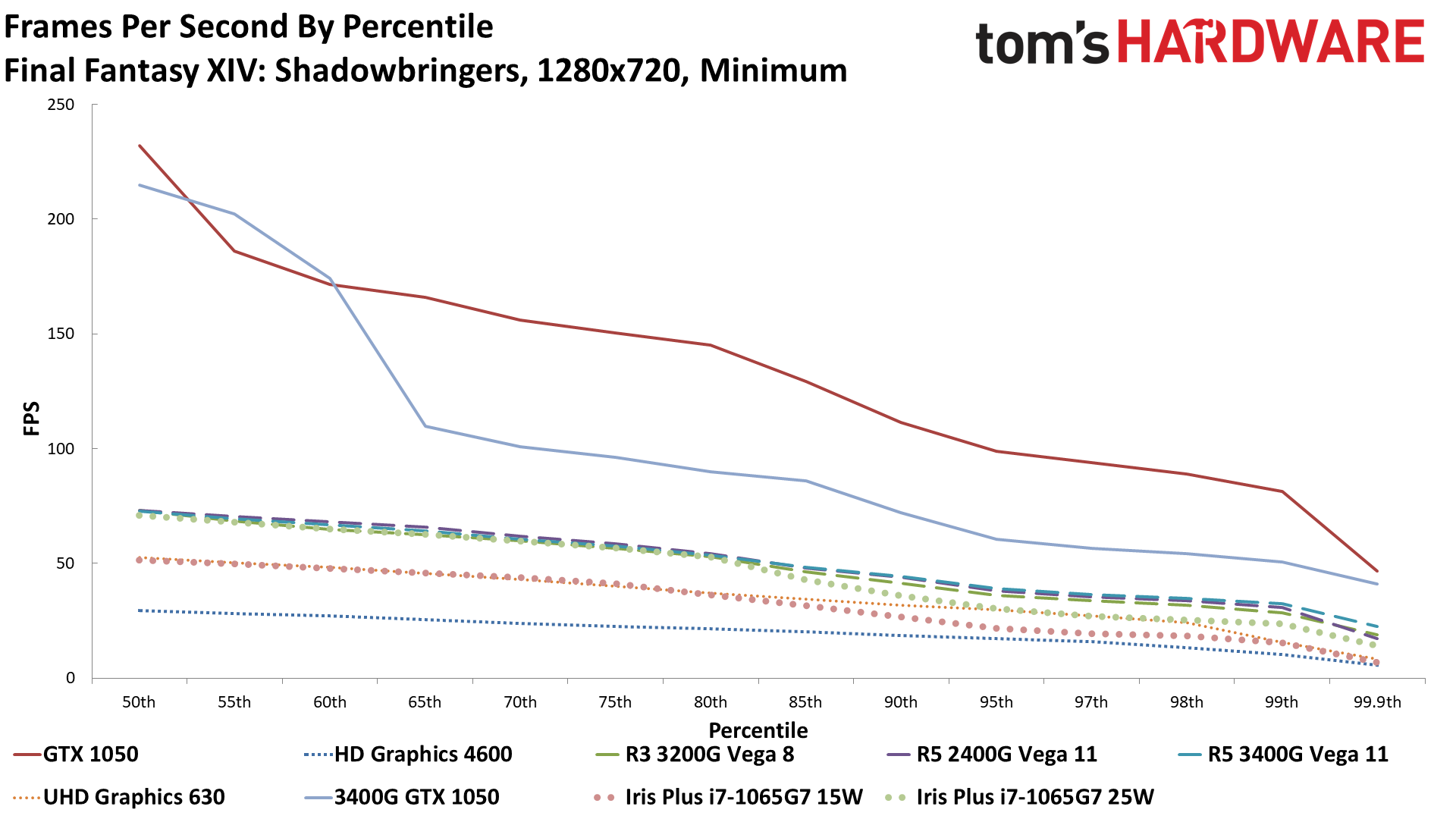

Final Fantasy XIV is the closest Intel comes to AMD's performance, trailing by just 28%. A lot of that has to do with the game being far less demanding, especially at lower quality settings, and even Intel manages a playable 47 fps. For that matter, even the old Intel HD 4600 is somewhat playable at 27 fps. Meanwhile, the GTX 1050 has its largest lead over Vega 11, with 187 fps and 180% higher framerates. This is one of those cases where GPU memory bandwidth likely plays a bigger role, as all three AMD APUs cluster together at the 66-67 fps mark.

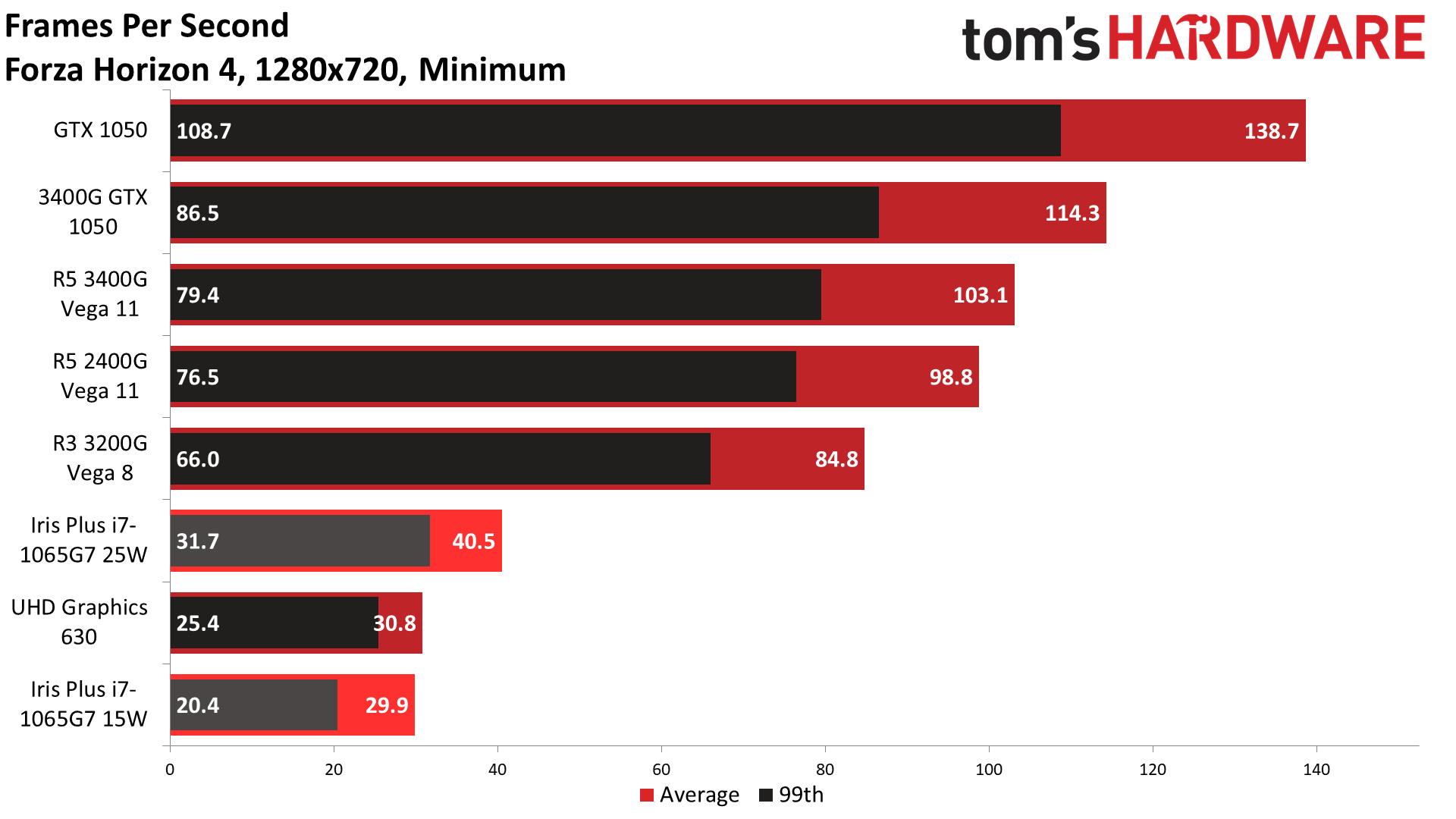

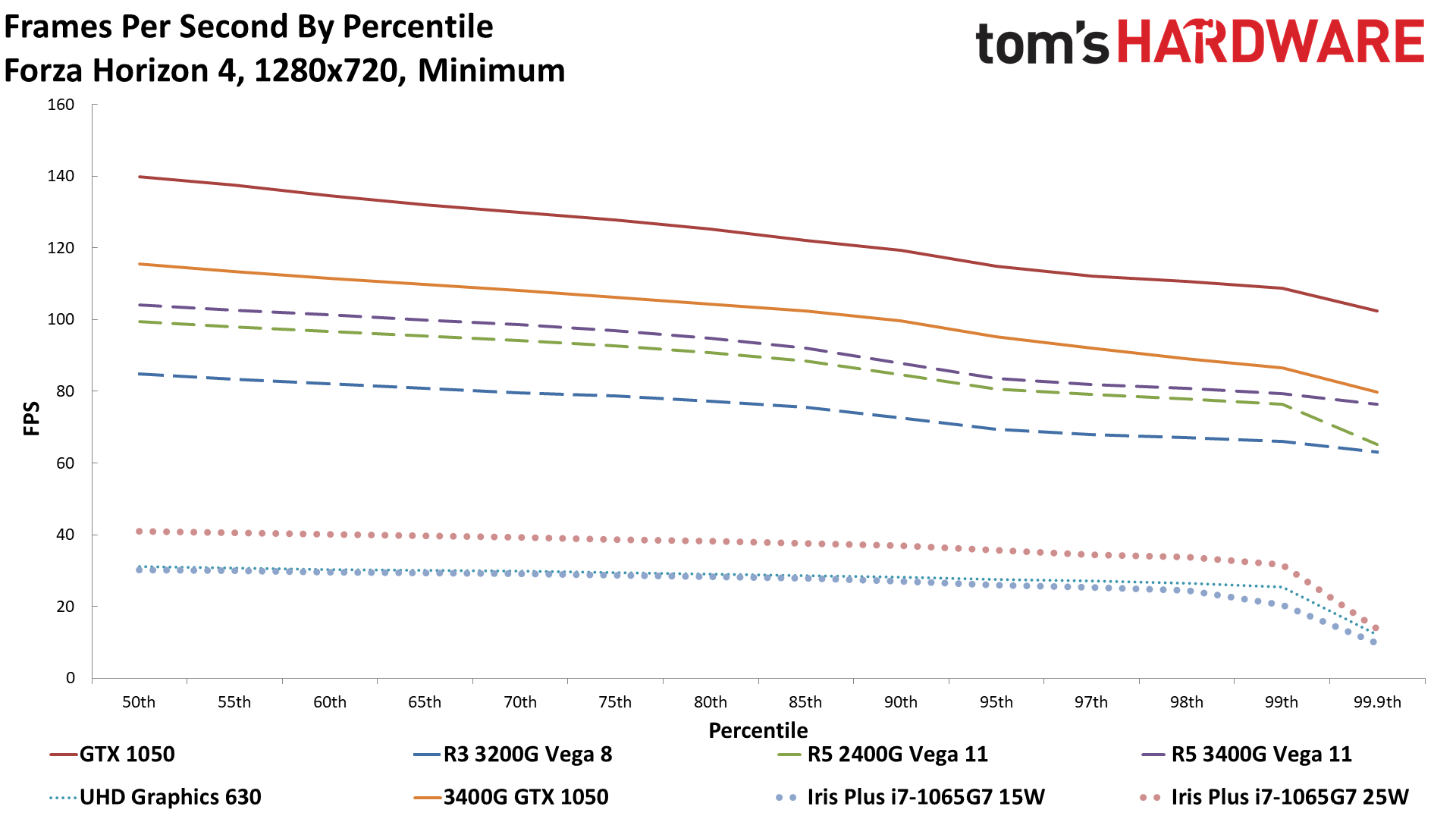

Shifting gears to Forza Horizon 4, we come to the first game that simply won't run on older Intel GPUs. It's a Windows 10 universal app and requires DX12, so you need at least a Broadwell (5th Gen) Intel CPU. Otherwise, performance is quite good on the AMD solutions, with the best result of the games we tested. The 3400G breaks 100 fps and even keeps minimums above 60, and the GTX 1050 is only 35% faster than the 3400G's Vega 11 Graphics. Intel's UHD 630 is back in the dumps, with the 3200G beating it by 175%, though it does manage a playable 31 fps—not silky smooth, but it should suffice in a pinch.

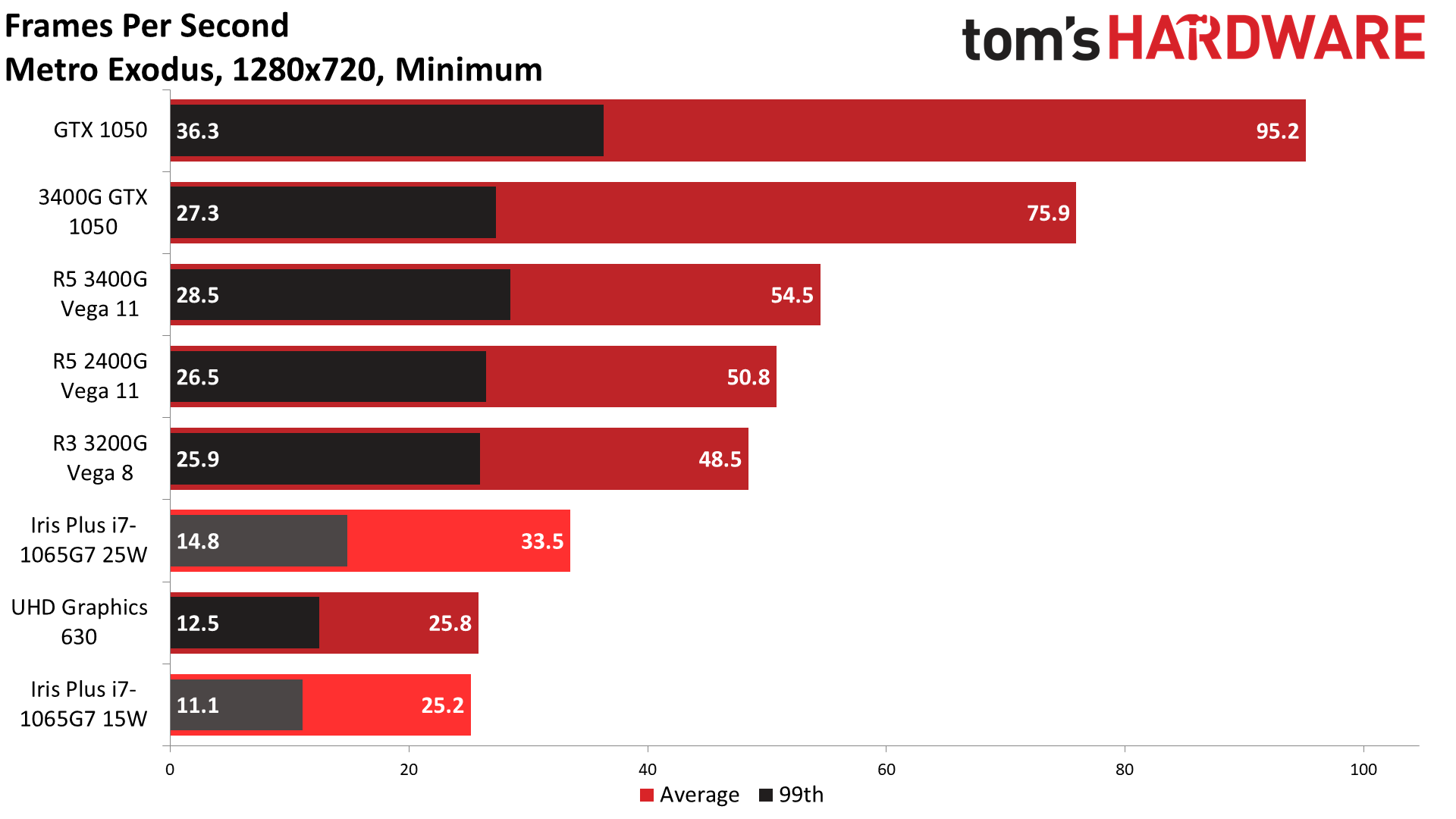

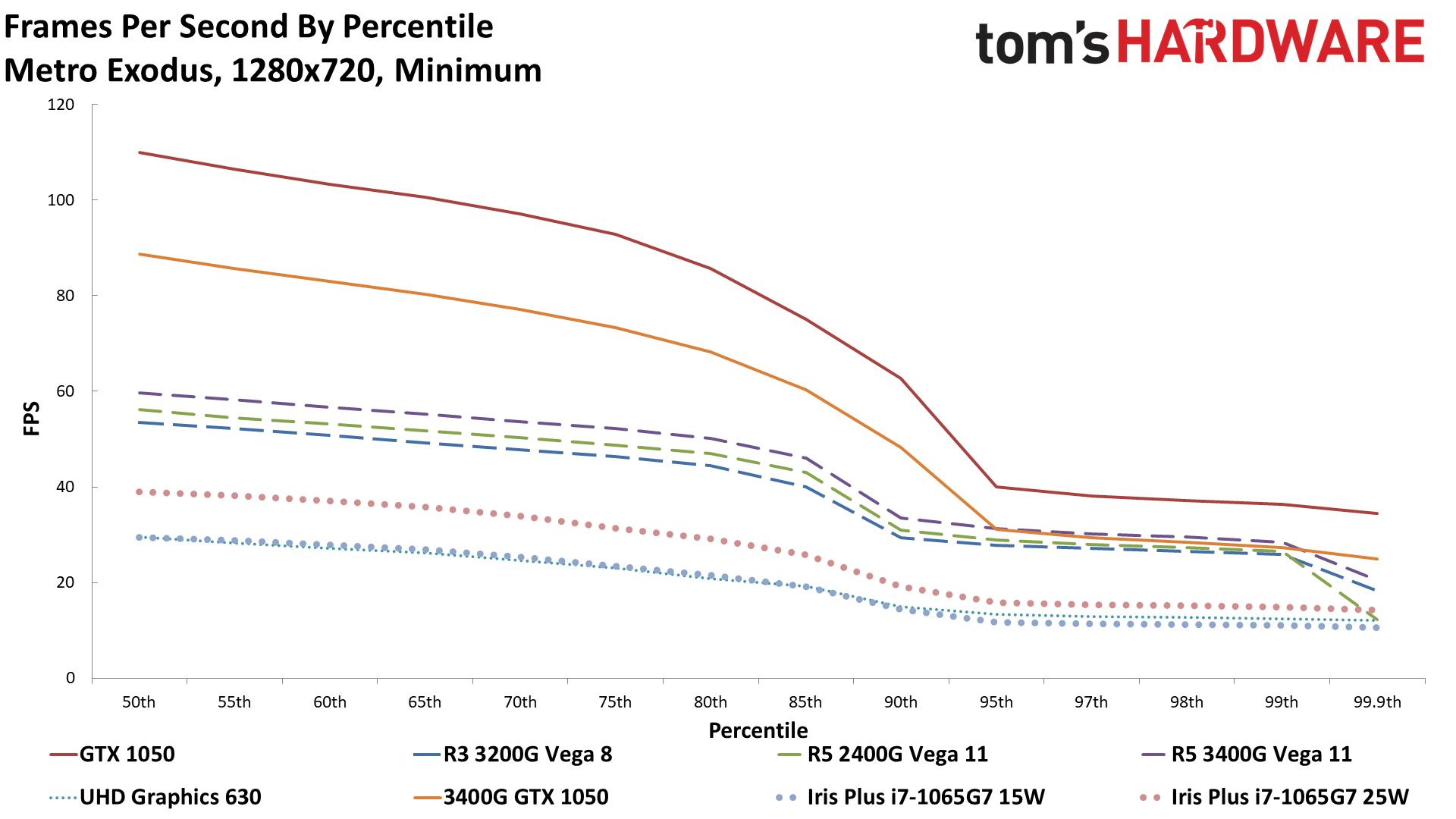

Metro Exodus ends up being one of the better showings for Intel's UHD 630, as the 3200G is 'only' 88% faster. This is another game where GPU memory bandwidth tends to be a bigger bottleneck, and you still can't get 30 fps with Intel. The HD 4600 would also launch the game and run for maybe 10-15 seconds before locking up, but not at acceptable framerates—we've omitted it from the results because it couldn't complete the benchmark. The GTX 1050 is back to a comfortable 75% lead over the closest APU.

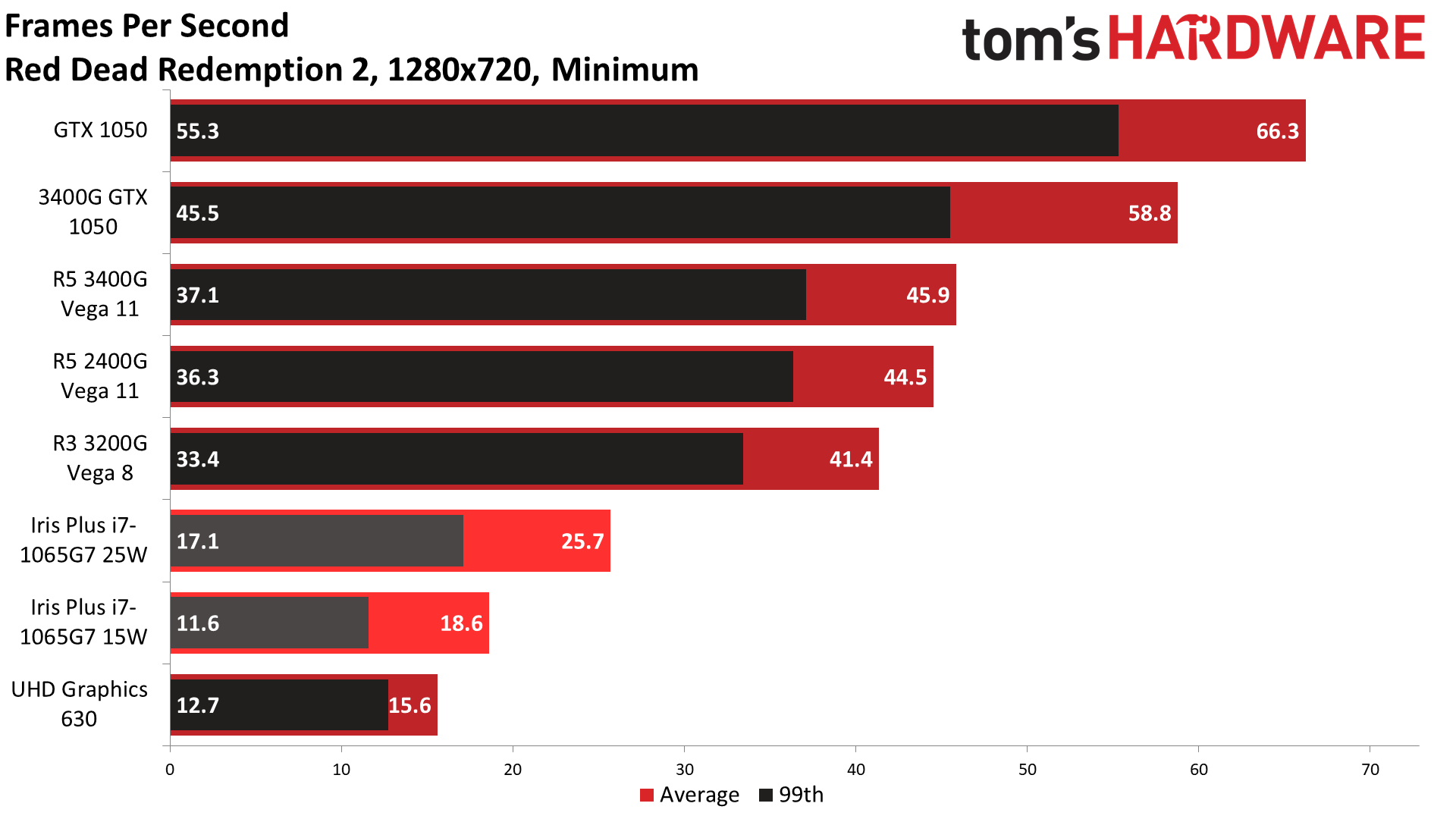

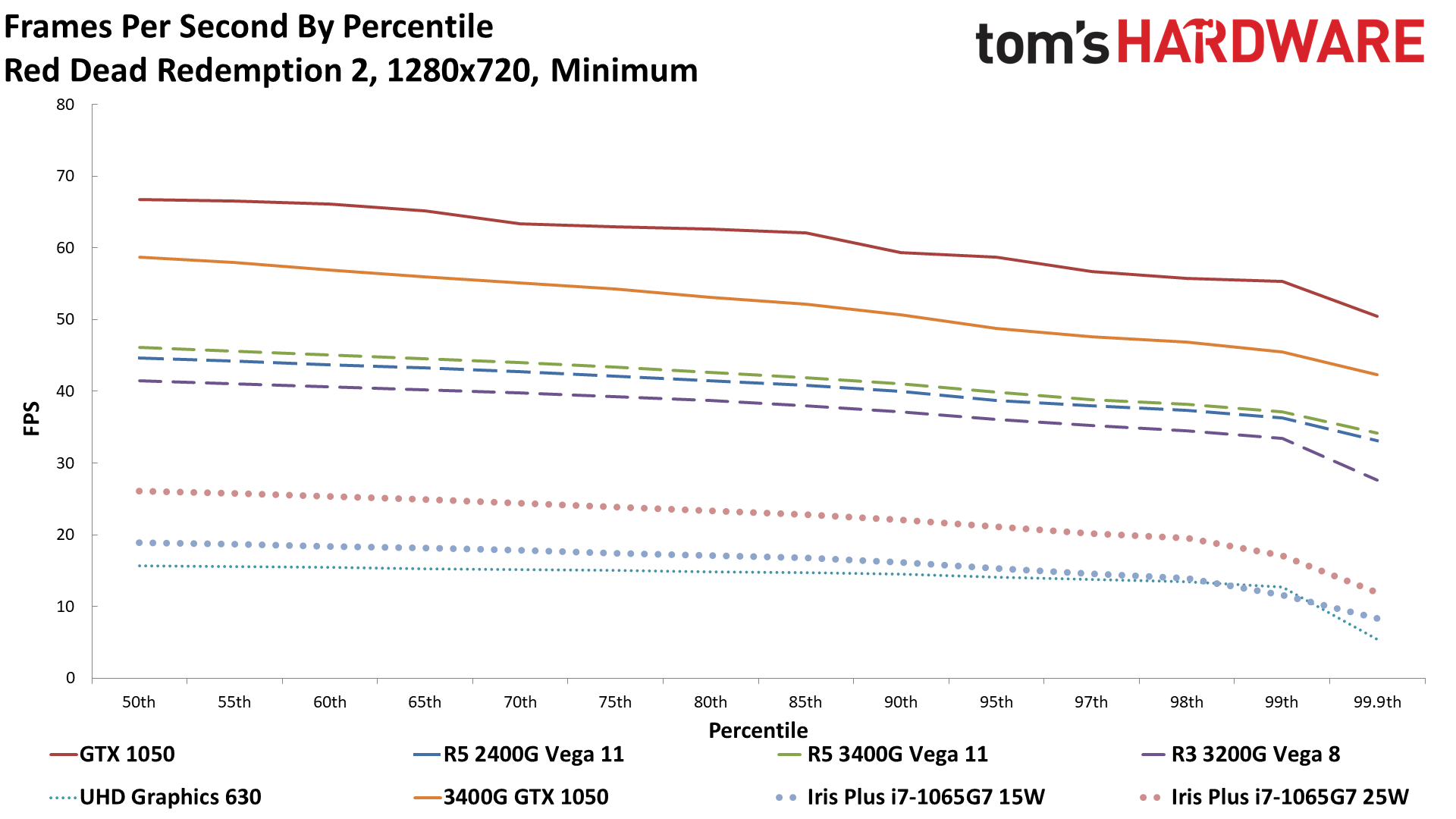

Red Dead Redemption 2 is the most demanding game we tested, with performance of just 46 fps on the 3400G Vega 11, even at 720p and minimum quality. It's still playable at least, though not on Intel's UHD 630 where framerates are in the low-to-mid teens. We tested with the Vulkan API, and like Forza, HD 4600 can't even attempt to run the game. The 3200G with Vega 8 notches up another big lead of 165% over UHD 630, while the GTX 1050 has another relatively close result with the dedicated GPU leading the 3400G and Vega 11 by only 45%.

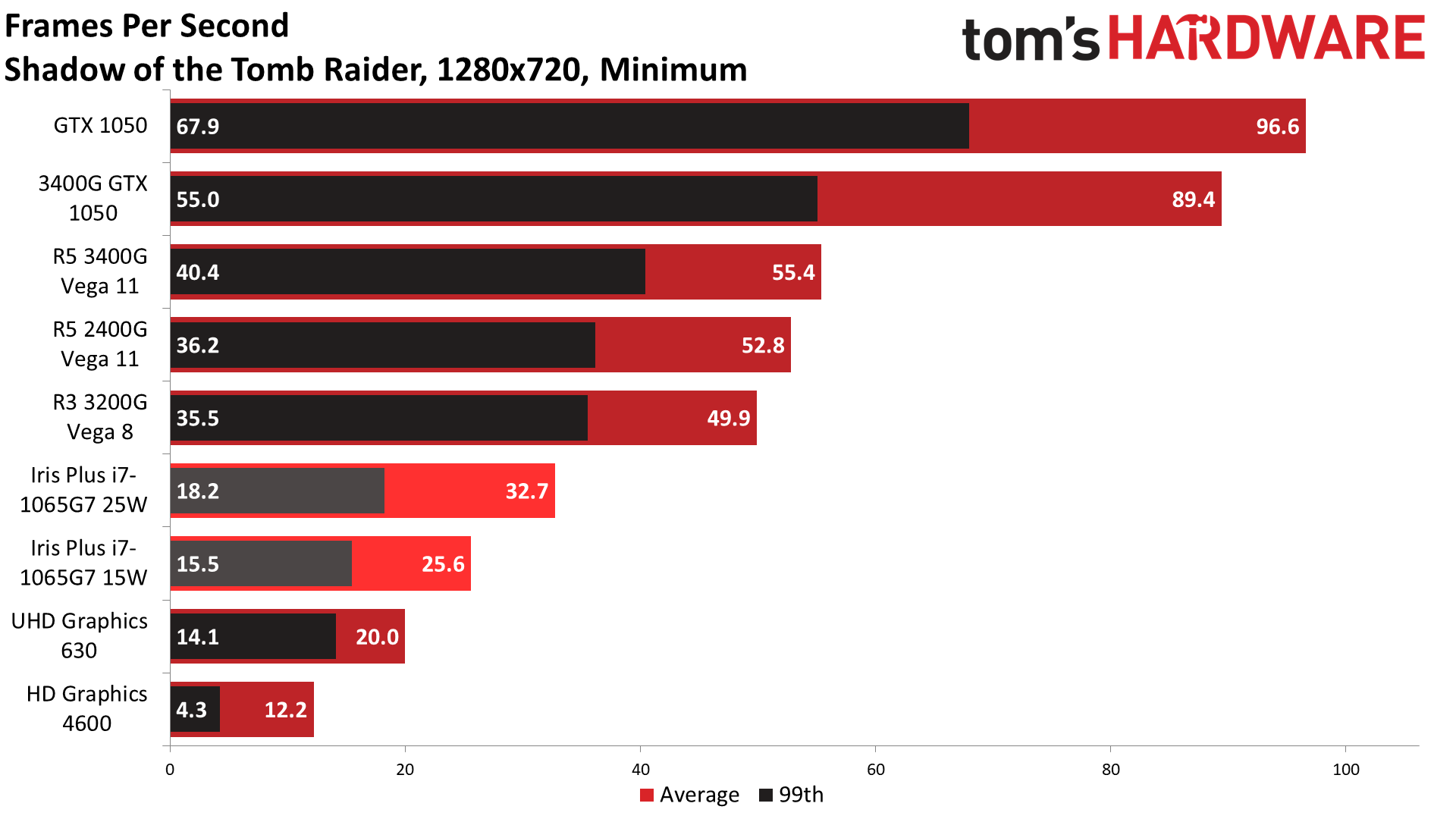

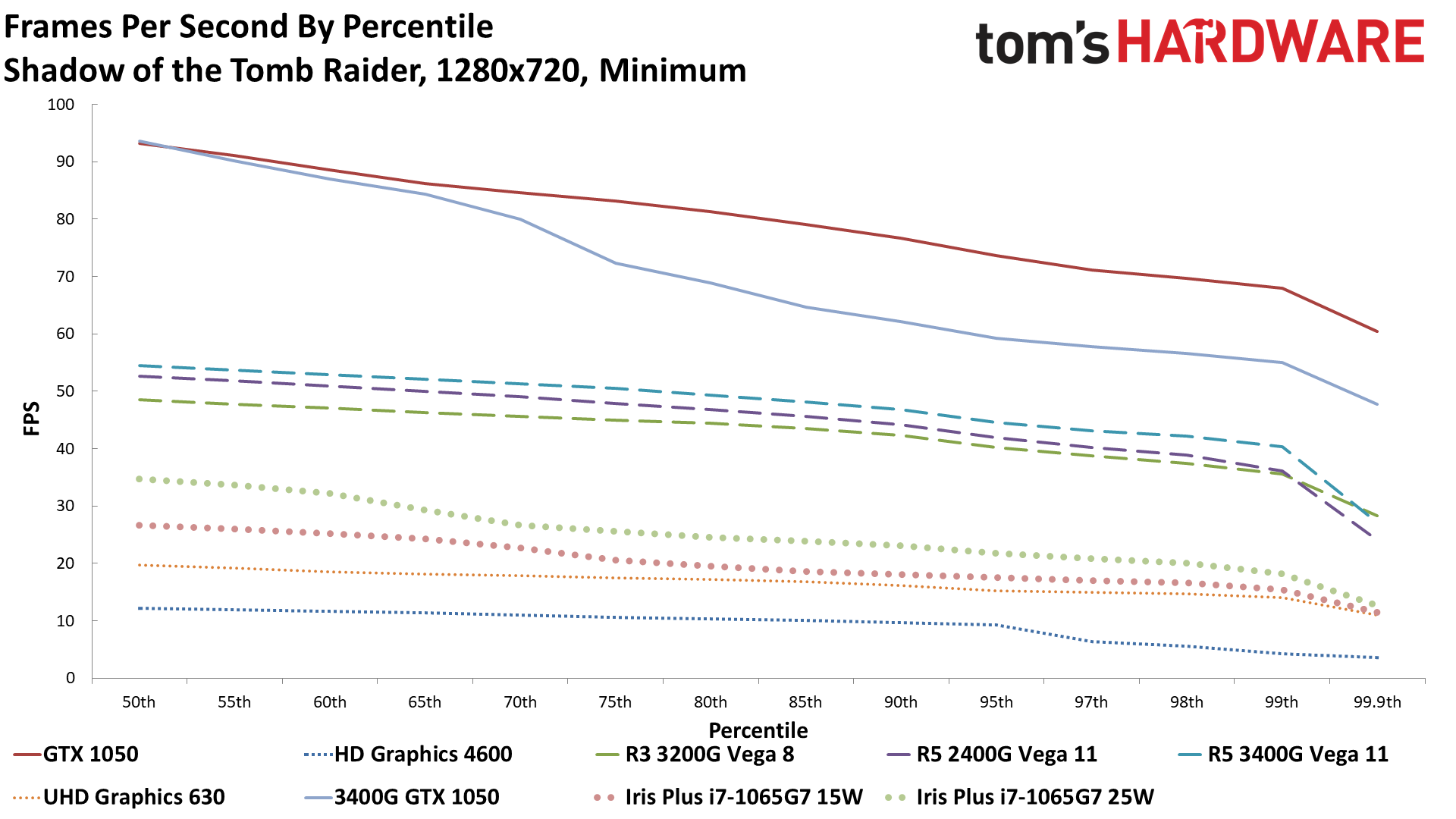

Shadow of the Tomb Raider is back to business as usual and comes closest to matching our overall average results. The GTX 1050 leads 3400G's Vega 11 by 74%, while the 3200G's Vega 8 is 150% faster than UHD 630. All of the AMD APUs manage a very playable 50 fps or more, with minimums above 30 fps. Intel, on the other hand, needs to double its UHD 630 performance to hit an acceptable level, which it theoretically does with something like the Core i7-1065G7, but I'm still working on getting one of those to run my own tests.

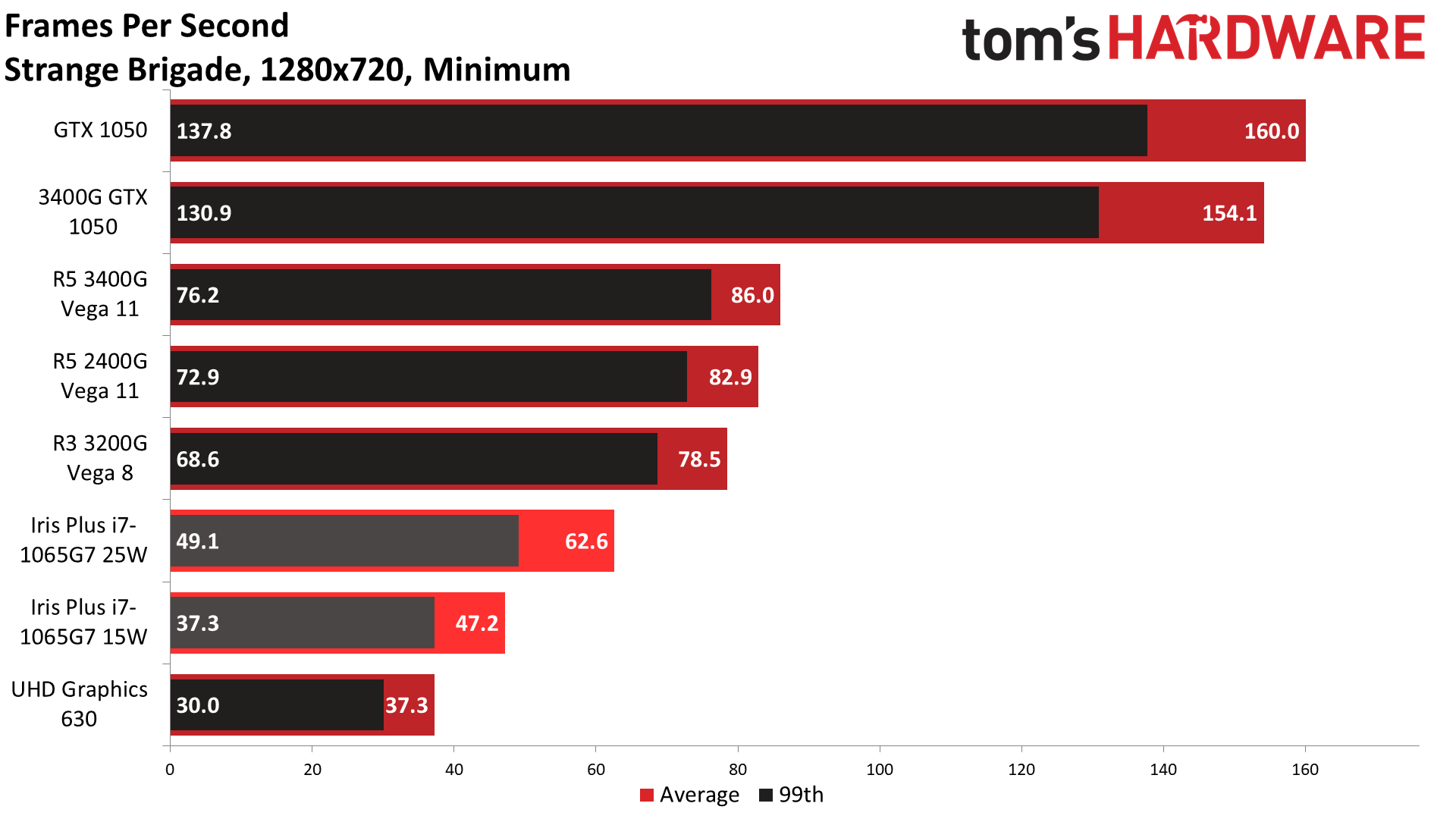

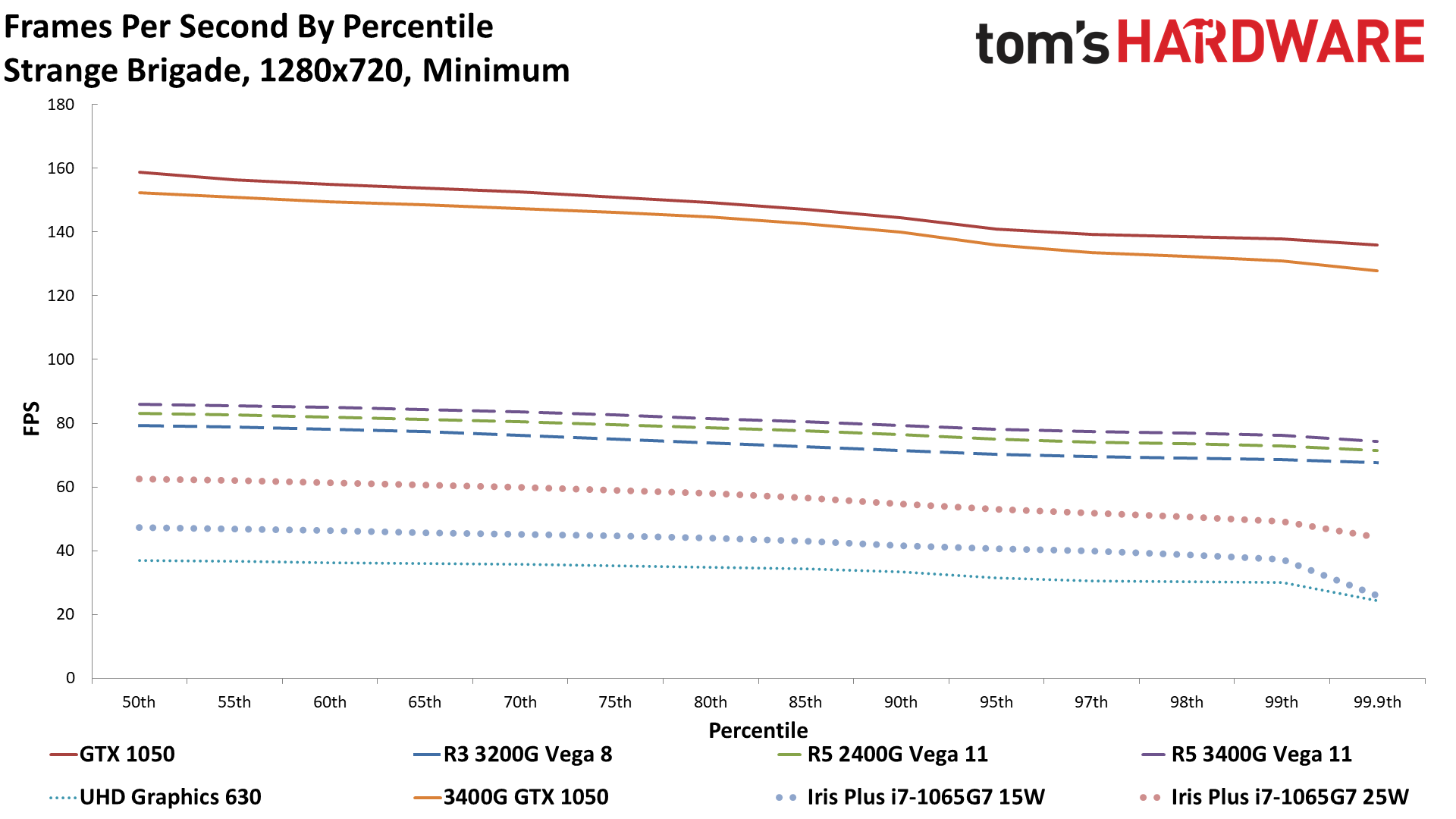

Last, we have Strange Brigade, which, like RDR2, only supports the DX12 and Vulkan APIs. That knocks out HD 4600, but the remaining GPUs can all run it at acceptable levels—and even smooth levels of 60+ fps on the AMD APUs. This is one of only three games we tested where 60 fps on AMD's integrated graphics is possible, and not coincidentally also one of the three games where Intel's UHD 630 breaks 30 fps.

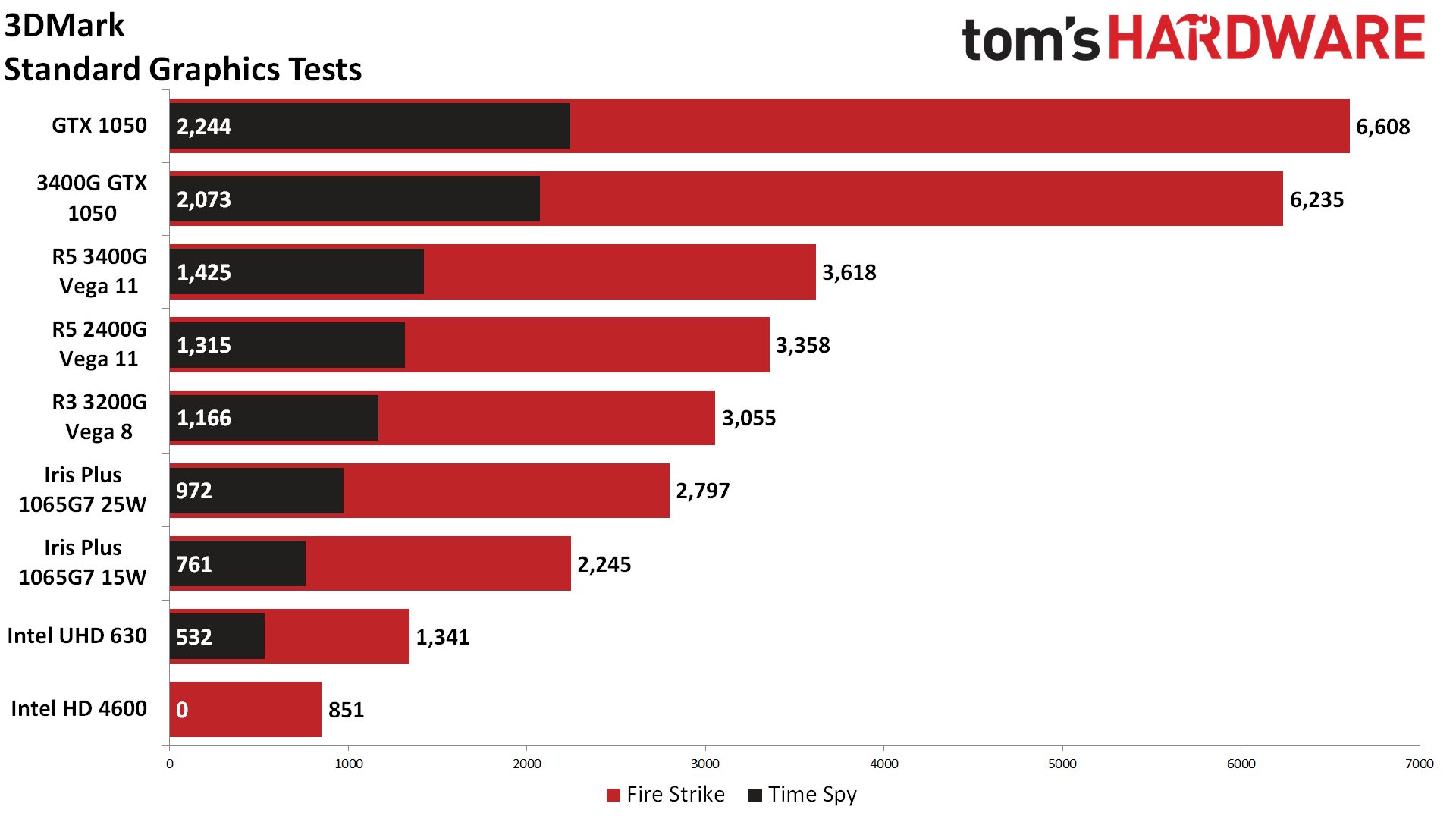

We also wanted to look at synthetic graphics performance, as measured by 3DMark Fire Strike and Time Spy. Time Spy requires DX12, so again the HD 4600 can't run it, but the rest of the GPUs could. In the past, 3DMark has been accused of being overly kind to Intel, or perhaps Intel has simply put more effort into optimizing its drivers for 3DMark. But taking the overall gaming performance we showed earlier, the 3400G Vega 11 was 168% faster than the 9700K UHD 630. In Fire Strike, the result was almost identical: Vega 11 leads by 170%. Time Spy also matched up perfectly, showing Vega 11 with a 168% lead.

Which isn't to say that 3DMark is the only testing needed. Looking at the GTX 1050 shows at least one point of contention. In our overall metric, the GTX 1050 was 72% faster than the 3400G Vega 11. In Fire Strike, the 1050 is 83% faster, slightly more favorable to the dedicated GPU. Time Spy, on the other hand, drops the lead to just 57%, a massive swing, and even that figure only came after multiple runs: one run produced a score lower than the 3400G's.

Part of that is going to be DirectX 12, but Time Spy is also a newer 'forward-looking' benchmark that taxes the 1050's limited 2GB of VRAM. The benchmark tells you as much ("Your hardware may not be compatible"), and it skews the results. It's not wrong as such, but it's important to not simply take such results at face value.

With this round of testing out of the way—and a big part of why we wanted to do this was to prepare for the incoming Xe Graphics and AMD Renoir launches—inevitably, people wonder why the gulf between iGPU and dGPU (integrated and discrete GPUs) remains so large. Take one look at the PlayStation 5 and Xbox Series X specs, and it's clearly possible to make something much faster. So why hasn't this happened?

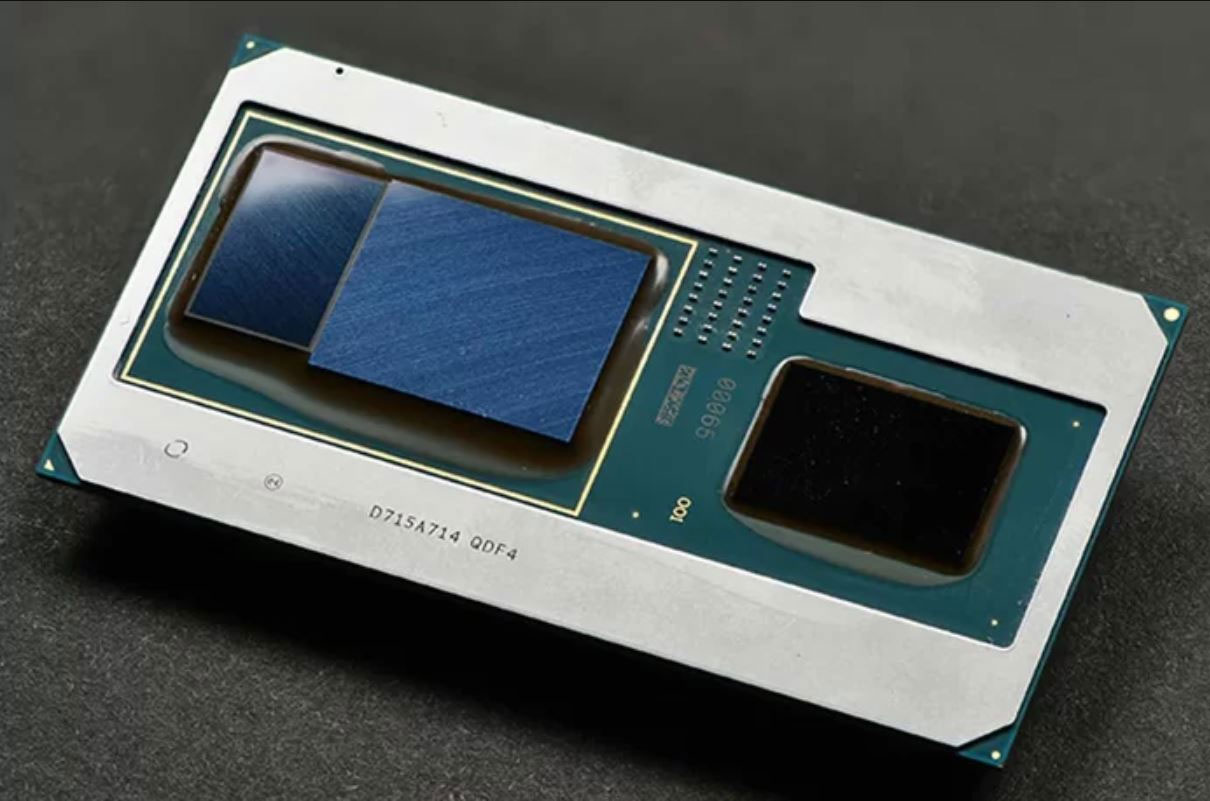

First, it's not entirely correct to say that this hasn't happened in the PC space. Intel shocked a lot of us when it announced Kaby Lake G in 2018, which combined a 4-core/8-thread Kaby Lake (7th Gen) CPU with a custom AMD Vega M GPU, and tacked on 4GB of HBM2 for good measure. We looked at how the Intel NUC with a Core i7-8809G performed at the time. In short, performance was decent, nearly matching an RX 570—which is significantly faster than anything we've included here. There were only a few problems.

First, Kaby Lake G was incredibly expensive for the level of performance it delivered. Intel likes its profit margins, and combining an Intel CPU, an AMD GPU, and HBM2 memory was never going to be cheap. AMD sells the Ryzen 5 3400G for $150, but the only way to get the Core i7-8809G was either with an expensive NUC or an expensive laptop.

Second, and perhaps more critically, support was a joke. Intel initially said it would "regularly" update the graphics drivers for the Vega M GPU, but that didn't actually happen. There was a gap of nearly 12 months where no new drivers were made available. Then Intel finally passed the buck to AMD and said users should download AMD's drivers, and less than two months later, AMD removed Vega M from its list of drivers.

But the biggest issue is that higher-performance integrated graphics is a limited market. It makes sense for a laptop, where tighter integration can reduce size and complexity, plus you can get better power balancing between the CPU and GPU. For the desktop, though, you're better off getting a separate CPU and a dedicated GPU, which you can then upgrade independently.

That's not the way the console world works, and when Sony or Microsoft are willing to foot the bill for custom silicon, impressive things can be done. The current PS4 Pro and Xbox One X pack 36 CUs and 40 CUs, respectively. That's three to four times as many GPU clusters as the Ryzen 5 3400G's Vega 11 Graphics (the 11 comes from the number of CUs). The PS5 will stick with 36 CUs, but they'll be AMD's new RDNA 2 architecture, which potentially boosts performance per watt by over 100% compared to the older GCN architecture. Xbox Series X will kick that number up to 52 CUs, again with the RDNA 2 architecture.

More importantly for consoles, they can integrate a bunch of high-performance memory: GDDR5 for the current stuff and GDDR6 for the upcoming consoles. They don't need to worry about users wanting to upgrade the RAM, which makes it possible to use higher-performance GDDR5/GDDR6 memory. The Xbox Series X will include 16GB of 14 Gbps GDDR6 on a 320-bit memory interface, giving up to 560 GBps of bandwidth. Even with 'overclocked' DDR4-3200 system memory running in a dual-channel configuration, traditional PC integrated graphics solutions share the resulting 51.2 GBps of bandwidth with the CPU. That's a huge bottleneck.

We can see this in the performance difference between AMD's Vega 8 and Vega 11 Graphics in the above charts. Vega 8 runs at 1250 MHz and has a theoretical 1280 GFLOPS of compute performance, while Vega 11 runs at 1400 MHz and has 1971 GFLOPS of compute. In theory, Vega 11 should be 54% faster than Vega 8. In our testing, the largest lead was 22% (Forza Horizon 4), the smallest was 2% (Final Fantasy XIV), and on average, the difference was 12%. The reason for that is mostly the memory bandwidth bottleneck.
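The theoretical figures follow from the usual peak-FP32 formula for AMD's GCN/Vega GPUs. Each compute unit has 64 shaders, and each shader can perform two floating-point operations (one fused multiply-add) per clock. This Python sketch reproduces the numbers and compares the theoretical gain with the 12% average measured above. The constant and function names are our own.

```python
SHADERS_PER_CU = 64             # GCN/Vega compute units each contain 64 stream processors
FLOPS_PER_SHADER_PER_CLOCK = 2  # one fused multiply-add counts as two FLOPs


def vega_gflops(compute_units: int, clock_mhz: float) -> float:
    """Peak FP32 throughput in GFLOPS for a GCN/Vega GPU."""
    return compute_units * SHADERS_PER_CU * FLOPS_PER_SHADER_PER_CLOCK * clock_mhz / 1000


vega8 = vega_gflops(8, 1250)    # Ryzen 3 3200G
vega11 = vega_gflops(11, 1400)  # Ryzen 5 3400G

theoretical_gain = (vega11 / vega8 - 1) * 100
measured_gain = 12  # average across the nine games tested

print(f"Vega 8: {vega8:.0f} GFLOPS, Vega 11: {vega11:.0f} GFLOPS")
print(f"Theoretical gain: {theoretical_gain:.0f}%, measured: {measured_gain}%")
print(f"Share of the extra compute that shows up in games: {measured_gain / theoretical_gain:.0%}")
```

Only about a fifth of Vega 11's extra compute turns into frame rate. That is consistent with both APUs running into the same shared-memory ceiling.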

Another example is the GTX 1050, which has a theoretical 1,862 GFLOPS of performance. AMD and Nvidia GPUs aren't the same, however, and Nvidia usually gets roughly 10-15% more effective performance per GFLOPS. We see this, for example, with the GTX 1070 Ti with 8,186 GFLOPS, which ends up performing around the same level as a Vega 56 with 10,544 GFLOPS. In practice, though, the 1070 Ti runs closer to 1.85 GHz, so it's basically 9,000 GFLOPS. Still, AMD needed about 15% more GFLOPS to match Nvidia with the previous architectures. So why does the GTX 1050 end up performing 72% faster? Simple: It has over twice the memory bandwidth.
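The same reasoning can be checked with the numbers in this section. This Python sketch derives an AMD-to-Nvidia "GFLOPS exchange rate" from the GTX 1070 Ti (about 9,000 GFLOPS at real-world clocks) and Vega 56 (10,544 GFLOPS). It then applies that rate to the GTX 1050 and compares the result with Vega 11. It's a back-of-the-envelope model that assumes performance scales linearly with GFLOPS, which is exactly the assumption memory bandwidth breaks. The function name is illustrative.

```python
def amd_gflops_per_nvidia_gflop(nvidia_gflops: float, amd_gflops: float) -> float:
    """AMD GFLOPS needed per Nvidia GFLOP, from two cards that perform about the same."""
    return amd_gflops / nvidia_gflops


# GTX 1070 Ti at ~1.85 GHz (roughly 9,000 GFLOPS) performs about like Vega 56 (10,544 GFLOPS)
exchange_rate = amd_gflops_per_nvidia_gflop(9000, 10544)

gtx_1050_gflops = 1862
vega11_gflops = 1971  # Ryzen 5 3400G

# GTX 1050 expressed in AMD-equivalent GFLOPS
gtx_1050_equivalent = gtx_1050_gflops * exchange_rate

compute_only_lead = (gtx_1050_equivalent / vega11_gflops - 1) * 100
measured_lead = 72  # overall result from the gaming tests

print(f"Exchange rate: {exchange_rate:.2f} AMD GFLOPS per Nvidia GFLOP")
print(f"Lead predicted from compute alone: {compute_only_lead:.0f}%")
print(f"Measured lead: {measured_lead}%")
```

Compute alone predicts a lead of about 11%. The remaining gap to the measured 72% is what points to memory bandwidth.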

If AMD or Intel wants to create a true high-performance integrated graphics solution, it will need a lot more memory bandwidth. We already see some of this in Ice Lake, with official support for LPDDR4-3733 memory (59.7 GBps compared to just 38.4 GBps for Coffee Lake U-series with DDR4-2400 memory). But integrated graphics should benefit from memory bandwidth up to and beyond 100 GBps. DDR5-6400 will basically double the bandwidth of DDR4-3200 memory, but we won't see support for DDR5 in PCs until 2021.
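All of these system-memory figures come from the same formula: transfer rate multiplied by a 128-bit total bus width. We assume that means two 64-bit channels for DDR4 and DDR5, and four 32-bit channels for Ice Lake's LPDDR4X. Here is a Python sketch that uses those 128-bit configurations and ignores real-world efficiency losses:

```python
BUS_WIDTH_BITS = 128  # assumption: 2x64-bit DDR4/DDR5 or 4x32-bit LPDDR4X


def peak_bandwidth_gbps(mt_per_s: int, bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Theoretical peak bandwidth in GBps for a given transfer rate and total bus width."""
    return mt_per_s * bus_width_bits / 8 / 1000


memory_configs = {
    "DDR4-2400 (Coffee Lake U)": 2400,
    "DDR4-3200": 3200,
    "LPDDR4X-3733 (Ice Lake)": 3733,
    "DDR5-6400": 6400,
}

for name, rate in memory_configs.items():
    bandwidth = peak_bandwidth_gbps(rate)
    flag = "  (100+ GBps)" if bandwidth >= 100 else ""
    print(f"{name:<27} {bandwidth:6.1f} GBps{flag}")
```

Only DDR5-6400 clears the 100 GBps mark, and that bandwidth is still shared with the CPU.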

As a potentially interesting aside, initial leaks of Intel's future Rocket Lake CPUs, which may have Xe Graphics, surfaced recently. It's only 3DMark Fire Strike and Time Spy, but the numbers aren't particularly impressive. The Time Spy result is only 14% higher than UHD 630, while Fire Strike is 30% faster. And, as noted earlier, 3DMark scores can be kinder to Intel's GPUs than actual gaming tests. However, we don't know the Rocket Lake GPU configuration—as a desktop chip, Intel might once again be castrating performance. Mobile Coffee Lake for example is available with up to twice as many Execution Units (EUs) as desktop Coffee Lake, even though it generally ends up TDP limited. We'll have to wait and see what Rocket Lake actually brings to the table in late 2020 or early 2021.

Another alternative, which Intel already has tried, is various forms of dedicated GPU caching or dedicated VRAM. Earlier versions of Iris Pro Graphics included up to a 128MB eDRAM cache, and Kaby Lake G included a 4GB HBM2 stack. Both helped alleviate the need for lots of system memory bandwidth, but they of course cost extra. Pairing 'cheap' integrated graphics with 'expensive' integrated VRAM sort of defeats the purpose. But that may change. Intel has 3D chip stacking technology that could reduce the cost and footprint of dedicated GPU VRAM. It's called Foveros, and that's something we're looking forward to testing in future products.

AMD meanwhile didn't change much with its Renoir integrated graphics. Clock speeds are up to 350 MHz higher than the 3400G, but it's now a Vega 8 GPU. That's likely because AMD knows stuffing in more GPU cores without boosting memory bandwidth is mostly an exercise in futility. The hope is that future Zen 3 APUs will include Navi 2x GPUs and potentially address the bandwidth issue, but don't hold your breath for that. Plus, Zen 3 APUs are likely still a year or more off.
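Running Renoir's configuration through the same peak-FP32 formula used for Vega (64 shaders per CU, two FLOPs per shader per clock) shows the trade-off. This rough sketch reads "up to 350 MHz higher" as a 1,750 MHz peak against the 3400G's 1,400 MHz. Sustained clocks in a laptop will depend on TDP, so treat this as an on-paper comparison only.

```python
def vega_gflops(compute_units: int, clock_mhz: float) -> float:
    """Peak FP32 GFLOPS: CUs x 64 shaders x 2 FLOPs per clock x clock."""
    return compute_units * 64 * 2 * clock_mhz / 1000


ryzen_3400g = vega_gflops(11, 1400)   # Vega 11
renoir = vega_gflops(8, 1400 + 350)   # Vega 8, up to 350 MHz faster

print(f"3400G Vega 11: {ryzen_3400g:.0f} GFLOPS")
print(f"Renoir Vega 8: {renoir:.0f} GFLOPS ({(renoir / ryzen_3400g - 1) * 100:+.0f}%)")
```

On paper, Renoir gives up roughly 9% of peak compute. That trade only makes sense if the 3400G couldn't keep its extra CUs fed in the first place.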

In short, there are ways to make integrated graphics faster and better, but they cost money. For desktop users, it will remain far easier to just buy a decent dedicated graphics card. Meanwhile, laptop and smartphone makers are working to improve performance per watt, and some promising technologies are coming. For now, though, PC integrated graphics solutions are going to remain a bottleneck, and even more memory bandwidth likely won't change things.

After all, Intel ships more GPUs than AMD and Nvidia combined, and yet it's now planning to enter the discrete GPU market. Intel may still look at HBM2 or stacked chips to add dedicated RAM for future integrated GPUs, but we don't expect those to be desktop solutions.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

King_V

> And before you ask, no, we didn't have a previous-gen AMD A10 (or earlier) APU for comparison.

Dammit, @JarredWaltonGPU, it was only a passing thought, stop reading my mind!! :LOL:

(ok, maybe closer to morbid curiosity than passing thought, with an "I think the iGPU of Bristol Ridge would do better than Intel")

cryoburner

> Of course, even the fastest integrated solutions pale in comparison to a dedicated GPU, and they're not on our list of the best graphics cards for a good reason.

Is it because they are not a card? : 3

> The GTX 1050 is by no means one of the fastest GPUs right now, though you can pick up barebones models off eBay for a song (if you're willing to deal with shipment from China).

That unbranded "GTX 1050" you linked to appears to be some other card rebadged as a 1050, seeing as the image shows a VGA D-Sub port, something 10-series cards shouldn't have.

DZIrl

Did you guys check what your graphs look like?

The text is unreadable; I could only read it in full screen. I couldn't paste a screenshot, but the text is 1/10th of the bar height. Even when a graph is opened, the numbers are hardly readable and full screen is required.

JarredWaltonGPU

> cryoburner said: Is it because they are not a card? : 3

Partly! And because they're slow and I don't want to saddle someone with a slow GPU and a slow CPU that only has an x8 PCIe link width for dedicated graphics.

> cryoburner said: That unbranded "GTX 1050" you linked to appears to be some other card rebadged as a 1050, seeing as the image shows a VGA D-Sub port, something 10-series cards shouldn't have.

After checking a bit further, I believe you're right and these are scam cards. I found a video where a guy bought a supposed GTX 1050 Ti. It clearly has four out of six memory chips on the board, which suggests it's an older card that has a tweaked BIOS to identify it as a GTX 1050 Ti:

https://www.youtube.com/watch?v=PNTmDQdV6u4

I was thinking with the Chinese market, there could be hardware still in use that's relatively ancient. I don't think Nvidia killed off the possibility of VGA output in Pascal, and on a budget card it might actually make sense. I'm almost tempted to buy one of these, just to prove what's actually being used. But then I'd be out $70 or whatever.

I've updated the text now to remove the link. Note that there are legitimate 1050 cards available on eBay -- just don't get the non-brand fake models. :)

JarredWaltonGPU

> DZIrl said: Did you guys check what your graphs look like?

Sorry, I usually have charts with 20 GPUs listed and have to size the text small, and I forgot to bump up the font for this. We do have the full-size images for a reason, but I'll see about replacing these with legible versions. (And I usually view the normal images when writing the article, not the shrunk-down monstrosities our CMS forces on us.)

And now the charts are updated.

barureddy1

I'm surprised that AMD doesn't sell a similar APU to what they put into the Xbox or PS. They should make a 100-150 watt TDP APU. In the process, they would cannibalize their low-end market, but they would also dominate the PC gaming market.

mitch074

> barureddy1 said: I'm surprised that AMD doesn't sell a similar APU to what they put into the Xbox or PS.

RAM bottleneck, on top of the custom design.

JarredWaltonGPU

> mgutt said: Why didn't you wait for Ryzen 4000 / Zen 3?!

This is all prepping for the upcoming launches. I'm trying to get a Renoir laptop in for testing, and I've apparently got an Ice Lake laptop coming. And when desktop Renoir and Xe Graphics arrive, I've now got numbers I can compare them with. As for Zen 3, that's probably six months out for the CPU, never mind the APU variant that's probably at least a year off.

JarredWaltonGPU

> barureddy1 said: I'm surprised that AMD doesn't sell a similar APU to what they put into the Xbox or PS.

That's the whole bottom section of the article: why AMD and Intel don't make processors with much faster integrated graphics. Best bet for a custom design would be in a laptop. It would be interesting to see someone like Razer put together a laptop with something close to Xbox Series X graphics, and 16GB of GDDR6. But then you'd need driver updates for that special chip, which is a pain. I won't say it will never happen, but it's extremely unlikely to occur any time soon.