LIVE BLOG: AFDS 2012 Keynote 2 - Gaikai, SRS, Microsoft

We're live here in Bellevue, WA bringing you live updates of the latest from AMD and its partners.

8:45 We're here again for day two of the keynote for the AMD Fusion Developer Summit. Up today is David Perry of Gaikai (CEO and co-founder), Alan D. Kraemer of SRS Labs (SVP and CTO), and Steven Bathiche of Microsoft (Director of Research, Applied Sciences Group). Stay tuned here for the next couple of hours to get the play-by-play of the keynote!

8:55 Five minute warning! Only a handful of minutes left to enjoy the red lighting and dance music. Looking for something cool to read while we wait? Have you heard about AMD's video card for "display walls" featuring Eyefinity for up to six 4K screens?

9:02 It's starting off with a reel introducing today's guests. Manju Hegde and John Taylor are back from yesterday.

9:06 David Perry takes the stage to tell us about some gaming. He's talking about his Northern Irish heritage, with some good humour. And now some background on old-school gaming... with some crazy concepts, such as the "Rabbit Mating Game". Games fit in 16K back then, which is around the same size as the eBay logo.

9:12 Dave is going into how cloud gaming is the next big advancement in media. He says that retail is a little resistant, but even GameStop is coming around. To him, retail = boxes and digital = files. Files are a hassle, he says, as he has to store them and back them up. Now it's about "virtual data" where the user doesn't need to store anything.

He sees free to play as a big shift. He saw it in Asia and now it's spreading here to the west.

As for web gaming, Farmville isn't for everyone. Some people want to play BF3. Also, interesting factoid, 1 in 10 social gamers end up in real life relationships from the game. No, NOT Farmville, but rather MMO games. WoW weddings, anyone?

They asked gamers what they use to determine whether or not to buy a game. By a large margin, gamers want to try the demo. In second place are reviews, but nothing beats the firsthand experience.

Dave demos what gamers have to go through just to get started on a trial for World of Warcraft. He's definitely getting laughs from the audience over how many hoops one has to jump through just to try something. According to him, nearly a third of people skip the whole process once they see they have to fill things out.

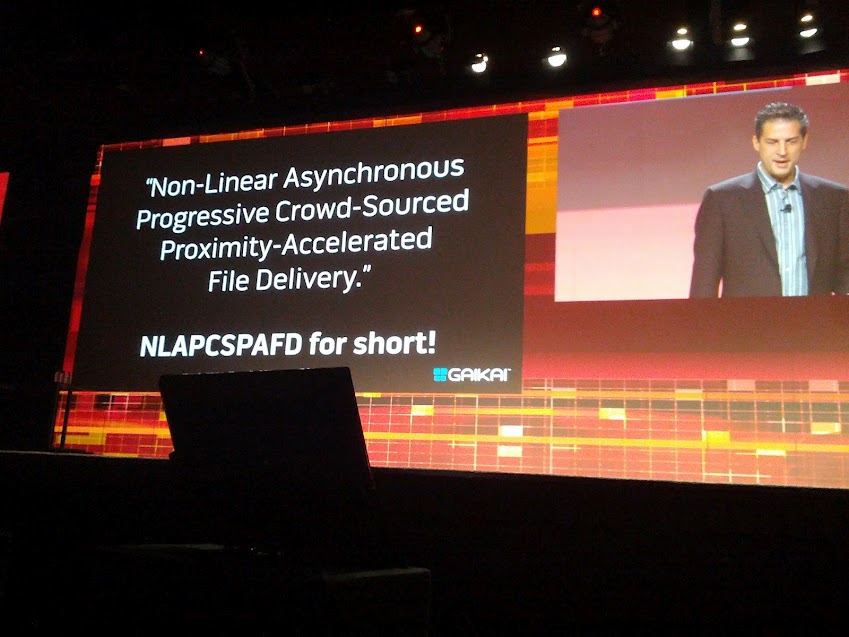

Gaikai had to build its own server network. Over 1000 PC games launched last year, but finding the right one is hard. Gamers want to try a game right away. A demo video of Crysis 2 streaming over the cloud. Now ANYTHING can play Crysis. He calls this... uh, some really long term. See photo:

Dave references Netflix and Hulu and wonders why it has to be so hard to get the same thing for video games. Now Gaikai is in Walmart and YouTube, and of course publisher sites. If you remember from E3, Gaikai is in LG TVs. And last week they added Samsung. This could be important when the new consoles launch next year (according to David) and people have to buy new hardware. Will they skip that and go to the cloud?

As you PC gamers know, developers going for the lowest common denominator means games that don't take advantage of your high-end GPUs. Dave thinks that with the cloud, developers can scale up to the max spec and people can just stream that to their puny machines.

Look, Call of Duty on an HTC One X:

Dave's done. That's 100 slides in 30 minutes! Time for a bit of Q&A...

Q about how this was integrated into the TV with no mods. Dave's A: data comes in from Ethernet or Wi-Fi, so it just takes some firmware to support USB and to make the streaming path between the network and the TV as fast as possible. Gaikai is now working on latency. Apparently there is up to 100ms of latency in the display, and that could be more than the internet itself. Gaikai uses UDP. Dave says that next year they could have a path to the TV that's even faster than HDMI, perhaps making it lower latency than consoles. Wow, if true.
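To put that display figure in perspective, here's a back-of-the-envelope latency budget. Only the 100ms display number comes from Dave's answer; every other stage and value is a made-up placeholder for illustration, not anything Gaikai quoted.

```python
# Rough end-to-end latency budget for one streamed game frame.
# Only the 100 ms display figure comes from the talk; every other
# number below is a hypothetical placeholder.
DISPLAY_MS_WORST_CASE = 100  # "up to 100ms of latency in the display"

hypothetical_budget_ms = {
    "input capture": 5,            # assumption
    "network round trip": 40,      # assumption
    "server render + encode": 20,  # assumption
    "client decode": 10,           # assumption
    "display processing": DISPLAY_MS_WORST_CASE,
}


def total_latency(budget):
    """Sum every stage of the pipeline, in milliseconds."""
    return sum(budget.values())


def share_of(budget, stage):
    """Fraction of the total latency spent in one stage."""
    return budget[stage] / total_latency(budget)


if __name__ == "__main__":
    print(f"total: {total_latency(hypothetical_budget_ms)} ms")
    for stage, ms in hypothetical_budget_ms.items():
        pct = share_of(hypothetical_budget_ms, stage)
        print(f"{stage:>24}: {ms:3d} ms ({pct:.0%})")
```

With placeholder numbers like these, the TV's own processing is the biggest single line item, which would explain why Gaikai cares about a faster path into the panel.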

Tying it into AFDS, heterogeneous computing will help reduce costs. David hopes that in 5 years, server costs will be negligible.

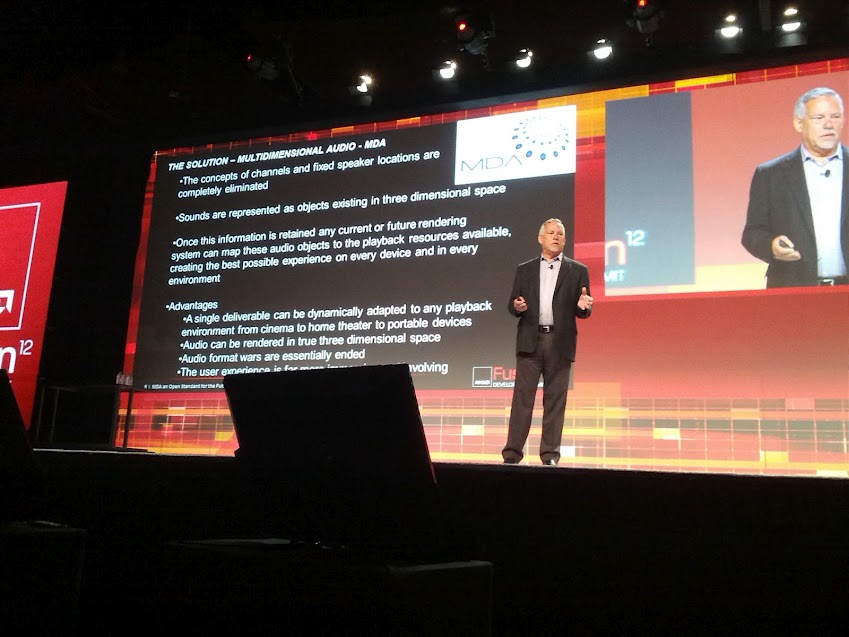

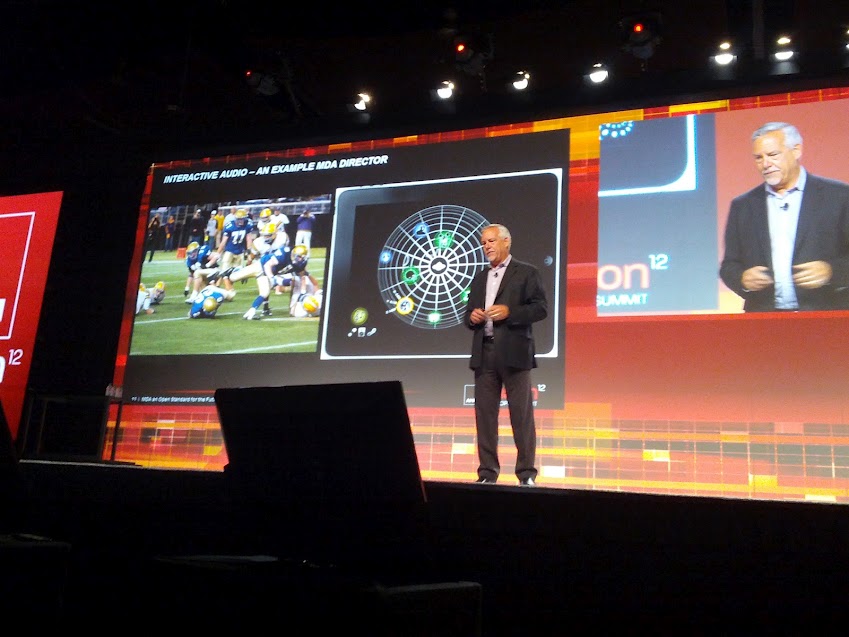

9:48 An introduction for Alan Kraemer of SRS Labs. Yes, that's right... GPU technology for audio. Alan says that audio right now is trapped by channels. Being only able to place audio in 2, 5, or even 7 channels is far too limiting, he says. Adding more channels isn't the answer. It's also a problem for people listening on other formats: not 5.1 or 7.1, but stereo on personal devices with headsets.

What's the solution? MDA, or multidimensional audio. Create the audio in a 4D space, keeping all the information that gets lost in flat channels. Some of this scared Hollywood because it changes everything. Alan wanted to do a demo of this in the giant keynote room, but it would take 50 speakers and would have been too costly -- but imagine this in a cinema.

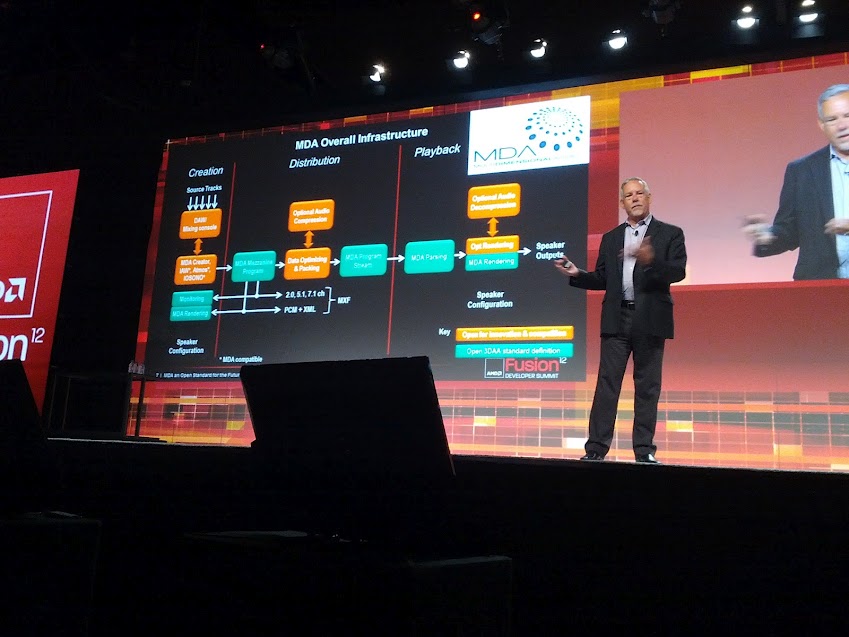

What is MDA? An open language to describe audio in 4D. It's not a new codec, but just a new form of PCM. A common, flexible channel and speaker format for audio. MDA does not compete with other rendering systems because all can render MDA if they choose to implement it.
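For a feel of what "not trapped by channels" could mean in practice, here's a minimal sketch of rendering one positioned sound onto whatever speakers happen to be in the room. This is not SRS's renderer and not the MDA format: it's plain constant-power panning between the two nearest speakers, azimuth only, and the function name `render_gains` and the speaker layouts are assumptions made up for this example.

```python
import math


def render_gains(object_az_deg, speaker_az_deg):
    """Constant-power pan of one audio object onto a ring of speakers.

    Hypothetical helper: takes the object's azimuth and a list of at
    least two distinct speaker azimuths (degrees), and returns one gain
    per speaker. Only the two speakers that bracket the object get a
    non-zero gain.
    """
    n = len(speaker_az_deg)
    # Walk the speakers in order around the circle, [0, 360).
    order = sorted(range(n), key=lambda i: speaker_az_deg[i] % 360)
    obj = object_az_deg % 360
    gains = [0.0] * n
    for k in range(n):
        a, b = order[k], order[(k + 1) % n]
        start = speaker_az_deg[a] % 360
        span = (speaker_az_deg[b] - speaker_az_deg[a]) % 360 or 360
        offset = (obj - start) % 360
        if offset <= span:
            frac = offset / span  # 0 at speaker a, 1 at speaker b
            gains[a] = math.cos(frac * math.pi / 2)
            gains[b] = math.sin(frac * math.pi / 2)
            break
    return gains


if __name__ == "__main__":
    stereo = [-30, 30]                     # assumed layout
    five_speakers = [0, 30, 110, -110, -30]  # assumed layout
    # Same object description, two different rooms.
    print(render_gains(0, stereo))
    print(render_gains(70, five_speakers))
```

The point of the sketch: the sound is described once by where it is, and the gains are worked out per playback setup rather than baked into a fixed set of channels.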

Ahh, it all comes together. The entire process for MDA creation works very well with heterogeneous processing. "Psycho acoustic rendering."

The MDA player is now running on a PC and Mac. The binary streaming format is defined and in test. MDA's been demo'ed to most movie studios.

MDA now targets basically all industries, but definitely cinema, home theater, games, music. There's also an interesting example of teleconferencing, where the listener can hear exactly where the speaker is sitting.

There's a 3DAA, the 3D Audio Alliance. AMD is obviously a founding member.

Back to HSA. Traditional audio is DSP based, but MDA requires lots of parallel threads, so HSA is highly relevant to this space. Like HSA, 3DAA is an open standard.

Q&A - Q: Will it be available on Linux? A: Yes.

Q: Can this 4D info be used for force feedback for things like games? A: Yes, the format can carry the data to deliver specific force feedback.

Q: Is Hollywood on board? A: We think so. They're coming to us to talk, and they seem receptive to open formats.

Q: Can you describe what it's like, for those of us not here? A: It creates an emotional response and pulls you into the content. It's an 11.2 system, but not mixed traditionally.

10:26 Next up is Steven Bathiche from Microsoft. Human interface stuff. He says his work is all about interfaces. What separates us from other creatures is the ability to use and invent tools.

A demo for an early version of Surface. A lot of cool games! "We want to create a machine that's not just about touch... more about understanding what the user wants to do."

Now with all the different cameras and sensors, HSA becomes even more important and critical.

Steve's talking about Harlow's monkeys (Google it) and how we can't really love our machines too much. Hmm, that's tough. I know I love my machines. Now for the Microsoft future of computing concept video (we'll have a link to it a bit later).

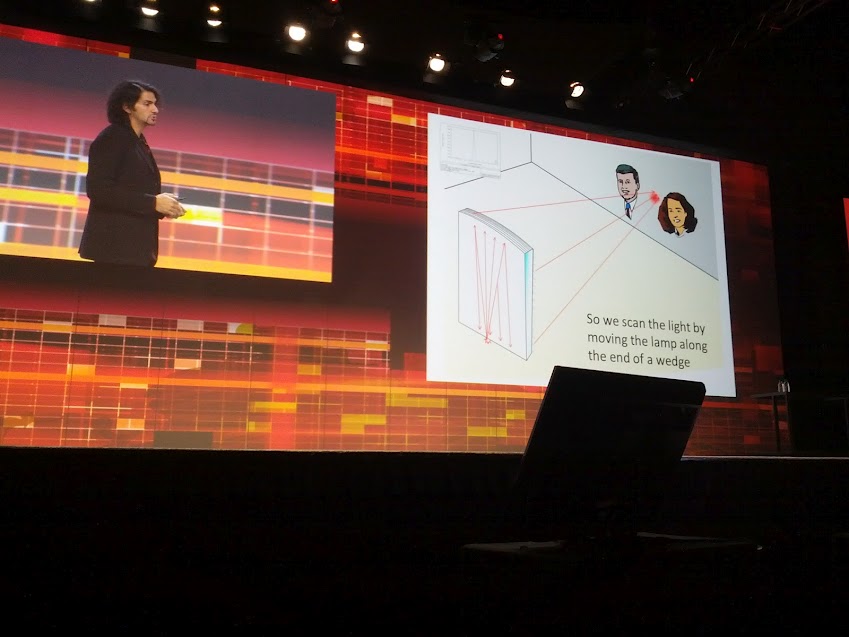

"Today we're just looking at our screens, flat 2D plane." Now he wants to do motion parallax, so when you move, you see what's behind an object. Stereopsis. Very impressive future looking stuff... except now Steven is telling us how to make it! It's got a lot to do with tons of cameras -- light field cameras -- to track the users. An early concept is using Kinect as a head tracker so that the user is fooled into thinking the screen is a window they're looking through.
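The window trick boils down to simple geometry. Here's a rough sketch, assuming a head tracker (a Kinect, say) hands us the viewer's eye position in metres; the function name `project_to_screen` and the coordinate setup are made up for illustration and are not Microsoft's implementation.

```python
def project_to_screen(eye, point):
    """Where the line from the eye to a 3D point crosses the screen.

    Assumed coordinates, in metres: the screen is the plane z = 0, the
    viewer sits at z > 0, and virtual objects "behind the glass" have
    z < 0. Returns the (x, y) spot on the screen where the point should
    be drawn for this eye position.
    """
    ex, ey, ez = eye
    px, py, pz = point
    if ez <= 0 or pz >= ez:
        raise ValueError("eye must be in front of the screen and the point")
    t = ez / (ez - pz)  # fraction of the way from eye to point at z = 0
    return (ex + t * (px - ex), ey + t * (py - ey))


if __name__ == "__main__":
    near = (0.0, 0.0, -0.1)  # just behind the glass
    far = (0.0, 0.0, -2.0)   # deep in the scene
    for head_x in (-0.2, 0.0, 0.2):
        eye = (head_x, 0.0, 0.6)
        print(head_x, project_to_screen(eye, near), project_to_screen(eye, far))
```

As the head moves sideways, the far point slides across the screen much more than the near one, and that difference is the motion parallax cue. Run the same projection once per eye and you have the two images needed for glasses-free stereo.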

The future of 3D may be stereopsis where there is no need for glasses, but rather the screen can send a different image to each eye. The same technology can be applied to different viewers... so in theory, this technology could be used to project a private beam to each viewer. For example, this could be used to feed a different screen to each gamer so they can play on the same display but with different views.

Now there's a video of the transparent OLED. We reported on this one before too. Definitely very cool interface.

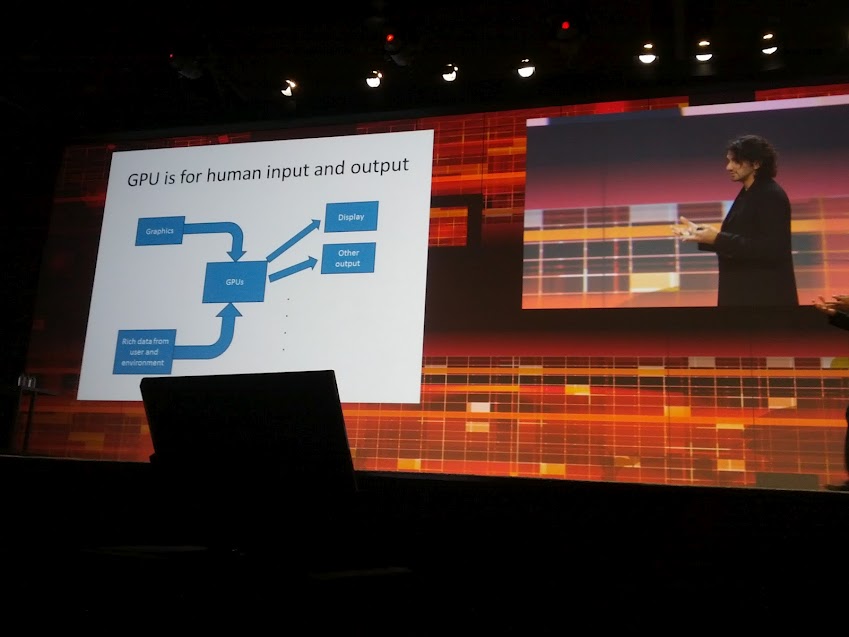

The GPU is hugely important for this sort of technology because of all the computations required.

And now playing that futuristic classroom video that's been around for a little while, but no less impressive.

Quick Q&A:

Q: Can a computer sense my emotions? A: Roz Picard at the MIT Media Lab is researching this. There's some work, but nothing central.

Q: If you combine your demo with the SRS, will this be the holodeck? In our lifetime? A: It depends whose lifetime. I think it's possible.

That appears to be it for this morning!

silverblue Various MMOs have gone from being subs-based to F2P (with the caveat that some content can only be purchased), however they - esp. Lineage II - have been subs-based for a very long time. Do you charge a lot for the initial purchase or make it so that people need to make the occasional purchase? I wish we could go back to the days of Phantasy Star Online where you buy the game for a reasonable price and just pay for your internet access (which was free for us in Europe if we entered our own ISP settings; pre-DreamKey 3.0 we had to use the US-version of the setup disc :P ). The best of both worlds.

s3anister "1 in 10 social gamers end up in real life relationships from the game."

With the other 9 out of 10 being foreveralone.jpg.

-Fran- Ah, some Audio improvements!

It was about time we had some news regarding more enrichment in the Audio department. Good news indeed.

Cheers!